spaCy: How to convert the simple NER format to spaCy JSON

Currently I have data in the simple training data / offset format as shown in the docs:

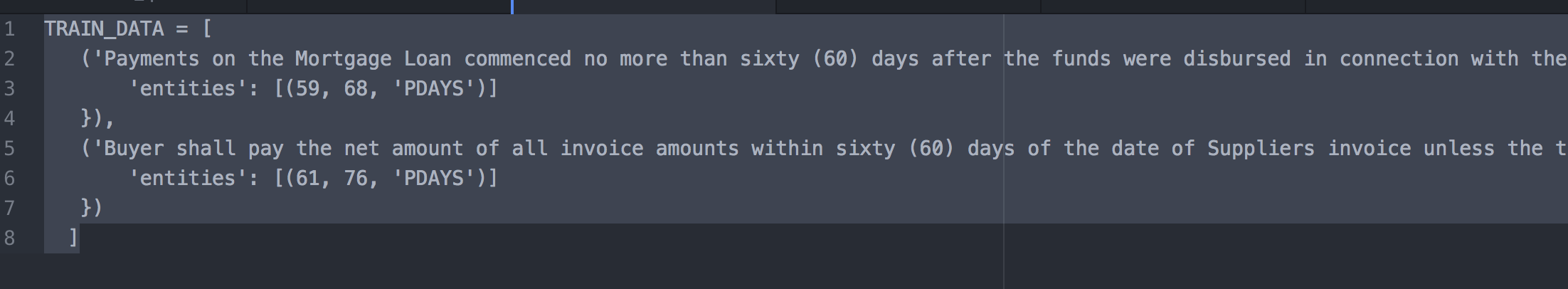

TRAIN_DATA = [
    ('Who is Shaka Khan?', {
        'entities': [(7, 17, 'PERSON')]
    }),
    ('I like London and Berlin.', {
        'entities': [(7, 13, 'LOC'), (18, 24, 'LOC')]
    })
]

In order to use the CLI trainer, this data needs to be in spaCy's internal JSON training data format. To do this, I am currently converting the simple offset format to CoNLL NER format and then running the spaCy CLI converter.

Is there a better way to get from the offset / simple format directly to the spaCy JSON format? The CLI trainer seems to train much faster than the method specified here

Your Environment

- spaCy version: 2.0.5

- Platform: Linux-4.4.0-1049-aws-x86_64-with-debian-stretch-sid

- Python version: 3.6.4

- Models: en_core_web_lg, en

All 17 comments

Yes, there's a gold.biluo_tags_from_offsets helper function that converts the entity offsets to a list of per-token BILUO tags:

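import spacy
from spacy.gold import biluo_tags_from_offsets

# Any pipeline with a tokenizer works; a blank English one is assumed here.
nlp = spacy.blank('en')
doc = nlp(u'I like London.')
entities = [(7, 13, 'LOC')]
tags = biluo_tags_from_offsets(doc, entities)
assert tags == ['O', 'O', 'U-LOC', 'O']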
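To make the BILUO scheme itself concrete, here is a minimal pure-Python sketch of the idea behind the helper. It is not spaCy's implementation: the function name `simple_biluo_tags` and the regex tokenizer are assumptions made for illustration, and entities whose offsets do not line up with token boundaries are simply skipped.

```python
import re

def simple_biluo_tags(text, entities):
    """Illustrative stand-in: tag regex tokens with BILUO labels from char offsets."""
    # Split into word tokens and single punctuation marks, keeping char spans.
    tokens = [(m.group(), m.start(), m.end())
              for m in re.finditer(r"\w+|[^\w\s]", text)]
    tags = ['O'] * len(tokens)
    for ent_start, ent_end, label in entities:
        # Indices of the tokens that lie fully inside the entity span.
        inside = [i for i, (_, start, end) in enumerate(tokens)
                  if start >= ent_start and end <= ent_end]
        if not inside:
            continue  # offsets do not match any token; skipped in this sketch
        if len(inside) == 1:
            tags[inside[0]] = 'U-' + label      # Unit: single-token entity
        else:
            tags[inside[0]] = 'B-' + label      # Begin
            for i in inside[1:-1]:
                tags[i] = 'I-' + label          # In
            tags[inside[-1]] = 'L-' + label     # Last
    return [word for word, _, _ in tokens], tags

words, tags = simple_biluo_tags('Who is Shaka Khan?', [(7, 17, 'PERSON')])
print(list(zip(words, tags)))
```

A two-token entity such as "Shaka Khan" gets a B- tag followed by an L- tag, while a single-token entity such as "London" gets a U- tag.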

Is there a way to save the BILUO tags in the JSON format used by the CLI trainer?

(Sent as an email reply to Ines Montani's comment of Feb 10, 2018.)

I think we currently lack a writer that does exactly that. I've been finding the current data-format situation frustrating too. Needs improvement.

I used biluo_tags_from_offsets, then saved the tags in CoNLL format, then ran the spaCy CLI converter, then the spaCy trainer. It worked, but it was a roundabout way to leverage already existing code.

(Sent as an email reply to Matthew Honnibal's comment of Feb 11, 2018.)

I also have the same problem when training NER.

@honnibal @r-wheeler

Is there any sample JSON training data for NER?

You can find the structure and an example of the training JSON format here:

https://spacy.io/api/annotation#json-input

So you should be able to write a script that produces data in this format, using the BILUO tags. I agree that spaCy should make this easier (and, as @honnibal mentioned above, we're also thinking about overhauling the training format in general to make it easier to work with).
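As a rough illustration of such a script, the sketch below wraps already tokenized and BILUO-tagged text in the nested document / paragraph / sentence / token structure. The function name `to_cli_json` is hypothetical, the field names follow the linked documentation and the converter posted later in this thread, and leaving "tag" and "dep" empty with "head" set to 0 is an assumption for NER-only data.

```python
import json

def to_cli_json(examples):
    """examples: list of (raw_text, [(orth, biluo_tag), ...]) pairs."""
    documents = []
    for doc_id, (raw_text, tagged_tokens) in enumerate(examples):
        tokens = [
            # Placeholder values for the parser/tagger fields (assumption).
            {"id": i, "orth": orth, "tag": "", "head": 0, "dep": "", "ner": ner}
            for i, (orth, ner) in enumerate(tagged_tokens)
        ]
        documents.append({
            "id": doc_id,
            # One paragraph holding one sentence per example.
            "paragraphs": [{"raw": raw_text, "sentences": [{"tokens": tokens}]}],
        })
    return documents

examples = [
    ("I like London.", [("I", "O"), ("like", "O"), ("London", "U-LOC"), (".", "O")]),
]
data = to_cli_json(examples)
print(json.dumps(data, indent=2))
```

The tags themselves would come from biluo_tags_from_offsets; only the wrapping into the nested structure is shown here.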

Thanks @ines

That is a very good idea, I'm looking forward to it.

Same question that I posted on the chat channel today. I want my program to point to training data in a separate file rather than having it in the code. I currently have as follows based on the examples on the website

@rahulgithub, I recently had a similar need and figured out a way to put the content in a separate file instead of in the code.

Code to read the text_file:

import ast

# Read the whole file, collapse whitespace, then parse it as a Python literal.
with open("text_file", "r") as inpt_text:
    TRAIN_DATA = inpt_text.read().replace('\n ', '')
TRAIN_DATA = ast.literal_eval(' '.join(TRAIN_DATA.split()))

text_file content format:

[
    ('Who is Shaka Khan?', {
        'entities': [(7, 17, 'PERSON')]
    }),
    ('I like London and Berlin.', {
        'entities': [(7, 13, 'LOC'), (18, 24, 'LOC')]
    })
]

Please share if there are any other approaches to maintaining TRAIN_DATA in a separate file instead of in the code.

@rahulgithub @DevaChandraRaju How you solve this depends on which file formats you like to work with. ast.literal_eval isn't bad – but it's also a very uncommon way to pass data around. And the transformations (replacing, splitting, joining) you have to do to read in the file can very easily lead to bugs.

A simpler way might be working with JSON. Here's an example of a file data.json:

[

["Who is Shaka Khan?", {"entities": [[7, 17, "PERSON"]]}],

["I like London and Berlin", {"entities": [[7, 13, "LOC"], [18, 24, "LOC"]]}]

]

JSON doesn't have tuples, so you need to use lists everywhere. In Python, you can then do the following:

import json
import io

with io.open('/path/to/data.json', encoding='utf8') as f:
    train_data = json.load(f)
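Going the other way, here is a minimal sketch of writing an existing TRAIN_DATA list to a JSON file and turning the loaded lists back into tuples. The temporary directory and file name are placeholders chosen so the example is self-contained.

```python
import io
import json
import os
import tempfile

TRAIN_DATA = [
    ('Who is Shaka Khan?', {'entities': [(7, 17, 'PERSON')]}),
    ('I like London and Berlin.', {'entities': [(7, 13, 'LOC'), (18, 24, 'LOC')]}),
]

# Placeholder location; use your own path in practice.
path = os.path.join(tempfile.mkdtemp(), 'data.json')

# Tuples are written out as JSON arrays.
with io.open(path, 'w', encoding='utf8') as f:
    json.dump(TRAIN_DATA, f, ensure_ascii=False)

with io.open(path, encoding='utf8') as f:
    loaded = json.load(f)

# JSON has no tuples, so rebuild them if the rest of the code expects tuples.
restored = [
    (text, {'entities': [tuple(ent) for ent in annotations['entities']]})
    for text, annotations in loaded
]
assert restored == TRAIN_DATA
```

Because the text is stored as a JSON string and never split or re-joined, the character offsets stay valid.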

Also remember that you can always re-write the training script to format your data differently. If you're working with large training sets, we also recommend using the spacy train command instead – this will take care of batching up your examples correctly for optimal performance, and it'll also give you much more useful command line output.

I am also about to write a script to convert from the inline training format to the CLI training format. However, I train the model on sentences, so each paragraph entry will contain exactly one sentence. Is that fine? Since the NER model trains on context, which is usually no longer than a sentence, I do not see a reason for paragraphs to be input with their raw text and then sentences. I think paragraphs are there for dependency parsing. Is that right?

I am pasting an answer derived from Stack Overflow which is not showing up easily in searches. Of course, you will have to improvise a bit if you have multiple paragraphs.

from spacy.gold import biluo_tags_from_offsets

def getcharoffsetsfromwordoffsets(doc, entities):
    # entities are (start_token, end_token, label) triples, i.e. word offsets
    charoffsets = []
    for entity in entities:
        span = doc[entity[0]:entity[1]]
        charoffsetentitytuple = (span.start_char, span.end_char, entity[2])
        charoffsets.append(charoffsetentitytuple)
    return charoffsets

def convertspacyapiformattocliformat(nlp, TRAIN_DATA):
    docnum = 1
    documents = []
    for t in TRAIN_DATA:
        doc = nlp(t[0])
        # If the entities are already character offsets (as in the simple
        # format above), pass t[1]['entities'] to biluo_tags_from_offsets directly.
        charoffsetstuple = getcharoffsetsfromwordoffsets(doc, t[1]['entities'])
        tags = biluo_tags_from_offsets(doc, charoffsetstuple)
        ner_info = list(zip(doc, tags))
        tokens = []
        sentences = []
        for n, i in enumerate(ner_info):
            token = {"head": 0,
                     "dep": "",
                     "tag": "",
                     "orth": i[0].text,  # token text without trailing whitespace
                     "ner": i[1],
                     "id": n}
            tokens.append(token)
        # The whole text is treated as a single sentence.
        sentences.append({'tokens': tokens})
        document = {}
        document['id'] = docnum
        docnum += 1
        document['paragraphs'] = []
        paragraph = {'raw': doc.text, "sentences": sentences}
        document['paragraphs'] = [paragraph]
        documents.append(document)
    return documents

@ines is the "spacy convert" command the solution for this issue? I am asking because I don't see a converter for BILOU. Is that currently not supported?

@ines and a follow-up question on that. The documentation for the "convert" command https://spacy.io/api/cli#convert says nothing about the supported input file formats. In my case I just have my training data in a list. How can I proceed from here? To give you a little more detail about the training data, it is currently stored in a Python list with BILOU tags.

1) I am not sure which file format I should store the list in, in order to pass it as input to the convert command.

2) Does the "convert" command support converting BILOU tags to JSON?

Hello everyone,

I have the same question as @eswar3 about the input data format that I have to pass to the convert function in order to train NER on my custom entities.

I'm also getting this error:

n_train_words = corpus.count_train()

File "gold.pyx", line 189, in spacy.gold.GoldCorpus.count_train

File "gold.pyx", line 169, in train_tuples

File "gold.pyx", line 300, in read_json_file

File "gold.pyx", line 301, in spacy.gold.read_json_file

ValueError: Expected object or value

I tried the simple NER format, as well as data converted with the method from @SandeepNaidu, but I still have this issue.

Could you please specify the format and how I can solve the issue?
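The thread does not answer this question directly. One possible cause (an assumption, not confirmed anywhere in this thread) is that the file handed to spacy train is not valid JSON at all, for example the simple format saved as a Python literal with single quotes and tuples. A quick sanity check with the standard library is sketched below; the helper name `is_valid_json_file` and the file names are made up for illustration.

```python
import io
import json
import os
import tempfile

def is_valid_json_file(path):
    """Return (True, '') if the file parses as JSON, else (False, error message)."""
    try:
        with io.open(path, encoding='utf8') as f:
            json.load(f)
    except ValueError as err:  # json.JSONDecodeError is a ValueError subclass
        return False, str(err)
    return True, ''

tmp_dir = tempfile.mkdtemp()

# A Python literal (single quotes, tuples) is not JSON, so this file fails.
bad_path = os.path.join(tmp_dir, 'bad.json')
with io.open(bad_path, 'w', encoding='utf8') as f:
    f.write(u"[('I like London.', {'entities': [(7, 13, 'LOC')]})]")

# The same data written with json.dump parses fine.
good_path = os.path.join(tmp_dir, 'good.json')
with io.open(good_path, 'w', encoding='utf8') as f:
    json.dump([['I like London.', {'entities': [[7, 13, 'LOC']]}]], f)

bad_ok, bad_error = is_valid_json_file(bad_path)
good_ok, _ = is_valid_json_file(good_path)
print(bad_ok, good_ok)  # False True
```

Passing this check only shows that the file is parseable JSON. The data still has to follow the nested document / paragraph / sentence / token structure described at https://spacy.io/api/annotation#json-input.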

In v2.1.0a1, this is now finally easier. Thanks for your patience with the annoying format, which has a few extra levels of nesting so that it can accommodate whole document annotations, along with paragraph structure.

In v2.1.0a1, there's now a new function spacy.gold.docs_to_json. This function takes a list of Doc objects and outputs properly formed json data, for use in spacy train. You just have to set up the Doc objects to have the annotations you want. For named entities, this is as easy as writing to the doc.ents attribute. For the parser, the best approach is to create an array with the head offsets you want, and then import the data with doc.from_array().

You can install v2.1.0a1 by running pip install spacy-nightly. I've also added more converters to v2.1.0a1, including a converter that can handle a jsonl format similar to the "simple training format". See here: https://github.com/explosion/spaCy/blob/develop/spacy/cli/converters/jsonl2json.py . The name of this converter might change, but for now if you do spacy convert /path/to/data.jsonl it should convert it into the json data format.
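As a rough sketch of preparing such a file, the snippet below writes the simple training format as JSONL, one JSON object per line. The record keys used here ("text", plus "spans" with "start", "end" and "label") are an assumption about what the converter reads and may differ between versions, so check them against the linked converter source before relying on them. The output path is a placeholder.

```python
import io
import json
import os
import tempfile

TRAIN_DATA = [
    ('Who is Shaka Khan?', {'entities': [(7, 17, 'PERSON')]}),
    ('I like London and Berlin.', {'entities': [(7, 13, 'LOC'), (18, 24, 'LOC')]}),
]

def to_jsonl_records(train_data):
    """Yield one dict per example; the key names are assumed, not confirmed."""
    for text, annotations in train_data:
        yield {
            'text': text,
            'spans': [{'start': start, 'end': end, 'label': label}
                      for start, end, label in annotations['entities']],
        }

# Placeholder location; use your own path in practice.
path = os.path.join(tempfile.mkdtemp(), 'data.jsonl')

with io.open(path, 'w', encoding='utf8') as f:
    for record in to_jsonl_records(TRAIN_DATA):
        # One compact JSON object per line, no enclosing list.
        f.write(json.dumps(record, ensure_ascii=False) + u'\n')
```

The resulting file is what would be passed to spacy convert as /path/to/data.jsonl.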

This thread has been automatically locked since there has not been any recent activity after it was closed. Please open a new issue for related bugs.
