Spacy: Advice for parallel processing?

When I run the following code, spaCy uses multiple threads. A better approach for my case might be to use one thread per line and parallelize the processing across lines myself. Does anybody know a way to disable parallelism in spaCy?

    import re
    import sys
    from collections import Counter

    import spacy

    nlp = spacy.load('en', disable=['tokenizer', 'ner', 'textcat'])

    # Strip a leading determiner from each noun chunk.
    regex = r'^([Aa]|[Aa]n|[Tt]he|[Tt]his|[Tt]hese|[Tt]hat|[Tt]hose)\s+'

    # Python 2: count the noun chunks on each input line.
    for line in sys.stdin:
        doc = nlp(line.rstrip('\n').decode('utf-8'))
        for k, v in Counter([re.sub(regex, '', x.text) for x in doc.noun_chunks]).iteritems():
            print '\t'.join([k.encode('utf-8'), str(v)])

All 11 comments

The short answer is that you should use nlp.pipe(), instead of calling nlp() within the loop. Like this:

    lines = (line.rstrip('\n').decode('utf-8') for line in sys.stdin)
    docs = nlp.pipe(lines)

    # If you need the original input, you can do this instead:
    data_tuples = ((line.rstrip('\n').decode('utf-8'), line) for line in sys.stdin)
    for doc, raw_line in nlp.pipe(data_tuples, as_tuples=True):
        pass  # process doc together with raw_line here

The nlp.pipe() method allows the library to work on minibatches of documents, which is more efficient -- even when only a single thread is available. More work is done per call to various Python functions, so the impact of the Python overhead is reduced.
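For readers on Python 3, the noun-chunk counting from the question could be restructured around nlp.pipe() roughly as follows. This is only a sketch: the function name `noun_chunk_counts` and the constant `LEADING_DETERMINER` are made up for illustration, and the `nlp` object is passed in rather than loaded here. The only spaCy calls it relies on are `nlp.pipe(..., as_tuples=True)` and `doc.noun_chunks`, both of which appear in this thread.

```python
import re
from collections import Counter

# Same leading-determiner pattern as in the question, written as a raw string.
LEADING_DETERMINER = re.compile(
    r'^([Aa]|[Aa]n|[Tt]he|[Tt]his|[Tt]hese|[Tt]hat|[Tt]hose)\s+'
)


def noun_chunk_counts(nlp, texts_with_context):
    """Yield (context, Counter) for each (text, context) pair.

    `nlp` is assumed to be a loaded spaCy pipeline; the pairs are streamed
    through nlp.pipe() so the library can work on minibatches.
    """
    for doc, context in nlp.pipe(texts_with_context, as_tuples=True):
        counts = Counter(
            LEADING_DETERMINER.sub('', chunk.text) for chunk in doc.noun_chunks
        )
        yield context, counts
```

The context value can be anything you want carried alongside each text, such as the raw line or a row identifier.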

v2 involved a small step backward in one respect: in v1 we had full control, because we didn't delegate to any libraries. In v2 the hard work is currently being done in numpy (on CPU) or cupy (on GPU). This makes it rather difficult to control the parallelism. Efforts to fix this are ongoing.

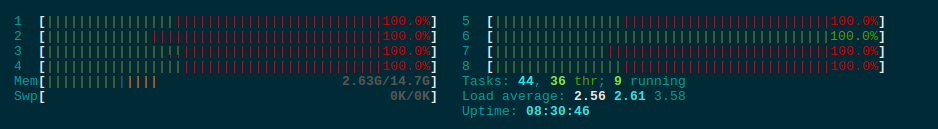

Here is my current code. When I use htop to monitor CPU usage, all the CPUs are fully used, but 75% of the usage is attributed to kernel processes and only 25% to normal (user) processes. This is not ideal. How should I tune the parameters of pipe() to improve this? Should n_threads be set to the number of CPUs available?

http://www.deonsworld.co.za/2012/12/20/understanding-and-using-htop-monitor-system-resources/

    import re
    import sys
    from collections import Counter

    import spacy

    nlp = spacy.load('en', disable=['tokenizer', 'ner', 'textcat'])

    regex = r'^([Aa]|[Aa]n|[Tt]he|[Tt]his|[Tt]hese|[Tt]hat|[Tt]hose)\s+'

    def get_text_idx(line):
        # Each input line is "<idx>\t<text>".
        array = line.rstrip('\n').split('\t', 1)
        text = array[1].decode('utf-8')
        idx = array[0]
        return (text, idx)

    data_tuples = (get_text_idx(line) for line in sys.stdin)

    for doc, idx in nlp.pipe(data_tuples, as_tuples=True):
        for k, v in Counter([re.sub(regex, '', x.text) for x in doc.noun_chunks]).iteritems():
            print '\t'.join([idx, k.encode('utf-8'), str(v)])

@honnibal Given that the current parallelization code in spaCy still cannot use CPUs efficiently, I still think disabling parallelization in spaCy is the best solution in my case: my data can easily be split into multiple files, and a separate Python process can be started for each file. There is therefore no need for parallelization within spaCy. Could you please let me know how to disable it? Thanks.

Hi @pengyu,

I have the same problem as you:

    for doc in nlp.pipe(texts, n_threads=self.n_core, batch_size=10000):
        entities = [Entity(ent.text, ent.start_char, ent.end_char, ent.label_)
                    for ent in doc.ents]
        ret.append(entities)

I think the problem is that the work keeps switching between threads/CPU cores, so it is not actually running in parallel.

It doesn't seem that n_threads has any effect.

I changed it to 1 (and also 0) but all threads seem to be doing processing, mainly context switching for that matter.

Running on a 32 core machine makes performance really bad.

As suggested in issue #2075, you can set the environment variable OPENBLAS_NUM_THREADS=1.

This actually made a difference and things work well now.

I just ran afoul of the n_threads having no effect issue. While spinning up only 10 worker processes, I was seeing all 80 cores on my machine light up in htop. Turns out spaCy was spinning up around 600 threads. Only after setting OPENBLAS_NUM_THREADS=1 did I see my core usage drop down to the expected amount.
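The variable is usually set in the shell before launching Python. If it has to be set from inside the script instead, a sketch like the one below may work, on the assumption that it runs before numpy (and therefore spaCy) is imported for the first time, since the BLAS library reads the value when it is loaded. The helper name `limit_blas_threads` is hypothetical. Only OPENBLAS_NUM_THREADS comes from this thread; numpy builds linked against a different BLAS read other variables, so the `names` parameter is left open.

```python
import os


def limit_blas_threads(n=1, names=("OPENBLAS_NUM_THREADS",)):
    """Cap BLAS threads via environment variables.

    Assumption: this is called before numpy/spaCy are imported; setting the
    variable after the BLAS library has loaded is not expected to help.
    """
    for name in names:
        os.environ[name] = str(n)


limit_blas_threads(1)

# Only import spaCy after the variables are in place, e.g.:
# import spacy
```

Child processes started afterwards inherit the same environment, so worker processes pick up the setting as well.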

While I'm here, I've found a couple of useful tricks for getting multiprocessing working well with spaCy:

The multiprocessing standard library module does not work out of the box with spacy due to thinc having some nested functions, which can't be pickled with the pickle module. Instead, I use pathos.multiprocessing, which wraps the standard multiprocessing and (amongst other things) uses an enhanced pickler, dill, which can pickle nested functions.

I then use the pathos.multiprocessing.Pool to create a pool of worker processes, running my function with Pool.imap (possibly some of the other maps could be fine/better).

The additional trick is to also group up your documents for processing into chunks manually, so that you're multiprocess-mapping over chunks of documents rather than mapping over individual documents. This allows each worker process to stream a sequence of documents through spaCy's nlp.pipe(), which is much faster than only passing one document to nlp each time.
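The manual chunking step could look something like this. It is a standard-library sketch written for Python 3, and the helper name `chunked` and the chunk size are illustrative choices, not something taken from the recipe above.

```python
from itertools import islice


def chunked(iterable, size):
    """Yield successive lists of up to `size` items from `iterable`."""
    iterator = iter(iterable)
    while True:
        chunk = list(islice(iterator, size))
        if not chunk:
            return
        yield chunk


# Example: 5 documents grouped into chunks of 2.
texts = ["doc one", "doc two", "doc three", "doc four", "doc five"]
chunks = list(chunked(texts, 2))
```

Each chunk is then the unit handed to the pool's imap, so a worker receives a list of texts it can stream through nlp.pipe() in one go.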

I'd be keen to hear if others have different/better recipes.

@ned2

I tried the approach you outlined above, but I'm ending up with this error. Did you encounter it by any chance?

Reason: 'TypeError('no default __reduce__ due to non-trivial __cinit__',)'

I haven't seen that one. A quick google suggests that it's something to do with not being able to pickle a cython object of some sort. Perhaps your pipeline returns some spaCy object that mine does not?

@ned2 Thanks for the tips! Some additional advice:

Instead of sending the nlp object through the serialization, you can also call spacy.load() within the worker. There's not really much advantage to loading once and going via Pickle: you're still going to have to rebuild the objects, which is expensive. If you're concerned that your worker might not have the models available, you can use nlp.to_bytes() and nlp = spacy.blank('en').from_bytes(byte_string).
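Loading the model inside each worker might be sketched as below, here with the standard library's multiprocessing.Pool and its initializer hook (Python 3). The names `init_worker`, `extract_entities`, and `run_pool` are hypothetical, the model name 'en' is simply the one used in this thread, and the pool size is arbitrary. The worker returns plain tuples rather than spaCy objects, so nothing spaCy-specific has to be pickled in either direction.

```python
import multiprocessing

_NLP = None  # set once per worker process by init_worker()


def init_worker(model_name):
    """Load the pipeline inside the worker, so the nlp object is never pickled."""
    global _NLP
    import spacy  # imported here, in the worker process
    _NLP = spacy.load(model_name)


def extract_entities(texts, nlp=None):
    """Return entities as plain tuples for each text in a chunk."""
    nlp = _NLP if nlp is None else nlp
    return [
        [(ent.text, ent.start_char, ent.end_char, ent.label_) for ent in doc.ents]
        for doc in nlp.pipe(texts)
    ]


def run_pool(chunks, model_name="en", processes=4):
    """Map chunks of texts over workers that each hold their own pipeline."""
    with multiprocessing.Pool(processes, initializer=init_worker,
                              initargs=(model_name,)) as pool:
        for entities in pool.imap(extract_entities, chunks):
            yield entities
```

On platforms that start workers with spawn rather than fork, the call to `run_pool` needs to sit under an `if __name__ == "__main__":` guard.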

For passing results out of the worker function, there's some code on the develop branch (shipped in spacy-nightly) that might be useful: https://github.com/explosion/spaCy/blob/develop/spacy/tokens/_serialize.py

This thread has been automatically locked since there has not been any recent activity after it was closed. Please open a new issue for related bugs.
