Servo: "Too many open files" crash after loading many small test cases via webdriver

Built from git: 7adf022 on 20190724. Running on Ubuntu 16.04.2 LTS with Radeon HD 7770.

I have a test system (for my PhD dissertation) that loads a bunch of smallish test cases repeatedly via webdriver on multiple browsers (currently Chrome, Firefox and Servo). I can trigger a "Too many open files" panic in Servo after enough test cases have been loaded. I'm pretty sure I can reproduce this fairly easily by running long enough (I believe this is the third time I've hit it today). I'm including a crash dump below, but is there anything additional I can do that would be particularly helpful for debugging this?

Here is an example that crashed after the 587th test case was loaded:

servo-20190724.git $ ./mach run --release -z --webdriver=7002 --resolution=400x300

VMware, Inc.

softpipe

3.3 (Core Profile) Mesa 18.3.0-devel

[2019-08-01T03:03:00Z ERROR script::dom::bindings::error] Error at http://127.0.0.1:3000/gen/19183-2-34/3.html:1:2 fields are not currently supported

[2019-08-01T03:03:03Z ERROR script::dom::bindings::error] Error at http://127.0.0.1:3000/gen/19183-2-34/4.html:1:2 fields are not currently supported

[2019-08-01T03:03:07Z ERROR script::dom::bindings::error] Error at http://127.0.0.1:3000/gen/19183-2-34/5.html:1:2 fields are not currently supported

[2019-08-01T03:03:17Z ERROR script::dom::bindings::error] Error at http://127.0.0.1:3000/gen/19183-2-34/8.html:1:2 fields are not currently supported

[2019-08-01T03:03:20Z ERROR script::dom::bindings::error] Error at http://127.0.0.1:3000/gen/19183-2-34/9.html:1:2 fields are not currently supported

[2019-08-01T03:03:33Z ERROR script::dom::bindings::error] Error at http://127.0.0.1:3000/gen/19183-2-34/13.html:1:2 fields are not currently supported

[2019-08-01T03:03:43Z ERROR script::dom::bindings::error] Error at http://127.0.0.1:3000/gen/19183-2-34/16.html:1:2 fields are not currently supported

[2019-08-01T03:03:52Z ERROR script::dom::bindings::error] Error at http://127.0.0.1:3000/gen/19183-2-34/19.html:1:2 fields are not currently supported

[2019-08-01T03:04:02Z ERROR script::dom::bindings::error] Error at http://127.0.0.1:3000/gen/19183-2-34/22.html:1:2 fields are not currently supported

Could not stop player Backend("Missing dependency: playbin")

called `Result::unwrap()` on an `Err` value: Os { code: 24, kind: Other, message: "Too many open files" } (thread ScriptThread PipelineId { namespace_id: PipelineNamespaceId(1), index: PipelineIndex(2) }, at src/libcore/result.rs:1051)

stack backtrace:

0: servo::main::{{closure}}::h1a74bd812d203c72 (0x55f41009633f)

1: std::panicking::rust_panic_with_hook::h0529069ab88f357a (0x55f413330105)

at src/libstd/panicking.rs:481

2: std::panicking::continue_panic_fmt::h6a820a3cd2914e74 (0x55f41332fba1)

at src/libstd/panicking.rs:384

3: rust_begin_unwind (0x55f41332fa85)

at src/libstd/panicking.rs:311

4: core::panicking::panic_fmt::he00cfaca5555542a (0x55f4133527ec)

at src/libcore/panicking.rs:85

5: core::result::unwrap_failed::h4239b9d80132a0db (0x55f4133528e6)

at src/libcore/result.rs:1051

6: script::stylesheet_loader::StylesheetLoader::load::he3c6d2f4f6b2d86d (0x55f410cc94b4)

7: script::dom::htmllinkelement::HTMLLinkElement::handle_stylesheet_url::h08d096fd325f038f (0x55f410da0119)

8: <script::dom::htmllinkelement::HTMLLinkElement as script::dom::virtualmethods::VirtualMethods>::bind_to_tree::h4b49aadc206a8bb4 (0x55f410d9f9d5)

9: script::dom::node::Node::insert::h5f83017d8cd2b0c4 (0x55f410e5f494)

10: script::dom::node::Node::pre_insert::hc92be38a4a5c65ff (0x55f410e5e7d2)

11: script::dom::servoparser::insert::hc2bdfe4c53d2659e (0x55f410c611de)

12: html5ever::tree_builder::TreeBuilder<Handle,Sink>::insert_element::h483a7708bc71a57c (0x55f410fe59cd)

13: html5ever::tree_builder::TreeBuilder<Handle,Sink>::step::h53264c73d52d73b7 (0x55f410ffaed7)

14: <html5ever::tree_builder::TreeBuilder<Handle,Sink> as html5ever::tokenizer::interface::TokenSink>::process_token::h8d8307514ab0ae38 (0x55f410fb0653)

15: _ZN9html5ever9tokenizer21Tokenizer$LT$Sink$GT$13process_token17h75019972a696a3eeE.llvm.7363365923994120909 (0x55f41062d408)

16: html5ever::tokenizer::Tokenizer<Sink>::emit_current_tag::h45460aa41ef12f15 (0x55f41062daf1)

17: html5ever::tokenizer::Tokenizer<Sink>::step::h866e9b63f9f503ff (0x55f41063dcf1)

18: _ZN9html5ever9tokenizer21Tokenizer$LT$Sink$GT$3run17h8ce04a69e91aa671E.llvm.7363365923994120909 (0x55f41063194a)

19: script::dom::servoparser::html::Tokenizer::feed::h9fdf5468e43321e7 (0x55f41065532a)

20: script::dom::servoparser::ServoParser::do_parse_sync::hed9498713ee0e8aa (0x55f410c5cb3e)

21: profile_traits::time::profile::h4e1d5a89a249ef96 (0x55f410e1b2c6)

22: _ZN6script3dom11servoparser11ServoParser10parse_sync17h88e07764d212f834E.llvm.530561247764639767 (0x55f410c5c72f)

23: <script::dom::servoparser::ParserContext as net_traits::FetchResponseListener>::process_response_chunk::h61914a25d0137f92 (0x55f410c60885)

24: script::script_thread::ScriptThread::handle_msg_from_constellation::h600baf3383c41b0e (0x55f410caa068)

25: _ZN6script13script_thread12ScriptThread11handle_msgs17haf4ee7b3baaa2b69E.llvm.530561247764639767 (0x55f410ca488d)

26: profile_traits::mem::ProfilerChan::run_with_memory_reporting::hce1ba89d5eb53e5e (0x55f410e1a1e7)

27: std::sys_common::backtrace::__rust_begin_short_backtrace::hc93943e85aa6fa26 (0x55f411198e52)

28: _ZN3std9panicking3try7do_call17hb93b842dfb1f8343E.llvm.11073267061158468562 (0x55f411245103)

29: __rust_maybe_catch_panic (0x55f413339db9)

at src/libpanic_unwind/lib.rs:82

30: core::ops::function::FnOnce::call_once{{vtable.shim}}::h7217876d16e085d4 (0x55f410a082b2)

31: <alloc::boxed::Box<F> as core::ops::function::FnOnce<A>>::call_once::h10faab75e4737451 (0x55f41331e8ee)

at /rustc/273f42b5964c29dda2c5a349dd4655529767b07f/src/liballoc/boxed.rs:766

32: <alloc::boxed::Box<F> as core::ops::function::FnOnce<A>>::call_once::h1aad66e3be56d28c (0x55f4133390df)

at /rustc/273f42b5964c29dda2c5a349dd4655529767b07f/src/liballoc/boxed.rs:766

std::sys_common::thread::start_thread::h9a8131af389e9a10

at src/libstd/sys_common/thread.rs:13

std::sys::unix::thread::Thread::new::thread_start::h5c5575a5d923977b

at src/libstd/sys/unix/thread.rs:79

33: start_thread (0x7fab3e4cc6b9)

34: clone (0x7fab3cd6841c)

35: <unknown> (0x0)

[2019-08-01T04:24:22Z ERROR servo] called `Result::unwrap()` on an `Err` value: Os { code: 24, kind: Other, message: "Too many open files" }

Pipeline script content process shutdown chan: Os { code: 24, kind: Other, message: "Too many open files" } (thread Constellation, at src/libcore/result.rs:1051)

stack backtrace:

0: servo::main::{{closure}}::h1a74bd812d203c72 (0x55f41009633f)

1: std::panicking::rust_panic_with_hook::h0529069ab88f357a (0x55f413330105)

at src/libstd/panicking.rs:481

2: std::panicking::continue_panic_fmt::h6a820a3cd2914e74 (0x55f41332fba1)

at src/libstd/panicking.rs:384

3: rust_begin_unwind (0x55f41332fa85)

at src/libstd/panicking.rs:311

4: core::panicking::panic_fmt::he00cfaca5555542a (0x55f4133527ec)

at src/libcore/panicking.rs:85

5: core::result::unwrap_failed::h4239b9d80132a0db (0x55f4133528e6)

at src/libcore/result.rs:1051

6: constellation::pipeline::Pipeline::spawn::hc4537e426638cac3 (0x55f4101814af)

7: constellation::constellation::Constellation<Message,LTF,STF>::new_pipeline::h03e845e5b2cd3635 (0x55f410196bdf)

8: constellation::constellation::Constellation<Message,LTF,STF>::handle_panic::h9c59071776586bee (0x55f41019574b)

9: constellation::constellation::Constellation<Message,LTF,STF>::handle_log_entry::hff41c6262b0a65ca (0x55f410198de1)

10: constellation::constellation::Constellation<Message,LTF,STF>::handle_request_from_compositor::he6733721600a19c9 (0x55f4101ae38d)

11: constellation::constellation::Constellation<Message,LTF,STF>::run::hc89c0bcdfed723c1 (0x55f4101b64d9)

12: std::sys_common::backtrace::__rust_begin_short_backtrace::he8170c3b0c1833bc (0x55f41020014f)

13: _ZN3std9panicking3try7do_call17h6580004dd9fdb22cE.llvm.4941401487006519239 (0x55f4100db513)

14: __rust_maybe_catch_panic (0x55f413339db9)

at src/libpanic_unwind/lib.rs:82

15: core::ops::function::FnOnce::call_once{{vtable.shim}}::hd78ccb91aec8cf3a (0x55f4100efe12)

16: <alloc::boxed::Box<F> as core::ops::function::FnOnce<A>>::call_once::h10faab75e4737451 (0x55f41331e8ee)

at /rustc/273f42b5964c29dda2c5a349dd4655529767b07f/src/liballoc/boxed.rs:766

17: <alloc::boxed::Box<F> as core::ops::function::FnOnce<A>>::call_once::h1aad66e3be56d28c (0x55f4133390df)

at /rustc/273f42b5964c29dda2c5a349dd4655529767b07f/src/liballoc/boxed.rs:766

std::sys_common::thread::start_thread::h9a8131af389e9a10

at src/libstd/sys_common/thread.rs:13

std::sys::unix::thread::Thread::new::thread_start::h5c5575a5d923977b

at src/libstd/sys/unix/thread.rs:79

18: start_thread (0x7fab3e4cc6b9)

19: clone (0x7fab3cd6841c)

20: <unknown> (0x0)

[2019-08-01T04:24:23Z ERROR servo] Pipeline script content process shutdown chan: Os { code: 24, kind: Other, message: "Too many open files" }

called `Result::unwrap()` on an `Err` value: RecvError (thread LayoutThread PipelineId { namespace_id: PipelineNamespaceId(1), index: PipelineIndex(695) }, at src/libcore/result.rs:1051)

stack backtrace:

0: servo::main::{{closure}}::h1a74bd812d203c72 (0x55f41009633f)

1: std::panicking::rust_panic_with_hook::h0529069ab88f357a (0x55f413330105)

at src/libstd/panicking.rs:481

2: std::panicking::continue_panic_fmt::h6a820a3cd2914e74 (0x55f41332fba1)

at src/libstd/panicking.rs:384

3: rust_begin_unwind (0x55f41332fa85)

at src/libstd/panicking.rs:311

4: core::panicking::panic_fmt::he00cfaca5555542a (0x55f4133527ec)

at src/libcore/panicking.rs:85

5: core::result::unwrap_failed::h4239b9d80132a0db (0x55f4133528e6)

at src/libcore/result.rs:1051

6: layout_thread::LayoutThread::start::hb675360933e398d4 (0x55f41047e063)

7: profile_traits::mem::ProfilerChan::run_with_memory_reporting::h8a534464fbe2083e (0x55f4104f3d23)

8: std::sys_common::backtrace::__rust_begin_short_backtrace::hab512255cc628b7e (0x55f4105a9144)

9: _ZN3std9panicking3try7do_call17h15789f162d15d069E.llvm.197603739779113467 (0x55f410513fd3)

10: __rust_maybe_catch_panic (0x55f413339db9)

at src/libpanic_unwind/lib.rs:82

11: core::ops::function::FnOnce::call_once{{vtable.shim}}::h1b24f55261127c94 (0x55f41053f242)

12: <alloc::boxed::Box<F> as core::ops::function::FnOnce<A>>::call_once::h10faab75e4737451 (0x55f41331e8ee)

at /rustc/273f42b5964c29dda2c5a349dd4655529767b07f/src/liballoc/boxed.rs:766

13: <alloc::boxed::Box<F> as core::ops::function::FnOnce<A>>::call_once::h1aad66e3be56d28c (0x55f4133390df)

at /rustc/273f42b5964c29dda2c5a349dd4655529767b07f/src/liballoc/boxed.rs:766

std::sys_common::thread::start_thread::h9a8131af389e9a10

at src/libstd/sys_common/thread.rs:13

std::sys::unix::thread::Thread::new::thread_start::h5c5575a5d923977b

at src/libstd/sys/unix/thread.rs:79

14: start_thread (0x7fab3e4cc6b9)

15: clone (0x7fab3cd6841c)

16: <unknown> (0x0)

[2019-08-01T04:24:23Z ERROR servo] called `Result::unwrap()` on an `Err` value: RecvError

called `Option::unwrap()` on a `None` value (thread ScriptThread PipelineId { namespace_id: PipelineNamespaceId(1), index: PipelineIndex(2) }, at src/libcore/option.rs:378)

stack backtrace:

0: servo::main::{{closure}}::h1a74bd812d203c72 (0x55f41009633f)

1: std::panicking::rust_panic_with_hook::h0529069ab88f357a (0x55f413330105)

at src/libstd/panicking.rs:481

2: std::panicking::continue_panic_fmt::h6a820a3cd2914e74 (0x55f41332fba1)

at src/libstd/panicking.rs:384

3: rust_begin_unwind (0x55f41332fa85)

at src/libstd/panicking.rs:311

4: core::panicking::panic_fmt::he00cfaca5555542a (0x55f4133527ec)

at src/libcore/panicking.rs:85

5: core::panicking::panic::h73f4a74a29ff704a (0x55f41335272b)

at src/libcore/panicking.rs:49

6: <script::dom::window::Window as script::dom::bindings::codegen::Bindings::WindowBinding::WindowBinding::WindowMethods>::Frames::h70e9762199220474 (0x55f410ab3346)

7: <script::dom::htmlmediaelement::HTMLMediaElement as core::ops::drop::Drop>::drop::h82f851c26fc8d1fe (0x55f4107912f6)

8: _ZN4core3ptr18real_drop_in_place17hb15edba9f2627c0eE.llvm.9711593096204109679 (0x55f410a27005)

9: _ZN3std9panicking3try7do_call17hf7bcf8d4fe880bf2E.llvm.11073267061158468562 (0x55f411258803)

10: __rust_maybe_catch_panic (0x55f413339db9)

at src/libpanic_unwind/lib.rs:82

11: script::dom::bindings::codegen::Bindings::HTMLAudioElementBinding::HTMLAudioElementBinding::_finalize::h510de5481af6c7d4 (0x55f410e9bcc0)

12: _ZNK2js5Class10doFinalizeEPNS_6FreeOpEP8JSObject (0x55f411880a25)

at /data/joelm/personal/UTA/dissertation/servo/servo-20190724.git/target/release/build/mozjs_sys-0c8fde13422c462f/out/dist/include/js/Class.h:872

_ZN8JSObject8finalizeEPN2js6FreeOpE

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/b2f8393/mozjs/js/src/vm/JSObject-inl.h:83

_ZN2js2gc5Arena8finalizeI8JSObjectEEmPNS_6FreeOpENS0_9AllocKindEm

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/b2f8393/mozjs/js/src/gc/GC.cpp:591

13: _ZL19FinalizeTypedArenasI8JSObjectEbPN2js6FreeOpEPPNS1_2gc5ArenaERNS4_15SortedArenaListENS4_9AllocKindERNS1_11SliceBudgetENS4_10ArenaLists14KeepArenasEnumE (0x55f411880872)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/b2f8393/mozjs/js/src/gc/GC.cpp:651

14: _ZL14FinalizeArenasPN2js6FreeOpEPPNS_2gc5ArenaERNS2_15SortedArenaListENS2_9AllocKindERNS_11SliceBudgetENS2_10ArenaLists14KeepArenasEnumE (0x55f411861c3b)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/b2f8393/mozjs/js/src/gc/GC.cpp:683

15: _ZN2js2gc10ArenaLists18foregroundFinalizeEPNS_6FreeOpENS0_9AllocKindERNS_11SliceBudgetERNS0_15SortedArenaListE (0x55f41186e169)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/b2f8393/mozjs/js/src/gc/GC.cpp:5820

16: _ZN2js2gc9GCRuntime17finalizeAllocKindEPNS_6FreeOpERNS_11SliceBudgetEPN2JS4ZoneENS0_9AllocKindE (0x55f41186f0fa)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/b2f8393/mozjs/js/src/gc/GC.cpp:6110

17: _ZN11sweepaction18SweepActionForEachI13ContainerIterIN7mozilla7EnumSetIN2js2gc9AllocKindEjEEES7_JPNS5_9GCRuntimeEPNS4_6FreeOpERNS4_11SliceBudgetEPN2JS4ZoneEEE3runESA_SC_SE_SH_ (0x55f4118891ab)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/b2f8393/mozjs/js/src/gc/GC.cpp:6327

18: _ZN11sweepaction19SweepActionSequenceIJPN2js2gc9GCRuntimeEPNS1_6FreeOpERNS1_11SliceBudgetEPN2JS4ZoneEEE3runES4_S6_S8_SB_ (0x55f4118893d4)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/b2f8393/mozjs/js/src/gc/GC.cpp:6296

19: _ZN11sweepaction18SweepActionForEachIN2js2gc19SweepGroupZonesIterEP9JSRuntimeJPNS2_9GCRuntimeEPNS1_6FreeOpERNS1_11SliceBudgetEEE3runES7_S9_SB_ (0x55f4118898b1)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/b2f8393/mozjs/js/src/gc/GC.cpp:6327

20: _ZN11sweepaction19SweepActionSequenceIJPN2js2gc9GCRuntimeEPNS1_6FreeOpERNS1_11SliceBudgetEEE3runES4_S6_S8_ (0x55f411889af1)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/b2f8393/mozjs/js/src/gc/GC.cpp:6296

21: _ZN11sweepaction20SweepActionRepeatForIN2js2gc15SweepGroupsIterEP9JSRuntimeJPNS2_9GCRuntimeEPNS1_6FreeOpERNS1_11SliceBudgetEEE3runES7_S9_SB_ (0x55f411889fc5)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/b2f8393/mozjs/js/src/gc/GC.cpp:6356

22: _ZN2js2gc9GCRuntime19performSweepActionsERNS_11SliceBudgetE (0x55f41186f5dd)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/b2f8393/mozjs/js/src/gc/GC.cpp:6528

23: _ZN2js2gc9GCRuntime16incrementalSliceERNS_11SliceBudgetEN2JS8GCReasonERNS0_13AutoGCSessionE (0x55f411871550)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/b2f8393/mozjs/js/src/gc/GC.cpp:7049

24: _ZN2js2gc9GCRuntime7gcCycleEbNS_11SliceBudgetEN2JS8GCReasonE (0x55f4118727d8)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/b2f8393/mozjs/js/src/gc/GC.cpp:7398

25: _ZN2js2gc9GCRuntime7collectEbNS_11SliceBudgetEN2JS8GCReasonE (0x55f41187335f)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/b2f8393/mozjs/js/src/gc/GC.cpp:7569

26: _ZN2js2gc9GCRuntime2gcE18JSGCInvocationKindN2JS8GCReasonE (0x55f41185640c)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/b2f8393/mozjs/js/src/gc/GC.cpp:7657

27: _ZN9JSRuntime14destroyRuntimeEv (0x55f41162eaa3)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/b2f8393/mozjs/js/src/vm/Runtime.cpp:284

28: _ZN2js14DestroyContextEP9JSContext (0x55f4115c25d1)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/b2f8393/mozjs/js/src/vm/JSContext.cpp:197

29: <mozjs::rust::Runtime as core::ops::drop::Drop>::drop::hed132fc3d12a947c (0x55f4114bfe75)

30: _ZN4core3ptr18real_drop_in_place17h22626fd093207c2fE.llvm.9711593096204109679 (0x55f410a0f6dc)

31: <alloc::rc::Rc<T> as core::ops::drop::Drop>::drop::h025c8fd65330ce43 (0x55f410a3c0e9)

32: core::ptr::real_drop_in_place::hfb055ca4c92ce1d8 (0x55f4111a72b4)

33: std::sys_common::backtrace::__rust_begin_short_backtrace::hc93943e85aa6fa26 (0x55f41119907d)

34: _ZN3std9panicking3try7do_call17hb93b842dfb1f8343E.llvm.11073267061158468562 (0x55f411245103)

35: __rust_maybe_catch_panic (0x55f413339db9)

at src/libpanic_unwind/lib.rs:82

36: core::ops::function::FnOnce::call_once{{vtable.shim}}::h7217876d16e085d4 (0x55f410a082b2)

37: <alloc::boxed::Box<F> as core::ops::function::FnOnce<A>>::call_once::h10faab75e4737451 (0x55f41331e8ee)

at /rustc/273f42b5964c29dda2c5a349dd4655529767b07f/src/liballoc/boxed.rs:766

38: <alloc::boxed::Box<F> as core::ops::function::FnOnce<A>>::call_once::h1aad66e3be56d28c (0x55f4133390df)

at /rustc/273f42b5964c29dda2c5a349dd4655529767b07f/src/liballoc/boxed.rs:766

std::sys_common::thread::start_thread::h9a8131af389e9a10

at src/libstd/sys_common/thread.rs:13

std::sys::unix::thread::Thread::new::thread_start::h5c5575a5d923977b

at src/libstd/sys/unix/thread.rs:79

39: start_thread (0x7fab3e4cc6b9)

40: clone (0x7fab3cd6841c)

41: <unknown> (0x0)

[2019-08-01T04:24:23Z ERROR servo] called `Option::unwrap()` on a `None` value

thread panicked while panicking. aborting.

Servo exited with return value -4


If there's some way to increase the maximum number of file handles on your system, that might help delay it. This suggests that we might be leaking handles somehow, though.
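One way to check whether handles are leaking is to sample the number of open file descriptors of the Servo process while the test run progresses. The following is a minimal sketch, assuming a Linux system where `/proc/<pid>/fd` is readable; the function name `count_open_fds` is made up for illustration and is not part of Servo.

```rust
use std::fs;
use std::io;

/// Count the open file descriptors of a process by listing `/proc/<pid>/fd`.
/// Assumption: Linux only, and the caller may read the target's /proc entry.
/// When a process inspects itself, the directory handle used for the listing
/// is included in the count.
pub fn count_open_fds(pid: u32) -> io::Result<usize> {
    let mut count = 0;
    for entry in fs::read_dir(format!("/proc/{}/fd", pid))? {
        entry?; // propagate I/O errors instead of silently skipping entries
        count += 1;
    }
    Ok(count)
}
```

Calling this with the Servo pid every few page loads and logging the result would show whether the count climbs steadily toward the limit reported by `ulimit -n`, which would be consistent with a leak rather than a burst of legitimate usage.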

@jdm This is a single webdriver session and it's just repeatedly loading different pages and then taking a screenshot of each one. So yeah, I suspect a leak somewhere in Servo. Once I get a few more kinks worked out of my testing system, I'm intending to load > 100,000 test cases, so even a 10x longer run before crashing won't help me that much. My goal is to test browser rendering engine differences in the happy path (e.g. valid/safe pages), but so far it's proven to be pretty adept at crashing Servo too :-)

Are there any knobs I can set or files I can provide that would help to track this down if it turns out I can fairly reliably reproduce it?

Yep, happened again after the 710th test case was loaded.

The stack is shorter and seems different, so I'm including it, although I suspect that this is basically just panicking wherever the file exhaustion happens to occur:

servo-20190724.git $ ./mach run --release -z --webdriver=7002 --resolution=400x300

VMware, Inc.

softpipe

3.3 (Core Profile) Mesa 18.3.0-devel

[2019-08-01T05:02:36Z ERROR script::dom::bindings::error] Error at http://127.0.0.1:3000/gen/12072-2-34/3.html:1:2 fields are not currently supported

[2019-08-01T05:02:39Z ERROR script::dom::bindings::error] Error at http://127.0.0.1:3000/gen/12072-2-34/4.html:1:2 fields are not currently supported

[2019-08-01T05:02:42Z ERROR script::dom::bindings::error] Error at http://127.0.0.1:3000/gen/12072-2-34/5.html:1:2 fields are not currently supported

[2019-08-01T05:02:52Z ERROR script::dom::bindings::error] Error at http://127.0.0.1:3000/gen/12072-2-34/8.html:1:2 fields are not currently supported

[2019-08-01T05:02:56Z ERROR script::dom::bindings::error] Error at http://127.0.0.1:3000/gen/12072-2-34/9.html:1:2 fields are not currently supported

[2019-08-01T05:03:08Z ERROR script::dom::bindings::error] Error at http://127.0.0.1:3000/gen/12072-2-34/13.html:1:2 fields are not currently supported

[2019-08-01T05:03:17Z ERROR script::dom::bindings::error] Error at http://127.0.0.1:3000/gen/12072-2-34/16.html:1:2 fields are not currently supported

[2019-08-01T05:03:27Z ERROR script::dom::bindings::error] Error at http://127.0.0.1:3000/gen/12072-2-34/19.html:1:2 fields are not currently supported

[2019-08-01T05:03:36Z ERROR script::dom::bindings::error] Error at http://127.0.0.1:3000/gen/12072-2-34/22.html:1:2 fields are not currently supported

called `Result::unwrap()` on an `Err` value: Os { code: 24, kind: Other, message: "Too many open files" } (thread ScriptThread PipelineId { namespace_id: PipelineNamespaceId(1), index: PipelineIndex(2) }, at src/libcore/result.rs:1051)

stack backtrace:

0: servo::main::{{closure}}::h1a74bd812d203c72 (0x5613fb31d33f)

1: std::panicking::rust_panic_with_hook::h0529069ab88f357a (0x5613fe5b7105)

at src/libstd/panicking.rs:481

2: std::panicking::continue_panic_fmt::h6a820a3cd2914e74 (0x5613fe5b6ba1)

at src/libstd/panicking.rs:384

3: rust_begin_unwind (0x5613fe5b6a85)

at src/libstd/panicking.rs:311

4: core::panicking::panic_fmt::he00cfaca5555542a (0x5613fe5d97ec)

at src/libcore/panicking.rs:85

5: core::result::unwrap_failed::h4239b9d80132a0db (0x5613fe5d98e6)

at src/libcore/result.rs:1051

6: script::fetch::FetchCanceller::initialize::hc2be3bff8c1dfac0 (0x5613fbf1fa95)

7: script::document_loader::DocumentLoader::fetch_async_background::hc49c09d7e9f54ad4 (0x5613fc435a2d)

8: script::dom::document::Document::fetch_async::h82c1d743aa88b6a2 (0x5613fbeaebf4)

9: script::stylesheet_loader::StylesheetLoader::load::he3c6d2f4f6b2d86d (0x5613fbf503ac)

10: script::dom::htmllinkelement::HTMLLinkElement::handle_stylesheet_url::h08d096fd325f038f (0x5613fc027119)

11: <script::dom::htmllinkelement::HTMLLinkElement as script::dom::virtualmethods::VirtualMethods>::bind_to_tree::h4b49aadc206a8bb4 (0x5613fc0269d5)

12: script::dom::node::Node::insert::h5f83017d8cd2b0c4 (0x5613fc0e6494)

13: script::dom::node::Node::pre_insert::hc92be38a4a5c65ff (0x5613fc0e57d2)

14: script::dom::servoparser::insert::hc2bdfe4c53d2659e (0x5613fbee81de)

15: html5ever::tree_builder::TreeBuilder<Handle,Sink>::insert_element::h483a7708bc71a57c (0x5613fc26c9cd)

16: html5ever::tree_builder::TreeBuilder<Handle,Sink>::step::h53264c73d52d73b7 (0x5613fc281ed7)

17: <html5ever::tree_builder::TreeBuilder<Handle,Sink> as html5ever::tokenizer::interface::TokenSink>::process_token::h8d8307514ab0ae38 (0x5613fc237653)

18: _ZN9html5ever9tokenizer21Tokenizer$LT$Sink$GT$13process_token17h75019972a696a3eeE.llvm.7363365923994120909 (0x5613fb8b4408)

19: html5ever::tokenizer::Tokenizer<Sink>::emit_current_tag::h45460aa41ef12f15 (0x5613fb8b4af1)

20: html5ever::tokenizer::Tokenizer<Sink>::step::h866e9b63f9f503ff (0x5613fb8c4cf1)

21: _ZN9html5ever9tokenizer21Tokenizer$LT$Sink$GT$3run17h8ce04a69e91aa671E.llvm.7363365923994120909 (0x5613fb8b894a)

22: script::dom::servoparser::html::Tokenizer::feed::h9fdf5468e43321e7 (0x5613fb8dc32a)

23: script::dom::servoparser::ServoParser::do_parse_sync::hed9498713ee0e8aa (0x5613fbee3b3e)

24: profile_traits::time::profile::h4e1d5a89a249ef96 (0x5613fc0a22c6)

25: _ZN6script3dom11servoparser11ServoParser10parse_sync17h88e07764d212f834E.llvm.530561247764639767 (0x5613fbee372f)

26: <script::dom::servoparser::ParserContext as net_traits::FetchResponseListener>::process_response_chunk::h61914a25d0137f92 (0x5613fbee7885)

27: script::script_thread::ScriptThread::handle_msg_from_constellation::h600baf3383c41b0e (0x5613fbf31068)

28: _ZN6script13script_thread12ScriptThread11handle_msgs17haf4ee7b3baaa2b69E.llvm.530561247764639767 (0x5613fbf2b88d)

29: profile_traits::mem::ProfilerChan::run_with_memory_reporting::hce1ba89d5eb53e5e (0x5613fc0a11e7)

30: std::sys_common::backtrace::__rust_begin_short_backtrace::hc93943e85aa6fa26 (0x5613fc41fe52)

31: _ZN3std9panicking3try7do_call17hb93b842dfb1f8343E.llvm.11073267061158468562 (0x5613fc4cc103)

32: __rust_maybe_catch_panic (0x5613fe5c0db9)

at src/libpanic_unwind/lib.rs:82

33: core::ops::function::FnOnce::call_once{{vtable.shim}}::h7217876d16e085d4 (0x5613fbc8f2b2)

34: <alloc::boxed::Box<F> as core::ops::function::FnOnce<A>>::call_once::h10faab75e4737451 (0x5613fe5a58ee)

at /rustc/273f42b5964c29dda2c5a349dd4655529767b07f/src/liballoc/boxed.rs:766

35: <alloc::boxed::Box<F> as core::ops::function::FnOnce<A>>::call_once::h1aad66e3be56d28c (0x5613fe5c00df)

at /rustc/273f42b5964c29dda2c5a349dd4655529767b07f/src/liballoc/boxed.rs:766

std::sys_common::thread::start_thread::h9a8131af389e9a10

at src/libstd/sys_common/thread.rs:13

std::sys::unix::thread::Thread::new::thread_start::h5c5575a5d923977b

at src/libstd/sys/unix/thread.rs:79

36: start_thread (0x7f90ef5906b9)

37: clone (0x7f90ede2c41c)

38: <unknown> (0x0)

[2019-08-01T05:43:09Z ERROR servo] called `Result::unwrap()` on an `Err` value: Os { code: 24, kind: Other, message: "Too many open files" }

Pipeline script content process shutdown chan: Os { code: 24, kind: Other, message: "Too many open files" } (thread Constellation, at src/libcore/result.rs:1051)

stack backtrace:

0: servo::main::{{closure}}::h1a74bd812d203c72 (0x5613fb31d33f)

1: std::panicking::rust_panic_with_hook::h0529069ab88f357a (0x5613fe5b7105)

at src/libstd/panicking.rs:481

2: std::panicking::continue_panic_fmt::h6a820a3cd2914e74 (0x5613fe5b6ba1)

at src/libstd/panicking.rs:384

3: rust_begin_unwind (0x5613fe5b6a85)

at src/libstd/panicking.rs:311

4: core::panicking::panic_fmt::he00cfaca5555542a (0x5613fe5d97ec)

at src/libcore/panicking.rs:85

5: core::result::unwrap_failed::h4239b9d80132a0db (0x5613fe5d98e6)

at src/libcore/result.rs:1051

6: constellation::pipeline::Pipeline::spawn::hc4537e426638cac3 (0x5613fb4084af)

7: constellation::constellation::Constellation<Message,LTF,STF>::new_pipeline::h03e845e5b2cd3635 (0x5613fb41dbdf)

8: constellation::constellation::Constellation<Message,LTF,STF>::handle_panic::h9c59071776586bee (0x5613fb41c74b)

9: constellation::constellation::Constellation<Message,LTF,STF>::handle_log_entry::hff41c6262b0a65ca (0x5613fb41fde1)

10: constellation::constellation::Constellation<Message,LTF,STF>::handle_request_from_compositor::he6733721600a19c9 (0x5613fb43538d)

11: constellation::constellation::Constellation<Message,LTF,STF>::run::hc89c0bcdfed723c1 (0x5613fb43d4d9)

12: std::sys_common::backtrace::__rust_begin_short_backtrace::he8170c3b0c1833bc (0x5613fb48714f)

13: _ZN3std9panicking3try7do_call17h6580004dd9fdb22cE.llvm.4941401487006519239 (0x5613fb362513)

14: __rust_maybe_catch_panic (0x5613fe5c0db9)

at src/libpanic_unwind/lib.rs:82

15: core::ops::function::FnOnce::call_once{{vtable.shim}}::hd78ccb91aec8cf3a (0x5613fb376e12)

16: <alloc::boxed::Box<F> as core::ops::function::FnOnce<A>>::call_once::h10faab75e4737451 (0x5613fe5a58ee)

at /rustc/273f42b5964c29dda2c5a349dd4655529767b07f/src/liballoc/boxed.rs:766

17: <alloc::boxed::Box<F> as core::ops::function::FnOnce<A>>::call_once::h1aad66e3be56d28c (0x5613fe5c00df)

at /rustc/273f42b5964c29dda2c5a349dd4655529767b07f/src/liballoc/boxed.rs:766

std::sys_common::thread::start_thread::h9a8131af389e9a10

at src/libstd/sys_common/thread.rs:13

std::sys::unix::thread::Thread::new::thread_start::h5c5575a5d923977b

at src/libstd/sys/unix/thread.rs:79

18: start_thread (0x7f90ef5906b9)

19: clone (0x7f90ede2c41c)

20: <unknown> (0x0)

[2019-08-01T05:43:09Z ERROR servo] Pipeline script content process shutdown chan: Os { code: 24, kind: Other, message: "Too many open files" }

called `Result::unwrap()` on an `Err` value: RecvError (thread LayoutThread PipelineId { namespace_id: PipelineNamespaceId(1), index: PipelineIndex(692) }, at src/libcore/result.rs:1051)

stack backtrace:

0: servo::main::{{closure}}::h1a74bd812d203c72 (0x5613fb31d33f)

1: std::panicking::rust_panic_with_hook::h0529069ab88f357a (0x5613fe5b7105)

at src/libstd/panicking.rs:481

2: std::panicking::continue_panic_fmt::h6a820a3cd2914e74 (0x5613fe5b6ba1)

at src/libstd/panicking.rs:384

3: rust_begin_unwind (0x5613fe5b6a85)

at src/libstd/panicking.rs:311

4: core::panicking::panic_fmt::he00cfaca5555542a (0x5613fe5d97ec)

at src/libcore/panicking.rs:85

5: core::result::unwrap_failed::h4239b9d80132a0db (0x5613fe5d98e6)

at src/libcore/result.rs:1051

6: layout_thread::LayoutThread::start::hb675360933e398d4 (0x5613fb705063)

7: profile_traits::mem::ProfilerChan::run_with_memory_reporting::h8a534464fbe2083e (0x5613fb77ad23)

8: std::sys_common::backtrace::__rust_begin_short_backtrace::hab512255cc628b7e (0x5613fb830144)

9: _ZN3std9panicking3try7do_call17h15789f162d15d069E.llvm.197603739779113467 (0x5613fb79afd3)

10: __rust_maybe_catch_panic (0x5613fe5c0db9)

at src/libpanic_unwind/lib.rs:82

11: core::ops::function::FnOnce::call_once{{vtable.shim}}::h1b24f55261127c94 (0x5613fb7c6242)

12: <alloc::boxed::Box<F> as core::ops::function::FnOnce<A>>::call_once::h10faab75e4737451 (0x5613fe5a58ee)

at /rustc/273f42b5964c29dda2c5a349dd4655529767b07f/src/liballoc/boxed.rs:766

13: <alloc::boxed::Box<F> as core::ops::function::FnOnce<A>>::call_once::h1aad66e3be56d28c (0x5613fe5c00df)

at /rustc/273f42b5964c29dda2c5a349dd4655529767b07f/src/liballoc/boxed.rs:766

std::sys_common::thread::start_thread::h9a8131af389e9a10

at src/libstd/sys_common/thread.rs:13

std::sys::unix::thread::Thread::new::thread_start::h5c5575a5d923977b

at src/libstd/sys/unix/thread.rs:79

14: start_thread (0x7f90ef5906b9)

15: clone (0x7f90ede2c41c)

16: <unknown> (0x0)

[2019-08-01T05:43:09Z ERROR servo] called `Result::unwrap()` on an `Err` value: RecvError

called `Result::unwrap()` on an `Err` value: Io(Custom { kind: ConnectionReset, error: "All senders for this socket closed" }) (thread StorageManager, at src/libcore/result.rs:1051)

stack backtrace:

0: servo::main::{{closure}}::h1a74bd812d203c72 (0x5613fb31d33f)

1: std::panicking::rust_panic_with_hook::h0529069ab88f357a (0x5613fe5b7105)

at src/libstd/panicking.rs:481

2: std::panicking::continue_panic_fmt::h6a820a3cd2914e74 (0x5613fe5b6ba1)

at src/libstd/panicking.rs:384

3: rust_begin_unwind (0x5613fe5b6a85)

at src/libstd/panicking.rs:311

4: core::panicking::panic_fmt::he00cfaca5555542a (0x5613fe5d97ec)

at src/libcore/panicking.rs:85

5: core::result::unwrap_failed::h4239b9d80132a0db (0x5613fe5d98e6)

at src/libcore/result.rs:1051

6: net::storage_thread::StorageManager::start::h466bcb1173168185 (0x5613fd32d91c)

7: std::sys_common::backtrace::__rust_begin_short_backtrace::h210ea594b8a67611 (0x5613fd27c7b2)

8: _ZN3std9panicking3try7do_call17h615e22bdb7f28b31E.llvm.2387109977385246948 (0x5613fd2d242b)

9: __rust_maybe_catch_panic (0x5613fe5c0db9)

at src/libpanic_unwind/lib.rs:82

10: core::ops::function::FnOnce::call_once{{vtable.shim}}::he74969e58c7b4f78 (0x5613fd3660bf)

11: <alloc::boxed::Box<F> as core::ops::function::FnOnce<A>>::call_once::h10faab75e4737451 (0x5613fe5a58ee)

at /rustc/273f42b5964c29dda2c5a349dd4655529767b07f/src/liballoc/boxed.rs:766

12: <alloc::boxed::Box<F> as core::ops::function::FnOnce<A>>::call_once::h1aad66e3be56d28c (0x5613fe5c00df)

at /rustc/273f42b5964c29dda2c5a349dd4655529767b07f/src/liballoc/boxed.rs:766

std::sys_common::thread::start_thread::h9a8131af389e9a10

at src/libstd/sys_common/thread.rs:13

std::sys::unix::thread::Thread::new::thread_start::h5c5575a5d923977b

at src/libstd/sys/unix/thread.rs:79

13: start_thread (0x7f90ef5906b9)

14: clone (0x7f90ede2c41c)

15: <unknown> (0x0)

[2019-08-01T05:43:09Z ERROR servo] called `Result::unwrap()` on an `Err` value: Io(Custom { kind: ConnectionReset, error: "All senders for this socket closed" })

Servo exited with return value -2

I think the most useful thing for narrowing down the cause in Servo would be if you can find the smallest set of unique webdriver commands that can reproduce this. In particular, if loading one particular file more times will trigger it, that's going to be easier to debug than loading a lot of different files fewer times.

I suspect it is

@kanaka Could you try again off of https://github.com/servo/servo/pull/23906 ? (Sorry for asking you to rebuild script)

If that is the case, we would have to find a way to not create an ipc channel for each stylesheet loader.

I can also see https://github.com/servo/servo/blob/45af8a34fe6cc6f304f935ababdbc90fb4c50ede/components/constellation/pipeline.rs#L279

So the question is whether this is because there are too many stylesheet loaders creating channels, or if it's more like webdriver is creating too many pipelines in general, and any creation of an ipc-chan will eventually fail.

Would it be acceptable for webdriver to run every pipeline in the same event-loop? That might be a way to naturally serialize webdriver commands and prevent them from flooding the system by creating many parallel event-loops (if that is the problem, of course).

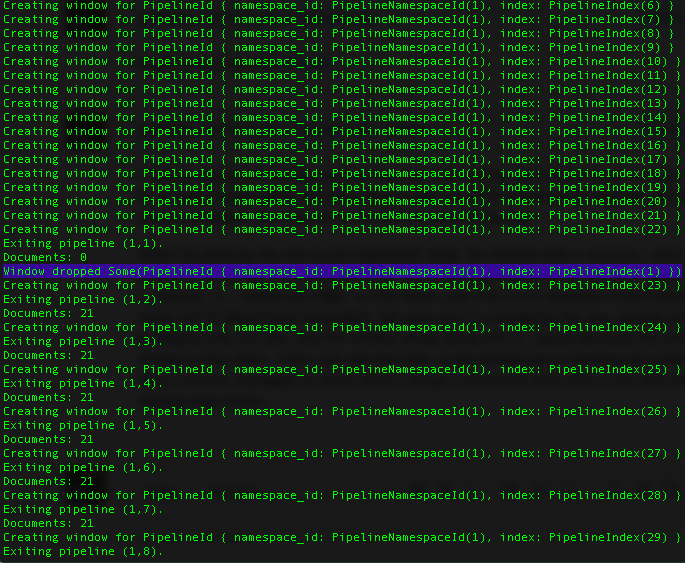

@kanaka actually could you please try running off of this branch https://github.com/servo/servo/pull/23761 (don't bother with the other one)

I was playing around with something the other day and realized we don't close event-loops when they're not managing any documents anymore, and I assume your script closes pages after taking a screenshot (you're not planning on keeping 100k pages open, are you?).

In that case it might be that we're leaking event-loops...

@gterzian I will attempt to build and test with #23761. I'm not issuing an explicit close, just doing RemoteWebDriver.get calls to load a new page in the same window. My understanding was that a close command would close the browser if there is no other window loaded. I'm not creating new windows, so my assumption was that I can just load a new page in the same window, and I don't see issues in other browsers.

@gterzian hmm, we're not closing event loops? That's not good. What's meant to happen is that https://github.com/servo/servo/blob/master/components/constellation/constellation.rs#L3797 trim_history evicts old pipelines, and when there's no pipeline keeping an event loop alive it gets shut down by the Drop impl at https://github.com/servo/servo/blob/196c511d5ebf81b3fe202c5e0c5c1972a6408ab7/components/constellation/event_loop.rs#L21

Is webdriver not trimming the session history for some reason?

@gterzian with servo built from #23761 I still got a crash somewhere around 800 test cases. This one has some extra messages at the front end (most of which I've elided and I've also elided all but the first trace):

servo-20190724.git $ ./mach run --release -z --webdriver=7002 --resolution=400x300

Exiting pipeline (1,1).

shutting down layout for page (1,1)

Event loop empty

Exiting pipeline (1,2).

shutting down layout for page (1,2)

Exiting pipeline (1,3).

shutting down layout for page (1,3)

Exiting pipeline (1,4).

shutting down layout for page (1,4)

Exiting pipeline (1,5).

shutting down layout for page (1,5)

Exiting pipeline (1,6).

shutting down layout for page (1,6)

Exiting pipeline (1,7).

shutting down layout for page (1,7)

Exiting pipeline (1,8).

shutting down layout for page (1,8)

Exiting pipeline (1,9).

shutting down layout for page (1,9)

Exiting pipeline (1,10).

shutting down layout for page (1,10)

Exiting pipeline (1,11).

shutting down layout for page (1,11)

Exiting pipeline (1,12).

shutting down layout for page (1,12)

Exiting pipeline (1,13).

shutting down layout for page (1,13)

Exiting pipeline (1,14).

shutting down layout for page (1,14)

Exiting pipeline (1,15).

shutting down layout for page (1,15)

Exiting pipeline (1,16).

shutting down layout for page (1,16)

Exiting pipeline (1,17).

shutting down layout for page (1,17)

Exiting pipeline (1,18).

shutting down layout for page (1,18)

Exiting pipeline (1,19).

shutting down layout for page (1,19)

Exiting pipeline (1,20).

...

Exiting pipeline (1,446).

shutting down layout for page (1,446)

Exiting pipeline (1,447).

shutting down layout for page (1,447)

Could not stop player Backend("Missing dependency: playbin")

Exiting pipeline (1,448).

shutting down layout for page (1,448)

Exiting pipeline (1,449).

shutting down layout for page (1,449)

Exiting pipeline (1,450).

shutting down layout for page (1,450)

Exiting pipeline (1,451).

shutting down layout for page (1,451)

...

Exiting pipeline (1,705).

shutting down layout for page (1,705)

Exiting pipeline (1,706).

shutting down layout for page (1,706)

Exiting pipeline (1,707).

shutting down layout for page (1,707)

Exiting pipeline (1,708).

shutting down layout for page (1,708)

called `Result::unwrap()` on an `Err` value: Os { code: 24, kind: Other, message: "Too many open files" } (thread ScriptThread PipelineId { namespace_id: PipelineNamespaceId(1), index: PipelineIndex(2) }, at src/libcore/result.rs:1051)

stack backtrace:

0: servo::main::{{closure}}::h8c27ca2bbd15260c (0x5582aa0070af)

1: std::panicking::rust_panic_with_hook::h0529069ab88f357a (0x5582ad21bda5)

at src/libstd/panicking.rs:481

2: std::panicking::continue_panic_fmt::h6a820a3cd2914e74 (0x5582ad21b841)

at src/libstd/panicking.rs:384

3: rust_begin_unwind (0x5582ad21b725)

at src/libstd/panicking.rs:311

4: core::panicking::panic_fmt::he00cfaca5555542a (0x5582ad23e48c)

at src/libcore/panicking.rs:85

5: core::result::unwrap_failed::h4239b9d80132a0db (0x5582ad23e586)

at src/libcore/result.rs:1051

6: script::stylesheet_loader::StylesheetLoader::load::h3dd3ec4cbe06d8f4 (0x5582aac30664)

7: script::dom::htmllinkelement::HTMLLinkElement::handle_stylesheet_url::ha79b800c69addf04 (0x5582aad25714)

8: <script::dom::htmllinkelement::HTMLLinkElement as script::dom::virtualmethods::VirtualMethods>::bind_to_tree::hcf3684abf360a4d0 (0x5582aad24fa5)

9: script::dom::node::Node::insert::h671b04c4cfccf944 (0x5582aadd2464)

10: script::dom::node::Node::pre_insert::hcd7ab729106469bb (0x5582aadd17a2)

11: script::dom::servoparser::insert::h023231dd0bb029f6 (0x5582aaa43e76)

12: html5ever::tree_builder::TreeBuilder<Handle,Sink>::insert_element::hca0ba5a42a6a47a6 (0x5582aaf83abf)

13: html5ever::tree_builder::TreeBuilder<Handle,Sink>::step::h6293e7deefac81b3 (0x5582aafaf4da)

14: <html5ever::tree_builder::TreeBuilder<Handle,Sink> as html5ever::tokenizer::interface::TokenSink>::process_token::hab5b17dae1a3ba13 (0x5582aaf400f3)

15: _ZN9html5ever9tokenizer21Tokenizer$LT$Sink$GT$13process_token17h22febec6a83030edE.llvm.8593906748013008867 (0x5582aa5b4f48)

16: html5ever::tokenizer::Tokenizer<Sink>::emit_current_tag::h94be9c6ea3b74d2c (0x5582aa5b59d1)

17: html5ever::tokenizer::Tokenizer<Sink>::step::ha584afa55150868b (0x5582aa5be263)

18: _ZN9html5ever9tokenizer21Tokenizer$LT$Sink$GT$3run17hb2242a222c9c977bE.llvm.8593906748013008867 (0x5582aa5b948a)

19: script::dom::servoparser::html::Tokenizer::feed::h35b993cc2c817cb6 (0x5582aa5f70ea)

20: script::dom::servoparser::ServoParser::do_parse_sync::h2df0c4cbffab8da1 (0x5582aaa3e88c)

21: profile_traits::time::profile::h7d5f5cd3fcac1ddd (0x5582aad8dc2f)

22: _ZN6script3dom11servoparser11ServoParser10parse_sync17h635a7466468263c3E.llvm.2781459336530327564 (0x5582aaa3e3cf)

23: <script::dom::servoparser::ParserContext as net_traits::FetchResponseListener>::process_response_chunk::h5501101f641181cb (0x5582aaa42e7a)

24: script::script_thread::ScriptThread::handle_msg_from_constellation::hf6b2b6295212cd4c (0x5582aac11758)

25: _ZN6script13script_thread12ScriptThread11handle_msgs17hd3872b21368d5c05E.llvm.1792426946693623503 (0x5582aac0ba91)

26: profile_traits::mem::ProfilerChan::run_with_memory_reporting::h62e13d53efdc9cd6 (0x5582aad8bf17)

27: std::sys_common::backtrace::__rust_begin_short_backtrace::h9104709f54b740ee (0x5582ab0c4862)

28: _ZN3std9panicking3try7do_call17h491abb1e9f5ae8a8E.llvm.4875581894382309184 (0x5582ab1d3c95)

29: __rust_maybe_catch_panic (0x5582ad225a59)

at src/libpanic_unwind/lib.rs:82

30: core::ops::function::FnOnce::call_once{{vtable.shim}}::hedfa976ea06cd78a (0x5582aa6e5175)

31: <alloc::boxed::Box<F> as core::ops::function::FnOnce<A>>::call_once::h10faab75e4737451 (0x5582ad20a58e)

at /rustc/273f42b5964c29dda2c5a349dd4655529767b07f/src/liballoc/boxed.rs:766

32: <alloc::boxed::Box<F> as core::ops::function::FnOnce<A>>::call_once::h1aad66e3be56d28c (0x5582ad224d7f)

at /rustc/273f42b5964c29dda2c5a349dd4655529767b07f/src/liballoc/boxed.rs:766

std::sys_common::thread::start_thread::h9a8131af389e9a10

at src/libstd/sys_common/thread.rs:13

std::sys::unix::thread::Thread::new::thread_start::h5c5575a5d923977b

at src/libstd/sys/unix/thread.rs:79

33: start_thread (0x7fcfcee776b9)

34: clone (0x7fcfcd71341c)

35: <unknown> (0x0)

...

> @gterzian hmm, we're not closing event loops? That's not good. What's meant to happen is that https://github.com/servo/servo/blob/master/components/constellation/constellation.rs#L3797 trim_history evicts old pipelines, and when there's no pipeline keeping an event loop alive it gets shut down by the Drop impl at

Oh yeah, you're right, the constellation only keeps a Weak<EventLoop>, which should be dropped once every pipeline in a given script-thread has been exited (since they keep an Rc<EventLoop>, and those are dropped when history is trimmed).
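To make the ownership argument concrete, here is a minimal std-only sketch of the pattern described above. The `EventLoop` and `Pipeline` types are hypothetical stand-ins, not Servo's actual types: a registry holds only a `Weak`, each pipeline holds an `Rc`, and `Drop` runs once the last pipeline is trimmed.

```rust
use std::cell::Cell;
use std::rc::{Rc, Weak};

/// Hypothetical stand-in for an event loop; a real one would tell its
/// script thread to exit when dropped.
struct EventLoop {
    shut_down: Rc<Cell<bool>>,
}

impl Drop for EventLoop {
    fn drop(&mut self) {
        // Runs only when the last strong (Rc) reference goes away.
        self.shut_down.set(true);
    }
}

/// Hypothetical stand-in for a pipeline that keeps its event loop alive.
struct Pipeline {
    _event_loop: Rc<EventLoop>,
}

/// Returns (alive after trimming one pipeline, shut down after trimming all).
fn trim_demo() -> (bool, bool) {
    let shut_down = Rc::new(Cell::new(false));
    let event_loop = Rc::new(EventLoop { shut_down: shut_down.clone() });

    // The registry plays the constellation's role: weak reference only.
    let registry: Weak<EventLoop> = Rc::downgrade(&event_loop);

    let mut history = vec![
        Pipeline { _event_loop: event_loop.clone() },
        Pipeline { _event_loop: event_loop },
    ];

    // Trim one entry: one strong reference is still left.
    drop(history.remove(0));
    let alive_after_partial_trim = registry.upgrade().is_some();

    // Trim the rest: Drop runs and the weak reference can no longer upgrade.
    history.clear();
    let shut_down_after_full_trim = registry.upgrade().is_none() && shut_down.get();

    (alive_after_partial_trim, shut_down_after_full_trim)
}

fn main() {
    println!("{:?}", trim_demo());
}
```

If something outside the history still held an `Rc` in this model, the event loop would never be dropped, which is the kind of leak being ruled out here.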

So this might be StylesheetLoader::load creating an ipc-channel, which gives "Too many open files" when a page loads lots of stylesheets?

> Is webdriver not trimming the session history for some reason?

Could be, however it seems webdriver just ends up using the "normal pipeline flow", so unless there is some webdriver-specific logic that would prevent it, I think it should trim.

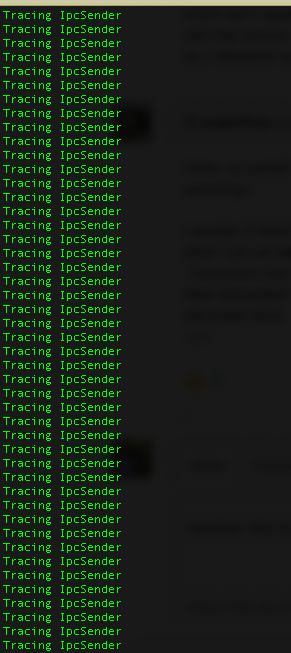

Maybe in general we shouldn't be creating an ipc-channel for each route, instead hiding the routing behind some interface that would clone a sender and route based on some sort of Id, re-using the same receiver?

I think it's the call to socketpair on each ipc::channel() that is causing this.

Something like:

GlobalScope {

fn add_ipc_callback<T>(&self, callback: Box<Fn(T)>) -> IPCHandle<T>;

}

struct IPCHandle<T> {

callback_id: CallbackId, // based on the pipeline namespace

sender: IpcSender<CallbackMsg<T>>

}

impl<T> IPCHandle<T> {

fn send(&self, msg: T) {

self.sender.send((self.callback_id.clone(), msg));

}

}

struct CallbackMsg<T>((CallbackId, T));

impl IpcRouter {

fn handle_msg(&self, msg: OpaqueIpcMessage) {

let (id, msg) = msg.to().unwrap();

let callback = self.callbacks.borrow_mut().remove(&id).unwrap();

callback(msg);

}

fn add_callback(&self, callback: Box<Fn(T)>) -> IPCHandle<T> {

let callback_id = CallbackId::new();

self.callbacks.borrow_mut().insert(callback_id.clone(), callback);

let sender = self.opaque_sender.clone().to();

IPCHandle {

callback_id,

sender,

}

}

}

Not completely sure about the generics sketched above, but I think one could make it work.

then for each global in a script-process, upon init, do:

let (router_sender, router_receiver) = ipc::channel().unwrap();

let ipc_router = IpcRouter::new(router_sender);

ROUTER.add_route(

router_receiver.to_opaque(),

Box::new(move |message| {

ipc_router.handle_msg(message);

}),

);

// Store ipc_router on the global

In practice it would be used like:

impl<'a> StylesheetLoader<'a> {

pub fn load(..) {

let listener = NetworkListener {

context,

task_source,

canceller: Some(canceller),

};

let callback = Box::new(move |message| {

listener.notify_fetch(message);

});

let handle = self.global().add_ipc_callback(callback);

document.fetch_async(LoadType::Stylesheet(url), request, handle);

}

}
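For anyone who wants to play with the idea outside of Servo, here is a rough, runnable model of the routing scheme sketched above. It uses `std::sync::mpsc` in place of ipc-channel, so it only models the id-based dispatch, not the cross-process part. `Router`, `Handle` and `dispatch_pending` are names made up for this sketch. One deliberate difference: the callback stays registered after a message is handled, on the assumption that a listener may receive more than one message.

```rust
use std::cell::RefCell;
use std::collections::HashMap;
use std::rc::Rc;
use std::sync::mpsc::{channel, Receiver, Sender};

type CallbackId = u64;

/// Cheap handle: a cloned sender plus the id of the callback to run.
struct Handle<T> {
    callback_id: CallbackId,
    sender: Sender<(CallbackId, T)>,
}

impl<T> Handle<T> {
    fn send(&self, msg: T) {
        // Ignore the error if the router has already gone away.
        let _ = self.sender.send((self.callback_id, msg));
    }
}

/// Owns the single receiver and dispatches each message by callback id.
struct Router<T> {
    next_id: CallbackId,
    sender: Sender<(CallbackId, T)>,
    receiver: Receiver<(CallbackId, T)>,
    callbacks: HashMap<CallbackId, Box<dyn FnMut(T)>>,
}

impl<T> Router<T> {
    fn new() -> Self {
        let (sender, receiver) = channel();
        Router { next_id: 0, sender, receiver, callbacks: HashMap::new() }
    }

    /// Registers a callback without creating a new channel.
    fn add_callback(&mut self, callback: Box<dyn FnMut(T)>) -> Handle<T> {
        let callback_id = self.next_id;
        self.next_id += 1;
        self.callbacks.insert(callback_id, callback);
        Handle { callback_id, sender: self.sender.clone() }
    }

    /// Runs the matching callback for every message already queued.
    fn dispatch_pending(&mut self) {
        while let Ok((id, msg)) = self.receiver.try_recv() {
            if let Some(callback) = self.callbacks.get_mut(&id) {
                (*callback)(msg);
            }
        }
    }
}

/// Two listeners share one channel; returns what each one saw, in order.
fn demo() -> Vec<String> {
    let log = Rc::new(RefCell::new(Vec::new()));
    let mut router: Router<String> = Router::new();

    let log_a = log.clone();
    let a = router.add_callback(Box::new(move |m: String| {
        log_a.borrow_mut().push(format!("a:{}", m));
    }));
    let log_b = log.clone();
    let b = router.add_callback(Box::new(move |m: String| {
        log_b.borrow_mut().push(format!("b:{}", m));
    }));

    a.send("first".to_string());
    b.send("second".to_string());
    a.send("third".to_string());
    router.dispatch_pending();

    let seen = log.borrow().clone();
    seen
}

fn main() {
    println!("{:?}", demo());
}
```

In this model, adding a listener costs a map entry and a sender clone rather than a new channel.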

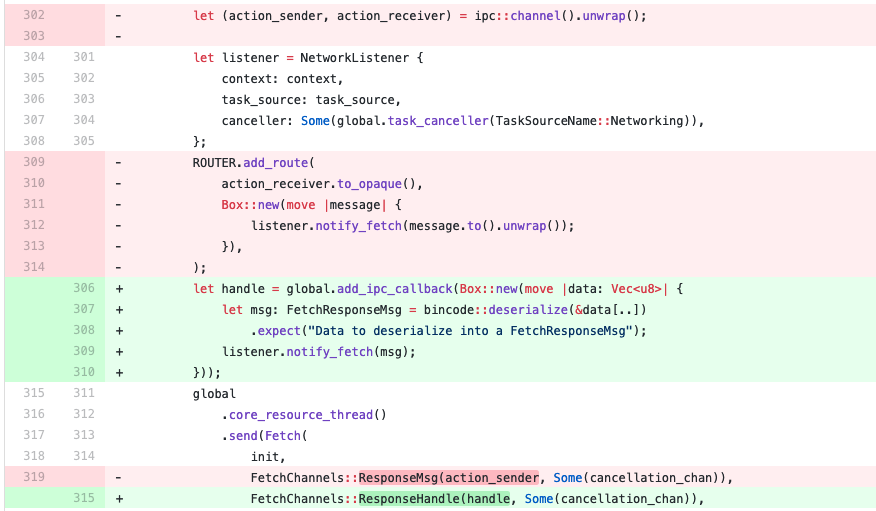

There's a prototype on the way: https://github.com/servo/servo/pull/23909

> I'm not issuing an explicit close, just doing RemoteWebDriver.get calls to load a new page in the same window. My understanding was that a close command would close the browser if there is no other window loaded. I'm not creating new windows, so my assumption was that I can just load a new page in the same window, and I don't see issues in other browsers.

@kanaka

That's right, yes "close" was the wrong wording on my part, and it would indeed close the browser.

You could give it a try with the above mentioned branch, although I have only done some type checking, I haven't actually run it and I wouldn't be surprised if it crashes.

Also, even if this fixes one issue, we might still get the same crash for other reasons with another part of your test-suite, since the problem of creating an ipc-channel (which creates a pair of sockets) for each operation is prevalent across the script component.

Besides the current problem that seems to happen when lots of stylesheets are loaded, we could get it with images, websockets, and a few other DOM objects. But if the proposed approach works, it shouldn't be hard to switch all of them over.

However it would be interesting to see if with this change we don't get a crash anymore with script::stylesheet_loader::StylesheetLoader::load.
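To put a number on the "pair of sockets per channel" cost mentioned above, here is a small Unix-only sketch. It uses std's `UnixStream::pair()` as a stand-in for channel creation (it is not ipc-channel code), and `descriptors_held` is a name made up for this example. Every live pair occupies two descriptors, so the per-process limit is reached after roughly half as many live channels as the limit allows descriptors.

```rust
use std::collections::HashSet;
use std::io;
use std::os::unix::io::AsRawFd;
use std::os::unix::net::UnixStream;

/// Opens `n` socket pairs, keeps them all alive, and returns how many
/// distinct file descriptors they occupy.
fn descriptors_held(n: usize) -> io::Result<usize> {
    let mut pairs = Vec::with_capacity(n);
    let mut fds = HashSet::new();
    for _ in 0..n {
        // This is the call that fails with "Too many open files" once the
        // per-process descriptor limit is reached.
        let (a, b) = UnixStream::pair()?;
        fds.insert(a.as_raw_fd());
        fds.insert(b.as_raw_fd());
        // Keeping the pair alive keeps both descriptors open.
        pairs.push((a, b));
    }
    Ok(fds.len())
}

fn main() -> io::Result<()> {
    let held = descriptors_held(8)?;
    println!("8 live socket pairs hold {} descriptors", held);
    Ok(())
}
```

Note that the `?` propagates the error to the caller, whereas the traces in this thread come from an `unwrap()` on the same kind of failure.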

Not sure that #23909 will help, because AFAICT a new fd is generated every time an IPC channel is sent, even if it's an IPC channel that's been sent before. @kanaka can you test that PR and see if it fixes your problem?

@gterzian @asajeffrey I will test with #23909 and report my results. I may or may not be able to get to it in the next couple of hours, but hopefully at least by sometime tonight.

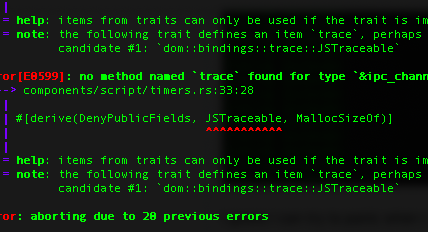

@gterzian I built it but I get several stacktraces on startup and trying to connect via webdriver causes more exceptions and fails to stay connected. Here are the first couple of traces:

./mach run --release -z --webdriver=7002 --resolution=400x300

called `Option::unwrap()` on a `None` value (thread <unnamed>, at src/libcore/option.rs:378)

stack backtrace:

0: servo::main::{{closure}}::h80e145b734dbd0e6 (0x55e752214f1f)

1: std::panicking::rust_panic_with_hook::h0529069ab88f357a (0x55e755424985)

at src/libstd/panicking.rs:481

2: std::panicking::continue_panic_fmt::h6a820a3cd2914e74 (0x55e755424421)

at src/libstd/panicking.rs:384

3: rust_begin_unwind (0x55e755424305)

at src/libstd/panicking.rs:311

4: core::panicking::panic_fmt::he00cfaca5555542a (0x55e75544706c)

at src/libcore/panicking.rs:85

5: core::panicking::panic::h73f4a74a29ff704a (0x55e755446fab)

at src/libcore/panicking.rs:49

6: script::dom::globalscope::IpcScriptRouter::new::{{closure}}::h75ab22a00e7dfa42 (0x55e752e7d797)

7: ipc_channel::router::Router::run::hbd6147421ea41418 (0x55e7553f0843)

8: std::sys_common::backtrace::__rust_begin_short_backtrace::h6fd18e901e895f8b (0x55e7553f2566)

9: _ZN3std9panicking3try7do_call17h1b444f82dc748186E.llvm.5578204044005263353 (0x55e7553f3fcb)

10: __rust_maybe_catch_panic (0x55e75542e639)

at src/libpanic_unwind/lib.rs:82

11: core::ops::function::FnOnce::call_once{{vtable.shim}}::h8d0a0826419b9608 (0x55e7553f2afe)

12: <alloc::boxed::Box<F> as core::ops::function::FnOnce<A>>::call_once::h10faab75e4737451 (0x55e75541316e)

at /rustc/273f42b5964c29dda2c5a349dd4655529767b07f/src/liballoc/boxed.rs:766

13: <alloc::boxed::Box<F> as core::ops::function::FnOnce<A>>::call_once::h1aad66e3be56d28c (0x55e75542d95f)

at /rustc/273f42b5964c29dda2c5a349dd4655529767b07f/src/liballoc/boxed.rs:766

std::sys_common::thread::start_thread::h9a8131af389e9a10

at src/libstd/sys_common/thread.rs:13

std::sys::unix::thread::Thread::new::thread_start::h5c5575a5d923977b

at src/libstd/sys/unix/thread.rs:79

14: start_thread (0x7f6fd42d66b9)

15: clone (0x7f6fd2b7241c)

16: <unknown> (0x0)

[2019-08-02T19:04:15Z ERROR servo] called `Option::unwrap()` on a `None` value

Unexpected BHM channel panic in constellation: RecvError (thread Constellation, at src/libcore/result.rs:1051)

called `Result::unwrap()` on an `Err` value: RecvError (thread LayoutThread PipelineId { namespace_id: PipelineNamespaceId(1), index: PipelineIndex(1) }, at src/libcore/result.rs:1051)

stack backtrace:

0: servo::main::{{closure}}::h80e145b734dbd0e6 (0x55e752214f1f)

1: std::panicking::rust_panic_with_hook::h0529069ab88f357a (0x55e755424985)

at src/libstd/panicking.rs:481

2: std::panicking::continue_panic_fmt::h6a820a3cd2914e74 (0x55e755424421)

at src/libstd/panicking.rs:384

3: rust_begin_unwind (0x55e755424305)

at src/libstd/panicking.rs:311

4: core::panicking::panic_fmt::he00cfaca5555542a (0x55e75544706c)

at src/libcore/panicking.rs:85

5: core::result::unwrap_failed::h4239b9d80132a0db (0x55e755447166)

at src/libcore/result.rs:1051

6: constellation::constellation::Constellation<Message,LTF,STF>::run::h47db071c3d684e72 (0x55e7523d1897)

7: std::sys_common::backtrace::__rust_begin_short_backtrace::hfad83bbe04097343 (0x55e7522c72c3)

8: std::panicking::try::do_call::hd86cbdbacff4f086 (0x55e7522e0db5)

9: __rust_maybe_catch_panic (0x55e75542e639)

at src/libpanic_unwind/lib.rs:82

10: core::ops::function::FnOnce::call_once{{vtable.shim}}::hdecb57578c643b74 (0x55e7522e0fd5)

11: <alloc::boxed::Box<F> as core::ops::function::FnOnce<A>>::call_once::h10faab75e4737451 (0x55e75541316e)

at /rustc/273f42b5964c29dda2c5a349dd4655529767b07f/src/liballoc/boxed.rs:766

12: <alloc::boxed::Box<F> as core::ops::function::FnOnce<A>>::call_once::h1aad66e3be56d28c (0x55e75542d95f)

at /rustc/273f42b5964c29dda2c5a349dd4655529767b07f/src/liballoc/boxed.rs:766

std::sys_common::thread::start_thread::h9a8131af389e9a10

at src/libstd/sys_common/thread.rs:13

std::sys::unix::thread::Thread::new::thread_start::h5c5575a5d923977b

at src/libstd/sys/unix/thread.rs:79

13: start_thread (0x7f6fd42d66b9)

14: clone (0x7f6fd2b7241c)

15: <unknown> (0x0)

Related: https://github.com/servo/ipc-channel/issues/240

TL;DR sending the same ipc channel over ipc many times uses up an fd each time it's sent, so recycling the ipc channel doesn't help.
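A rough way to picture this is an analogy rather than ipc-channel code. It assumes that receiving a channel endpoint over a Unix socket behaves like `dup`: the receiver gets a fresh descriptor number for the same underlying socket. In std, `try_clone()` has that effect, so the hypothetical helper below counts descriptors after "re-sending" one endpoint several times.

```rust
use std::io;
use std::os::unix::io::{AsRawFd, RawFd};
use std::os::unix::net::UnixStream;

/// Duplicates one end of a single socket pair `copies` times and returns
/// the number of distinct descriptors held for that one endpoint.
fn descriptors_after_dup(copies: usize) -> io::Result<usize> {
    let (original, _peer) = UnixStream::pair()?;
    let mut held = vec![original];
    for _ in 0..copies {
        // try_clone() duplicates the descriptor: same socket, new fd number.
        let copy = held[0].try_clone()?;
        held.push(copy);
    }
    let mut fds: Vec<RawFd> = held.iter().map(|s| s.as_raw_fd()).collect();
    fds.sort();
    fds.dedup();
    Ok(fds.len())
}

fn main() -> io::Result<()> {
    println!("descriptors for one endpoint: {}", descriptors_after_dup(5)?);
    Ok(())
}
```

Under that assumption, reusing one channel does not reduce descriptor usage unless the received copies are also closed promptly.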

I have developed a very small script that can crash servo:

#!/bin/bash

set -e

SESSIONID=$(curl -X POST -d "{}" http://localhost:7002/session | jq -r ".value.sessionId")

echo "SESSIONID: ${SESSIONID}"

mkdir -p data

i=0

while true; do

echo "Run ${i}"

i=$(( i + 1 ))

curl -s -X POST -d '{"url": "http://localhost:9080/test3.html"}' http://localhost:7002/session/${SESSIONID}/url > /dev/null

curl -s http://localhost:7002/session/${SESSIONID}/screenshot | jq -r ".value" | base64 -d > data/test${i}.png

done

The HTML and CSS files that I used, along with the script and the commands I used to start the various pieces, are captured in the following gist: https://gist.github.com/kanaka/119f5ed9841e23e35d07e8944cca6aa7

I built servo just now from d2856ce8aeca and then ran the test against it. I got a crash on the 819th page load. The crash is not a "too many open files" crash, but I'm posting it here because I suspect it may be related; it's a simplified version of the process I used earlier and it crashed after a similar number of page loads. I've included the crash below. If you determine that this is likely a different issue I'm happy to file a new ticket.

$ ./mach run --release -z --webdriver=7002 --resolution=400x300

assertion failed: self.is_double() (thread ScriptThread PipelineId { namespace_id: PipelineNamespaceId(1), index: PipelineIndex(2) }, at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/src/jsval.rs:439)

stack backtrace:

0: servo::main::{{closure}}::ha22eb134993c4424 (0x555692dcbe8f)

1: std::panicking::rust_panic_with_hook::hec63884fa234b28d (0x555695fc4ae5)

at src/libstd/panicking.rs:481

2: std::panicking::begin_panic::hdcff812b537809ad (0x555693f72804)

3: script::dom::windowproxy::trace::h0eb5d450dfe2e522 (0x555693af3cf7)

4: _ZNK2js5Class7doTraceEP8JSTracerP8JSObject (0x5556945a3366)

at /data/joelm/personal/UTA/dissertation/servo/servo-20190724.git/target/release/build/mozjs_sys-79c54c059d530e2a/out/dist/include/js/Class.h:872

_ZL13CallTraceHookIZN2js14TenuringTracer11traceObjectEP8JSObjectE4$_11EPNS0_12NativeObjectEOT_P8JSTracerS3_15CheckGeneration

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/gc/Marking.cpp:1545

_ZN2js14TenuringTracer11traceObjectEP8JSObject

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/gc/Marking.cpp:2907

5: _ZL14TraceWholeCellRN2js14TenuringTracerEP8JSObject (0x5556945a315b)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/gc/Marking.cpp:2798

_ZL18TraceBufferedCellsI8JSObjectEvRN2js14TenuringTracerEPNS1_2gc5ArenaEPNS4_12ArenaCellSetE

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/gc/Marking.cpp:2835

_ZN2js2gc11StoreBuffer15WholeCellBuffer5traceERNS_14TenuringTracerE

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/gc/Marking.cpp:2852

6: _ZN2js2gc11StoreBuffer15traceWholeCellsERNS_14TenuringTracerE (0x5556945ba215)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/gc/StoreBuffer.h:479

_ZN2js7Nursery12doCollectionEN2JS8GCReasonERNS_2gc16TenureCountCacheE

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/gc/Nursery.cpp:946

7: _ZN2js7Nursery7collectEN2JS8GCReasonE (0x5556945b94a5)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/gc/Nursery.cpp:783

8: _ZN2js2gc9GCRuntime7minorGCEN2JS8GCReasonENS_7gcstats9PhaseKindE (0x55569459aa83)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/gc/GC.cpp:7787

9: _ZN2js2gc9GCRuntime13gcIfRequestedEv (0x55569457e074)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/gc/GC.cpp:7846

10: _ZN2js2gc9GCRuntime22gcIfNeededAtAllocationEP9JSContext (0x55569457a63c)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/gc/Allocator.cpp:343

_ZN2js2gc9GCRuntime19checkAllocatorStateILNS_7AllowGCE1EEEbP9JSContextNS0_9AllocKindE

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/gc/Allocator.cpp:300

_ZN2js14AllocateObjectILNS_7AllowGCE1EEEP8JSObjectP9JSContextNS_2gc9AllocKindEmNS6_11InitialHeapEPKNS_5ClassE

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/gc/Allocator.cpp:55

11: _ZN2js11ProxyObject6createEP9JSContextPKNS_5ClassEN2JS6HandleINS_11TaggedProtoEEENS_2gc9AllocKindENS_13NewObjectKindE (0x55569434b63f)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/vm/ProxyObject.cpp:199

12: _ZN2js11ProxyObject3NewEP9JSContextPKNS_16BaseProxyHandlerEN2JS6HandleINS6_5ValueEEENS_11TaggedProtoERKNS_12ProxyOptionsE (0x55569434b0e4)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/vm/ProxyObject.cpp:100

13: _ZN2js14NewProxyObjectEP9JSContextPKNS_16BaseProxyHandlerEN2JS6HandleINS5_5ValueEEEP8JSObjectRKNS_12ProxyOptionsE (0x5556944bd7a1)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/proxy/Proxy.cpp:779

_ZN2js7Wrapper3NewEP9JSContextP8JSObjectPKS0_RKNS_14WrapperOptionsE

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/proxy/Wrapper.cpp:282

14: WrapperNew (0x5556941eb3d7)

15: _ZN2JS11Compartment18getOrCreateWrapperEP9JSContextNS_6HandleIP8JSObjectEENS_13MutableHandleIS5_EE (0x55569427b51c)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/vm/Compartment.cpp:268

16: _ZN2JS11Compartment6rewrapEP9JSContextNS_13MutableHandleIP8JSObjectEENS_6HandleIS5_EE (0x55569427b901)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/vm/Compartment.cpp:357

17: _ZN2js12RemapWrapperEP9JSContextP8JSObjectS3_ (0x5556944acf0e)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/proxy/CrossCompartmentWrapper.cpp:582

18: _ZN2js25RemapAllWrappersForObjectEP9JSContextP8JSObjectS3_ (0x5556944ad462)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/proxy/CrossCompartmentWrapper.cpp:633

19: _Z19JS_TransplantObjectP9JSContextN2JS6HandleIP8JSObjectEES5_ (0x55569447ec9c)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/jsapi.cpp:731

20: _ZN6script3dom11windowproxy11WindowProxy10set_window17h52ce1951a2216063E.llvm.1583787634904419619 (0x555693af1fd3)

21: script::dom::window::Window::resume::h6e86d312ad70993d (0x5556934f5dbd)

22: script::script_thread::ScriptThread::load::hf15b75fcfcd60129 (0x5556939e0c6e)

23: script::script_thread::ScriptThread::handle_page_headers_available::ha41037a7982b0452 (0x5556939dc581)

24: std::thread::local::LocalKey<T>::with::h3bc7497eb1a5059a (0x55569386eefd)

25: <script::dom::servoparser::ParserContext as net_traits::FetchResponseListener>::process_response::h72bfea20576003c5 (0x5556937fed92)

26: script::script_thread::ScriptThread::handle_msg_from_constellation::h37e0394a1b85329d (0x5556939cb48f)

27: _ZN6script13script_thread12ScriptThread11handle_msgs17hac0c1aacd24f0633E.llvm.18161580367316437218 (0x5556939c57f8)

28: profile_traits::mem::ProfilerChan::run_with_memory_reporting::h1b629c9f7392a890 (0x555693b450b7)

29: std::sys_common::backtrace::__rust_begin_short_backtrace::h0097fbef27bdc8ce (0x555693e7a4f2)

30: _ZN3std9panicking3try7do_call17h47262c4eba228992E.llvm.2547905980101764475 (0x555693f89d45)

31: __rust_maybe_catch_panic (0x555695fce789)

at src/libpanic_unwind/lib.rs:80

32: core::ops::function::FnOnce::call_once{{vtable.shim}}::h51b7628431ca69a2 (0x5556934a3285)

33: <alloc::boxed::Box<F> as core::ops::function::FnOnce<A>>::call_once::h82a57145aa4239a7 (0x555695fb32ee)

at /rustc/dddb7fca09dc817ba275602b950bb81a9032fb6d/src/liballoc/boxed.rs:770

34: <alloc::boxed::Box<F> as core::ops::function::FnOnce<A>>::call_once::h167c1ef971e93086 (0x555695fcdaaf)

at /rustc/dddb7fca09dc817ba275602b950bb81a9032fb6d/src/liballoc/boxed.rs:770

std::sys_common::thread::start_thread::h739b9b99c7f25b24

at src/libstd/sys_common/thread.rs:13

std::sys::unix::thread::Thread::new::thread_start::h79a2f27ba62f96ae

at src/libstd/sys/unix/thread.rs:79

35: start_thread (0x7fb6f6dd06b9)

36: clone (0x7fb6f566c41c)

37: <unknown> (0x0)

[2019-08-04T03:33:58Z ERROR servo] assertion failed: self.is_double()

Stack trace for thread "ScriptThread PipelineId { namespace_id: PipelineNamespaceId(1), index: PipelineIndex(2) }"

stack backtrace:

0: servo::install_crash_handler::handler::h3244c709fa5cd0dc (0x555692dcb0d0)

1: _ZL15WasmTrapHandleriP9siginfo_tPv (0x5556948c718e)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/wasm/WasmSignalHandlers.cpp:967

2: <unknown> (0x7fb6f6dda38f)

3: script::dom::windowproxy::trace::h0eb5d450dfe2e522 (0x555693af3d32)

4: _ZNK2js5Class7doTraceEP8JSTracerP8JSObject (0x5556945a3366)

at /data/joelm/personal/UTA/dissertation/servo/servo-20190724.git/target/release/build/mozjs_sys-79c54c059d530e2a/out/dist/include/js/Class.h:872

_ZL13CallTraceHookIZN2js14TenuringTracer11traceObjectEP8JSObjectE4$_11EPNS0_12NativeObjectEOT_P8JSTracerS3_15CheckGeneration

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/gc/Marking.cpp:1545

_ZN2js14TenuringTracer11traceObjectEP8JSObject

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/gc/Marking.cpp:2907

5: _ZL14TraceWholeCellRN2js14TenuringTracerEP8JSObject (0x5556945a315b)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/gc/Marking.cpp:2798

_ZL18TraceBufferedCellsI8JSObjectEvRN2js14TenuringTracerEPNS1_2gc5ArenaEPNS4_12ArenaCellSetE

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/gc/Marking.cpp:2835

_ZN2js2gc11StoreBuffer15WholeCellBuffer5traceERNS_14TenuringTracerE

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/gc/Marking.cpp:2852

6: _ZN2js2gc11StoreBuffer15traceWholeCellsERNS_14TenuringTracerE (0x5556945ba215)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/gc/StoreBuffer.h:479

_ZN2js7Nursery12doCollectionEN2JS8GCReasonERNS_2gc16TenureCountCacheE

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/gc/Nursery.cpp:946

7: _ZN2js7Nursery7collectEN2JS8GCReasonE (0x5556945b94a5)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/gc/Nursery.cpp:783

8: _ZN2js2gc9GCRuntime7minorGCEN2JS8GCReasonENS_7gcstats9PhaseKindE (0x55569459aa83)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/gc/GC.cpp:7787

9: _ZN2js2gc9GCRuntime13gcIfRequestedEv (0x55569457e074)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/gc/GC.cpp:7846

10: _ZN2js2gc9GCRuntime22gcIfNeededAtAllocationEP9JSContext (0x55569457a63c)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/gc/Allocator.cpp:343

_ZN2js2gc9GCRuntime19checkAllocatorStateILNS_7AllowGCE1EEEbP9JSContextNS0_9AllocKindE

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/gc/Allocator.cpp:300

_ZN2js14AllocateObjectILNS_7AllowGCE1EEEP8JSObjectP9JSContextNS_2gc9AllocKindEmNS6_11InitialHeapEPKNS_5ClassE

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/gc/Allocator.cpp:55

11: _ZN2js11ProxyObject6createEP9JSContextPKNS_5ClassEN2JS6HandleINS_11TaggedProtoEEENS_2gc9AllocKindENS_13NewObjectKindE (0x55569434b63f)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/vm/ProxyObject.cpp:199

12: _ZN2js11ProxyObject3NewEP9JSContextPKNS_16BaseProxyHandlerEN2JS6HandleINS6_5ValueEEENS_11TaggedProtoERKNS_12ProxyOptionsE (0x55569434b0e4)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/vm/ProxyObject.cpp:100

13: _ZN2js14NewProxyObjectEP9JSContextPKNS_16BaseProxyHandlerEN2JS6HandleINS5_5ValueEEEP8JSObjectRKNS_12ProxyOptionsE (0x5556944bd7a1)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/proxy/Proxy.cpp:779

_ZN2js7Wrapper3NewEP9JSContextP8JSObjectPKS0_RKNS_14WrapperOptionsE

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/proxy/Wrapper.cpp:282

14: WrapperNew (0x5556941eb3d7)

15: _ZN2JS11Compartment18getOrCreateWrapperEP9JSContextNS_6HandleIP8JSObjectEENS_13MutableHandleIS5_EE (0x55569427b51c)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/vm/Compartment.cpp:268

16: _ZN2JS11Compartment6rewrapEP9JSContextNS_13MutableHandleIP8JSObjectEENS_6HandleIS5_EE (0x55569427b901)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/vm/Compartment.cpp:357

17: _ZN2js12RemapWrapperEP9JSContextP8JSObjectS3_ (0x5556944acf0e)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/proxy/CrossCompartmentWrapper.cpp:582

18: _ZN2js25RemapAllWrappersForObjectEP9JSContextP8JSObjectS3_ (0x5556944ad462)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/proxy/CrossCompartmentWrapper.cpp:633

19: _Z19JS_TransplantObjectP9JSContextN2JS6HandleIP8JSObjectEES5_ (0x55569447ec9c)

at /home/joelm/.cargo/git/checkouts/mozjs-fa11ffc7d4f1cc2d/6dff104/mozjs/js/src/jsapi.cpp:731

20: _ZN6script3dom11windowproxy11WindowProxy10set_window17h52ce1951a2216063E.llvm.1583787634904419619 (0x555693af1fd3)

21: script::dom::window::Window::resume::h6e86d312ad70993d (0x5556934f5dbd)

22: script::script_thread::ScriptThread::load::hf15b75fcfcd60129 (0x5556939e0c6e)

23: script::script_thread::ScriptThread::handle_page_headers_available::ha41037a7982b0452 (0x5556939dc581)

24: std::thread::local::LocalKey<T>::with::h3bc7497eb1a5059a (0x55569386eefd)

25: <script::dom::servoparser::ParserContext as net_traits::FetchResponseListener>::process_response::h72bfea20576003c5 (0x5556937fed92)

26: script::script_thread::ScriptThread::handle_msg_from_constellation::h37e0394a1b85329d (0x5556939cb48f)

27: _ZN6script13script_thread12ScriptThread11handle_msgs17hac0c1aacd24f0633E.llvm.18161580367316437218 (0x5556939c57f8)

28: profile_traits::mem::ProfilerChan::run_with_memory_reporting::h1b629c9f7392a890 (0x555693b450b7)

29: std::sys_common::backtrace::__rust_begin_short_backtrace::h0097fbef27bdc8ce (0x555693e7a4f2)

30: _ZN3std9panicking3try7do_call17h47262c4eba228992E.llvm.2547905980101764475 (0x555693f89d45)

31: __rust_maybe_catch_panic (0x555695fce789)

at src/libpanic_unwind/lib.rs:80

32: core::ops::function::FnOnce::call_once{{vtable.shim}}::h51b7628431ca69a2 (0x5556934a3285)

33: <alloc::boxed::Box<F> as core::ops::function::FnOnce<A>>::call_once::h82a57145aa4239a7 (0x555695fb32ee)

at /rustc/dddb7fca09dc817ba275602b950bb81a9032fb6d/src/liballoc/boxed.rs:770

34: <alloc::boxed::Box<F> as core::ops::function::FnOnce<A>>::call_once::h167c1ef971e93086 (0x555695fcdaaf)

at /rustc/dddb7fca09dc817ba275602b950bb81a9032fb6d/src/liballoc/boxed.rs:770

std::sys_common::thread::start_thread::h739b9b99c7f25b24

at src/libstd/sys_common/thread.rs:13

std::sys::unix::thread::Thread::new::thread_start::h79a2f27ba62f96ae

at src/libstd/sys/unix/thread.rs:79

35: start_thread (0x7fb6f6dd06b9)

36: clone (0x7fb6f566c41c)

37: <unknown> (0x0)

Servo exited with return value 4

I ran it again and it crashed at exactly the same point (the 819th page load) with the same traces. I'm going to make the page slightly more complex and see whether that changes anything, since that might give a clue about where the crash is being triggered.
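One way to narrow this down might be to sample the Servo process's open file descriptor count between page loads and see whether it grows steadily toward the limit. The following is a minimal, Linux-only sketch under that assumption; the helper names `count_open_fds` and `parse_max_open_files` are hypothetical and not part of Servo or mach, and it relies only on the standard `/proc/<pid>/fd` and `/proc/<pid>/limits` files.

```python
import os
import sys


def count_open_fds(pid):
    """Return the number of entries in /proc/<pid>/fd (Linux only)."""
    return len(os.listdir(f"/proc/{pid}/fd"))


def parse_max_open_files(limits_text):
    """Extract the soft 'Max open files' limit from /proc/<pid>/limits text.

    Returns None if the row is missing or the limit is 'unlimited'.
    """
    prefix = "Max open files"
    for line in limits_text.splitlines():
        if line.startswith(prefix):
            # Remaining columns are: soft limit, hard limit, units.
            fields = line[len(prefix):].split()
            if not fields or fields[0] == "unlimited":
                return None
            return int(fields[0])
    return None


if __name__ == "__main__":
    # Assumption: the Servo process ID is passed as the first argument.
    pid = int(sys.argv[1])
    with open(f"/proc/{pid}/limits") as f:
        limit = parse_max_open_files(f.read())
    print(f"pid {pid}: {count_open_fds(pid)} open fds, soft limit {limit}")
```

Running this against the Servo PID every few page loads would show whether the count climbs by a fixed amount per load, which would be consistent with (though not proof of) a per-pipeline descriptor leak.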

Okay, so with the more complicated test case (a second stylesheet file added), it now panics with "Too many open files" traces after the 696th load. I've updated the gist with the new rend.css stylesheet and updated the HTML file to include it. Here is the trace/panic that was triggered this time:

$ ./mach run --release -z --webdriver=7002 --resolution=400x300

called `Result::unwrap()` on an `Err` value: Os { code: 24, kind: Other, message: "Too many open files" } (thread ScriptThread PipelineId { namespace_id: PipelineNamespaceId(1), index: PipelineIndex(2) }, at src/libcore/result.rs:1084)

stack backtrace:

0: servo::main::{{closure}}::ha22eb134993c4424 (0x556fbdcc8e8f)

1: std::panicking::rust_panic_with_hook::hec63884fa234b28d (0x556fc0ec1ae5)

at src/libstd/panicking.rs:481

2: std::panicking::continue_panic_fmt::h1272190bb9afc9ca (0x556fc0ec1581)

at src/libstd/panicking.rs:384

3: rust_begin_unwind (0x556fc0ec1465)

at src/libstd/panicking.rs:311

4: core::panicking::panic_fmt::h25bfecd575ec5ea2 (0x556fc0ee41bc)

at src/libcore/panicking.rs:85

5: core::result::unwrap_failed::hc09c6b44d3dd6f08 (0x556fc0ee42b6)

at src/libcore/result.rs:1084

6: script::fetch::FetchCanceller::initialize::hf310501f87637b73 (0x556fbe8b6c85)

7: script::document_loader::DocumentLoader::fetch_async_background::h8687ec5c482a6f20 (0x556fbee1d09f)

8: script::document_loader::DocumentLoader::fetch_async::h915b5e1878d99c28 (0x556fbee1cffc)

9: script::dom::document::Document::fetch_async::h4749657441058e6d (0x556fbe857c18)

10: script::stylesheet_loader::StylesheetLoader::load::hb13f68bb9bd0715c (0x556fbe8e6c5e)

11: script::dom::htmllinkelement::HTMLLinkElement::handle_stylesheet_url::hbfdd56f3b098b5fc (0x556fbe9dc06f)

12: <script::dom::htmllinkelement::HTMLLinkElement as script::dom::virtualmethods::VirtualMethods>::bind_to_tree::h0a3ee608fcdb3fa4 (0x556fbe9db925)

13: script::dom::node::Node::insert::h55baed3a814aa657 (0x556fbea880b4)

14: script::dom::node::Node::pre_insert::hfd38fed65d3bff83 (0x556fbea87412)

15: script::dom::servoparser::insert::hbe38dce40e96d280 (0x556fbe6ffd46)

16: html5ever::tree_builder::TreeBuilder<Handle,Sink>::insert_element::h8083b8e2fe4adefe (0x556fbec39d1f)

17: html5ever::tree_builder::TreeBuilder<Handle,Sink>::step::hd2875f6f9563d33d (0x556fbec656ea)

18: <html5ever::tree_builder::TreeBuilder<Handle,Sink> as html5ever::tokenizer::interface::TokenSink>::process_token::he7420a061148e71b (0x556fbebf4f63)

19: _ZN9html5ever9tokenizer21Tokenizer$LT$Sink$GT$13process_token17hff7c4f1817192eebE.llvm.18110466598814287016 (0x556fbe271988)

20: html5ever::tokenizer::Tokenizer<Sink>::emit_current_tag::he37613754012cdc7 (0x556fbe2722e1)

21: html5ever::tokenizer::Tokenizer<Sink>::step::h5bfde99192403598 (0x556fbe27ab73)

22: _ZN9html5ever9tokenizer21Tokenizer$LT$Sink$GT$3run17h161c6703aa6bbb12E.llvm.18110466598814287016 (0x556fbe27604a)

23: script::dom::servoparser::html::Tokenizer::feed::hb6ebdb521cf099cc (0x556fbe2b327a)

24: script::dom::servoparser::ServoParser::do_parse_sync::h30e5abb471f6aaa8 (0x556fbe6fa7ac)

25: profile_traits::time::profile::h19e93b2dd772b80a (0x556fbea42b8f)

26: _ZN6script3dom11servoparser11ServoParser10parse_sync17h9ef69ffecf72c88fE.llvm.8010111341268598948 (0x556fbe6fa305)

27: <script::dom::servoparser::ParserContext as net_traits::FetchResponseListener>::process_response_chunk::h82f963e90fd00dac (0x556fbe6fed4a)

28: script::script_thread::ScriptThread::handle_msg_from_constellation::h37e0394a1b85329d (0x556fbe8c8368)

29: _ZN6script13script_thread12ScriptThread11handle_msgs17hac0c1aacd24f0633E.llvm.18161580367316437218 (0x556fbe8c27f8)

30: profile_traits::mem::ProfilerChan::run_with_memory_reporting::h1b629c9f7392a890 (0x556fbea420b7)

31: std::sys_common::backtrace::__rust_begin_short_backtrace::h0097fbef27bdc8ce (0x556fbed774f2)

32: _ZN3std9panicking3try7do_call17h47262c4eba228992E.llvm.2547905980101764475 (0x556fbee86d45)

33: __rust_maybe_catch_panic (0x556fc0ecb789)

at src/libpanic_unwind/lib.rs:80

34: core::ops::function::FnOnce::call_once{{vtable.shim}}::h51b7628431ca69a2 (0x556fbe3a0285)

35: <alloc::boxed::Box<F> as core::ops::function::FnOnce<A>>::call_once::h82a57145aa4239a7 (0x556fc0eb02ee)

at /rustc/dddb7fca09dc817ba275602b950bb81a9032fb6d/src/liballoc/boxed.rs:770

36: <alloc::boxed::Box<F> as core::ops::function::FnOnce<A>>::call_once::h167c1ef971e93086 (0x556fc0ecaaaf)

at /rustc/dddb7fca09dc817ba275602b950bb81a9032fb6d/src/liballoc/boxed.rs:770

std::sys_common::thread::start_thread::h739b9b99c7f25b24

at src/libstd/sys_common/thread.rs:13

std::sys::unix::thread::Thread::new::thread_start::h79a2f27ba62f96ae

at src/libstd/sys/unix/thread.rs:79

37: start_thread (0x7f2a1d9536b9)

38: clone (0x7f2a1c1ef41c)

39: <unknown> (0x0)

[2019-08-04T04:42:51Z ERROR servo] called `Result::unwrap()` on an `Err` value: Os { code: 24, kind: Other, message: "Too many open files" }

Pipeline script content process shutdown chan: Os { code: 24, kind: Other, message: "Too many open files" } (thread Constellation, at src/libcore/result.rs:1084)

stack backtrace:

0: servo::main::{{closure}}::ha22eb134993c4424 (0x556fbdcc8e8f)

1: std::panicking::rust_panic_with_hook::hec63884fa234b28d (0x556fc0ec1ae5)

at src/libstd/panicking.rs:481

2: std::panicking::continue_panic_fmt::h1272190bb9afc9ca (0x556fc0ec1581)

at src/libstd/panicking.rs:384

3: rust_begin_unwind (0x556fc0ec1465)

at src/libstd/panicking.rs:311

4: core::panicking::panic_fmt::h25bfecd575ec5ea2 (0x556fc0ee41bc)

at src/libcore/panicking.rs:85

5: core::result::unwrap_failed::hc09c6b44d3dd6f08 (0x556fc0ee42b6)

at src/libcore/result.rs:1084

6: constellation::pipeline::Pipeline::spawn::h5d0c2ec40f26cd14 (0x556fbde4e4a8)

7: constellation::constellation::Constellation<Message,LTF,STF>::new_pipeline::h52453b5b66a96577 (0x556fbde63c91)

8: constellation::constellation::Constellation<Message,LTF,STF>::handle_panic::hc5c580ab97accd1e (0x556fbde627ab)

9: constellation::constellation::Constellation<Message,LTF,STF>::handle_log_entry::h90f95fbfb6405087 (0x556fbde65f01)

10: constellation::constellation::Constellation<Message,LTF,STF>::handle_request_from_compositor::h84ca30df4fac3450 (0x556fbde7b45d)

11: constellation::constellation::Constellation<Message,LTF,STF>::run::hab0833f05fdcdb29 (0x556fbde835a9)

12: std::sys_common::backtrace::__rust_begin_short_backtrace::h8e5b89d53f23b04f (0x556fbdd7b023)

13: std::panicking::try::do_call::hf7a763f365204be2 (0x556fbdd943c5)

14: __rust_maybe_catch_panic (0x556fc0ecb789)

at src/libpanic_unwind/lib.rs:80

15: core::ops::function::FnOnce::call_once{{vtable.shim}}::h5abd434a6e2da7db (0x556fbdd945e5)

16: <alloc::boxed::Box<F> as core::ops::function::FnOnce<A>>::call_once::h82a57145aa4239a7 (0x556fc0eb02ee)

at /rustc/dddb7fca09dc817ba275602b950bb81a9032fb6d/src/liballoc/boxed.rs:770

17: <alloc::boxed::Box<F> as core::ops::function::FnOnce<A>>::call_once::h167c1ef971e93086 (0x556fc0ecaaaf)

at /rustc/dddb7fca09dc817ba275602b950bb81a9032fb6d/src/liballoc/boxed.rs:770

std::sys_common::thread::start_thread::h739b9b99c7f25b24

at src/libstd/sys_common/thread.rs:13

std::sys::unix::thread::Thread::new::thread_start::h79a2f27ba62f96ae

at src/libstd/sys/unix/thread.rs:79

18: start_thread (0x7f2a1d9536b9)

19: clone (0x7f2a1c1ef41c)

20: <unknown> (0x0)

[2019-08-04T04:42:51Z ERROR servo] Pipeline script content process shutdown chan: Os { code: 24, kind: Other, message: "Too many open files" }

called `Result::unwrap()` on an `Err` value: RecvError (thread LayoutThread PipelineId { namespace_id: PipelineNamespaceId(1), index: PipelineIndex(677) }, at src/libcore/result.rs:1084)

stack backtrace:

0: servo::main::{{closure}}::ha22eb134993c4424 (0x556fbdcc8e8f)

1: std::panicking::rust_panic_with_hook::hec63884fa234b28d (0x556fc0ec1ae5)

at src/libstd/panicking.rs:481

2: std::panicking::continue_panic_fmt::h1272190bb9afc9ca (0x556fc0ec1581)

at src/libstd/panicking.rs:384

3: rust_begin_unwind (0x556fc0ec1465)

at src/libstd/panicking.rs:311

4: core::panicking::panic_fmt::h25bfecd575ec5ea2 (0x556fc0ee41bc)

at src/libcore/panicking.rs:85

5: core::result::unwrap_failed::hc09c6b44d3dd6f08 (0x556fc0ee42b6)

at src/libcore/result.rs:1084

6: layout_thread::LayoutThread::start::h2c49640050891be9 (0x556fbe0e7303)

7: profile_traits::mem::ProfilerChan::run_with_memory_reporting::h0ca43ddf4d481a98 (0x556fbe15cde3)

8: std::sys_common::backtrace::__rust_begin_short_backtrace::h662b9825ec83f9f6 (0x556fbe211774)

9: _ZN3std9panicking3try7do_call17hc64d38bd13a9e5fbE.llvm.13006379931387932559 (0x556fbe17d5a3)

10: __rust_maybe_catch_panic (0x556fc0ecb789)

at src/libpanic_unwind/lib.rs:80

11: core::ops::function::FnOnce::call_once{{vtable.shim}}::h27113effb06c5b11 (0x556fbe2123c2)

12: <alloc::boxed::Box<F> as core::ops::function::FnOnce<A>>::call_once::h82a57145aa4239a7 (0x556fc0eb02ee)

at /rustc/dddb7fca09dc817ba275602b950bb81a9032fb6d/src/liballoc/boxed.rs:770

13: <alloc::boxed::Box<F> as core::ops::function::FnOnce<A>>::call_once::h167c1ef971e93086 (0x556fc0ecaaaf)

at /rustc/dddb7fca09dc817ba275602b950bb81a9032fb6d/src/liballoc/boxed.rs:770

std::sys_common::thread::start_thread::h739b9b99c7f25b24

at src/libstd/sys_common/thread.rs:13

std::sys::unix::thread::Thread::new::thread_start::h79a2f27ba62f96ae

at src/libstd/sys/unix/thread.rs:79

14: start_thread (0x7f2a1d9536b9)

15: clone (0x7f2a1c1ef41c)

16: <unknown> (0x0)

[2019-08-04T04:42:51Z ERROR servo] called `Result::unwrap()` on an `Err` value: RecvError

called `Result::unwrap()` on an `Err` value: Io(Custom { kind: ConnectionReset, error: "All senders for this socket closed" }) (thread StorageManager, at src/libcore/result.rs:1084)

stack backtrace: