Salt: [BUG] Memory leak in salt-master version 3000.2

Description

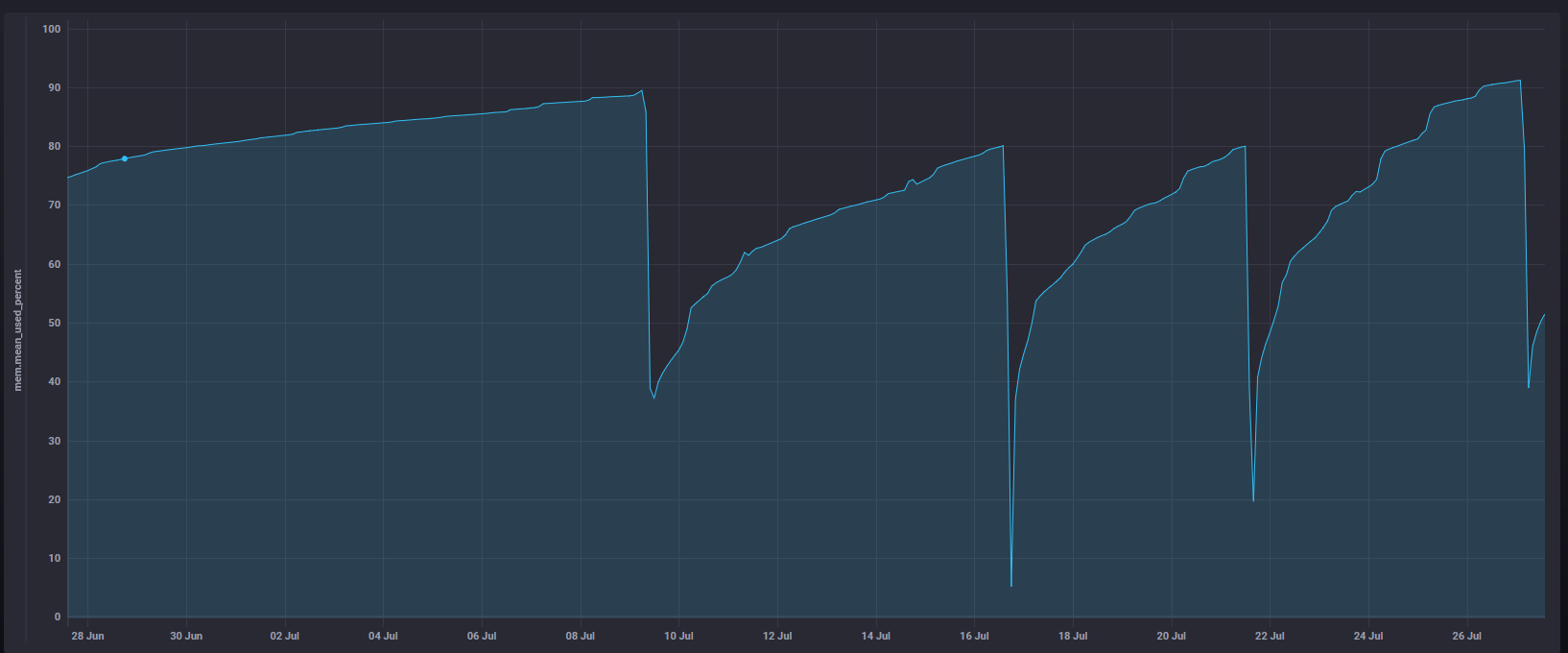

After an upgrade to 3000.2 for the security fixes on 30/4/2020, the memory usage of the salt-master simply keeps growing exponentially.

Setup

- Local filesystem based roots for pillars and states. No GitFS or external git pillars involved. I am explicitly mentioning this since gitfs has memory leak issues already because of pygit2.

Screenshots

Versions Report

Salt Version:

Salt: 3000.2

Dependency Versions:

cffi: 1.12.3

cherrypy: Not Installed

dateutil: 2.6.1

docker-py: Not Installed

gitdb: 2.0.3

gitpython: 2.1.8

Jinja2: 2.10

libgit2: 0.27.3

M2Crypto: Not Installed

Mako: Not Installed

msgpack-pure: Not Installed

msgpack-python: 0.5.6

mysql-python: Not Installed

pycparser: 2.17

pycrypto: 2.6.1

pycryptodome: Not Installed

pygit2: 0.27.3

Python: 3.6.9 (default, Apr 18 2020, 01:56:04)

python-gnupg: 0.4.1

PyYAML: 3.12

PyZMQ: 16.0.2

smmap: 2.0.3

timelib: Not Installed

Tornado: 4.5.3

ZMQ: 4.2.5

System Versions:

dist: Ubuntu 18.04 bionic

locale: UTF-8

machine: x86_64

release: 4.15.0-74-generic

system: Linux

version: Ubuntu 18.04 bionic

Note: The usage drops in the screenshot above correspond to salt-master restarts.

Debugging

From what I have been able to dig so far, it looks like the memory consumption keeps growing for the publisher process in particular.

[salt.transport.zeromq:903 ][INFO ][10938] Starting the Salt Publisher on tcp://[::]:4505

The PID in the log line above is the one whose memory usage keeps growing. I will try to dig further if I get the chance, but I am filing this issue now so that anyone with further insight can help.
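To confirm which process is growing, its resident set size can be sampled over time. Below is a minimal sketch, assuming a Linux host where `/proc/<pid>/status` is readable. The helper names `parse_vm_rss_kb`, `read_rss_kb` and `watch` are hypothetical and not part of Salt.

```python
import re
import time
from pathlib import Path


def parse_vm_rss_kb(status_text):
    """Return the VmRSS value in kB from /proc/<pid>/status text, or None."""
    match = re.search(r"^VmRSS:\s+(\d+)\s+kB", status_text, re.MULTILINE)
    return int(match.group(1)) if match else None


def read_rss_kb(pid):
    """Read the current resident set size of a process (Linux only)."""
    return parse_vm_rss_kb(Path(f"/proc/{pid}/status").read_text())


def watch(pid, samples, interval_seconds=60):
    """Print a timestamped RSS reading `samples` times, one per interval."""
    for _ in range(samples):
        print(time.strftime("%H:%M:%S"), read_rss_kb(pid), "kB")
        time.sleep(interval_seconds)
```

Calling `watch(10938, samples=60)` would log one reading per minute for an hour for the publisher PID from the log line. A steadily rising value across readings points at that process.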

All 30 comments

Hey @vin01 thanks for the report! IIRC this publisher was one of our suspects - I feel like either @dwoz or @s0undt3ch may have some more info about that, but that's some gnarly look there.

Out of curiosity, how are you running Salt? Is this inside a container, vm, or just on a regular server?

Thanks!

Additionally, from what version did you upgrade to 3000.2?

It's a VM. Was upgraded from 3000.1, and one day before that was an upgrade from 3000 -> 3000.1 which does not seem to have had the same impact memory-wise.

Thanks!

Same problem here. We had 2999, did an emergency upgrade to 3000.2+ds-1 and it now leaks a few GB of RAM every hour, killing the machine once or twice a day.

I found the leaking process and strace'd it.

epoll_ctl(7, EPOLL_CTL_MOD, 10, {EPOLLIN|EPOLLERR|EPOLLHUP, {u32=10, u64=94296006983690}}) = 0

epoll_wait(7, [{EPOLLIN, {u32=8, u64=94296006983688}}], 1023, 999) = 1

fstat(8, {st_mode=S_IFIFO|0600, st_size=0, ...}) = 0

lseek(8, 0, SEEK_CUR) = -1 ESPIPE (Illegal seek)

read(8, "x", 6) = 1

read(8, 0x7f47dcb63f55, 5) = -1 EAGAIN (Resource temporarily unavailable)

fstat(8, {st_mode=S_IFIFO|0600, st_size=0, ...}) = 0

lseek(8, 0, SEEK_CUR) = -1 ESPIPE (Illegal seek)

read(8, 0x7f47dcb63e64, 6) = -1 EAGAIN (Resource temporarily unavailable)

epoll_wait(7, [{EPOLLIN, {u32=10, u64=94296006983690}}], 1023, 999) = 1

recvfrom(10, "\202\244body\332\22\36salt/beacon/host-1-ssd0-us-central1-f-14-mmpz.c.fuchsia-infra.internal/status/2020-05-08T04:18:18.232090\n\n\203\246_stamp\2722020-05-08T04:18:18.248318\244data\205\247loadavg\203\24615-min\313\0\0\0\0\0\0\0\0\2455-min\313?\204z\341G\256\24{\2451-min\313\0\0\0\0\0\0\0\0\247meminfo\336\0.\254Active(file)\202\244unit\242kB\245v"..., 65536, 0, NULL, NULL) = 4653

epoll_ctl(7, EPOLL_CTL_MOD, 10, {EPOLLERR|EPOLLHUP, {u32=10, u64=94296006983690}}) = 0

epoll_wait(7, [], 1023, 0) = 0

write(9, "x", 1) = 1

mmap(NULL, 262144, PROT_READ|PROT_WRITE, MAP_PRIVATE|MAP_ANONYMOUS, -1, 0) = 0x7f47dcaf0000

getpid() = 29777

brk(0x55c69856c000) = 0x55c69856c000

getpid() = 29777

fstat(9, {st_mode=S_IFIFO|0600, st_size=0, ...}) = 0

recvfrom(10, 0x55c6985397a4, 65536, 0, NULL, NULL) = -1 EAGAIN (Resource temporarily unavailable)

It's unfortunate that all the processes are called salt-master regardless of what they do. That one seems to be a non-essential logging process. I can't kill it, because if I do, the main salt-master process restarts it, but I can kill -STOP it without any adverse effects on the main functionality.

That seems to stop the leak.

lsof shows

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

salt-mast 29777 root cwd DIR 8,1 4096 2 /

salt-mast 29777 root rtd DIR 8,1 4096 2 /

salt-mast 29777 root txt REG 8,1 3779512 394975 /usr/bin/python2.7

salt-mast 29777 root mem REG 8,1 22928 534214 /lib/x86_64-linux-gnu/libnss_dns-2.24.so

salt-mast 29777 root mem REG 8,1 38456 409263 /usr/lib/python2.7/lib-dynload/_csv.x86_64-linux-gnu.so

salt-mast 29777 root mem REG 8,1 468920 524362 /lib/x86_64-linux-gnu/libpcre.so.3.13.3

salt-mast 29777 root mem REG 8,1 72024 402521 /usr/lib/x86_64-linux-gnu/liblz4.so.1.7.1

salt-mast 29777 root mem REG 8,1 154376 524331 /lib/x86_64-linux-gnu/liblzma.so.5.2.2

salt-mast 29777 root mem REG 8,1 155400 525415 /lib/x86_64-linux-gnu/libselinux.so.1

salt-mast 29777 root mem REG 8,1 557552 528710 /lib/x86_64-linux-gnu/libsystemd.so.0.17.0

salt-mast 29777 root mem REG 8,1 16464 2238 /usr/lib/python2.7/dist-packages/systemd/_daemon.x86_64-linux-gnu.so

salt-mast 29777 root mem REG 8,1 25096 409435 /usr/lib/python2.7/lib-dynload/mmap.x86_64-linux-gnu.so

salt-mast 29777 root mem REG 8,1 79936 530472 /lib/x86_64-linux-gnu/libgpg-error.so.0.21.0

salt-mast 29777 root mem REG 8,1 109296 398254 /usr/lib/x86_64-linux-gnu/libsasl2.so.2.0.25

salt-mast 29777 root mem REG 8,1 84848 534227 /lib/x86_64-linux-gnu/libresolv-2.24.so

salt-mast 29777 root mem REG 8,1 14256 526553 /lib/x86_64-linux-gnu/libkeyutils.so.1.5

salt-mast 29777 root mem REG 8,1 48152 395261 /usr/lib/x86_64-linux-gnu/libkrb5support.so.0.1

salt-mast 29777 root mem REG 8,1 1112184 530773 /lib/x86_64-linux-gnu/libgcrypt.so.20.1.6

salt-mast 29777 root mem REG 8,1 1138648 395207 /usr/lib/x86_64-linux-gnu/libunistring.so.0.1.2

salt-mast 29777 root mem REG 8,1 216776 394489 /usr/lib/x86_64-linux-gnu/libhogweed.so.4.3

salt-mast 29777 root mem REG 8,1 75776 394749 /usr/lib/x86_64-linux-gnu/libtasn1.so.6.5.3

salt-mast 29777 root mem REG 8,1 210968 526556 /lib/x86_64-linux-gnu/libidn.so.11.6.16

salt-mast 29777 root mem REG 8,1 411688 396101 /usr/lib/x86_64-linux-gnu/libp11-kit.so.0.2.0

salt-mast 29777 root mem REG 8,1 322896 395122 /usr/lib/x86_64-linux-gnu/libldap_r-2.4.so.2.10.7

salt-mast 29777 root mem REG 8,1 59576 395121 /usr/lib/x86_64-linux-gnu/liblber-2.4.so.2.10.7

salt-mast 29777 root mem REG 8,1 14248 524337 /lib/x86_64-linux-gnu/libcom_err.so.2.1

salt-mast 29777 root mem REG 8,1 203656 395266 /usr/lib/x86_64-linux-gnu/libk5crypto.so.3.1

salt-mast 29777 root mem REG 8,1 892616 395238 /usr/lib/x86_64-linux-gnu/libkrb5.so.3.3

salt-mast 29777 root mem REG 8,1 305688 395226 /usr/lib/x86_64-linux-gnu/libgssapi_krb5.so.2.2

salt-mast 29777 root mem REG 8,1 224504 394520 /usr/lib/x86_64-linux-gnu/libnettle.so.6.3

salt-mast 29777 root mem REG 8,1 55136 395233 /usr/lib/x86_64-linux-gnu/libpsl.so.5.1.1

salt-mast 29777 root mem REG 8,1 183576 403204 /usr/lib/x86_64-linux-gnu/libssh2.so.1.0.1

salt-mast 29777 root mem REG 8,1 118256 402264 /usr/lib/x86_64-linux-gnu/librtmp.so.1

salt-mast 29777 root mem REG 8,1 137208 415309 /usr/lib/x86_64-linux-gnu/libidn2.so.0.1.4

salt-mast 29777 root mem REG 8,1 153640 396180 /usr/lib/x86_64-linux-gnu/libnghttp2.so.14.12.3

salt-mast 29777 root mem REG 8,1 1670752 394553 /usr/lib/x86_64-linux-gnu/libgnutls.so.30.13.1

salt-mast 29777 root mem REG 8,1 518560 395188 /usr/lib/x86_64-linux-gnu/libcurl-gnutls.so.4.4.0

salt-mast 29777 root mem REG 8,1 128192 412052 /usr/lib/python2.7/dist-packages/pycurl.x86_64-linux-gnu.so

salt-mast 29777 root mem REG 8,1 19008 524875 /lib/x86_64-linux-gnu/libuuid.so.1.3.0

salt-mast 29777 root mem REG 8,1 10496 396794 /usr/lib/python2.7/dist-packages/markupsafe/_speedups.x86_64-linux-gnu.so

salt-mast 29777 root mem REG 8,1 35880 400937 /usr/lib/python2.7/dist-packages/Crypto/Cipher/_AES.x86_64-linux-gnu.so

salt-mast 29777 root mem REG 8,1 15616 404597 /usr/lib/python2.7/dist-packages/Crypto/Util/_counter.x86_64-linux-gnu.so

salt-mast 29777 root mem REG 8,1 537448 401848 /usr/lib/x86_64-linux-gnu/libgmp.so.10.3.2

salt-mast 29777 root mem REG 8,1 77856 401015 /usr/lib/python2.7/dist-packages/Crypto/PublicKey/_fastmath.x86_64-linux-gnu.so

salt-mast 29777 root mem REG 8,1 2504576 393909 /usr/lib/x86_64-linux-gnu/libcrypto.so.1.0.2

salt-mast 29777 root mem REG 8,1 431232 393911 /usr/lib/x86_64-linux-gnu/libssl.so.1.0.2

salt-mast 29777 root mem REG 8,1 501984 419512 /usr/lib/python2.7/dist-packages/M2Crypto/__m2crypto.x86_64-linux-gnu.so

salt-mast 29777 root mem REG 8,1 143424 396070 /usr/lib/python2.7/dist-packages/msgpack/_cmsgpack.x86_64-linux-gnu.so

salt-mast 29777 root mem REG 8,1 217424 790840 /usr/local/lib/python2.7/dist-packages/zmq/backend/cython/_device.so

salt-mast 29777 root mem REG 8,1 101296 790845 /usr/local/lib/python2.7/dist-packages/zmq/backend/cython/_version.so

salt-mast 29777 root mem REG 8,1 295976 790844 /usr/local/lib/python2.7/dist-packages/zmq/backend/cython/_poll.so

salt-mast 29777 root mem REG 8,1 147024 790834 /usr/local/lib/python2.7/dist-packages/zmq/backend/cython/utils.so

salt-mast 29777 root mem REG 8,1 661872 790841 /usr/local/lib/python2.7/dist-packages/zmq/backend/cython/socket.so

salt-mast 29777 root mem REG 8,1 300104 790849 /usr/local/lib/python2.7/dist-packages/zmq/backend/cython/context.so

salt-mast 29777 root mem REG 8,1 424920 790850 /usr/local/lib/python2.7/dist-packages/zmq/backend/cython/message.so

salt-mast 29777 root mem REG 8,1 122144 790839 /usr/local/lib/python2.7/dist-packages/zmq/backend/cython/error.so

salt-mast 29777 root mem REG 8,1 92584 528624 /lib/x86_64-linux-gnu/libgcc_s.so.1

salt-mast 29777 root mem REG 8,1 1566168 393399 /usr/lib/x86_64-linux-gnu/libstdc++.so.6.0.22

salt-mast 29777 root mem REG 8,1 31744 534228 /lib/x86_64-linux-gnu/librt-2.24.so

salt-mast 29777 root mem REG 8,1 5791824 790858 /usr/local/lib/python2.7/dist-packages/zmq/.libs/libzmq-0576c57a.so.5.0.2

salt-mast 29777 root mem REG 8,1 219064 790848 /usr/local/lib/python2.7/dist-packages/zmq/backend/cython/constants.so

salt-mast 29777 root mem REG 8,1 77408 409504 /usr/lib/python2.7/lib-dynload/pyexpat.x86_64-linux-gnu.so

salt-mast 29777 root mem REG 8,1 170128 524667 /lib/x86_64-linux-gnu/libexpat.so.1.6.2

salt-mast 29777 root mem REG 8,1 70744 409297 /usr/lib/python2.7/lib-dynload/_elementtree.x86_64-linux-gnu.so

salt-mast 29777 root mem REG 8,1 170776 525416 /lib/x86_64-linux-gnu/libtinfo.so.5.9

salt-mast 29777 root mem REG 8,1 194480 525246 /lib/x86_64-linux-gnu/libncursesw.so.5.9

salt-mast 29777 root mem REG 8,1 78320 409281 /usr/lib/python2.7/lib-dynload/_curses.x86_64-linux-gnu.so

salt-mast 29777 root mem REG 8,1 70536 409335 /usr/lib/python2.7/lib-dynload/_json.x86_64-linux-gnu.so

salt-mast 29777 root mem REG 8,1 10624 405673 /usr/lib/python2.7/dist-packages/psutil/_psutil_posix.x86_64-linux-gnu.so

salt-mast 29777 root mem REG 8,1 15008 402168 /usr/lib/python2.7/dist-packages/psutil/_psutil_linux.x86_64-linux-gnu.so

salt-mast 29777 root mem REG 8,1 24736 409518 /usr/lib/python2.7/lib-dynload/termios.x86_64-linux-gnu.so

salt-mast 29777 root mem REG 8,1 35296 397867 /usr/lib/x86_64-linux-gnu/libffi.so.6.0.4

salt-mast 29777 root mem REG 8,1 148376 409266 /usr/lib/python2.7/lib-dynload/_ctypes.x86_64-linux-gnu.so

salt-mast 29777 root mem REG 8,1 125208 410414 /usr/lib/x86_64-linux-gnu/libyaml-0.so.2.0.5

salt-mast 29777 root mem REG 8,1 208960 396813 /usr/lib/python2.7/dist-packages/_yaml.x86_64-linux-gnu.so

salt-mast 29777 root mem REG 8,1 29392 409359 /usr/lib/python2.7/lib-dynload/_multiprocessing.x86_64-linux-gnu.so

salt-mast 29777 root mem REG 8,1 15296 409517 /usr/lib/python2.7/lib-dynload/resource.x86_64-linux-gnu.so

salt-mast 29777 root mem REG 8,1 110472 409363 /usr/lib/python2.7/lib-dynload/_ssl.x86_64-linux-gnu.so

salt-mast 29777 root mem REG 8,1 2715840 397427 /usr/lib/x86_64-linux-gnu/libcrypto.so.1.1

salt-mast 29777 root mem REG 8,1 442984 397429 /usr/lib/x86_64-linux-gnu/libssl.so.1.1

salt-mast 29777 root mem REG 8,1 29352 409330 /usr/lib/python2.7/lib-dynload/_hashlib.x86_64-linux-gnu.so

salt-mast 29777 root mem REG 8,1 1679776 403946 /usr/lib/locale/locale-archive

salt-mast 29777 root mem REG 8,1 47632 534216 /lib/x86_64-linux-gnu/libnss_files-2.24.so

salt-mast 29777 root mem REG 8,1 47688 534218 /lib/x86_64-linux-gnu/libnss_nis-2.24.so

salt-mast 29777 root mem REG 8,1 89064 534117 /lib/x86_64-linux-gnu/libnsl-2.24.so

salt-mast 29777 root mem REG 8,1 31616 534213 /lib/x86_64-linux-gnu/libnss_compat-2.24.so

salt-mast 29777 root mem REG 8,1 1689360 533508 /lib/x86_64-linux-gnu/libc-2.24.so

salt-mast 29777 root mem REG 8,1 1063328 534091 /lib/x86_64-linux-gnu/libm-2.24.so

salt-mast 29777 root mem REG 8,1 105088 532530 /lib/x86_64-linux-gnu/libz.so.1.2.8

salt-mast 29777 root mem REG 8,1 10688 534230 /lib/x86_64-linux-gnu/libutil-2.24.so

salt-mast 29777 root mem REG 8,1 14640 534090 /lib/x86_64-linux-gnu/libdl-2.24.so

salt-mast 29777 root mem REG 8,1 135440 534225 /lib/x86_64-linux-gnu/libpthread-2.24.so

salt-mast 29777 root mem REG 8,1 153288 533338 /lib/x86_64-linux-gnu/ld-2.24.so

salt-mast 29777 root DEL REG 0,20 5801462 /dev/shm/JueU0Y

salt-mast 29777 root DEL REG 0,20 5801461 /dev/shm/GVIrnl

salt-mast 29777 root DEL REG 0,20 5801460 /dev/shm/4nCZJH

salt-mast 29777 root DEL REG 0,20 5801459 /dev/shm/k5hy63

salt-mast 29777 root DEL REG 0,20 5801458 /dev/shm/s4D7sq

salt-mast 29777 root DEL REG 0,20 4085275 /dev/shm/KX6KPM

salt-mast 29777 root DEL REG 0,20 4085274 /dev/shm/8nuavJ

salt-mast 29777 root DEL REG 0,20 4085273 /dev/shm/5F6zaG

salt-mast 29777 root DEL REG 0,20 4085272 /dev/shm/4nZZPC

salt-mast 29777 root DEL REG 0,20 4085271 /dev/shm/Z9rqvz

salt-mast 29777 root DEL REG 0,20 4085270 /dev/shm/X6CTaw

salt-mast 29777 root DEL REG 0,20 4085269 /dev/shm/lWd8Qs

salt-mast 29777 root DEL REG 0,20 4085268 /dev/shm/gdbnxp

salt-mast 29777 root DEL REG 0,20 4085267 /dev/shm/9RCCdm

salt-mast 29777 root DEL REG 0,20 4085266 /dev/shm/NdiSTi

salt-mast 29777 root DEL REG 0,20 4085265 /dev/shm/RbN9zf

salt-mast 29777 root DEL REG 0,20 4085264 /dev/shm/37kghc

salt-mast 29777 root DEL REG 0,20 4085263 /dev/shm/On8mY8

salt-mast 29777 root DEL REG 0,20 4085262 /dev/shm/JT1tF5

salt-mast 29777 root DEL REG 0,20 4085261 /dev/shm/gAlBm2

salt-mast 29777 root DEL REG 0,20 4085260 /dev/shm/LuLK3Y

salt-mast 29777 root DEL REG 0,20 4085259 /dev/shm/do7NLV

salt-mast 29777 root DEL REG 0,20 4085258 /dev/shm/SLORtS

salt-mast 29777 root DEL REG 0,20 4085257 /dev/shm/0NTVbP

salt-mast 29777 root DEL REG 0,20 4085256 /dev/shm/wba0TL

salt-mast 29777 root DEL REG 0,20 4085255 /dev/shm/ndA5BI

salt-mast 29777 root DEL REG 0,20 4085254 /dev/shm/KmCDnF

salt-mast 29777 root DEL REG 0,20 4085253 /dev/shm/Yu1b9B

salt-mast 29777 root DEL REG 0,20 4085252 /dev/shm/XDOKUy

salt-mast 29777 root DEL REG 0,20 4085251 /dev/shm/mz3jGv

salt-mast 29777 root DEL REG 0,20 4085250 /dev/shm/MBFUrs

salt-mast 29777 root DEL REG 0,20 4085249 /dev/shm/uJdYmp

salt-mast 29777 root DEL REG 0,20 4085248 /dev/shm/8p81hm

salt-mast 29777 root DEL REG 0,20 4085247 /dev/shm/o0i6cj

salt-mast 29777 root DEL REG 0,20 4085246 /dev/shm/wDMa8f

salt-mast 29777 root DEL REG 0,20 4085245 /dev/shm/rZfg3c

salt-mast 29777 root DEL REG 0,20 4085244 /dev/shm/uelDEb

salt-mast 29777 root DEL REG 0,20 4085243 /dev/shm/EiB0fa

salt-mast 29777 root DEL REG 0,20 4085242 /dev/shm/oG9nR8

salt-mast 29777 root DEL REG 0,20 4085241 /dev/shm/ZWdMs7

salt-mast 29777 root DEL REG 0,20 4085240 /dev/shm/seQb45

salt-mast 29777 root DEL REG 0,5 4085239 /dev/zero

salt-mast 29777 root DEL REG 0,20 4085226 /dev/shm/wp3JG4

salt-mast 29777 root DEL REG 0,20 4085225 /dev/shm/NKJBG6

salt-mast 29777 root DEL REG 0,20 4085224 /dev/shm/hJGtG8

salt-mast 29777 root 0r CHR 1,3 0t0 1028 /dev/null

salt-mast 29777 root 1u unix 0xffff9a30768e5c00 0t0 4067317 type=STREAM

salt-mast 29777 root 2u unix 0xffff9a30768e5c00 0t0 4067317 type=STREAM

salt-mast 29777 root 3r FIFO 0,10 0t0 4085223 pipe

salt-mast 29777 root 4w FIFO 0,10 0t0 4085223 pipe

salt-mast 29777 root 5w REG 8,1 34459999 131592 /var/log/salt/master

salt-mast 29777 root 6r CHR 1,3 0t0 1028 /dev/null

salt-mast 29777 root 7u a_inode 0,11 0 8650 [eventpoll]

salt-mast 29777 root 8r FIFO 0,10 0t0 5830185 pipe

salt-mast 29777 root 9w FIFO 0,10 0t0 5830185 pipe

salt-mast 29777 root 10u unix 0xffff9a307aa6ac00 0t0 5830186 type=STREAM

salt-mast 29777 root 11r CHR 1,9 0t0 1033 /dev/urandom

As an additional note, CPU usage has also been consistently higher (25%) than it was before (0.x%). This also appears to come primarily from the publisher process.

And no, it's not an exposed-to-the-world salt master :)

@vin01 @marcmerlin

Installing the setproctitle Python library will allow your Salt master to rename the processes to more meaningful names, and may be of benefit to troubleshooting this further since this would help determine which part of the Salt master is consuming memory.

Installing this module does require having the Python devel headers installed as well as GCC though. However, I believe once installed you may be able to remove these packages.

Having this will enable output like the following:

[root@testsaltify ~]# systemctl status salt-master

● salt-master.service - The Salt Master Server

Loaded: loaded (/usr/lib/systemd/system/salt-master.service; disabled; vendor preset: disabled)

Active: active (running) since Wed 2020-06-10 19:44:06 EDT; 36s ago

Docs: man:salt-master(1)

file:///usr/share/doc/salt/html/contents.html

https://docs.saltstack.com/en/latest/contents.html

Main PID: 15224 (/usr/bin/python)

CGroup: /system.slice/salt-master.service

├─15224 /usr/bin/python3 /usr/bin/salt-master ProcessManager

├─15230 /usr/bin/python3 /usr/bin/salt-master MultiprocessingLoggingQueue

├─15234 /usr/bin/python3 /usr/bin/salt-master ZeroMQPubServerChannel

├─15235 /usr/bin/python3 /usr/bin/salt-master EventPublisher

├─15238 /usr/bin/python3 /usr/bin/salt-master Maintenance

├─15239 /usr/bin/python3 /usr/bin/salt-master ReqServer_ProcessManager

├─15240 /usr/bin/python3 /usr/bin/salt-master FileserverUpdate

├─15241 /usr/bin/python3 /usr/bin/salt-master MWorkerQueue

├─15242 /usr/bin/python3 /usr/bin/salt-master MWorker-0

├─15243 /usr/bin/python3 /usr/bin/salt-master MWorker-1

├─15244 /usr/bin/python3 /usr/bin/salt-master MWorker-2

├─15252 /usr/bin/python3 /usr/bin/salt-master MWorker-3

└─15253 /usr/bin/python3 /usr/bin/salt-master MWorker-4

ZD-5267

@marcmerlin

Same problem here. We had 2999, did an emergency upgrade to 3000.2+ds-1

I am curious where you obtained Salt v2999 from, since that is an internal version number used for nightly builds and should be in no one's hands but QA and developers?

@dmurphy18 I pasted the wrong version, sorry. I meant 2019.2.5+ds-1.

@doesitblend thanks,

apt-get install python-setproctitle python3-setproctitle

did the trick, I now see what salt process is what.

Does the salt-master package on Debian use external packages visible to pip, or is it fully self-contained? We have 2 salt servers; one leaks and the other one does not. The one that leaks has these Python packages visible on the system:

root@salt:/etc/salt/pki/master# pip list

DEPRECATION: The default format will switch to columns in the future. You can use --format=(legacy|columns) (or define a format=(legacy|columns) in your pip.conf under the [list] section) to disable this warning.

backports-abc (0.5)

beautifulsoup4 (4.5.3)

boto (2.44.0)

cachetools (3.1.1)

chardet (2.3.0)

crcmod (1.7)

croniter (0.3.12)

cryptography (1.7.1)

enum34 (1.1.6)

futures (3.0.5)

gitdb2 (2.0.0)

GitPython (2.1.1)

google-auth (1.6.3)

google-compute-engine (2.3.7)

html5lib (0.999999999)

idna (2.2)

ipaddress (1.0.17)

Jinja2 (2.9.4)

keyring (10.1)

keyrings.alt (1.3)

lxml (3.7.1)

M2Crypto (0.24.0)

MarkupSafe (0.23)

msgpack (0.6.2)

mysqlclient (1.3.7)

pip (9.0.1)

protobuf (3.11.0)

psutil (5.0.1)

pyasn1 (0.4.6)

pyasn1-modules (0.2.6)

pycrypto (2.6.1)

pycurl (7.43.0)

pygobject (3.22.0)

python-apt (1.4.1)

python-dateutil (2.5.3)

python-gnupg (0.3.9)

pytz (2016.7)

pyxdg (0.25)

PyYAML (3.12)

pyzmq (16.0.2)

requests (2.12.4)

rsa (4.0)

salt (2019.2.4)

SecretStorage (2.3.1)

setproctitle (1.1.10)

setuptools (33.1.1)

singledispatch (3.4.0.3)

six (1.10.0)

smmap2 (2.0.1)

systemd-python (233)

tornado (4.4.3)

urllib3 (1.19.1)

webencodings (0.5)

wheel (0.29.0)

@doesitblend as luck would have it, the process that leaks has no extra information in its name:

root 18779 4.8 22.3 14045524 13831568 ? S 00:54 5:07 /usr/bin/python2 /usr/bin/salt-master

root 18788 5.2 0.2 4719788 140016 ? Sl 00:54 5:29 /usr/bin/python2 /usr/bin/salt-master MWorkerQueue

root 18778 5.2 0.1 1187544 89732 ? Sl 00:54 5:34 /usr/bin/python2 /usr/bin/salt-master Reactor

root 24276 0.9 0.1 1014376 66032 ? Sl Jun03 98:18 /usr/bin/python2 /usr/bin/salt-minion

root 24224 0.1 0.1 672120 81584 ? Sl Jun03 20:35 /usr/bin/python2 /usr/bin/salt-minion

root 18953 1.0 0.1 641360 118096 ? Sl 00:54 1:09 /usr/bin/python2 /usr/bin/salt-master MWorker-48

root 18830 1.3 0.1 639484 117056 ? Sl 00:54 1:22 /usr/bin/python2 /usr/bin/salt-master MWorker-3

root 18776 2.5 0.2 522380 171320 ? S 00:54 2:42 /usr/bin/python2 /usr/bin/salt-master EventPublisher

root 18773 1.9 0.3 505096 186184 ? Sl 00:54 2:00 /usr/bin/python2 /usr/bin/salt-master ZeroMQPubServerChannel

Its open files probably say what it is, though:

/usr/bin/ 18779 root DEL REG 0,20 105335341 /dev/shm/oxy5wt

/usr/bin/ 18779 root DEL REG 0,20 105335340 /dev/shm/K2GS1C

/usr/bin/ 18779 root DEL REG 0,20 105335339 /dev/shm/j80FwM

/usr/bin/ 18779 root DEL REG 0,20 105335338 /dev/shm/EzFt1V

/usr/bin/ 18779 root DEL REG 0,20 105335337 /dev/shm/nHHhw5

/usr/bin/ 18779 root DEL REG 0,20 105335336 /dev/shm/JE960e

/usr/bin/ 18779 root DEL REG 0,20 105335335 /dev/shm/3wDjxo

/usr/bin/ 18779 root DEL REG 0,20 105335334 /dev/shm/xokw3x

/usr/bin/ 18779 root DEL REG 0,20 105335333 /dev/shm/U2uJzH

/usr/bin/ 18779 root DEL REG 0,20 105335332 /dev/shm/AtXW5Q

/usr/bin/ 18779 root DEL REG 0,20 105335331 /dev/shm/5tWbC0

/usr/bin/ 18779 root DEL REG 0,20 105335330 /dev/shm/q8yZ99

/usr/bin/ 18779 root DEL REG 0,20 105335329 /dev/shm/detNHj

/usr/bin/ 18779 root DEL REG 0,20 105335328 /dev/shm/YkKBft

/usr/bin/ 18779 root DEL REG 0,20 105335327 /dev/shm/VzdqNC

/usr/bin/ 18779 root DEL REG 0,20 105335326 /dev/shm/ZcYflM

/usr/bin/ 18779 root DEL REG 0,20 105335325 /dev/shm/otje8V

/usr/bin/ 18779 root DEL REG 0,20 105335324 /dev/shm/4a9cV5

/usr/bin/ 18779 root DEL REG 0,20 105335323 /dev/shm/8eicIf

/usr/bin/ 18779 root DEL REG 0,20 105335322 /dev/shm/PUKbvp

/usr/bin/ 18779 root DEL REG 0,20 105335321 /dev/shm/JgRciz

/usr/bin/ 18779 root DEL REG 0,5 105335320 /dev/zero

/usr/bin/ 18779 root DEL REG 0,20 105335307 /dev/shm/7YoG6I

/usr/bin/ 18779 root DEL REG 0,20 105335306 /dev/shm/vLkEU4

/usr/bin/ 18779 root DEL REG 0,20 105335305 /dev/shm/lrrCIq

/usr/bin/ 18779 root 0r CHR 1,3 0t0 1028 /dev/null

/usr/bin/ 18779 root 1u unix 0xffff8888d9276000 0t0 105332538 type=STREAM

/usr/bin/ 18779 root 2u unix 0xffff8888d9276000 0t0 105332538 type=STREAM

/usr/bin/ 18779 root 3r FIFO 0,10 0t0 105335304 pipe

/usr/bin/ 18779 root 4w FIFO 0,10 0t0 105335304 pipe

/usr/bin/ 18779 root 5w REG 8,1 6133423 131562 /var/log/salt/master

/usr/bin/ 18779 root 6r CHR 1,3 0t0 1028 /dev/null

/usr/bin/ 18779 root 7u a_inode 0,11 0 9674 [eventpoll]

/usr/bin/ 18779 root 8r FIFO 0,10 0t0 105336771 pipe

/usr/bin/ 18779 root 9w FIFO 0,10 0t0 105336771 pipe

/usr/bin/ 18779 root 10u unix 0xffff888860346000 0t0 105336772 type=STREAM

/usr/bin/ 18779 root 11u a_inode 0,11 0 9674 [eventpoll]

/usr/bin/ 18779 root 12r FIFO 0,10 0t0 105350526 pipe

/usr/bin/ 18779 root 13r CHR 1,9 0t0 1033 /dev/urandom

/usr/bin/ 18779 root 14w FIFO 0,10 0t0 105350526 pipe

recvfrom(10, "\202\244body\332\21\351salt/beacon/n1-1-ssd0-us-central1-f-34-z1a7/status/2020-06-11T02:54:07.133298\n\n\203\246_stamp\2722020-06-11T02:54:07.149098\244data\205\247loadavg\203\24615-min\313?\271\231\231\231\231\231\232\2455-min\313?\236\270Q\353\205\36\270\2451-min\313?\261\353\205\36\270Q\354\247meminfo\336\0.\254Active(file)\202\244unit\242kB\245v"..., 65536, 0, NULL, NULL) = 4600

epoll_ctl(7, EPOLL_CTL_MOD, 10, {EPOLLERR|EPOLLHUP, {u32=10, u64=94330366722058}}) = 0

epoll_wait(7, [], 1023, 0) = 0

write(9, "x", 1) = 1

getpid() = 18779

getpid() = 18779

Is it fair to say that it's the process that gathers logs from all minions?

I should add that it leaks 6GB per hour, so it dies several times a day

01:00.log:root 18779 5.9 0.6 635420 421708 ? R 00:54 0:18 /usr/bin/python2 /usr/bin/salt-master

01:20.log:root 18779 5.2 4.0 2720008 2506324 ? S 00:54 1:19 /usr/bin/python2 /usr/bin/salt-master

01:40.log:root 18779 5.0 9.1 5900776 5686884 ? S 00:54 2:18 /usr/bin/python2 /usr/bin/salt-master

02:00.log:root 18779 4.8 14.0 8896976 8682856 ? S 00:54 3:09 /usr/bin/python2 /usr/bin/salt-master

02:20.log:root 18779 4.8 18.2 11501544 11287464 ? S 00:54 4:06 /usr/bin/python2 /usr/bin/salt-master

02:40.log:root 18779 4.8 22.3 14045524 13831568 ? S 00:54 5:07 /usr/bin/python2 /usr/bin/salt-master

03:00.log:root 18779 4.7 25.9 16241184 16027336 ? S 00:54 5:58 /usr/bin/python2 /usr/bin/salt-master

03:20.log:root 18779 4.7 29.1 18216324 18002528 ? S 00:54 6:50 /usr/bin/python2 /usr/bin/salt-master

03:40.log:root 18779 4.6 32.5 20364308 20150100 ? S 00:54 7:41 /usr/bin/python2 /usr/bin/salt-master

04:00.log:root 18779 4.7 36.6 22858156 22643852 ? S 00:54 8:53 /usr/bin/python2 /usr/bin/salt-master
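The growth rate implied by these samples can be worked out directly. A minimal sketch, assuming each line is a `ps aux` row prefixed with an `HH:MM.log:` file name as shown above; the function names are hypothetical.

```python
def parse_ps_sample(line):
    """Parse one 'HH:MM.log:<ps aux row>' line into (minutes, rss_kb)."""
    stamp, ps_row = line.split(".log:", 1)
    hours, minutes = stamp.split(":")
    fields = ps_row.split()
    # ps aux columns: USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND
    return int(hours) * 60 + int(minutes), int(fields[5])


def growth_kb_per_hour(lines):
    """Average RSS growth between the first and last sample, in kB/hour."""
    samples = [parse_ps_sample(line) for line in lines]
    (t0, rss0), (t1, rss1) = samples[0], samples[-1]
    return (rss1 - rss0) * 60 / (t1 - t0)


# First and last samples copied from the listing above.
samples = [
    "01:00.log:root 18779 5.9 0.6 635420 421708 ? R 00:54 0:18 /usr/bin/python2 /usr/bin/salt-master",
    "04:00.log:root 18779 4.7 36.6 22858156 22643852 ? S 00:54 8:53 /usr/bin/python2 /usr/bin/salt-master",
]
rate = growth_kb_per_hour(samples)
print(f"{rate / 1024 / 1024:.1f} GiB/hour")
```

Over the three hours between the first and last sample this works out to roughly 7 GiB of resident memory per hour, the same order of magnitude as the estimate given above.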

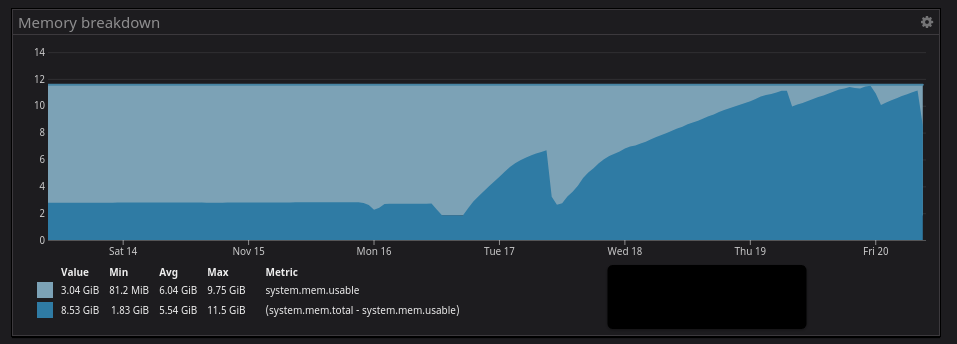

I've been observing a significant increase in memory utilization on a master server as I increase the number of minions connecting to it. I have been doing a few hundred minion installs a week for the last 6-8 weeks. I periodically have to restart the master services as the server runs low on available RAM, and the interval between restarts has been shrinking.

The server has 64GB RAM. Servicing a few thousand minions. Just about the only thing it's doing is a single highstate when a minion's added, and then scheduled test.ping every few minutes to keep the TCP Transport connected, working around #57085

Each dip is a restart of the salt masters running on the server.

If there's any information I can collect to help here, I'd be glad to. Or I can open a new bug report if that'd be better

I have not observed this for quite some time now; it looks like it is already fixed?

I am running version 3001 right now.

I have the same problem. But when I start the salt-api service with tornado, memory leaks in salt-master. If I use cherrypy as the API service, the problem does not occur.

However, when I use cherrypy as the API service, it throws the error 'too many open files', even though I set the max_open_files configuration option.
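When chasing 'too many open files', it can help to check the file descriptor limits a process actually runs with, since Salt's documentation notes that `max_open_files` cannot exceed the operating system's hard limit. The following is a minimal sketch using Python's standard `resource` module. It reports the limits of the process running the snippet, not of salt-master itself, and the helper names are hypothetical.

```python
import resource


def nofile_limits():
    """Return the (soft, hard) open-file limits of the current process."""
    return resource.getrlimit(resource.RLIMIT_NOFILE)


def describe(limit):
    """Render a limit value, spelling out the 'unlimited' sentinel."""
    return "unlimited" if limit == resource.RLIM_INFINITY else str(limit)


soft, hard = nofile_limits()
print(f"soft={describe(soft)} hard={describe(hard)}")
```

For an already running master on Linux, the equivalent numbers appear in the "Max open files" row of `/proc/<pid>/limits`.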

I have the same issue.

Does someone have a solution for this?

The bug is already open for a few months.

Thanks

Does 3000.3 work well, and is the problem only in .2?

Does 3000.3 work well, and is the problem only in .2?

No, it also occurs in 3000.3.

I recently upgraded to 3000.3 and python3 and I don't see an issue. This might be a python2-only issue.

Can somebody confirm that they are only using python2? Maybe they can install the python3 version and test as well?

Also, a detailed list of installed packages would help, specifically msgpack.

I have version: 0.6.2-2.el7.x64

Also, what would be a good way to reproduce? I keep sending

salt \* --async test.ping

in a loop as a test.
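The loop described above could be scripted as follows. This is a minimal sketch, assuming the `salt` CLI is on the PATH of the master; the `ping_loop` helper and its parameters are hypothetical, and whether this load actually triggers the leak is not confirmed in this thread.

```python
import subprocess
import time

# The command quoted in the comment above; '*' targets every minion.
# Passing a list avoids shell globbing, so no backslash escape is needed.
PING_COMMAND = ["salt", "*", "--async", "test.ping"]


def ping_loop(iterations, delay_seconds=1.0, run=subprocess.run):
    """Issue the async test.ping `iterations` times and return the exit codes.

    `run` is injectable so the loop can be exercised without a Salt master.
    """
    exit_codes = []
    for _ in range(iterations):
        result = run(PING_COMMAND, check=False)
        exit_codes.append(result.returncode)
        time.sleep(delay_seconds)
    return exit_codes
```

Running `ping_loop(1000)` while sampling the memory of the salt-master processes would show whether this load alone is enough to make usage grow.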

This still happens with 3002.1+ds-1 (Debian 9).

I can confirm this: 3002.1+ds-1 (Ubuntu 18.04 and Ubuntu 16.04)

I have to restart the salt-master service every night...

After updating our salt master pair to 3002.1-1.el7 on Monday night, we're seeing lots of memory being used, requiring a service restart.

@JPerkster yes, please upgrade to 3002.2

After upgrading to 3002.2, looks fine so far.

@fangpsh yeah, there is a tiny memory leak in Salt's loader, and to address another issue we made some changes which actually made the tiny leak massive.

We reverted our changes to get back to the old loader code for 3002.2.

For 3003, however, we're working to address even that tiny leak.

Looks fine for me too. After 3 days without a service restart, the used memory is stable.

3002.2 fixed 100% CPU usage but I'm still seeing memory usage at 100% which eventually presents itself in the logs as gpg render cannot allocate. Should I open a new issue?

@s0undt3ch I think there's still something going on that is massively hitting performance.

I have a highstate that normally runs in 10min. On 3002.2 it takes about 90min now.

I am seeing this same behavior in CentOS 8 salt 3002.2. This is with or without gitfs enabled. With or without gpg encryption of sdb or pillar data.
