salt-master process leaks memory when running in a container

Description of Issue/Question

When running in docker/kubernetes the salt-master process leaks memory over time

I have confirmed the same behavior with or without our custom engine installed

Setup

Dockerfile:

FROM centos:latest as base

RUN yum -y update && \

yum -y install python-ldap python-setproctitle epel-release git && \

yum -y install https://repo.saltstack.com/yum/redhat/salt-repo-2018.3-1.el7.noarch.rpm && \

yum clean all

FROM base

RUN yum -y install salt-master virt-what python-pygit2 python-pip && \

yum clean all

RUN pip install pika

ADD Dockerfiles/salt-master/entrypoint.sh /entrypoint.sh

RUN chmod 755 /entrypoint.sh

RUN useradd saltapi

RUN echo "salt" | passwd --stdin saltapi

EXPOSE 4505/tcp 4506/tcp

ENTRYPOINT ["/entrypoint.sh"]

CMD ["salt-master", "-l", "info"]

Enterypoint.sh

#!/bin/bash

# Sync gitfs

/usr/bin/salt-run saltutil.sync_all

# This may be redundant, but ensure we sync the

# engines after we've got the latest code from gitfs

/usr/bin/salt-run saltutil.sync_engines

touch /tmp/entrypoint_ran

# Ensure that the saltapi password matches the

# $SALTAPI_PASSWORD environment variable

stty -echo

if [ -n "$SALTAPI_PASSWORD" ];

then echo ${SALTAPI_PASSWORD} | passwd --stdin saltapi;

fi

stty echo

# Run command

exec "$@"

master.conf

hash_type: sha512

state_aggregate: True

log_level_logfile: info

fileserver_backend:

- roots

- git

gitfs_remotes:

- https://states.gitfs.repo

- user: secret

- password: secret

ext_pillar:

- git:

# HTTPS authentication

- master https://pillar.gitfs.repo:

- user: secret

- password: secret

external_auth:

pam:

saltapi:

- .*

- '@runner'

- '@wheel'

custom:

rabbitmq:

user: salt

password: super secret

server: superdupersecret

exchange: salt-events

vhost: salt-events

queue: salt-events

engines:

- custom-salt: {}

Steps to Reproduce Issue

1) Launch container

2) Add one or more minions

3) Watch as graph slowly rises

Versions Report

$ kubectl exec -it saltstack-54c9c75cc4-6mlf9 -c salt-master -- salt --versions-report

Salt Version:

Salt: 2018.3.3

Dependency Versions:

cffi: 1.6.0

cherrypy: Not Installed

dateutil: Not Installed

docker-py: Not Installed

gitdb: Not Installed

gitpython: Not Installed

ioflo: Not Installed

Jinja2: 2.7.2

libgit2: 0.26.3

libnacl: Not Installed

M2Crypto: Not Installed

Mako: Not Installed

msgpack-pure: Not Installed

msgpack-python: 0.5.6

mysql-python: Not Installed

pycparser: 2.14

pycrypto: 2.6.1

pycryptodome: Not Installed

pygit2: 0.26.4

Python: 2.7.5 (default, Jul 13 2018, 13:06:57)

python-gnupg: Not Installed

PyYAML: 3.11

PyZMQ: 15.3.0

RAET: Not Installed

smmap: Not Installed

timelib: Not Installed

Tornado: 4.2.1

ZMQ: 4.1.4

System Versions:

dist: centos 7.5.1804 Core

locale: ANSI_X3.4-1968

machine: x86_64

release: 4.4.0-133-generic

system: Linux

version: CentOS Linux 7.5.1804 Core

All 37 comments

ZD-2933

I have nearly identical issue though not in container. I have completely vanila Salt 2018.3.2 deployed with only 2 minions, only configuration changes from the default are the hostname of the salt master.

After a week of NOTHING running at all from the master the memory usage is nearly 5GB. At this point we are considering installing a cron task that restarts the service just to avoid this, which is frankly an insane solution. Is this really a P2 and not a P1?

Wow, I've been using salt since 0.14 ... had all kind of issues, this is literally the WORST. @rallytime @DmitryKuzmenko do you have any instructions on what to do, how to fix ... or should we all just abandon all hope .. cause currently salt is just unusable. How can a DEFAULT install on widely used OS (Ubuntu 16.04 LTS) be having such issues?

I'm not sure if this helps, but I have two salt masters running on two different kubernetes clusters in two different datacenter in two different states. One master has a single minion and the other master has zero minions. The memory leak is _exactly_ the same.

It's interesting to me that regardless of which cluster or if the master has a minion or not, the leak grows at the same rate. The bars are parallel at the exact same trajectory.

wow the silence on tis bug is completely deafening ... despite the obvious P1 status

Sorry for the silence guys. I'm here and working on it.

@mikeadamz is it possible to get output of ps aux | grep salt on one of VMs where master already grown enough to see what subprocess of master has that problem?

[root@saltstack-54c9c75cc4-6mlf9 /]# ps aux

USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND

root 1 0.0 0.3 310484 52384 ? Ss Oct26 0:37 /usr/bin/python /usr/bin/salt-master -l info ProcessManager

root 135 0.0 0.1 207264 22372 ? S Oct26 0:00 /usr/bin/python /usr/bin/salt-master -l info MultiprocessingLoggingQueue

root 140 0.0 0.2 389680 39788 ? Sl Oct26 0:00 /usr/bin/python /usr/bin/salt-master -l info ZeroMQPubServerChannel

root 143 0.0 0.2 308548 39520 ? S Oct26 0:00 /usr/bin/python /usr/bin/salt-master -l info EventPublisher

root 144 0.0 0.2 314232 46728 ? S Oct26 3:15 /usr/bin/python /usr/bin/salt-master -l info

root 145 0.5 1.6 608720 270652 ? S Oct26 102:29 /usr/bin/python /usr/bin/salt-master -l info Maintenance

root 146 0.0 0.2 310348 40676 ? S Oct26 0:36 /usr/bin/python /usr/bin/salt-master -l info ReqServer_ProcessManager

root 147 0.0 0.2 687344 42700 ? Sl Oct26 0:10 /usr/bin/python /usr/bin/salt-master -l info MWorkerQueue

root 148 0.0 0.5 583584 85080 ? Sl Oct26 0:30 /usr/bin/python /usr/bin/salt-master -l info MWorker-0

root 153 0.0 0.5 583816 85500 ? Sl Oct26 0:31 /usr/bin/python /usr/bin/salt-master -l info MWorker-1

root 156 0.0 0.5 434684 83908 ? Sl Oct26 0:31 /usr/bin/python /usr/bin/salt-master -l info MWorker-2

root 157 0.0 0.5 584080 85508 ? Sl Oct26 0:32 /usr/bin/python /usr/bin/salt-master -l info MWorker-3

root 158 0.0 0.5 583628 85044 ? Sl Oct26 0:30 /usr/bin/python /usr/bin/salt-master -l info MWorker-4

root 159 0.1 1.5 657024 250480 ? Sl Oct26 29:22 /usr/bin/python /usr/bin/salt-master -l info FileserverUpdate

root 29856 0.0 0.0 11832 3048 pts/0 Ss 13:13 0:00 /bin/bash

root 29877 0.0 1.5 607692 261288 ? R 13:13 0:00 /usr/bin/python /usr/bin/salt-master -l info Maintenance

root 29878 0.0 0.0 51720 3536 pts/0 R+ 13:13 0:00 ps aux

[root@saltstack-54c9c75cc4-6mlf9 /]#

@mikeadamz thank you! It's very helpful.

@mikeadamz Are you guys using any gitfs backends and if so, which library is being used (GitPython or pygit2)?

Yes. We’re using pygit2

@dwoz : no backend

@mikeadamz how big is your gitrepo? branches, refs etc? If you flip the library from pygit2 to GitPython, what happens?

@isbm I am able to reproduce this issue without using Git in my environment at all.

I have created https://github.com/doesitblend/quicklab to help provide an environment where you can reproduce the issue. Just update build the images as stated in the repo and then let it run. Use docker stats and watch memory usage grow over about an hour.

This was only tested on MacOS, so if on Linux you may need to add in the /sys mount to run systemd.

I know that @DmitryKuzmenko has been working on this issue and has reproduced the issue in his lab environment. I believe that he is making progress, but no solution to this issue just yet.

My results for this moment.

I made code review and found nothing in the core logic. Probably the issue is in some modules specifics but more likely I've just not found.

Trying to reproduce it in some ways I've got some positive results running salt over the weekend in the quicklab environment. But as I saw it stopped to grow after 1 day of working. I modified configuration and ran it again (till this moment) to check that.

Tomorrow if I'll proof the fact of reproduction I'll re-run it with memory monitoring tools.

@DmitryKuzmenko anything new on this. Still working around this via cron job which restarts master

I've started poking at this, and while I'm not seeing the same results you're getting, so far there does appear to be an increase. My docker is running on a Mac, if that makes a difference 🤷♂️

My increase doesn't appear to be as drastic... I did launch via docker-compose rather than kubernetes, but that doesn't seem like it should cause a problem.

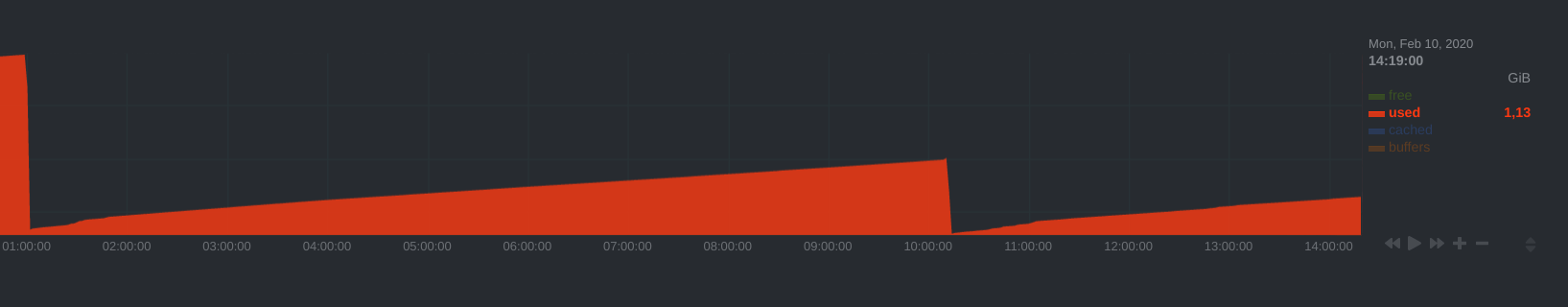

I tried an experiment without the git repos enabled like you have in this Dockerfile. Here's what I'm seeing (after over an hour of running):

That's just hovering around 300MiB RAM. Pretty clear GC cycles. At this point, I have some suspicions around the gitfs/ext_pillar backends - will look into it further tomorrow.

Oh yeah. That'll do it. It looks like the pygit2 backend also has a memory leak 😢

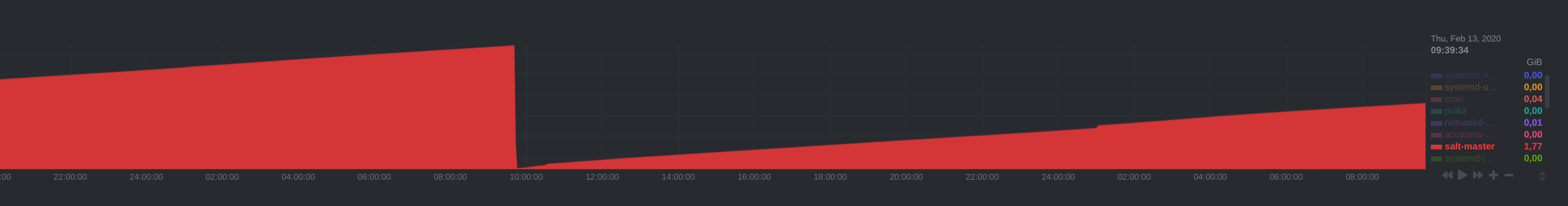

Interesting follow-up, leaving it running all weekend:

Memory use leveled out around 800mb yesterday and pretty much just stayed there. I'm not sure why exactly it leveled off. I'm really curious if a larger repository will change the amount of memory usage.

I just ran into this with gitfs and pygit2 provider.

I believe it is not specific to salt running in containers but salt running with gitfs enabled using pygit2 (as is also indicated in above comments). I have not tested gitpython yet however before enabling gitfs, memory usage was stable and now with gitfs enabled, it just keeps going up.

I will be happy to share core dump and memory profile from my vagrant box if that can help.

@DmitryKuzmenko it will be great to hear an update on this.

@vin01 that probably would be helpful.

Tomorrow I'm going to run pygit2 out side of salt to see if I can get a leak. If I do get a leak I'll try to find the function or functions that are doing the most damage.

pygit2 is leaking memory in multiple place. I think it is time to drop pygit2 and write salt-git.

@cmcmarrow Thanks for investigating and attempting to fix it. I would like to clarify if the plan now is to use dulwich instead of implementing salt-git?

I would like to hear because in my opinion this issue should be having a high priority as it basically renders the gitfs functionality unusable with machines regularly eating up memory and as pointed out above in the thread there are even cases where cron jobs are being used to restart master instance .. and it really has been a long time with this issue open since last year. gitpython already is not a nice option and not recommended for daemon like services because of memory leak issues.

I am not sure how the prioritization and tracking has worked with this issue (despite having high severity and P2 labels) but just to share my opinion, it has been really astounding for me that such a feature which allows seamless scaling and CI is really unusable and has been like this for almost an year. I believe it might be possible to finalize a long term stable approach so that people willing to contribute could put effort into testing some ideas in that direction.

Apologies for the rant.

I feel like pygit2 is still the way to go, as its foundation libgit2 is THE git library - used through corresponding bindings in basically every programming language/framework and heavily battle tested in all kind of large-scale git environments (GitHub, Bitbucket, ...).

Instead of re-inventing the wheel over and over again (GitPython, Dulwich, pygit2) I feel we should rather look into addressing the issues in pygit2 itself and thereby solving them at the right place and helping every other pygit2 user as well.

So since my new ticket got closed... what is the status here? Is there any progress? for some reason this also breaks new minion connections as the socket is not closed from the master when it runs out of memory

@eliasp some time ago I opened an issue on pygit2 because I discovered there was a memory leak. The issue is this one. In the issue you can find how to reproduce the problem as well as my results.

Unfortunately, I tried to investigate by myself but most of the code is a link to the C implementation of libgit2, and I can't figure it out. Maybe you guys can help on this!

So turns out if you have less than 2800 commits in your gitfs, the memleak is "relativ" small and will not kill your instance for months. If one hits a magic 2900+ commits salt eats 1.5G in 12 Hours, with each new commit adding a few MiB per hour.

So maybe P1 and some kind of progress? @dwoz @eliasp

Based on the response in the pygit2 repo they appear to have fixed some of these issues.

https://github.com/libgit2/pygit2/issues/943#issuecomment-578721216

Fix was backported to 1.0.x branch, so if someone can verify this works for them that would be splendid.

nope, did not fix the issue

```

salt --versions-report

Salt Version:

Salt: 2019.2.3

Dependency Versions:

cffi: 1.14.0

cherrypy: Not Installed

dateutil: 2.6.1

docker-py: Not Installed

gitdb: 2.0.6

gitpython: 3.0.5

ioflo: Not Installed

Jinja2: 2.10

libgit2: 0.28.4

libnacl: Not Installed

M2Crypto: Not Installed

Mako: Not Installed

msgpack-pure: Not Installed

msgpack-python: 0.5.6

mysql-python: Not Installed

pycparser: 2.19

pycrypto: 2.6.1

pycryptodome: Not Installed

pygit2: 1.0.3

Python: 3.6.9 (default, Nov 7 2019, 10:44:02)

python-gnupg: 0.4.1

PyYAML: 3.12

PyZMQ: 16.0.2

RAET: Not Installed

smmap: 2.0.5

timelib: Not Installed

Tornado: 4.5.3

ZMQ: 4.2.5

System Versions:

dist: Ubuntu 18.04 bionic

locale: UTF-8

machine: x86_64

release: 4.15.0-1057-aws

system: Linux

version: Ubuntu 18.04 bionic

```

4 hours runtime with 1.0.2 got me to 1.11 GiB, while 1.0.3 got me to 1.13 GiB

if anyone asks v3000 did not fix this

salt --versions-report

Salt Version:

Salt: 3000

Dependency Versions:

cffi: 1.14.0

cherrypy: Not Installed

dateutil: 2.6.1

docker-py: Not Installed

gitdb: 2.0.6

gitpython: 3.0.5

Jinja2: 2.10

libgit2: 0.28.4

M2Crypto: Not Installed

Mako: Not Installed

msgpack-pure: Not Installed

msgpack-python: 0.5.6

mysql-python: Not Installed

pycparser: 2.19

pycrypto: 2.6.1

pycryptodome: Not Installed

pygit2: 1.0.3

Python: 3.6.9 (default, Nov 7 2019, 10:44:02)

python-gnupg: 0.4.1

PyYAML: 3.12

PyZMQ: 16.0.2

smmap: 2.0.5

timelib: Not Installed

Tornado: 4.5.3

ZMQ: 4.2.5

System Versions:

dist: Ubuntu 18.04 bionic

locale: UTF-8

machine: x86_64

release: 4.15.0-1057-aws

system: Linux

version: Ubuntu 18.04 bionic

I see pygit2 is including the fix, is that the one you are using?

If possible try modifying your install to use valgrind: https://github.com/libgit2/pygit2/blob/master/docs/development.rst#running-valgrind

yes, it has the "latest" pygit2 fix, valgrind may be possible.

@wimo7083 can you reproduce this issue at all ?

this one is too big to get into Magnesium now and needs more attention, removing from Magnesium scope and back to planning for Aluminium

Most helpful comment

I feel like

pygit2is still the way to go, as its foundation libgit2 is THE git library - used through corresponding bindings in basically every programming language/framework and heavily battle tested in all kind of large-scale git environments (GitHub, Bitbucket, ...).Instead of re-inventing the wheel over and over again (GitPython, Dulwich, pygit2) I feel we should rather look into addressing the issues in pygit2 itself and thereby solving them at the right place and helping every other pygit2 user as well.