Runtime: JIT: devirtualization next steps

dotnet/coreclr#9230 introduced some simple devirtualization. Some areas for future enhancements:

- [x] Devirtualize interface calls. Logic for this is more complex than for virtual calls (see dotnet/coreclr#10192, dotnet/coreclr#10371).

- [x] Devirtualize calls on struct types. Make sure the right method entry point is invoked; virtual struct methods come in "boxed" and "unboxed" flavors (see dotnet/coreclr#14698, which removes the box).

- [ ] After calling the unboxed entry point, try to remove the upstream box and copy too (likely requires first-class structs; some progress in dotnet/coreclr#14698 and dotnet/coreclr#17006).

- [ ] If we can't call the unboxed entry point, we may still know that the method called won't allow the `this` pointer in the call to escape. So we can perhaps stack-allocate the object in its boxed form and pass that. Here we'd be counting on the fact that methods on mutable GC value types should already be using checked write barriers for updates.

- [ ] Likewise we can consider using "boxed on stack" for some boxed arguments to well known methods.

- [ ] Refactor the jit to retain type information for a larger variety of tree nodes. Likely requires carefully breaking the widespread assumption in the jit that class handles are captured only for struct types.

- [ ] Do some simplistic type propagation during importation. For instance during `fgFindBasicBlocks` the jit could count how many times each local appears in a `starg` or `ldarga` instruction. If there is just one `starg` (which should be a fairly common case) then at the `starg` the jit can check the type of the value being assigned and use it if more precise than the local's declared type. In this case it is also safe to propagate the exactness flag. (Some of this is now done, see dotnet/coreclr#10471, dotnet/coreclr#10633, dotnet/coreclr#10867, dotnet/coreclr#21251)

- [ ] Consolidate tracking of types within the jit for structs and classes. Right now there is a general assumption that the class handle fields in various places (trees, lcl vars) imply struct types.

- [ ] Do more ambitious type propagation during optimization, once we have SSA. We should be able to trigger late devirtualization during optimization too.

- [ ] Improve the ability of the jit to gather type information from various operations, for instance type tests (see #9117).

- [ ] Enable inlining of late devirtualized calls -- at least for those calls devirtualized during inlining (see #39873; box cleanup as well, see #39519)

- [ ] Experiment with guarded devirtualization, by inserting appropriate type checks. Some preliminary work for this is noted in #9028. The basic transformation is now available via dotnet/coreclr#21270

- [ ] Experiment with speculative devirtualization by considering what is known about overrides at the time a method is jitted.

- [ ] Obtain more accurate return types from calls to shared generic methods whose return types are generic. This is typical of many collection types, eg `List<T>.get_Item` returns `T`. In many cases the caller will know the type of `T` exactly, but currently the jit ends up with the shared generic ref type placeholder type `__Canon`. There's an example of this in the `fromcollections` regression test. See dotnet/coreclr#10172 for a partial fix.

- [ ] Likewise, improve types in the inlinee when inlining shared generic methods (inlining effectively unshares the method); this may trigger devirtualization. Right now we may record shared types for inlinee args and locals, when we could potentially record unshared types (see dotnet/coreclr#10432, also #38477).

- [ ] Update logic to handle indirection patterns seen during prejit (see notes in dotnet/coreclr#9276)

- [ ] Improve test coverage by adding final/sealed to classes in existing test cases, perhaps creating some kind of tooling to experimentally attempt this and decide when it is correct to do so.

- [ ] Improve checked build test coverage by enabling jit optimization of loader tests, or else start running release build tests against a checked jit.

- [x] Sharpen types for temps used to pass inlinee args early (issue #8790; pr dotnet/coreclr#13530)

- [x] Sharpen return type if all reachable returns have more specialized type than the method's declared return type and there is a return spill temp (#15766)

- [ ] Avoid or propagate improved types through various layers of spill temps (#15783; some progress in dotnet/coreclr#20640)

- [x] See if there is any way to learn about actual types from readonly statics (issue dotnet/runtime#5502; implemented via dotnet/coreclr#20886)

- [ ] Enhance inlining heuristics to anticipate when inlining will enable devirtualization (#17935, also see #11711)

- [ ] Look into devirtualizing `ldvirtftn` and calls that internally translate to `ldvirtftn` (#32129)

- [ ] (likely rare) if devirtualization results in a call to an intrinsic, expand the intrinsic appropriately

category:cq

theme:devirtualization

skill-level:expert

cost:extra-large

All 33 comments

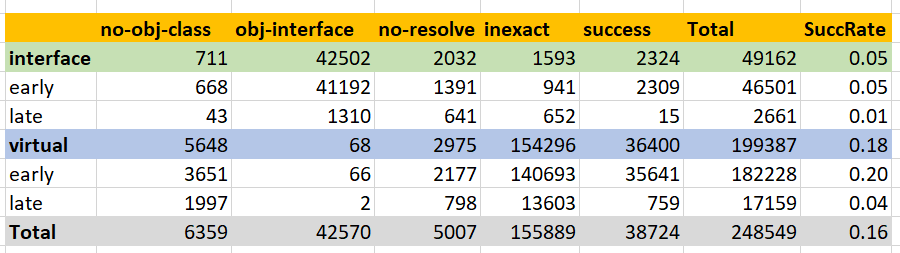

Some data from crossgen of System.Private.CoreLib, after dotnet/coreclr#10192. This shows the relative likelihood of devirtualization success or failure based on the operation feeding the call. The accounting is a bit tricky because this data includes callsites in inline candidates -- so from an IL standpoint some sites may be counted multiple times.

Overall around 8% of call sites are getting devirtualized. Virtual calls fare a bit better than interface calls. A number of operators never supply types.

It's not easy to know what a realistic upper bound is on how many sites can be devirtualized, but my feeling is that there is still quite a bit of headroom left and a number like 12% might not be out of the question.

| | Fail | Success | Total |
| :-- | --: | --: | --: |
| VIRTUAL | 7229 | 748 | 7977 |
| box | 45 | | 45 |
| call | 907 | 9 | 916 |
| cns_str | | 2 | 2 |
| field | 716 | 111 | 827 |
| ind | 1213 | | 1213 |
| index | 124 | | 124 |
| intrinsic | 225 | | 225 |
| lcl_var | 3383 | 568 | 3951 |
| ret_expr | 616 | 58 | 674 |
| INTERFACE | 4086 | 220 | 4306 |
| box | 34 | | 34 |
| call | 105 | | 105 |
| field | 935 | 76 | 1011 |
| ind | 32 | | 32 |
| lcl_var | 2955 | 140 | 3095 |
| ret_expr | 25 | 4 | 29 |
| Total | 11315 | 968 | 12283 |

Devirtualization can fail for several reasons; here's a crude accounting:

| | Count |
| :-- | --: |
| INTERFACE | 4086 |
| no-derived-method | 12 |
| no-type | 392 |
| obj-interface-type | 3682 |
| VIRTUAL | 7229 |
| no-derived-method | 29 |
| obj-interface-type | 120 |
| no-type | 2177 |
| not-final-or-exact | 4903 |
| Total | 11315 |

We should be able to reduce all of these numbers with improved tracking of types in the jit.

Update after dotnet/coreclr#10371. There is a marked decrease in interface success because we cannot safely devirtualize interface calls just with a final (sealed) method -- we need a final or exact class. Explicit interface implementations in C# mark the corresponding methods as final so this was incorrectly firing a lot before the fix.

| | Success | Fail | Total | Succ % |
| :--- | ---: | ---: | ---: | ---: |
| Virtual | 749 | 7206 | 7955 | 9.42 % |
| Interface | 96 | 4213 | 4309 | 2.23 % |
| Total | 845 | 11419 | 12264 | 6.89 % |

(edit: had virtual/interface labels swapped; now fixed)
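To make the final-method versus final-class distinction concrete, here is a minimal C# sketch. The type names (`IShape`, `Base`, `Derived`, `FinalMethodDemo`) are hypothetical and not taken from the runtime sources. An explicit interface implementation is emitted as a final method, yet a derived class can still re-implement the interface, so a final method alone does not pin down the target of an interface call.

```csharp
using System;

public interface IShape
{
    string Name();
}

public class Base : IShape
{
    // Explicit interface implementation: the C# compiler emits this as a
    // private virtual method that is also marked final (sealed).
    string IShape.Name() => "Base";
}

// Derived lists IShape again, which re-implements the interface. Interface
// dispatch on a Derived instance reaches this method, even though the
// method in Base is final.
public class Derived : Base, IShape
{
    string IShape.Name() => "Derived";
}

public static class FinalMethodDemo
{
    // The static type of 'b' is Base, whose IShape.Name is final, but Base
    // itself is not sealed, so the target depends on the runtime type.
    public static string Describe(Base b) => ((IShape)b).Name();

    public static void Main()
    {
        Console.WriteLine(Describe(new Base()));    // prints "Base"
        Console.WriteLine(Describe(new Derived())); // prints "Derived"
    }
}
```

If `Base` were sealed, or the object were known to be exactly a `Base`, the interface call could be bound directly to the method in `Base`.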

More detailed breakdown, showing reasons and operations (reasons in lower case, operators in upper case; each set partitions the total above it).

| CallKind | OPCODE / reason | Success | Fail | Total | Succ % |
| :--- | ---: | ---: | ---: | ---: | ---: |
| Virtual | | 749 | 7206 | 7955 | 9.42 % |
| | RET_EXPR | 58 | 619 | 677 | 8.57 % |
| | CALL | 9 | 906 | 915 | 0.98 % |
| | IND | 0 | 1216 | 1216 | 0.00 % |
| | LCL_VAR | 569 | 3356 | 3925 | 14.50 % |
| | INTRINSIC | 0 | 225 | 225 | 0.00 % |
| | FIELD | 111 | 715 | 826 | 13.44 % |
| | BOX | 0 | 45 | 45 | 0.00 % |
| | CNS_STR | 2 | 0 | 2 | 100.00 % |
| | INDEX | 0 | 124 | 124 | 0.00 % |
| | not-final-class-or-method-or-exact | 0 | 4878 | 4878 | 0.00 % |
| | no-type | 0 | 2179 | 2179 | 0.00 % |
| | final-class | 705 | 0 | 705 | 100.00 % |
| | obj-interface-type | 0 | 120 | 120 | 0.00 % |
| | no-derived-method | 0 | 29 | 29 | 0.00 % |
| | exact | 22 | 0 | 22 | 100.00 % |
| | final-method | 22 | 0 | 22 | 100.00 % |
| Interface | | 96 | 4213 | 4309 | 2.23 % |
| | RET_EXPR | 0 | 29 | 29 | 0.00 % |
| | CALL | 0 | 109 | 109 | 0.00 % |
| | IND | 0 | 32 | 32 | 0.00 % |
| | LCL_VAR | 81 | 3012 | 3093 | 2.62 % |
| | FIELD | 15 | 997 | 1012 | 1.48 % |
| | BOX | 0 | 34 | 34 | 0.00 % |
| | no-type | 0 | 391 | 391 | 0.00 % |
| | final-class | 93 | 0 | 93 | 100.00 % |
| | obj-interface-type | 0 | 3682 | 3682 | 0.00 % |
| | no-derived-method | 0 | 12 | 12 | 0.00 % |
| | not-final-class-or-exact | 0 | 128 | 128 | 0.00 % |
| | exact | 3 | 0 | 3 | 100.00 % |
| Total | | 845 | 11419 | 12264 | 6.89 % |

A blend of Devirtualize interface calls/Devirtualize calls on struct types.

Currently for a generic you can do:

T value

((int)(object)value) == ((int)(object)value)

And it will undo the object box for structs; however that requires a specific concrete type

Would an aim be to also work on a generic interface/constraint, so if T was an int this could also work and undo the interface box?

T value

((IEquatable<T>)(object)value).Equals(value)

Yes. We have some of the parts needed for this, but not all. Today the jit produces

lvaGrabTemp returning 2 (V02 tmp0) called for Single-def Box Helper.

lvaSetClass: setting class for V02 to System.Int32 [exact]

impDevirtualizeCall: no type available (op=CALL)

--* CALLV stub int System.IEquatable`1[Int32][System.Int32].Equals

+--* CALL help ref HELPER.CORINFO_HELP_CHKCASTINTERFACE

| +--* CNS_INT(h) long 0x7fff9d4433a0 class

| \--* BOX ref

| \--* LCL_VAR ref V02 tmp0

\--* LCL_VAR int V01 arg1

and devirt is blocked because the jit does not yet understand what type the interface cast returns.

So the first bit is to teach the jit what this helper does -- I've been holding off because generally figuring out when a cast will always succeed or will always fail is not that easy and there are lots of these cast and isinst helpers. But maybe I should push ahead and get the easier cases out of the way. This will require new jit -> vm queries for casting which will return yes/no/maybe answers; initially there will be a lot of maybes but we can improve over time.

If we do that and get back a yes then the cast is a no-op and we know the type going into the call. So we can devirtualize the call and remove the cast. However, we will still be boxing and calling the boxed entry point for the devirtualized Equals.

We should be able to inline, and one might hope that at this point if we do inline, we could propagate the value being boxed through the box to the ultimate use, then realize the box is dead, and remove it. Some of this might actually happen, at least for primitive types in simple cases. I'd have to try and see.

We should also be able to see that the boxing done here is "harmless" because Equals won't modify its this argument (we know this is true for inherited value class methods), and ask the VM to give us the unboxed entry point. Then we can call this unboxed entry point directly, passing the address of the original value and undo the boxing. This would get rid of the box more directly, even if we didn't or couldn't inline.

I have work in progress branches for some of the missing parts, so am slowly chipping away at this.

Hmm, we don't undo the box here after inlining, but we're not that far off from being able to:

```C#
using System;

class D
{
    static bool TestMe(object o)
    {
        return ((int) o) == 33;
    }

    public static int Main()
    {
        return TestMe(33) ? 100 : 0;
    }
}
```

Need importation of Unbox.Any to try and optimize away its type test.

Even if the cast will "maybe" succeed, the return type is still known if the cast throws (e.g., CORINFO_HELP_CHKCASTINTERFACE). This might not help with boxing/unboxing, but could help devirtualize if the resulting type is set as the intersection of the cast type and the type going into the cast.

In cases where it's infeasible to prove that the unboxed value isn't modified, a value copy would work and still avoid boxing. If the cast result is always used as an unboxed value type, then even the helper call could work on a fake box (on stack).

We usually don't learn much about an object's type from a successful interface cast other than the fact that the type indeed implements the interface.

And yeah, making a "fake" box would be a reasonable way to handle possible side effecting calls.

> We usually don't learn much about an object's type from a successful interface cast other than the fact that the type indeed implements the interface.

In a generic though, you also know what the actual type is? (if struct)

Yes, but (if I'm following you correctly) we knew that before the cast -- the question is whether the cast tells us anything new if it succeeds.

A successful "maybe" cast to a non-interface type tells us something new, and if we're lucky, might allow us to devirtualize interface calls on code guarded by the cast.

A successful "maybe" cast to an interface type doesn't.
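A small sketch of that difference, using hypothetical types (`IAnimal`, `IPet`, `Dog`, `CastDemo`) that are not from the runtime sources. A successful type test against a sealed class pins down the exact type in the guarded code, while a successful test against an interface leaves the concrete class unknown. Whether the jit actually devirtualizes the first case depends on how well it gathers types from type tests, which the list at the top still has as open work.

```csharp
using System;

public interface IAnimal { string Speak(); }
public interface IPet : IAnimal { }

public sealed class Dog : IPet
{
    public string Speak() => "Woof";
}

public static class CastDemo
{
    public static string ViaClassTest(IAnimal a)
    {
        // If the test succeeds, 'd' is exactly a Dog (Dog is sealed), so
        // Speak has only one possible target inside this branch.
        if (a is Dog d)
        {
            return d.Speak();
        }
        return a.Speak();
    }

    public static string ViaInterfaceTest(IAnimal a)
    {
        // If the test succeeds, we only learn that the object implements
        // IPet; its concrete class is still unknown, so Speak remains an
        // interface call.
        if (a is IPet p)
        {
            return p.Speak();
        }
        return a.Speak();
    }

    public static void Main()
    {
        Console.WriteLine(ViaClassTest(new Dog()));
        Console.WriteLine(ViaInterfaceTest(new Dog()));
    }
}
```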

> Yes, but (if I'm following you correctly) we knew that before the cast

Expressing it in il, I'm suggesting that

bool DoThing2<T>(T val0, T val1)

{

return ((IEquatable<T>)val0).Equals(val1);

}


IL_0000: ldarg.1

IL_0001: box !!T

IL_0006: castclass class [netstandard]System.IEquatable`1<!!T>

IL_000b: ldarg.2

IL_000c: callvirt instance bool class [netstandard]System.IEquatable`1<!!T>::Equals(!0)

IL_0011: ret

When `T` is known at runtime for a non-shared generic (a struct) and is known to implement the interface, it could be turned into the same output as

```csharp
bool DoThing1<T>(T val0, T val1) where T : IEquatable<T>
{
    return val0.Equals(val1);
}
```

e.g.

IL_0000: ldarga.s val0

IL_0002: ldarg.2

IL_0003: constrained. !!T

IL_0009: callvirt instance bool class [netstandard]System.IEquatable`1<!!T>::Equals(!0)

IL_000e: ret

by dropping

IL_0001: box !!T

IL_0006: castclass class [netstandard]System.IEquatable`1<!!T>

and inserting

IL_0003: constrained. !!T

before the call

I'm not suggesting it's that straightforward 😄 It's more to capture what I mean.

Though in actual use it would have to be guarded

bool DoThing2<T>(T val0, T val1)

{

if (typeof(IEquatable<T>).IsAssignableFrom(typeof(T)))

{

return ((IEquatable<T>)val0).Equals(val1);

}

il

IL_0000: ldtoken class [netstandard]System.IEquatable`1<!!T>

IL_0005: call class [netstandard]System.Type [netstandard]System.Type::GetTypeFromHandle(valuetype [netstandard]System.RuntimeTypeHandle)

IL_000a: ldtoken !!T

IL_000f: call class [netstandard]System.Type [netstandard]System.Type::GetTypeFromHandle(valuetype [netstandard]System.RuntimeTypeHandle)

IL_0014: callvirt instance bool [netstandard]System.Type::IsAssignableFrom(class [netstandard]System.Type)

IL_0019: brfalse.s IL_002d

IL_001b: ldarg.1

IL_001c: box !!T

IL_0021: castclass class [netstandard]System.IEquatable`1<!!T>

IL_0026: ldarg.2

IL_0027: callvirt instance bool class [netstandard]System.IEquatable`1<!!T>::Equals(!0)

IL_002c: ret

IL_002d: ; alternate path when not type

And changing all that, is probably a much bigger thing - so it might not be practically worth it :-/

The first part is more or less where things are headed. If we know for sure T implements the interface and T is a value class then we might be able to determine which method will be called. So at least in some cases we should be able to devirtualize and unbox and inline and all that.

Is it correct that generics don't currently devirtualize?

I can't seem to get ArrayPool.Shared to devirtualize; even if I pull the Byte and Char pool out to a non-generic class, and reference them directly

internal sealed class ArrayPools

{

internal static TlsOverPerCoreLockedStacksArrayPool<byte> BytePool { get; } = new TlsOverPerCoreLockedStacksArrayPool<byte>();

internal static TlsOverPerCoreLockedStacksArrayPool<char> CharPool { get; } = new TlsOverPerCoreLockedStacksArrayPool<char>();

}

ArrayPools.CharPool.Rent

I don't see

Devirtualized virtual call to TlsOverPerCoreLockedStacksArrayPool`1:Rent;

now direct call to TlsOverPerCoreLockedStacksArrayPool`1:Rent [exact]

Which I was expecting (and definitely can't get it to work through ArrayPool<T>.Shared property when it inlines even if I rearrange the code to make sure the type is maintained and exposed: https://github.com/dotnet/coreclr/commit/dea46f4c9fc549720f48ccefdaab5d2b645787ea)

Not a covered scenario yet? Or are there further (or less) changes I could make to the source to trigger it?

Looking at the crossgened (rather than jitted) output so that might not be helping

The explanation will be there in the jit dump. I recall seeing some array pool methods devirtualizing in the past.

I should probably surface the reasons why methods don't devirtualize... most don't so the level of chatter will go up quite a bit.

This definition:

```C#
public static ArrayPool<T> Shared { get; } =
    typeof(T) == typeof(byte) || typeof(T) == typeof(char) ? new TlsOverPerCoreLockedStacksArrayPool<T>() :
    Create();
```

creates a hidden backing static field initialized by the class constructor. So when `Shared` gets inlined the jit only knows that the value has type `ArrayPool<T>` and not a more specific type. So no devirtualization happens:

impDevirtualizeCall: Trying to devirtualize virtual call:

class for 'this' is System.Buffers.ArrayPool`1[Byte] (attrib 20000400)

base method is System.Buffers.ArrayPool`1[Byte]::Rent

devirt to System.Buffers.ArrayPool`1[Byte][System.Byte]::Rent -- speculative

[000005] --C-G------- * CALLV ind ref System.Buffers.ArrayPool`1[Byte][System.Byte].Rent

[000022] ----G------- | /--* FIELD ref

If you call `Create` directly then devirtualization can happen for `Rent` -- eg:

```C#
byte[] b = ArrayPool<byte>.Create().Rent(33);
```

;; Devirtualized virtual call to System.Buffers.ArrayPool`1[Byte]:Rent; now direct call to System.Buffers.ConfigurableArrayPool`1[Byte][System.Byte]:Rent [exact]

> So when `Shared` gets inlined the jit only knows that the value has type `ArrayPool<T>` and not a more specific type.

Which is why I tried to push it out to strongly typed fields in https://github.com/dotnet/coreclr/commit/dea46f4c9fc549720f48ccefdaab5d2b645787ea (the most extreme variant I was trying)

public static ArrayPool<T> Shared

{

[MethodImpl(MethodImplOptions.AggressiveInlining)]

get

{

if (typeof(T) == typeof(byte))

{

return (ArrayPool<T>)(object)ArrayPools.BytePool;

}

else if (typeof(T) == typeof(char))

{

return (ArrayPool<T>)(object)ArrayPools.CharPool;

}

else

{

return s_generalPool;

}

}

}

Also tried two fields, which avoided the cast through (object), but wasn't sure if it was too much generic causing the issue

public static ArrayPool<T> Shared

{

[MethodImpl(MethodImplOptions.AggressiveInlining)]

get

{

if (typeof(T) == typeof(byte) || typeof(T) == typeof(char))

{

return s_specificPool;

}

else

{

return s_generalPool;

}

}

}

Will explore with Jitdump; I think devirtualization will give much more benefit than for example using Lzcnt https://github.com/dotnet/coreclr/pull/15685

Looks like (with your dea46f4) we lose track of the type for the return value from get_Shared because we introduce a temp to hold the return value:

Invoking compiler for the inlinee method System.Buffers.ArrayPool`1[Byte][System.Byte]:get_Shared():ref :

...

lvaGrabTemp returning 1 (V01 tmp1) (a long lifetime temp) called for Inline return value spill temp.

Later when we've inlined get_Shared we try to devirtualize the call that it feeds but don't see any type information and bail:

**** Late devirt opportunity

[000005] --C-G------- * CALLV ind ref System.Buffers.ArrayPool`1[Byte][System.Byte].Rent

[000040] ------------ this in rcx +--* LCL_VAR ref V01 tmp1

[000004] ------------ arg1 \--* CNS_INT int 33

impDevirtualizeCall: no type available (op=LCL_VAR)

Likely we need to update the code in fgFindBasicBlocks to provide appropriate hinting about the return value. Even that may not be sufficient as we may also need to update the type post-inline since it gets "sharpened" by inlining revealing the actual field access.

Made PR to reduce my noise in this issue https://github.com/dotnet/coreclr/pull/15743

Here is some up-to-date status on devirtualization failures and successes, via PMI over the framework assembly set:

The colored rows show the summaries for interface calls and virtual calls. The rows below break down the failures/successes by the opcode of the instruction that provided the object to the call. So for instance, BOX opcodes fed interface calls at 51 call sites, and 47 of those were devirtualized.

The failure reasons are:

- no-obj-class: jit has no information at all about the class of the object

- obj-interface: jit's best estimate for the type of the object is an interface class

- no-resolve: VM failed to resolve the call to a specific method

- inexact: VM resolved the method based on the jit provided type, but the type was not final or known exactly

Numbers look a bit better than I remember and are better than the original measurements above -- 18% of virtual sites and 5% of interface sites are devirtualized.

Main cause for interface calls not devirtualizing -- around 86% of the time -- is that the best type the jit has for the object being dispatched is an interface type.

Main cause for virtual calls not devirtualizing -- around 60% of the time -- is that the jit doesn't know the exact class (and/or the class or method is not final). We can't easily tell from this data whether inexactness is a consequence of poor type propagation / refinement in the jit, or if the exact type cannot be statically determined.

The jit has some kind of type information at most virtual sites; only 3% lack types. It's still probably worth shoring up cases where types are missing, even though they're not that prevalent. Suspect the IND failures here are largely class statics that in principle should be easy to track.

A couple of other bits of data: devirtualization works much better for call sites in inlinees than in the root method. Not too surprising.

Note devirtualization and inlining are interleaved...so a devirtualization often leads to an inline where we know the exact type of this or types of arguments, which leads to further devirtualization, which leads to further inlines... For root methods we're never going to be able (absent some magical interprocedural analysis) to know the actual types of parameters.
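A sketch of how an inline can expose an exact type, with hypothetical names (`Shape`, `Square`, `InlineDemo`) that are not from the framework. Compiled as a root method, `Measure` only knows that `s` is some `Shape`. If `Measure` is inlined into `MeasureSquare`, the argument is a freshly allocated `Square`, so the type is known exactly and the `Area` call becomes a candidate for devirtualization and possibly a further inline. Whether the jit actually inlines here is up to its heuristics.

```csharp
using System;

public abstract class Shape
{
    public abstract double Area();
}

// Deliberately not sealed: the exactness comes from the allocation site,
// not from the class being final.
public class Square : Shape
{
    private readonly double _side;

    public Square(double side) { _side = side; }

    public override double Area() => _side * _side;
}

public static class InlineDemo
{
    // As a root method the type of 's' is only known to be Shape, so the
    // call to Area must stay virtual.
    public static double Measure(Shape s) => s.Area();

    // If Measure is inlined here, the receiver is the result of 'new Square',
    // so its type is exact at the call to Area.
    public static double MeasureSquare(double side) => Measure(new Square(side));

    public static void Main()
    {
        Console.WriteLine(MeasureSquare(3.0));
    }
}
```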

Also late devirtualization doesn't happen very often, and isn't very successful. Probably worth a deeper look.

Recall late devirtualization is done after an inline, where the jit propagates (better) information about the callee return type into the calling method. The low success rate here could have multiple causes -- for instance the jit doesn't do a thorough job of converting all shared types to unshared types, so if we inline a shared generic that returns T in a context where T is known exactly, we may fail to capitalize on this...

@dotnet/jit-contrib

@AndyAyersMS are there any ETW events for devirtualization similar to the ones we have for inlining?

I am asking because I recently wrote an ETWProfiler for BenchmarkDotNet which allows to profile the benchmarks and calculate some metrics based on the captured ETW event. If there is any interesting info in the trace file related to devirtualization I could calculate new metrics and include them in BenchView for our benchmarks (/cc @jorive)

No, there aren't any ETW events.

I probably should add something to the inline tree but haven't done that yet either.

PR to add some info to the inline tree: dotnet/coreclr#20395

Now merged, so the jit is tracking this information internally at least:

Inlines into 06000004 Y:Main():int

[1 IL=0005 TR=000008 06000002] [below ALWAYS_INLINE size] X`1[__Canon][System.__Canon]:.ctor(ref):this

[2 IL=0015 TR=000027 06000003] [profitable inline devirt unboxed] X`1[__Canon][System.__Canon]:Print():this

[0 IL=0011 TR=000049 06000080] [FAILED: noinline per IL/cached result] System.Console:WriteLine(ref)

Some further improvements over in dotnet/coreclr#20447.

First steps towards guarded devirtualization: dotnet/coreclr#21270

@AndyAyersMS

Hey I just wanted to stop by to say thank you!

I'm writing a library for .net standard that really profits from all those optimizations ( https://github.com/rikimaru0345/Ceras ).

I really appreciate the hard work you're doing man! (you and everyone here actually)

Thanks! :smile: :heart:

@rikimaru0345 thanks for the kind words -- good to hear things are working well for you.

How does the CLR treat Default Interface Members when you use a reference to such interface AND the interface was implemented through a ValueType? Will it be devirtualized too?

interface I

{

public int X() { return 0; }

}

struct V : I { }

...

I iface = new V(); // Note: it is a **ValueType**

return iface.X(); // Devirtualization here? If so, how?

Would that run the same (or nearly) as a struct-defined method?

I'm just curious about these _ValueType<->DefaultInterfaceMembers_ interactions here.

Please, get technical.

Anyway, thanks for your hard work on this!

I'm eager to see what .NET 5 will bring next year. 😄
