Roslyn: 'workspace/symbol' is slow to respond to cancellation

Version Used:

VS 2019 int.master 16.6

Steps to Reproduce:

- Open a large solution with lots of projects (in this scenario, I used internal Editor.sln).

- Search for a symbol.

Expected Behavior:

Any searches queued should be canceled by subsequent TYPECHARs.

Actual Behavior:

I typed 'CreateAsync' and Ctrl+Q queues one search for 'C'.. and then attempts to cancel it on the next TYPECHAR but Roslyn 'workspace/symbol' didn't yield/respond to cancellation for ~6 seconds.

It's not entirely clear to me what's happening, I debugged through the search task scheduler, LSP language client extension, and Roslyn and found:

- Cancellation token is canceled immediately on subsequent TYPECHAR.

- Cancellation message makes it to

WorkspaceSymbolAsync's cancellationToken immediately on the server side. - There's a six second delay...

- Then 'workspace/symbol' seems to return at that point with a canceled token.

Sequence of events:

** User types "C" {3/19/2020 2:31:44 PM}

** Start search for "C" {3/19/2020 2:31:44 PM}

** User types "reateAsync" {3/19/2020 2:31:46 PM}

** Client cancel {3/19/2020 2:31:45 PM}

*** SERVER recv. cancel message {3/19/2020 2:31:45 PM}

[6 second delay]

** SERVER WorkspaceSymbolAsync Returns {3/19/2020 2:31:51 PM}

** Client returns {3/19/2020 2:31:52 PM}

** Start search for "CreateAsync" {3/19/2020 2:31:52PM}

All 32 comments

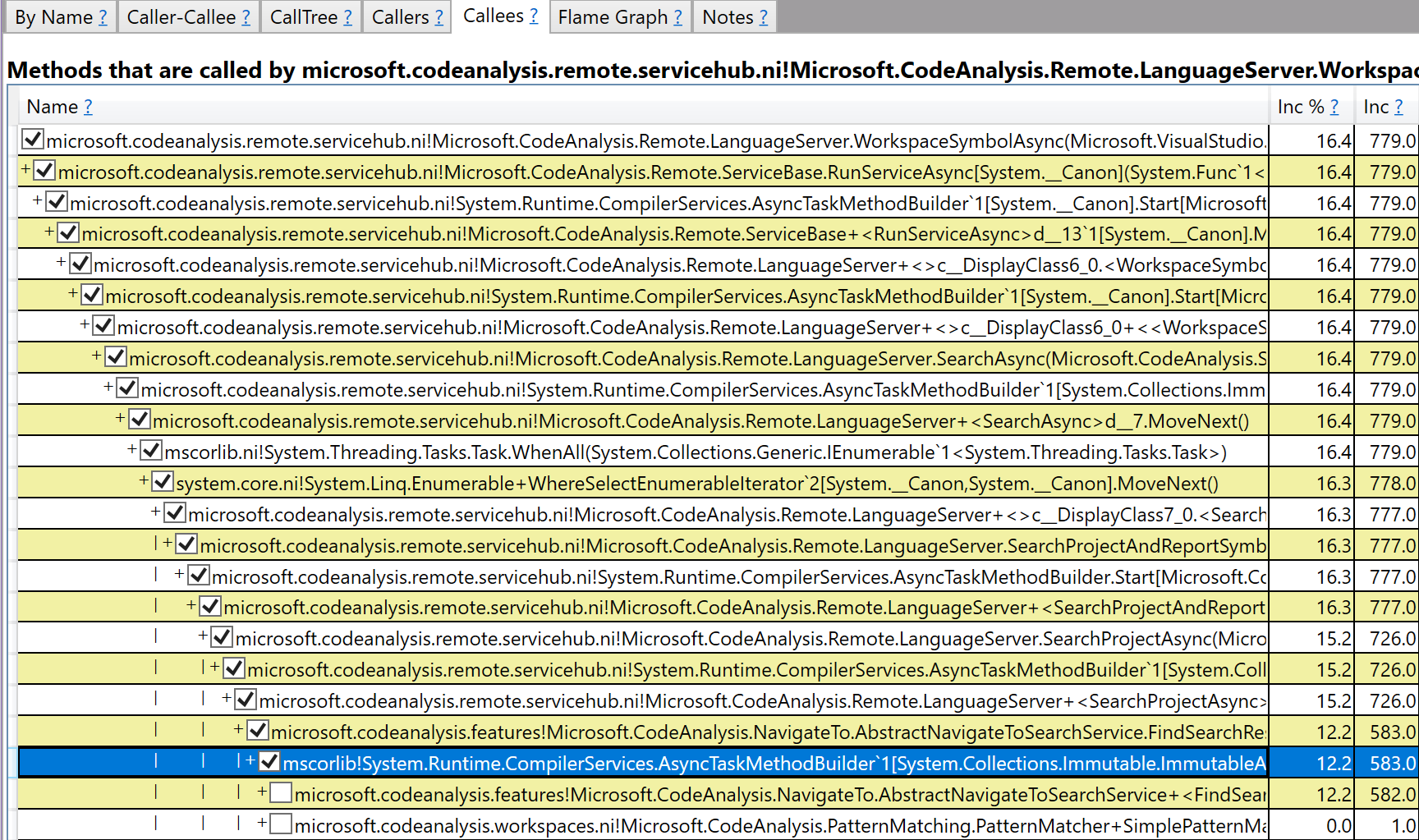

One reason why this might be taking a while is because it's creating >1 task per project and Editor has ~150 projects.

This creates a tree of 150 tasks..

When we cancel the token, every single one of the currently active tasks has to hit a cancellation exception, die, and get rethrown by the calling task, potentially up several levels, before the Task.WhenAll() will yield. This means we'd churn the CPU for several seconds handling CPU..I think.

I wonder if it'd make sense to instead partition the projects into n sets, where n is the number of CPUs so we're only canceling ~8 tasks, and hitting ~8 exceptions.

That stack above seems to be entirely outside of Roslyn. During that X second pause, what roslyn callstacks are active?

This creates a tree of 150 tasks..

This should be trivial to cancel out of. i.e. i've tested millions of tasks with TPL, and cancellatoin and whatnot is very fast (most of those tasks won't even start). It's likely that this is a case where there's a single bad egg not respecting cancellation, and those are causing the full wait.

That stack above seems to be entirely outside of Roslyn.

This is what's happening from the client's perspective. Effectively, we've sent the 'workspace/symbol' request and a subsequent cancellation and are waiting for a response.

The _Sequence of events_ section has outputs from tracepoints in the client and server. Effectively I put tracepoints at the beginning of 'WorkspaceSymbolAsync', inside a catch block for OperationCanceledException surrounding the body of the method, at the end, and in a _cancellationToken.Register()_ handler body.

I found that the cancellation handler is triggered instantly but the OperationCanceledException takes ~6 seconds before it's raised. In that time, we're awaiting the various tasks via Task.WhenAll().

That stack above seems to be entirely outside of Roslyn. During that X second pause, what roslyn callstacks are active?

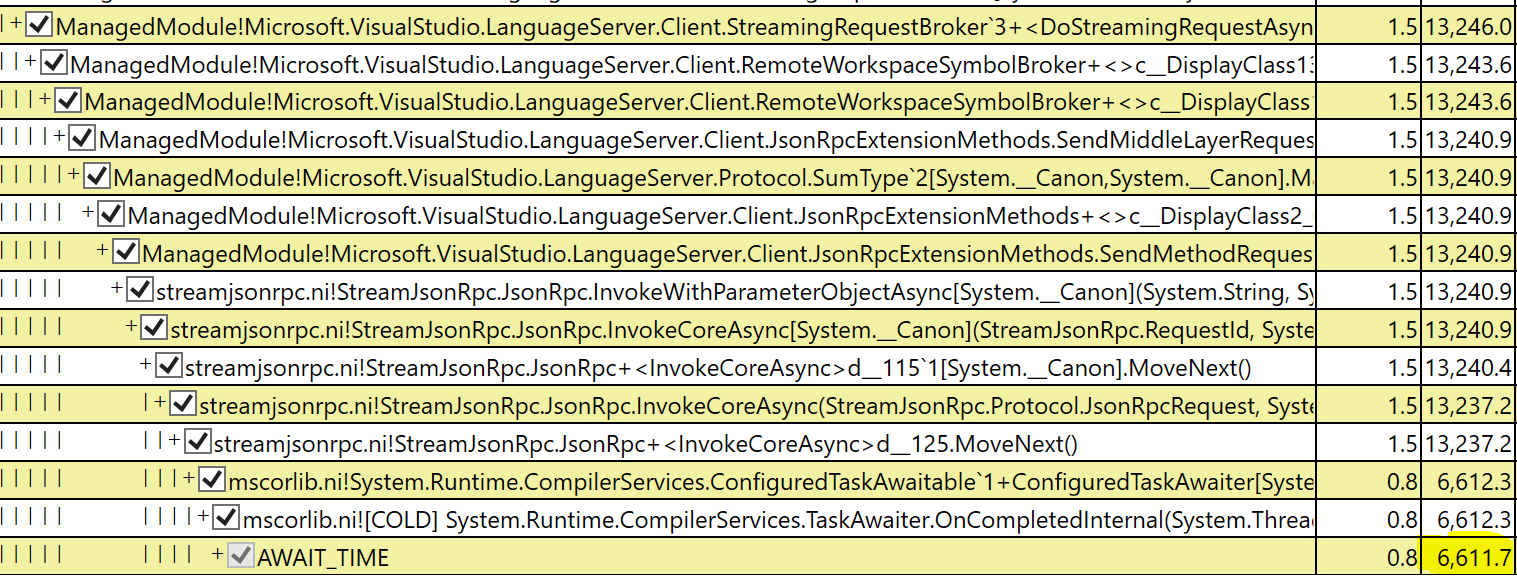

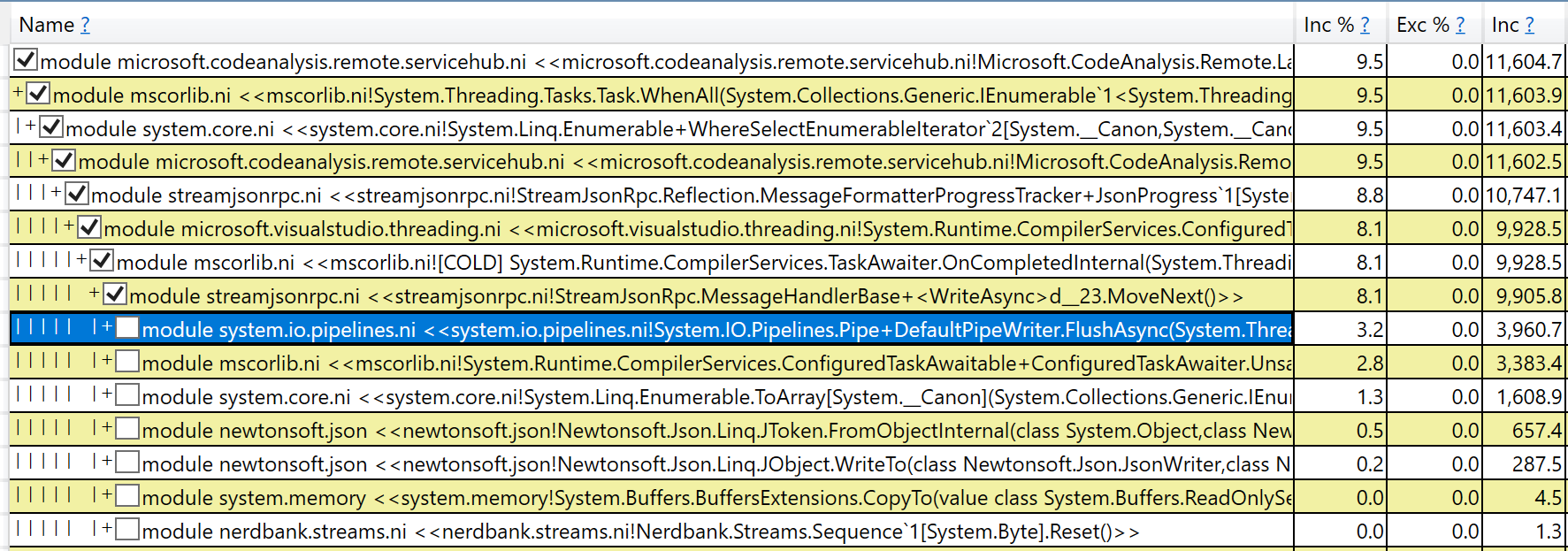

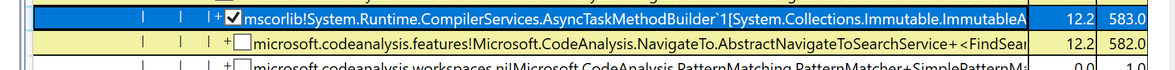

I'm not very familiar with Roslyn code, but the Tasks activity view shows time spent in Task.WhenAll() waiting for the various per project tasks to complete.

I see StreamJsonRPC in the stacks, presumably during the progress reporting stage, but that might just be time spent prior to the cancellation message being received.

Here's the CPU stacks as well.

Can you expand that? that's the relevant bits i need to know about. Thanks! :)

Knowing the names, and their children is what's important here. Thanks!

Tagging @tmat @dibarbet . This appears to be a problem both with the workspace/publishSymbol request and the underlying JsonRpc subsystem.

The problem here is that we figure out all result values and then send it in one large message back to VS. This messge must convert the values and then stream them over the wire back to VS.

There are two major problems here:

- we collect and report all results in one huge batch. I'm not sure if htat's a requirement of that api, or if we can stream things in smaller chunks. If we can stream things, we should at least do it at hte document level, cancelling in between. @dibarbet for views here since this is your area.

- When we do make an rpc call, we need it to respect cancellation more quickly, even as it's converting data to json. We likely have to communicate this with underlying platform (maybe @AArnott ).

This appears to be a problem both with the workspace/publishSymbol request and the underlying JsonRpc subsystem.

That's curious, the official streaming support for workspace/symbol should be using the built in IProgress<WorkspaceSymbol[]> support in StreamJsonRPC. IIRC, it sends a $/progress message.

workspace/publishSymbol was a temporary private protocol for VS that was introduced just for 16.3-16.4 as a temporary measure until LSP protocol was updated with a standardized pattern for streaming.

FWIW, this bug was filed in master-vs-deps as opposed to master, so there may be some code differences.

FWIW, this bug was filed in

master-vs-depsas opposed tomaster, so there may be some code differences.

I can look at changing to document as it seems more appropriate streaming level, but I'm not sure it will help with this specific delay.

StreamJsonRpc relies on Newtonsoft.Json to serialize JSON, or the MessagePack library for msgpack.

Newtonsoft.Json does not take a CancellationToken in its (de)serialization APIs, so if you are serializing a very large object graph, we can't cancel till it's done.

MessagePack does take a CancellationToken (I added it in 2.x), but that won't help you since you're not using messagepack here.

So if you're returning a very large result from your method, there's nothing I can see that streamjsonrpc can do for you. But as @gundermanc said, IProgress<T> allows you to break up the result into many distinct messages. Another (likely better) approach is relatively new and available too: IAsyncEnumerable<T> support.

But as @gundermanc said, IProgress

allows you to break up the result into many distinct messages. Another (likely better) approach is relatively new and available too: IAsyncEnumerable support.

Interesting. I'm starting to use that elsewhere. we can try to use that in our rpc system. Does it 'just work' if we make that the return type of the rpc entrypoint?

but I'm not sure it will help with this specific delay.

It definitely should. The delay is because we're serializing out a huge project graph and sending that over. so we only finish once the full graph is serialized out. Having this be doc-level instead gives us much finer grained cancellation.

Newtonsoft.Json does not take a CancellationToken in its (de)serialization APIs, so if you are serializing a very large object graph, we can't cancel till it's done.

I'm surprised we'd see it take seconds to serialize a single batch. My understanding was that we were already sending progress notifications per-project (at least before the switch to IProgress

My assumption would be that canceling between batches should be adequate to solve the issue, unless there's a huge number of results per projects... on that note: Editor's VSEditor project is 'all encompassing' and combines the entirety of the other ~50-60 impl projects into one assembly, so, perhaps batching per project results in a very large batch.

@gundermanc Even if IProgress<T> is already used today, if generation of the values is so fast on the server side that it has created a large backlog of progress messages to be transmitted, then cancelling won't have any effect on them and they'll still plug up the channel such that cancelling the overall server method won't get an ack back from the server till all the progress messages already in the pipe get through. There's no CancellationToken taken by IProgress<T>.Report.

@CyrusNajmabadi Yes, it Just Works. :) But be sure to read the doc I linked to. There's lots of considerations to be made.

IAsyncEnumerable

@gundermanc Even if IProgress

is already used today, if generation of the values is so fast on the server side that it has created a large backlog of progress messages to be transmitted, then cancelling won't have any effect on them and they'll still plug up the channel such that cancelling the overall server method won't get an ack back from the server till all the progress messages already in the pipe get through.

Gotcha! I'd imagine in this case though smaller batch size may be sufficient, as C++ doesn't have this problem, and seems to limit its batches to 10.

StreamJsonRpc relies on Newtonsoft.Json to serialize JSON, or the MessagePack library for msgpack.

Oh god...

Newtonsoft.Json does not take a CancellationToken in its (de)serialization APIs, so if you are serializing a very large object graph, we can't cancel till it's done.

Ugh... that's terrible :(

MessagePack does take a CancellationToken (I added it in 2.x), but that won't help you since you're not using messagepack here.

How do we switch to message pack? We shouldn't be using json period here. That's just extra cost (cpu+memory) for zero benefit. Is this an easy thing for us to just switch over to?

Thanks! :)

How do we switch to message pack?

AFAIK it's not supported by the LSP protocol, which assumes JSON, but I may be wrong.

Yes, switching from JSON to MessagePack is very easy -- at the streamjsonrpc level. But two gotchas that may getcha:

- The types you're passing back and forth that were serializable via Newtonsoft.Json attributes and patterns must now conform to the rules of MessagePack-CSharp instead.

- LSP is fundamentally a JSON protocol. The MessagePack encoding is conveniently _very_ compatible with JSON (which is why our JSON-RPC library allows MessagePack as an alternate encoding), but you can't expect to interop with any other LSP party if you use messagepack since they'll all be encoding with JSON.

Solution: make your types serializable both by newtonsoft.json and messagepack. Then initialize your RPC stream with StreamJsonRpc passing in the switch for either messagepack or JSON based on whether you know the remote party can speak messagepack. So you'll be fast when you can, but still interop with others when necessary.

That seems awful. Why would LSP mandate a format (especially one as inefficient as json). It just seems like overhead for no benefit. Wouldn't a pluggable system (with json maybe being lowest common denominator) be better? That way we could avoid the overhead for normal cases, and only have it when dealing with very low-capability systems?

Tagging @tmat @dibarbet for moving to messagepack for our rpc system so we don't have all this awful overhead (and so we support cancellation).

Json should only be the worst case case. We certainly would never want this for nexus as it will just absolutely balloon messages over the wire for no benefit.

We'll probably need to discuss messagepack support in the VS LSP client, also tagging @tinaschrepfer

Oh and since liveshare is in the middle doing path conversion, if we change to messagepack it would also probably affect them cc @daytonellwanger

FYI: Our MessagePackFormatter does not currently handle cancellation either, so while the capability to support canceling serialization is there it would require some work to do it (https://github.com/microsoft/vs-streamjsonrpc/issues/434).

FWIW though, MessagePack is 10x faster than Newtonsoft.Json for large data payloads in tests we've run. So even without cancellation it's very likely to mitigate your perf problems including the happier path where the user _didn't_ cancel the request.

FWIW though, MessagePack is 10x faster than Newtonsoft.Json for large data payloads in tests we've run

yeah, it seems unfathomable to me that anyone would build a latency sensitive data-interchange system on top of Json. It's probably the worst of all worlds for this sort of use case that i can think of. Doubly bad that it's on newtonsoft, which is completely non-compliant when it comes to json. @tinaschrepfer for visibility here.

Doubly bad that it's on newtonsoft, which is completely non-compliant when it comes to json.

It doesn't have to be newtonsoft. StreamJsonRpc is pluggable so you can stick whatever serializer in there that you like. But the LSP types in .NET are written for newtonsoft serialization so they may need adjustments too.

But as for compliance, that hasn't been a problem for us. We interop with LSP just fine using newtonsoft.

@CyrusNajmabadi Newtonsoft.Json is generally strict during serialization and relaxed during deserialization. This makes it rather easy to interoperate with other implementations.

@CyrusNajmabadi Newtonsoft.Json is generally strict during serialization

That may generally be true (not actually sure if that's the case), but is def not always true. I've been on the receiving side of json serialized from newtonsoft that had things like non-quoted property names as well as comments. It's been frustrating because pushing back on teams/companies producing this has been met with "that's what newtonsoft produced, you should accept it", even if we're not even written in .Net on the other side :-/ This has required an enormous amount of pain and hackery.

My preference would have strongly been that newtonsoft take the policy that it be strictly impossible to generate invalid json. i.e. any time that wasn't the case was considered a bug that must be fixed (regardless of whether that impacted a current producer). This is a policy seen in other json production systems. Importantly, it's something all the major browser vendors have abided by.

Any update on this? Perhaps it's worth imposing a maximum batch size so we can't have runaway serialization for large projects like Microsoft.VisualStudio.Platform.VSEditor.dll in the repro.

Any update on this? Perhaps it's worth imposing a maximum batch size so we can't have runaway serialization for large projects like Microsoft.VisualStudio.Platform.VSEditor.dll in the repro.

I have a change to batch per document rather than per project. Testing out to see if it seems any better right now.

https://github.com/dotnet/roslyn/pull/42649

Thanks for the update!

closing this - the cancellation issue is better with smaller batch sizes. However the search is not as smooth as it was in 16.4/16.5. Will look into that separately

closing this - the cancellation issue is better with smaller batch sizes. However the search is not as smooth as it was in 16.4/16.5. Will look into that separately

@dibarbet FWIW, can you see if this change makes a positive impact:

https://devdiv.visualstudio.com/DevDiv/_git/VS/pullrequest/239467