Rocket.chat: Users are never shown offline

Description:

Since our update from 2.1.2 to 2.4.5, users are shown as online even though they are not connected. As a result, no offline notification emails are sent, and users are confused.

Explicitly logging out from all clients (desktop, mobile) does not change the status either.

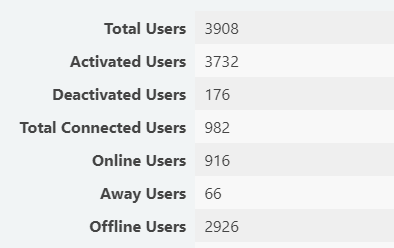

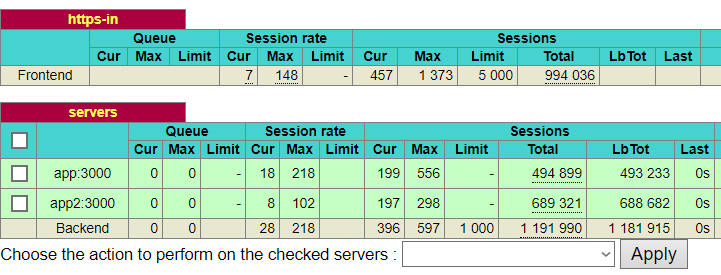

The numbers displayed on the admin panel cannot be correct either. It shows 982 connected clients, but the load balancer has only 457 sessions.

rocket chat admin panel

Load balancer

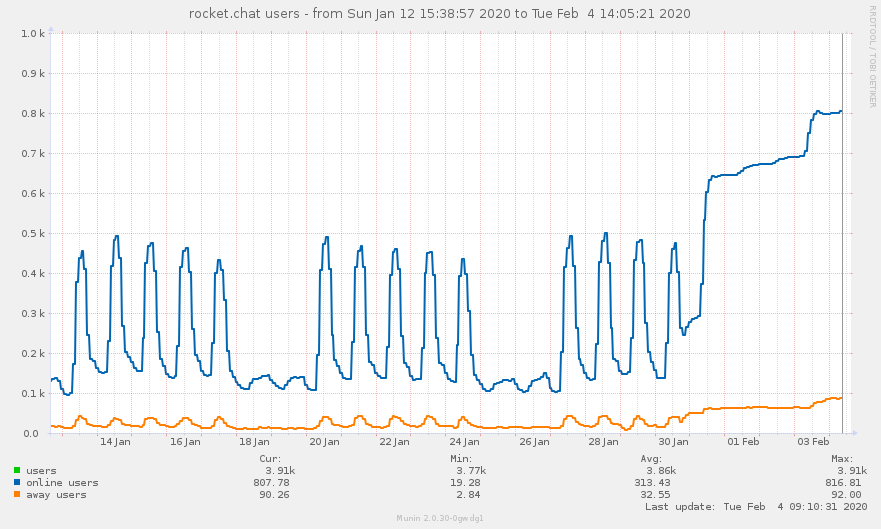

Online users after upgrade

Steps to reproduce:

Expected behavior:

Change user online status after logout/disconnect.

Actual behavior:

Users are always "online".
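
To confirm that the stale status comes from the server and not from a cached client view, the presence the server reports can be queried directly. The following is a minimal sketch, assuming the Rocket.Chat REST endpoint `users.getPresence` with its `username` query parameter and the usual `X-Auth-Token` / `X-User-Id` headers. The base URL, token, user id, and username are placeholders, and the helper names are hypothetical.

```python
import json
import urllib.parse
import urllib.request


def build_presence_request(base_url, auth_token, user_id, target_username):
    """Build (but do not send) a GET request for /api/v1/users.getPresence."""
    query = urllib.parse.urlencode({"username": target_username})
    url = f"{base_url.rstrip('/')}/api/v1/users.getPresence?{query}"
    return urllib.request.Request(
        url,
        headers={"X-Auth-Token": auth_token, "X-User-Id": user_id},
    )


def is_stuck_online(presence_payload, known_disconnected):
    """Return True if a user known to be disconnected is not reported as offline.

    Assumes the response JSON carries a "presence" field such as "online" or "offline".
    """
    return bool(known_disconnected) and presence_payload.get("presence") != "offline"


def fetch_presence(request):
    """Send the request and decode the JSON body (needs a reachable server)."""
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)


if __name__ == "__main__":
    # Placeholder values; replace with a real server and a personal access token.
    req = build_presence_request("https://chat.example.com", "TOKEN", "USER_ID", "some.user")
    print(req.full_url)
```

Calling `fetch_presence(req)` after the user has logged out of every client and passing the result to `is_stuck_online(payload, True)` would flag the behavior described here.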

Server Setup Information:

- Version of Rocket.Chat Server: 2.4.5

- Operating System: Ubuntu 16.04.6 LTS

- Deployment Method: docker

- Number of Running Instances: 2

- DB Replicaset Oplog: Enabled

- NodeJS Version:

- MongoDB Version:

Client Setup Information

- Desktop App or Browser Version: electron client 2.17.2

- Operating System: win 10

Additional context

Relevant logs:


Hi guys,

We suffer from the same bug. Do you need any more data from us (logs or sth)?

Hello,

We are facing the same issue on Rocket.Chat Server version 2.4.6.

Hi,

Same issue, please fix ASAP. The status indicator is very important in our work.

We have the same situation after an update to server version 2.4.6...

thanks for your reports.. can you guys please share more info about your deployment setup?

- what deployment method are you using?

- what reverse proxy/load balancer are you using?

- what version did you start noticing this?

- how many instances are running on your cluster?

one thing you can do to "reset" all user status is renew the instances.. if you remove all running instances and start new ones, the status will also be reset.

in the meantime I've been trying to reproduce this issue without success, so please provide as many details as you can so we can reproduce and fix this as soon as possible.

Thank you for working on this @sampaiodiego

- deployment method: docker

docker version

Client: Docker Engine - Community

Version: 19.03.5

API version: 1.40

Go version: go1.12.12

Git commit: 633a0ea838

Built: Wed Nov 13 07:50:12 2019

OS/Arch: linux/amd64

Experimental: false

Server: Docker Engine - Community

Engine:

Version: 19.03.5

API version: 1.40 (minimum version 1.12)

Go version: go1.12.12

Git commit: 633a0ea838

Built: Wed Nov 13 07:48:43 2019

OS/Arch: linux/amd64

Experimental: false

containerd:

Version: 1.2.6

GitCommit: 894b81a4b802e4eb2a91d1ce216b8817763c29fb

runc:

Version: 1.0.0-rc8

GitCommit: 425e105d5a03fabd737a126ad93d62a9eeede87f

docker-init:

Version: 0.18.0

GitCommit: fec3683

image in use:

REPOSITORY TAG IMAGE ID CREATED SIZE

rocketchat/rocket.chat 2.4.5 982bd958d3c9 6 days ago 1.63GB

- reverse proxy: HAProxy version 1.8.21, released 2019/08/16

- version noticing this: as I wrote above I skipped some versions and upgraded from 2.1.2 to 2.4.5

- how many instances: 2 instances running on the same host

Thank you for looking into the issue.

Deployment

We are using the stable/rocketchat (2.0.0) version on kubernetes. We are running 3 nodejs pods and 4 mongodb pods (primary + 3 secondary).

Versions

I think we first noticed it after upgrading from 2.4.3 to 2.4.5 (or from 2.3.1 to 2.4.3; we did both upgrades in the same week, last week). This is still an issue with 2.4.7.

Proxy

We are using nginx-ingress (stable/nginx 1.29.6) as reverse proxy.

Additional

Redeploying all pods indeed resets the count, but it will build up again.

In addition to the setup described above by @bbrauns, here is the HAProxy config we use:

haproxy.cfg

Hi, I talked to @bbrauns (I'm a user on his RC instance too) and I don't see that problem on our RC (2.4.5). The difference between his setup and mine is that we run a single Rocket.Chat instance (tar bundle, nginx as proxy). So it might be that only multi-instance/cluster setups are affected.

I am also seeing this in our setup:

- deployment method: docker

- reverse proxy: nginx/1.10.3

- version: 2.4.6 (image ID: b7b1842ac76b), after upgrading from (I think) 2.2.0

- number of instances: 1

My setup:

deployment method: manual installation

reverse proxy: nginx/1.16.1

version: 2.4.6, after upgrading from 2.3.0

number of instances: 1

OS: CentOS Linux release 7.7.1908

mongo: db version v3.6.17

hi everyone, we've just released version 2.4.8 with a fix for this.. can you please test and let me know if it has indeed fixed the issue? thx

Thank you, we've just updated. For now it seems better.

2.4.8 👍

LGTM too, many thanks!

Seems to be fixed here as well after updating to 2.4.8. Thank you!

looks good 👍

thank you guys for the feedback.. I'll close the issue as it looks fixed =)

damn github cache.. it was showing as opened to me 🙈 thanks @bbrauns 👍
