Rocket.chat: High CPU on Node app

Description:

Issue with members connecting to the server. Some members cannot connect (just reload) when login-in. Some members are disconnected even login for a long time. Checking by top command we see that node application hogs 100%+ CPU. Restarting the Rocket.Chat server seems to temporarily solve the problem. But after a day or 2 we notice a very same issue, which restarting temporarily fix the problem.

Steps to reproduce:

From our observation: doing search from private chats or group chats seems to peak the CPU.

Although it normalize for a few minutes. But this is not an issue from the previous version of Rocket.Chat via Snap Installation 72.3.

Actual behavior:

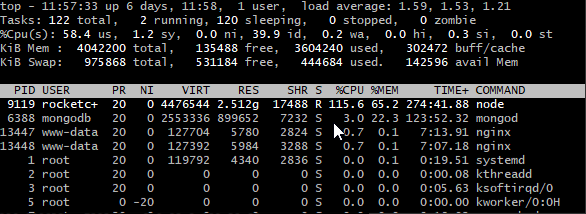

top command screenshot

node application is at 100%+ CPU

Server Setup Information:

- Version of Rocket.Chat Server: 0.74.2

- Operating System: Ubuntu 16.04.2 LTS

- Deployment Method: manual install

- Number of Running Instances: 1

- DB Replicaset Oplog: N/A

- NodeJS Version: v8.11.3

- MongoDB Version: db version v3.6.10

Additional context

Relevant logs:

All 23 comments

Please check your mongo logs and submit any observed anomalies during the hickup period. Redact sensitive information as necessary before posting.

this is the log from /var/log/mongodb/mongod.log from the time the CPU was high. changed some of info. hope this helps :)

From 11:25am today

2019-02-13T11:25:54.947+0800 I WRITE [conn12] update parties.rocketchat__trash command: { q: { _id: "https://xxxxxxxdomain.com/xxxxxfolder/lib/images/xxxx/entries/x/team/xxx_x005_x01352/x05.jpg" }, u: { data: { meta: {}, headers: { xAmzId2: "xxxxxxxxxxxxxxxxxxxxxxxxxxxxxx/xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx=", xAmzRequestId: "xxxxxxxxxxxxxxxx", date: "Sun, 13 Jan 2019 05:11:13 GMT", lastModified: "Mon, 15 Jan xxxxxxxxxxxx 05:35:55 GMT", etag: ""xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx"", xAmzMetaCbModifiedtime: "Mon, 15 Jan xxxxxxxxxxxx 05:25:39 GMT", acceptRanges: "bytes", contentType: "image/jpeg", contentLength: "108106", server: "AmazonS3", connection: "close" }, parsedUrl: { host: "xxxxxxxxxxxxxxxxxxxxxxxx.com", hash: null, pathname: "/xxxxxxxxx/lib/images/xxxxxxx/entries/xxxxxxxxxxxx/team/xxxxxxxxxxxx0115_xxxxxxx_301352/xxxxxxxx05.jpg", protocol: "https:", port: null, query: null, search: null, hostname: "xxxxxxxxxxxxxxxxxxxxxxxx.com" }, content: { headers: { x-amz-id-2: "xxxxxxxxxxxxxxxxxxxxxxxxxxxxxx/xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx=", x-amz-request-id: "xxxxxxxxxxxxxxxx", date: "Sun, 13 Jan 2019 05:11:13 GMT", last-modified: "Mon, 15 Jan xxxxxxxxxxxx 05:35:55 GMT", etag: ""xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx"", x-amz-meta-cb-modifiedtime: "Mon, 15 Jan xxxxxxxxxxxx 05:25:39 GMT", accept-ranges: "bytes", content-type: "image/jpeg", content-length: "108106", server: "AmazonS3", connection: "close" }, body: "����$ExifII����Duckyd��http://ns.adobe.com/xap/1.0/<?xpacket begin="" id="xxxxxxxxxxx...", parsedUrl: { host: "xxxxxxxxxxxxxxxxxxxxxxxx.com", hash: null, pathname: "/xxxxxxxxx/lib/images/xxxxxxx/entries/xxxxxxxxxxxx/team/xxxxxxxxxxxx0115_xxxxxxx_301352/xxxxxxxx05.jpg", protocol: "https:", port: null, query: null, search: null, hostname: "xxxxxxxxxxxxxxxxxxxxxxxx.com" }, statusCode: 200 } }, updatedAt: new Date(1547356272869), _updatedAt: new Date(1547356272869), _deletedAt: new Date(1550028354463), __collection__: "oembed_cache" }, multi: false, upsert: true } planSummary: IDHACK keysExamined:0 docsExamined:0 nMatched:0 nModified:0 upsert:1 keysInserted:3 numYields:0 locks:{ Global: { acquireCount: { r: 1, w: 1 } }, Database: { acquireCount: { w: 1 } }, Collection: { acquireCount: { w: 1 } } } 480ms

2019-02-13T11:25:54.951+0800 I COMMAND [conn12] command parties.$cmd command: update { update: "rocketchat__trash", ordered: true, lsid: { id: UUID("xxxx-xxxxx-xxx-xxxx-xxxxxxx") }, $db: "parties" } numYields:0 reslen:221 locks:{ Global: { acquireCount: { r: 1, w: 1 } }, Database: { acquireCount: { w: 1 } }, Collection: { acquireCount: { w: 1 } } } protocol:op_query 484ms

2019-02-13T11:25:55.223+0800 I WRITE [conn12] update parties.rocketchat__trash command: { q: { _id: "https://xxxxxxxxxxxxxxxxxxxxxxxx.com/xxxxxxxxx/lib/images/xxxxx/thum/xxxxxxxxxxxxplayers/xxxxx_17x57/xyaxxxoto_s.jpg" }, u: { data: { meta: {}, headers: { xAmzId2: "xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx=", xAmzRequestId: "xxxxxxxxxxxxxx", date: "Mon, 14 Jan 2019 01:22:09 GMT", lastModified: "Sat, 03 Feb xxxxxxxxxxxx 10:07:53 GMT", etag: ""44ed77e9089e88bbe5f2f6676682c2a3"", xAmzMetaCbModifiedtime: "Sat, 03 Feb xxxxxxxxxxxx 03:36:55 GMT", acceptRanges: "bytes", contentType: "image/jpeg", contentLength: "89417", server: "AmazonS3", connection: "close" }, parsedUrl: { host: "xxxxxxxxxxxxxxxxxxxxxxxx.com", hash: null, pathname: "/xxxxxxxxx/lib/images/xxxxx/thum/xxxxxxxxxxxxplayers/xxxxx_175567/yxxto_s.jpg", protocol: "https:", port: null, query: null, search: null, hostname: "xxxxxxxxxxxxxxxxxxxxxxxx.com" }, content: { headers: { x-amz-id-2: "xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx=", x-amz-request-id: "xxxxxxxxxxxxxx", date: "Mon, 14 Jan 2019 01:22:09 GMT", last-modified: "Sat, 03 Feb xxxxxxxxxxxx 10:07:53 GMT", etag: ""44ed77e9089e88bbe5f2f6676682c2a3"", x-amz-meta-cb-modifiedtime: "Sat, 03 Feb xxxxxxxxxxxx 03:36:55 GMT", accept-ranges: "bytes", content-type: "image/jpeg", content-length: "89417", server: "AmazonS3", connection: "close" }, body: "����ExifII��Duckyd���http://ns.adobe.com/xap/1.0/<?xpacket begin="" id="xxxxxxxxxxxreSzNTczkc9d"?...", parsedUrl: { host: "xxxxxxxxxxxxxxxxxxxxxxxx.com", hash: null, pathname: "/xxxxxxxxx/lib/images/xxxxx/thum/xxxxxxxxxxxxplayers/xxxxx_175567/xamxto_s.jpg", protocol: "https:", port: null, query: null, search: null, hostname: "xxxxxxxxxxxxxxxxxxxxxxxx.com" }, statusCode: 200 } }, updatedAt: new Date(1547428929273), _updatedAt: new Date(1547428929273), _deletedAt: new Date(1550028355037), __collection__: "oembed_cache" }, multi: false, upsert: true } planSummary: IDHACK keysExamined:0 docsExamined:0 nMatched:0 nModified:0 upsert:1 keysInserted:3 numYields:0 locks:{ Global: { acquireCount: { r: 1, w: 1 } }, Database: { acquireCount: { w: 1 } }, Collection: { acquireCount: { w: 1 } } } 182ms

2019-02-13T11:25:55.223+0800 I COMMAND [conn12] command parties.$cmd command: update { update: "rocketchat__trash", ordered: true, lsid: { id: UUID("xxxx-xxxxx-xxx-xxxx-xxxxxxx") }, $db: "parties" } numYields:0 reslen:220 locks:{ Global: { acquireCount: { r: 1, w: 1 } }, Database: { acquireCount: { w: 1 } }, Collection: { acquireCount: { w: 1 } } } protocol:op_query 182ms

2019-02-13T11:25:55.975+0800 I WRITE [conn15] update parties.rocketchat__trash command: { q: { _id: "https://www.google.com/search?q=deeco+cubao&rlz=1C1ASUM_enPH765PH765&oq=deeco&aqs=chrome.2.69i57j0l5.5003j0j7&sourceid=chrome&ie=UTF-8" }, u: { data: { meta: { pageTitle: "deeco cubao - Google Search", referrer: "origin" }, headers: { contentType: "text/html; charset=UTF-8", date: "Mon, 14 Jan 2019 02:03:46 GMT", expires: "-1", cacheControl: "private, max-age=0", strictTransportSecurity: "max-age=31536000", p3P: "CP="This is not a P3P policy! See g.co/p3phelp for more info."", contentEncoding: "gzip", server: "gws", xXssProtection: "1; mode=block", xFrameOptions: "SAMEORIGIN", setCookie: [ "1P_JAR=2019-01-14-02; expires=Wed, 13-Feb-2019 02:03:46 GMT; path=/; domain=.google.com", "CGIC=EhQxQzFBU1VNX2VuUEg3NjVQSDc2NQ; expires=Mon, 15-Jul-2019 16:58:22 GMT; path=/complete/search; domain=.google.com; HttpOnly", "CGIC=EhQxQzFBU1VNX2VuUEg3NjVQSDc2NQ; expires=Mon, 15-Jul-2019 16:58:22 GMT; path=/search; domain=.google.com; HttpOnly", "NID=154=IAgfnubG-pIBJxWWmzhmFfiGyPTPu-r2V3_AoMQmtG00iJUxtJJhZo3R1Q0_wwEaceOs4p1XUNTL-YlA5qX52rJbQH4kBpdQHVyx4MWoFDp_ijqGwFF3ntyxW1ra7wdaxirtexU2jNgPCE..." ], altSvc: "quic=":443"; ma=2592000; v="44,43,39,35"", connection: "close", transferEncoding: "chunked" }, parsedUrl: { host: "www.google.com", hash: null, pathname: "/search", protocol: "https:", port: null, query: "q=deeco+cubao&rlz=1C1ASUM_enPH765PH765&oq=deeco&aqs=chrome.2.69i57j0l5.5003j0j7&sourceid=chrome&ie=UTF-8", search: "?q=deeco+cubao&rlz=1C1ASUM_enPH765PH765&oq=deeco&aqs=chrome.2.69i57j0l5.5003j0j7&sourceid=chrome&ie=UTF-8", hostname: "www.google.com" }, content: { headers: { content-type: "text/html; charset=UTF-8", date: "Mon, 14 Jan 2019 02:03:46 GMT", expires: "-1", cache-control: "private, max-age=0", strict-transport-security: "max-age=31536000", p3p: "CP="This is not a P3P policy! See g.co/p3phelp for more info."", content-encoding: "gzip", server: "gws", x-xss-protection: "1; mode=block", x-frame-options: "SAMEORIGIN", set-cookie: [ "1P_JAR=2019-01-14-02; expires=Wed, 13-Feb-2019 02:03:46 GMT; path=/; domain=.google.com", "CGIC=EhQxQzFBU1VNX2VuUEg3NjVQSDc2NQ; expires=Mon, 15-Jul-2019 16:58:22 GMT; path=/complete/search; domain=.google.com; HttpOnly", "CGIC=EhQxQzFBU1VNX2VuUEg3NjVQSDc2NQ; expires=Mon, 15-Jul-2019 16:58:22 GMT; path=/search; domain=.google.com; HttpOnly", "NID=154=IAgfnubG-pIBJxWWmzhmFfiGyPTPu-r2V3_AoMQmtG00iJUxtJJhZo3R1Q0_wwEaceOs4p1XUNTL-YlA5qX52rJbQH4kBpdQHVyx4MWoFDp_ijqGwFF3ntyxW1ra7wdaxirtexU2jNgPCE..." ], alt-svc: "quic=":443"; ma=2592000; v="44,43,39,35"", connection: "close", transfer-encoding: "chunked" }, body: "<!doctype html><html itemscope="" itemtype="http://schema.org/SearchResultsPage" lang="en-SG"><head><meta content="/images/branding/googleg/1x/googleg...", parsedUrl: { host: "www.google.com", hash: null, pathname: "/search", protocol: "https:", port: null, query: "q=deeco+cubao&rlz=1C1ASUM_enPH765PH765&oq=deeco&aqs=chrome.2.69i57j0l5.5003j0j7&sourceid=chrome&ie=UTF-8", search: "?q=deeco+cubao&rlz=1C1ASUM_enPH765PH765&oq=deeco&aqs=chrome.2.69i57j0l5.5003j0j7&sourceid=chrome&ie=UTF-8", hostname: "www.google.com" }, statusCode: 200 } }, updatedAt: new Date(1547431428052), _updatedAt: new Date(1547431428052), _deletedAt: new Date(1550028355227), __collection__: "oembed_cache" }, multi: false, upsert: true } planSummary: IDHACK keysExamined:0 docsExamined:0 nMatched:0 nModified:0 upsert:1 keysInserted:3 numYields:0 locks:{ Global: { acquireCount: { r: 1, w: 1 } }, Database: { acquireCount: { w: 1 } }, Collection: { acquireCount: { w: 1 } } } 744ms

2019-02-13T11:25:55.975+0800 I COMMAND [conn15] command parties.$cmd command: update { update: "rocketchat__trash", ordered: true, lsid: { id: UUID("xxxx-xxxxx-xxx-xxxx-xxxxxxx") }, $db: "parties" } numYields:0 reslen:237 locks:{ Global: { acquireCount: { r: 1, w: 1 } }, Database: { acquireCount: { w: 1 } }, Collection: { acquireCount: { w: 1 } } } protocol:op_query 744ms

2019-02-13T11:30:56.230+0800 I COMMAND [conn13] command parties.rocketchat_message command: find { find: "rocketchat_message", filter: { _hidden: { $ne: true }, rid: "xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx" }, sort: { ts: -1 }, limit: 30, returnKey: false, showRecordId: false, lsid: { id: UUID("xxxxx-xxx-xxx-x-xxxxxxxx-xxxx") }, $db: "parties" } planSummary: IXSCAN { rid: 1, ts: 1 } keysExamined:30 docsExamined:30 cursorExhausted:1 numYields:1 nreturned:30 reslen:8728 locks:{ Global: { acquireCount: { r: 4 } }, Database: { acquireCount: { r: 2 } }, Collection: { acquireCount: { r: 2 } } } protocol:op_query 187ms

We have the same problem. We are on version 0.74.3

The scenario is very similiar as above. Manual install etc.

Well, this is not only a performance issue, because the service completely hangs after a while..

@RocketChat/core we're experiencing similar issues after migration from node 8.9.4 (0.71.1) to 8.11.4 (0.73.2): The system load climbs to 100%, messages cannot be delivered (remain grayed out), Messages of newly opened channels cannot be displayed. After some 10-30s we can continue working as usual.

This has not happened earlier, no periodic occurrence could be identified. Any guesses or ideas how to debug further?

The workaround I've been using is to schedule a reboot of the server daily at non-office hours. Pretty annoying, but it works..

@Sing-Li Any progress on this issue? We're expiring this issue on a daily base and need to restart Rocket.Chat at least once a day. Also, we're permanently suffering from delayed message deliveries (message remains grayed out for more than 30 sec). The users on our platform are already complaining and we don't know what we can do. Please, urgent attention is required on this issue.

Anyone noticing a high memory usage too?

We just updated from 0.66.x to 0.74.3 (and mongodb and are experiencing the same issue, but daily reboots are nowhere near enough to fix the problem. RC hangs with 100% CPU usage somewhere between every 1-12 hours. However, I am not sure if this is related to searches or not. I do see a message in the mongo log about a search about 7 minutes before our last hang, but it seems unlikely to be related given the time differential.

For reference:

Version of Rocket.Chat Server: 0.74.3

Operating System: CentOS 7.6.1810

Deployment Method: Rocket.Chat.Ansible

Number of Running Instances: 1

DB Replicaset Oplog: N/A

NodeJS Version: v8.9.4

MongoDB Version: db version v4.0.8

@sketch242 please try converting your MongoDB to a replica set. also update your node version to 8.11.4

We experience this issue as well. Every 5-8 days the CPU will max out to 100% and the server will be inaccessible and the box its on has to be forcibly restarted.

Any here having the issue please provide details of your setup. We need to determine if there is any sort of pattern so we can try to reproduce.

If mongo is on the same host also might be worth moving it to its own to see if that changes anything

I'm running an AWS t2.micro

Users: 4. About 20-100 messages day with about 5-10 file uploads.

Image Storage: S3

CPUs: 1

RAM: 1gb

Storage Size: 4gb used out of 8gb

OS: Ubuntu 18.04 amd64

MongoDB is on the same instance as Rocket

Mongo Version: 3.6.3

Rocket Version: 0.74.3 - Manual install. All admin/install settings left on default except files are saved to S3

Node Version: 8.11.4

It runs fast and without problems until the 5th to 8th days then the box becomes inaccessible until forcibly restarted.

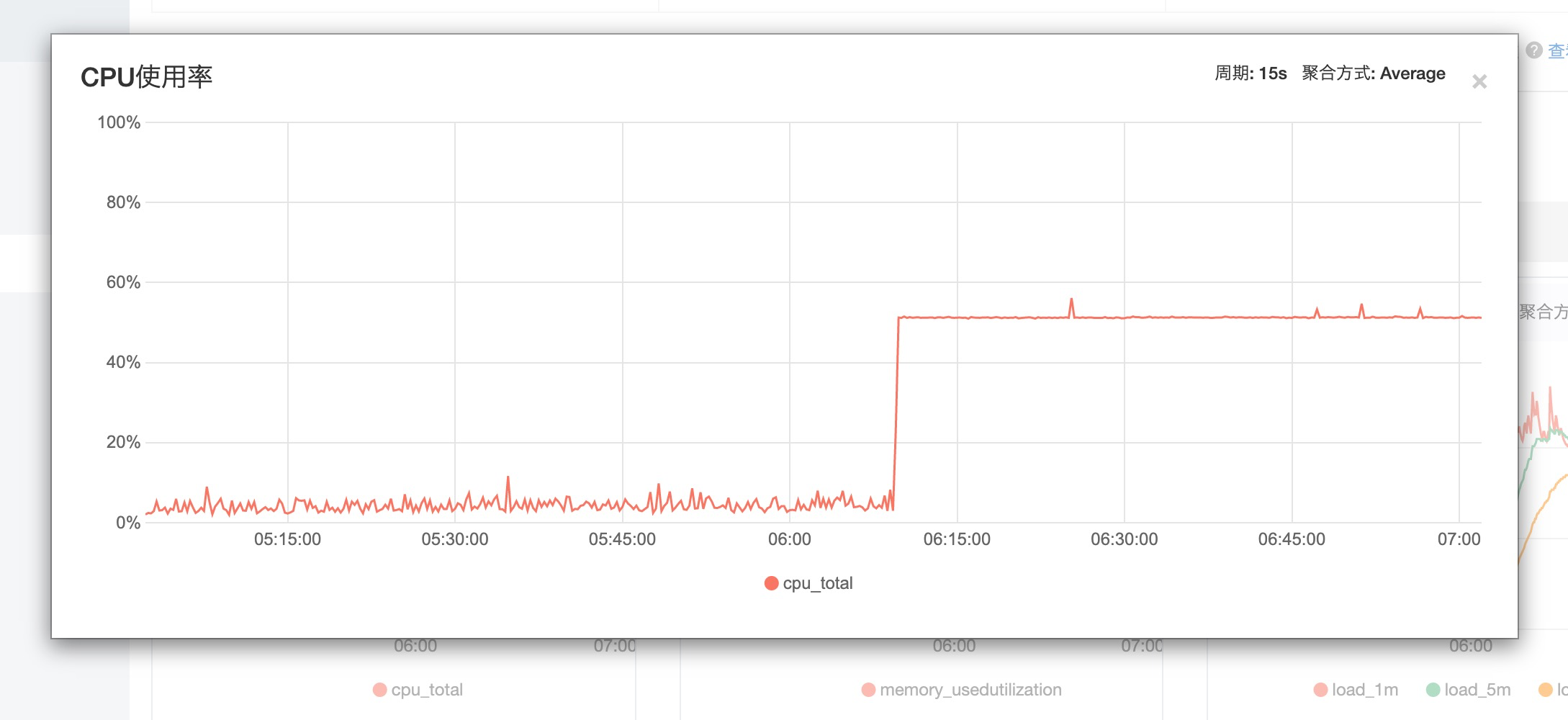

What happens is it maxes out the CPU on the 5th-8th day around 6am.

@sampaiodiego Interesting. I was actually running in a replica set with only one member before I updated mongo from 2.6 to 4.0. Figured I would just leave it off since I wasn't using it anyway.

I've re-enabled the replica set now, hopefully that will help.

On the node version, I notice that even the latest Rocket.Chat.Ansible role from github is still installing 8.9.4, not 8.11.4. Seems like someone ought to to update that, as I see that's been the recommended version for quite some time now.

Not sure if a MongoDB replica set will solve this issue as in @jagloro's case a very little instance with only 4 users has been affected too.

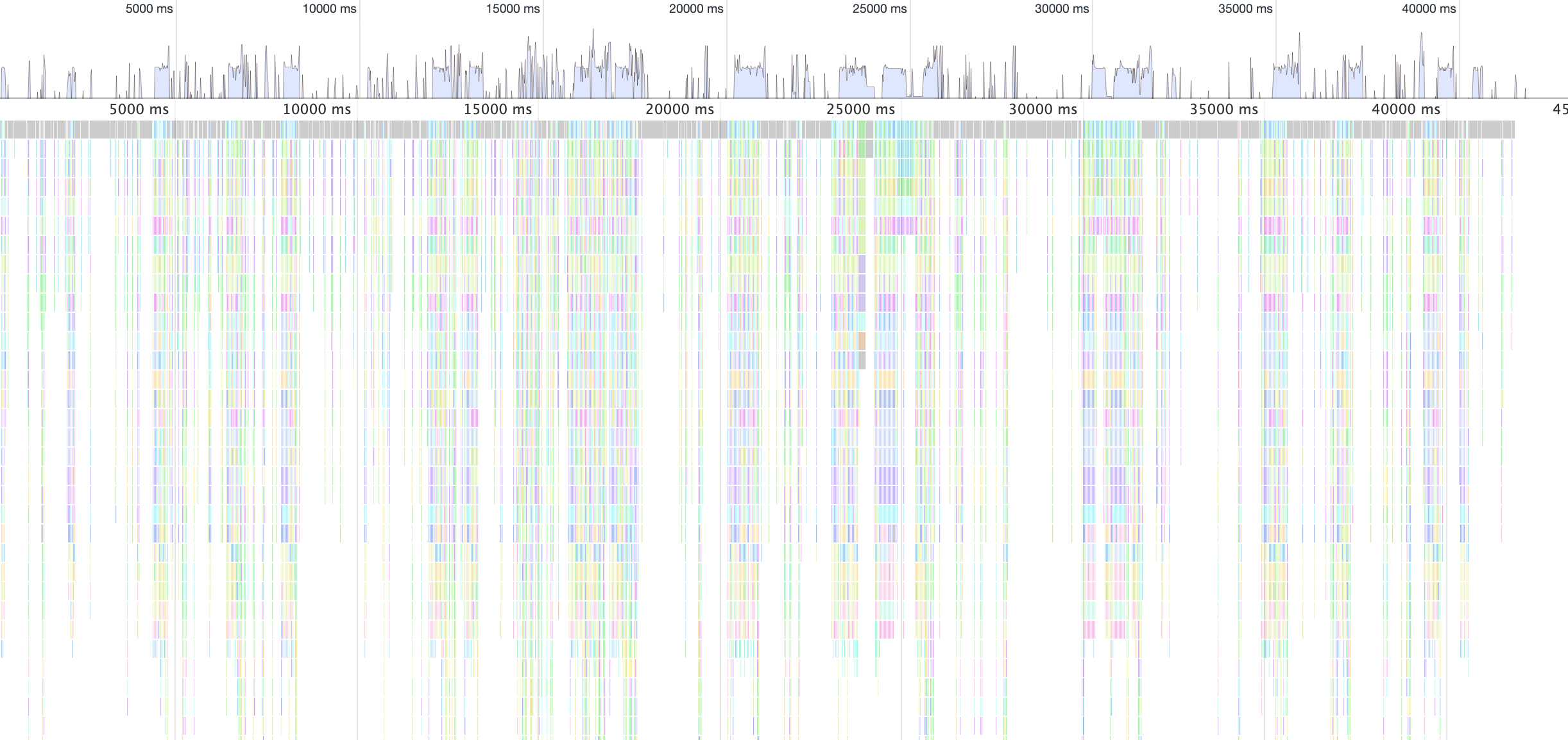

Luckily I was now finally able to create a CPU profile while the the CPU maxed out

A healthy profile looks like the following

As you can see while the CPU maxed out the Node process has been very busy with garbage collection. Not sure why the GC causes a high CPU load but it seems to be related.

Something to note: to the time the RC CPU maxed out we had another pod running in our k8s on the same node which put quite some pressure on the RAM. We're not sure if that caused the issues with the GC routines in the RC pod. Hence, we'll move RC now on a dedicated node and see if we still have this issue.

Thanks for sharing!

Just to clarify on replicaset. It does actually make a difference even if single mongo node and a few users. Meteor uses oplog to watch for some confirmations, if not there it has to poll which is generally more expensive.

@subesokun is that something you would be willing/able to share? Would be very interesting to dig into that a bit more.

@geekgonecrazy Sure, which information would help you?

@geekgonecrazy had just another crashed pod because the node ran out of memory after RC consumed > 3.2 GB RAM. During this time the CPU profile showed that the garbage collection was very busy and took up to 40 seconds per run/execution.

Can it be related to #14186?

Maybe related to this issue in meteor 1.8.0.

High CPU usage in production and 80%+ of time is spent in the GC, on both node 8.11.4 and 8.12.0

My RC server`s CPU increase into 100+ suddenly at 6:09 am, but its not restart, and all request return 502. the mongo instance is separatable from RC server

the record of cpu

the record of mongodb

We had quite the same issue and 'solved' by https://github.com/RocketChat/Rocket.Chat/issues/11144 , https://github.com/RocketChat/Rocket.Chat/issues/6738 if it can help...

Most helpful comment

Thanks for sharing!

Just to clarify on replicaset. It does actually make a difference even if single mongo node and a few users. Meteor uses oplog to watch for some confirmations, if not there it has to poll which is generally more expensive.