Rocket.Chat doesn't send push messages if connection to mongodb was lost or primary election occurs

Description:

If a rocket.chat instance lose the connection to its database and restore it, all works fine except push messages are not send anymore until a restart of the instance. Push messages are sent via push gateway.

Server Setup Information:

- Version of Rocket.Chat Server: 0.58.2

- Operating System: Ubuntu 16.04 LTS

- Deployment Method(snap/docker/tar/etc): docker

- Number of Running Instances: 1

- DB Replicaset Oplog: YES

- Node Version: shipped in docker image

Steps to Reproduce:

- Start your MongoDB Docker Container

- Start your Rocket.Chat Docker Container

- Send some test messages -> push is working

- Stop mongodb docker container, wait 10 sec

- start mongodb docker container

- Wait for reconnecting of the rc docker container

- Send some test messages -> no push messages anymore

Expected behavior:

Push messages should be send if a docker rocket.chat containers fail-over or reconnect to the database.

Actual behavior:

At a fail over or a reconnection to the database no push messages are sent anymore until a complete restart of the RC docker container is done.

Relevant logs:

No relevant log messages (just seeing that there are no log entries after reconnection)

All 11 comments

+1

+1

https://github.com/raix/push/issues/181 might be related?

Also likely related to this: https://github.com/meteor/meteor/issues/8598

Some news on this..

seems to be fixed: https://github.com/meteor/meteor/issues/8598 with version [email protected]

Since we are using [email protected] (https://github.com/RocketChat/Rocket.Chat/blob/34cd952f967a063f2b4d1275659381cf2d141652/.meteor/versions#L105) this seems to not fix my issue mentioned here.

Here is a sample log from the RC docker container:

{"log":"Exception while invoking method 'permissions/get' MongoError: no connection available\n","stream":"stdout","time":"2018-01-22T00:22:31.462456009Z"}

{"log":" at nextFunction (/app/bundle/programs/server/npm/node_modules/meteor/npm-mongo/node_modules/mongodb-core/lib/cursor.js:540:25)\n","stream":"stdout","time":"2018-01-22T00:22:31.4625298Z"}

{"log":" at Cursor.next [as _next] (/app/bundle/programs/server/npm/node_modules/meteor/npm-mongo/node_modules/mongodb-core/lib/cursor.js:699:3)\n","stream":"stdout","time":"2018-01-22T00:22:31.462540408Z"}

{"log":" at nextObject (/app/bundle/programs/server/npm/node_modules/meteor/npm-mongo/node_modules/mongodb/lib/cursor.js:680:8)\n","stream":"stdout","time":"2018-01-22T00:22:31.462547685Z"}

{"log":" at Cursor.next (/app/bundle/programs/server/npm/node_modules/meteor/npm-mongo/node_modules/mongodb/lib/cursor.js:270:12)\n","stream":"stdout","time":"2018-01-22T00:22:31.462554489Z"}

{"log":" at SynchronousCursor.fn [as _synchronousNextObject] (/app/bundle/programs/server/node_modules/fibers/future.js:93:26)\n","stream":"stdout","time":"2018-01-22T00:22:31.462561188Z"}

{"log":" at SynchronousCursor._nextObject (/app/bundle/programs/server/packages/mongo.js:1021:22)\n","stream":"stdout","time":"2018-01-22T00:22:31.462567703Z"}

{"log":" at SynchronousCursor.forEach (/app/bundle/programs/server/packages/mongo.js:1053:22)\n","stream":"stdout","time":"2018-01-22T00:22:31.46257414Z"}

{"log":" at SynchronousCursor.map (/app/bundle/programs/server/packages/mongo.js:1063:10)\n","stream":"stdout","time":"2018-01-22T00:22:31.462580728Z"}

{"log":" at SynchronousCursor.fetch (/app/bundle/programs/server/packages/mongo.js:1083:17)\n","stream":"stdout","time":"2018-01-22T00:22:31.462587058Z"}

{"log":" at Cursor.(anonymous function) [as fetch] (/app/bundle/programs/server/packages/mongo.js:898:44)\n","stream":"stdout","time":"2018-01-22T00:22:31.46259344Z"}

{"log":" at DDPCommon.MethodInvocation.permissions/get (/app/bundle/programs/server/packages/rocketchat_authorization.js:570:8)\n","stream":"stdout","time":"2018-01-22T00:22:31.462599901Z"}

{"log":" at DDPCommon.MethodInvocation.methodsMap.(anonymous function) (/app/bundle/programs/server/packages/rocketchat_lib.js:2130:26)\n","stream":"stdout","time":"2018-01-22T00:22:31.462606203Z"}

{"log":" at DDPCommon.MethodInvocation.methodMap.(anonymous function) (packages/rocketchat_monitoring.js:2731:30)\n","stream":"stdout","time":"2018-01-22T00:22:31.462612728Z"}

{"log":" at maybeAuditArgumentChecks (/app/bundle/programs/server/packages/ddp-server.js:1826:12)\n","stream":"stdout","time":"2018-01-22T00:22:31.462619184Z"}

{"log":" at DDP._CurrentMethodInvocation.withValue (/app/bundle/programs/server/packages/ddp-server.js:892:126)\n","stream":"stdout","time":"2018-01-22T00:22:31.462645055Z"}

{"log":" at Meteor.EnvironmentVariable.EVp.withValue (packages/meteor.js:1186:15)\n","stream":"stdout","time":"2018-01-22T00:22:31.462652913Z"}

{"log":" at DDPServer._CurrentWriteFence.withValue (/app/bundle/programs/server/packages/ddp-server.js:892:98)\n","stream":"stdout","time":"2018-01-22T00:22:31.462659309Z"}

{"log":" at Meteor.EnvironmentVariable.EVp.withValue (packages/meteor.js:1186:15)\n","stream":"stdout","time":"2018-01-22T00:22:31.462665749Z"}

{"log":" at Promise (/app/bundle/programs/server/packages/ddp-server.js:892:46)\n","stream":"stdout","time":"2018-01-22T00:22:31.462671929Z"}

{"log":" at new Promise (\u003canonymous\u003e:null:null)\n","stream":"stdout","time":"2018-01-22T00:22:31.462679958Z"}

{"log":" at Session.method (/app/bundle/programs/server/packages/ddp-server.js:865:23)\n","stream":"stdout","time":"2018-01-22T00:22:31.462687541Z"}

{"log":" at /app/bundle/programs/server/packages/ddp-server.js:744:85\n","stream":"stdout","time":"2018-01-22T00:22:31.462693895Z"}

{"log":"\n","stream":"stdout","time":"2018-01-22T00:22:31.462700201Z"}

If the connection to the mongodb is restored, more functions are also not working:

- Statistics not refreshing correctly (for example if you ask the instance for the activeuser, totalmessages..)

- Push messages are send after the next restart

- Under some pressure the sites are not delivered correctly:

(from our apache2 reverse proxy log)

[Mon Jan 22 03:20:38.575037 2018] [proxy_http:error] [pid 12469:tid 139978822612736] (20014)Internal error (specific information not available): [client <ip-address>:49996] AH01102: error reading status line from remote server 127.0.0.1:3000

[Mon Jan 22 03:20:38.575218 2018] [proxy:error] [pid 12469:tid 139978822612736] [client <ip-address>:49996] AH00898: Error reading from remote server returned by /

[Mon Jan 22 07:36:20.817075 2018] [proxy:error] [pid 10411:tid 139978847790848] (9)Bad file descriptor: [client <ip-address>:57620] AH03307: ap_proxy_transfer_between_connections: error on sock - ap_pass_brigade

[Mon Jan 22 07:36:21.469344 2018] [proxy:error] [pid 10411:tid 139978747078400] (9)Bad file descriptor: [client <ip-address>:59784] AH03307: ap_proxy_transfer_between_connections: error on sock - ap_pass_brigade

[Mon Jan 22 07:36:21.976655 2018] [proxy:error] [pid 12469:tid 139978831005440] (9)Bad file descriptor: [client <ip-address>:49948] AH03307: ap_proxy_transfer_between_connections: error on sock - ap_pass_brigade

[Mon Jan 22 07:36:21.982464 2018] [proxy:error] [pid 12469:tid 139978881361664] (9)Bad file descriptor: [client <ip-address>:42052] AH03307: ap_proxy_transfer_between_connections: error on sock - ap_pass_brigade

[Mon Jan 22 07:36:22.112732 2018] [proxy:error] [pid 12469:tid 139978847790848] (9)Bad file descriptor: [client <ip-address>:49772] AH03307: ap_proxy_transfer_between_connections: error on sock - ap_pass_brigade

[Mon Jan 22 07:36:22.780848 2018] [proxy:error] [pid 12468:tid 139978856183552] (9)Bad file descriptor: [client <ip-address>:44438] AH03307: ap_proxy_transfer_between_connections: error on sock - ap_pass_brigade

[Mon Jan 22 07:36:22.886964 2018] [proxy:error] [pid 10411:tid 139978772256512] (9)Bad file descriptor: [client <ip-address>:33062] AH03307: ap_proxy_transfer_between_connections: error on sock - ap_pass_brigade

[Mon Jan 22 07:36:23.165573 2018] [proxy:error] [pid 12469:tid 139978730292992] (9)Bad file descriptor: [client <ip-address>:46030] AH03307: ap_proxy_transfer_between_connections: error on sock - ap_pass_brigade

[Mon Jan 22 07:36:23.239498 2018] [proxy:error] [pid 12469:tid 139978688329472] (9)Bad file descriptor: [client <ip-address>:33568] AH03307: ap_proxy_transfer_between_connections: error on sock - ap_pass_brigade

[Mon Jan 22 07:36:23.726010 2018] [proxy:error] [pid 12469:tid 139978705114880] (9)Bad file descriptor: [client <ip-address>:49556] AH03307: ap_proxy_transfer_between_connections: error on sock - ap_pass_brigade

[Mon Jan 22 07:36:23.759851 2018] [proxy:error] [pid 12468:tid 139978889754368] (9)Bad file descriptor: [client <ip-address>:41064] AH03307: ap_proxy_transfer_between_connections: error on sock - ap_pass_brigade

[Mon Jan 22 07:36:24.343819 2018] [proxy:error] [pid 10411:tid 139978705114880] (9)Bad file descriptor: [client <ip-address>:60094] AH03307: ap_proxy_transfer_between_connections: error on sock - ap_pass_brigade

[Mon Jan 22 07:36:24.617520 2018] [proxy:error] [pid 12468:tid 139978831005440] (9)Bad file descriptor: [client <ip-address>:35064] AH03307: ap_proxy_transfer_between_connections: error on sock - ap_pass_brigade

[Mon Jan 22 07:36:25.593986 2018] [proxy:error] [pid 12469:tid 139978789041920] (9)Bad file descriptor: [client <ip-address>:47654] AH03307: ap_proxy_transfer_between_connections: error on sock - ap_pass_brigade

[Mon Jan 22 07:36:25.808764 2018] [proxy:error] [pid 12469:tid 139978772256512] (9)Bad file descriptor: [client <ip-address>:49494] AH03307: ap_proxy_transfer_between_connections: error on sock - ap_pass_brigade

[Mon Jan 22 07:36:26.231918 2018] [proxy:error] [pid 12469:tid 139978738685696] (9)Bad file descriptor: [client <ip-address>:47682] AH03307: ap_proxy_transfer_between_connections: error on sock - ap_pass_brigade

[Mon Jan 22 07:36:28.004572 2018] [proxy:error] [pid 12469:tid 139978747078400] (9)Bad file descriptor: [client <ip-address>:52030] AH03307: ap_proxy_transfer_between_connections: error on sock - ap_pass_brigade

[Mon Jan 22 07:36:28.723881 2018] [proxy:error] [pid 12468:tid 139978789041920] (9)Bad file descriptor: [client <ip-address>:42892] AH03307: ap_proxy_transfer_between_connections: error on sock - ap_pass_brigade

In bigger deployments and with multiple sites this is a big reliability issue IMHO.

Just a restart of the corresponding containers can resolve this. To get more attention to this @geekgonecrazy @rodrigok @engelgabriel

Interesting... I bet the various observes aren't being ran again or rather it's not checking the re-established oplog connection for the same thing

@geekgonecrazy some news to this issue.

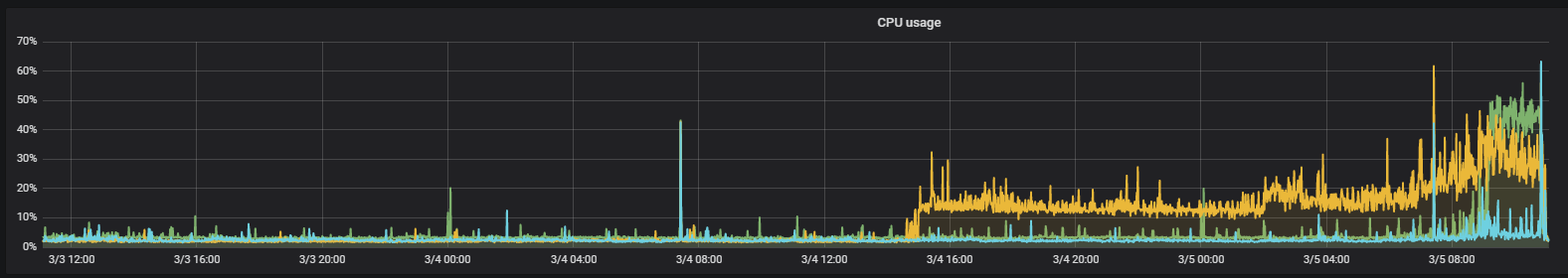

We had a neutron cluster restart where the rocket-chat containers lost their network connectivity for several seconds. The normal "push messages are not send" happend and after some time the cpu load of the node processes inside the containers is rising heavily.

This could be seen on this graph:

I couldnt find any "RC-Logs" that are in connection to the rising cpu load. Also, it seems that there is also a memory leak caused by this. (Memory consumption was heavily rising after the short connection loss)

Did it ever drop? If not sounds like we are holding on to variables not being used after the disconnect 🤔

@geekgonecrazy it just drops after a complete container restart.

Ok so something is definitely up here. We should look into it closer. At least it reconnects.. but if it reconnects wastes memory and push notifications don't work... Its not super useful for it to auto-recover the connection to mongo

Ran across this a few times now. Push notifications stop going out. Then reboot instance and suddenly they all go out. Accompanying this you can see mongo errors where at one point even briefly it suffered an interrupt in connection.