Realm-cocoa: {ERROR} [PERSISTENCE] mmap() failed: Cannot allocate memory size: 25346048 offset: 67108864

Goals

I am trying to update a fairly big object graph (33 RLMObject subclasses). I have 200 instances of one of the parent classes, retrieved from the RLMRealm, and I am trying to update some of the attributes of each parent along with its children, children of children, children of children of children, etc.

Expected Results

I would expect to be able to update all the fields of any of the parent and child objects that I previously retrieved from the store.

Actual Results

When updating a deeply nested object, I get an exception: {ERROR} [PERSISTENCE] mmap() failed: Cannot allocate memory size: 25346048 offset: 67108864

    #0 0x000000010d5b51e8 in realm::util::EncryptedFileMapping::read_barrier(void const*, unsigned long, unsigned long (*)(char const*)) ()
    #1 0x000000010d2a784c in realm::util::do_encryption_read_barrier(void const*, unsigned long, unsigned long (*)(char const*), realm::util::EncryptedFileMapping*) ()
    #2 0x000000010d4eac80 in realm::BPlusTreeBase::create_root_from_ref(unsigned long) ()
    #3 0x000000010d2a79e4 in realm::BPlusTreeBase::init_from_parent() ()
    #4 0x000000010d525f8c in realm::Lst<realm::ObjKey>::Lst(realm::Obj const&, realm::ColKey) ()
    #5 0x000000010d527230 in realm::LnkLst::LnkLst(realm::Obj const&, realm::ColKey) ()
    #6 0x000000010d520124 in realm::Obj::get_listbase_ptr(realm::ColKey) const ()
    #7 0x000000010d2b29f4 in realm::List::List(std::__1::shared_ptr<realm::Realm>, realm::Obj const&, realm::ColKey) ()
    #8 0x000000010d3026f4 in -[RLMManagedArray initWithParent:property:] ()
    #9 0x000000010d2f01e8 in ___ZN12_GLOBAL__N_113managedGetterEP11RLMPropertyPKc_block_invoke ()
    #10 MyLocalObject.status = [MyServerObject retrieveStatus]

Steps to Reproduce

Instantiate an encrypted Realm and store 200 different instances of the same RLMObject subclass. Make sure the RLMObject has a big object graph with lots of nested children. Allocate another 200 instances of the same RLMObject in memory, then try to update the 200 instances retrieved from the store with the attributes of the 200 in-memory instances.
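
The steps above can be sketched in Swift roughly as follows, assuming RealmSwift 5.x. The reporter's 33-class model is not shown, so ReproParent, ReproChild, their status property, the two-level fan-out and the temporary file location are hypothetical stand-ins; treat this as a reproduction harness under those assumptions, not as the reporter's code.

```swift
import Foundation
import RealmSwift
import Security

// Hypothetical stand-ins for the reporter's (unshown) 33-class graph.
class ReproChild: Object {
    @objc dynamic var id = ""
    @objc dynamic var status = ""
    let children = List<ReproChild>()  // children of children, and so on
    override static func primaryKey() -> String? { return "id" }
}

class ReproParent: Object {
    @objc dynamic var id = ""
    @objc dynamic var status = ""
    let children = List<ReproChild>()
    override static func primaryKey() -> String? { return "id" }
}

// Builds an unmanaged parent with `fanOut` children, each with `fanOut` grandchildren.
func makeReproParent(_ index: Int, status: String, fanOut: Int = 5) -> ReproParent {
    let parent = ReproParent()
    parent.id = "p\(index)"
    parent.status = status
    for c in 0..<fanOut {
        let child = ReproChild()
        child.id = "p\(index)-c\(c)"
        child.status = status
        for g in 0..<fanOut {
            let grandchild = ReproChild()
            grandchild.id = "p\(index)-c\(c)-g\(g)"
            grandchild.status = status
            child.children.append(grandchild)
        }
        parent.children.append(child)
    }
    return parent
}

// Returns how many stored parents ended up with the new status.
func runRepro(count: Int = 200) throws -> Int {
    // Encrypted Realms need a 64-byte key.
    var key = Data(count: 64)
    let rc = key.withUnsafeMutableBytes { SecRandomCopyBytes(kSecRandomDefault, 64, $0.baseAddress!) }
    precondition(rc == errSecSuccess, "could not generate an encryption key")

    var config = Realm.Configuration()
    config.fileURL = FileManager.default.temporaryDirectory
        .appendingPathComponent("repro-\(UUID().uuidString).realm")
    config.encryptionKey = key
    config.objectTypes = [ReproParent.self, ReproChild.self]
    let realm = try Realm(configuration: config)

    // 1. Store `count` parents, each with a nested graph.
    try realm.write {
        for i in 0..<count { realm.add(makeReproParent(i, status: "old")) }
    }

    // 2. Allocate `count` unmanaged copies in memory (the "server" versions).
    let incoming = (0..<count).map { makeReproParent($0, status: "new") }

    // 3. Copy attributes from each in-memory copy onto the stored graph.
    try realm.write {
        for source in incoming {
            guard let target = realm.object(ofType: ReproParent.self, forPrimaryKey: source.id) else { continue }
            target.status = source.status
            for (child, sourceChild) in zip(target.children, source.children) {
                child.status = sourceChild.status
                for (grandchild, sourceGrandchild) in zip(child.children, sourceChild.children) {
                    grandchild.status = sourceGrandchild.status
                }
            }
        }
    }
    return realm.objects(ReproParent.self).filter("status == %@", "new").count
}
```

Whether this triggers the mmap() failure on a physical device will depend on how closely the stand-in graph matches the real one.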

Version of Realm and Tooling

Realm framework version: 5.0.3

Realm Object Server version: N/A

Xcode version: 11.3.1

iOS/OSX version: 13.5

Dependency manager + version: N/A

Same here. The issue started appearing after updating from 4.4.1 to 5.0.2, and it also happens on 5.0.3. An autorelease pool doesn't seem to fix it. It happens with non-encrypted Realms too.

We are only talking about 25 MB. I just checked, and my Realm file is only 101 MB.

I just experienced this as well when downloading and merging lots of data (200+ objects), with lots of writes and deletes happening.

Database error: Error Domain=io.realm Code=9 "mmap() failed: Cannot allocate memory size: 65634304 offset: 67108864" UserInfo={NSLocalizedDescription=mmap() failed: Cannot allocate memory size: 65634304 offset: 67108864, Error Code=9}

I also wrap the writes in autoreleasepool { }, with no difference in the result.

v4.4.1 handled this fine. v5+ does not.

And the crash only occurs on actual devices. It does not occur in the iOS simulator.

Using Xcode 11.5, iOS 13 SDK. Swift.

Btw, I am only getting these types of crashes when using frozen objects. I have a UITableView powered by a datasource full of frozen objects. On a background thread, I have a "synchronizer" that every X minutes pulls new data from the backend, runs some "merge" logic between the pulled data (unmanaged objects) and the locally stored data (managed but not frozen), and then tells the datasource to refresh with those local objects (freezing them first).

In my scenario, the crash happened when the synchronizer was running the merge logic between unmanaged and managed objects while the datasource was holding references to frozen objects. If the datasource holds references to normal managed objects instead of frozen ones, I never get the crash during the merge.

I really want to use frozen objects, since they are a good fit for my datasource, but at this point it is impossible without getting all sorts of different errors and crashes.
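
A rough sketch of the datasource/synchronizer split described in this comment, assuming RealmSwift 5.x (where freeze() was introduced). FeedItem, FeedDataSource and mergeFromServer are hypothetical names. A frozen Results is expected to keep the version it was frozen at alive while it is referenced, so this datasource replaces its snapshot on each reload instead of accumulating old ones.

```swift
import Foundation
import RealmSwift

// Hypothetical model standing in for the table's row objects.
class FeedItem: Object {
    @objc dynamic var id = ""
    @objc dynamic var title = ""
    override static func primaryKey() -> String? { return "id" }
}

// Datasource that only ever reads a frozen snapshot.
final class FeedDataSource {
    private(set) var items: Results<FeedItem>?

    // Replaces (rather than accumulates) the snapshot, so the datasource
    // holds at most one frozen version at a time.
    func reload(config: Realm.Configuration) throws {
        let realm = try Realm(configuration: config)
        items = realm.objects(FeedItem.self).freeze()
    }
}

// The "synchronizer" step: upsert unmanaged server objects into live managed ones.
func mergeFromServer(_ incoming: [FeedItem], config: Realm.Configuration) throws {
    try autoreleasepool {
        let realm = try Realm(configuration: config)
        try realm.write {
            realm.add(incoming, update: .modified)
        }
    }
}
```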

@sedwo Are you using Frozen Objects anywhere in your codebase?

@pkrmf Nope. I didn't even know about _frozen objects_ until this thread. And for now, still prefer _live objects_ for my use.

I'm also hitting these crashes since upgrading from 3.x to the latest 5.x releases.

I'm not using any frozen objects yet (it's one of the reasons I wanted to upgrade, to be able to use them).

The issue still happened for me without frozen objects, just less often. I downgraded to 4.4.1 (I think that is the latest 4.x release) and all the problems are gone.

@pkrmf Same here. I was about to release with v5.x, but will now continue shipping my releases on v4.4.1.

cc: @bigfish24

Some acknowledgement of this one would be great. Would have been super dangerous to have released this to my users, without a way for them to downgrade back to a working Realm version!

Same here with Realm 5.2.0:

Error Domain=io.realm Code=9 "mmap() failed: Cannot allocate memory size: 23068672 offset: 67108864" UserInfo={NSLocalizedDescription=mmap() failed: Cannot allocate memory size: 23068672 offset: 67108864, Error Code=9}

We do not use frozen objects.

I investigated this yesterday and today. I saw similar memory spikes during updates when:

- using an unmanaged object to update, i.e. in realm.add(object, update: .modified), object.realm is nil but the object matches the primary key of an existing object. I did not see the spikes when updating managed objects.

- specifically updating direct relationships that created downstream orphaned objects. Using lists or just updating non-relationship properties in a zero-sum update didn't seem to cause the same spikes.

- using a deep, not wide object graph. I mainly tested with 2^6 classes, where each parent had two children, total depth of 6.
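
A sketch of the two update styles contrasted in these bullets, assuming RealmSwift 5.x; TreeNode, makeTree, upsertDetached and updateManagedLabel are hypothetical. Upserting a detached copy whose left/right links point at newly built nodes repoints the existing root, so the old subtree stays in the file as orphans (Realm does not cascade-delete it), while updating a scalar on the managed object touches nothing else.

```swift
import RealmSwift

// Hypothetical deep (not wide) model: each node has at most two children.
class TreeNode: Object {
    @objc dynamic var id = ""
    @objc dynamic var label = ""
    @objc dynamic var left: TreeNode?
    @objc dynamic var right: TreeNode?
    override static func primaryKey() -> String? { return "id" }
}

// Builds an unmanaged tree (object.realm == nil). Child ids include `generation`,
// so every generation creates brand-new child objects.
func makeTree(id: String, depth: Int, generation: Int) -> TreeNode {
    let node = TreeNode()
    node.id = id
    node.label = "generation \(generation)"
    if depth > 1 {
        node.left = makeTree(id: "\(id)L\(generation)", depth: depth - 1, generation: generation)
        node.right = makeTree(id: "\(id)R\(generation)", depth: depth - 1, generation: generation)
    }
    return node
}

// Style 1: upsert an unmanaged copy that matches an existing primary key.
// The root's direct relationships are replaced; the previous children become orphans.
func upsertDetached(_ realm: Realm, rootId: String, depth: Int, generation: Int) throws {
    let detached = makeTree(id: rootId, depth: depth, generation: generation)
    try realm.write {
        realm.add(detached, update: .modified)
    }
}

// Style 2: mutate the managed object in place; no relationships change.
func updateManagedLabel(_ realm: Realm, rootId: String, label: String) throws {
    guard let root = realm.object(ofType: TreeNode.self, forPrimaryKey: rootId) else { return }
    try realm.write {
        root.label = label
    }
}
```

With depth 3, the first tree has 7 nodes; one detached upsert adds 6 new nodes and orphans the previous 6, leaving 13 in the file.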

I couldn't personally trigger the mmap() failed: Cannot allocate memory error on a physical device with either 4.4.1 _or_ 5.2.0, though.

Could someone share a sample data model they're using and which properties are being updated? If someone could share an example project, that'd greatly help.

Hi @ericjordanmossman,

Do your tests cycle through deletes too after adding/updating objects?

Try a loop that adds 200 objects, then deletes them one at a time in a second loop, and repeat the whole cycle several thousand times.
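
A sketch of that stress loop, assuming RealmSwift 5.x; StressItem, its payload size and stressAddDelete are hypothetical. Each cycle adds `batch` objects in one transaction and then deletes them one transaction at a time; the returned count of committed transactions makes it easy to check that the loop ran as intended.

```swift
import RealmSwift

// Hypothetical model for the stress test.
class StressItem: Object {
    @objc dynamic var id = 0
    @objc dynamic var payload = ""
    override static func primaryKey() -> String? { return "id" }
}

/// Adds `batch` objects in one write, then deletes them one write at a time,
/// repeating `cycles` times. Returns the number of write transactions committed.
func stressAddDelete(config: Realm.Configuration, batch: Int = 200, cycles: Int = 1_000) throws -> Int {
    var commits = 0
    for _ in 0..<cycles {
        try autoreleasepool {
            let realm = try Realm(configuration: config)
            try realm.write {
                for i in 0..<batch {
                    let item = StressItem()
                    item.id = i
                    item.payload = String(repeating: "x", count: 256)
                    realm.add(item, update: .modified)
                }
            }
            commits += 1
            // Delete each object in its own transaction.
            for i in 0..<batch {
                guard let item = realm.object(ofType: StressItem.self, forPrimaryKey: i) else { continue }
                try realm.write { realm.delete(item) }
                commits += 1
            }
        }
    }
    return commits
}
```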

If you are encountering mmap issues, use Realm.Configuration.maximumNumberOfActiveVersions to limit the number of active versions a Realm can contain. This may help you find places in your code that hold onto a Realm version too long, so that it cannot be cleaned up, which causes memory issues.

Setting maximumNumberOfActiveVersions to an arbitrary value, say 5, may help narrow down the issue, but experiment with this number.

Please see our release notes regarding Realm.Configuration.maximumNumberOfActiveVersions here: https://github.com/realm/realm-cocoa/releases/tag/v5.0.0
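
A minimal sketch of applying the cap, assuming RealmSwift 5.x, where the Swift property is Realm.Configuration.maximumNumberOfActiveVersions (nil meaning no limit). cappedConfiguration and writeLoggingErrors are hypothetical helpers; logging instead of calling fatalError keeps the app running long enough to see which call site hit the limit.

```swift
import RealmSwift

// Hypothetical helper: the default configuration with a cap on live versions.
func cappedConfiguration(limit: UInt) -> Realm.Configuration {
    var config = Realm.Configuration.defaultConfiguration
    config.maximumNumberOfActiveVersions = limit  // nil (the default) means no limit
    return config
}

// Hypothetical helper: logs a failed write instead of crashing. Reports in this
// thread show messages such as "Number of active versions (4) in the Realm
// exceeded the limit of 3"; exactly which call raises it is not assumed here.
func writeLoggingErrors(_ realm: Realm, _ block: () -> Void) {
    do {
        try realm.write(block)
    } catch {
        print("Realm write failed: \(error)")
    }
}
```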

Interesting.

What does maximumNumberOfActiveVersions default to?

Unlimited.

Well.. that happened.

config.maximumNumberOfActiveVersions = 64

I removed the delete steps and only perform the add/update calls:

    func createOrUpdateAll<T: Object>(with objects: [T], update: Bool = true) {
        autoreleasepool {
            do {
                let database = self.getDatabase()
                try database?.write {
                    database?.add(objects, update: update ? .all : .error)
                }
            } catch let error {
                DDLogError("Database error: \(error)")
                fatalError("Database error: \(error)")
            }
        }
    }

I still experience the fatal mmap error on v5.3.0.

@sedwo, is createOrUpdateAll performed in a loop at all? Are you also pinning a Realm instance anywhere in your code?

Correct. The loop is:

    while !sync_done {
        callbackQueue: DispatchQueue.global(qos: .utility) // separate thread
        {
            call_API_for_data()  // ~200 objects each time
            DispatchQueue.main.async {
                createOrUpdateAll(data)
            }
        }
    }

fyi:

    func getDatabase() -> Realm? {
        do {
            return try Realm(configuration: realmConfig)
        } catch let error {
            DDLogError("Database error: \(error)")
            fatalError("Database error: \(error)")
        }
    }

    private var realmConfig: Realm.Configuration {
        var config = Realm.Configuration(
            // Migration Support
            //
            // Set the new schema version. This must be greater than the previously used
            // version (if you've never set a schema version before, the version is 0).
            schemaVersion: DALconfig.DatabaseSchemaVersion,

            // Set the block which will be called automatically when opening a Realm with
            // a schema version lower than the one set above.
            migrationBlock: { migration, oldSchemaVersion in
                // If we haven’t migrated anything yet, then `oldSchemaVersion` == 0
                if oldSchemaVersion < DALconfig.DatabaseSchemaVersion {
                    // Realm will automatically detect new properties and removed properties,
                    // and will update the schema on disk automatically.
                    DDLogVerbose("⚠️ Migrating Realm DB: from v\(oldSchemaVersion) to v\(DALconfig.DatabaseSchemaVersion) ⚠️")
                }
            })

        config.maximumNumberOfActiveVersions = 64
        config.fileURL = userRealmFile
        config.shouldCompactOnLaunch = { (totalBytes: Int, usedBytes: Int) -> Bool in
            let bcf = ByteCountFormatter()
            bcf.allowedUnits = [.useMB] // optional: restricts the units to MB only
            bcf.countStyle = .file

            // Compact if the file is over 100mb in size and less than 60% 'used'
            let filesizeMB = 100 * 1024 * 1024
            let compactRealm: Bool = (totalBytes > filesizeMB) && (Double(usedBytes) / Double(totalBytes)) < 0.6

            if compactRealm {
                DDLogVerbose("Compacting Realm db (\(DALconfig.realmStoreName)? : \(compactRealm ? "[YES]" : "[NO]")")
                // totalBytes refers to the size of the file on disk in bytes (data + free space)
                let totalBytesString = bcf.string(fromByteCount: Int64(totalBytes))
                // usedBytes refers to the number of bytes used by data in the file
                let usedBytesString = bcf.string(fromByteCount: Int64(usedBytes))
                DDLogVerbose("size_of_realm_file: \(totalBytesString), used_bytes: \(usedBytesString)")
                let utilization = Double(usedBytes) / Double(totalBytes) * 100.0
                DDLogVerbose(String(format: "utilization: %.0f%%", utilization))
            }

            return compactRealm
        }

        return config
    }
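
The shouldCompactOnLaunch rule in the configuration above can be pulled out as a pure function so it can be unit-tested on its own. This is a sketch; shouldCompact and its default thresholds (100 MB, 60 % utilization) are a hypothetical refactoring that mirrors the closure's logic.

```swift
// Hypothetical helper: the compaction rule from the configuration above as a
// pure function. Compact when the file is larger than `thresholdBytes` and less
// than `minUtilization` of it holds live data.
func shouldCompact(totalBytes: Int, usedBytes: Int,
                   thresholdBytes: Int = 100 * 1024 * 1024,
                   minUtilization: Double = 0.6) -> Bool {
    guard totalBytes > 0 else { return false }  // avoid dividing by zero
    let utilization = Double(usedBytes) / Double(totalBytes)
    return totalBytes > thresholdBytes && utilization < minUtilization
}
```

It could then be wired in as config.shouldCompactOnLaunch = { total, used in shouldCompact(totalBytes: total, usedBytes: used) }, keeping the ByteCountFormatter logging as a separate concern.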

I'm not familiar with _pinning_.

Here is more detail on pinning.

The active version of a Realm is only updated at the start of a run loop iteration. So if you read some data from the Realm and then block the thread on a long-running operation while writing to the Realm on other threads, the version is never updated and Realm has to hold on to intermediate versions.
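
A minimal sketch of avoiding that on a background thread, assuming RealmSwift 5.x. Such threads usually have no run loop, so nothing advances the Realm automatically; calling refresh() between steps and invalidate() at the end lets the thread release the version it first read. runLongJob and its parameters are hypothetical.

```swift
import Foundation
import RealmSwift

// Hypothetical long-running job that keeps one Realm open on a background thread.
func runLongJob(config: Realm.Configuration, steps: Int, work: (Realm) -> Void) throws {
    try autoreleasepool {
        let realm = try Realm(configuration: config)
        for _ in 0..<steps {
            work(realm)           // reads data from `realm`
            _ = realm.refresh()   // advance to the newest version written elsewhere
        }
        realm.invalidate()        // release the last read version before returning
    }
}
```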

In the pic you shared, the versions aren't being released before you reach the limit of 64. But from the code you provided it looks like your use of autoreleasepool mostly matches the docs. I think @tgoyne or @leemaguire might catch something I'm missing.

You may also see this problem when accessing Realm using Grand Central Dispatch. This can happen when a Realm ends up in a dispatch queue’s autorelease pool as those pools may not be drained for some time after executing your code. The intermediate versions of data in the Realm file cannot be reused until the Realm object is deallocated. To avoid this issue, you should use an explicit autorelease pool when accessing a Realm from a dispatch queue.
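
The explicit autorelease pool described above might look like this; a sketch assuming RealmSwift 5.x, with writeInBackground as a hypothetical helper. The Realm is opened, written to and released inside autoreleasepool, so the instance does not linger in the dispatch queue's own pool.

```swift
import Foundation
import RealmSwift

// Hypothetical helper: performs one write on a dispatch queue and makes sure the
// Realm instance is released when the block ends, not when GCD drains its pool.
func writeInBackground(config: Realm.Configuration,
                       queue: DispatchQueue = .global(qos: .utility),
                       _ body: @escaping (Realm) -> Void,
                       completion: @escaping (Error?) -> Void) {
    queue.async {
        do {
            try autoreleasepool {
                let realm = try Realm(configuration: config)
                try realm.write { body(realm) }
            }
            completion(nil)
        } catch {
            completion(error)
        }
    }
}
```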

Since you're performing the writes on the main thread, that suggests that you're holding onto a reference to a Realm on a background thread that can't be refreshed. You can use Xcode's Visual Memory Debugger to track down where this is happening. Enable Malloc Stacks in the scheme diagnostics settings, then run the app. When it hits the maximum version count error, switch to the View Memory Graph Hierarchy view in the debug navigator, and look for RLMRealm. The debugger then will show you where each live instance was allocated and how the object graph is retaining it. If you're lucky, there'll be an instance that should have been short-lived but instead was leaked somehow.

So I've made some interesting progress.

My VC sets up a DB notification token on the objects that the sync engine writes via createOrUpdateAll(..).

[VC]

    override func viewDidAppear(_ animated: Bool) {
        super.viewDidAppear(animated)
        self.viewModel.DatabaseSyncNotifications(true)
        ...

[VM]

    // DB 'write' change notifications
    private var syncCommentsToken: NotificationToken? = nil
    private var syncSignAnnotationsToken: NotificationToken? = nil

    // Observe database 'write' notifications.
    func DatabaseSyncNotifications(_ enable: Bool, completion: @escaping () -> Void = {}) {
        if enable {
            if syncSignAnnotationsToken == nil {
                syncSignAnnotationsToken = signAnnotationsDAL.setupNotificationToken(for: RLMProject.self) {
                    self.navigator.updateTitleSyncStatus()
                    self.updateVC()
                    completion()
                }
            }
            if syncCommentsToken == nil {
                syncCommentsToken = commentsDAL.setupNotificationToken(for: RLMComment.self) {
                    self.navigator.updateTitleSyncStatus()
                    self.updateVC()
                    completion()
                }
            }
        } else { // disable
            syncCommentsToken?.invalidate()
            syncCommentsToken = nil
            syncSignAnnotationsToken?.invalidate()
            syncSignAnnotationsToken = nil
        }
    }

[DB Data Access Layer Class]

    // MARK: - Change notifications
    // Setup to observe Realm `CollectionChange` notifications
    func setupNotificationToken(for observer: AnyObject, _ block: @escaping () -> Void) -> NotificationToken? {
        let database = getDatabase()
        return database?.objects(T.self).observe { [weak observer] (changes: RealmCollectionChange) in
            if observer != nil {
                switch changes {
                case .initial:
                    return // ignore first setup
                case .update(_, _, _, _):
                    block()
                case .error(let err):
                    // An error occurred while opening the Realm file on the background worker thread
                    fatalError("\(err)")
                }
            }
        }
    }

I also simplified my createOrUpdateAll to be more verbose in witnessing each write.

    func createOrUpdateAll<T: Object>(with objects: [T], update: Bool = true) {
        for (index, object) in objects.enumerated() {
            DDLogDebug("object[\(index)]")
            do {
                let database = self.getDatabase()
                try database?.write {
                    database?.add(object, update: .all)
                }
            } catch let error {
                DDLogError("Database error: \(error)")
                fatalError("Database error: \(error)")
            }
        }
    }

During each createOrUpdateAll, the observing token would trigger that VC's completion block (for status updates and view refreshes).

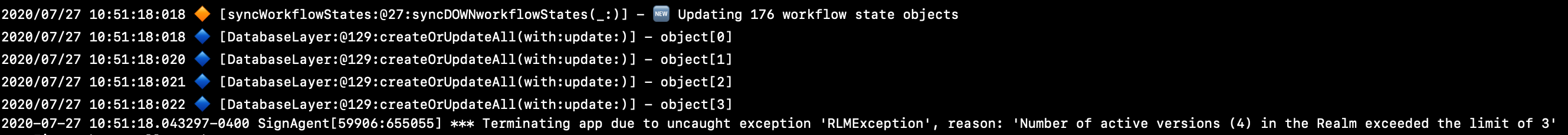

With config.maximumNumberOfActiveVersions = 3, I would see the number of active versions hit this cap and then crash: 'Number of active versions (4) in the Realm exceeded the limit of 3'

When I disable the DB notifications token, then everything works.

BUT... that didn't really help to determine _what_ and _why_ specifically.

So I simply set up the DB notifications with an empty completion block:

    // Observe database 'write' notifications.
    func DatabaseSyncNotifications(_ enable: Bool, completion: @escaping () -> Void = {}) {
        if enable {
            if syncSignAnnotationsToken == nil {
                syncSignAnnotationsToken = signAnnotationsDAL.setupNotificationToken(for: RLMProject.self) {
                }
            }
            if syncCommentsToken == nil {
                syncCommentsToken = commentsDAL.setupNotificationToken(for: RLMComment.self) {
                }
            }
        } else { // disable
            syncCommentsToken?.invalidate()
            syncCommentsToken = nil
            syncSignAnnotationsToken?.invalidate()
            syncSignAnnotationsToken = nil
        }
    }

AND.... the crash still occurred:

Notice though how it is in the Realm notification listener thread(?).

Once again, if I remove the Realm DB notifications setup line in the VC, then it all works fine.

So... am I setting up the db observer incorrectly? or something?

I've successfully reproduced a bug where we weren't releasing old versions in a place where we should, which would cause this problem.

I believe we've been seeing this issue on our app since we upgraded to v5 last week. Unfortunately we've already released to the App Store with v5 and we can't go back to 4.

I can reproduce the growing number of active versions by adding a new object with a primary key to the Realm in the same run loop iteration in which it was created; this causes every subsequent write transaction to create a new version. However, if the object is added in a later run loop iteration, the problem is avoided.

This causes version pinning (simplified):

    let newObject = SomeObject()
    try! realm.write {
        realm.add(newObject, update: .all)
    }

This avoids it:

    let newObject = SomeObject()
    DispatchQueue.main.async {
        try! realm.write {
            realm.add(newObject, update: .all)
        }
    }

Is this the same issue you've found or another one?

The problematic case that I've found is when you're holding onto a Results which you don't read from after every write transaction.
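
A sketch of the pattern described here, assuming RealmSwift 5.x; PinComment and VersionPinningRepro are hypothetical. A Results is observed on the main thread but the callback never reads from it (much like the DAL observer earlier in the thread), while another thread keeps committing small write transactions. Per this thread, releases before 5.3.3 could leave versions pinned in this situation; pairing it with a low maximumNumberOfActiveVersions is one way to check whether a given release is affected.

```swift
import Foundation
import RealmSwift

// Hypothetical model.
class PinComment: Object {
    @objc dynamic var id = 0
    @objc dynamic var text = ""
    override static func primaryKey() -> String? { return "id" }
}

final class VersionPinningRepro {
    private var results: Results<PinComment>?
    private var token: NotificationToken?
    private(set) var updateCount = 0

    // Main thread (needs a run loop): observe the Results but never read it.
    func startObserving(config: Realm.Configuration) throws {
        let realm = try Realm(configuration: config)
        let observed = realm.objects(PinComment.self)
        results = observed
        token = observed.observe { [weak self] change in
            if case .update(_, _, _, _) = change {
                self?.updateCount += 1  // the collection itself is ignored
            }
        }
    }

    // Any other thread: many small write transactions.
    func performWrites(config: Realm.Configuration, count: Int) throws {
        try autoreleasepool {
            let realm = try Realm(configuration: config)
            for i in 0..<count {
                let comment = PinComment()
                comment.id = i
                comment.text = "comment \(i)"
                try realm.write {
                    realm.add(comment, update: .modified)
                }
            }
        }
    }

    func stop() {
        token?.invalidate()
        token = nil
        results = nil
    }
}
```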

@tgoyne version 5.3.3 has fixed the issue, thanks. I've verified this was the problem we were seeing by removing the changes in results_notifier.cpp which makes the problem reappear (although I don't really understand why my workaround worked).

v5.3.3 _did improve_ my app from _immediately crashing_ re: the Realm notification listener. Thank you.

I confirmed that setting up a listener to .observe objects while the DB is in the process of writing objects is what causes my crash.

Of course, now it's just a question of choosing the limit.

Setting config.maximumNumberOfActiveVersions = 3, is too low and crashes as before.

Setting config.maximumNumberOfActiveVersions = 64 though seems to hold up much better without the exception crash. 👍

I'll run some more longer tests on my iPad that syncs down all 40k+ objects to confirm its stability. 🤞

[Update: Aug. 11, 2020]

This seems resolved.

Confirmed no crash on RealmSwift (5.4.0)
