Ray: [tune] `hyperparam_mutation` values must be either

I am using the example at https://github.com/ray-project/ray/tree/master/python/ray/tune/examples/pbt_transformers

Ray version=0.8.7

Python version=3.7.7

transformers=3.0.2

When I run `pbt_transformers.py`, I get two errors:

First:

With the original code `ray.init(ray_address, log_to_driver=False)`, where `ray_address` is `None`, I get the following error:

```
2020-09-23 17:29:17,704 WARNING worker.py:1134 -- The dashboard on node dfc587d32eef failed with the following error:
Traceback (most recent call last):
  File "/opt/conda/lib/python3.7/site-packages/ray/dashboard/dashboard.py", line 961, in <module>
    dashboard.run()
  File "/opt/conda/lib/python3.7/site-packages/ray/dashboard/dashboard.py", line 576, in run
    aiohttp.web.run_app(self.app, host=self.host, port=self.port)
  File "/opt/conda/lib/python3.7/site-packages/aiohttp/web.py", line 433, in run_app
    reuse_port=reuse_port))
  File "/opt/conda/lib/python3.7/asyncio/base_events.py", line 587, in run_until_complete
    return future.result()
  File "/opt/conda/lib/python3.7/site-packages/aiohttp/web.py", line 359, in _run_app
    await site.start()
  File "/opt/conda/lib/python3.7/site-packages/aiohttp/web_runner.py", line 104, in start
    reuse_port=self._reuse_port)
  File "/opt/conda/lib/python3.7/asyncio/base_events.py", line 1389, in create_server
    % (sa, err.strerror.lower())) from None
OSError: [Errno 99] error while attempting to bind on address ('::1', 8265, 0, 0): cannot assign requested address
```

So I changed the code above to `ray.init(dashboard_host="127.0.0.1")` and the error disappeared. But why? How should I choose the dashboard host on my local machine?
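The traceback shows the dashboard failing to bind to the IPv6 loopback address `::1`. One way to check whether that address is usable in a given environment is to try binding a plain socket to it. The sketch below uses only the standard library; the helper name `can_bind` is hypothetical and not part of Ray, and it assumes the Errno 99 comes from IPv6 loopback being unavailable (for example, in a container with IPv6 disabled), which is a plausible reading of the error rather than a confirmed diagnosis.

```python
import socket


def can_bind(host: str, port: int = 0) -> bool:
    """Return True if a TCP socket can be bound to (host, port).

    Hypothetical diagnostic helper, not a Ray API. port=0 lets the OS pick
    any free port, so this tests whether the address itself is usable,
    not whether port 8265 is free.
    """
    # Assumption: a ':' in the host means an IPv6 literal such as '::1'.
    family = socket.AF_INET6 if ":" in host else socket.AF_INET
    try:
        # socket() itself raises OSError if the address family is unsupported.
        with socket.socket(family, socket.SOCK_STREAM) as sock:
            sock.bind((host, port))
        return True
    except OSError:
        return False


if __name__ == "__main__":
    for host in ("127.0.0.1", "::1"):
        print(host, "bindable:", can_bind(host))
```

If `::1` reports `False` while `127.0.0.1` reports `True`, passing an IPv4 address such as `dashboard_host="127.0.0.1"` to `ray.init` avoids the failing bind, which matches the workaround described in the question.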

Second:

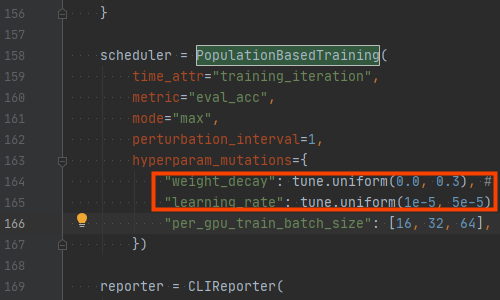

```
Traceback (most recent call last):
  File "pbt_transformers.py", line 252, in <module>
    ray_address=args.ray_address)
  File "pbt_transformers.py", line 166, in tune_transformer
    "per_gpu_train_batch_size": [16, 32, 64],
  File "/opt/conda/lib/python3.7/site-packages/ray/tune/schedulers/pbt.py", line 190, in __init__
    raise TypeError("`hyperparam_mutation` values must be either "
TypeError: `hyperparam_mutation` values must be either a List, Dict, or callable.
```

Looking at the code, it seems that `tune.uniform` returns a `sample_from` object, which does not satisfy the check "`hyperparam_mutation` values must be either a List, Dict, or callable." How can I avoid this error? Thanks a lot!
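The error message states the rule being enforced: each value in `hyperparam_mutations` must be a list, a dict, or a callable. Below is a minimal pure-Python sketch of that rule, assuming (as the traceback suggests) that the object returned by `tune.uniform` in Ray 0.8.7 is a wrapper holding a function rather than a callable itself. `FakeSampleFrom` and `validate_mutations` are hypothetical stand-ins for illustration, not Ray's actual classes.

```python
import random
from typing import Any, Callable, Dict


class FakeSampleFrom:
    """Hypothetical stand-in for the object tune.uniform() returned in
    Ray 0.8.7: it stores a sampling function but is not callable itself."""

    def __init__(self, func: Callable[..., Any]) -> None:
        self.func = func


def validate_mutations(mutations: Dict[str, Any]) -> None:
    """Mirror the rule quoted in the TypeError: every value must be a
    list, a dict, or a callable."""
    for key, value in mutations.items():
        if not (isinstance(value, (list, dict)) or callable(value)):
            raise TypeError(
                "`hyperparam_mutation` values must be either a List, Dict, "
                f"or callable (got {type(value).__name__} for {key!r})."
            )


# Fails the check: a wrapper object holding a function, not a function.
bad = {"weight_decay": FakeSampleFrom(lambda _: random.uniform(0.0, 0.3))}

# Passes the check: zero-argument lambdas and a plain list of choices.
good = {
    "weight_decay": lambda: random.uniform(0.0, 0.3),
    "learning_rate": lambda: random.uniform(1e-5, 5e-5),
    "per_gpu_train_batch_size": [16, 32, 64],
}

if __name__ == "__main__":
    validate_mutations(good)
    print("good: accepted")
    try:
        validate_mutations(bad)
    except TypeError as err:
        print("bad:", err)
```

Under this assumption, wrapping the sampling in a zero-argument lambda such as `lambda: random.uniform(0.0, 0.3)` is enough to pass a list/dict/callable check.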


For your first question:

@MrRace can you tell me more about the machines you're running on? And can you send the output of `lsof -n -i :8265`?

For your second question:

```python
import random

from ray import tune
from ray.tune.schedulers import PopulationBasedTraining


def trainable(config, checkpoint_dir=None):
    out = config['weight_decay'] \
        * config['learning_rate'] \
        * config['per_gpu_train_batch_size']
    # this is a useless trainable!
    # update this with your implementation.
    tune.report(metric=out)


search_space = {
    "weight_decay": tune.uniform(0.0, 0.3),
    "learning_rate": tune.uniform(1e-5, 5e-5),
    "per_gpu_train_batch_size": tune.choice((16, 32, 64)),
}

pbt = PopulationBasedTraining(
    hyperparam_mutations={
        "weight_decay": lambda: random.uniform(0.0, 0.3),
        "learning_rate": lambda: random.uniform(1e-5, 5e-5),
        "per_gpu_train_batch_size": [16, 32, 64],
    },
    # hyperparam_mutations={
    #     "weight_decay": tune.uniform(0.0, 0.3).func,
    #     "learning_rate": tune.uniform(1e-5, 5e-5).func,
    #     "per_gpu_train_batch_size": [16, 32, 64],
    # },
    metric='metric',
)

tune.run(
    trainable,
    config=search_space,
    num_samples=2,
    scheduler=pbt,
    resources_per_trial={'gpu': 1},
)
```

Both versions of the `hyperparam_mutations` kwarg should work as expected.

@richardliaw it might be convenient for users if we accepted `tune.sample_from` instances as values for `hyperparam_mutations` in 1.0.

@MrRace I hope this helped!

@MrRace Can you try upgrading Ray to the latest nightly wheels (https://docs.ray.io/en/master/installation.html#latest-snapshots-nightlies)? Both of these issues should be fixed in the latest wheels and will be included in the next 1.0 release.

If you want to continue using Ray 0.8.7, then your fix for the dashboard issue should work, and you should run the pbt_transformers.py example from the 0.8.7 release (https://github.com/ray-project/ray/blob/releases/0.8.7/python/ray/tune/examples/pbt_transformers/pbt_transformers.py) instead of the example from master.

Hope this helps!

Also just curious, what OS are you running on? Also are you using Docker?


Yes, I run the script in Docker; the OS inside the container is Debian buster/sid.

Got it. That's why the dashboard host was not working for you. More info can be found in this thread: https://github.com/ray-project/ray/issues/7084.

But we've recently fixed this issue (along with the hyperparam_mutation issue), so upgrading ray to the latest nightly wheels should work. Let me know how that goes!


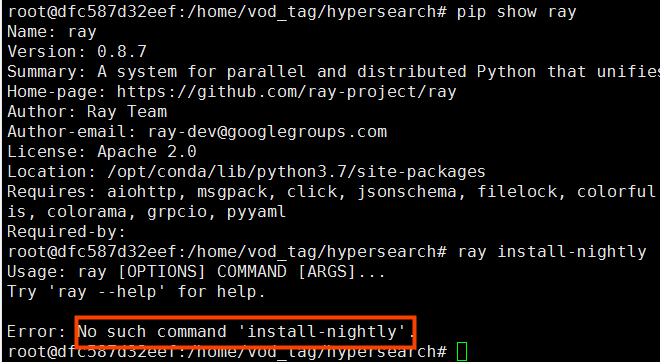

The command `ray install-nightly` doesn't seem to work.

Right, `install-nightly` was added recently and is not in Ray 0.8.7 😅. Can you try `pip install -U ray==1.0.0rc2`? This will install the release candidate for Ray 1.0.


Thanks a lot, it can work now in the master example.

Awesome!
