Ray: [autoscaler] Local Cluster with Docker: Check failed: !local_node_id_.IsNil() This node is disconnected.

What is your question?

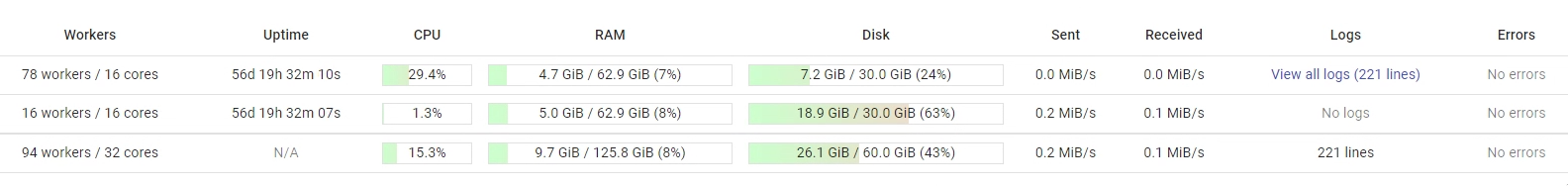

I've been trying to run a cluster on two hosts with Docker. After running ray up -y cluster.yml, I get the following situation after a few minutes:

The number of workers on the worker node keeps increasing by 16 (the number of cores), and the same error keeps popping up in the logs.

View all logs:

F0528 14:53:04.237076 138 service_based_accessor.cc:320] Check failed: !local_node_id_.IsNil() This node is disconnected.

*** Check failure stack trace: ***

@ 0x55bb8f0f676d google::LogMessage::Fail()

@ 0x55bb8f0f7bdc google::LogMessage::SendToLog()

@ 0x55bb8f0f6449 google::LogMessage::Flush()

@ 0x55bb8f0f6661 google::LogMessage::~LogMessage()

@ 0x55bb8edb7029 ray::RayLog::~RayLog()

@ 0x55bb8ec285fc ray::gcs::ServiceBasedNodeInfoAccessor::UnregisterSelf()

@ 0x55bb8eb37bd4 ray::raylet::Raylet::Stop()

@ 0x55bb8eb1c943 _ZZ4mainENKUlRKN5boost6system10error_codeEiE_clES3_i.isra.2014

@ 0x55bb8eb1cd58 _ZN5boost4asio6detail14signal_handlerIZ4mainEUlRKNS_6system10error_codeEiE_NS1_18io_object_executorINS0_8executorEEEE11do_completeEPvPNS1_19scheduler_operationES6_m

@ 0x55bb8f08841f boost::asio::detail::scheduler::do_run_one()

@ 0x55bb8f089921 boost::asio::detail::scheduler::run()

@ 0x55bb8f08a7c2 boost::asio::io_context::run()

@ 0x55bb8eb06669 main

@ 0x7fa7e5fd909b __libc_start_main

@ 0x55bb8eb17331 (unknown)

Relevant messages on logs/monitor.*

2020-05-28 14:52:26,124 WARNING autoscaler.py:536 -- StandardAutoscaler: host02: No heartbeat in 30.2060968875885s, restarting Ray to recover...

2020-05-28 14:53:01,399 WARNING autoscaler.py:536 -- StandardAutoscaler: host02: No heartbeat in 30.24538493156433s, restarting Ray to recover...

2020-05-28 14:53:36,620 WARNING autoscaler.py:536 -- StandardAutoscaler: host02: No heartbeat in 30.10585641860962s, restarting Ray to recover...

2020-05-28 14:54:11,807 WARNING autoscaler.py:536 -- StandardAutoscaler: host02: No heartbeat in 30.12926173210144s, restarting Ray to recover...

2020-05-28 14:54:46,926 WARNING autoscaler.py:536 -- StandardAutoscaler: host02: No heartbeat in 30.117576360702515s, restarting Ray to recover...

2020-05-28 14:55:22,223 WARNING autoscaler.py:536 -- StandardAutoscaler: host02: No heartbeat in 30.251209259033203s, restarting Ray to recover...

2020-05-28 14:55:57,461 WARNING autoscaler.py:536 -- StandardAutoscaler: host02: No heartbeat in 30.201629877090454s, restarting Ray to recover...

cluster.yml:

cluster_name: test
min_workers: 2
initial_workers: 2
max_workers: 2
docker:
    image: "python:3.8.3"
    container_name: "ray"
    run_options: ["--shm-size 25GB"]
provider:
    type: local
    head_ip: host01
    worker_ips: [host02]
auth:
    ssh_user: root
    ssh_private_key: ~/.ssh/id_rsa
head_start_ray_commands:
    - ray stop
    - ulimit -c unlimited && ray start --head --redis-port=6379 --redis-password='' --webui-host=0.0.0.0 --autoscaling-config=~/ray_bootstrap_config.yaml
worker_start_ray_commands:
    - ray stop
    - ray start --address=$RAY_HEAD_IP:6379 --redis-password=''

I wasn't able to detect any blocked ports by the firewall (using telnet, netstat and nc).
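For anyone repeating this check, the telnet/nc style reachability test can also be scripted. This is a minimal sketch, assuming a plain TCP connect is a good enough probe. The helper name `port_open` is illustrative and not part of Ray.

```python
import socket


def port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False
```

For example, `port_open("host01", 6379)` run from the worker would check the Redis port from the config above. An open Redis port only shows that Redis is reachable; it does not show that the other addresses Ray advertises are usable from the worker.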

Ray version: 0.8.5 and 0.9.0-dev

OS: Centos 7, kernel 4.4.96

Python version: 3.6

All 14 comments

@ijrsvt This could possibly be heartbeat lag. I have seen several cases where, under high CPU load, heartbeats start to lag and do not arrive on time, so nodes are marked as dead. @icaropires can you loosen the resource limits of your containers? That is, can you relax the memory and CPU constraints on the Docker containers a lot and see if the same error happens?

https://docs.docker.com/config/containers/resource_constraints/

Thanks for the response @rkooo567. But I don't think that's the problem, because I wasn't running any script (I've just run ray up -y cluster.yml) and I haven't set any constraints. Anyway, I've tried running with:

run_options: ["--shm-size 25GB --cpus=16 --memory=50g"]

and got the same behavior. It seems to me that the heartbeats are never reaching the head node.

@icaropires Thanks for the quick response. We will surely investigate it. Btw, is it urgent for you? Can you use some different solutions until we figure out the root cause (like running without Docker)?

@rkooo567 I was kind of testing something. But I'll figure it out, thanks for asking!

No problem! Please share your solution if you figure this out. We have tried to fix this problem because it happens to many users, but we have repeatedly failed to reproduce it ourselves!

@rkooo567 The problem is the one described in #8648! GcsServerAddress is being set to localhost on the head node, both inside and outside the container. I've had success with the following steps:

- ray start the head node

- connect to Redis using redis-cli and set GcsServerAddress to <my_ip>:<same port as before>

- ray start the other nodes

(I've tested inside containers, but it should work outside as well)
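The manual step above could be scripted roughly as follows. This is only a sketch of the workaround, not an official Ray mechanism. The helper name `override_gcs_host` is hypothetical, and `client` is assumed to be any object with Redis-style `get` and `set` methods (for example a redis-py client, which is an assumption here).

```python
def override_gcs_host(client, new_host, key="GcsServerAddress"):
    """Rewrite the host part of the stored GCS address, keeping the port.

    `client` only needs Redis-style get(key) and set(key, value) methods.
    """
    current = client.get(key)
    if current is None:
        raise KeyError(f"{key} is not set; start the head node first")
    if isinstance(current, bytes):
        # Redis clients commonly return raw bytes.
        current = current.decode()
    # Split on the last colon so only the port is carried over.
    _old_host, _, port = current.rpartition(":")
    updated = f"{new_host}:{port}"
    client.set(key, updated)
    return updated
```

With redis-py installed, a client could be created with something like `redis.Redis(host="<my_ip>", port=6379)` and passed in after the head node has started and before `ray start` is run on the other nodes.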

ray version: 0.8.6

I don't know why this is happening; in other environments it gets the correct IP.

Would you mind checking what GcsServerAddress was in Redis before you set it manually?

@rkooo567 No problem! It's 127.0.0.1:

_output from cat /tmp/ray/session_latest/logs/gcs_server.out:_

I0804 12:51:42.988648 27 27 redis_client.cc:141] RedisClient connected.

I0804 12:51:42.997473 27 27 redis_gcs_client.cc:88] RedisGcsClient Connected.

I0804 12:51:42.998004 27 27 gcs_redis_failure_detector.cc:29] Starting redis failure detector.

I0804 12:51:42.998190 27 27 gcs_actor_manager.cc:737] Loading initial data.

I0804 12:51:42.998234 27 27 gcs_object_manager.cc:270] Loading initial data.

I0804 12:51:42.998255 27 27 gcs_node_manager.cc:344] Loading initial data.

I0804 12:51:42.998407 27 27 gcs_actor_manager.cc:761] Finished loading initial data.

I0804 12:51:42.998421 27 27 gcs_object_manager.cc:285] Finished loading initial data.

I0804 12:51:42.998502 27 27 gcs_node_manager.cc:361] Finished loading initial data.

I0804 12:51:42.998709 27 27 grpc_server.cc:74] GcsServer server started, listening on port 33494.

I0804 12:51:43.106926 27 27 gcs_server.cc:217] Gcs server address = 127.0.0.1:33494

I0804 12:51:43.106974 27 27 gcs_server.cc:221] Finished setting gcs server address: 127.0.0.1:33494

I0804 12:51:43.221514 27 27 gcs_node_manager.cc:135] Registering node info, node id = 94ff5ed7b153cf383852da1001e32b69fa78e04b

I0804 12:51:43.221841 27 27 gcs_node_manager.cc:140] Finished registering node info, node id = 94ff5ed7b153cf383852da1001e32b69fa78e04b

_output from redis-cli_:

head:6379> get GcsServerAddress

"127.0.0.1:33494"

Really appreciate it! Would you mind trying one more thing? Could you run this code on the head node and see what the IP address is in this case?

import ray

ray.init(address='auto')

print(ray.services.get_node_ip_address())

The GCS server IP address is resolved in C++, and it seems that it sometimes fails to resolve the correct local IP address. (It first pings the Google DNS server; if that fails, it uses the asio resolver to get the local IP addresses. If both fail, the address is set to localhost, which is your case.) I'd like to check whether Python's IP resolution also fails when you run it inside Docker containers.
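To make the fallback chain described above concrete, here is a rough Python sketch of the same idea. It is not Ray's C++ implementation. The function names are illustrative, and the first strategy uses a UDP socket connect toward 8.8.8.8 (which sends no packet) in place of an actual ping.

```python
import socket


def ip_via_udp_probe(target=("8.8.8.8", 53), timeout=1.0):
    """Ask the OS which local address it would use to reach `target`."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as s:
        s.settimeout(timeout)
        s.connect(target)  # UDP connect only selects a route; nothing is sent
        return s.getsockname()[0]


def ip_via_hostname():
    """Fallback: resolve this machine's own hostname."""
    return socket.gethostbyname(socket.gethostname())


def resolve_node_ip(strategies=(ip_via_udp_probe, ip_via_hostname)):
    """Try each strategy in order; fall back to localhost if all fail."""
    for strategy in strategies:
        try:
            ip = strategy()
        except OSError:
            continue  # e.g. network unreachable or hostname not resolvable
        if ip and not ip.startswith("127."):
            return ip
    return "127.0.0.1"
```

If every strategy fails or returns only a loopback address, the result is 127.0.0.1, which matches the symptom reported in this thread.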

Sure! Running the commands:

Python 3.8.2 (default, Jul 16 2020, 14:00:26)

[GCC 9.3.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> import ray

>>> ray.init(address='auto')

WARNING: Logging before InitGoogleLogging() is written to STDERR

I0805 11:38:39.371309 611 611 global_state_accessor.cc:25] Redis server address = <my ip>:6379, is test flag = 0

I0805 11:38:39.372180 611 611 redis_client.cc:141] RedisClient connected.

I0805 11:38:39.380892 611 611 redis_gcs_client.cc:88] RedisGcsClient Connected.

I0805 11:38:39.381640 611 611 service_based_gcs_client.cc:75] ServiceBasedGcsClient Connected.

{'node_ip_address': '<my ip>', 'raylet_ip_address': '<my ip>', 'redis_address': '<my ip>:6379', 'object_store_address': '/tmp/ray/session_2020-08-05_11-37-59_349964_327/sockets/plasma_store', 'raylet_socket_name': '/tmp/ray/session_2020-08-05_11-37-59_349964_327/sockets/raylet', 'webui_url': 'localhost:8265', 'session_dir': '/tmp/ray/session_2020-08-05_11-37-59_349964_327'}

>>> print(ray.services.get_node_ip_address())

<my ip>

I've only replaced my real IP with <my ip> for security reasons; it's always the same address and it is not 127.0.0.1.

Some more information:

- Regarding "it first pings Google DNS server": ping 8.8.8.8 works from my head node :thinking:

- This "GcsServerAddress as localhost" behaviour is happening outside the Docker containers too!

- In ray version 0.8.6, when opening the dashboard the behaviour is that described in #8805

- In ray version 0.8.5, when not using autoscaler, no problems can be seen in dashboard, but only the head node resources are used

I'll be happy to provide any more details if needed :smiley:

@ijrsvt I think the issue is that the GcsServerAddress timeout is too short in the C++ code. I can probably push a PR and see if it fixes the issue. If you have any other thoughts, please let me know!

@icaropires Would you mind downloading the latest Ray and testing whether my fix solves the problem, sometime around next week?

@rkooo567 No problem, just let me know. If I get some time, I'll also try to investigate

I think I was able to solve it. I will update #10004 with the checks and this issue with more details as soon as possible.

@rkooo567 I tried increasing the timeout to about 2s, but I got the same behaviour even though my ping response time to 8.8.8.8 is less than 100ms.

So I invested in fixing the fallback option, the local resolve, and implemented the solution in #10004.

But I guess that even if it suggests the right IP most of the time, there may be situations where it is best to let the user specify GcsServerAddress (if there is more than one valid IP, for example), probably by passing a flag on the CLI.
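As an illustration of that last point, the selection logic could look something like the sketch below. This is only an assumption about how such an option might behave, not an existing Ray flag or API. The function name `choose_gcs_host` and the `explicit` parameter are hypothetical.

```python
import ipaddress


def choose_gcs_host(candidates, explicit=None):
    """Pick the address to advertise, preferring a user-supplied one.

    `candidates` are auto-detected IP strings; `explicit` stands in for a
    value the user might pass on the command line (hypothetical).
    """
    if explicit is not None:
        ipaddress.ip_address(explicit)  # raises ValueError if malformed
        return explicit
    usable = [ip for ip in candidates
              if not ipaddress.ip_address(ip).is_loopback]
    if len(usable) == 1:
        return usable[0]
    if not usable:
        raise ValueError("no non-loopback address found; pass one explicitly")
    raise ValueError(f"ambiguous addresses {usable}; pass one explicitly")
```

Failing loudly on zero or several candidates, instead of silently falling back to localhost, would have surfaced the problem in this issue at startup.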