Ray: [tune] The status message (progress reporter) info is misleading for PBT scheduler

System information

- OS Platform and Distribution (e.g., Linux Ubuntu 16.04): Mac OS

- Ray installed from (source or binary): source

- Ray version: 0.8.0.dev6 (master branch)

- Python version: 3.6

- Exact command to reproduce: Train a model with PBT

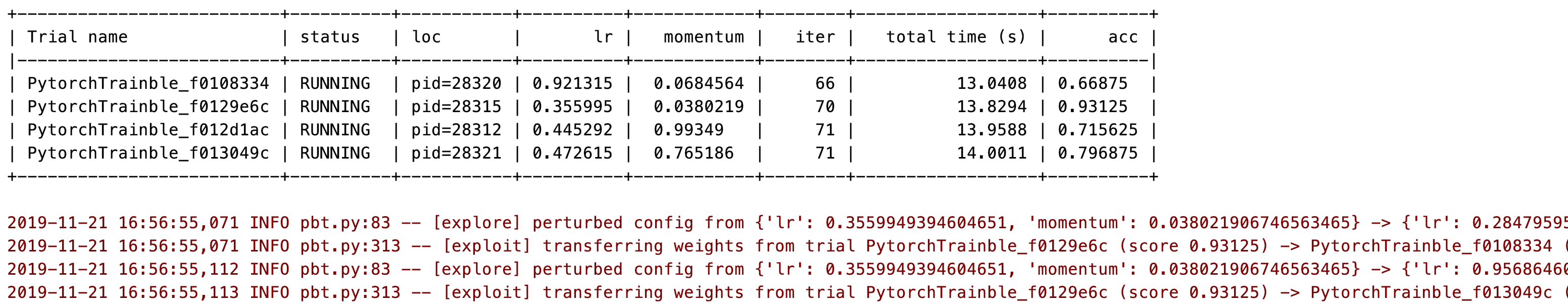

The (hyper)parameters in the status console output are not updated as PBT perturbs the config.

The parameters in the table are only the initial parameters and are no longer useful. IMO we can either change the existing columns or add a column to reflect the latest config.

All 11 comments

Just want to check whether other users consider this an issue, and also to collect suggestions.

E.g.

- overwrite the columns with the updated config parameters.

- rename the old config columns with an "init_" prefix, and add new columns for the latest config.
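The two layouts can be compared with a small sketch. The helper `status_row` and its `keep_initial` flag are hypothetical and not part of Tune's reporter; the sketch only shows which columns each option would produce for one trial.

```python
def status_row(initial_config, latest_config, keep_initial=False):
    """Build one status-table row as a dict of column name -> value.

    Hypothetical helper for illustration; not part of Ray Tune.
    """
    if not keep_initial:
        # Option 1: overwrite the config columns with the latest values.
        return dict(latest_config)
    # Option 2: keep the starting values under an "init_" prefix and
    # add columns holding the latest (perturbed) values.
    row = {"init_" + name: value for name, value in initial_config.items()}
    row.update(latest_config)
    return row


initial = {"lr": 0.01, "momentum": 0.9}
perturbed = {"lr": 0.012, "momentum": 0.99}

print(status_row(initial, perturbed))
print(status_row(initial, perturbed, keep_initial=True))
```

The second option doubles the number of config columns, which may matter for wide search spaces.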

It used to show the perturbed values in the trial name I think. @ujvl is this a regression?

Agree that showing the new values makes sense.

I'm not familiar with how PBT does the perturbation, but my guess is that it perturbs the copy of the config on the Trial without updating the copy on the Trainable. That would explain the issue, since we currently use the last_result's config to print the current parameters. IMO the last_result should contain the perturbed parameters.

But the easy fix for the output is to just make the reporter use trial.config. I can create a PR for that tomorrow.
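A minimal sketch of that reporter-side difference, assuming only what is described above: a trial object with a `config` and a `last_result` that carries its own `"config"` entry. The function `displayed_params` and the stand-in trial are hypothetical, not Tune's reporter code.

```python
from types import SimpleNamespace


def displayed_params(trial, use_trial_config):
    """Return the parameters a reporter would print for one trial.

    Hypothetical helper; `trial` only needs `config` and `last_result`.
    """
    if use_trial_config:
        return trial.config
    # Fall back to the trial's own config until a first result is reported.
    return trial.last_result.get("config", trial.config)


# Stand-in trial: the scheduler has perturbed trial.config, but the last
# result still carries the config the trainable started with.
trial = SimpleNamespace(
    config={"lr": 0.5, "momentum": 0.8},
    last_result={"mean_accuracy": 0.91, "config": {"lr": 0.1, "momentum": 0.9}},
)

print(displayed_params(trial, use_trial_config=False))
print(displayed_params(trial, use_trial_config=True))
```

Reading from `trial.config` only changes what is printed; the config stored in each result would still be stale.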

Ah, we should make the last_result return the right parameters. The result["config"] should reflect the configuration used by that step (or else there's no point in returning a step-wise config :) )

Thanks for the feedback. My original thought was to update the last_result config, but I was not sure whether the original config is used somewhere.

The trial name could be too long with the (perturbed) config; I'm not sure if it's necessary.

Hi @hhbyyh, are you sure the status console output is not updated as PBT perturbs config? In your screenshot, it looks like the config is being updated _after_ the last result. Therefore, it should be the case upon the next result that the new config is shown. Can you check if that's the case?

> The trial name could be too long with the (perturbed) config; I'm not sure if it's necessary.

Agree it is too long to include.

Hi @richardliaw, here's the code to repro:

    from __future__ import absolute_import
    from __future__ import division
    from __future__ import print_function

    # import tensorflow as tf
    import os

    import numpy as np
    import torch
    import torch.optim as optim
    from torchvision import datasets

    from ray.tune.examples.mnist_pytorch import train, test, ConvNet, get_data_loaders

    import ray
    from ray import tune
    from ray.tune.schedulers import PopulationBasedTraining
    from ray.tune.util import validate_save_restore


    class PytorchTrainble(tune.Trainable):
        def _setup(self, config):
            self.device = torch.device("cpu")
            self.train_loader, self.test_loader = get_data_loaders()
            self.model = ConvNet().to(self.device)
            self.optimizer = optim.SGD(
                self.model.parameters(),
                lr=config.get("lr", 0.01),
                momentum=config.get("momentum", 0.9))

        def _train(self):
            train(self.model, self.optimizer, self.train_loader, device=self.device)
            acc = test(self.model, self.test_loader, self.device)
            return {"mean_accuracy": acc}

        def _save(self, checkpoint_dir):
            checkpoint_path = os.path.join(checkpoint_dir, "model.pth")
            torch.save(self.model.state_dict(), checkpoint_path)
            return checkpoint_path

        def _restore(self, checkpoint_path):
            self.model.load_state_dict(torch.load(checkpoint_path))

        def reset_config(self, new_config):
            del self.optimizer
            self.optimizer = optim.SGD(
                self.model.parameters(),
                lr=new_config.get("lr", 0.01),
                momentum=new_config.get("momentum", 0.9))
            return True


    ray.init()
    datasets.MNIST("~/data", train=True, download=True)

    validate_save_restore(PytorchTrainble)
    validate_save_restore(PytorchTrainble, use_object_store=True)
    print("Success!")

    scheduler = PopulationBasedTraining(
        time_attr="training_iteration",
        metric="mean_accuracy",
        mode="max",
        perturbation_interval=5,
        hyperparam_mutations={
            # distribution for resampling
            "lr": lambda: np.random.uniform(0.0001, 1),
            # allow perturbations within this set of categorical values
            "momentum": [0.8, 0.9, 0.99],
        })

    analysis = tune.run(
        PytorchTrainble,
        name="pbt_test",
        scheduler=scheduler,
        reuse_actors=True,
        verbose=1,
        stop={
            "training_iteration": 200,
        },
        num_samples=4,
        checkpoint_at_end=True,
        # PBT starts by training many neural networks in parallel with random hyperparameters.
        config={
            "lr": tune.uniform(0.001, 1),
            "momentum": tune.uniform(0.001, 1),
        })

Meanwhile, I did see the status table config being updated in another experiment.

Oh, I think it's that you need to update self.config in reset_config
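A minimal sketch of that fix, written so it runs without Ray or PyTorch. `StandInTrainable` is a hypothetical stand-in for `tune.Trainable`, and the assumption that each result carries the trainable's `self.config` is taken from the discussion above rather than from Tune's source. In the repro, the corresponding change would be adding `self.config = new_config` to `reset_config`.

```python
class StandInTrainable:
    """Hypothetical stand-in for tune.Trainable so the sketch runs without Ray."""

    def __init__(self, config):
        self.config = config
        self._setup(config)

    def train(self):
        result = self._train()
        # Assumption taken from the thread: each result carries the
        # trainable's own self.config, which the status table then prints.
        result["config"] = self.config
        return result


class SketchTrainable(StandInTrainable):
    def _setup(self, config):
        # Plain attributes stand in for the SGD optimizer of the repro.
        self.lr = config.get("lr", 0.01)
        self.momentum = config.get("momentum", 0.9)

    def _train(self):
        return {"mean_accuracy": 0.0}  # placeholder metric, no real training

    def reset_config(self, new_config):
        self.lr = new_config.get("lr", 0.01)
        self.momentum = new_config.get("momentum", 0.9)
        # The line missing from the repro: without it, results keep
        # reporting the initial config after a perturbation.
        self.config = new_config
        return True


trainable = SketchTrainable({"lr": 0.1, "momentum": 0.9})
trainable.reset_config({"lr": 0.5, "momentum": 0.8})
print(trainable.train()["config"])
```

With `reuse_actors=True`, as in the repro, the trainable is reconfigured through `reset_config` rather than rebuilt, which is why the stale `self.config` shows up in the status table.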

Ah, my problem. But I'll also need to update the examples and maybe the docs.

Closing the issue now. Thanks for the comments.
