Rasa: Error Training NLU model with SklearnIntentClassifier

I get the following error when I try to train an NLU model with SklearnIntentClassifier:

2020-07-20 14:55:36 INFO rasa.nlu.model - Finished training component.

2020-07-20 14:55:36 INFO rasa.nlu.model - Starting to train component SklearnIntentClassifier

Fitting 2 folds for each of 6 candidates, totalling 12 fits

[Parallel(n_jobs=1)]: Using backend SequentialBackend with 1 concurrent workers.

[Parallel(n_jobs=1)]: Done 12 out of 12 | elapsed: 0.0s finished

Traceback (most recent call last):

File "anaconda3\envs\rasa-venv\lib\runpy.py", line 193, in _run_module_as_main

"__main__", mod_spec)

File "anaconda3\envs\rasa-venv\lib\runpy.py", line 85, in _run_code

exec(code, run_globals)

File "Anaconda3\envs\rasa-venv\Scripts\rasa.exe\__main__.py", line 7, in <module>

File "anaconda3\envs\rasa-venv\lib\site-packages\rasa\__main__.py", line 92, in main

cmdline_arguments.func(cmdline_arguments)

File "anaconda3\envs\rasa-venv\lib\site-packages\rasa\cli\train.py", line 140, in train_nlu

persist_nlu_training_data=args.persist_nlu_data,

File "anaconda3\envs\rasa-venv\lib\site-packages\rasa\train.py", line 414, in train_nlu

persist_nlu_training_data,

File "anaconda3\envs\rasa-venv\lib\asyncio\base_events.py", line 587, in run_until_complete

return future.result()

File "anaconda3\envs\rasa-venv\lib\site-packages\rasa\train.py", line 453, in _train_nlu_async

persist_nlu_training_data=persist_nlu_training_data,

File "anaconda3\envs\rasa-venv\lib\site-packages\rasa\train.py", line 482, in _train_nlu_with_validated_data

persist_nlu_training_data=persist_nlu_training_data,

File "anaconda3\envs\rasa-venv\lib\site-packages\rasa\nlu\train.py", line 90, in train

interpreter = trainer.train(training_data, **kwargs)

File "anaconda3\envs\rasa-venv\lib\site-packages\rasa\nlu\model.py", line 191, in train

updates = component.train(working_data, self.config, **context)

File "anaconda3\envs\rasa-venv\lib\site-packages\rasa\nlu\classifiers\sklearn_intent_classifier.py", line 125, in train

self.clf.fit(X, y)

File "anaconda3\envs\rasa-venv\lib\site-packages\sklearn\model_selection\_search.py", line 739, in fit

self.best_estimator_.fit(X, y, **fit_params)

File "anaconda3\envs\rasa-venv\lib\site-packages\sklearn\svm\_base.py", line 148, in fit

accept_large_sparse=False)

File "anaconda3\envs\rasa-venv\lib\site-packages\sklearn\utils\validation.py", line 755, in check_X_y

estimator=estimator)

File "anaconda3\envs\rasa-venv\lib\site-packages\sklearn\utils\validation.py", line 578, in check_array

allow_nan=force_all_finite == 'allow-nan')

File "anaconda3\envs\rasa-venv\lib\site-packages\sklearn\utils\validation.py", line 60, in _assert_all_finite

msg_dtype if msg_dtype is not None else X.dtype)

ValueError: Input contains NaN, infinity or a value too large for dtype('float64').

I’m using the latest version of rasa (1.10.8) and scikit-learn 0.22.2.post1 in a virtual environment with Python 3.7.

All 17 comments

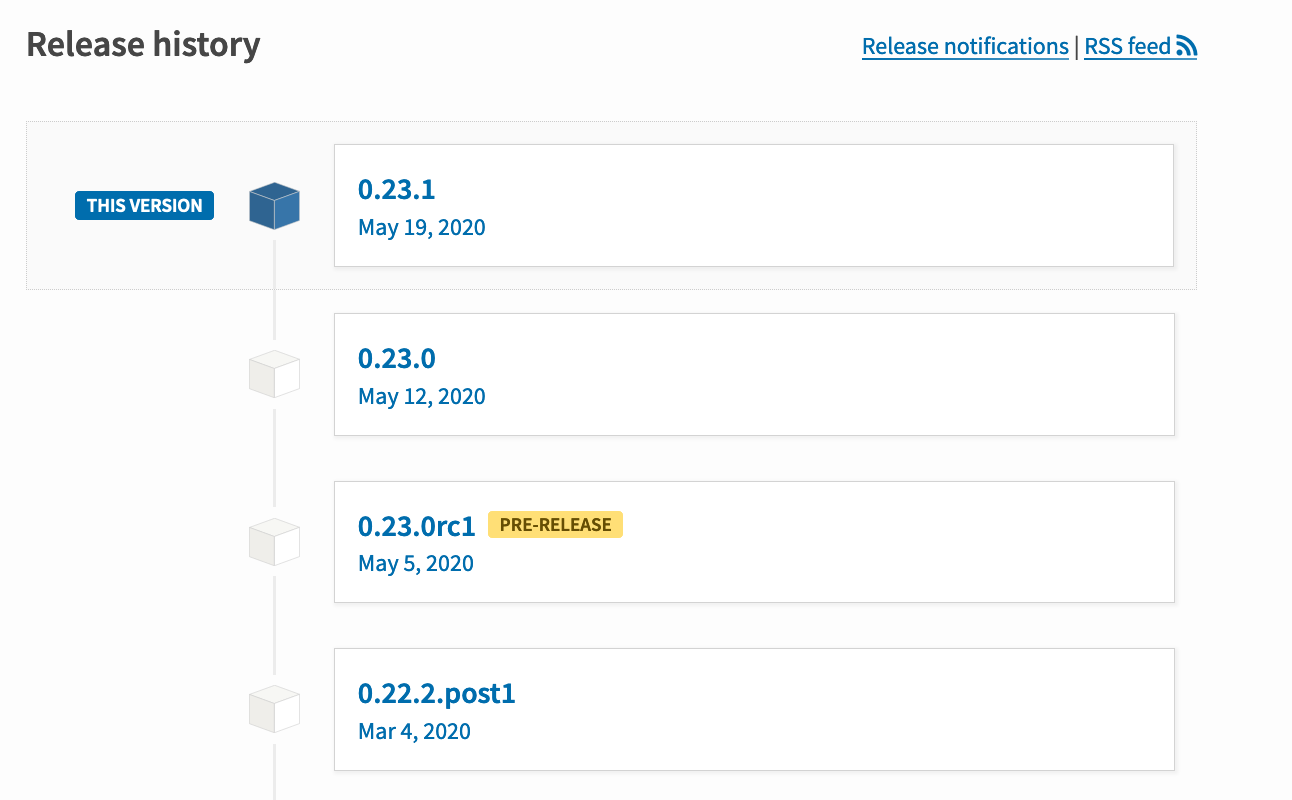

Mhm. I've just checked the release history of scikit-learn on PyPI.

I can't directly link it to your error message, but just for good measure, could you install the most recent version? My gut feeling is that the 0.22.2.post1 version might be a shady release. Sometimes these in-between versions are not stable.

If that doesn't help, could you share your config.yml file as well?

First of all, thanks for your answer. Even with a more recent version of scikit-learn, the problem persists.

The configuration file is as follows:

# Configuration for Rasa NLU.

# https://rasa.com/docs/rasa/nlu/components/

language: it_core_news_sm

pipeline:

- name: "SpacyNLP"

- name: "SpacyTokenizer"

- name: "SpacyFeaturizer"

- name: "RegexFeaturizer"

- name: "CRFEntityExtractor"

- name: "EntitySynonymMapper"

- name: "SklearnIntentClassifier"

# Configuration for Rasa Core.

# https://rasa.com/docs/rasa/core/policies/

policies:

- name: "KerasPolicy"

- name: "FallbackPolicy"

- name: "MemoizationPolicy"

- name: "FormPolicy"

In that case it seems like the scikit-learn model is receiving bad values. I'm wondering what might be causing it. It also seems like the pooling setting for the SpacyFeaturizer is missing. Could you try adding that?

Another thing I'm wondering: is there any reason why you're not using DIET?

Even after adding the pooling setting (e.g. "pooling": "mean") to the SpacyFeaturizer component, the problem persists.
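For context, the pooling setting decides how the per-token vectors are collapsed into a single sentence vector, with "mean" and "max" as the documented options. The sketch below illustrates that idea in plain Python. The `pool` helper is hypothetical and is not Rasa's implementation; it only shows what the two operations compute.

```python
from typing import List


def pool(token_vectors: List[List[float]], pooling: str = "mean") -> List[float]:
    """Collapse per-token vectors into one sentence vector (hypothetical helper)."""
    if not token_vectors:
        raise ValueError("cannot pool an empty list of token vectors")
    # Transpose so that each tuple holds one dimension across all tokens.
    columns = list(zip(*token_vectors))
    if pooling == "mean":
        return [sum(col) / len(col) for col in columns]
    if pooling == "max":
        return [max(col) for col in columns]
    raise ValueError(f"unknown pooling operation: {pooling}")


# Two tokens, each with a 2-dimensional vector.
tokens = [[1.0, 4.0], [3.0, 0.0]]
print(pool(tokens, "mean"))  # [2.0, 2.0]
print(pool(tokens, "max"))   # [3.0, 4.0]
```

Neither operation can repair a token vector that already contains NaN, which would be consistent with the setting making no difference here.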

For my purposes I can also use another pipeline, but since I encountered this problem it seemed right to report it.

For example, training succeeds when I specify the following pipeline in the configuration file:

pipeline:

- name: "SpacyNLP"

- name: "SpacyTokenizer"

- name: "SpacyFeaturizer"

- name: "RegexFeaturizer"

- name: "LexicalSyntacticFeaturizer"

- name: "CountVectorsFeaturizer"

- name: "CountVectorsFeaturizer"

analyzer: "char_wb"

min_ngram: 3

max_ngram: 5

- name: "DIETClassifier"

- name: EntitySynonymMapper

- name: ResponseSelector

epochs: 100

Hi @koaning @cicciob95,

I have the same problem. Do you have a solution, please?

Here is my config.yml:

language: "fr"

pipeline:

- name: "SpacyNLP"

model: "fr_core_news_sm"

- name: "SpacyTokenizer"

- name: SpacyFeaturizer

pooling: mean

- name: "SklearnIntentClassifier"

I’m using the latest rasa (1.10.11) and scikit-learn 0.22 with Python 3.6.

(I can't upgrade scikit-learn to the latest version because rasa 1.10.11 requires scikit-learn<0.23,>=0.22.)

Thanks in advance.

I'm wondering if there's NaN generated by the spaCy features. That does seem to be what it is complaining about.
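One way to test that suspicion is to scan the feature matrix for non-finite values before it reaches the classifier. The sketch below uses only the standard library. `non_finite_rows` is a hypothetical helper, and how you obtain the feature matrix from your pipeline is not shown and will depend on your Rasa version.

```python
import math
from typing import Dict, List, Sequence


def non_finite_rows(features: Sequence[Sequence[float]]) -> Dict[int, List[int]]:
    """Map each row index to the column indices that hold NaN or +/-inf."""
    bad: Dict[int, List[int]] = {}
    for i, row in enumerate(features):
        cols = [j for j, value in enumerate(row) if not math.isfinite(value)]
        if cols:
            bad[i] = cols
    return bad


# Toy matrix: row 1 contains NaN, row 2 contains infinity.
X = [[0.1, 0.2], [float("nan"), 0.3], [0.5, float("inf")]]
print(non_finite_rows(X))  # {1: [0], 2: [1]}
```

The row indices point back to the training examples whose features are broken. If the features are already a NumPy array, `np.isfinite(X).all()` gives the same yes/no answer in one call.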

@koaning thanks for your response.

It works for me when I change fr_core_news_sm to fr_core_news_md, fr_core_news_lg, or the BERT-based model bert-base-multilingual-cased :)

@cicciob95 would you upgrade to an md model?

Hi @koaning, could I ask you some more questions about rasa, please?

I'm fairly new to rasa and my understanding of this powerful framework is probably limited :)

I saw that one advantage of DIETClassifier is that the transformer part can learn from a given corpus for both intent classification and NER. I have a few questions:

- If we use it only for intent classification, is it still a good choice? I suppose we would need to build a much bigger corpus in that case. How many examples per intent would be enough?

- If we use a text generation utility (e.g. chatito) to augment the corpus, the sentence formats will be limited. Is that a good choice?

- In our case, we need to classify between almost 40 categories. Is that too many? Do we need to reduce the number of classes (for example, by converting similar intents to intent + entity)?

- Other than DIETClassifier and SVM (SklearnIntentClassifier), do you have other recommendations for intent classification?

It would be very helpful to have your responses. Thanks in advance.

In the future, it might be better to ask this question on our forum (you can still tag me there @koaning too). I'll try to answer your question here for now though.

A transformer can still benefit you even if you're only interested in classifying intents. The transformer can be seen as a filter that adds context. The context of the entire sentence can add information to a word vector (super useful for entities), but it also works the other way around: the presence of certain words can strongly influence the representation of the entire sentence. That sentence representation is often referred to as the __CLS__ token.

I'll try to make this more plausible with an example. Take the sentence:

I am at the city bank.

The representation for this sentence probably has to do with a location and a building.

I am at the river bank.

The word "river" should influence the embedding for the sentence a lot. We're no longer talking about buildings. We're probably in nature somewhere.

I hope this example at least makes it plausible why DIET can still be useful even for just intents.

Now, on the topic of improving intent scores: I'd be somewhat surprised if switching to another classifier helped you. This is partly because, empirically, I keep seeing that DIET performs well, and partly because of the nature of the problem that you might have.

You can help the classifier by giving it extra features. Are you building something in English (if so, add ConveRT) or Spanish (consider spaCy)? Also, are your intents similar? Do they represent FAQ questions that are related? If so, you might want to investigate our response selector.

I'd be careful with text generation tools if they are based on rules. The synthetic datasets might become too artificial and there's a risk that DIET will overfit on the artificial patterns rather than the linguistic meaning.

I wouldn't be too worried about 40 categories. I might be more worried about the experience of the digital assistant. Has anybody talked to it yet? The main reason I ask is this:

It may just be that you're predicting intents very accurately but that you've got a lot of intents that the user is not interested in. There's also a lot of conversational design that typically needs to happen when you design a good conversational interface and I'd typically try to get started with this as soon as possible.

@koaning really appreciate your help.

In fact, I'm building the system in French. As for the featurizer, I'm currently trying:

- spaCy (sm / md / lg)

- multilingual bert

- camembert (according to https://forum.rasa.com/t/rasa-and-camembert/31882)

I'm also using a CountVectorsFeaturizer after the pre-trained models to capture words specific to my corpus, because the pre-trained models are frozen during Rasa training (according to https://blog.rasa.com/how-to-benchmark-bert/). Still, I'm curious why adding a bag-of-words model after word embeddings works. Could you please explain a little about this?

Thanks for the other advice, it is really inspiring :)

I also have another project called Rasa NLU examples. If you're really looking to try out all the options out there, you can also try FastText and BytePair embeddings. We also feature gensim as a method of training your own word embeddings. In my experience so far, those backends don't seem to be much better than spaCy, but if you're interested, you're free to try.

One thing, again: getting the assistant in front of actual users is MoreImportant[tm] than what I've just suggested.

The CountVectorizer doesn't just do bag of words. It also does bag of character n-grams. This is super important in the real world, where spelling errors might arise (which happens a lot in chatbot-land).
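To make the spelling-error point concrete, here is a rough sketch of character n-grams taken inside word boundaries, using the same 3 to 5 range as the char_wb configuration earlier in this thread. `char_wb_ngrams` is a hypothetical helper that only approximates scikit-learn's `char_wb` analyzer (it skips words shorter than the n-gram size, for instance), so treat it as an illustration rather than the library's exact behaviour.

```python
from typing import Set


def char_wb_ngrams(text: str, min_n: int = 3, max_n: int = 5) -> Set[str]:
    """Character n-grams within word boundaries; each word is padded with a space."""
    grams: Set[str] = set()
    for word in text.lower().split():
        padded = f" {word} "
        for n in range(min_n, max_n + 1):
            for start in range(len(padded) - n + 1):
                grams.add(padded[start:start + n])
    return grams


correct = char_wb_ngrams("appointment")
typo = char_wb_ngrams("apointment")  # one "p" missing

# As whole words the two strings share nothing...
print({"appointment"} & {"apointment"})  # set()

# ...but many character n-grams survive the typo.
shared = correct & typo
print(" ap" in shared, "poi" in shared, "ent" in shared)  # True True True
```

A word-level bag of words treats the misspelling as an unseen token, while the character n-gram features still overlap heavily with the correctly spelled word.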

I'll be closing this issue because the cause of the issue seems known now.