Rasa: Refactor Agent / Processor / TrackerStore

The Problem

- the

Agentclass is big and hard to maintain - new types of models are hard to implement (e.g. the response selector)

Goals

- Make it easy to implement different / new types of models without having to squeeze it into the existing code

- De-couple our classes to make Rasa Open Source more modular and easier adapt to change

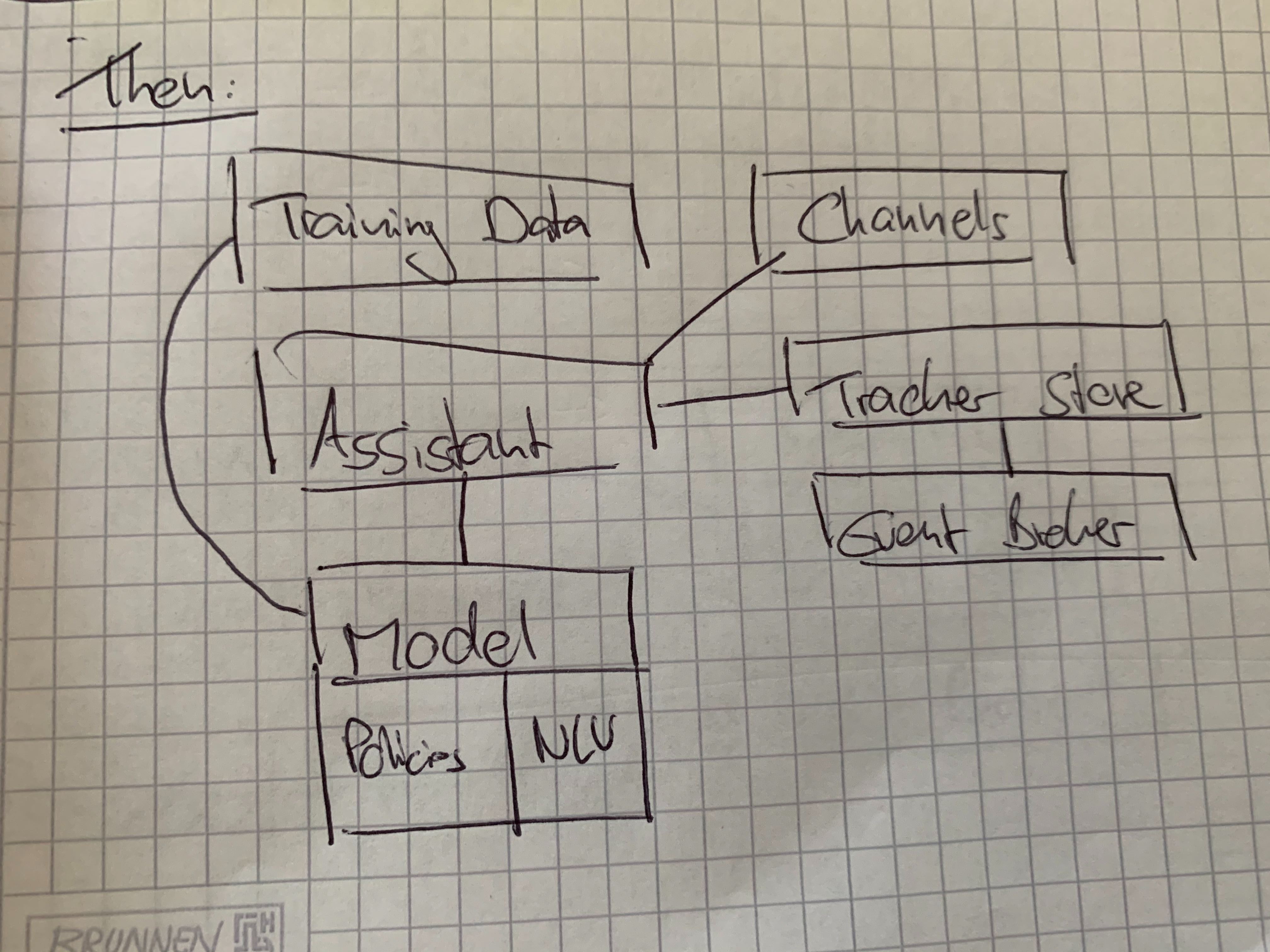

Agent:

- separate model loading logic from class

- separate training from agent class

- remove duplicate code between

Agent/Processor

TrackerStore: Separate it from the model specific domain (theTrackerStoreshould not know of the model domain)- make this not breaking with the existing Rasa Open Source models

Implementation Proposal

- This is about adding a layer before the actual model implementations. So we would e.g. have to adapt our

Processorclass to theModelinterface but could use this then as before

UML Diagrams:

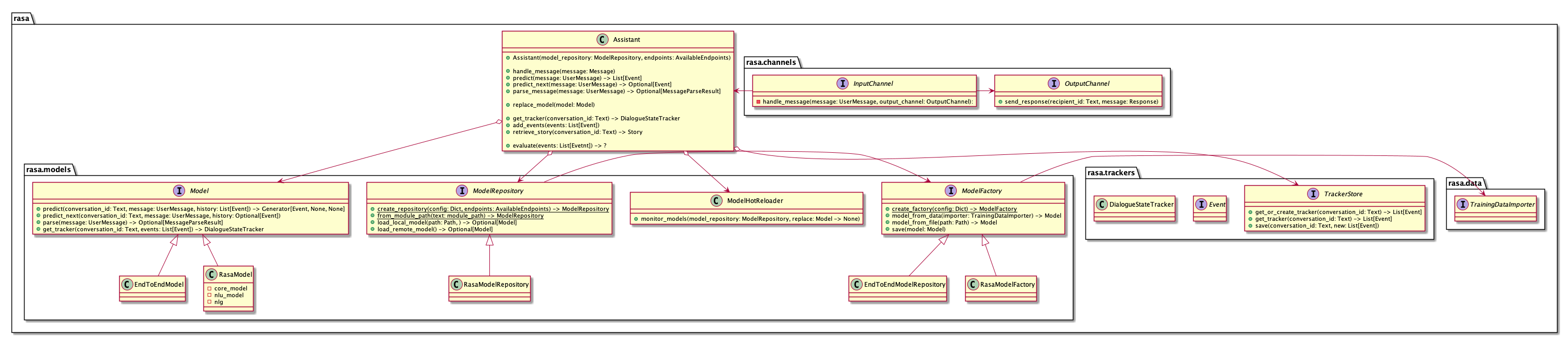

Class hierarchy

Running a training

This UML diagram how the workflow is when running rasa train

It shows how

- a model is trained using a

TrainingDataImporter - the

ModelFactoryis selected based on a default value / config

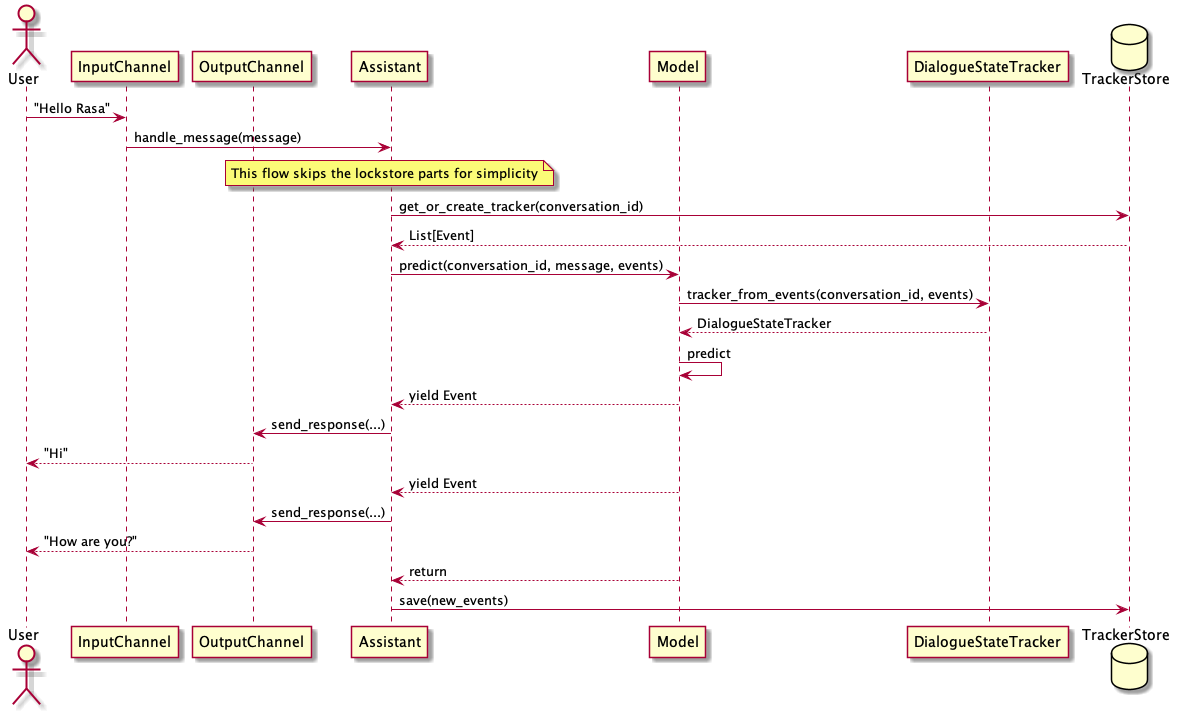

Inference Mode / Interacting with a running Rasa server

It shows how

- the interaction works with the changed

TrackerStore(returns a list of events instead of a DialogueStateTracker - getting response from a model which is behind a

Modelinterface

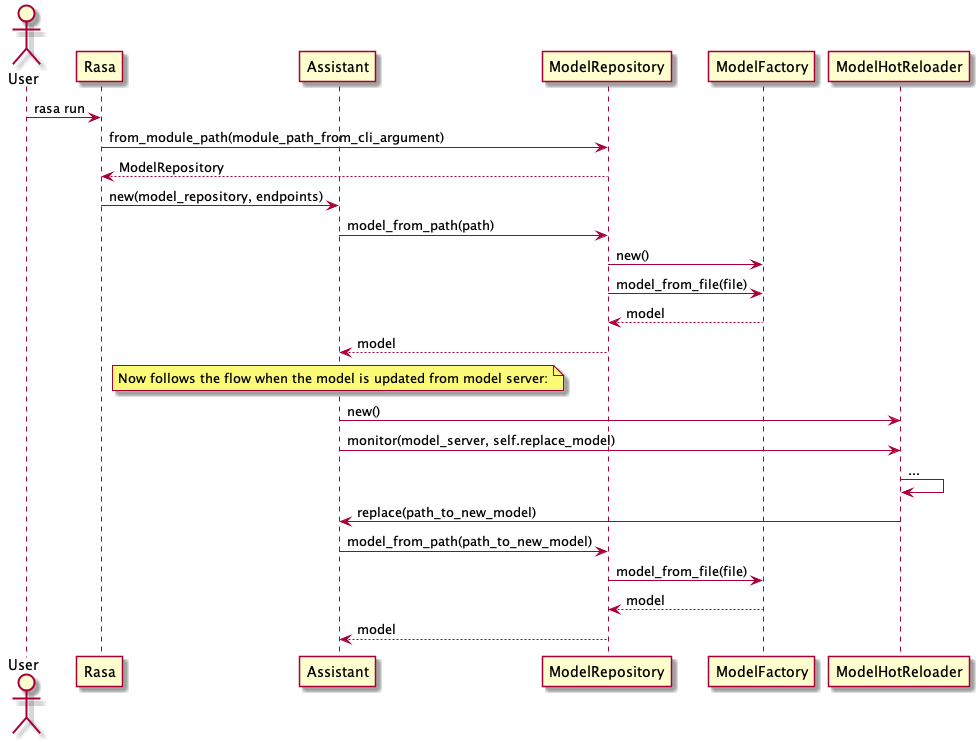

Starting a Rasa Server and loading a model

This shows how:

- the right repository / factory classes are selected to load the desired model type

- a model is loaded from disk

- how models are re-loaded when using a

ModelServer(that's the most vague part I'd say)

All 11 comments

This looks very interesting and I want to understand it better - would you mind adding a very short description of what each UML diagram describes? (just to understand their context better)

@federicotdn Done. Do you have any specific questions which I should clarify?

How does this simplify e.g. the implementation of the response selector?

A couple of questions:

- This means that you would like to merge

AgentandProcessorinto a new class,Assistant, right? Asking because it's not explicitly mentioned. - I like the idea of adding a

ModelFactory. It would separate the logic of creating a model (which is relatively complex, if training is involved) with actually using the model. However, I don't understand whyModelFactoryis in charge of saving the models to files. That sounds like it could go either inModelitself (like it is now), or maybeModelFactoryshould be renamed to represent the fact that it has more responsibilities than just loading models. - Why do you think

TrackerStore.get_or_create_trackershould return a list of events, rather than aDialogueStateTrackeritself? Isn't the latter a better way of representing a series of conversation events? - I couldn't understand the objective of having the

ModelRepositoryclass. In the last UML diagram, it looks like it is delegating the model loading toModelFactory. - Can we rename

DialogueStateTrackertoTracker? (and then addDialogueStateTracker = Trackerbelow the class declaration 🤠 )

How does this simplify e.g. the implementation of the response selector?

Good question. If we would have a structure as proposed then you would approach implementing the feature less from a "how can I fit this in" but rather a "where does this belong" perspective. To be honest I know too less about the difficulties of the response selector implementation to propose how I would have done it given the proposed changes.

An easier example is maybe implementing an end-to-end model. You could simply create a new Model subclass and start coding (while re-using what you need from the existing modules). Both models could live side by side until we decide that we are ready too switch completely.

This means that you would like to merge Agent and Processor into a new class, Assistant, right? Asking because it's not explicitly mentioned.

I wouldn't say merging. Actually it would be more separating them since they are quite entangled at the moment. I guess that a lot of Processor logic would go into RasaModel while the Assistant would be more focused on delegating between channels / tracker stores / lock stores / models .

However, I don't understand why ModelFactory is in charge of saving the models to files.

True, I think both is possible. Might be that the ModelFactory is too much abstraction. From my view the ModelFactory is responsible for everything around (de)-serialization of the model. You could e.g. implement a different ModelFactory to store models on different sources (e.g. S3, disk, ...) or in different formats.

I couldn't understand the objective of having the ModelRepository class. In the last UML diagram, it looks like it is delegating the model loading to ModelFactory.

The ModelRepository is responsible for selecting the appropriate model, e.g. selecting the suited model from a directory on disk or from bucket on AWS. The ModelFactory would then deal with converting the selected model to the right Python objet.

Why do you think TrackerStore.get_or_create_tracker should return a list of events, rather than a DialogueStateTracker itself? Isn't the latter a better way of representing a series of conversation events?

The TrackerStore currently requires the Domain to return the DialogueStateTracker. The Domain is part of the model and shouldn't be exposed to the TrackerStore in my opinion (e.g. different models might handle the events differently). I completely agree that the Model should then apply a suited data structure to this raw lists to make the handling easier.

Can we rename DialogueStateTracker to Tracker? (and then add DialogueStateTracker = Tracker below the class declaration 🤠

I'd prefer ConversationHistory actually since it's more intuitive 😄

In general this is just a first draft / proposal. A lot details about the interactions (e.g. between ModelRepository / ModelFactory still need revisiting.

Thanks for your detailed feedback @federicotdn !

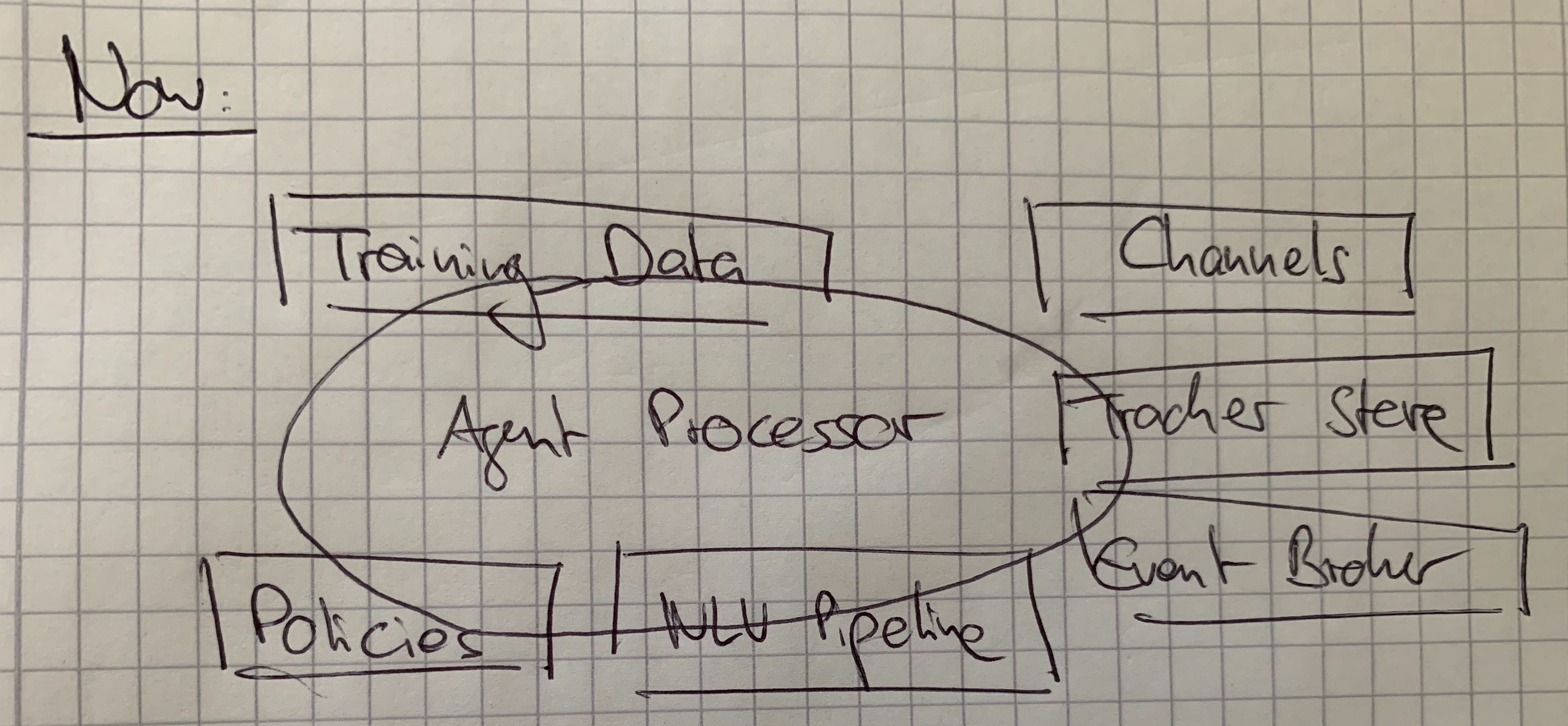

The way I see our code is as that it is organized as a bunch of lego blocks which interact with each other through clearly defined interfaces. This makes the single blocks replaceable, the code concise and hence more flexible.

Currently, the agent / processor logic leaks into some of our existing "lego blocks" (e.g. training data, tracker store, policies) (sorry, that I forgot the lockstore).

(I believe) Introducing a Model interface + the other changes would reinforce the re-usable blocks and remove the leaks.

That looks great, I like the idea of reworking the Agent and Processor classes into Assistant, while at the same time making the boundaries between all classes better defined. Currently Agent and Processor are very tightly related and sometimes it's difficult to see what each of them individually is meant to do. I think these changes also give us the opportunity to improve our public Python API.

An issue regarding process safe handling of models. Not necessary part of this issue, but worth thinking about before implementing this https://github.com/RasaHQ/rasa/issues/5376

Refactoring steps

Simplifications

- no need to dynamically load the factory for the model in the beginning. That can be a later step

Todos

- Think about

processor._cancel_remindersand_schedule_reminders

Steps

Part 0: Agree on a Model interface

- The current suggestion in the issue is just a quick draft and needs to be discussed with the team.

Part 1: The divorce of Domain and TrackerStore

Rough plan

- return a

List[Event]forget_or_create_tracker - move

init_trackersomewhere else (probably toDialogueStateTracker) - optional: refactor

TrackerStore.saveto accept aList[Event] - adapt sub implementations

Current Dependencies (just for plan ideation)

processor.get_trackerexporter._fetch_events_within_time_rangesavereturns a DialogueStateTracker

- imo it can continue to accept a

DialogueStateTracker

- imo it can continue to accept a

Part 2: Hello Model interface

Rough plan

- Create

Model Interface - Let

Processsorimplement model interface - Let

Agentonly speak to processor via interface

Current Dependencies (just for plan ideation)

- Dependencies Agent <- -> Processor

Model.parse_message

Agent.parse_message_using_nlu_interpreter

Model.predict

Agent.handle_messageAgent.trigger_intent

Model.predict_next

Agent.predict_next

Model.execute

- ‼️ This is missing in the interface declaration

Agent.execute_action

- do we want to remove this?

- Open for discussion

Agent.log_message

- Do we even need that?

- seems to a weird way to add the result of

parse_messagedirectly to the tracker + adding slots

Part 3: Remove TrackerStore from Processor

Rough plan

- Remove direct access from

servermodule toprocessor

- speak to my

Agent🤚

- speak to my

- Pass in current

Events(notDialogueStateTracker!) to every function of the processor which needs it - Remove

tracker_storefromProcessorconstructor

Current Dependencies (just for plan ideation)

- Server currently skips the agent and speaks to model directly, e.g.

get_tracker

- Processor always deals with TrackerStore itself

Situation after Part 3

- Agent still knows about training

- Agent still knows to much about model loading

- The

Agentstill knows to much about the insides ofProcessor

- interpreter

- domain

- policies

Part 4: Hello ModelFactory : Removing Rasa Core training from Agent

Rough plan

- Create

ModelFactoryinterface - Create a

ModelFactorywith afrom_training_data_importerfunction

- Do all of the

Agent.trainsteps in here - this will return a

PolicyEnsembleat this step

- Do all of the

- Add a save function to

ModelFactorywhich accepts the model and can save it - point

rasa.core.trainto implementation ofModelFactory - dump all the train code from

Agent - pass trained

Ensembleto Agent as usually

Current Dependencies for Rasa Core training (just for plan ideation)

- References

restaurant bot examplerasa.core.train

- Agent has

- policy ensemble

- domain

load_data

- this one is just the

Agenttaking to theEnsemble

- this one is just the

- This is equivalent to

- Create policy ensemble

- generate

DialogueStateTrackers using Domain - Tell the policy ensemble to train

- set a fingerprint

- Agent knows how to persist models 🙈

Situation after Part 4

- Agent doesn't know about Core training any more

- Agent still knows to much about model loading

- The

Agentstill knows to much about the insides ofProcessor

- interpreter

- domain

- policies

Part 5: ModelFactory, let me introduce you to NLU

Rough Steps

- Move

nlu.train.trainto factory (same part where core is trained) - Let factory method

from_training_data_importeran implementation of theModelinterface (akaProcessor) - pimp the

savefunction in theModelFactoryto save everything as a Rasa Model - optional: Move the

no need to retrainlogic toModelFactory delete

toggle_memoizationor think about itOpen Question

How does our "no need to retrain" fit in there

Part 6: Agent, forget about the model internals

Rough Steps

- Add a function to

ModelFactoryto loadModelfrom path - make

rasa.core.run.load_agent_on_startjust pass thepathinstead of theinterpreter - Refactor

load_agentto use theModelFactorywhen loading model from path

- There are 3 cases

load_from_serverload_from_remote_storageload_local_model

- There are 3 cases

Situation after Part 6

- Agent doesn't know about Core training any more

- Agent still knows to much about model loading

- The

Agentdoes not now how a model looks inside anymore

Part 7: Specialized Model loader

Requirements

- Needs a

ModelFactory - needs to be able to replace the

Modelwithin theAgent

Rough Steps (needs further thoughts ‼️)

- Needs a

there are at least two ways to handle this: we need to think about pros / cons

- one centralised model downloader. Sets a variable which points to the latest model on disk and each workers just loads it from disk

- Each workers pulls separately and updates their model

- Make

Agentmodel loading one simple / function callback and move other related code to a new / different module

Thoughts

- Appscheduler or sanic background task for repeatedly pulling

- This should be process safe -> Same Rasa model for all Sanic workers

- Monitoring + pulling for a newer one shouldn't be related to where it is stored imo but test condition might change 🤔

Step 8: Remove Agent.visualize

Current Dependencies (just for plan ideation)

- policies are required to determine

max_history - domain is required

interpreteris None which means probablyRegexInterpreter

Rough steps

- use

TrainingDataImporterto load required data - pass

importertorasa.core.visualizeand just skipagent.visualize - determine configured

max_historybased on config + default value + CLI variable - remove

Agent.visualize

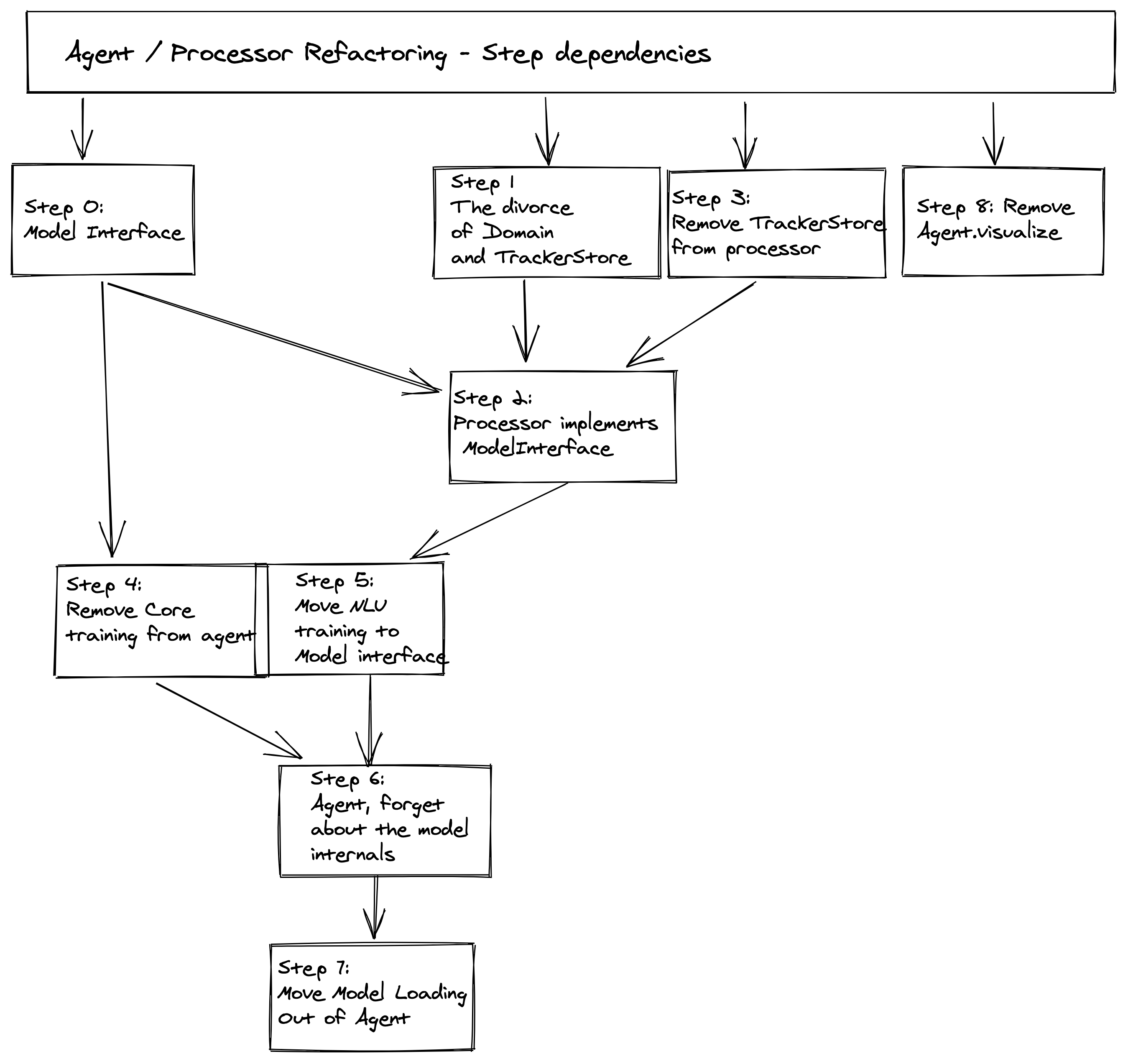

Step dependencies

@tmbo I split the proposal in single steps. The comment looks a bit daunting due to its size but you can focus on the step headlines and the rough plan sections. The image describes the dependencies between the steps (e.g. we can can't do Step 4 without doing Step 0 first). In my opinion every step (besides step 0, 2 & 5) makes sense to do, even if we don't continue with the subsequent steps. Some things are not figured out to the last detail, but imo that doesn't matter at this stage (e.g. how to move the details of model loading out of Agent)

Most helpful comment

Refactoring steps

Simplifications

Todos

processor._cancel_remindersand_schedule_remindersSteps

Part 0: Agree on a

ModelinterfacePart 1: The divorce of

DomainandTrackerStoreRough plan

List[Event]forget_or_create_trackerinit_trackersomewhere else (probably toDialogueStateTracker)TrackerStore.saveto accept aList[Event]Current Dependencies (just for plan ideation)

processor.get_trackerexporter._fetch_events_within_time_rangesavereturns a DialogueStateTrackerDialogueStateTrackerPart 2: Hello

ModelinterfaceRough plan

Model InterfaceProcesssorimplement model interfaceAgentonly speak to processor via interfaceCurrent Dependencies (just for plan ideation)

Model.parse_messageAgent.parse_message_using_nlu_interpreterModel.predictAgent.handle_messageAgent.trigger_intentModel.predict_nextAgent.predict_nextModel.executeAgent.execute_actionAgent.log_messageparse_messagedirectly to the tracker + adding slotsPart 3: Remove

TrackerStorefromProcessorRough plan

servermodule toprocessorAgent🤚Events(notDialogueStateTracker!) to every function of the processor which needs ittracker_storefromProcessorconstructorCurrent Dependencies (just for plan ideation)

get_trackerSituation after Part 3

Agentstill knows to much about the insides ofProcessorPart 4: Hello

ModelFactory: Removing Rasa Core training fromAgentRough plan

ModelFactoryinterfaceModelFactorywith afrom_training_data_importerfunctionAgent.trainsteps in herePolicyEnsembleat this stepModelFactorywhich accepts the model and can save itrasa.core.trainto implementation ofModelFactoryAgentEnsembleto Agent as usuallyCurrent Dependencies for Rasa Core training (just for plan ideation)

restaurant bot examplerasa.core.trainload_dataAgenttaking to theEnsembleDialogueStateTrackers using DomainSituation after Part 4

Agentstill knows to much about the insides ofProcessorPart 5: ModelFactory, let me introduce you to NLU

Rough Steps

nlu.train.trainto factory (same part where core is trained)from_training_data_importeran implementation of theModelinterface (akaProcessor)savefunction in theModelFactoryto save everything as a Rasa Modelno need to retrainlogic toModelFactorydelete

toggle_memoizationor think about itOpen Question

How does our "no need to retrain" fit in there

Part 6: Agent, forget about the model internals

Rough Steps

ModelFactoryto loadModelfrom pathrasa.core.run.load_agent_on_startjust pass thepathinstead of theinterpreterload_agentto use theModelFactorywhen loading model from pathload_from_serverload_from_remote_storageload_local_modelSituation after Part 6

Agentdoes not now how a model looks inside anymorePart 7: Specialized Model loader

Requirements

ModelFactoryModelwithin theAgentRough Steps (needs further thoughts ‼️)

there are at least two ways to handle this: we need to think about pros / cons

Agentmodel loading one simple / function callback and move other related code to a new / different moduleThoughts

Step 8: Remove

Agent.visualizeCurrent Dependencies (just for plan ideation)

max_historyinterpreteris None which means probablyRegexInterpreterRough steps

TrainingDataImporterto load required dataimportertorasa.core.visualizeand just skipagent.visualizemax_historybased on config + default value + CLI variableAgent.visualizeStep dependencies