Rasa: Training Rasa NLU docker image on Amazon Linux fails with BrokenProcessPool error

Rasa NLU version: Latest

Operating system (windows, osx, ...): Amazon Linux on AWS

Content of model configuration file: Attached

Issue:

I can run and train Rasa NLU on Ubuntu successfully, but when I try the same on Amazon Linux, the training step fails with an error.

Could any of the dev team help me resolve this issue?

Following are the details

Step1: Running the Docker image with volume mapping

docker run -d -p 5000:5000 -v $(pwd)/data:/app/data -v $(pwd)/logs:/app/logs -v $(pwd)/proj:/app/projects rasa/rasa_nlu:latest-full

Step 2: Training with demo data

cat demo-rasa.json | curl --request POST --header 'content-type: application/json' -d@- --url 'localhost:5000/train?project=test_model'

This fails with an error. The container logs show the following message.

docker logs 7b831341f489

2018-07-12 09:02:12+0000 [-] Log opened.

2018-07-12 09:02:12+0000 [-] Site starting on 5000

2018-07-12 09:02:12+0000 [-] Starting factory

2018-07-12 09:02:16+0000 [-] "172.17.0.1" - - [12/Jul/2018:09:02:15 +0000] "GET /status HTTP/1.1" 200 294 "-" "curl/7.53.1"

2018-07-12 09:02:26+0000 [-] 2018-07-12 09:02:26 WARNING rasa_nlu.data_router - [Failure instance: Traceback (failure with no frames):

2018-07-12 09:02:26+0000 [-] ]

2018-07-12 09:02:26+0000 [-] Unhandled Error

Traceback (most recent call last):

File "/usr/local/lib/python3.6/site-packages/twisted/internet/defer.py", line 500, in errback

self._startRunCallbacks(fail)

File "/usr/local/lib/python3.6/site-packages/twisted/internet/defer.py", line 567, in _startRunCallbacks

self._runCallbacks()

File "/usr/local/lib/python3.6/site-packages/twisted/internet/defer.py", line 653, in _runCallbacks

current.result = callback(current.result, *args, **kw)

File "/usr/local/lib/python3.6/site-packages/twisted/internet/defer.py", line 1442, in gotResult

_inlineCallbacks(r, g, deferred)

--- <exception caught here> ---

File "/usr/local/lib/python3.6/site-packages/twisted/internet/defer.py", line 1384, in _inlineCallbacks

result = result.throwExceptionIntoGenerator(g)

File "/usr/local/lib/python3.6/site-packages/twisted/python/failure.py", line 422, in throwExceptionIntoGenerator

return g.throw(self.type, self.value, self.tb)

File "/app/rasa_nlu/server.py", line 351, in train

RasaNLUModelConfig(model_config), model_name)

File "/usr/local/lib/python3.6/site-packages/twisted/internet/defer.py", line 653, in _runCallbacks

current.result = callback(current.result, *args, **kw)

File "/app/rasa_nlu/data_router.py", line 325, in training_errback

failure.value.failed_target_project)

builtins.AttributeError: 'BrokenProcessPool' object has no attribute 'failed_target_project'

2018-07-12 09:02:26+0000 [-] "172.17.0.1" - - [12/Jul/2018:09:02:24 +0000] "POST /train?project=test_model HTTP/1.1" 500 5711 "-" "curl/7.53.1"

demo-rasa.zip


I just encountered a similar issue. I could build a container locally and run it locally, where both the host OS and the container are Ubuntu. I then deployed to Google GKE, which runs Container-Optimized OS, and I get essentially the same error as @shashijeevan.

I get the same error on OpenShift too. I am only running the weatherbot app, but I am getting the error: 'BrokenProcessPool' object has no attribute 'failed_target_project'

Have any of you managed to resolve this yet? Seems there's an issue with the process pool but it's not immediately clear as to what it could be

Also encountered the problem on GKE. Thanks to this thread I understood it was orchestration related, which hinted at a possible storage volume problem. The projects directory passed in --paths projects wasn't mounted on any volume. Mounting it fixed the issue.

Then a new error surfaced as my training set started growing: builtins.AttributeError: 'CancelledError' object has no attribute 'failed_target_project', which turned out to be the proxy timing out after 60 seconds.

The missing failed_target_project attribute left the project in training status, so the pod had to be killed to restore the service. That is the real Rasa issue, I think.

@amn41 any ideas?

I hit the same error with 0.13.7. Is there any workaround?

@carolWu1206 Are you sure your volumes are mapped to actual drives?

@znat Yes. I'm running Rasa in K8s, and the projects folder is mounted on a volume. After reducing the pod's CPU and memory limits, I can reproduce the error every time. So I guess it is related to the resources available in my environment.

The problem is that after this error, the training stays marked as ongoing forever, so I cannot trigger training again until the pod is restarted. In fact, the training has already failed and stopped.
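The stuck "ongoing" status described above is the kind of bug a try/finally guard is meant to prevent. The following is a minimal sketch, not Rasa's actual code: `ProjectState`, `training_slot`, and the status strings are hypothetical names chosen for illustration, and Rasa itself does this bookkeeping in Twisted callbacks rather than in a context manager.

```python
from contextlib import contextmanager


class ProjectState:
    """Hypothetical stand-in for a project's training bookkeeping."""

    def __init__(self):
        self.status = "ready"
        self.current_training_processes = 0


@contextmanager
def training_slot(project):
    """Mark the project as training and always undo it, even on failure."""
    project.current_training_processes += 1
    project.status = "training"
    try:
        yield project
    finally:
        # Runs for every exception type, so no error can leave the
        # counter or the status behind.
        project.current_training_processes -= 1
        if project.current_training_processes == 0:
            project.status = "ready"


def train_and_crash(project):
    """Simulate a training run that dies partway through."""
    with training_slot(project):
        raise RuntimeError("worker died")
```

With this shape, the cleanup does not depend on what attributes the exception happens to carry, which is the dependency that breaks in the traceback above.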

What is the last container log entry?

2018-10-24 14:24:04+0800 [-] Unhandled Error

Traceback (most recent call last):

File "/usr/local/lib/python3.6/site-packages/twisted/internet/defer.py", line 501, in errback

self._startRunCallbacks(fail)

File "/usr/local/lib/python3.6/site-packages/twisted/internet/defer.py", line 568, in _startRunCallbacks

self._runCallbacks()

File "/usr/local/lib/python3.6/site-packages/twisted/internet/defer.py", line 654, in _runCallbacks

current.result = callback(current.result, *args, **kw)

File "/usr/local/lib/python3.6/site-packages/twisted/internet/defer.py", line 1475, in gotResult

_inlineCallbacks(r, g, status)

--- <exception caught here> ---

File "/usr/local/lib/python3.6/site-packages/twisted/internet/defer.py", line 1416, in _inlineCallbacks

result = result.throwExceptionIntoGenerator(g)

File "/usr/local/lib/python3.6/site-packages/twisted/python/failure.py", line 491, in throwExceptionIntoGenerator

return g.throw(self.type, self.value, self.tb)

File "vanlu/server.py", line 364, in train

RasaNLUModelConfig(model_config), model_name)

File "/usr/local/lib/python3.6/site-packages/twisted/internet/defer.py", line 654, in _runCallbacks

current.result = callback(current.result, *args, **kw)

File "/usr/local/lib/python3.6/site-packages/rasa_nlu/data_router.py", line 356, in training_errback

failure.value.failed_target_project)

builtins.AttributeError: 'BrokenProcessPool' object has no attribute 'failed_target_project'


I guess we should have an asynchronous training endpoint (tracked by some training ID) instead of a synchronous one. This would help with training, especially through HTTP endpoints.
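One way to picture the asynchronous, ID-tracked training suggested above is a small in-memory job registry. This is only a sketch under assumptions: `TrainingJobs` and its methods are hypothetical and not part of Rasa NLU, and a real server would also need persistence and cleanup of finished jobs.

```python
import uuid
from concurrent.futures import ThreadPoolExecutor


class TrainingJobs:
    """Hypothetical in-memory registry of background training jobs."""

    def __init__(self, max_workers=1):
        self._pool = ThreadPoolExecutor(max_workers=max_workers)
        self._futures = {}

    def submit(self, train_fn, *args):
        """Start train_fn in the background and return a training id at once."""
        training_id = uuid.uuid4().hex
        self._futures[training_id] = self._pool.submit(train_fn, *args)
        return training_id

    def status(self, training_id):
        """Report 'unknown', 'running' (includes queued), 'failed', or 'done'."""
        future = self._futures.get(training_id)
        if future is None:
            return "unknown"
        if not future.done():
            return "running"
        return "failed" if future.exception() is not None else "done"

    def wait(self, training_id, timeout=None):
        """Block until the job finishes; return its result or raise its error."""
        return self._futures[training_id].result(timeout=timeout)
```

A train endpoint built this way could return the ID immediately, and a separate status endpoint could be polled, so a proxy or client timeout would no longer cut off a long training run.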

I had BrokenProcessPool errors again, no longer storage related (see above). The model has 275 intents and a higher CPU/memory footprint. So I suppose these errors also occur when training needs more resources than the cluster has left.

I'm using the built-in TensorFlow pipeline for categorization, and found that memory usage increases each time I train. There is a memory leak somewhere.

Thanks for providing all these details, guys. We'll make sure to take a look at what's going wrong there.

I was about to create a PR when I realized this is probably fixed by https://github.com/RasaHQ/rasa_nlu/blob/e7901773a7ce0af18707019c849909fd3bbde8ef/rasa_nlu/data_router.py#L337

If you're stuck on an earlier version, there is a patch below.

So now at least those errors won't leave Rasa with an erroneous number of training processes.

But the fact that the connection is reset doesn't reset the training process itself.

So if you start a new training, you will get a MaxTrainingError again. That looks normal, since a training process is still ongoing, but it is also not normal, because current_training_processes has been reset.

Looking at the logs it's even weirder:

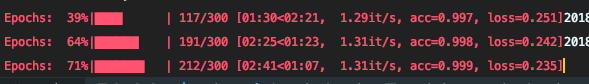

- I started a training (with the /train endpoint) and then dropped the connection, triggering a CancelledError. Training continues.

- I started a second training and got a MaxTrainingError. Then the training stops, and another training starts (2nd line) at the same epoch the previous one started (117).

- Same thing for the 3rd line.

Is this just a display issue, or is the training process stopping and restarting from the same Keras checkpoints?

Patch for versions up to 0.13.8:

def training_errback(failure):
    logger.warning(failure)
    self._current_training_processes -= 1
    self.project_store[project].current_training_processes -= 1
    # Some errors don't carry on the target project.
    # See https://github.com/RasaHQ/rasa_nlu/issues/1231.
    # In that case we just reset the status from the project store
    try:
        target_project = self.project_store.get(
            failure.value.failed_target_project)
        if (target_project and
                self.project_store[project].current_training_processes == 0):
            target_project.status = 0
    except AttributeError:
        if (self.project_store[project].status == 1 and
                self.project_store[project].current_training_processes == 0):
            self.project_store[project].status = 0
    return failure
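The patch above is a closure that depends on `self`, `project`, and `logger` from the enclosing method, so it cannot be run on its own. The sketch below reproduces the same fallback idea in a self-contained form so it can be tried outside Rasa. `FakeProject` and `reset_after_failure` are hypothetical stand-ins, the status values 1 and 0 are copied from the patch (their meaning as "training" and "ready" is assumed), and `getattr` with a default replaces the patch's try/except.

```python
from concurrent.futures.process import BrokenProcessPool


class FakeProject:
    """Hypothetical stand-in for an entry in the project store."""

    def __init__(self):
        self.status = 1  # assumed: 1 = training, 0 = ready, as in the patch
        self.current_training_processes = 1


def reset_after_failure(project_store, project, error):
    """Decrement the counter and reset the status, whatever the error type."""
    entry = project_store[project]
    entry.current_training_processes -= 1
    # Fall back to the requested project when the error carries no target,
    # which is the case for BrokenProcessPool and CancelledError.
    target_name = getattr(error, "failed_target_project", project)
    target = project_store.get(target_name)
    if target is not None and entry.current_training_processes == 0:
        target.status = 0
```

For example, calling `reset_after_failure(store, "test_model", BrokenProcessPool("worker died"))` on a store holding one `FakeProject` leaves that project with status 0 and a counter of 0 instead of raising AttributeError.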

@ricwo you worked on fixing this -- any ideas?

This issue is due to a client request timeout (ESOCKETTIMEDOUT error). The problem appears, for example, when the training process takes a long time. I guess the Rasa server must handle the case where the client drops the connection before any response has been sent.
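Until the server handles dropped connections, a client can at least avoid giving up too early. The sketch below mirrors the curl call from the original report using only the Python standard library. The function names and the 1800-second default are assumptions for illustration, and a longer client timeout does not help if a proxy in between closes the connection first, as with the 60-second proxy timeout mentioned earlier.

```python
import json
import urllib.parse
import urllib.request


def build_train_request(base_url, project, training_data):
    """Build the POST request for /train without sending it."""
    query = urllib.parse.urlencode({"project": project})
    body = json.dumps(training_data).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/train?{query}",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def train(base_url, project, training_data, timeout_seconds=1800):
    """Send the request; timeout_seconds is the socket timeout used
    for blocking operations such as waiting for the response."""
    request = build_train_request(base_url, project, training_data)
    with urllib.request.urlopen(request, timeout=timeout_seconds) as response:
        return response.status, response.read()
```

Usage would look like `train("http://localhost:5000", "test_model", data)`, where `data` is the parsed content of demo-rasa.json.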

@marrouchi @znat @shashijeevan are you still experiencing this issue?

@ricwo Yes, I faced the issue again with a large dataset. After further investigation, I found that the issue is also related to memory: I cannot reproduce it locally, while the server has only ~700 MB available. I added 1 GB of swap and did not encounter the error message again.
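Since low memory is what kills the worker here, a pre-flight check before starting training can give a clearer failure than BrokenProcessPool. This is a rough sketch assuming a Linux host with /proc/meminfo. The function names and the required-memory budget are hypothetical, and inside a container /proc/meminfo usually reports host values rather than the container's cgroup limit, so treat the result as an upper bound.

```python
def parse_meminfo(text):
    """Parse /proc/meminfo-style text into a dict of integer values (kB)."""
    values = {}
    for line in text.splitlines():
        name, _, rest = line.partition(":")
        fields = rest.split()
        if fields and fields[0].isdigit():
            values[name.strip()] = int(fields[0])
    return values


def available_mb(meminfo_text):
    """Return MemAvailable plus SwapFree in MB (0 for missing fields)."""
    info = parse_meminfo(meminfo_text)
    return (info.get("MemAvailable", 0) + info.get("SwapFree", 0)) // 1024


def enough_memory_to_train(meminfo_text, required_mb):
    """True when available RAM plus free swap covers the given budget."""
    return available_mb(meminfo_text) >= required_mb
```

On the server, the text would come from reading /proc/meminfo, for example `enough_memory_to_train(open("/proc/meminfo").read(), 1500)`, with the budget chosen from observed training runs.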

Closing this as more memory resolves the issue