Pytorch: RuntimeError: freeze_support()

- OS: Windows 10

- PyTorch version: 0.3.1.post2

- How you installed PyTorch (conda, pip, source): conda install -c peterjc123 pytorch-cpu

- Python version: 3.5.4

Error Message:

C:\Install\Anaconda3\envs\ame\python.exe C:/DeepLearning/InPytorch/torch_CNN.py
Traceback (most recent call last):
  File "<string>", line 1, in <module>
  File "C:\Install\Anaconda3\envs\ame\lib\multiprocessing\spawn.py", line 106, in spawn_main
    exitcode = _main(fd)
  File "C:\Install\Anaconda3\envs\ame\lib\multiprocessing\spawn.py", line 115, in _main
    prepare(preparation_data)
  File "C:\Install\Anaconda3\envs\ame\lib\multiprocessing\spawn.py", line 226, in prepare
    _fixup_main_from_path(data['init_main_from_path'])
  File "C:\Install\Anaconda3\envs\ame\lib\multiprocessing\spawn.py", line 278, in _fixup_main_from_path
    run_name="__mp_main__")
  File "C:\Install\Anaconda3\envs\ame\lib\runpy.py", line 263, in run_path
    pkg_name=pkg_name, script_name=fname)
  File "C:\Install\Anaconda3\envs\ame\lib\runpy.py", line 96, in _run_module_code
    mod_name, mod_spec, pkg_name, script_name)
  File "C:\Install\Anaconda3\envs\ame\lib\runpy.py", line 85, in _run_code
    exec(code, run_globals)
  File "C:\DeepLearning\InPytorch\torch_CNN.py", line 69, in <module>
    for i,(batch_x,batch_y) in enumerate(train_loader):
  File "C:\Install\Anaconda3\envs\ame\lib\site-packages\torch\utils\data\dataloader.py", line 417, in __iter__
    return DataLoaderIter(self)
  File "C:\Install\Anaconda3\envs\ame\lib\site-packages\torch\utils\data\dataloader.py", line 234, in __init__
    w.start()
  File "C:\Install\Anaconda3\envs\ame\lib\multiprocessing\process.py", line 105, in start
    self._popen = self._Popen(self)
  File "C:\Install\Anaconda3\envs\ame\lib\multiprocessing\context.py", line 212, in _Popen
    return _default_context.get_context().Process._Popen(process_obj)
  File "C:\Install\Anaconda3\envs\ame\lib\multiprocessing\context.py", line 313, in _Popen
    return Popen(process_obj)
  File "C:\Install\Anaconda3\envs\ame\lib\multiprocessing\popen_spawn_win32.py", line 34, in __init__
    prep_data = spawn.get_preparation_data(process_obj._name)
  File "C:\Install\Anaconda3\envs\ame\lib\multiprocessing\spawn.py", line 144, in get_preparation_data
    _check_not_importing_main()
  File "C:\Install\Anaconda3\envs\ame\lib\multiprocessing\spawn.py", line 137, in _check_not_importing_main
    is not going to be frozen to produce an executable.''')
RuntimeError:
        An attempt has been made to start a new process before the
        current process has finished its bootstrapping phase.

        This probably means that you are not using fork to start your
        child processes and you have forgotten to use the proper idiom
        in the main module:

            if __name__ == '__main__':
                freeze_support()
                ...

        The "freeze_support()" line can be omitted if the program
        is not going to be frozen to produce an executable.

- Additional notes:

The error occurs even though I call:

torch.multiprocessing.freeze_support()

It works now!

def run():
    torch.multiprocessing.freeze_support()
    print('loop')

if __name__ == '__main__':
    run()

@shinalone Actually, you can remove the freeze_support line, but the if __name__ == '__main__': guard is necessary.
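As a minimal sketch of that advice, assuming a toy TensorDataset in place of the real training data (the names make_loader and count_batches are made up for illustration and are not from the original torch_CNN.py), the loop that iterates the DataLoader can live in a function that is only called under the guard:

```python
import torch
from torch.utils.data import DataLoader, TensorDataset


def make_loader(num_workers):
    # Toy stand-in for a real dataset: 16 samples with 3 features each.
    features = torch.arange(48, dtype=torch.float32).reshape(16, 3)
    labels = torch.arange(16)
    dataset = TensorDataset(features, labels)
    return DataLoader(dataset, batch_size=4, shuffle=False,
                      num_workers=num_workers)


def count_batches(num_workers=2):
    # Iterating the loader is the step that starts the worker processes.
    loader = make_loader(num_workers)
    return sum(1 for _batch_x, _batch_y in loader)


if __name__ == '__main__':
    # Only the original process reaches this line. Spawned workers import
    # this file under a different module name and skip the block.
    print(count_batches(num_workers=2))
```

Nothing at module level iterates the loader here, so a worker that re-imports the file only defines the two functions and does not try to start workers of its own.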

Yes, thank you, @peterjc123, author of PyTorch for Windows. When will PyTorch for Windows become an official release?

@shinalone It's planned to be released in the next version. Hope you will like it.

@peterjc123 Cheers!

Close this please.

Hello, please help me.

I have a problem with my PyTorch installation on Windows 8.1, Python 3.6, PyCharm 2017.3.3 x64.

I saw that you had the same problem. How did you solve it?

Traceback (most recent call last):
  File "<string>", line 1, in <module>
  File "C:\ProgramData\Miniconda3\lib\multiprocessing\spawn.py", line 105, in spawn_main
    exitcode = _main(fd)
  File "C:\ProgramData\Miniconda3\lib\multiprocessing\spawn.py", line 114, in _main
    prepare(preparation_data)
  File "C:\ProgramData\Miniconda3\lib\multiprocessing\spawn.py", line 225, in prepare
    _fixup_main_from_path(data['init_main_from_path'])
  File "C:\ProgramData\Miniconda3\lib\multiprocessing\spawn.py", line 277, in _fixup_main_from_path
    run_name="__mp_main__")
  File "C:\ProgramData\Miniconda3\lib\runpy.py", line 263, in run_path
    pkg_name=pkg_name, script_name=fname)
  File "C:\ProgramData\Miniconda3\lib\runpy.py", line 96, in _run_module_code
    mod_name, mod_spec, pkg_name, script_name)
  File "C:\ProgramData\Miniconda3\lib\runpy.py", line 85, in _run_code
    exec(code, run_globals)
  File "C:\Users\DmitrySony\PycharmProjects\PyTorch\Torch1.py", line 35, in <module>
    dataiter = iter(trainloader)
  File "C:\ProgramData\Miniconda3\lib\site-packages\torch\utils\data\dataloader.py", line 451, in __iter__
    return _DataLoaderIter(self)
  File "C:\ProgramData\Miniconda3\lib\site-packages\torch\utils\data\dataloader.py", line 239, in __init__
    w.start()
  File "C:\ProgramData\Miniconda3\lib\multiprocessing\process.py", line 105, in start
    self._popen = self._Popen(self)
  File "C:\ProgramData\Miniconda3\lib\multiprocessing\context.py", line 223, in _Popen
    return _default_context.get_context().Process._Popen(process_obj)
  File "C:\ProgramData\Miniconda3\lib\multiprocessing\context.py", line 322, in _Popen
    return Popen(process_obj)
  File "C:\ProgramData\Miniconda3\lib\multiprocessing\popen_spawn_win32.py", line 33, in __init__
    prep_data = spawn.get_preparation_data(process_obj._name)
  File "C:\ProgramData\Miniconda3\lib\multiprocessing\spawn.py", line 143, in get_preparation_data
    _check_not_importing_main()
  File "C:\ProgramData\Miniconda3\lib\multiprocessing\spawn.py", line 136, in _check_not_importing_main
    is not going to be frozen to produce an executable.''')
RuntimeError:
        An attempt has been made to start a new process before the
        current process has finished its bootstrapping phase.

        This probably means that you are not using fork to start your
        child processes and you have forgotten to use the proper idiom
        in the main module:

            if __name__ == '__main__':
                freeze_support()
                ...

        The "freeze_support()" line can be omitted if the program
        is not going to be frozen to produce an executable.

Traceback (most recent call last):
  File "C:/Users/DmitrySony/PycharmProjects/PyTorch/Torch1.py", line 35, in <module>
    dataiter = iter(trainloader)
  File "C:\ProgramData\Miniconda3\lib\site-packages\torch\utils\data\dataloader.py", line 451, in __iter__
    return _DataLoaderIter(self)
  File "C:\ProgramData\Miniconda3\lib\site-packages\torch\utils\data\dataloader.py", line 239, in __init__
    w.start()
  File "C:\ProgramData\Miniconda3\lib\multiprocessing\process.py", line 105, in start
    self._popen = self._Popen(self)
  File "C:\ProgramData\Miniconda3\lib\multiprocessing\context.py", line 223, in _Popen
    return _default_context.get_context().Process._Popen(process_obj)
  File "C:\ProgramData\Miniconda3\lib\multiprocessing\context.py", line 322, in _Popen
    return Popen(process_obj)
  File "C:\ProgramData\Miniconda3\lib\multiprocessing\popen_spawn_win32.py", line 65, in __init__
    reduction.dump(process_obj, to_child)
  File "C:\ProgramData\Miniconda3\lib\multiprocessing\reduction.py", line 60, in dump
    ForkingPickler(file, protocol).dump(obj)
BrokenPipeError: [Errno 32] Broken pipe

Process finished with exit code 1

Have you tried the guidance in the error message?

RuntimeError:
        An attempt has been made to start a new process before the
        current process has finished its bootstrapping phase.

        This probably means that you are not using fork to start your
        child processes and you have forgotten to use the proper idiom
        in the main module:

            if __name__ == '__main__':
                freeze_support()
                ...

        The "freeze_support()" line can be omitted if the program
        is not going to be frozen to produce an executable.

Hi. I added it, but I still get an error.

.....
def run():
    torch.multiprocessing.freeze_support()
    print('loop')

if __name__ == '__main__':
    freeze_support()  # <--- here

def _check_not_importing_main():
    if getattr(process.current_process(), '_inheriting', False):
        raise RuntimeError('''
        An attempt has been made to start a new process before the
        current process has finished its bootstrapping phase.

        This probably means that you are not using fork to start your
        child processes and you have forgotten to use the proper idiom
        in the main module:
.......

@dimamillion Add it to your code, not the Python internal code.

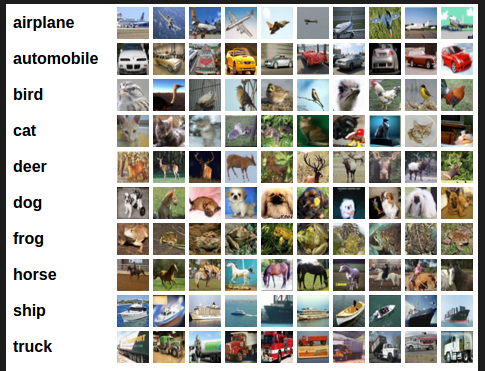

Hi, it works now, but it does not show the picture as in the tutorial.

Can you tell me why the picture is not displayed?

The images are in /data.

Output:

Files already downloaded and verified
Files already downloaded and verified
bird ship car deer

My code is:

import torch
import torchvision
import torchvision.transforms as transforms
from multiprocessing import Process, freeze_support

if __name__ == '__main__':
    transform = transforms.Compose(
        [transforms.ToTensor(),
         transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])

    trainset = torchvision.datasets.CIFAR10(root='./data', train=True,
                                            download=True, transform=transform)
    trainloader = torch.utils.data.DataLoader(trainset, batch_size=4,
                                              shuffle=True, num_workers=2)

    testset = torchvision.datasets.CIFAR10(root='./data', train=False,
                                           download=True, transform=transform)
    testloader = torch.utils.data.DataLoader(testset, batch_size=4,
                                             shuffle=False, num_workers=2)

    classes = ('plane', 'car', 'bird', 'cat',
               'deer', 'dog', 'frog', 'horse', 'ship', 'truck')

    import matplotlib.pyplot as plt
    import numpy as np

    # functions to show an image
    def imshow(img):
        img = img / 2 + 0.5  # unnormalize
        npimg = img.numpy()
        plt.imshow(np.transpose(npimg, (1, 2, 0)))

    # get some random training images
    dataiter = iter(trainloader)
    images, labels = dataiter.next()

    # show images
    imshow(torchvision.utils.make_grid(images))

    # print labels
    print(' '.join('%5s' % classes[labels[j]] for j in range(4)))

@peterjc123 Why does it work once I add if __name__ == '__main__'?

@Prologueyan Well, it's a long story. Please refer to my article (in Chinese) for a detailed answer.
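For readers who cannot follow the linked article, here is a rough sketch of the mechanism. It relies only on what the tracebacks in this thread show: a spawned worker re-runs the main script through runpy.run_path with run_name="__mp_main__". The script text, the file name train_demo.py and the events list are made up for illustration.

```python
import os
import runpy
import tempfile

# Hypothetical training script. The first line runs whenever the file is
# executed; the guarded line runs only when the file is the entry point.
SCRIPT = '''
events.append("top-level code ran as " + __name__)
if __name__ == "__main__":
    events.append("guarded code ran")
'''


def run_script_as(run_name):
    """Execute SCRIPT through runpy under the given module name."""
    events = []
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "train_demo.py")
        with open(path, "w") as f:
            f.write(SCRIPT)
        # init_globals injects the shared list into the script's namespace.
        runpy.run_path(path, init_globals={"events": events},
                       run_name=run_name)
    return events


if __name__ == "__main__":
    print(run_script_as("__main__"))     # like `python train_demo.py`
    print(run_script_as("__mp_main__"))  # like a spawned worker process
```

Under "__mp_main__" only the unguarded line runs. If that unguarded code iterated a DataLoader with num_workers > 0, the worker would try to start workers of its own while still starting up, which is the situation the RuntimeError reports.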

@peterjc123 thank you!

On Windows, code that uses multiprocessing must be called from the main function. If you use it in another file, it must still be invoked under the if __name__ == '__main__': guard.

@dimamillion Yeah, I had the same problem. Apparently, when you run the code from a main function, pyplot behaves a little differently. You have to explicitly force it to show the figure by adding plt.show(). I didn't need this when running from a Python console, but I do when running the file as a whole.

# functions to show an image
def imshow(img):
    img = img / 2 + 0.5  # unnormalize
    npimg = img.numpy()
    plt.imshow(np.transpose(npimg, (1, 2, 0)))
    plt.show()

You have to add the plt.show() call.

Now that's okay!

def run():
    torch.multiprocessing.freeze_support()
    print('loop')

if __name__ == '__main__':
    run()

I have the same error as you, but I do not know which file you edited. I have checked spawn.py and there is no such code there. Could you please help me?

I am dealing with this problem too. The code is here. Environment: Windows 10, Python 3.7.0, PyTorch 1.0.1, installed via conda.

After I add these lines to my code, the problem is still there.

if __name__ == '__main__':
    torch.multiprocessing.freeze_support()

or

def run():
    torch.multiprocessing.freeze_support()
    print('loop')

if __name__ == '__main__':
    run()

Then I tried the following:

if __name__ == '__main__':
    torch.multiprocessing.freeze_support()

    # Get a batch of training data
    inputs, classes = next(iter(dataloaders['train']))

    # Make a grid from batch
    out = torchvision.utils.make_grid(inputs)

    imshow(out, title=[class_names[x] for x in classes])

The result seems right. Could anyone explain why? Thank you!

@shinalone Actually, you can remove the freeze_support line, but the if __name__ == '__main__': guard is necessary.

See the comment above.

@Guo-Shuai Add the code at the beginning of your script.

On Windows, code that uses multiprocessing must be called from the main function. If you use it in another file, it must still be invoked under the if __name__ == '__main__': guard.

That works, thanks.

Hello everyone, I am trying to run this code from GitHub

https://github.com/zllrunning/Deep-Steganography

on Windows 10, but I get an error:

F:\project\Deep-Steganography\Deep-Steganography>python main.py
main.py:16: UserWarning: nn.init.kaiming_normal is now deprecated in favor of nn.init.kaiming_normal_.
  init.kaiming_normal(m.weight.data, a=0, mode='fan_in')
F:\project\Deep-Steganography\Deep-Steganography\main.py:16: UserWarning: nn.init.kaiming_normal is now deprecated in favor of nn.init.kaiming_normal_.
  init.kaiming_normal(m.weight.data, a=0, mode='fan_in')
Traceback (most recent call last):
  File "<string>", line 1, in <module>
  File "C:\Program Files\Python36\lib\multiprocessing\spawn.py", line 105, in spawn_main
    exitcode = _main(fd)
  File "C:\Program Files\Python36\lib\multiprocessing\spawn.py", line 114, in _main
    prepare(preparation_data)
  File "C:\Program Files\Python36\lib\multiprocessing\spawn.py", line 225, in prepare
    _fixup_main_from_path(data['init_main_from_path'])
  File "C:\Program Files\Python36\lib\multiprocessing\spawn.py", line 277, in _fixup_main_from_path
    run_name="__mp_main__")
  File "C:\Program Files\Python36\lib\runpy.py", line 263, in run_path
    pkg_name=pkg_name, script_name=fname)
  File "C:\Program Files\Python36\lib\runpy.py", line 96, in _run_module_code
    mod_name, mod_spec, pkg_name, script_name)
  File "C:\Program Files\Python36\lib\runpy.py", line 85, in _run_code
    exec(code, run_globals)
  File "F:\project\Deep-Steganography\Deep-Steganography\main.py", line 49, in <module>
    for i, (secret, cover) in enumerate(dataloader):
  File "C:\Program Files\Python36\lib\site-packages\torch\utils\data\dataloader.py", line 193, in __iter__
    return _DataLoaderIter(self)
  File "C:\Program Files\Python36\lib\site-packages\torch\utils\data\dataloader.py", line 469, in __init__
    w.start()
  File "C:\Program Files\Python36\lib\multiprocessing\process.py", line 105, in start
    self._popen = self._Popen(self)
  File "C:\Program Files\Python36\lib\multiprocessing\context.py", line 223, in _Popen
    return _default_context.get_context().Process._Popen(process_obj)
  File "C:\Program Files\Python36\lib\multiprocessing\context.py", line 322, in _Popen
    return Popen(process_obj)
  File "C:\Program Files\Python36\lib\multiprocessing\popen_spawn_win32.py", line 33, in __init__
    prep_data = spawn.get_preparation_data(process_obj._name)
  File "C:\Program Files\Python36\lib\multiprocessing\spawn.py", line 143, in get_preparation_data
    _check_not_importing_main()
  File "C:\Program Files\Python36\lib\multiprocessing\spawn.py", line 136, in _check_not_importing_main
    is not going to be frozen to produce an executable.''')
RuntimeError:
        An attempt has been made to start a new process before the
        current process has finished its bootstrapping phase.

        This probably means that you are not using fork to start your
        child processes and you have forgotten to use the proper idiom
        in the main module:

            if __name__ == '__main__':
                freeze_support()
                ...

        The "freeze_support()" line can be omitted if the program
        is not going to be frozen to produce an executable.

Traceback (most recent call last):
  File "main.py", line 49, in <module>
    for i, (secret, cover) in enumerate(dataloader):
  File "C:\Program Files\Python36\lib\site-packages\torch\utils\data\dataloader.py", line 193, in __iter__
    return _DataLoaderIter(self)
  File "C:\Program Files\Python36\lib\site-packages\torch\utils\data\dataloader.py", line 469, in __init__
    w.start()
  File "C:\Program Files\Python36\lib\multiprocessing\process.py", line 105, in start
    self._popen = self._Popen(self)
  File "C:\Program Files\Python36\lib\multiprocessing\context.py", line 223, in _Popen
    return _default_context.get_context().Process._Popen(process_obj)
  File "C:\Program Files\Python36\lib\multiprocessing\context.py", line 322, in _Popen
    return Popen(process_obj)
  File "C:\Program Files\Python36\lib\multiprocessing\popen_spawn_win32.py", line 65, in __init__
    reduction.dump(process_obj, to_child)
  File "C:\Program Files\Python36\lib\multiprocessing\reduction.py", line 60, in dump
    ForkingPickler(file, protocol).dump(obj)
BrokenPipeError: [Errno 32] Broken pipe

Can anyone please help?

I feel that this issue should not be closed - it is still happening 100% of the time on Windows on the example project:

https://pytorch.org/tutorials/beginner/blitz/cifar10_tutorial.html#sphx-glr-beginner-blitz-cifar10-tutorial-py

Thank you to everyone who has helped with this issue! Adding if __name__ == '__main__': to the beginning of my file worked perfectly!

OS: macOS Catalina 10.15.5 also encountered this problem 😭

I am experiencing the same error on macOS Catalina 10.15.6
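The macOS reports are consistent with Python's documented behaviour: from Python 3.8 onward the default start method on macOS is spawn, as on Windows, so the same guard is needed there. Whether these two commenters were running Python 3.8 or later is an assumption. A small sketch for checking which case applies on a given machine (the helper name main_is_reimported is made up for illustration):

```python
import multiprocessing as mp


def main_is_reimported(start_method):
    """Return True if child processes import the main module again."""
    # "fork" clones the running parent, so the script is not run again.
    # "spawn" and "forkserver" start from a fresh interpreter that
    # imports the main module, so unguarded top-level code runs again.
    return start_method in ("spawn", "forkserver")


if __name__ == '__main__':
    method = mp.get_start_method()
    print(method, main_is_reimported(method))
```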

Now that's okay!

def run():
    torch.multiprocessing.freeze_support()
    print('loop')

if __name__ == '__main__':
    run()

Which Python file should I add this code to? Is it train.py?

My email is [email protected]. Please help, thanks.

@Prologueyan Well, it's a long story. Please refer to my article (in Chinese) for a detailed answer.

RuntimeError:
        An attempt has been made to start a new process before the
        current process has finished its bootstrapping phase.

        This probably means that you are not using fork to start your
        child processes and you have forgotten to use the proper idiom
        in the main module:

            if __name__ == '__main__':
                freeze_support()
                ...

        The "freeze_support()" line can be omitted if the program
        is not going to be frozen to produce an executable.

    ForkingPickler(file, protocol).dump(obj)
BrokenPipeError: [Errno 32] Broken pipe

Hello, I added "if __name__ == '__main__':" to my file (I came here from RuntimeError: freeze_support() · Issue #5858 · pytorch/pytorch), but I still get this error. How can I fix it?

@BinchaoPeng @TheRedCamaro30 In my example, print('loop') stands for the model training code that you write yourself. Does that make sense?
