Pytorch-lightning: WandbLogger fails in 1.0.2 due to non-JSON serializable object

🐛 Bug

After updating to PL 1.0.2, the WandbLogger fails with the following TypeError:

Traceback (most recent call last):
  File "wandblogger_issue.py", line 12, in <module>
    wandb_logger.log_hyperparams(vars(args))
  File "/home/groups/mignot/miniconda3/envs/pl/lib/python3.7/site-packages/pytorch_lightning/utilities/distributed.py", line 35, in wrapped_fn
    return fn(*args, **kwargs)
  File "/home/groups/mignot/miniconda3/envs/pl/lib/python3.7/site-packages/pytorch_lightning/loggers/wandb.py", line 138, in log_hyperparams
    self.experiment.config.update(params, allow_val_change=True)
  File "/home/groups/mignot/miniconda3/envs/pl/lib/python3.7/site-packages/wandb/sdk/wandb_config.py", line 87, in update
    self._callback(data=self._as_dict())
  File "/home/groups/mignot/miniconda3/envs/pl/lib/python3.7/site-packages/wandb/sdk/wandb_run.py", line 587, in _config_callback
    self._backend.interface.publish_config(data)
  File "/home/groups/mignot/miniconda3/envs/pl/lib/python3.7/site-packages/wandb/interface/interface.py", line 496, in publish_config
    cfg = self._make_config(config_dict)
  File "/home/groups/mignot/miniconda3/envs/pl/lib/python3.7/site-packages/wandb/interface/interface.py", line 232, in _make_config
    update.value_json = json_dumps_safer(json_friendly(v)[0])
  File "/home/groups/mignot/miniconda3/envs/pl/lib/python3.7/site-packages/wandb/util.py", line 524, in json_dumps_safer
    return json.dumps(obj, cls=WandBJSONEncoder, **kwargs)
  File "/home/groups/mignot/miniconda3/envs/pl/lib/python3.7/json/__init__.py", line 238, in dumps
    **kw).encode(obj)
  File "/home/groups/mignot/miniconda3/envs/pl/lib/python3.7/json/encoder.py", line 199, in encode
    chunks = self.iterencode(o, _one_shot=True)
  File "/home/groups/mignot/miniconda3/envs/pl/lib/python3.7/json/encoder.py", line 257, in iterencode
    return _iterencode(o, 0)
  File "/home/groups/mignot/miniconda3/envs/pl/lib/python3.7/site-packages/wandb/util.py", line 480, in default
    return json.JSONEncoder.default(self, obj)
  File "/home/groups/mignot/miniconda3/envs/pl/lib/python3.7/json/encoder.py", line 179, in default
    raise TypeError(f'Object of type {o.__class__.__name__} '
TypeError: Object of type function is not JSON serializable

To Reproduce

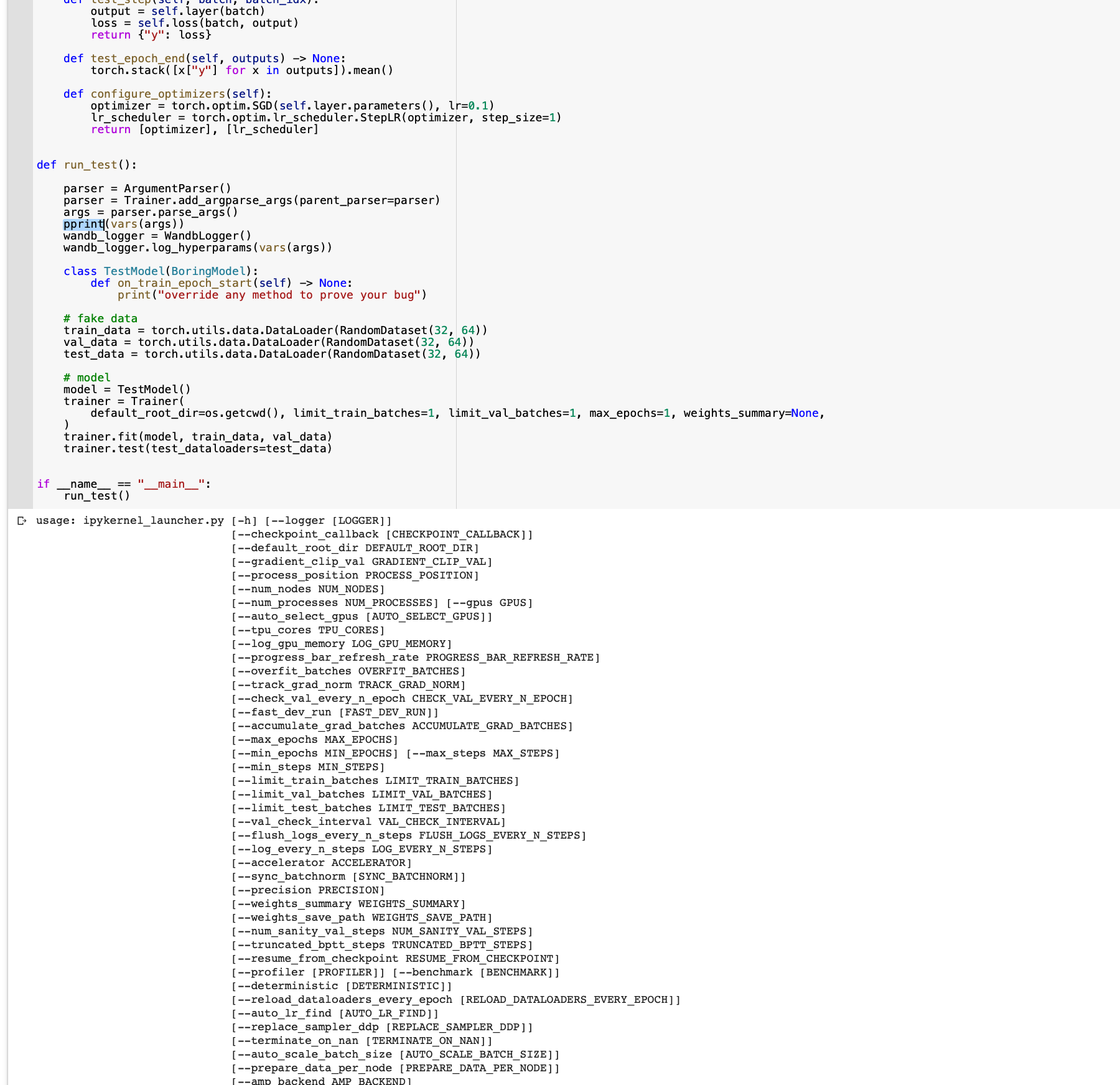

Run the following code snippet to reproduce:

from argparse import ArgumentParser
from pprint import pprint

from pytorch_lightning import Trainer
from pytorch_lightning.loggers import WandbLogger

if __name__ == "__main__":
    parser = ArgumentParser()
    parser = Trainer.add_argparse_args(parent_parser=parser)
    args = parser.parse_args()
    pprint(vars(args))

    wandb_logger = WandbLogger()
    wandb_logger.log_hyperparams(vars(args))

Expected behavior

Hyperparams are logged as usual without any TypeError.

Environment

* CUDA:
    - GPU:
    - available: False
    - version: 10.2
* Packages:
    - numpy: 1.19.1
    - pyTorch_debug: False
    - pyTorch_version: 1.6.0
    - pytorch-lightning: 1.0.2
    - tensorboard: 2.3.0
    - tqdm: 4.50.2
* System:
    - OS: Linux
    - architecture:
        - 64bit
        -
    - processor: x86_64
    - python: 3.7.9
    - version: #1 SMP Mon Jul 29 17:46:05 UTC 2019

Additional context

Pretty printing the arguments gives the following clue about the error:

{'accelerator': None,
 'accumulate_grad_batches': 1,
 'amp_backend': 'native',
 'amp_level': 'O2',
 'auto_lr_find': False,
 'auto_scale_batch_size': False,
 'auto_select_gpus': False,
 'automatic_optimization': True,
 'benchmark': False,
 'check_val_every_n_epoch': 1,
 'checkpoint_callback': True,
 'default_root_dir': None,
 'deterministic': False,
 'distributed_backend': None,
 'fast_dev_run': False,
 'flush_logs_every_n_steps': 100,
 'gpus': <function _gpus_arg_default at 0x7f26b7788f80>,
 'gradient_clip_val': 0,
 'limit_test_batches': 1.0,
 'limit_train_batches': 1.0,
 'limit_val_batches': 1.0,
 'log_every_n_steps': 50,
 'log_gpu_memory': None,
 'logger': True,
 'max_epochs': 1000,
 'max_steps': None,
 'min_epochs': 1,
 'min_steps': None,
 'num_nodes': 1,
 'num_processes': 1,
 'num_sanity_val_steps': 2,
 'overfit_batches': 0.0,
 'precision': 32,
 'prepare_data_per_node': True,
 'process_position': 0,
 'profiler': None,
 'progress_bar_refresh_rate': 1,
 'reload_dataloaders_every_epoch': False,
 'replace_sampler_ddp': True,
 'resume_from_checkpoint': None,
 'sync_batchnorm': False,
 'terminate_on_nan': False,
 'tpu_cores': <function _gpus_arg_default at 0x7f26b7788f80>,
 'track_grad_norm': -1,
 'truncated_bptt_steps': None,
 'val_check_interval': 1.0,
 'weights_save_path': None,
 'weights_summary': 'top'}

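I assume the issue comes from the gpus and tpu_cores values: when they are not explicitly supplied as arguments, they default to the function object _gpus_arg_default rather than a plain value, and a function cannot be JSON serialized.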
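To check that assumption without installing wandb, the failure can be reproduced with the standard json module alone. The sketch below is only an illustration: find_unserializable is a hypothetical helper name, and the _gpus_arg_default defined here is a stand-in for the Lightning function of the same name, not its real implementation.

```python
import json


def _gpus_arg_default(x):
    # Hypothetical stand-in for the Lightning helper of the same name.
    return x


def find_unserializable(params):
    """Return the keys whose values json.dumps cannot encode."""
    bad_keys = []
    for key, value in params.items():
        try:
            json.dumps(value)
        except TypeError:
            # Raised for functions and other objects json cannot encode.
            bad_keys.append(key)
    return bad_keys


# A reduced version of the pretty-printed arguments above.
params = {
    "gpus": _gpus_arg_default,
    "max_epochs": 1000,
    "tpu_cores": _gpus_arg_default,
}
print(find_unserializable(params))
```

Running the same helper on vars(args) from the reproduction script should point at the entries that hold a function instead of a value.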

All 14 comments

Trainer.add_argparse_args adds some functions to the args Namespace which are not JSON serializable, so an error is thrown when WandbLogger tries to save the hyperparameters of the run. I temporarily got around the issue by removing functions before calling save_hyperparameters, but this definitely needs a fix.

class MyModel(LightningModule):
    def __init__(self, hparams, *args, **kwargs):
        super().__init__()
        self.save_hyperparameters({k: v for k, v in vars(hparams).items() if not callable(v)})

Hey @neergaard,

Would you mind creating a test to reproduce this bug, based on the bug report model (https://github.com/PyTorchLightning/pytorch-lightning/blob/master/pl_examples/bug_report_model.py)?

Best regards,

T.C

Hi @tchaton sure, do you want me to just do it in a Colab notebook or do you want a gist with the script you linked to?

Hey @neergaard, I would prefer the gist :) It's easier to integrate into our tests :)

@tchaton For now, here's a gist with the test (https://gist.github.com/neergaard/ed0620ab9405b79d420b99db3e43605a). I've basically inserted the code snippet from my original post without deleting any of the bug report code, so it should still run and raise the TypeError.

Hey @neergaard,

Without the provided arguments, I can't use the gist :)

@tchaton That's why I asked whether you preferred a gist or a Colab notebook: the script as such does not work in a notebook, but the gist works from the command line.

How do I get the default arguments from the Trainer in a notebook?

Hey, you can provide the command line as a string :)

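from argparse import ArgumentParser
from pytorch_lightning import Trainer

# Pass the command line as a list of strings instead of reading sys.argv.
opt = "--name_1 arg_1 .... --name_n arg_n".split(" ")
parser = ArgumentParser()
parser = Trainer.add_argparse_args(parent_parser=parser)
args = parser.parse_args(opt)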
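The same trick can be tried with argparse alone, which makes it easy to test in a notebook. In this sketch the two options are registered by hand as hypothetical stand-ins for what Trainer.add_argparse_args would add; passing an empty list returns only the defaults, which is one way to get default arguments without a command line.

```python
import shlex
from argparse import ArgumentParser

parser = ArgumentParser()
# Hypothetical options standing in for the ones Trainer.add_argparse_args registers.
parser.add_argument("--max_epochs", type=int, default=1000)
parser.add_argument("--precision", type=int, default=32)

# An explicit list replaces sys.argv, so this also works inside a notebook.
cli = "--max_epochs 5 --precision 16"
args = parser.parse_args(shlex.split(cli))
print(vars(args))

# An empty list yields the defaults only.
defaults = parser.parse_args([])
print(vars(defaults))
```

shlex.split is used here instead of str.split so that quoted values containing spaces stay in one piece.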

@tchaton D'oh! Simple solution works great, thanks!

I've updated the gist, can you try it now?

Hey @neergaard ,

I will also update bug_report to add this trick :) Thanks for asking about it :)

Best regards

@tchaton thanks for helping out!

I can add that I investigated the issue more, and it doesn't seem to be a problem in WandB version 0.10.8, but it is an issue in version 0.10.7.

edit: what I mean is, using wandb==0.10.8 does not result in a TypeError, but I still think the _gpus_arg_default default value should be handled properly in PyTorch Lightning.

I will look into this this afternoon or tomorrow. Feel free to investigate and submit a PR if you find the bug :)

Hey @neergaard,

Feel free to have a look at the PR.

Best,

T.C