Pytorch-lightning: Strange loss graph with wandb after switching to LambdaLR scheduler (from transformers)

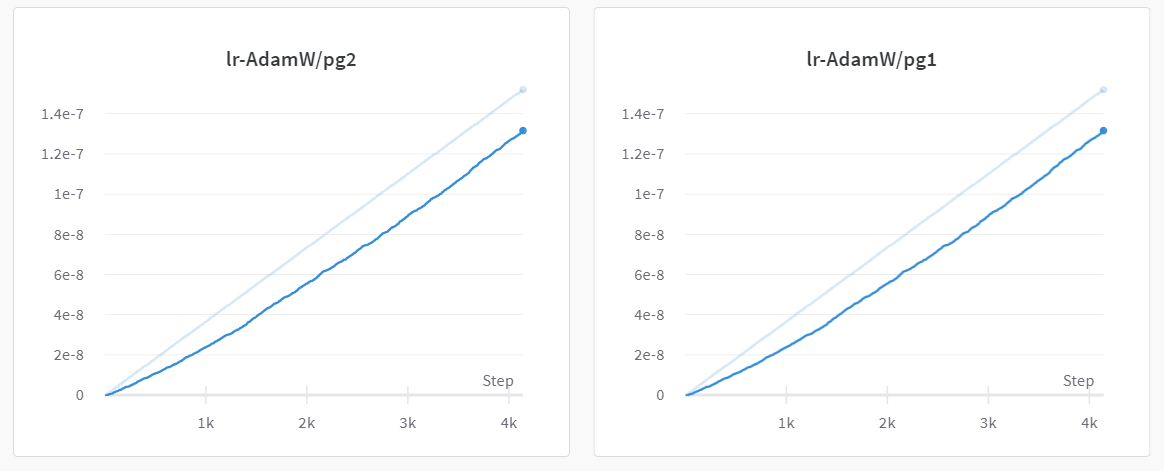

Hello. I'm seeing the graph above during my training, which is weird. My graph wasn't like this until I swap the optimizer and scheduler with those from transformers. I'm using wandb for the logging.

The only changes in the code is below

# ref: https://huggingface.co/transformers/_modules/transformers/trainer.html#Trainer

def create_optimizer_and_scheduler(

self, lr, num_training_steps, num_warmup_steps, weight_decay=0.01

):

"""

Setup the optimizer and the learning rate scheduler.

We provide a reasonable default that works well. If you want to use something else, you can pass a tuple in the

Trainer's init through :obj:`optimizers`, or subclass and override this method in a subclass.

"""

# optimizer

no_decay = ["bias", "LayerNorm.weight", "BatchNorm1d.weight"]

optimizer_grouped_parameters = [

{

"params": [

p

for n, p in self.named_parameters()

if not any(nd in n for nd in no_decay)

],

"weight_decay": weight_decay,

},

{

"params": [

p

for n, p in self.named_parameters()

if any(nd in n for nd in no_decay)

],

"weight_decay": 0.0,

},

]

optimizer = torch.optim.AdamW(

optimizer_grouped_parameters,

lr=lr,

)

# scheduler

lr_scheduler = transformers.get_linear_schedule_with_warmup(

optimizer,

num_warmup_steps=num_warmup_steps,

num_training_steps=num_training_steps,

)

return optimizer, lr_scheduler

# https://github.com/PyTorchLightning/pytorch-lightning/issues/3900#issuecomment-704291250

def configure_optimizers(self):

train_params = self.hparams.train

lr = train_params.lr

num_training_steps = train_params.max_steps * train_params.max_epochs

num_warmup_steps = train_params.warmup_steps

optimizer, lr_scheduler = self.create_optimizer_and_scheduler(

lr, num_training_steps, num_warmup_steps

)

scheduler = {'scheduler': lr_scheduler, 'interval': 'step'}

# BEFORE

# optimizer = torch.optim.AdamW(self.parameters(), lr=self.hparams.train.lr)

# lr_scheduler = torch.optim.lr_scheduler.ReduceLROnPlateau(optimizer)

# scheduler = {

# "scheduler": lr_scheduler,

# "reduce_on_plateau": True,

# "interval": "epoch",

# "monitor": "val_loss",

# }

return [optimizer], [scheduler]

lr is being logged properly

All 3 comments

@kyoungrok0517 what happens if you select global_step for the x-axis in wandb? (click on edit graph -> change step to global_step)

@kyoungrok0517 what happens if you select global_step for the x-axis in wandb? (click on edit graph -> change step to global_step)

Wow that fixed the issue. Thanks! But it'll be better if global_step is by default used as x-axis

Thanks! But it'll be better if global_step is by default used as x-axis

not possible as far as I know. wandb always chooses their own "step" as default.