Pytorch-lightning: the self.log problem in validation_step()

as doc say we should use self.log in last version,

but the loged data are strange if we change EvalResult() to self.log(on_epoch=True)

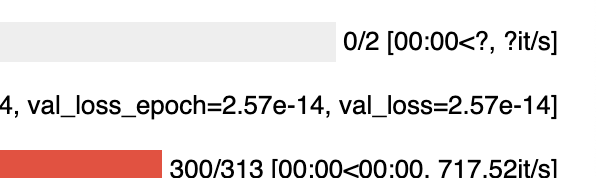

Then we check the data in tensorboard, the self.log() will only log the result of last batch each epoch, instead of the mean of them.

That is quite unreliable about this issue, it must be turned back to EvalResult() for correct experiments.

All 20 comments

Hi! thanks for your contribution!, great first issue!

I am having the same issue... Using PL 1.0.0. The progress bar does get the correct values for validation loss, on the other hand.

I found something wrong when saving the model. This is a serious bug.

To speed things up: here is a boring model demonstrating the error. In the TB output, observe that:

- the value for

val_lossandval_loss_epochare simply equal to the value ofval_loss_stepfor the last step (same asval_loss_stepin progress bar). - the values for

val_lossandval_loss_epochin TB are different from their equivalents in the progress bar.

So what's happening is that when logging to loggers, reduction is not applied.

This occurs in version 0.10.0 as well as 1.0.0

I don't understand how a bug this serious could've made it through all the tests from 0.10.0, through all the 1.0 rcs and all they way to the final version...

The Issue is still present in version 1.0.1.

I also found out that mean function used to calculate value at the and of the epoch (the value that is present in the progress bar) does not return the correct average value.

def validation_step(self, batch, batch_idx):

loss = self.step(batch)

self.log('batch_idx', batch_idx, prog_bar=True)

self.batch_idxs.append(batch_idx)

return loss

def validation_epoch_end(self, outputs: List[Any]) -> None:

super().validation_epoch_end(outputs)

print("batch_idxs mean", sum(self.batch_idxs) / len(self.batch_idxs))

print("batch_idxs torch mean", torch.mean(torch.tensor(self.batch_idxs, dtype=torch.float)))

self.batch_idxs = []

logged value = 31

progress bar value = 15.1

mean value = 15.5

torch mean value = 15.5

Here https://github.com/PyTorchLightning/pytorch-lightning/blob/09c2020a9325850bc159d2053b30c0bb627e5bbb/pytorch_lightning/trainer/evaluation_loop.py#L199 this private variable (_results) keeps last step_log. Maybe this is the problem, but I don’t know how to fix it yet.

I also find this problem, then I log it in validation_epoch_end and it seems ok

def validation_step(self,...):

return {'val_loss': loss}

def validation_epoch_end(self, outputs):

val_loss = torch.stack([x['val_loss'] for x in outputs]).mean()

dist.all_reduce(val_loss, op=dist.ReduceOp.SUM)

val_loss = val_loss / self.trainer.world_size

self.log('val_loss', val_loss, on_epoch=True, sync_dist=True)

return {'val_loss': val_loss,}

ok. good catch. fixing this now!

Running into the same problem with the logger only reporting the last value for the epoch rather than the average across the epoch. I was wondering why I was getting funky test scores 😅 .

Is this what you mean?

val_loss_epoch and val_loss are the same? but instead, val_loss should be the same as val_loss_step?

Just wrote a test and it looks like everything is correct, except that the val_loss gets overwritten by the val_loss_epoch by mistake. So:

- val_loss_step is correct

- val_loss_epoch is correct

- val_loss is incorrect

@williamFalcon Yes, that's correct. Maybe even val_loss_epoch is incorrect, per @Alek96's comment. Although the bigger issue is wrong values being sent to the (tensorboard of anything non pbar) logger.

def validation_step(self, batch, batch_idx):

loss = self.step(batch)

self.log('batch_idx', batch_idx, prog_bar=True)

self.batch_idxs.append(batch_idx)

return lossdef validation_epoch_end(self, outputs: List[Any]) -> None:

super().validation_epoch_end(outputs)

print("batch_idxs mean", sum(self.batch_idxs) / len(self.batch_idxs))

print("batch_idxs torch mean", torch.mean(torch.tensor(self.batch_idxs, dtype=torch.float)))

self.batch_idxs = []

Well... there is a bug in this code:

def validation_step(self, batch, batch_idx):

loss = self.step(batch)

self.log('batch_idx', batch_idx, prog_bar=True)

self.batch_idxs.append(batch_idx)

return loss

def validation_epoch_end(self, outputs: List[Any]) -> None:

super().validation_epoch_end(outputs)

print("batch_idxs mean", sum(self.batch_idxs) / len(self.batch_idxs))

print("batch_idxs torch mean", torch.mean(torch.tensor(self.batch_idxs, dtype=torch.float)))

self.batch_idxs = []

You return the loss but then compare the batch_idxs...

outputs has losses, not batch_idxs.

ie:

def validation_step(self, batch, batch_idx):

loss = self.step(batch)

self.log('batch_idx', batch_idx, prog_bar=True)

self.batch_idxs.append(batch_idx)

return loss # <---------- causes outputs to be losses not indexes

def validation_epoch_end(self, outputs: List[Any]) -> None:

outputs = outputs # <------------- losses not indexes!

ok, i think i found it. posting a fix now. Looks like the calculations are correct, but the wrong value got logged

Thanks for the fast fix.

To explain my intention:

You return the loss but then compare the batch_idxs...

outputs has losses, not batch_idxs.

In my understanding it should not matter if I returned loss value and then compared the batch_idxs. self.log method should work the same regardless of the value you pass in. That is way I called a method with a value that easily shows if the calculated mean is correct.

python

self.log('batch_idx', batch_idx, prog_bar=True)

The "bug" was not in the code, but in my understating of mean function. PyTorch lightning is using weighted_mean that is also taking in the account the size of each batch.

https://github.com/PyTorchLightning/pytorch-lightning/blob/f064682786d4e0dbbd2dfce488577a147306c656/pytorch_lightning/core/step_result.py#L446-L456

Boring_model that shows the behavior.

all good!

Please try on master now. Let me know if the errors are fixed.

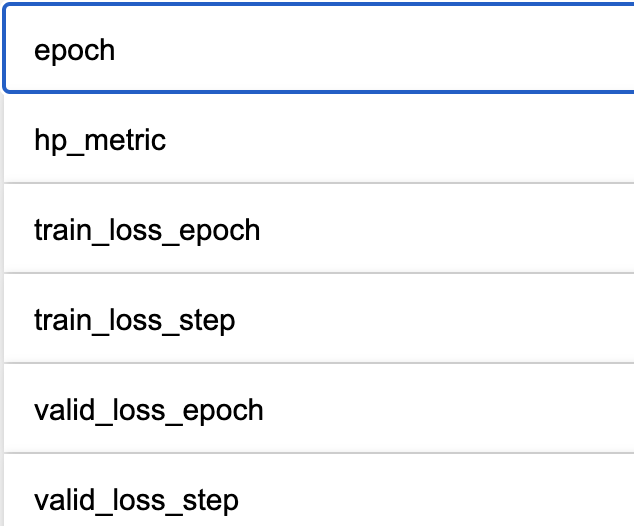

For reference, here is the new test for this:

as a bonus, got rid of the duplicate metric, metric_step chart without losing support for callbacks

@tchaton FYI

ok, mind verifying that this worked for you guys?

this is a critical bug, so, we'll do a minor release now to fix it for everyone.

Here's the new colab with master showing it's fixed:

https://colab.research.google.com/drive/1lEZms9QjRZ7kPosu_m7Gbr_sdBZ7exzg?usp=sharing

@williamFalcon it works now, thanks a lot.

Works here too :)

Just testing and it works for me too 💪

Most helpful comment

The Issue is still present in version 1.0.1.

I also found out that mean function used to calculate value at the and of the epoch (the value that is present in the progress bar) does not return the correct average value.

logged value = 31

progress bar value = 15.1

mean value = 15.5

torch mean value = 15.5