Pytorch-lightning: Redundant metric logging for TensorBoard

The following line causes logging a metric as a scalar in TensorBoard, although it is supposed to go only to the "hparams" section.

https://github.com/PyTorchLightning/pytorch-lightning/blob/01f131665aa5a7c86b984ece7214390076fd2a98/pytorch_lightning/loggers/tensorboard.py#L169

The resulting scalar graph is a vertical line with step 0 at x-axis and the metric going vertically upwards, which does not convey much information.

So I would suggest to remove this line

All 31 comments

Hi! thanks for your contribution!, great first issue!

@s-rog you added this line in #2974. Do you rememmber why this is needed?

@awaelchli Without that line tensorboard would not show any hparams. Also that line was there prior to my PR, I just made it actually log a metric so hparams show up.

Edit:

If you don't log an accompanying metric with hparams, then they won't show up in tensorboard, so this is intended behavior (until tensorboard can display hparams without a metric).

@s-rog Would you say this is a TensorBoard issue and we have this as a workaround? (sorry not a TB user myself)

@awaelchli I think tensorboard hparams is meant to be used for performance comparisons between trials and thus needs at least a metric to compare. But pl's tensorboard logger still allows users to log and display hparams without using metrics, so it's more of an added functionality for pl as opposed to an issue workaround for tensorboard as far as I understand.

For just pure hparam logging/display purposes there is still the simple text functionality of tensorboard (like how the test_tube logger logs hparams). But having 2 completely different methods to log hparams in one logger seems unintuitive...

so this is to log hyperparameters before training starts, when no metrics are available yet?

what if we logged the hyperparameters together with the first training/eval step?

If it's not possible, I suggest to close this issue. I remember this was discussed in older issues as well.

The code line I mentioned is not a TensorBoard problem, but it is just a redundant part. Commenting out the line does not affect the functionality of TensorBorad logging at all. Having the line, however, generates an extra metric graph, which is not informative at all, because the steps are not logged properly (all steps are set to zero as you can see in the code line). I think you can delete the line safely and have less clutter on the TensorBorad.

which tensorboard / pytorch version do you have?

pytorch 1.6.0

tensorboard 2.2.0

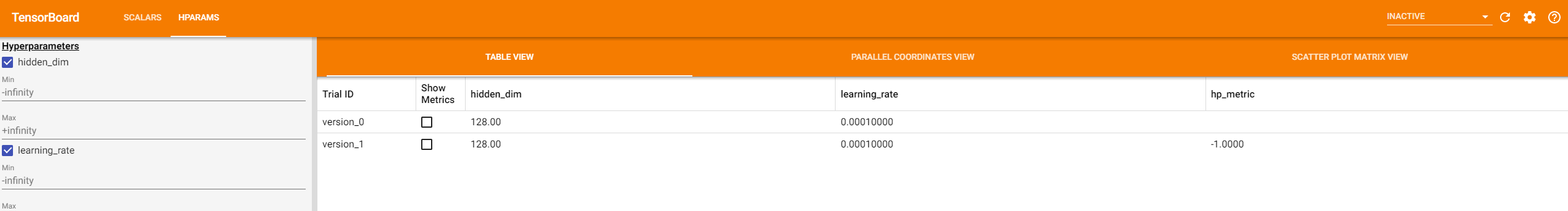

Indeed, if I remove the line tensorboard logs and shows the hyperparameters anyways. In the above screenshots, version 0 is with the line removed (does not log hp_metric) and version 1 is what we have on master.

and also when no val_loss is logged, so no metrics logged and it still works.

@s-rog what do you think? Am I missing something?

@awaelchli Does version_0 display hparams correctly with version_1 deleted? I'll do some more testing as well (feel free to assign me), but based on the behavior on your end it seems like perhaps hparams only need a metric key to display properly and not a metric value (before my PR it had neither).

Yes, I created version 0 with an empty log dir and having that one line of code commented out. I also removed all logging from training step, so the "scalars" tab did not even show up. only the hparams tab shows and it has the special hp_metric key but no value in the cell. Maybe you are right, it only needs the key not the value

@awaelchli so we have a custom hparam writer instead of using torch.utils.tensorboard.writer.add_hparams due to it writing to a different summary file as explained here https://github.com/PyTorchLightning/pytorch-lightning/issues/2406#issuecomment-673958176. Our current implementation matches the built in method which logs metric values, the question now is do we want to diverge from the pytorch implementation?

What would be the difference? I don't see a similar line like self.log_metrics(metrics, 0) in the original writer, so removing it should not be a big divergence?

It has this on master:

for k, v in metric_dict.items():

w_hp.add_scalar(k, v)

Our current implementation matches the built in method which logs metric values, the question now is do we want to diverge from the pytorch implementation?

I honestly don't know what is the right thing to do here :(

I wouldn't mind to have the metrics plotted as long as their values change with the steps. At the moment the steps of a metric don't change and are set always to 0, but the metric values change within step 0 (i.e., the values grow vertically).

@dschaehi If you log to hp_metric in your training or val steps/epochs, it will be updated in hparams (you should not be logging hparams multiple times). If you want to use your own or multiple keys (instead of hp_metric) you can disable it when initializing tensorboard logger and manually call log_hyperparams with your metrics (in on_fit_start or wherever you see fit).

Edit:

The vertical line means you're logging to step 0 instead of the current step, let me know if you're having any more issues with it.

@s-rog Actually I am using the method self.logger.log_hyperparams for logging a metric and that metric is logged to step 0 instead of the current step.

@dschaehi I see, for now try calling log_hyperparams in on_fit_start (or use the default call) then updating the metric in other logging steps.

Looking at the torch.utils.tensorboard.writer.add_hparams it also exclusively logs to step 0 (default when no step is entered). @awaelchli thoughts?

@s-rog I did as you suggest, but this didn't change the behavior.

I wasn't aware that TensorBoard logs hparms to step 0 (which in my opinion is redundant). I was used to Ray Tune's TensorBoard hparam logging, which does not call add_scalar (cf. https://github.com/ray-project/ray/blob/4e9888ce2f89689b31dc0b436467ba01789f249b/python/ray/tune/logger.py#L289-L293).

I am unable to replicate this behavior on my end, you'll have to share some minimal reproducible code for me to diagnose it.

What is the correct/intended way to get Tensorboard to load into HPARAMS and metric?

I am trying to save the best validation loss as a metric (not a scalar), but documentation is sparse on this.

@s-rog I now think that the code line I mentioned in my original post https://github.com/PyTorchLightning/pytorch-lightning/issues/4030#issue-718190759 is necessary to register the metric. Removing this did not allow the metric to show up in HPARAMS. I wonder whether there is a way to get around this, i.e., exclusively showing the metric in HPARAMS and not in SCALARS. Ray Tune could do this, as I mentioned https://github.com/PyTorchLightning/pytorch-lightning/issues/4030#issuecomment-707583293, perhaps because it is using TensorBoardX. If there is no immediate solution for this, then you can close this issue.

@igorbb

- Set

logger=TensorBoardLogger(default_hp_metric=False)and pass it topl.Trainer - In the

__init__method of your model class addself.save_hyperparameters("your_metric_1", "your_metric_2", ...)to register the hyperparams - Add

self.logger.log_hyperparams(params=self.hparams, metrics={"your_metric": value})anywhere you want to log the metric.

@dschaehi I see, so removing that line has side effects... I have not looked into tensorboardx, but I'm sure there are differences, thanks for looking into it.

@igorbb Check out our forums for questions!

@awaelchli Close? or do you think some changes are in order?

@awaelchli Close? or do you think some changes are in order?

we can close, though I must say this logging of hyperparameters in TB is not very satisfiying, also not knowing why the things are the way they are.

tensorboardx has almost the same implementation as native pytorch but allows for a step input

for k, v in metric_dict.items():

w_hp.add_scalar(k, v, global_step)

https://www.tensorflow.org/tensorboard/hyperparameter_tuning_with_hparams

the official tensorflow tutorial also logs with metrics

tensorboardx has almost the same implementation as native pytorch but allows for a step input

for k, v in metric_dict.items(): w_hp.add_scalar(k, v, global_step)https://www.tensorflow.org/tensorboard/hyperparameter_tuning_with_hparams

the official tensorflow tutorial also logs with metrics

In my opinion, allowing for a step input is more reasonable than logging to step zero.

@dschaehi can you submit a PR?

@s-rog I wish I had the time to go through the code in TensoBoradX and suggest changes for PyTorch Lightning, but at the moment I don't have the time for that. It would be nice if someone else could do it.