Pytorch-lightning: TensorBoardLogger log_hyperparameters fails when explicitly specified.

🐛 Bug

TensorBoardLogger's log_hyperparams fails with TypeError: log_hyperparams() missing 1 required positional argument: 'params' when the logger is explicitly specified. It behaves correctly when no logger is specified (even though TensorBoardLogger is the default logger).

To Reproduce

See code snippet:

import pytorch_lightning as pl

from pytorch_lightning import Trainer

# This is fine, even though TensorBoardLogger is the default logger.

trainer = Trainer()

# This breaks when `fit` is called, see stacktrace

trainer = Trainer(logger=pl.loggers.tensorboard.TensorBoardLogger)

trainer.fit(model, train_loader, val_loader)

Stacktrace (I removed some Hydra calls from the top of the trace that don't contribute anything). This doesn't happen when logger is not explicitly specified in the Trainer constructor.

Traceback (most recent call last):

File "train.py", line 65, in train

trainer.fit(model, train_loader, val_loader)

File "/opt/conda/lib/python3.7/site-packages/pytorch_lightning/trainer/trainer.py", line 301, in fit

results = self.accelerator_backend.train()

File "/opt/conda/lib/python3.7/site-packages/pytorch_lightning/accelerators/gpu_backend.py", line 50, in train

self.trainer.train_loop.setup_training(model)

File "/opt/conda/lib/python3.7/site-packages/pytorch_lightning/trainer/training_loop.py", line 124, in setup_training

self.trainer.logger.log_hyperparams(ref_model.hparams)

File "/opt/conda/lib/python3.7/site-packages/pytorch_lightning/utilities/distributed.py", line 27, in wrapped_fn

return fn(*args, **kwargs)

TypeError: log_hyperparams() missing 1 required positional argument: 'params'

Environment

Ran into issue on release 0.9.0 as well as current master ba01ec9dbf3bdac1a26cfbbee67631c3ff1fadad

- CUDA:
    - GPU:
        - GeForce GTX 1070
    - available: True
    - version: 10.1
- Packages:
    - numpy: 1.18.5
    - pyTorch_debug: False
    - pyTorch_version: 1.6.0
    - pytorch-lightning: 0.9.1rc3
    - tqdm: 4.46.0
- System:
    - OS: Linux
    - architecture:
        - 64bit
    - processor: x86_64
    - python: 3.7.7
    - version: #1 SMP Sun Mar 8 14:34:03 CDT 2020

Additional context

I'm explicitly specifying this because I have my own subclass of tensorboard's SummaryWriter that I would like TensorBoardLogger to use. I'm doing this by subclassing TensorBoardLogger and copying the experiment method, replacing the SummaryWriter with my own.

All 9 comments

Hi! Thanks for your contribution! Great first issue!

Follow-up: with the default logger (not explicitly specified), hparams are not logged. I'm using the newer hparams API, where the hparams attribute is not explicitly set.

@teddykoker take a look?

#2974, by the way, has already been merged into master but hasn't made it into a release yet; the current release implementation is broken.

Ran into issue on release 0.9.0 as well as current master ba01ec9

You need to initialize a logger instance

Trainer(logger=pl.loggers.tensorboard.TensorBoardLogger(save_dir))
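For anyone hitting the same TypeError, here is a minimal sketch of why passing the class instead of an instance produces exactly that message. It does not use pytorch-lightning at all: `DemoLogger`, `call_log_hyperparams` and `ensure_logger_instance` are hypothetical names invented for illustration, and the guard at the end is only a suggestion, not something the library is known to do.

```python
import inspect


class DemoLogger:
    """Hypothetical stand-in for a logger class; not part of pytorch-lightning."""

    def __init__(self, save_dir):
        self.save_dir = save_dir
        self.logged = None

    def log_hyperparams(self, params):
        # Record the hyperparameters so the call can be inspected afterwards.
        self.logged = dict(params)


def call_log_hyperparams(logger, hparams):
    """Mimic the call from the stack trace: logger.log_hyperparams(hparams)."""
    try:
        logger.log_hyperparams(hparams)
    except TypeError as err:
        return str(err)
    return None


def ensure_logger_instance(logger):
    """Hypothetical guard: reject a class passed where an instance is expected."""
    if inspect.isclass(logger):
        raise TypeError(
            f"expected a logger instance, got the class {logger.__name__}; "
            f"did you forget to call {logger.__name__}(...)?"
        )
    return logger


hparams = {"lr": 1e-3}

# Passing the class: the plain function is called, hparams is bound to `self`,
# and nothing is left to fill `params`.
class_error = call_log_hyperparams(DemoLogger, hparams)

# Passing an instance: `self` is bound automatically and hparams fills `params`.
instance = DemoLogger("logs")
instance_error = call_log_hyperparams(instance, hparams)

print(class_error)
print(instance_error, instance.logged)
```

With the class, the hyperparameters end up in the `self` slot, which is why the error complains about `params` rather than about the logger. A check like `ensure_logger_instance` in your own training script would surface the mistake before `fit` is called.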

Closing, everything works as it should. See https://colab.research.google.com/drive/1bvsyanpnMwf3RZerfybcH6w-cFNuduUw?usp=sharing for an example of using your own tensorboard logger.

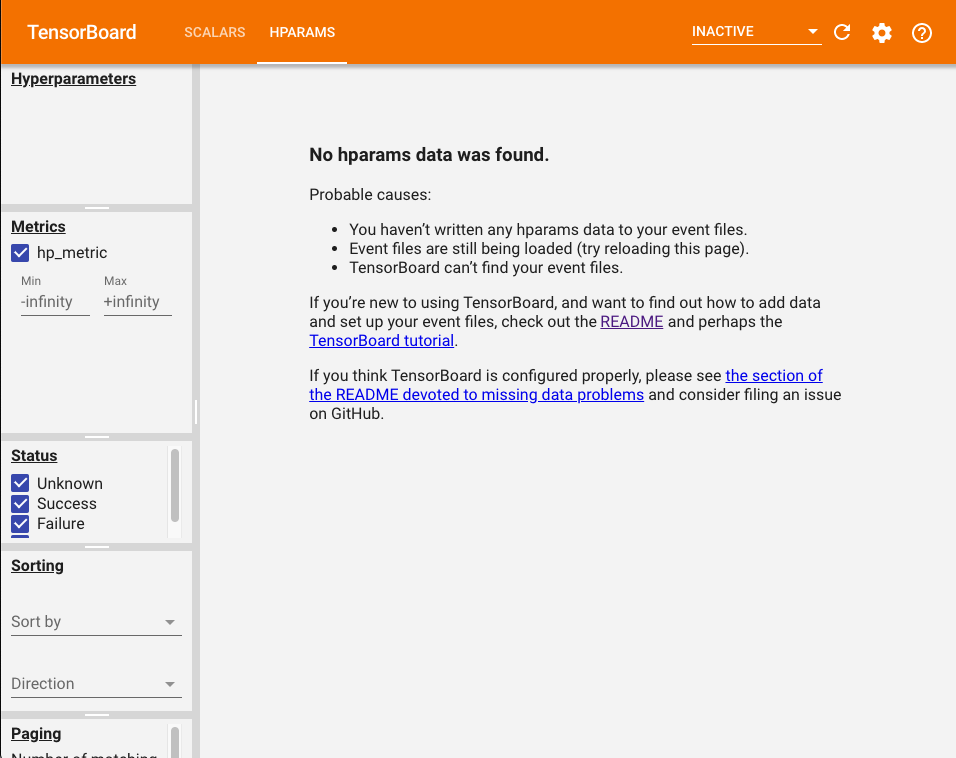

I wrote this to turn tensorboard on:

%load_ext tensorboard

%tensorboard --logdir .

Hyperparams are still not logged, as described in https://github.com/PyTorchLightning/pytorch-lightning/issues/3610#issuecomment-696884730

To be clear, I am still having the hyperparams issue mentioned by @vladimirbelyaev, but the whole constructor thing was totally user error on my part. That said, I shouldn't have brought up two separate issues in a single GitHub issue.
