PyTorch Lightning: Not able to train Lightning with TPU


Hi! Thanks for your contribution, great first issue!

@lezwon mind having a look? 🐰

This looks more like a Kaggle memory issue. Maybe try reducing the batch size? Also, could you make the notebook public? :)

@lezwon are you asking me?

Oh shoot :D. Accidentally tagged someone else. Yep, I was referring to you :]

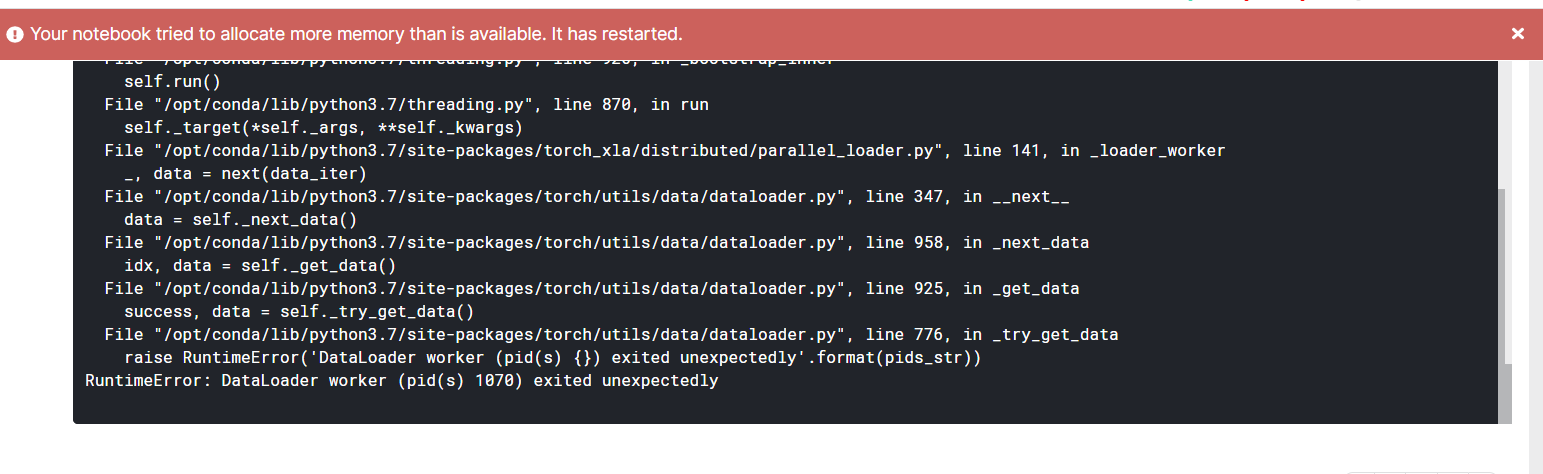

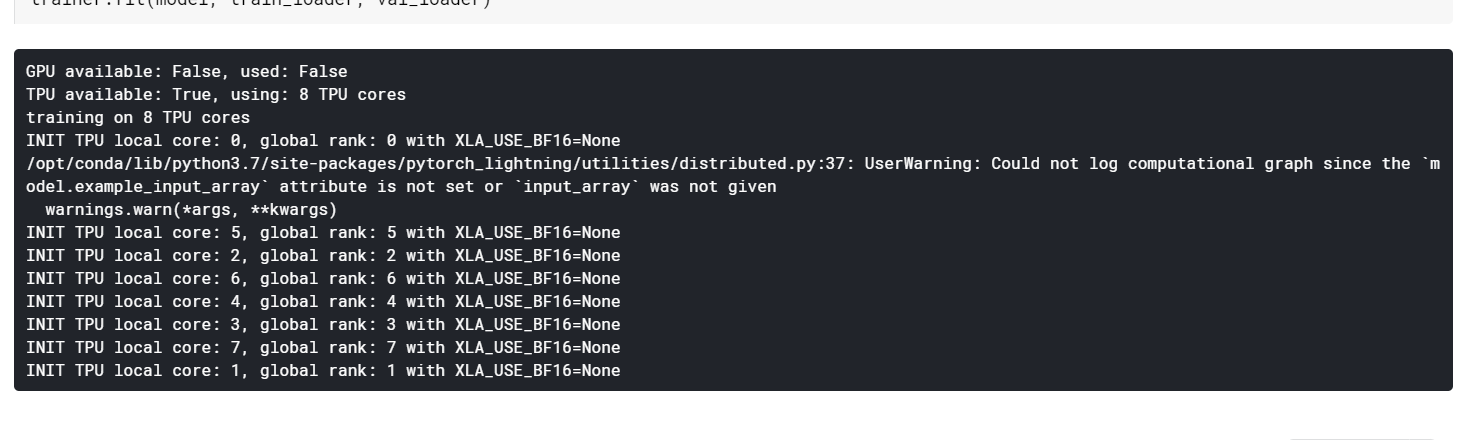

I tried a batch_size of 512, but it's still the same. I also tried to train on this 98 GB dataset on a Kaggle GPU, but I get a CUDA device-side assert error. I made the notebook public. Have a look and please let me know where I am making a mistake.

The batch size of 1024 or 512 is likely the problem. I tried a batch size of 32 and it seemed to work fine.

I can't see any difference after making the changes. Only this is appearing.

@lezwon Can you please post your changes along with the progress bar?

Try this one: https://www.kaggle.com/lezwon/landmark-detection

Thanks @lezwon, it seems like it is training now.

I saw you added these lines:

```
!pip uninstall typing -y
!pip install -qU git+https://github.com/PyTorchLightning/pytorch-lightning.git
```

and changed the batch size to 16 to get the model training on the TPU?

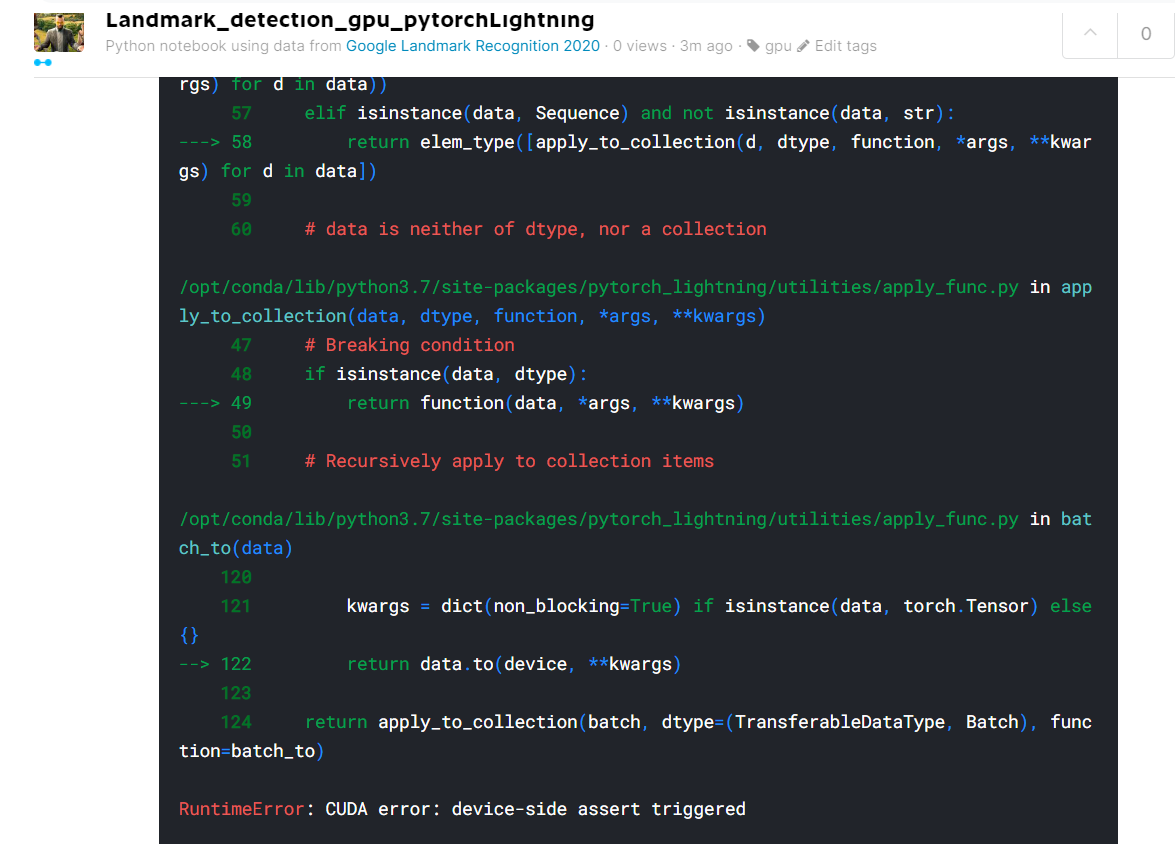

I'm also getting `RuntimeError: CUDA error: device-side assert triggered` while running this notebook on a GPU.

I installed PyTorch Lightning from master because TPU support is more stable there. As for the batch size, I ran into an out-of-memory issue after a while with 32, hence 16.
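Stepping the batch size down until training fits (1024 and 512 failed, 32 ran for a while, 16 was stable) can be sketched as a simple halving search. This is a minimal illustration, not a PyTorch Lightning API: `largest_fitting_batch_size` and the `fits` probe are hypothetical names, and in practice `fits` would have to run real training steps on the TPU and report whether they ran out of memory.

```python
from typing import Callable


def largest_fitting_batch_size(
    fits: Callable[[int], bool], start: int = 1024, minimum: int = 1
) -> int:
    """Halve the batch size until `fits` reports that training succeeds.

    `fits` is a hypothetical probe: it should run training step(s) at the
    given batch size and return False if they hit an out-of-memory error.
    """
    batch_size = start
    while batch_size >= minimum:
        if fits(batch_size):
            return batch_size
        batch_size //= 2  # try half the batch size next
    raise RuntimeError(f"no batch size >= {minimum} fits in memory")


# Simulated probe: pretend only batch sizes up to 16 fit in memory.
print(largest_fitting_batch_size(lambda bs: bs <= 16))  # → 16
```

Because the out-of-memory error at 32 only appeared after a while, a probe that runs a single step can be too optimistic; running several steps, or dropping one more size for headroom as was done here, is the safer choice.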

@rohitgr7 any idea about the cuda error?

There shouldn't be anything about CUDA in a TPU run... mind tracing it to the source?

I think it occurs when he runs it on a GPU.

@soumochatterjee just label-encode `landmark_id` and it will work.
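A likely reason label encoding helps (assumed here, not confirmed in the thread): a CUDA device-side assert is a common symptom of a class index that is negative or not smaller than the number of model outputs reaching a loss such as `torch.nn.CrossEntropyLoss`, and raw `landmark_id` values are sparse IDs rather than indices `0..n-1`. The sketch below uses plain Python so it runs without pandas or scikit-learn; `label_encode` and `check_targets` are hypothetical helper names. It numbers classes in sorted order, as `sklearn.preprocessing.LabelEncoder` does, and adds a CPU-side range check that fails with a readable message instead of an opaque GPU assert.

```python
from typing import Dict, List, Sequence, Tuple


def label_encode(ids: Sequence[int]) -> Tuple[List[int], Dict[int, int]]:
    """Map arbitrary (possibly sparse) class ids to contiguous indices 0..n-1."""
    classes = sorted(set(ids))  # sorted order, like sklearn's LabelEncoder
    mapping = {cls: idx for idx, cls in enumerate(classes)}
    return [mapping[i] for i in ids], mapping


def check_targets(targets: Sequence[int], num_classes: int) -> None:
    """Raise on the CPU if any target falls outside [0, num_classes)."""
    bad = [t for t in targets if not 0 <= t < num_classes]
    if bad:
        raise ValueError(
            f"{len(bad)} target(s) outside [0, {num_classes}), e.g. {bad[0]}"
        )


# Hypothetical raw landmark ids: large and non-contiguous.
raw_ids = [138982, 7, 20409, 7, 138982]
encoded, mapping = label_encode(raw_ids)
print(encoded)  # → [2, 0, 1, 0, 2]
check_targets(encoded, num_classes=len(mapping))  # passes: all in [0, 3)
```

With this encoding, the model's final layer would need `len(mapping)` outputs, one per unique landmark.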

Also, you need to fix this:

```python
self.aug = albumentations.Compose([
    albumentations.Resize(img_height, img_width, always_apply=True),
    albumentations.Normalize(mean, std, always_apply=True),
    Cutout(),
    albumentations.ShiftScaleRotate(shift_limit=0.0625,
                                    scale_limit=0.1,
                                    rotate_limit=5,
                                    p=0.9)
])
```

Since `Cutout` here is not from albumentations, you will get an error later on. Use `albumentations.Cutout()` instead.

@soumochatterjee is your issue resolved?

Yes @lezwon, thanks for all the help.