Pytorch-lightning: mlflow training loss not reported until end of run

I think I'm logging correctly, this is my training_step

result = pl.TrainResult(loss)

result.log('loss/train', loss)

return result

and validation_step

result = pl.EvalResult(loss)

result.log('loss/validation', loss)

return result

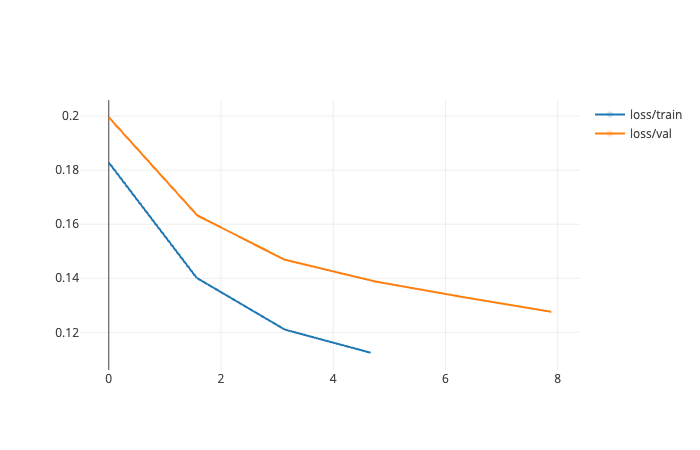

The validation loss is updated in mlflow each epoch, however the training loss isn't displayed until training has finished. Then it's available for every step. This may be a mlflow rather than pytorch-lighting issue - somewhere along the line it seems to be buffered?

Versions:

pytorch-lightning==0.9.0

mlflow==1.11.0

Edit: logging TrainResult with on_epoch=True results in the metric appearing in mlflow during training, it's only the default train logging which gets delayed. i.e.

result.log('accuracy/train', acc, on_epoch=True)

is fine

All 8 comments

When using the minimal example provided in the linked issue, and using the default training logging shown above, I don't see the behaviour described.

I can sometimes see a discrepancy between the reported steps for each metrics, but I suspect this is to do with MLFlow and not the PL logger.

@david-waterworth could you elaborate on a few points?

- When you set up the MLFLowLogger, if your

tracking_urioverhttp:or usingfile:? - If

http, is the tracking server remote? - How long does a model training run typically take?

- does this behaviour consistently happen even when refreshing the MLFlow page?

@patrickorlando

When you set up the MLFLowLogger, if your tracking_uri over http: or using file:?

I'm using file i.e. mlflow = loggers.MLFlowLogger("Transformer")

How long does a model training run typically take?

10-20 minutes

does this behavior consistently happen even when refreshing the MLFlow page?

Yes

I've tried to reproduce this but cant seem to. I can confirm that the MLFlow logger is logging metrics at the end of each epoch and for me they show up in the MLFlow UI as I refresh the page.

Do you have a working code sample that can reproduce the issue?

So I can actually see the behaviour you've described, but not when using the minimal example in #3393. I'll try to work out why.

So I _think_ this is because of the default behaviour of the TrainResult and the way row_log_interval works. And it only appears if the number of batches per epoch is less than row_log_interval

By default TrainResult logs on step and not on epoch.

https://github.com/PyTorchLightning/pytorch-lightning/blob/aaf26d70c4658e961192ba4c408558f1cf39bb18/pytorch_lightning/core/step_result.py#L510-L517

When logging only per step, the logger connector only logs when the batch_idx is a multiple of row_log_interval. However if you don't have more than row_log_interval batches, the metrics are not logged.

https://github.com/PyTorchLightning/pytorch-lightning/blob/aaf26d70c4658e961192ba4c408558f1cf39bb18/pytorch_lightning/trainer/logger_connector.py#L229-L237

@david-waterworth Do you have less than 50 batches per epoch in your model? can you try setting row_log_interval to be less than the number of train batches to confirm whether the issue is caused by this?

@patrickorlando yes I have 38 batches per epoch. I set row_log_interval=1 and now the training step metrics are being displayed as they're generated.

yes I have 38 batches per epoch. I set row_log_interval=1 and now the training step metrics are being displayed as they're generated.

That makes sense now :)

@david-waterworth Should we close this? or is there something left unresolved?

Thanks for the assistance, no nothing unresolved.