Pytorch-lightning: Logging hyperparams and metrics

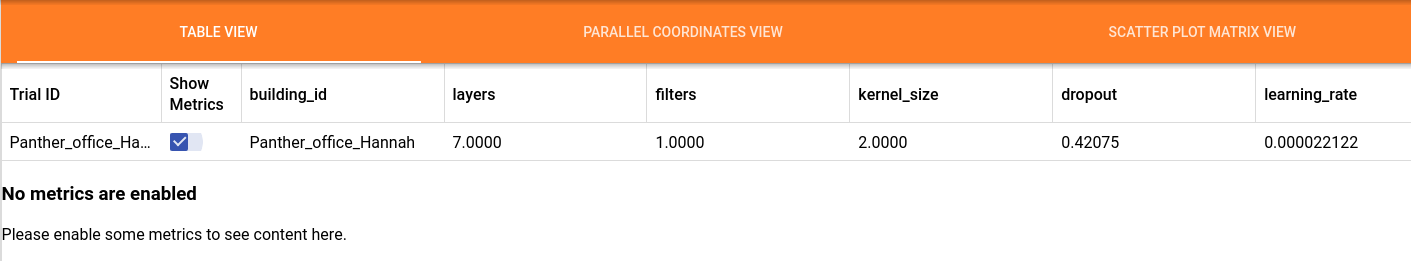

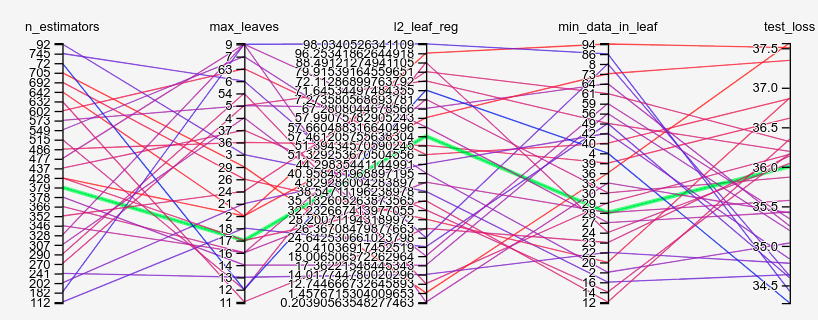

My question is: how do I log both hyperparams and metrics so that TensorBoard works "properly"? I've copied pytorch_lightning.loggers.TensorBoardLogger into a catboost/hyperopt project, and with the code below, called after each iteration, I get the result I'm after: on the TensorBoard HPARAMS page both the hyperparameters and the metrics appear, and I can view the Parallel Coordinates View etc.

    self.logger.log_hyperparams(
        params=dict(n_estimators=n_estimators, max_leaves=max_leaves, l2_leaf_reg=l2_leaf_reg, min_data_in_leaf=min_data_in_leaf),
        metrics=dict(val_loss=val_loss, train_loss=train_loss))

However, when I follow the Lightning tutorials, only the hyperparams are logged. No metrics are attached, so the charts don't display.

Is there something additional I should be doing to ensure that log_hyperparams receives the metrics in on_train_end (or wherever is appropriate, since on_train_end also doesn't appear to pass outputs)?

Edit:

I can see in run_pretrain_routine that log_hyperparams is invoked with no metrics:

    # log hyper-parameters
    if self.logger is not None:
        # save exp to get started
        self.logger.log_hyperparams(ref_model.hparams)
        self.logger.save()

This seems to be the wrong place to call log_hyperparams. For TensorBoard at least, it should really be called after training, together with the validation loss.

Edit 2:

And my example, which does work, only sort of works. Looking closer, it seems to treat all the hyperparameters as categorical (i.e. they're not ordered). Examples that use Keras callbacks define the hyperparameters and metrics first (i.e. their type and range).

All 16 comments

What I did is override the log_hyperparams function in my own logger so that it does the right thing.

I agree with you that Lightning invokes log_hyperparams in the wrong place, but the devs believe otherwise.

But anyway, you can just have your own logger that inherits from TensorBoardLogger.

Also you need to log metrics yourself manually somewhere else for this to work.

Typically that is done in validation_end, which is why I didn't include it in the function.

    @rank_zero_only
    def log_hyperparams(self, params: Dict[str, Any], metrics: Dict[str, Any] = None):
        # HACK: just log hparams to text when there's no metrics
        if metrics is None:
            self.writer.add_text("config", str(params))
        else:
            from torch.utils.tensorboard.summary import hparams as HP
            params = self.prepare_hparams(params)
            exp, ssi, sei = HP(params, metrics)
            writer = self.writer._get_file_writer()
            writer.add_summary(exp)
            writer.add_summary(ssi)
            writer.add_summary(sei)

Hi @versatran01, thanks for your answer. We agree that this is very unfortunate, but our main concern is that if the training process is killed, we won't have logged any hyperparams, which is quite bad. This is the main reason we log them at the beginning instead of waiting for the end, when we have all the metrics.

What do you propose? How and where should we call it?

But this essentially makes the whole hparams feature unusable for the normal case, right? In my setting, hparams are always logged using sacred, and as text to TensorBoard as in the code above. I only log hparams together with valid metrics, which is what the feature is designed for: comparing multiple runs. My suggestion is to not log them at the beginning at all, and to let the user decide when to log them.

I think saving hparams is a valid concern, but Lightning should use the right tool for that; TensorBoard hparams is not designed for this purpose.

At least for my use case, I log metrics in validation_end and call log_hyperparams with the best metric in logger.finalize, but I cannot speak for others.
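The workflow described in the comment above (log metrics on every validation run, then emit hparams once with the best metric in finalize) could be sketched roughly as follows. This is a minimal illustration only: `BestMetricHparamsLogger` and its `hparams_records` list are hypothetical stand-ins that keep everything in memory, and the `best_val_loss` key is an assumed name. A real implementation would subclass TensorBoardLogger and write to the event file instead.

```python
from typing import Any, Dict, List, Optional, Tuple


class BestMetricHparamsLogger:
    """Hypothetical in-memory stand-in for a logger (not a Lightning class)."""

    def __init__(self, hparams: Dict[str, Any], mode: str = "min") -> None:
        self.hparams = dict(hparams)
        self.mode = mode  # "min" for losses, "max" for scores
        self.best: Optional[float] = None
        # What would be handed to the TensorBoard HPARAMS plugin.
        self.hparams_records: List[Tuple[Dict[str, Any], Dict[str, float]]] = []

    def log_metrics(self, metrics: Dict[str, float]) -> None:
        # Called after each validation run; remember the best val_loss so far.
        value = metrics["val_loss"]
        improved = self.best is None or (
            value < self.best if self.mode == "min" else value > self.best
        )
        if improved:
            self.best = value

    def finalize(self, status: str) -> None:
        # Called once at the end; only now are hparams paired with a real metric.
        if self.best is not None:
            self.hparams_records.append(
                (self.hparams, {"best_val_loss": self.best})
            )


logger = BestMetricHparamsLogger({"lr": 1e-3, "max_leaves": 31})
for val_loss in (0.9, 0.4, 0.6):
    logger.log_metrics({"val_loss": val_loss})
logger.finalize("success")
```

The trade-off raised earlier in the thread still applies to this pattern: if the process is killed before finalize runs, no hparams record is written at all.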

okay, I'll bring this up for discussion internally :) thanks @versatran01

My solution has been to switch to MLflow. I've decided logging should focus on recording the experimental data; I'll then generate reports, parallel coordinates charts, etc. afterwards using a separate tool. I think the TensorBoard approach of requiring the data to be logged in a specific manner to support a plugin isn't ideal.

I also like how you can nest contexts in MLflow, so you can create a study record with child trial records. That matches the Optuna structure better than some of the other tools, which seem to treat a trial as a single experiment and use tags to group them.

cc: @PyTorchLightning/core-contributors

in my duplicate issue: #2971 I proposed a solution of a placeholder metric that can be updated at the end of training, something like hp_metric.

any thoughts? @justusschock

currently the default behavior attempts to save hparams incorrectly on training start, which causes future hparams that are manually and correctly saved to not work regardless.

I think that's a way we could handle this. But I also think the placeholder should live in the logger itself, not in the module, so nothing should change for the module. Are you interested in taking this, @s-rog?

yeah in log_hyperparams metrics should be able to take a scalar that will get logged with a default key of hp_metric

(if dict then it will log with the user specified key)

at the end of training the user can then call log_hyperparams again with the actual scalar if they want to compare in tensorboard

sound good? @justusschock

I think you should be able to call it as many times as you want, and it will always just be overwritten. Then you can log it during finalize?
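The placeholder idea discussed above might look something like the sketch below. It is not the code from the eventual PR: `normalize_metrics` and `InMemoryHparamsLogger` are hypothetical names, the in-memory `record` attribute stands in for the TensorBoard event file, and the sentinel value of -1.0 is an assumption (the thread only fixes the key name hp_metric).

```python
from typing import Any, Dict, Optional, Tuple, Union

DEFAULT_KEY = "hp_metric"   # default key proposed in the thread
PLACEHOLDER_VALUE = -1.0    # assumption: the thread does not specify a sentinel

Metrics = Union[float, Dict[str, float]]


def normalize_metrics(metrics: Optional[Metrics] = None) -> Dict[str, float]:
    """Turn None, a scalar, or a dict into the dict that gets logged."""
    if metrics is None:
        # Nothing known yet (training start): log a placeholder.
        return {DEFAULT_KEY: PLACEHOLDER_VALUE}
    if isinstance(metrics, dict):
        # User-specified keys are kept as they are.
        return dict(metrics)
    # A bare scalar is logged under the default key.
    return {DEFAULT_KEY: float(metrics)}


class InMemoryHparamsLogger:
    """Hypothetical stand-in; a later call simply overwrites the earlier record."""

    def __init__(self) -> None:
        self.record: Optional[Tuple[Dict[str, Any], Dict[str, float]]] = None

    def log_hyperparams(self, params: Dict[str, Any],
                        metrics: Optional[Metrics] = None) -> None:
        self.record = (dict(params), normalize_metrics(metrics))


logger = InMemoryHparamsLogger()
params = {"lr": 1e-3}

logger.log_hyperparams(params)         # at training start: placeholder metric
at_start = logger.record

logger.log_hyperparams(params, 0.42)   # at the end: real scalar replaces it
at_end = logger.record
```

Logging at the start keeps the hparams on disk even if the run is killed, while the second call supplies the metric that the HPARAMS comparison views need.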

yeah that's what I meant, I'll work on a PR

@justusschock is there a reason why hparams is written like:

    exp, ssi, sei = hparams(params, metrics)
    writer = self.experiment._get_file_writer()
    writer.add_summary(exp)
    writer.add_summary(ssi)
    writer.add_summary(sei)

instead of just:

    self.experiment.add_hparams(params, metrics)

I'm not entirely sure what it was, but I recall there was an issue with self.experiment.add_hparams.

@s-rog and @justusschock the problem with using self.experiment.add_hparams is this line https://github.com/pytorch/pytorch/blob/e182ec97b3ce7980b3901c8d162cfa63bd334ce8/torch/utils/tensorboard/writer.py#L313, which will create a new summary file just for the hparams. That's why we need to call

    exp, ssi, sei = hparams(params, metrics)
    writer = self.experiment._get_file_writer()
    writer.add_summary(exp)
    writer.add_summary(ssi)
    writer.add_summary(sei)

to log the hparams in the same file as all other scalars.

@SkafteNicki Thanks for the clarification, I'll keep the original implementation.

Closing, as the TB logger hparams works properly now. Re-open if you're having issues again.
