Pytorch-lightning: epoch_end Logs Default to Steps Instead of Epochs

🐛 Bug

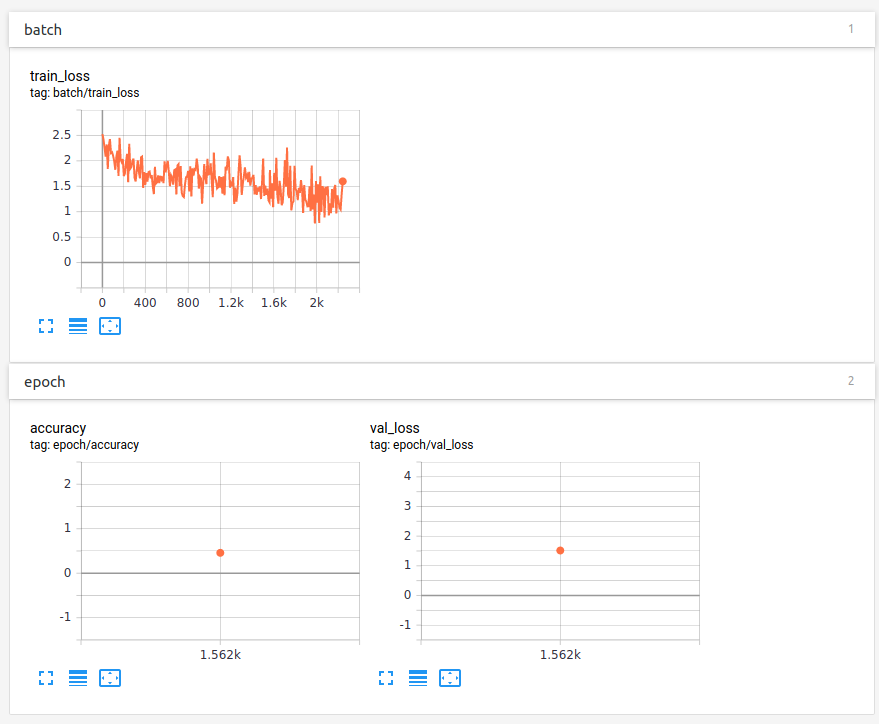

Metrics logged from `validation_epoch_end` are recorded at the global step (the number of training batches processed) instead of the epoch number.
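To make the mismatch concrete, here is a toy pure-Python model. It is not Lightning's code, and the function `simulate` and its parameters are hypothetical. The global step advances once per training batch, and an epoch-end metric is written at whatever the global step is when the epoch finishes. Lightning's exact step value at epoch end may be offset by one from this model.

```python
# Toy model of step-indexed logging (hypothetical, not Lightning's implementation).
# global_step advances once per training batch; the epoch-end metric is written
# at whatever global_step is current when the epoch finishes.

def simulate(num_epochs, batches_per_epoch):
    """Return the x-axis values an epoch-end metric gets: step-indexed vs epoch-indexed."""
    global_step = 0
    step_indexed = []
    epoch_indexed = []
    for epoch in range(num_epochs):
        for _ in range(batches_per_epoch):
            global_step += 1  # one optimizer step per training batch
        # default behavior reported in this issue: x-axis value is the global step
        step_indexed.append(global_step)
        # expected behavior: x-axis value is the epoch number
        epoch_indexed.append(epoch)
    return step_indexed, epoch_indexed


steps, epochs = simulate(num_epochs=3, batches_per_epoch=100)
print(steps)   # [100, 200, 300]
print(epochs)  # [0, 1, 2]
```

With 100 batches per epoch, the epoch-level points sit at x = 100, 200, 300 rather than 0, 1, 2.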

To Reproduce

Steps to reproduce the behavior:

- Create a LightningModule with the functions below.
- Train the module for one or more epochs.
- Run TensorBoard.
- Note the step (x-axis value) of the metrics logged in `validation_epoch_end`.

Code Sample

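```python
import torch
import pytorch_lightning as pl


# Class name is illustrative; forward(), cross_entropy_loss() and accuracy()
# are assumed to be defined elsewhere in the module.
class LitClassifier(pl.LightningModule):

    def training_step(self, train_batch, batch_idx):
        x, y = train_batch
        y_hat = self.forward(x)
        loss = self.cross_entropy_loss(y_hat, y)
        accuracy = self.accuracy(y_hat, y)  # computed but not logged
        logs = {'batch/train_loss': loss}
        return {'loss': loss, 'log': logs}

    def validation_step(self, val_batch, batch_idx):
        x, y = val_batch
        y_hat = self.forward(x)
        loss = self.cross_entropy_loss(y_hat, y)
        accuracy = self.accuracy(y_hat, y)
        return {'batch/val_loss': loss, 'batch/accuracy': accuracy}

    def validation_epoch_end(self, outputs):
        # Average the per-batch validation metrics over the whole epoch.
        avg_loss = torch.stack([x['batch/val_loss'] for x in outputs]).mean()
        avg_accuracy = torch.stack([x['batch/accuracy'] for x in outputs]).mean()
        # Entries under 'log' go to the logger; without a 'step' key they are
        # recorded at the current global step, not the epoch (the bug reported here).
        tensorboard_logs = {'epoch/val_loss': avg_loss, 'epoch/accuracy': avg_accuracy}
        return {'avg_val_loss': avg_loss, 'accuracy': avg_accuracy, 'log': tensorboard_logs}
```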

Version

v0.7.1

Expected behavior

Metrics logged in `*_epoch_end` functions should use the epoch number as their step (the x-axis in TensorBoard).

The documentation is unclear on this. The Loggers page is empty, the LightningModule class documentation doesn't describe logging in detail, and the example in the Introduction Guide, `self.logger.summary.scalar('loss', loss)`, fails with `AttributeError: 'TensorBoardLogger' object has no attribute 'summary'`.
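One possible direct workaround, sketched under assumptions: with `TensorBoardLogger`, `self.logger.experiment` is the underlying `torch.utils.tensorboard.SummaryWriter`, and `SummaryWriter.add_scalar(tag, scalar_value, global_step=None)` accepts an explicit step, so an epoch-end hook could pass `self.current_epoch`. So that the sketch runs without TensorBoard installed, `RecordingWriter` is a hypothetical stand-in that only mimics the `add_scalar` call shape, and `log_epoch_metrics` is a hypothetical helper.

```python
# RecordingWriter is a hypothetical stand-in for torch.utils.tensorboard.SummaryWriter;
# only the add_scalar(tag, scalar_value, global_step) call shape is mimicked.
class RecordingWriter:
    def __init__(self):
        self.records = []  # (tag, value, global_step) tuples in call order

    def add_scalar(self, tag, scalar_value, global_step=None):
        self.records.append((tag, float(scalar_value), global_step))


def log_epoch_metrics(writer, metrics, epoch):
    """Write each epoch-level metric with the epoch as its step (hypothetical helper).

    Inside a LightningModule using TensorBoardLogger, the analogous call would be
    self.logger.experiment.add_scalar(tag, value, global_step=self.current_epoch).
    """
    for tag, value in metrics.items():
        writer.add_scalar(tag, value, global_step=epoch)


writer = RecordingWriter()
# Made-up per-epoch values, purely to exercise the helper.
for epoch, (loss, acc) in enumerate([(0.9, 0.60), (0.7, 0.72)]):
    log_epoch_metrics(writer, {'epoch/val_loss': loss, 'epoch/accuracy': acc}, epoch)

print(writer.records)
```

Writing to the experiment object directly bypasses whatever aggregation Lightning applies to its own `log` dict, so it is best kept to epoch-level metrics.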

Is there a workaround for this issue?

Comments

Looks like the LightningModule documentation for `validation_epoch_end` has a note about this: add a `step` key to the log dict, as in `'log': {'val_acc': val_acc_mean.item(), 'step': self.current_epoch}`. This works correctly as a workaround. Perhaps this should be the default for epoch-end logging?
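The workaround implies a simple rule: an explicit `step` entry in the log dict overrides the global step. Below is a simplified, hypothetical model of that rule. It is not Lightning's source, and `resolve_step` is an illustrative name.

```python
# Hypothetical model of the step-selection rule described in the comment above:
# an explicit 'step' entry in the log dict wins; otherwise the global step is used.

def resolve_step(log_dict, global_step):
    """Return (step, metrics) without mutating the caller's dict."""
    metrics = dict(log_dict)          # copy so the caller's dict is untouched
    step = metrics.pop('step', None)  # 'step' is removed so it isn't plotted as a metric
    if step is None:                  # 'is None', not falsiness: epoch 0 is a valid step
        step = global_step
    return step, metrics


# Default: no 'step' key, so the epoch-end metric lands on the global step.
print(resolve_step({'val_acc': 0.81}, global_step=300))             # (300, {'val_acc': 0.81})
# Workaround: pass the epoch explicitly.
print(resolve_step({'val_acc': 0.81, 'step': 2}, global_step=300))  # (2, {'val_acc': 0.81})
```

The `is None` check matters because logging `'step': self.current_epoch` on the first epoch passes 0, which a plain truthiness test would silently replace with the global step.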

In the current version of PyTorch Lightning we are encouraged to log statistics with `self.log()` rather than by returning a dictionary as in the previous comment. Is there a way to control the step with the new `self.log()` method? I cannot see an obvious one.
