Prisma1: [Mongo] improve relational link design to enhance performance

Is your feature request related to a problem? Please describe.

Consider the following mongodb datamodel:

type A {

id: ID! @id

name: String!

bs: [B!]!

}

type B {

id: ID! @id

title: String!

a: A! @relation(link: INLINE)

}

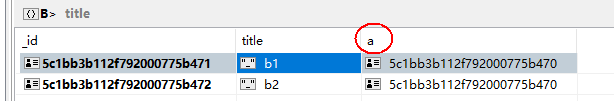

It generates a mongo database like this:

Note that in B the foreign key field is named a, not aId. Seeing from the level of mongodb, here a means aId.

But when querying via prisma, a means the whole a object, which includes id and name fields. For example:

This design can bring troubles when we only need to use the de facto aId in prisma. For example, the query shown above can be very simply (one single find) using the raw mongodb api:

db.b.find({a: 'xxxxx'})

But in prisma api we cannot write

The only way to do this in prisma is

and it is translated to the following mongodb queries/aggregations:

2018-12-20T15:23:06.409+0000 I COMMAND [conn10] command default_default.A command: aggregate { aggregate: "B", pipeline: [

{ $lookup: { from: "A", localField: "a", foreignField: "_id", as: "a" } },

{ $unwind: "$a" },

{ $match: { a._id: ObjectId('5c1bb3b112f792000775b470') } },

{ $sort: { _id: 1 } },

{ $skip: 0 },

{ $project: { _id: 1 }

} ], cursor: { batchSize: 2147483647 }, $db: "default_default", ...

2018-12-20T15:23:06.411+0000 I COMMAND [conn10] command default_default.B command: find {

find: "B",

filter: { _id: { $in: [

ObjectId('5c1bb3b112f792000775b471'),

ObjectId('5c1bb3b112f792000775b472')

] } }, ...

A aggregation pipeline starting with $lookup, which is a terrible performance killer, and a find with a long $in array, which is also not performance-friendly.

Describe the solution you'd like

Two possible solutions:

1) At the mongodb level, use aId instead of a to store the foreign key, and allow using query { bs(where: { aId: "xxx" }) {...} } to directly query with aId. This could be a neat and fundamental solution, but it introduces a breaking change for the mongo connector api.

2) Intelligently detect queries which can be simplified to a simple find (like the case shown above) and avoid translating them to aggregations.

All 6 comments

Hey @beeplin,

thanks for the suggestion. Your observation is correct, in that special case we could avoid using the aggregation framework and that would result in a significant speedup. As you probably noticed we are already trying to use that one sparingly due to the performance penalty.

At the moment we are still working on general performance improvements (like only fetching and parsing the necessary fields) and this proposed improvement is a very specific optimisation that will come later.

But keep up the suggestions, they are welcome! We are just trying not to prematurely optimize, which is why some of the improvements we are only doing now and others we might push even a bit further down the road until most big features we want in the Mongo connector are implemented.

If we were to implement this optimization, I think we would go with your second proposal btw.

@do4gr More throughts about this:

In the example above, even if we are querying bs with not a.id but a.name, it could still be done faster by using find rather than $lookup/$unwind/$match.

query {

bs(where: { a: { name: "xxx" } }) {

id

title

}

Instead of:

aggregate { aggregate: "B", pipeline: [

{ $lookup: { from: "A", localField: "a", foreignField: "name", as: "a" } },

{ $unwind: "$a" },

{ $match: { a.name: "xxx" } },

{ $sort: { _id: 1 } },

{ $skip: 0 },

{ $project: { _id: 1 }

} ], ...

find {

find: "B",

filter: { _id: { $in: [

ObjectId('5c1bb3b112f792000775b471'),

ObjectId('5c1bb3b112f792000775b472')

] } }, ...

It is better to translate into:

db.a.find({ name: "xxx" }, { _id: 1 }) // get ALL_A_IDs whose name === "xxx"

db.b.find({ a: { $in: [ALL_A_IDs] })

You are correct that in this specific case we could run two find queries instead of an aggregation + find.

The problem is that the filter API we offer gives the user a lot of freedom in how to formulate a filter. Since we do not know beforehand which filters we will have to translate we choose a generic approach that allows us to translate the full spectrum of possible filters. This has the effect that we will not always generate the most efficient queries to fetch the needed data.

We always have to strike a balance between expressiveness of our API, performance and code maintainability when deciding how and what to implement. If we get the impression that the performance penalty is too high and too common we will start handling special cases separately.

At the moment I think it is still to early to determine whether this is already necessary. But having issues like this to discuss this and to reference is super helpful so thanks a lot!

@do4gr yes I agree that optimization for the second case bs(where: { a: { name: "xxx" } } is not urgent. While the firset case bs(where: { a: { id: "xxx" } } is very common and can be easily optimized.

Prisma's postgres connector generally generates quite good quality SQLs. I know for the new mongo connector it will take time before being production-ready.

BTW the datamodel v1.1 is really cool, and we are eager to see it applied to the postgres connector. :)

@beeplin Just a heads-up that I've edited your comment and changed datamodel v2 into v1.1 since that's how we call this iteration of the datamodel now.

@nikolasburk Do you know if this will be addressed when the MongoDB connector is released for Prisma 2?

We are also seeing the same performance penalty of using pipeline": ["$lookup": {}] when a filter: { _id: { $in: [ ] } } would suffice