Pixi.js: [v5-rc.2] Bug when rendering many polygons with WebGL

Hello.

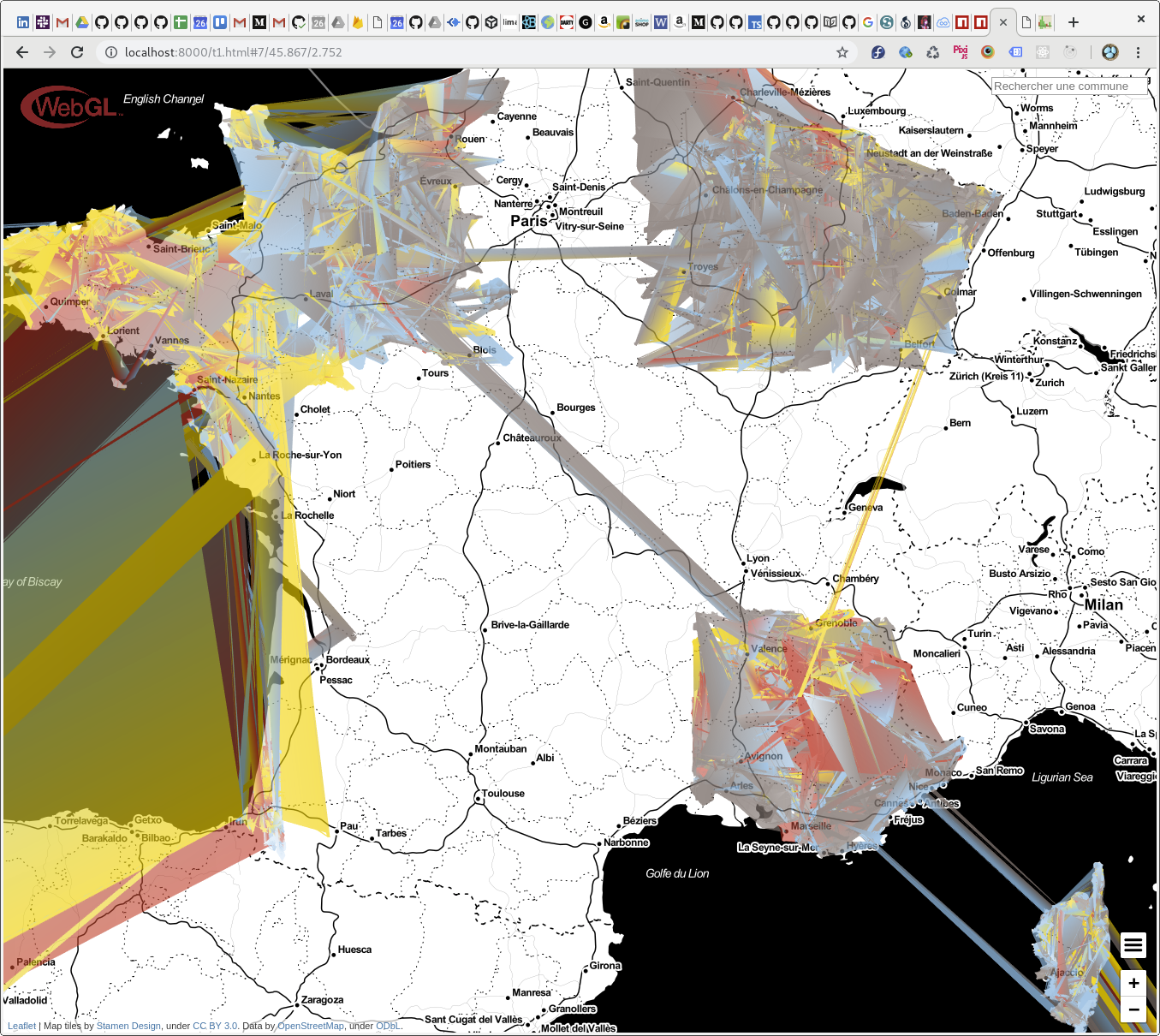

I think there is a problem in the Graphics class. When I render a large number of polygons (35419) with WebGL, I get:

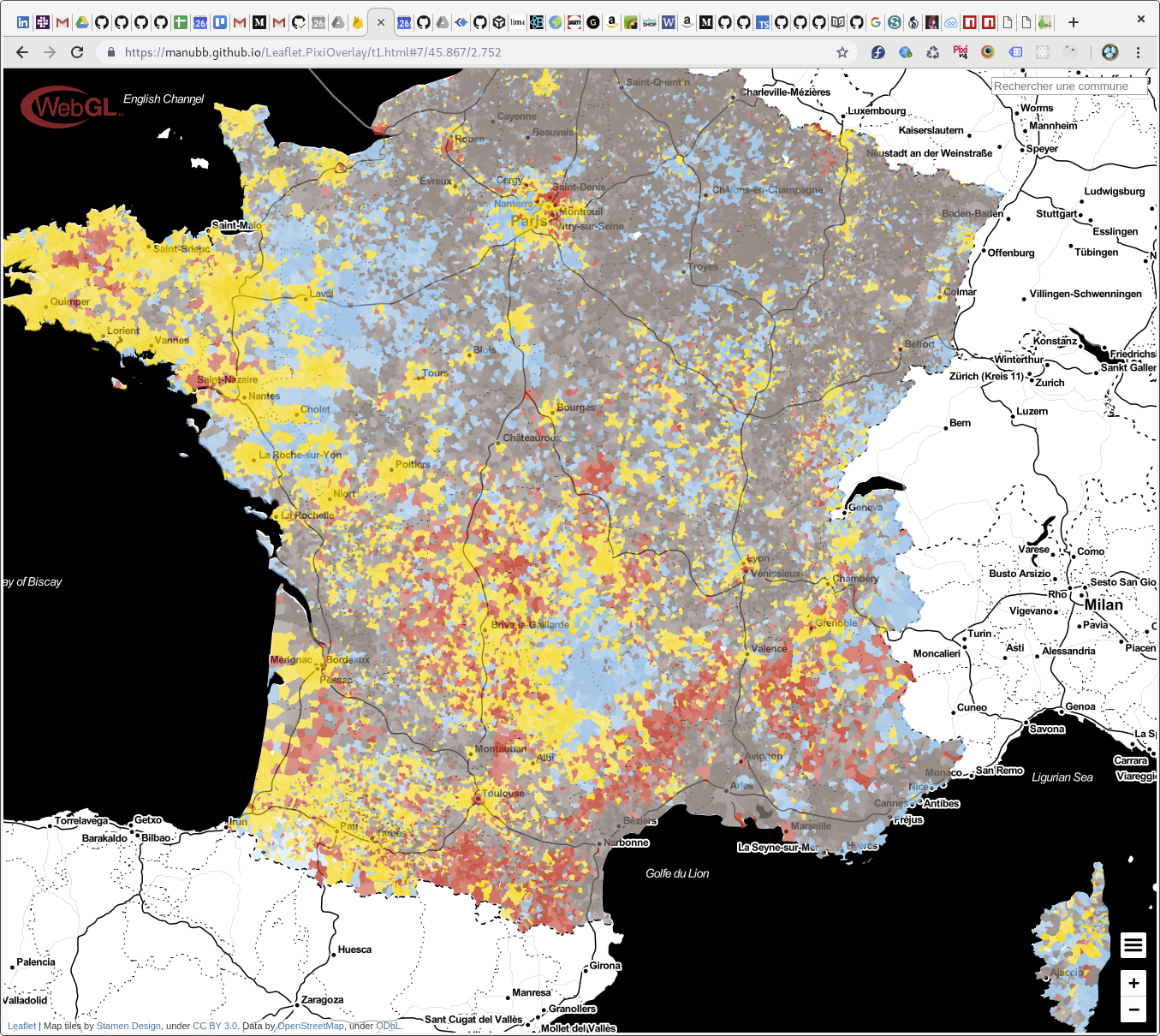

Using the 2D canvas renderer or pixi.js@4, I get the following expected result:

The problem apparently does not occur when displaying only 5000 polygons. (The threshold is close to 5650 polygons, and each polygon has about 10 edges.)

I can give additional details if needed.

All 5 comments

65536 vertices is the limit because of the 16-bit index buffer; that's a WebGL detail. We need to split the geometry into two buffers instead of one, somehow.
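To make the 16-bit limit concrete, here is a minimal sketch in plain JavaScript (no Pixi involved). It shows that a `Uint16Array` silently wraps indices past 65535, and it estimates the vertex budget per polygon at the threshold reported in the question. The variable names are illustrative only.

```javascript
// A Uint16Array element holds values 0..65535, so one indexed draw call
// can address at most 65536 distinct vertices.
const MAX_UINT16_VERTICES = 2 ** 16; // 65536

// Writing a larger index does not throw; it wraps modulo 65536.
const indices = new Uint16Array(2);
indices[0] = 65535; // last addressable vertex
indices[1] = 65536; // wraps to 0, so it points at the first vertex again

// Rough vertex budget per polygon at the reported threshold
// (about 5650 polygons, taken from the original report).
const verticesPerPolygon = MAX_UINT16_VERTICES / 5650;

console.log(indices[0], indices[1], verticesPerPolygon.toFixed(1));
// prints: 65535 0 11.6
```

A budget of roughly 11.6 vertices per polygon is consistent with polygons of "about 10 edges", which suggests the threshold is where the index buffer overflows.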

You can try hacking the Graphics class so that it handles more vertices, or just use two Graphics objects instead of one.
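The "use several graphics" workaround could be sketched as below. `splitPolygonsIntoBatches` is a hypothetical helper, not part of Pixi. It assumes each polygon is a flat `[x0, y0, x1, y1, ...]` array and that a fill costs about one vertex per polygon point. Strokes and curves add more vertices, so a lower limit than 65536 may be safer in practice.

```javascript
// Largest vertex count a single 16-bit indexed mesh can address.
const VERTEX_LIMIT = 65536;

/**
 * Groups polygons into batches whose total vertex count stays within `limit`.
 * Each polygon is a flat array of coordinates: [x0, y0, x1, y1, ...].
 * Hypothetical helper for illustration; not a Pixi API.
 */
function splitPolygonsIntoBatches(polygons, limit = VERTEX_LIMIT) {
    const batches = [];
    let current = [];
    let currentVertices = 0;

    for (const polygon of polygons) {
        const vertexCount = polygon.length / 2;

        // Start a new batch when this polygon would overflow the current one.
        if (current.length > 0 && currentVertices + vertexCount > limit) {
            batches.push(current);
            current = [];
            currentVertices = 0;
        }
        current.push(polygon);
        currentVertices += vertexCount;
    }

    // Keep the last, partially filled batch.
    if (current.length > 0) {
        batches.push(current);
    }

    return batches;
}
```

Each batch can then be drawn into its own `PIXI.Graphics` instance (for example with `drawPolygon`) and added to a shared container. A single polygon that is larger than the limit still ends up alone in its own batch, so it would need separate handling.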

There's also a different algorithm for arcs. In v4, pixi used 20 vertices per quadratic curve; here the count is determined from the arc coordinates. If your coordinates are not scaled to pixels, it will spawn more vertices. By default, it spawns an extra edge per 10 pixels. If that algorithm calculates a wrong value, this appears: https://github.com/pixijs/pixi.js/pull/5443

Maybe you use big coordinates and it generates more vertices in arcs. Try turning off adaptive curves or choosing a different min length.

https://github.com/pixijs/pixi.js/blob/dev/packages/graphics/src/utils/QuadraticUtils.js#L61

Related settings in PIXI.GRAPHICS_CURVES:

https://github.com/pixijs/pixi.js/blob/dev/packages/graphics/src/const.js#L16
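The adaptive behaviour described in this comment can be sketched roughly as follows. This is a standalone illustration, not Pixi's actual code. The function name `estimateSegments` and its option names are hypothetical, and the clamp values of 8 and 2048 are assumptions. The 10-pixel step and the fixed count of 20 come from the comment itself. Check the linked const.js for the real settings.

```javascript
/**
 * Estimates how many line segments approximate a curve of a given length.
 * Hypothetical sketch of an adaptive scheme; option names are assumptions.
 */
function estimateSegments(curveLength, options = {}) {
    const {
        adaptive = true,       // when false, fall back to a fixed count
        maxSegmentLength = 10, // one extra edge per 10 pixels of curve
        minSegments = 8,       // assumed lower clamp
        maxSegments = 2048,    // assumed upper clamp
        fixedSegments = 20,    // the v4-style constant mentioned above
    } = options;

    if (!adaptive) {
        return fixedSegments;
    }

    const wanted = Math.ceil(curveLength / maxSegmentLength);

    return Math.min(maxSegments, Math.max(minSegments, wanted));
}

console.log(estimateSegments(50));                        // 8 (clamped up)
console.log(estimateSegments(5000));                      // 500
console.log(estimateSegments(5000, { adaptive: false })); // 20
```

Under these assumptions, a curve drawn in large coordinates (5000 units long) costs 25 times more vertices than the fixed scheme, which is how big coordinates can push a drawing over the 65536-vertex limit much sooner.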

Hi @ivanpopelyshev. Thanks for your answer, and I hope you're doing well.

The problem is indeed the 16-bit index buffer. I was able to patch pixi to fix this using Uint32Array (see this commit).

This works in WebGL2 and also in WebGL1 (using the extension OES_element_index_uint).

Do you think that pixi@5 could always use 32-bit integers for indices? Or maybe let the user choose via PIXI.settings?
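For readers who want to see the shape of such a patch, here is a minimal sketch of how the index format might be chosen. It is not the commit mentioned above. `supportsUint32Indices` and `chooseIndexFormat` are hypothetical helpers, not Pixi APIs. The two constants are the standard numeric values of `gl.UNSIGNED_SHORT` and `gl.UNSIGNED_INT`, which is the type passed to `gl.drawElements`.

```javascript
// Numeric values of the WebGL enums gl.UNSIGNED_SHORT and gl.UNSIGNED_INT.
const UNSIGNED_SHORT = 0x1403;
const UNSIGNED_INT = 0x1405;

/**
 * Returns true when the context can draw with 32-bit indices.
 * WebGL2 supports them natively; WebGL1 needs OES_element_index_uint.
 */
function supportsUint32Indices(gl) {
    const isWebGL2 = typeof WebGL2RenderingContext !== 'undefined'
        && gl instanceof WebGL2RenderingContext;

    // getExtension returns null when the extension is unavailable.
    return isWebGL2 || gl.getExtension('OES_element_index_uint') !== null;
}

/**
 * Picks the index array constructor and the matching draw type.
 * Hypothetical helper for illustration; not a Pixi API.
 */
function chooseIndexFormat(vertexCount, uint32Supported) {
    // 16-bit indices are enough (and smaller) for up to 65536 vertices.
    if (vertexCount <= 65536) {
        return { ArrayType: Uint16Array, glType: UNSIGNED_SHORT };
    }
    if (!uint32Supported) {
        throw new Error('Too many vertices for 16-bit indices; split the geometry.');
    }

    return { ArrayType: Uint32Array, glType: UNSIGNED_INT };
}
```

Keeping `Uint16Array` for small meshes halves the index buffer size, which is one argument for making the choice per geometry rather than always using 32-bit indices.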

Allowing people to increase it does sound useful.

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.
