Parity-ethereum: Parity timeout on RPC after intensive work

I'm running:

- Which Parity version?: 1.9.7, 1.10.6

- Which operating system?: Linux

- How installed?: Docker

- Are you fully synchronized?: yes

- Which network are you connected to?: local PoA Ethereum network (2 authorities, 3 members)

- Did you try to restart the node?: yes

I have a critical issue with the parity chain.

I have a smart contract with a method, createRequest(foo, bar), that appends a new entry to an internal array.

The problem is that when I call this method once per second, it works like a charm. But when I start sending requests in parallel (30+/sec), at some point it just breaks: all RPCs, including pure function calls, stop working, and I'm unable to get data, set data, or view anything in the blockchain. I tried to use this advice, but it didn't work for me. It also looks similar to https://github.com/paritytech/parity/issues/8708, however, I'm not sure if it's the same problem.

Same behavior on 1.10.6

The complete contract code is below


My stress test code:

private static async Task CreateMultipleRequests(Web3 web3)
{
    // newFactory is a SeasonFactory contract wrapper defined elsewhere in the author's code.
    // WriteLine/ReadKey assume `using static System.Console;` plus the usual
    // System.Diagnostics, System.Linq, System.Threading and System.Threading.Tasks usings.
    var newSeason = await newFactory.CreateSeasonAsync(DateTimeOffset.UtcNow, DateTimeOffset.UtcNow.AddDays(1), "Foo");
    WriteLine($"Season = {newSeason.Address}");

    var sw = Stopwatch.StartNew();
    const int n = 1000;
    int processed = 0;

    // Start n concurrent tasks; each one retries until its createRequest call succeeds.
    var tasks = Enumerable.Range(1, n).Select(async i =>
    {
        while (true)
        {
            try
            {
                await newSeason.CreateRequestAsync(Request.CreateDefault());
                WriteLine($"{Interlocked.Increment(ref processed) / ((double)n):P} done");
                break;
            }
            catch
            {
                WriteLine("Fail. Trying yet another time");
            }
        }
    });

    await Task.WhenAll(tasks);
    sw.Stop();
    WriteLine($"Created '{n}' requests, elapsed time '{sw.Elapsed}'");
    ReadKey();
    Environment.Exit(0);
}

It just creates 1000 tasks and runs them in parallel. But it doesn't work: it just hangs and RPC stops working. I just reproduced it on a fresh install of 1.10.6.

I can compile a sample application that you can run in your environment, if you wish.

Btw, it's not just an annoyance, it's a complete unavailability of the network.

From the chat, using Nethereum.

@tomusdrw any idea?

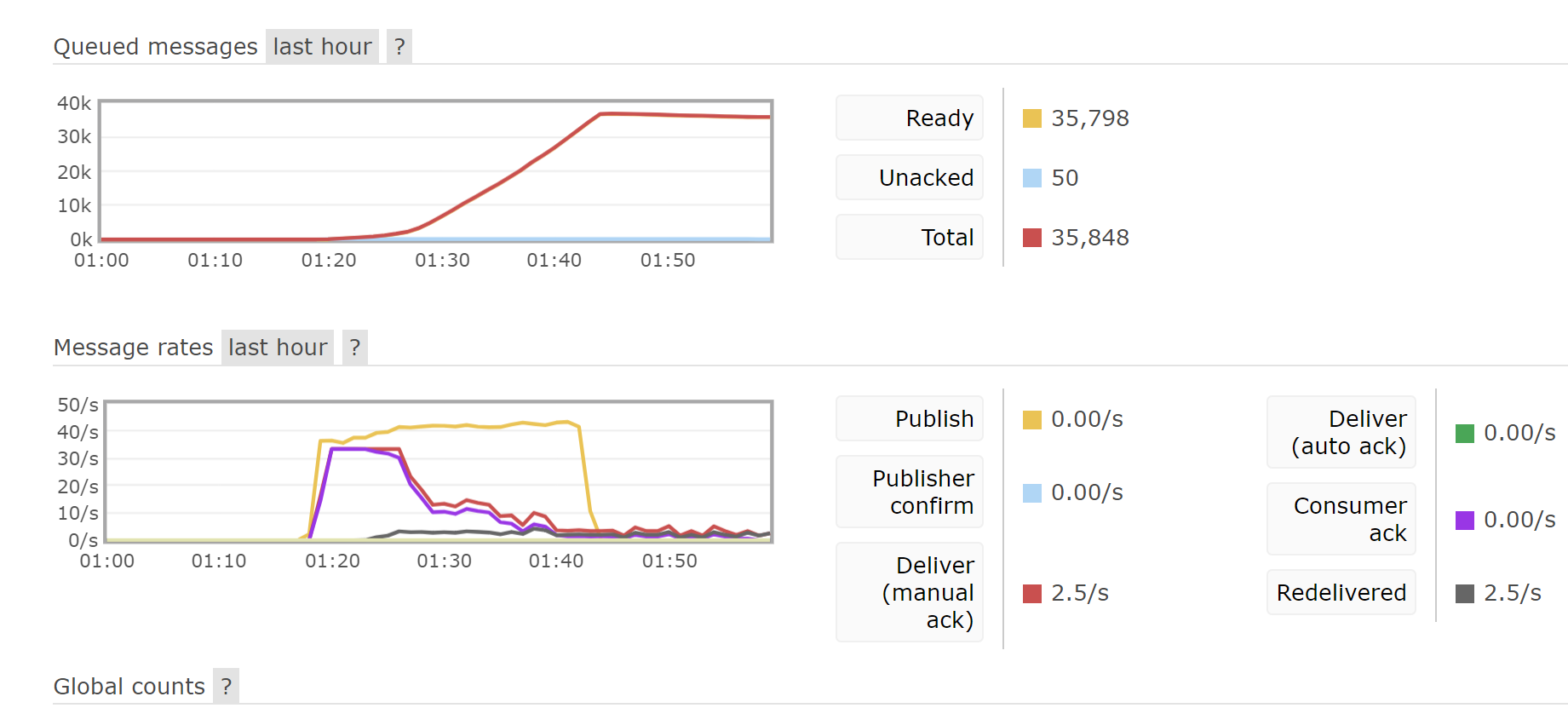

I did multiple optimizations to make it work, and I was glad to see 40tx/sec. However, for some reason performance quickly degrades:

_I read messages from RabbitMQ and store them in smart contracts. The ack/get rate equals Parity's tx confirmation rate._

Given that CPU load is only ~50%, I'm not sure what could be done here.

I was publishing around 40msg/sec for the first 20 minutes. For the first ten minutes performance was great, but then it fell to almost zero.

The code just takes a message and calls the createRequest method on the blockchain. Nothing more. At some point it slows down more and more until it stops completely. In the latest version createRequest is a bit simplified (no sort), but that doesn't really matter. The main point is that continuously calling, via RPC, a single method that writes to the blockchain leads to degradation.

Steps to reproduce:

1. Deploy the Season contract.
2. Call createRequest ~40 times/sec for some time.
3. After 10 minutes at top speed you will see degradation.

It doesn't depend on hardware, I have seen this on very different machines.

Chain spec:

{
  "name": "parity-poa-playground",
  "engine": {
    "authorityRound": {
      "params": {
        "stepDuration": "1",
        "validators": {
          "list": [
            "0x00Bd138aBD70e2F00903268F3Db08f2D25677C9e",
            "0x00Aa39d30F0D20FF03a22cCfc30B7EfbFca597C2",
            "0x002e28950558fbede1a9675cb113f0bd20912019"
          ]
        },
        "validateScoreTransition": 1000000000,
        "validateStepTransition": 1500000000,
        "maximumUncleCount": 1000000000
      }
    }
  },
  "params": {
    "maximumExtraDataSize": "0x20",
    "minGasLimit": "0x1388",
    "networkID": "0x2323",
    "gasLimitBoundDivisor": "0x400",
    "eip140Transition": 0,
    "eip211Transition": 0,
    "eip214Transition": 0,
    "eip658Transition": 0
  },
  "genesis": {
    "seal": {
      "authorityRound": {
        "step": "0x0",
        "signature": "0x0000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000"
      }
    },
    "difficulty": "0x20000",
    "gasLimit": "0x165A0BC00"
  },
  "accounts": {
    "0x0000000000000000000000000000000000000001": { "balance": "1", "builtin": { "name": "ecrecover", "pricing": { "linear": { "base": 3000, "word": 0 } } } },
    "0x0000000000000000000000000000000000000002": { "balance": "1", "builtin": { "name": "sha256", "pricing": { "linear": { "base": 60, "word": 12 } } } },
    "0x0000000000000000000000000000000000000003": { "balance": "1", "builtin": { "name": "ripemd160", "pricing": { "linear": { "base": 600, "word": 120 } } } },
    "0x0000000000000000000000000000000000000004": { "balance": "1", "builtin": { "name": "identity", "pricing": { "linear": { "base": 15, "word": 3 } } } },
    "0x6B0c56d1Ad5144b4d37fa6e27DC9afd5C2435c3B": { "balance": "10000000000000000000000000000" },
    "0x0011598De1016A350ad719D23586273804076774": { "balance": "10000000000000000000000000000" },
    "0x00eBF1c449Cc448a14Ea5ba89C949a24Fa805a14": { "balance": "10000000000000000000000000000" },
    "0x006B8d5F5c8Ad11E5E6Cf9eE6624433891430965": { "balance": "10000000000000000000000000000" },
    "0x009A1372f9E2D70014d7F31369F115FC921a87c8": { "balance": "10000000000000000000000000000" },
    "0x0076ed2DD9f7dc78e3f336141329F8784D8cd564": { "balance": "10000000000000000000000000000" },
    "0x006dB7698B897B842a42A1C3ce423b07C2656Ecd": { "balance": "10000000000000000000000000000" },
    "0x0012fc0db732dfd07a0cd8e4bedc6b160c9aedc5": { "balance": "10000000000000000000000000000" },
    "0x00f137e9bfe37cc015f11cec8339cc2f1a3ae3a6": { "balance": "10000000000000000000000000000" },
    "0x00c2bc2f078e1dbafa4a1fa46929e1f2ca207f00": { "balance": "10000000000000000000000000000" },
    "0x000a3702732843418d83a03e65a3d9f7add58864": { "balance": "10000000000000000000000000000" },
    "0x009c4c00de80cb8b0130906b09792aac6585078f": { "balance": "10000000000000000000000000000" },
    "0x0053017d2ef8119654bdc921a9078567a77e854d": { "balance": "10000000000000000000000000000" },
    "0x002491c91e81da1643de79582520ac4c77229e58": { "balance": "10000000000000000000000000000" },
    "0x00fd696f0c0660779379f6bff8ce58632886f9d2": { "balance": "10000000000000000000000000000" },
    "0x007f16bc32026e7cd5046590e4f5b711ef1a6d8b": { "balance": "10000000000000000000000000000" },
    "0x00fb81808899fd51c4a87a642084138be62189a0": { "balance": "10000000000000000000000000000" },
    "0x00a61dabf0324bc07ba5a82a97c5f15d38fa9bbc": { "balance": "10000000000000000000000000000" },
    "0x007a11ac48952a7e8e661833f4803bd7ffa58f77": { "balance": "10000000000000000000000000000" },
    "0x00e7ad5b906c829c71c2f6f6cec016866ed1c264": { "balance": "10000000000000000000000000000" },
    "0x0075f4f4d7324fef36371610d03e1195894bf420": { "balance": "10000000000000000000000000000" }
  }
}

Contract code:

pragma solidity ^0.4.23;

contract Owned {
    address public owner;

    constructor() public {
        owner = tx.origin;
    }

    modifier onlyOwner {
        require(msg.sender == owner);
        _;
    }

    function isDeployed() public pure returns(bool) {
        return true;
    }
}

contract SeasonFactory is Owned {
    address[] public seasons;

    event SeasonCreated(uint64 indexed begin, uint64 indexed end, address season);

    function addSeason(address season) public onlyOwner {
        SeasonShim seasonShim = SeasonShim(season);
        require(seasonShim.owner() == owner);
        require(seasons.length == 0 || SeasonShim(seasons[seasons.length - 1]).end() < seasonShim.begin());
        seasons.push(seasonShim);
        emit SeasonCreated(seasonShim.begin(), seasonShim.end(), season);
    }

    function getSeasonsCount() public view returns(uint64) {
        return uint64(seasons.length);
    }

    function getSeasonForDate(uint64 ticks) public view returns(address) {
        // Count down with `i > 0` and read seasons[i - 1]: a uint64 loop condition of
        // `i >= 0` is always true, so the original loop underflowed and reverted on an
        // out-of-bounds read instead of returning 0 (and also on an empty array).
        for (uint64 i = uint64(seasons.length); i > 0; i--) {
            SeasonShim season = SeasonShim(seasons[i - 1]);
            if (ticks >= season.begin() && ticks <= season.end())
                return season;
        }
        return 0;
    }

    function getLastSeason() public view returns(address) {
        return seasons[seasons.length - 1];
    }
}

contract SeasonShim is Owned {
    uint64 public begin;
    uint64 public end;
}

contract Season is Owned {
    uint64 public begin;
    uint64 public end;
    string name;
    uint64 requestCount;
    Node[] nodes;
    uint64 headIndex;
    uint64 tailIndex;
    mapping(bytes30 => uint64) requestServiceNumberToIndex;

    event RequestCreated(bytes30 indexed serviceNumber, uint64 index);

    constructor(uint64 begin_, uint64 end_, string name_) public {
        begin = begin_;
        end = end_;
        name = name_;
    }

    function createRequest(
        bytes30 serviceNumber, uint64 date, DeclarantType declarantType, string declarantName,
        uint64 fairId, uint64[] assortment, uint64 district, uint64 region,
        string details, uint64 statusCode, string note
    ) public onlyOwner {
        require (getRequestIndex(serviceNumber) < 0, "Request with provided service number already exists");
        nodes.length++;
        uint64 newlyInsertedIndex = getRequestsCount() - 1;
        Request storage request = nodes[newlyInsertedIndex].request;
        request.serviceNumber = serviceNumber;
        request.date = date;
        request.declarantType = declarantType;
        request.declarantName = declarantName;
        request.fairId = fairId;
        request.district = district;
        request.region = region;
        request.assortment = assortment;
        request.details = details;
        request.statusUpdates.push(StatusUpdate(date, statusCode, note));
        requestServiceNumberToIndex[request.serviceNumber] = newlyInsertedIndex;
        fixPlacementInHistory(newlyInsertedIndex, date);
        emit RequestCreated(serviceNumber, newlyInsertedIndex);
    }

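    function fixPlacementInHistory(uint64 newlyInsertedIndex, uint64 date) private onlyOwner {
        if (newlyInsertedIndex == 0) {
            nodes[0].prev = -1;
            nodes[0].next = -1;
            return;
        }
        // Walk backward from the tail to the last node whose date <= the new date.
        int index = tailIndex;
        while (index >= 0) {
            Node storage n = nodes[uint64(index)];
            if (n.request.date <= date) {
                break;
            }
            index = n.prev;
        }
        if (index < 0) {
            // The new request is the earliest one and becomes the head. Fresh storage
            // starts at 0, so prev must be set to -1 explicitly; otherwise it points at
            // node 0 and a later backward walk can cycle until it runs out of gas.
            nodes[headIndex].prev = newlyInsertedIndex;
            nodes[newlyInsertedIndex].prev = -1;
            nodes[newlyInsertedIndex].next = headIndex;
            headIndex = newlyInsertedIndex;
        }
        else {
            Node storage node = nodes[uint64(index)];
            Node storage newNode = nodes[newlyInsertedIndex];
            newNode.prev = index;
            newNode.next = node.next;
            // Node 0 is a valid successor, so compare with >= 0 (not > 0).
            if (node.next >= 0) {
                nodes[uint64(node.next)].prev = newlyInsertedIndex;
            } else {
                tailIndex = newlyInsertedIndex;
            }
            node.next = newlyInsertedIndex;
        }
    }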

    function updateStatus(bytes30 serviceNumber, uint64 responseDate, uint64 statusCode, string note) public onlyOwner {
        int index = getRequestIndex(serviceNumber);
        require (index >= 0, "Request with provided service number was not found");
        Request storage request = nodes[uint64(index)].request;
        request.statusUpdates.push(StatusUpdate(responseDate, statusCode, note));
    }

    function getSeasonDetails() public view returns(uint64, uint64, string) {
        return (begin, end, name);
    }

    function getAllServiceNumbers() public view returns(bytes30[]) {
        bytes30[] memory result = new bytes30[](getRequestsCount());
        for (uint64 i = 0; i < result.length; i++) {
            result[i] = nodes[i].request.serviceNumber;
        }
        return result;
    }

    function getRequestIndex(bytes30 serviceNumber) public view returns(int) {
        uint64 index = requestServiceNumberToIndex[serviceNumber];
        if (index == 0 && (nodes.length == 0 || nodes[0].request.serviceNumber != serviceNumber)) {
            return -1;
        }
        return int(index);
    }

    function getRequestByServiceNumber(bytes30 serviceNumber) public view returns(bytes30, uint64, DeclarantType, string, uint64, uint64[], uint64, uint64, string) {
        int index = getRequestIndex(serviceNumber);
        if (index < 0) {
            return (0, 0, DeclarantType.Individual, "", 0, new uint64[](0), 0, 0, "");
        }
        return getRequestByIndex(uint64(index));
    }

    function getRequestByIndex(uint64 index) public view returns(bytes30, uint64, DeclarantType, string, uint64, uint64[], uint64, uint64, string) {
        Request storage request = nodes[index].request;
        bytes30 serviceNumber = request.serviceNumber;
        string memory declarantName = request.declarantName;
        uint64[] memory assortment = getAssortment(request);
        string memory details = request.details;
        return (serviceNumber, request.date, request.declarantType, declarantName, request.fairId, assortment, request.district, request.region, details);
    }

    function getAssortment(Request request) private pure returns(uint64[]) {
        uint64[] memory memoryAssortment = new uint64[](request.assortment.length);
        for (uint64 i = 0; i < request.assortment.length; i++) {
            memoryAssortment[i] = request.assortment[i];
        }
        return memoryAssortment;
    }

    function getRequestsCount() public view returns(uint64) {
        return uint64(nodes.length);
    }

    function getStatusUpdates(bytes30 serviceNumber) public view returns(uint64[], uint64[], string) {
        int index = getRequestIndex(serviceNumber);
        if (index < 0) {
            return (new uint64[](0), new uint64[](0), "");
        }
        Request storage request = nodes[uint64(index)].request;
        uint64[] memory dates = new uint64[](request.statusUpdates.length);
        uint64[] memory statusCodes = new uint64[](request.statusUpdates.length);
        string memory notes = "";
        string memory separator = new string(1);
        bytes memory separatorBytes = bytes(separator);
        separatorBytes[0] = 0x1F;
        separator = string(separatorBytes);
        for (uint64 i = 0; i < request.statusUpdates.length; i++) {
            dates[i] = request.statusUpdates[i].responseDate;
            statusCodes[i] = request.statusUpdates[i].statusCode;
            notes = strConcat(notes, separator, request.statusUpdates[i].note);
        }
        return (dates, statusCodes, notes);
    }

    function getMatchingRequests(uint64 skipCount, uint64 takeCount, DeclarantType[] declarantTypes, string declarantName, uint64 fairId, uint64[] assortment, uint64 district) public view returns(uint64[]) {
        uint64[] memory result = new uint64[](takeCount);
        uint64 skippedCount = 0;
        uint64 tookCount = 0;
        int currentIndex = headIndex;
        // Walk the date-ordered list from the head; stop at the end (-1) or once enough results are collected.
        for (uint64 j = 0; j < nodes.length && currentIndex >= 0 && tookCount < result.length; j++) {
            Node storage node = nodes[uint64(currentIndex)];
            if (isMatch(node.request, declarantTypes, declarantName, fairId, assortment, district)) {
                if (skippedCount < skipCount) {
                    skippedCount++;
                }
                else {
                    result[tookCount++] = uint64(currentIndex);
                }
            }
            // Advance on every iteration: inside the `if`, a non-matching node was re-checked instead of skipped.
            currentIndex = node.next;
        }
        uint64[] memory trimmedResult = new uint64[](tookCount);
        for (uint64 k = 0; k < trimmedResult.length; k++) {
            trimmedResult[k] = result[k];
        }
        return trimmedResult;
    }

    function isMatch(Request request, DeclarantType[] declarantTypes, string declarantName_, uint64 fairId_, uint64[] assortment_, uint64 district_) private pure returns(bool) {
        if (declarantTypes.length != 0 && !containsDeclarant(declarantTypes, request.declarantType)) {
            return false;
        }
        if (!isEmpty(declarantName_) && !containsString(request.declarantName, declarantName_)) {
            return false;
        }
        if (fairId_ != 0 && fairId_ != request.fairId) {
            return false;
        }
        if (district_ != 0 && district_ != request.district) {
            return false;
        }
        if (assortment_.length > 0) {
            for (uint64 i = 0; i < assortment_.length; i++) {
                if (contains(request.assortment, assortment_[i])) {
                    return true;
                }
            }
            return false;
        }
        return true;
    }

    function contains(uint64[] array, uint64 value) private pure returns (bool) {
        for (uint i = 0; i < array.length; i++) {
            if (array[i] == value)
                return true;
        }
        return false;
    }

    function containsDeclarant(DeclarantType[] array, DeclarantType value) private pure returns (bool) {
        for (uint i = 0; i < array.length; i++) {
            if (array[i] == value)
                return true;
        }
        return false;
    }

    function isEmpty(string value) private pure returns(bool) {
        return bytes(value).length == 0;
    }

    function containsString(string _base, string _value) internal pure returns (bool) {
        bytes memory _baseBytes = bytes(_base);
        bytes memory _valueBytes = bytes(_value);
        if (_baseBytes.length < _valueBytes.length) {
            return false;
        }
        for (uint j = 0; j <= _baseBytes.length - _valueBytes.length; j++) {
            uint i = 0;
            for (; i < _valueBytes.length; i++) {
                if (_baseBytes[i + j] != _valueBytes[i]) {
                    break;
                }
            }
            if (i == _valueBytes.length)
                return true;
        }
        return false;
    }

    function strConcat(string _a, string _b, string _c) private pure returns (string) {
        bytes memory _ba = bytes(_a);
        bytes memory _bb = bytes(_b);
        bytes memory _bc = bytes(_c);
        string memory abcde = new string(_ba.length + _bb.length + _bc.length);
        bytes memory babcde = bytes(abcde);
        uint k = 0;
        for (uint i = 0; i < _ba.length; i++) babcde[k++] = _ba[i];
        for (i = 0; i < _bb.length; i++) babcde[k++] = _bb[i];
        for (i = 0; i < _bc.length; i++) babcde[k++] = _bc[i];
        return string(babcde);
    }

    struct Node {
        Request request;
        int prev;
        int next;
    }

    struct Request {
        bytes30 serviceNumber;
        uint64 date;
        DeclarantType declarantType;
        string declarantName;
        uint64 fairId;
        uint64[] assortment;
        uint64 district; // administrative district (okrug)
        uint64 region; // district (raion)
        StatusUpdate[] statusUpdates;
        string details;
    }

    enum DeclarantType {
        Individual, // individual
        IndividualEntrepreneur, // individual entrepreneur
        LegalEntity, // legal entity
        IndividualAsIndividualEntrepreneur // individual acting as a legal entity
    }

    struct StatusUpdate {
        uint64 responseDate;
        uint64 statusCode;
        string note;
    }
}
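The Season contract keeps requests ordered by date with a doubly linked list stored inside the nodes array (prev/next are indices, -1 meaning "no node"), and fixPlacementInHistory inserts each new request by walking backward from tailIndex. The following is a hypothetical Python model of that insertion, written for illustration and not taken from the thread. It sets a new node's links to -1 explicitly and treats index 0 as a valid successor (>= 0); both details matter in Solidity, where fresh storage slots start at 0, which is itself a real node index.

```python
from typing import List

NIL = -1  # the contract's "no node" marker for prev/next


class DateOrderedList:
    """Python model of Season's nodes/headIndex/tailIndex bookkeeping (illustrative only)."""

    def __init__(self) -> None:
        self.dates: List[int] = []  # request.date per node, in insertion order like nodes[]
        self.prev: List[int] = []
        self.next: List[int] = []
        self.head = 0
        self.tail = 0

    def insert(self, date: int) -> int:
        new = len(self.dates)
        self.dates.append(date)
        # Both links start as NIL; Solidity storage would start them at 0 instead.
        self.prev.append(NIL)
        self.next.append(NIL)
        if new == 0:
            return new
        # Walk backward from the tail to the last node whose date <= the new date.
        index = self.tail
        while index >= 0 and self.dates[index] > date:
            index = self.prev[index]
        if index < 0:
            # Earliest date so far: the new node becomes the head.
            self.prev[self.head] = new
            self.next[new] = self.head
            self.head = new
        else:
            self.prev[new] = index
            self.next[new] = self.next[index]
            if self.next[index] >= 0:  # node 0 is a valid successor
                self.prev[self.next[index]] = new
            else:
                self.tail = new
            self.next[index] = new
        return new

    def in_order(self) -> List[int]:
        """Dates from head to tail, following next links."""
        result: List[int] = []
        index = self.head if self.dates else NIL
        while index >= 0:
            result.append(self.dates[index])
            index = self.next[index]
        return result


history = DateOrderedList()
for d in [10, 5, 7, 3, 12, 7]:
    history.insert(d)
print(history.in_order())  # [3, 5, 7, 7, 10, 12]
```

Note that the backward walk visits every node dated later than the new request, so out-of-order dates make each insertion (and therefore each createRequest transaction) more expensive as the list grows.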

@Pzixel are you eth_calling the contract or rather sending transactions to that contract? In the latter case the slowest part of that will be transaction signing and nonce checking.

For nonce checking we do some optimistic evaluation of the next nonce - in case there is a conflict (two concurrent RPC queries trying to send a transaction at the same time) it might happen that one of them will need to be re-signed again after the conflict is detected.

I'd recommend the following:

- Try to manage the nonce on your own (i.e. fetch the next nonce before sending any transactions and then increment it in your app).

- Try pre-signing the transactions (this also requires manual nonce management) and then use eth_sendRawTransaction to let Parity only take care of including these transactions in a block.
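A minimal sketch of the two recommendations above, assuming this process is the only sender for the account: fetch the starting nonce once (for example via eth_getTransactionCount with the "pending" block tag), hand out consecutive nonces under a lock, and submit already-signed transactions with eth_sendRawTransaction. NonceManager and raw_transaction_request are hypothetical helper names; signing itself is left out because it depends on the client library (Nethereum in this thread).

```python
import itertools
import json
import threading


class NonceManager:
    """Hands out consecutive nonces for one sending account (sketch).

    Assumes no other client sends from the same account, so the starting
    nonce fetched once from the node stays valid.
    """

    def __init__(self, starting_nonce: int) -> None:
        self._counter = itertools.count(starting_nonce)
        self._lock = threading.Lock()  # concurrent senders must never share a nonce

    def next_nonce(self) -> int:
        with self._lock:
            return next(self._counter)


def raw_transaction_request(signed_tx_hex: str, request_id: int) -> str:
    """JSON-RPC body for eth_sendRawTransaction; the tx is signed client-side beforehand."""
    return json.dumps({
        "jsonrpc": "2.0",
        "method": "eth_sendRawTransaction",
        "params": [signed_tx_hex],
        "id": request_id,
    })


if __name__ == "__main__":
    nonces = NonceManager(starting_nonce=42)
    seen = []
    seen_lock = threading.Lock()

    def worker() -> None:
        for _ in range(25):
            n = nonces.next_nonce()
            with seen_lock:
                seen.append(n)

    threads = [threading.Thread(target=worker) for _ in range(4)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    print(sorted(seen) == list(range(42, 142)))  # True: no gaps, no duplicates
```

Because the node no longer has to guess the next nonce for concurrent requests, the conflict-and-re-sign path described above is avoided; the trade-off is that a dropped transaction leaves a nonce gap the client must fill itself.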

Could you also provide some logs from running with -lrpc=trace? Also, could you consider switching to the IPC transport? It's orders of magnitude faster than HTTP and also single-threaded, so that will rule out the nonce-checking problem (the bottleneck should then be signing).

> I'd recommend the following

Great, I wasn't aware that was possible. I'll try to implement it.

> Could you also provide some logs with running with -lrpc=trace?

I asked a friend to run it with trace logging. I'll be busy trying to send raw transactions; I hope my library supports it.

P.S. I've provided everything I have. You can easily deploy the contract and reproduce the problem with any flags (or even in a debug build) using a simple script that pushes messages into the contract.

Thank you from the bottom of my heart for these suggestions. It's finally something I haven't tried yet, so it may help.

Just checked: I'm able to send transactions to a new network (and it seems to be faster, though that may just be an illusion), but the "corrupted" network can't execute even a signed transaction. Going to check whether there are any logs.

Well, I tried running it with pre-signed transactions, and here is what I got:

All transactions were executed at top speed, with no sign of performance degradation. It seems that there is some problem with Parity-signed transactions. Is this expected?

It sustained top speed for 100k txs (over 1 hour). So I think it's indeed a signing/nonce issue. I wonder if this is described anywhere in the spec, or even intended to work this way.

Just saw the same behavior with 20000 simultaneous requests using client-side nonce management: ~1700 requests got confirmed in ~10 minutes, then the network just hung.

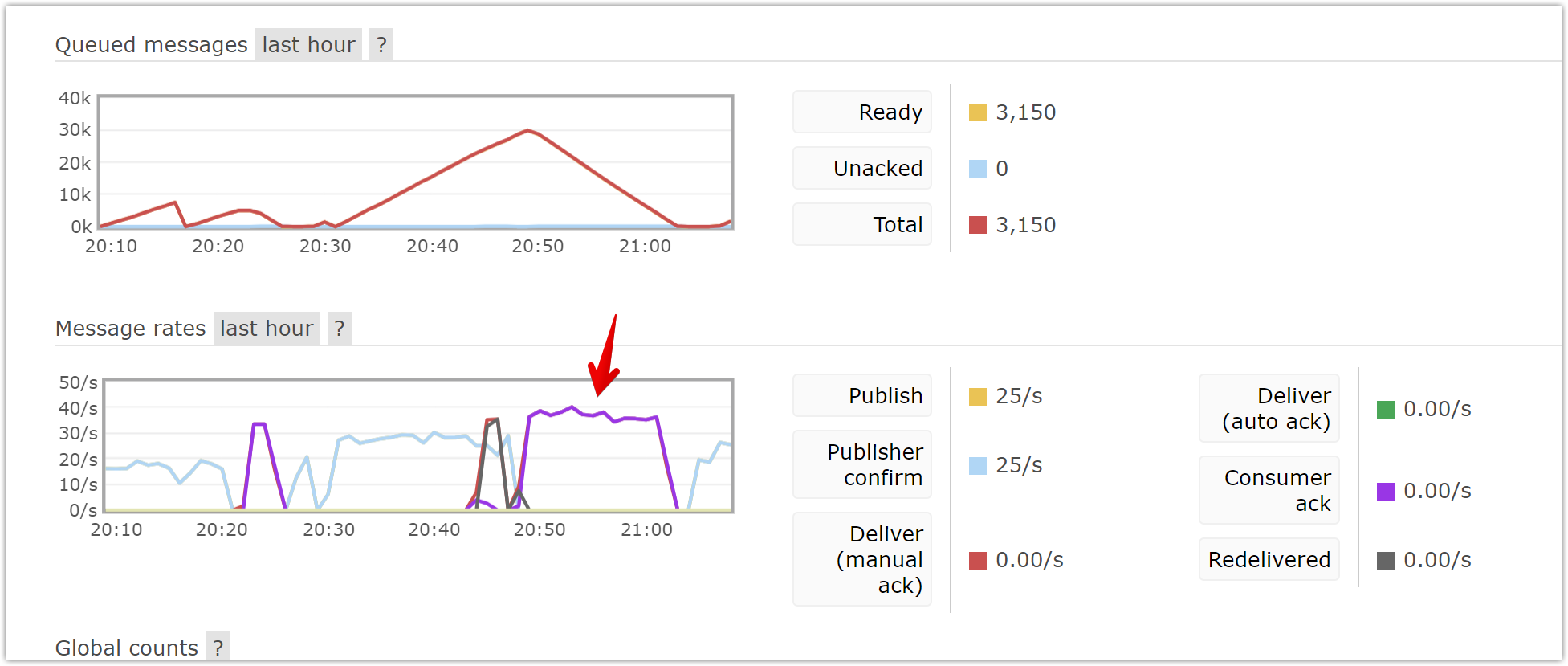

Well, we tried everything, but after ~50k blocks were written the network slowed down to 1tx/sec (from the original 40tx/sec). I don't know what else I can do: I did client-side nonce management and signing (it indeed sped up the whole network), and I removed extra authority nodes to reduce network overhead, but it just doesn't work. I didn't touch any settings, but at some point it just slows down.

When the network was clean, as I said, it performed at about 40tx/sec; now I have this:

Same hardware, same configuration; the only difference is that I had 40tx/sec when the network was clean, and 1tx/sec after block 50k. I run 100 parallel requests simultaneously, all pre-signed and so on, but I get only 1tx per block.

@tomusdrw @Tbaut any suggestions?

@Pzixel I don't see a relation to the original issue any more: it no longer seems to be a problem of slow RPC, but rather the whole network running slowly for you, right?

I'd close that issue and create a new one (or rename that one) to keep the discussion focused.

Could you also provide logs from both nodes (authority node and the node that you submit transactions to) with -ltxqueue=debug?

@tomusdrw hello. Sorry for not replying for so long, but I just didn't have a chance: I had to backup-restore the network on the test server before answering.

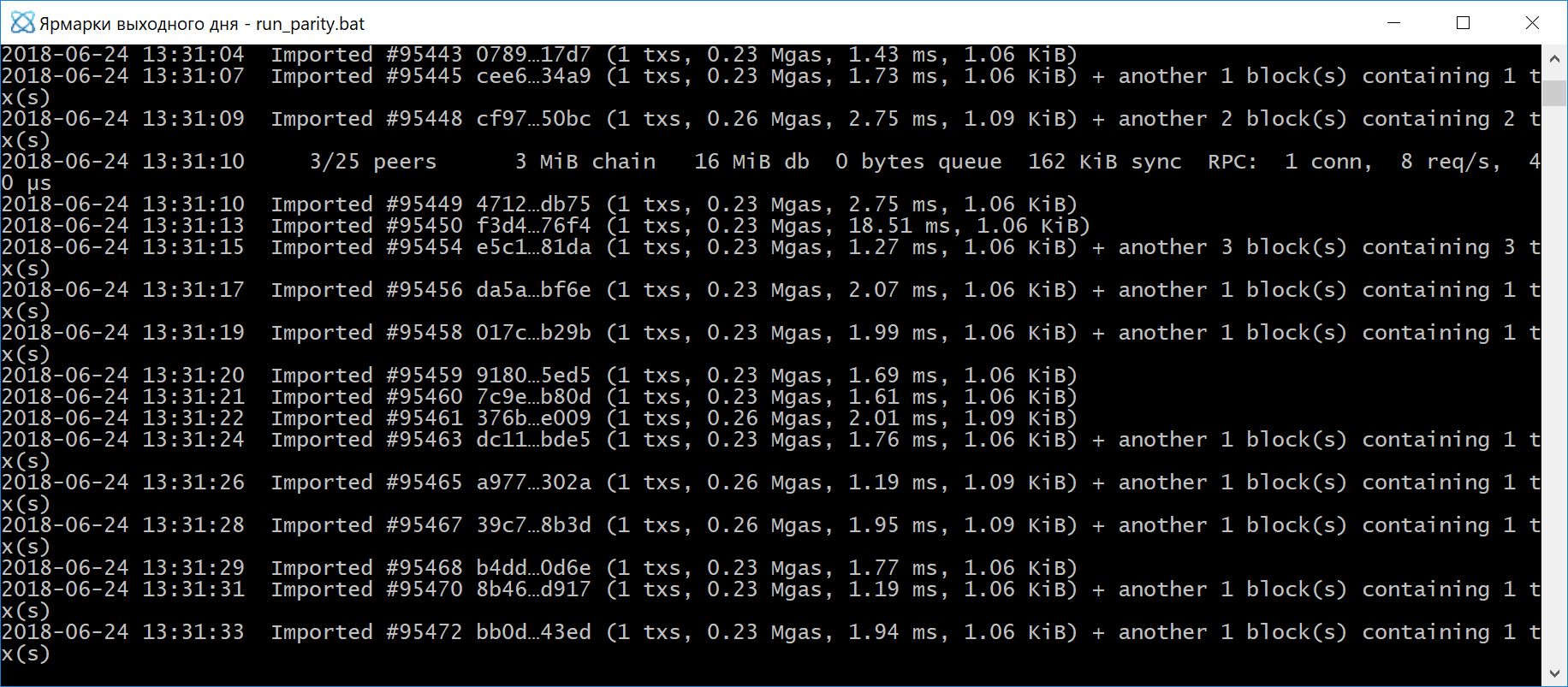

I tried to send 500 transactions simultaneously, and here are the logs of what happened:

In a nutshell: it didn't work well.

The DB size is currently 1.3 GB.

Ok, so what exactly do you mean by "it didn't work well"?

You have submitted 506 transactions:

$ grep "QueueStatus" K8e0sJYT | wc -l
506

496 went directly to the current queue:

$ grep "to current" K8e0sJYT | wc -l
496

10 went to the future queue for a while (because they were imported out of order):

$ grep "to future" K8e0sJYT | wc -l
10

379 transactions were mined:

$ grep mined K8e0sJYT | wc -l
379

while the network created 380 blocks (one block was empty):

$ grep "Imported #" K8e0sJYT | wc -l
380
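The same tally can be scripted when comparing several log captures. This is a small sketch that mirrors the grep | wc -l counts above; the pattern names are made up for readability, and, exactly like grep, it does plain case-sensitive substring matching, so a pattern such as "mined" would also count unrelated lines that happen to contain that word.

```python
from collections import Counter
from typing import Dict, Iterable

# Substrings grepped in the thread; like `grep PATTERN file | wc -l`, each line
# counts at most once per pattern (case-sensitive plain substring match).
PATTERNS: Dict[str, str] = {
    "submitted": "QueueStatus",
    "to_current_queue": "to current",
    "to_future_queue": "to future",
    "mined": "mined",
    "imported_blocks": "Imported #",
}


def summarize_log(lines: Iterable[str]) -> Counter:
    """Count log lines containing each pattern."""
    counts: Counter = Counter()
    for line in lines:
        for name, needle in PATTERNS.items():
            if needle in line:
                counts[name] += 1
    return counts
```

Usage: open a log captured with -ltxqueue=debug and pass the file object, e.g. `with open("node.log") as log: print(summarize_log(log))`. A large gap between "submitted" and "mined" with one transaction per imported block is the pattern analyzed in the next comment.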

So everything looks fine to me; the real question is:

- Why do you have only one transaction per block when there are a lot of pending transactions?

There are two possible answers (kind of related):

1. You have huge transactions (they require a lot of gas).
2. You have configured your chain with too small a block gas limit (and --gas-floor-target).

I suspect (2): you probably have a high-enough initial gas limit, but since the node is running with the default --gas-floor-target, the limit gradually decreases until it gets to 4.7M, which would explain this:

> after we have written ~50k blocks network slowed down to 1tx/sec (from original 40tx/sec)

After fixing the gas issue I recommend checking out 1.11-beta series, it has major improvements in terms of transaction import time and throughput.
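Option (2) can be checked with simple arithmetic: at most floor(block gas limit / gas per transaction) transactions fit into one block, and with "stepDuration": "1" AuRa produces at most one block per second. The sketch below uses the average gasUsed per transaction that the reporter measured in this thread (975911); max_txs_per_block is a hypothetical helper, and the result is only an upper bound that ignores block execution time and any growth in per-transaction gas.

```python
def max_txs_per_block(block_gas_limit: int, gas_per_tx: int) -> int:
    """Upper bound on transactions per block when every tx uses gas_per_tx gas."""
    if gas_per_tx <= 0:
        raise ValueError("gas_per_tx must be positive")
    return block_gas_limit // gas_per_tx


AVG_GAS_PER_TX = 975_911      # average gasUsed per tx reported in the thread
STEP_DURATION_SECONDS = 1     # "stepDuration": "1" in the chain spec

for label, limit in [("default --gas-floor-target", 4_700_000),
                     ("genesis gasLimit 0x165A0BC00", 0x165A0BC00)]:
    per_block = max_txs_per_block(limit, AVG_GAS_PER_TX)
    print(f"{label}: at most {per_block} tx/block, "
          f"~{per_block / STEP_DURATION_SECONDS:.0f} tx/sec")
```

At the 4.7M floor only 4 such transactions fit per block, versus 6148 at the genesis gas limit, so a drifting gas limit alone is enough to collapse throughput into the single digits.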

> There are two possible answers (kind of related):

1. I've just checked: the average gasUsed of one tx is 975911. I'm not sure whether that's a high value, though.
2. I'm not sure what exactly should be configured differently. Could you elaborate, please?

My chain spec:

"engine": {
  "authorityRound": {
    "params": {
      "stepDuration": "1",
      "validators": {
        "list": [
          "0x00Bd138aBD70e2F00903268F3Db08f2D25677C9e",
          "0x00Aa39d30F0D20FF03a22cCfc30B7EfbFca597C2",
          "0x002e28950558fbede1a9675cb113f0bd20912019"
        ]
      },
      "validateScoreTransition": 1000000000,
      "validateStepTransition": 1500000000,
      "maximumUncleCount": 1000000000
    }
  }
},
"params": {
  "maximumExtraDataSize": "0x20",
  "minGasLimit": "0x1388",
  "networkID": "0x2323",
  "gasLimitBoundDivisor": "0x400",
  "eip140Transition": 0,
  "eip211Transition": 0,
  "eip214Transition": 0,
  "eip658Transition": 0
},
"genesis": {
  "seal": {
    "authorityRound": {
      "step": "0x0",
      "signature": "0x0000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000"
    }
  },
  "difficulty": "0x20000",
  "gasLimit": "0x165A0BC00"
},

authority.toml:

[parity]
chain = "/parity/config/chain.json"

[rpc]
interface = "0.0.0.0"
cors = ["all"]
hosts = ["all"]
apis = ["web3", "eth", "net", "parity", "traces", "rpc", "personal", "parity_accounts", "signer", "parity_set"]

[account]
password = ["/parity/authority.pwd"]

[mining]
reseal_on_txs = "none"
tx_queue_size = 16384
tx_queue_mem_limit = 1024

So you start with a gas limit of 0x165A0BC00 = 6,000,000,000, but the default value for --gas-floor-target is 4.7M, so your authority will gradually decrease the gas limit until it reaches 4.7M.

Please run with --gas-floor-target 0x165A0BC00, or add a gas_floor_target = "0x165A0BC00" entry to the [mining] section of the config file, to keep the gas limit high all the time.
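The gradual drift can be modelled under one explicit assumption: each block moves the gas limit toward the node's target by the largest step the consensus bound allows, i.e. strictly less than parent_limit / gasLimitBoundDivisor (0x400 = 1024 in this spec). This is a hypothetical sketch, not Parity's block-building code, which may pick a smaller step inside that bound, so the block counts below are the fastest the limit can possibly move.

```python
def next_gas_limit(parent: int, target: int, bound_divisor: int = 1024) -> int:
    """Move one block's gas limit toward target by the largest allowed step.

    Models the bound |limit - parent| < parent // bound_divisor.
    """
    max_step = parent // bound_divisor - 1  # strict "<", hence the - 1
    if max_step < 1:
        raise ValueError("parent gas limit too small to move under this divisor")
    if parent > target:
        return max(target, parent - max_step)
    if parent < target:
        return min(target, parent + max_step)
    return parent


def blocks_to_reach(start: int, target: int, bound_divisor: int = 1024) -> int:
    """Count blocks until the gas limit first equals target."""
    limit, blocks = start, 0
    while limit != target:
        limit = next_gas_limit(limit, target, bound_divisor)
        blocks += 1
    return blocks


GENESIS_LIMIT = 0x165A0BC00   # 6,000,000,000 in this chain spec
DEFAULT_FLOOR = 4_700_000     # default --gas-floor-target quoted above

down = blocks_to_reach(GENESIS_LIMIT, DEFAULT_FLOOR)
up = blocks_to_reach(DEFAULT_FLOOR, GENESIS_LIMIT)
print(f"down to the floor: {down} blocks, back up: {up} blocks")
```

Under this assumption both directions take a little over 7,000 blocks, roughly two hours at one block per second, which is consistent with the limit having already bottomed out by block 50k and with speed only "slowly recovering" after the floor target is raised.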

@tomusdrw hmm, it seems that it helped. Speed is slowly recovering: it already sustains ~4tx/sec after 10 minutes of block propagation.

Can the gas limit somehow be forced back to the maximum, or do I have to send multiple transactions to restore it? Sorry if my question sounds stupid, but I'm really trying to understand the technology and the Parity implementation itself.

I also see a high uncle count (~20 in my test). How should I handle them, if at all? I can share new logs if required.

Please read about the gasLimitBoundDivisor parameter that you have configured in your spec file: the amount by which the gas limit can change (up or down) per block is part of the consensus mechanism and is controlled by this specific param.

So once the chain is set up, there is no way to change that (you would have to hard fork the network, but Parity does not (yet) support hard forks that change this particular parameter).

Uncle rate pretty much depends on couple of things:

- The block gas limit

- The block time

- Network latency

- Authorities hardware

The uncle rate will be lower if any of these parameters changes as follows (assuming the others remain constant):

- Decrease the block gas limit

- Increase the block time

- Lower the latency

- Run on better hardware

You can also disable uncle inclusion in the spec file if that is your concern, but it doesn't really solve the problem (just makes it invisible) - the authorities will still produce blocks that will get orphaned.

It's just physically impossible to process 10M-gas blocks (CPU/IO) every second on a smartphone connected via 2G.

So in short, to lower the uncle rate you either need to change network parameters or buy better hardware.

You could also profile what's the limiting factor for you:

- If it's CPU, you can consider trying out WASM or EVMJit, altering gas accounting (or disabling it), or implementing your own pre-compiled contract.

- If it's IO, then you can either buy a better disk, or buy more RAM and configure the node to use a RAM disk.

Thank you for the great reply.

I'd just like to clarify one thing:

> "gasLimitBoundDivisor" determines gas limit adjustment has the usual Ethereum value

I don't really get this part of the spec. It's quite unclear to me what "limit adjustment" means. Is it explained in more detail anywhere?

You can find more details in the Yellow Paper: http://gavwood.com/paper.pdf (see (45) and (46)). It's hardcoded as 1024 in that formula, but that's exactly this parameter.
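For reference, the gas limit constraint in the Yellow Paper version referenced above reads as follows, where $H_l$ is a block's gas limit and $P(H)_{H_l}$ is its parent's. Per the comment above, Parity's gasLimitBoundDivisor takes the place of the hard-coded 1024 (this spec sets it to 0x400 = 1024), and the spec's minGasLimit of 0x1388 = 5000 matches the last line.

$$
\begin{aligned}
H_l &< P(H)_{H_l} + \left\lfloor \frac{P(H)_{H_l}}{1024} \right\rfloor \\
H_l &> P(H)_{H_l} - \left\lfloor \frac{P(H)_{H_l}}{1024} \right\rfloor \\
H_l &\geq 5000
\end{aligned}
$$

Worked example: with a parent limit of 6,000,000,000, $\lfloor 6{,}000{,}000{,}000 / 1024 \rfloor = 5{,}859{,}375$, so a single block's gas limit can move by at most 5,859,374 gas in either direction; the limit changes geometrically by roughly 1/1024 per block, which is why drifting down to, or recovering from, a low floor takes thousands of blocks.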

@tomusdrw wow, that's complicated. It will take some time. Shame on everyone who told me math would never be required in my work.

I believe you've answered all my questions. All fixes basically fall into two buckets:

- Client-side nonce management and signing

- Proper network configuration: a high gas floor and gas limit

Hope this will be helpful for newcomers.

Thank you again, you're great.

Hello guys.

Sorry for the off-topic question, but what's the best way to test transaction speed? I see @Pzixel made some graphs... How can I test it the same way?

Thanks.

Those are RabbitMQ graphs, so you won't have them. However, I also use buythewhale/ethstats, which is pretty good.

@Pzixel how can I contact you?

Sorry for contacting you here...

Sorry, I'm not sure I can help you any further.

_Just set up the monitor from the tutorial; you can see everything clearly on it._

@Pzixel thanks :)

_I wanted to know how you increased the transaction speed._

_I already wrote everything above, see https://github.com/paritytech/parity/issues/8829#issuecomment-403028832 - we raised the gas floor and moved transaction signing to the client side instead of doing it in Parity._

Please keep it in English as my Russian is not good enough :)
