Openfoodnetwork: Clean up useless data from live DBs to improve db backups speed and potentially app performance

What we should change and why (this is tech debt)

We will soon have tasks to sanitize and truncate data from production #3579 This is useful to fetch data from live environments into testing environments.

Now we should create a new task for a different purpose: to reduce the live DB size by deleting data that is not needed.

Maybe it can be called ofn:data:clean_up

There are at least 3 good candidates to be deleted or truncated (keep only latest entries):

- spree_state_changes

- spree_log_entries

- sessions

This requires further investigation about what exactly are these tables and if they can really be deleted.

Deleting all the data in these 3 tables in the AUS live database reduces the ZIP database size from 485MB to 67MB!

Context

this was found as part of Spree 2 data migrations.

This issue can include #2247 but it's not specific to sessions.

Impact and timeline

This will make backups run faster and take a lot less space.

And it can improve app performance: #3099

All 20 comments

I think this requires more than a rake task and fits more in ofn-install than here. In the past I investigated https://www.percona.com/doc/percona-toolkit/LATEST/pt-archiver.html for instance but never got to use it in production.

more than a rake task

there are many things you can do in this space, the scope here would be to simply delete unnecessary entries.fits more in ofn-install than here

could be, I am not sure, I could easily see a job running that runs the tasks regularly (for example, delete sessions older than 1 month), that would be better in the app code, right?pt-archiver.html

the scope of this issue is not about archiving data, it's really just the simple case of deleting data that is not necessary for the operation

To break it down by table:

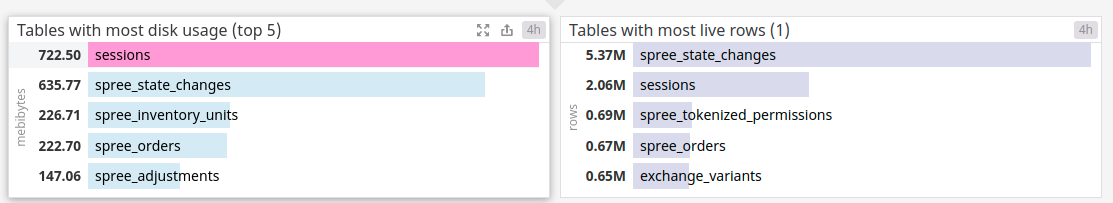

Aus production

- full backup: 480 MB

- without sessions: 107 MB

- without log entries: 106 MB

- without state changes: 70 MB

So I think sessions is a big one. Then let's look at state changes. Log entries is not really worth it but we can delete it while we are at it.

None of this really affects the performance of the app directly, I think. It's only the backup generation (which can affect the performance of the app).

Given these numbers it would be more about disk-space savings, right? No idea if that is currently a problem. Worth checking to be sure.

For me it's more about processing times. Creating or downloading backups in half the time is great.

Current numbers in FR production

Some of our databases are reaching ~2.5 million records in the sessions table. We maintain 2 indexes on that table, which have to be updated when a record is added or changed, plus we have to query it on every pageload or endpoint. We even hit it when serving an asset like an image for some reason. :see_no_evil:

The spree_state_changes tables are reaching 7-8 million records, and I can't even figure out if we use or need that data at all...

We even hit it when serving an asset like an image for some reason.

What? Did you test that in production or development? I can understand development because it needs the asset pipeline but nginx should handle this in production.

I just requested https://staging.openfoodnetwork.org.au/system/content_configurations/logos//original/OFN_Aus.png?2020 and the unicorn logs don't show any request while nginx logs show it. I think this is okay.

Sessions

The session issue is described in #2247.

Spree state changes

This is purely for logging, like we are introducing more and more subscription logging, Spree has state change logging. It can be helpful when investigating bugs but I doubt we need data older than a year. We also have backups for old data.

It can be helpful when investigating bugs but I doubt we need data older than a year

to be honest, I don't even think we need more than a month.

we are introducing more and more subscription logging, Spree has state change logging

Yeah, I'm just wondering if this state change logging has ever actually been used in the entire history of OFN? 7 million records that have never been looked at... :see_no_evil:

Just a heads up the database backup task that runs every 4 hours seems to be negatively impacting performance at least on French Prod, and I assume on others. CPU and and disk latency spikes, lasting around 5 minutes... I think even some fairly conservative pruning of these monster tables would be a big improvement here.

Can we just make a nice rake task and run it via schedule.rb?

Yes, I would delete all state changes older than a year.

Can we just make a nice rake task and run it via schedule.rb?

What I had in mind was to extract https://github.com/openfoodfoundation/openfoodnetwork/blob/fabddbd1c0ce0c7ef1cb1507109c7258d7740851/lib/tasks/data/truncate_data.rb#L50-L54 into a new rake task and run it every N months. It's a 5min task and it could fix the burden of the backups

Yep, that sounds awesome. Once a month?

Looks great. I would use longer periods though. Maybe keep data for 2 years? We can always reduce those periods later. Better safe than sorry.

I've never found myself in a situation where I needed any state change or log entry older than a couple of months. On the other hand, old sessions are not needed at all either but let's start with something and then we'll see if we need to tweak the time-window.

We should probably be finding old sessions by updated_at instead of created_at, right?

yep, might be safer but I wonder if they are really updated or just created

We should probably be finding old sessions by updated_at instead of created_at, right?

I wonder if they are really updated or just created

We are changing session variables regularly (session_id, flash messages). It updates the session record in the database every time.