Nomad: [question] Nomad 0.4.1 registers checks with Consul using 127.0.0.1 even when bind_addr is 172.30.x.x

How do I make Nomad register its checks correctly when Consul cannot reach Nomad via 127.0.0.1?

Nomad v0.4.1 (64-bit Linux, Ubuntu 16.04 LTS)

Consul 64bit v0.5.2, Consul Protocol: 2 (Understands back to: 1)

When Nomad starts, it logs an error during registration:

2016/11/14 11:38:21.415014 [DEBUG] consul.syncer: error in syncing: 1 error(s) occurred:

* 2 error(s) occurred:

* Unexpected response code: 400 (Must provide TTL or Script and Interval!)

* Unexpected response code: 400 (Must provide TTL or Script and Interval!)
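The 400 response states the validation rule the Consul 0.5.2 agent applied: a check needs either a TTL, or both a Script and an Interval. The Go sketch below mirrors only that stated rule. It uses a hypothetical `checkDef` struct, not Consul's or Nomad's own types. One plausible reading, which this thread does not confirm, is that the two rejected registrations were check types this older agent did not recognize, such as TCP checks (assumed here to have arrived in a later Consul release). Such checks carry neither field, so they would fail the rule.

```go
package main

import (
	"errors"
	"fmt"
)

// checkDef is a hypothetical, trimmed-down check definition. It keeps the
// fields named in the error message, plus a TCP target for contrast.
type checkDef struct {
	Name     string
	TTL      string
	Script   string
	Interval string
	TCP      string
}

// validateLegacy applies only the rule quoted in the 400 response: a check
// must have a TTL, or both a Script and an Interval. Other fields (such as
// TCP) are not considered, so a TCP-only check is rejected.
func validateLegacy(c checkDef) error {
	if c.TTL != "" {
		return nil
	}
	if c.Script != "" && c.Interval != "" {
		return nil
	}
	return errors.New("Must provide TTL or Script and Interval!")
}

func main() {
	checks := []checkDef{
		{Name: "ttl", TTL: "30s"},
		{Name: "script", Script: "/bin/true", Interval: "10s"},
		{Name: "tcp", TCP: "172.30.4.55:4647", Interval: "10s"},
	}
	for _, c := range checks {
		// A nil error prints as <nil>.
		fmt.Printf("%-6s -> %v\n", c.Name, validateLegacy(c))
	}
}
```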

If I list the checks in Consul, I get:

    {
      "841860023284daa7c428406c87aa81ede8068c0e": {
        "Node": "67695c6b6ac4",
        "CheckID": "841860023284daa7c428406c87aa81ede8068c0e",
        "Name": "Nomad Client HTTP Check",
        "Status": "critical",
        "Notes": "",
        "Output": "Get http://127.0.0.1:4646/v1/agent/servers: dial tcp 127.0.0.1:4646: connection refused",
        "ServiceID": "_nomad-client-nomad-client-http",
        "ServiceName": "nomad-client"
      },
      "bcbd506a35931cca712b453a9dd8e1a463f01d00": {
        "Node": "67695c6b6ac4",
        "CheckID": "bcbd506a35931cca712b453a9dd8e1a463f01d00",
        "Name": "Nomad Server HTTP Check",
        "Status": "critical",
        "Notes": "",
        "Output": "Get http://127.0.0.1:4646/v1/status/peers: dial tcp 127.0.0.1:4646: connection refused",
        "ServiceID": "_nomad-server-nomad-http",
        "ServiceName": "nomad"
      }
    }
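A small decoder is enough to pull the failing checks out of a listing like this one. This sketch assumes the JSON came from the Consul agent's `/v1/agent/checks` HTTP endpoint, which returns checks keyed by CheckID. The `agentCheck` struct and `criticalChecks` helper are hypothetical names, and the embedded sample is the listing above.

```go
package main

import (
	"encoding/json"
	"fmt"
	"sort"
)

// agentCheck mirrors the fields shown in the check listing.
type agentCheck struct {
	Node        string
	CheckID     string
	Name        string
	Status      string
	Notes       string
	Output      string
	ServiceID   string
	ServiceName string
}

// sample is the listing from this report, verbatim apart from whitespace.
const sample = `{
  "841860023284daa7c428406c87aa81ede8068c0e": {
    "Node": "67695c6b6ac4",
    "CheckID": "841860023284daa7c428406c87aa81ede8068c0e",
    "Name": "Nomad Client HTTP Check",
    "Status": "critical",
    "Notes": "",
    "Output": "Get http://127.0.0.1:4646/v1/agent/servers: dial tcp 127.0.0.1:4646: connection refused",
    "ServiceID": "_nomad-client-nomad-client-http",
    "ServiceName": "nomad-client"
  },
  "bcbd506a35931cca712b453a9dd8e1a463f01d00": {
    "Node": "67695c6b6ac4",
    "CheckID": "bcbd506a35931cca712b453a9dd8e1a463f01d00",
    "Name": "Nomad Server HTTP Check",
    "Status": "critical",
    "Notes": "",
    "Output": "Get http://127.0.0.1:4646/v1/status/peers: dial tcp 127.0.0.1:4646: connection refused",
    "ServiceID": "_nomad-server-nomad-http",
    "ServiceName": "nomad"
  }
}`

// criticalChecks decodes a listing keyed by CheckID and returns
// "Name: Output" for every check whose Status is "critical", sorted.
func criticalChecks(raw []byte) ([]string, error) {
	var checks map[string]agentCheck
	if err := json.Unmarshal(raw, &checks); err != nil {
		return nil, err
	}
	var out []string
	for _, c := range checks {
		if c.Status == "critical" {
			out = append(out, c.Name+": "+c.Output)
		}
	}
	sort.Strings(out)
	return out, nil
}

func main() {
	lines, err := criticalChecks([]byte(sample))
	if err != nil {
		fmt.Println("decode failed:", err)
		return
	}
	for _, l := range lines {
		fmt.Println(l)
	}
}
```

Both outputs point at 127.0.0.1:4646, which is the symptom this issue describes.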

Nomad config is:

    bind_addr = "172.30.4.55"
    data_dir = "/opt/nomad/"

    advertise {
      # We need to specify our host's IP because we can't
      # advertise 0.0.0.0 to other nodes in our cluster.
      http = "172.30.4.55:4646"
      # http = "127.0.0.1:4646"
      rpc = "172.30.4.55:4647"
      # rpc = "127.0.0.1:4647"
      serf = "172.30.4.55:4648"
    }

    server {
      enabled = true
      bootstrap_expect = 1
    }

    client {
      enabled = true
      network_speed = 100
      # network_interface = "172.30.4.55"
      options {
        "driver.raw_exec.enable" = "1"
      }
    }

    consul {
      address = "172.30.4.55:8500"
      #address = "127.0.0.1:8500"
    }
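The comment in the advertise block states the key constraint. An advertised address must be one that other nodes can dial, so neither 0.0.0.0 nor 127.0.0.1 qualifies. The check listing above shows what happens when a check targets 127.0.0.1 and Consul cannot reach Nomad there. Below is a minimal sanity check that uses only Go's standard `net` package. `checkAdvertise` is a hypothetical helper, and it deliberately accepts IP literals only, not hostnames.

```go
package main

import (
	"fmt"
	"net"
)

// checkAdvertise reports whether host:port looks usable as an address that
// another machine, or a Consul agent in another network namespace, can dial.
// It rejects unspecified (0.0.0.0, ::) and loopback (127.0.0.0/8, ::1) hosts.
func checkAdvertise(hostport string) error {
	host, _, err := net.SplitHostPort(hostport)
	if err != nil {
		return err // e.g. missing port
	}
	ip := net.ParseIP(host)
	if ip == nil {
		return fmt.Errorf("%q is not an IP literal", host)
	}
	if ip.IsUnspecified() {
		return fmt.Errorf("%s is unspecified; peers cannot dial it", ip)
	}
	if ip.IsLoopback() {
		return fmt.Errorf("%s is loopback; only the same network namespace can dial it", ip)
	}
	return nil
}

func main() {
	for _, a := range []string{"172.30.4.55:4646", "127.0.0.1:4646", "0.0.0.0:4646"} {
		fmt.Printf("%-18s %v\n", a, checkAdvertise(a))
	}
}
```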

Separately, Nomad's checks_use_advertise option in the consul block does not seem to work. The agent rejects it with:

* consul -> invalid key: checks_use_advertise


@jrusiecki Would you mind trying 0.5.0 RC2? Unfortunately we don't have versioned docs yet, and there were many doc fixes, so we decided to publish the RC docs. checks_use_advertise is a new field in 0.5.

Thanks, 0.5.0 RC2 registers correctly both with and without checks_use_advertise.

Sweet! Thanks for following up, @jrusiecki! Closing this issue.

@dadgar I seem to be having this issue with both the released Nomad 0.5.0 and the linked 0.5.0-rc2.

I am running Nomad in a container without the "--net host" flag.

Nomad starts and registers with Consul using the following config (this is just a test container for now):

    {
      "server": {
        "enabled": true,
        "bootstrap_expect": 1
      },
      "datacenter": "eu1",
      "region": "EU",
      "data_dir": "/nomad/data/",
      "bind_addr": "0.0.0.0",
      "advertise": {
        "http": "172.20.20.10",
        "rpc": "172.20.20.10",
        "serf": "172.20.20.10"
      },
      "consul": {
        "checks_use_advertise": true,
        "address": "172.20.20.10:80"
      },
      "leave_on_terminate": true,
      "enable_debug": true
    }

However, both versions give me the same result: Consul's checks dial 0.0.0.0 instead of the advertise address.

Logs to follow:

Nov 24 21:29:39 172.20.20.10 consul: 2016/11/24 21:29:39 [WARN] agent: socket connection failed '0.0.0.0:4647': dial tcp 0.0.0.0:4647: getsockopt: connection refused

Nov 24 21:29:43 172.20.20.10 consul: 2016/11/24 21:29:43 [WARN] agent: socket connection failed '0.0.0.0:4648': dial tcp 0.0.0.0:4648: getsockopt: connection refused

Nov 24 21:29:44 172.20.20.10 consul: 2016/11/24 21:29:44 [WARN] agent: http request failed 'http://0.0.0.0:4646/v1/status/peers': Get http://0.0.0.0:4646/v1/status/peers: dial tcp 0.0.0.0:4646: getsockopt: connection refused

Nov 24 21:29:49 172.20.20.10 consul: 2016/11/24 21:29:49 [WARN] agent: socket connection failed '0.0.0.0:4647': dial tcp 0.0.0.0:4647: getsockopt: connection refused

Nov 24 21:29:53 172.20.20.10 consul: 2016/11/24 21:29:53 [WARN] agent: socket connection failed '0.0.0.0:4648': dial tcp 0.0.0.0:4648: getsockopt: connection refused

Nov 24 21:29:54 172.20.20.10 consul: 2016/11/24 21:29:54 [WARN] agent: http request failed 'http://0.0.0.0:4646/v1/status/peers': Get http://0.0.0.0:4646/v1/status/peers: dial tcp 0.0.0.0:4646: getsockopt: connection refused

Nov 24 21:29:59 172.20.20.10 consul: 2016/11/24 21:29:59 [WARN] agent: socket connection failed '0.0.0.0:4647': dial tcp 0.0.0.0:4647: getsockopt: connection refused

Nov 24 21:30:03 172.20.20.10 consul: 2016/11/24 21:30:03 [WARN] agent: socket connection failed '0.0.0.0:4648': dial tcp 0.0.0.0:4648: getsockopt: connection refused

#

Nov 24 21:28:56 172.20.20.10 nomad: ==> Nomad agent configuration:

Nov 24 21:28:56 172.20.20.10 nomad: Atlas: <disabled>

Nov 24 21:28:56 172.20.20.10 nomad: Client: false

Nov 24 21:28:56 172.20.20.10 nomad: Log Level: INFO

Nov 24 21:28:56 172.20.20.10 nomad: Region: EU (DC: eu1)

Nov 24 21:28:56 172.20.20.10 nomad: Server: true

Nov 24 21:28:56 172.20.20.10 nomad: Version: 0.5.0

Nov 24 21:28:56 172.20.20.10 nomad: ==> Nomad agent started! Log data will stream in below:

Nov 24 21:28:56 172.20.20.10 nomad: 2016/11/24 21:28:56 [INFO] serf: EventMemberJoin: 49f3747e73ce.EU 172.20.20.10

Nov 24 21:28:56 172.20.20.10 nomad: 2016/11/24 21:28:56.809499 [INFO] nomad: starting 1 scheduling worker(s) for [service batch system _core]

Nov 24 21:28:56 172.20.20.10 nomad: 2016/11/24 21:28:56 [INFO] raft: Node at 172.20.20.10:4647 [Follower] entering Follower state (Leader: "")

Nov 24 21:28:56 172.20.20.10 nomad: 2016/11/24 21:28:56.814531 [INFO] nomad: adding server 49f3747e73ce.EU (Addr: 172.20.20.10:4647) (DC: eu1)

Nov 24 21:28:56 172.20.20.10 consul: 2016/11/24 21:28:56 [INFO] agent: Synced service '_nomad-server-nomad-rpc'

Nov 24 21:28:56 172.20.20.10 consul: 2016/11/24 21:28:56 [INFO] agent: Synced service '_nomad-server-nomad-serf'

Nov 24 21:28:56 172.20.20.10 consul: 2016/11/24 21:28:56 [INFO] agent: Synced service '_nomad-server-nomad-http'

Nov 24 21:28:58 172.20.20.10 nomad: 2016/11/24 21:28:58 [WARN] raft: Heartbeat timeout from "" reached, starting election

Nov 24 21:28:58 172.20.20.10 nomad: 2016/11/24 21:28:58 [INFO] raft: Node at 172.20.20.10:4647 [Candidate] entering Candidate state

Nov 24 21:28:58 172.20.20.10 nomad: 2016/11/24 21:28:58 [INFO] raft: Election won. Tally: 1

Nov 24 21:28:58 172.20.20.10 nomad: 2016/11/24 21:28:58 [INFO] raft: Node at 172.20.20.10:4647 [Leader] entering Leader state

Nov 24 21:28:58 172.20.20.10 nomad: 2016/11/24 21:28:58.726776 [INFO] nomad: cluster leadership acquired

Nov 24 21:28:58 172.20.20.10 nomad: 2016/11/24 21:28:58 [INFO] raft: Disabling EnableSingleNode (bootstrap)

Nov 24 21:29:36 172.20.20.10 nomad: ==> Failed to check for updates: Get https://checkpoint-api.hashicorp.com/v1/check/nomad?arch=amd64&os=linux&signature=3044687d-3574-426f-13be-429c598e4964&version=0.5.0: dial tcp: i/o timeout

Nov 24 21:53:48 172.20.20.10 nomad: ==> Caught signal: terminated

Nov 24 21:53:48 172.20.20.10 nomad: ==> Gracefully shutting down agent...

Nov 24 21:53:48 172.20.20.10 nomad: 2016/11/24 21:53:48.656333 [INFO] nomad: server starting leave

Nov 24 21:53:48 172.20.20.10 nomad: 2016/11/24 21:53:48 [INFO] serf: EventMemberLeave: 49f3747e73ce.EU 172.20.20.10

Nov 24 21:53:48 172.20.20.10 nomad: 2016/11/24 21:53:48.656548 [INFO] agent: requesting shutdown

Nov 24 21:53:48 172.20.20.10 nomad: 2016/11/24 21:53:48.656552 [INFO] nomad: shutting down server

Nov 24 21:53:48 172.20.20.10 consul: 2016/11/24 21:53:48 [INFO] agent: Deregistered service '_nomad-server-nomad-rpc'

Nov 24 21:53:48 172.20.20.10 consul: 2016/11/24 21:53:48 [INFO] agent: Deregistered service '_nomad-server-nomad-serf'

Nov 24 21:53:48 172.20.20.10 consul: 2016/11/24 21:53:48 [INFO] agent: Deregistered service '_nomad-server-nomad-http'

Nov 24 21:53:48 172.20.20.10 nomad: 2016/11/24 21:53:48.676333 [INFO] agent: shutdown complete

#

Nov 24 22:09:25 172.20.20.10 nomad: ==> Starting Nomad agent...

Nov 24 22:09:25 172.20.20.10 nomad: ==> Nomad agent configuration:

Nov 24 22:09:25 172.20.20.10 nomad: Atlas: <disabled>

Nov 24 22:09:25 172.20.20.10 nomad: Client: false

Nov 24 22:09:25 172.20.20.10 nomad: Log Level: INFO

Nov 24 22:09:25 172.20.20.10 nomad: Region: EU (DC: eu1)

Nov 24 22:09:25 172.20.20.10 nomad: Server: true

Nov 24 22:09:25 172.20.20.10 nomad: Version: 0.5.0rc2

Nov 24 22:09:25 172.20.20.10 nomad: ==> Nomad agent started! Log data will stream in below:

Nov 24 22:09:25 172.20.20.10 nomad: 2016/11/24 22:09:25 [INFO] serf: EventMemberJoin: 18051f068f29.EU 172.20.20.10

Nov 24 22:09:25 172.20.20.10 nomad: 2016/11/24 22:09:25.654705 [INFO] nomad: starting 1 scheduling worker(s) for [system service batch _core]

Nov 24 22:09:25 172.20.20.10 nomad: 2016/11/24 22:09:25 [INFO] raft: Node at 172.20.20.10:4647 [Follower] entering Follower state (Leader: "")

Nov 24 22:09:25 172.20.20.10 nomad: 2016/11/24 22:09:25.660100 [INFO] nomad: adding server 18051f068f29.EU (Addr: 172.20.20.10:4647) (DC: eu1)

Nov 24 22:09:25 172.20.20.10 consul: 2016/11/24 22:09:25 [INFO] agent: Synced service '_nomad-server-nomad-rpc'

Nov 24 22:09:25 172.20.20.10 consul: 2016/11/24 22:09:25 [INFO] agent: Synced service '_nomad-server-nomad-serf'

Nov 24 22:09:25 172.20.20.10 consul: 2016/11/24 22:09:25 [INFO] agent: Synced service '_nomad-server-nomad-http'

Nov 24 22:09:27 172.20.20.10 nomad: 2016/11/24 22:09:27 [WARN] raft: Heartbeat timeout from "" reached, starting election

Nov 24 22:09:27 172.20.20.10 nomad: 2016/11/24 22:09:27 [INFO] raft: Node at 172.20.20.10:4647 [Candidate] entering Candidate state

Nov 24 22:09:27 172.20.20.10 nomad: 2016/11/24 22:09:27 [INFO] raft: Election won. Tally: 1

Nov 24 22:09:27 172.20.20.10 nomad: 2016/11/24 22:09:27 [INFO] raft: Node at 172.20.20.10:4647 [Leader] entering Leader state

Nov 24 22:09:27 172.20.20.10 nomad: 2016/11/24 22:09:27.131921 [INFO] nomad: cluster leadership acquired

Nov 24 22:09:27 172.20.20.10 nomad: 2016/11/24 22:09:27 [INFO] raft: Disabling EnableSingleNode (bootstrap)

Nov 24 22:09:56 172.20.20.10 nomad: ==> Newer Nomad version available: 0.5.0 (currently running: 0.5.0-rc2)

Nov 24 22:11:24 172.20.20.10 nomad: ==> Caught signal: terminated

Nov 24 22:11:24 172.20.20.10 nomad: ==> Gracefully shutting down agent...

Nov 24 22:11:24 172.20.20.10 nomad: 2016/11/24 22:11:24.304867 [INFO] nomad: server starting leave

Nov 24 22:11:24 172.20.20.10 nomad: 2016/11/24 22:11:24 [INFO] serf: EventMemberLeave: 18051f068f29.EU 172.20.20.10

Nov 24 22:11:24 172.20.20.10 nomad: 2016/11/24 22:11:24.305018 [INFO] agent: requesting shutdown

Nov 24 22:11:24 172.20.20.10 nomad: 2016/11/24 22:11:24.305018 [INFO] nomad: shutting down server

Nov 24 22:11:24 172.20.20.10 consul: 2016/11/24 22:11:24 [INFO] agent: Deregistered service '_nomad-server-nomad-http'

Nov 24 22:11:24 172.20.20.10 consul: 2016/11/24 22:11:24 [INFO] agent: Deregistered service '_nomad-server-nomad-rpc'

Nov 24 22:11:24 172.20.20.10 consul: 2016/11/24 22:11:24 [INFO] agent: Deregistered service '_nomad-server-nomad-serf'

Nov 24 22:11:24 172.20.20.10 nomad: 2016/11/24 22:11:24.322278 [INFO] agent: shutdown complete

Nov 24 22:11:25 172.20.20.10 consul: 2016/11/24 22:11:24 [INFO] agent: Synced service '_nomad-server-nomad-serf'

Nov 24 22:11:25 172.20.20.10 consul: 2016/11/24 22:11:24 [INFO] agent: Synced service '_nomad-server-nomad-http'

Nov 24 22:11:25 172.20.20.10 consul: 2016/11/24 22:11:24 [INFO] agent: Synced service '_nomad-server-nomad-rpc'

@morfien101 Thanks for the report. What version of Consul are you running? Can you paste your Consul config too, so we can try to reproduce? Going to reopen!

@dadgar I will get this back to the state that created this issue and post all the details as soon as I can.

Hi @dadgar

I managed to get some time tonight to look at this and can reliably recreate the issue.

Consul Version: 'v0.7.1'

Config:

    {
      "datacenter": "eu1",
      "data_dir": "/opt/consul",
      "log_level": "INFO",
      "server": true,
      "leave_on_terminate": true,
      "ui": true,
      "client_addr": "0.0.0.0",
      "advertise_addr": "172.20.20.200",
      "bind_addr": "0.0.0.0",
      "encrypt": "b25lZGlydHlwYXNzd29yZA==",
      "dns_config": {
        "node_ttl": "3s",
        "service_ttl": {
          "*": "3s"
        },
        "enable_truncate": true,
        "only_passing": true
      },
      "recursors": ["10.0.2.3", "8.8.8.8"],
      "bootstrap": true
    }

I have also created a vagrant file that can reproduce this reliably.

https://github.com/morfien101/nomad_bug

@morfien101 Thank you so much for doing that! Will get this fixed in the coming weeks!

I have a related issue with this.

My nodes have two interfaces, eth0 and eth1, and eth1 is the publicly accessible one:

- I have checks_use_advertise = true on all Nomad clients

- I have advertise set to the eth1 IP address

- All Nomad services are registered using the eth1 IP, as expected

HOWEVER:

- When I run a job, the job is registered in Consul with the eth0 IP.

- As a result, looking up the apps in Consul returns invalid IP:port mappings for the services, so I cannot reach my apps through a proxy such as ZUUL.

--> Either checks_use_advertise should apply to every service registration Nomad performs, or there should be more control over service registration on a per-job basis. I would prefer extending the behaviour of checks_use_advertise.

As a follow-up: I got it to work using (an undocumented feature of) the client network_interface config option:

client { network_interface = "eth1" }

With this, Nomad started registering jobs with the "correct" IP.

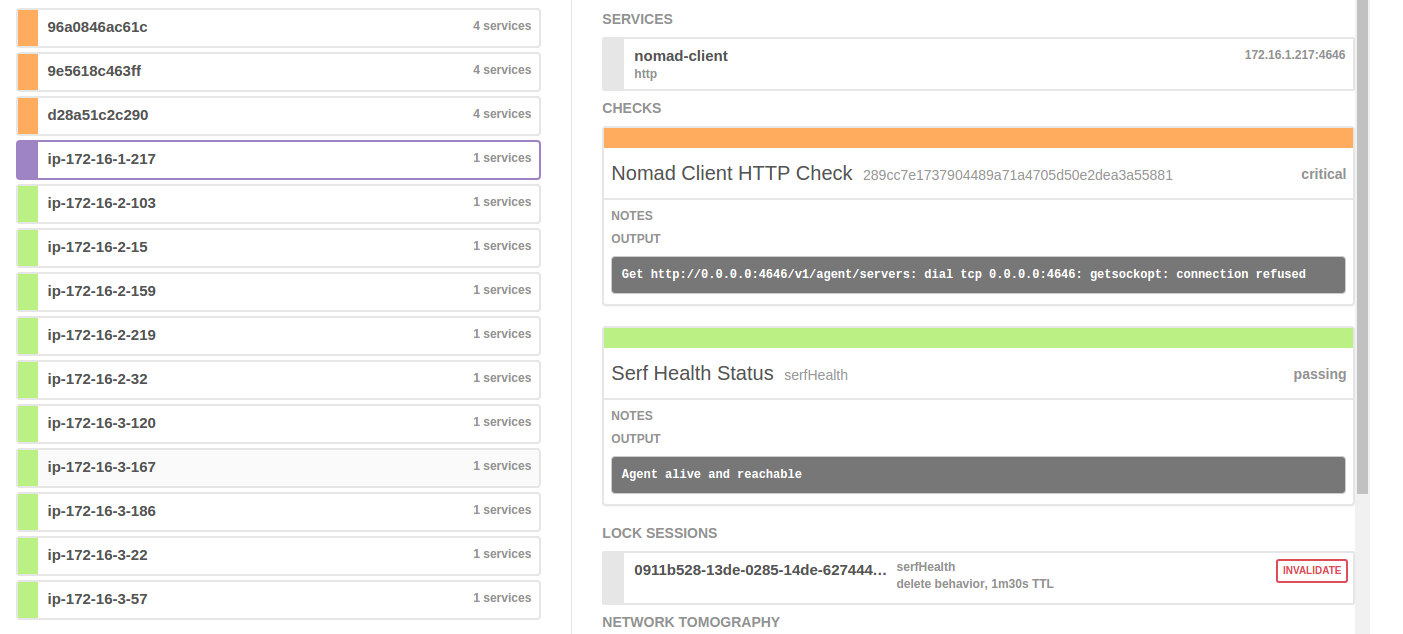

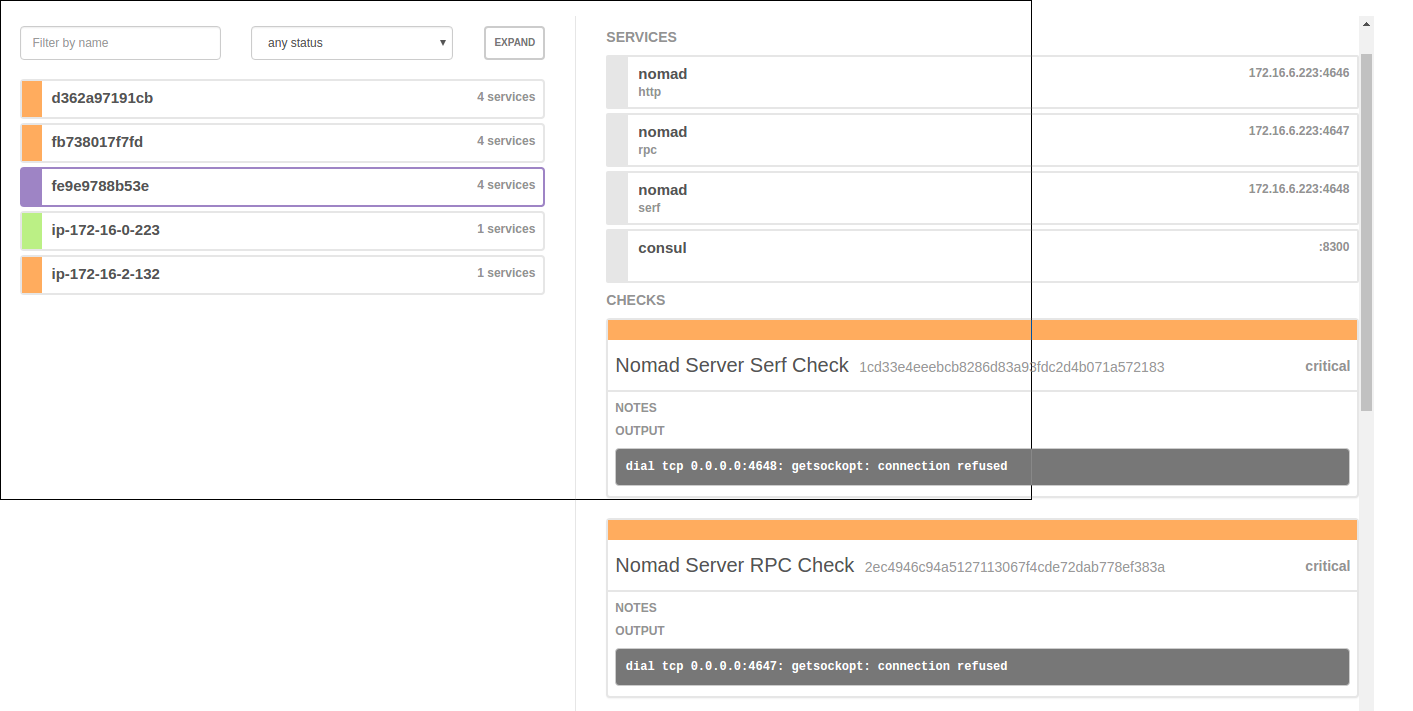

Same problem here with Consul 0.7.4 and Nomad 0.5.4 in separate containers :( The Nomad registrations themselves look valid:

    "_nomad-server-nomad-http": {
      "ID": "_nomad-server-nomad-http",
      "Service": "nomad",
      "Tags": [
        "http"
      ],
      "Address": "172.16.11.130",
      "Port": 4646,
      "EnableTagOverride": false,
      "CreateIndex": 0,
      "ModifyIndex": 0
    },

However, the checks repeatedly try to connect to 0.0.0.0, failing with:

dial tcp 0.0.0.0:4647: getsockopt: connection refused

Setting checks_use_advertise = true doesn't do anything.

Seriously, this blocks upgrading any containerized deployment to 0.5.

All the green nodes are 0.4.1, by the way.
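The registration above carries a routable address, 172.16.11.130:4646, while the checks dial 0.0.0.0. The sketch below decodes a service listing of the shape shown and prints each registered host:port, which makes that comparison quick. It assumes the listing came from the agent's `/v1/agent/services` endpoint, keyed by service ID. `agentService` and `serviceAddrs` are hypothetical names, and the sample is the fragment above wrapped in braces.

```go
package main

import (
	"encoding/json"
	"fmt"
	"net"
	"strconv"
)

// agentService keeps the fields of interest from one service entry.
type agentService struct {
	ID      string
	Service string
	Tags    []string
	Address string
	Port    int
}

// sample is the registration from this comment, wrapped in braces so it
// forms a complete JSON object.
const sample = `{
  "_nomad-server-nomad-http": {
    "ID": "_nomad-server-nomad-http",
    "Service": "nomad",
    "Tags": ["http"],
    "Address": "172.16.11.130",
    "Port": 4646,
    "EnableTagOverride": false,
    "CreateIndex": 0,
    "ModifyIndex": 0
  }
}`

// serviceAddrs maps each service ID to the host:port it was registered with.
// Fields not declared on agentService are ignored by json.Unmarshal.
func serviceAddrs(raw []byte) (map[string]string, error) {
	var svcs map[string]agentService
	if err := json.Unmarshal(raw, &svcs); err != nil {
		return nil, err
	}
	out := make(map[string]string, len(svcs))
	for id, s := range svcs {
		out[id] = net.JoinHostPort(s.Address, strconv.Itoa(s.Port))
	}
	return out, nil
}

func main() {
	addrs, err := serviceAddrs([]byte(sample))
	if err != nil {
		fmt.Println("decode failed:", err)
		return
	}
	for id, a := range addrs {
		fmt.Printf("%s registered at %s\n", id, a)
	}
}
```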

@dennybaa I had the same problem and found that it works on the current master. I believe this commit fixes it: https://github.com/hashicorp/nomad/commit/d212d40b18db1985531924f426161b6e600144c6

@jens-solarisbank Thanks for bumping this. This should be fixed as of that release. Please test 0.5.5 RC1: https://releases.hashicorp.com/nomad/0.5.5-rc1/

If it is still not working we can re-open! Thanks!

@jens-solarisbank Thanks for the details. @dadgar Hello, it doesn't seem to work :(

Here's the config of the "on-the-host" Nomad agent: https://gist.github.com/b5bf4ec36f099b07cf4eb3e33f97ef77

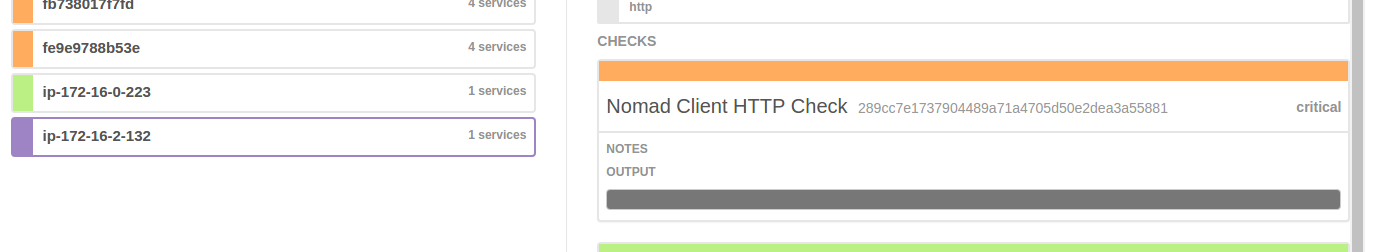

And here is its check, which doesn't work.

All checks on the Nomad servers (which run inside containers) also fail with dial tcp 0.0.0.0:4648: getsockopt: connection refused. Here's their config for details: https://gist.github.com/e8bfb38ab73da1d3df244dc28bf5a5a8

So IMO nothing's really changed :(

Please reopen.

@jens-solarisbank @dadgar Also, I haven't set checks_use_advertise = true for the containerized Nomad agents. Could that be the issue?

@dennybaa Hey, I just tried it on the RC and it is working. You have to set checks_use_advertise = true if you want the checks to be registered with the advertise address. Otherwise, the bind address is used.

The configs you linked do not set it to true. To be clear, this is fixed in the latest RC: https://releases.hashicorp.com/nomad/0.5.5-rc1/
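The rule stated above is small enough to write down directly: checks use the advertise address when checks_use_advertise = true, and the bind address otherwise. The Go sketch below is illustrative only. `agentAddrs` and `checkTarget` are hypothetical names, and the addresses are the RPC pair from the containerized config earlier in this thread.

```go
package main

import "fmt"

// agentAddrs is a hypothetical pairing of one listener's bind and advertise
// addresses.
type agentAddrs struct {
	Bind      string // e.g. "0.0.0.0:4647"
	Advertise string // e.g. "172.20.20.10:4647"
}

// checkTarget applies the rule described above: with checks_use_advertise
// enabled the check uses the advertise address, otherwise the bind address.
func checkTarget(a agentAddrs, checksUseAdvertise bool) string {
	if checksUseAdvertise {
		return a.Advertise
	}
	return a.Bind
}

func main() {
	rpc := agentAddrs{Bind: "0.0.0.0:4647", Advertise: "172.20.20.10:4647"}
	fmt.Println("checks_use_advertise = false:", checkTarget(rpc, false))
	fmt.Println("checks_use_advertise = true: ", checkTarget(rpc, true))
}
```

With bind_addr set to 0.0.0.0, as in the containerized configs, only the `true` branch gives Consul an address it can reach.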