- Version: v11.12.0

- Platform: Darwin imac 18.5.0 Darwin Kernel Version 18.5.0: Mon Mar 11 20:40:32 PDT 2019; root:xnu-4903.251.3~3/RELEASE_X86_64 x86_64

- Subsystem: net

$ cat s.js

'use strict';

const net = require('net');

const data = Buffer.alloc(1024);

const server = net.createServer();

server.on('connection', function(socket) {

socket.on('error', console.error);

let res = true;

do {

res = socket.write(data);

} while (res);

});

server.listen(8080, '127.0.0.1', function() {

console.log('Server listening on 127.0.0.1:8080');

});

$ cat c.js

'use strict';

const net = require('net');

const socket = net.createConnection({

host: '127.0.0.1',

port: 8080

});

socket.on('connect', function() {

console.log('connected');

});

- Start the server.

- Start the client in a separate process.

Expected behavior

The client process does not exit.

Actual behavior

The client process exit after the 'connect' event is emitted.

Additional details

The example works as expected if no data is written on the server socket or if the client socket is resumed.

All 22 comments

It doesn't seem to be a regression as I can reproduce also in Node.js v4.x, v6.x, v8.x, and v10.x but is it working as intended?

It's expected the client process exits because the socket was closed (you can verify this by adding a 'close' event handler on the socket object in the server process), but the actual bug seems to be that no 'close' and/or 'end' event is being emitted on the socket object in the client process.

EDIT: actually now that I look at it some more, it's hard to tell who is actually closing the connection.

Yes, who is closing the connection?

The behaviour looks correct to me. The client is exiting because the uv event loop isn't waiting for anything. Since the loop isn't waiting for anything, there are no pending events, node will never have anything to do, and it exits. There is a socket, that's true, but the presence of an open descriptor is not enough to keep node alive, not if node isn't waiting for i/o on that descriptor, which is why the resume keeps it alive.

@sam-github then why it doesn't exit if no data is written on the server socket?

Interesting point. @bnoordhuis , does node or uv do a single speculative read in anticipation of data arriving, with the effect of keeping the loop alive?

It seems that if less than 16k bytes are written the client socket is kept alive, otherwise it is closed.

with this the client socket is not closed.

$ dd if=/dev/urandom of=data bs=1 count=16383

$ cat data - | nc -l 8080

with this it is

$ dd if=/dev/urandom of=data bs=1 count=16384

$ cat data - | nc -l 8080

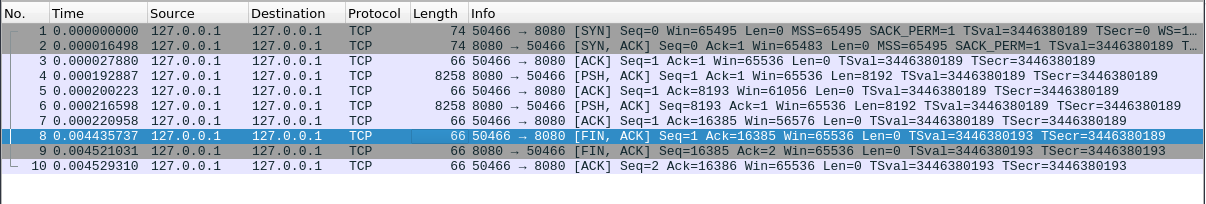

Here is what Wireshark shows:

so yeah the client closes the connection after receiving 16 KB but why, shouldn't it just stop reading?

// @mcollina

It seems that the problem is that we stop reading from the socket here because there's too much data. Calling readStop() causes calling uv_read_stop() which in turn removes the uv_tcp_t handle from the list of active_handles which causes the loop to exit as there are no more active handles or requests.

Not having a data listener, shouldn't we be throwing some kind of error as we cannot be buffering data indefinitely?

Perhaps but it doesn't help, the user might use a combination of 'readable' events and stream.read(), and even if a 'data' listener is enforced, the user might pause the stream via stream.pause(). If the stream is not resumed before the read buffer is filled again the process still exits without 'end' or 'close' being emitted.

I stumble upon this from time to time, and it is outright confusing because it is dependent on the remote peer.

I think we should investigate why this line https://github.com/nodejs/node/blob/230f1f2aa4aa2d5800214c4894d1c13a3f153dd0/lib/net.js#L326 was added. Basically this line causes a _read(), which in turn calls uv_read_start(), causing the read to happen _until_ the buffer is full.

I think the best approach would be _not_ to start reading from the socket until we have a call to on('data'), on('readable') or .resume(). Essentially I propose to document the options.manualStart (it's currently undocumented) and flip the default behavior.

In this way no uv_read_start() will happen and the process would actually exits all times.

I guess, for this case, you mean https://github.com/nodejs/node/blob/230f1f2aa4aa2d5800214c4894d1c13a3f153dd0/lib/net.js#L1052

which I think it was added to detect the remote end disconnection. Also, woudn't it be weird that node exists automatically when there's an ongoing active connection?

@mcollina I do not think it is very useful. The problem is not when the socket is created. It might happen at any time during its lifetime. See the following example as per https://github.com/nodejs/node/issues/26942#issuecomment-477491282

server

'use strict';

const assert = require('assert');

const net = require('net');

const data = Buffer.alloc(1024);

const server = net.createServer();

function write(socket) {

for (let i = 0; i < 16; i++)

socket.write(data);

}

server.on('connection', function(socket) {

socket.on('error', console.error);

socket.on('data', function(chunk) {

assert(chunk.equals(Buffer.from('write')));

write(socket);

});

write(socket);

});

server.listen(8080, '127.0.0.1', function() {

console.log('Server listening on 127.0.0.1:8080');

});

client

'use strict';

const net = require('net');

let nread = 0;

const socket = net.createConnection({

host: '127.0.0.1',

port: 8080

});

socket.on('data', function(chunk) {

nread += chunk.length;

if (nread === 16 * 1024)

this.pause();

socket.write('write');

});

socket.on('close', function() {

console.log('closed');

});

socket.on('connect', function() {

console.log('connected');

});

Essentially you are proposing to keep the process open up until there is a socket around. I'm ok with that, as long as it's semver-major.

I think stopping reading should do just that and not remove the handle from the list of active handles if that makes sense.

Essentially you are proposing to keep the process open up until there is a socket around.

I think s/until/while/ was meant.

This would make socket descriptors "special" when compared to file descriptors (and possibly pipes). It could possibly also affect child processes with IPC channels to them.

Think of this code:

fs.open("file", ()=>{})

Would we expect it to keep node alive? I wouldn't (and it doesn't).

So, then:

tls.connect("host")

why would it be expected to keep node alive? There is no js that node will ever run, it should complete.

The underlying problem seems to be that in order to detect socket close (so 'close' and 'end' events can be emitted), epoll has to wait for readability, try to read, and look for a zero-byte read return (there isn't a wait-for-close option). Or maybe the underlying problem is the Halting Problem :-). Perhaps the readability test shouldn't be made (readStart() not called), unless the close and/or end events are being listened on? Ideally, this would not exit:

tls.connect().on('close', ()=>{}) // or .on('end')

which would make sense, node has registered javascript that should run in the future when some event occurs, so it should wait for the event. But this would exit immediately:

tls.connect()

because nothing that happens in the future can ever cause js to run, there are no callbacks scheduled.

@sam-github connect() is working as I expect it to work. It's what happens after pausing the stream that surprises me. The TCP connection is open and no one is closing it but the process exits. I think this should not happen, unless the connection is implicitly or explicitly closed (error, end(), or destroy()) and not closed because the process exits.

The way I think of it is the same of

const net = require('net');

const server = net.createServer();

server.listen();

there are no callbacks scheduled but the process does not exit. Similarly the client process should run until the TCP connection is established or there is work to do.

tcp connections are always closed on process exit, same as in my fs.open() example, the file is closed on process exit.

createServer().listen() is different, you are explicitly asking node to start monitoring for incoming connections, and emit the connection event, so node doesn't exit.There is no equivalent in a script that only does tls.connect(), not unless it addes some event listeners or calls .read() or .resume(). Something to cause the loop to remain active.

I can't imagine anyone complaining, but I think your listen example _should_ exit, because there are no listeners for the 'connection' event (either passed as an arg to createServer(), or with .on('connection', ...). node will never execute another line of js, its done. Its a bit of a grey area, because there was an explicit request to start firing an I/O event, even though the event is not being listened on.

To be clear, I agree that the current behaviour is definitely odd, and a difficult to guess side-effect of having to read a socket to detect close. It would be good to improve this (thus my suggestion about not doing readStart() if there are no listeners for the event).

However, having the mere existence of an umused tcp socket keep node alive would make it unlike any other node resource. I think that would be even more strange. Its asymetry with fs.open() wasn't a strawman. Making sockets special is just going to be a different and more frequent source of confusion.

Ok convinced, thanks everyone for the constructive discussion. https://github.com/nodejs/node/issues/26942#issuecomment-477464832 fulfilled my desire to understand why, under these circumstances, the process exits. I think this can be closed.

Most helpful comment

It seems that the problem is that we stop reading from the socket here because there's too much data. Calling

readStop()causes calling uv_read_stop() which in turn removes theuv_tcp_thandle from the list of active_handles which causes the loop to exit as there are no more active handles or requests.