Node: prototypes and libraries are unsafe inside node core libs

In a PR i'm working on @ljharb pointed out several cases where using things like String.prototype.replace or fs.readFileSync are unsafe because user code could override them, forcing me to use things like const StringReplace = Function.call.bind(String.prototype.replace) and use that instead. A fair amount of node code uses this pattern, and a fair amount doesn't guard against this at all. I opened this issue to create a discussion about what the pattern should be moving forward, if there are things we can do to prevent this behavior from affection core libs, etc.

All 31 comments

cc @bmeck

@devsnek in that PR, you'll note a few lines with Function.call.bind( - that's an attempt to make things robust.

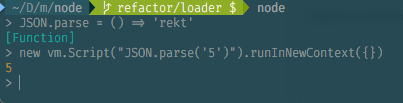

as an alternative to creating a direct reference to every single prototype method or library method we may want to call, perhaps we can shadow the globals in nativemodule wrapper so that they stay scoped to the context that the vm runs them in?

(function (exports, require, module, internalBinding, process, { String, Array, Object, JSON, etc }) {

or if the Function.call.bind is good enough for people, there are a lot of places in the source where it isn't used

@devsnek that would ensure you retain a reference to String, but anyone could later mutate String.prototype or any of its properties.

wouldn't it protect from different contexts? unless i'm doing something wrong here

Oh, maybe I'm misunderstanding. I thought node core code doesn't tend to run in a vm? If it's running in a vm, then there'd be different problems, unless that vm's globals were all deep-frozen.

i also posted a bad example, i was thinking more like getting a bunch of safe globals like below and then passing them to core libraries,

although i guess unless you actually assign those passed things as globals in the context they won't be very useful for most cases (i need to think this through more 😄)

I would tread very carefully as you never know who relies on the ability to monkey patch such things...

Certainly changing old code in this way might be breaking; but ensuring that new code is robust should be safe.

You could implement your stuff in a new vm context and use vanilla objects from there, but that doesn't seem practical.

@hashseed that opens new problems, because it might become possible for users to traverse from the objects created, to those vm primordials, exposing them to the same hazard.

Right. You could have to hold onto the actual functions and perform Function.prototype.call on them. Same goes for Function.prototype.call itself, obviously.

I might be missing something here, but why would we try to "protect" node.js core from such modifications? If people monkey-patch existing prototypes etc., then any breakage will be their fault, and the vm module is not supposed to protect the process against untrusted code, so the same rule applies there.

@tniessen If node breaks as a result of JS code running, it's node's fault. Since a node app runs with JS (and C) code written by many authors, it's simply not true that the user experiencing the breakage will necessarily be that user's fault.

(a good example right now is doing String.prototype.replace = () => '' in a repl, and then trying to continue using repl)

I think we can probably be safer around this than we are currently — a notable example being that we often call cb.apply or cb.call, which means relying on a user-provided callback having those properties unpatched. Recently I've been trying to migrate the majority of these to use Reflect.apply. IMO that's a case of our inner implementation details leaking out as we could just as easily be calling those functions using cb(...args) or similar. It also means that whenever the way we call those callbacks changes, it could potentially cause unintentional breakage.

On the opposite end, IMHO, users modifying String.prototype or Object.prototype might be doing it intentionally. I think in those situations the responsibility lies with the user and I'm not convinced we should be doing any extra work to protect those. If we are going to be trying to do some protection around it then we should be somehow copying/injecting them into the module wrappers in a frozen state.

(But that then opens up a whole new can of worms because we know that our module wrappers are actually escapable.)

Just my 2 cents and it's more than likely I'm wrong on this.

@apapirovski the user is almost certainly doing that intentionally; but that should not change how core behaves.

Basically, if I can observe that any part of node core is written in JS, then there's been an encapsulation failure, full stop.

Basically, if I can observe that any part of node core is written in JS, then there's been an encapsulation failure, full stop.

I highly doubt making assumptions about the used programming language indicates such a failure, or if it does, has any relevance.

> ''.replace.toString()

'function replace() { [native code] }'

> fs.readFile.toString()

'function (path, options, callback) {\n callback = maybeCallback(callback || options);\n options = getOptions(options, { flag: \'r\' });\n\n if (handleError((path = getPathFromURL(path)), callback))\n return;\n if (!nullCheck(path, callback))\n return;\n\n var context = new ReadFileContext(callback, options.encoding);\n context.isUserFd = isFd(path); // file descriptor ownership\n var req = new FSReqWrap();\n req.context = context;\n req.oncomplete = readFileAfterOpen;\n\n if (context.isUserFd) {\n process.nextTick(function() {\n req.oncomplete(null, path);\n });\n return;\n }\n\n binding.open(pathModule._makeLong(path),\n stringToFlags(options.flag || \'r\'),\n 0o666,\n req);\n}'

// Encapsulation failure, this function was written in JS!!!

If people monkey-patch things in a way that breaks existing code, they should not expect everything to keep working. If you ever used Detours on Windows (or literally any other API hooking library on any platform), you will notice that you can easily break standard APIs (which are not system calls) by messing with the function lookup tables. There are dozens of ways to interfere with others' code, and JavaScript makes it particularly easy.

// Another example of "encapsulation failure"

const fs = require('fs');

fs.readFile = null;

// In another module (okay unless this happens in core)

const fs = require('fs');

fs.readFile('foo', (err) => { console.log(err); });

// Oops, crashed

I don't have a strong opinion on this, but I think what you might call "protection" against this should not affect the maintainability, readability or performance of node.js. As long as it doesn't, I am okay with any changes leading to "protection" of the core, such that people can only break their code and other people's code, including required modules, but luckily, the core APIs will still work.

does anyone have any objections to adding uncurryThis to the internal native module wrapper? i think it would definitely help encourage people to follow the pattern we want to see, and make stuff like #18750 much easier

I'd prefer to have it in an internal module. It could be a module where we export all safe builtins that we need. They could even be pre-uncurried. Then we could do anywhere:

const {

uncurried: { Object_proto_hasOwnProperty },

Object_keys

} = require('internal/builtins');

Object_proto_hasOwnProperty({x: 1}, 'x') // true

Object_keys({x: 1}) // ['x']

PS: I haven't really thought about the names

Still not sure how I feel about this outside of the modules implementation. If we were going in this direction, I honestly wish we could find a way to have safe object prototypes within our wrappers. I don't like the idea of adjusting existing code with this half-solution which also makes code more difficult to understand and harder to maintain.

Allowing contributors to write JS the way that they're used to makes for a much friendlier initial experience and makes for one less obstacle in the way of making one's first PR. The learning curve in contributing to lib/ is already quite steep.

Core could use a babel transform to transform statics into these alternate safe versions.

i feel nervous about that just because it would turn error messages from core into spaghetti, unless we also packaged and used source maps for errors, which just sounds bloated

We could execute code in a frozen realm for core, but that too has problems with marshaling data between realms. I think exposing a large list of primordials is easier overall than a transform.

We had the same issue in V8's internal JS code. What we ended up doing is storing functions required by internal code in the function context of the function that sets up internal JS during bootstrap. That incurred no additional cost other than context slots because bootstrapping is performed at build time and baked into the startup snapshot.

Node sure how well that maps to Node.js. It doesn't work for lazily loaded modules.

@hashseed is that something we can easily do/any docs or examples on it? (i'd be happy to implement it if possible)

@devsnek we would need to port code to use these primordials rather than using any of the default globals, isn't that correct @hashseed ?

It's basically adding const ObjectKeys = Object.prototype.keys; to lib/internal/bootstrap_node.js; repeat for every other built-in method.

so basically @targos's suggestion?

Yes.

One way you could police that is to overwrite all builtin prototypes with proxies that assert that the last stack frame is not from internal lib. Not for production of course.

closing in favor of #18795

Most helpful comment

Certainly changing old code in this way might be breaking; but ensuring that new code is robust should be safe.