As part of the July 11 2017 security release we disabled V8 snapshots to mitigate hash flooding attacks against Node servers. The problem is that the snapshot is built at build time – and whatever the hash seed got used at that time gets baked into that particular Node.js binary.

Disabling snapshots has some negative performance & memory footprint consequences for code that heavily relies on creating lots of V8 contexts (e.g. via vm.runInNewContext). Startup time might also be negatively affected (although this should not be substantial).

This issue is for discussing a way to getting V8 snapshots enabled back again. There are some alternatives that have been proposed:

- When the snapshot is deserialized, generate a new hash seed and then rehash all hash tables in the snapshot (including all dictionary mode objects).

- Hook into the Node.js install or startup process to periodically 'refresh' the snapshot blob on disk. This has ergonomic issues – requires modification of installers, and it may not be possible to write to disk in all environments.

- Generate a new snapshot on each startup and re-use it for future contexts. This will help address performance of

vm.runInNewContextbut will not help with the default startup time. - Modify the V8 object model so that each hash table has its own seed. This will have performance consequences even for code that doesn't need multiple contexts.

/cc @nodejs/v8 @nodejs/ctc

All 41 comments

IMHO (1) seems to make most sense.

(2) breaks many installation stories that don't depend on install/version manager (specifically mine 😞, that is to just download the exe)

(3) seems like a compromised version of (1)

(4) might make sense anyway, but AFAICT on it's own does not mitigate the vulnerability, since all tables stored in the snapshot will still have known seeds.

(4) might make sense anyway, but AFAICT on it's own does not mitigate the vulnerability, since all tables stored in the snapshot will still have known seeds.

To clarify, I am proposing that each hash table has its own hash seed rather than having a global hash seed that gets used for all hash tables. This will address vulnerability; in fact, it would provide even stronger protection against hash flooding attacks. This would be quite a substantial undertaking on the VM side though.

With (4) every hash table instance would have its own hash seed, which is random. This local hash seed is not constant, and knowing the random seed of one particular instance does not affect other instances.

(4) is also least likely to be backportable. I also favor (1).

To clarify, I am proposing that each hash table has its own hash seed rather than having a global hash seed that gets used for all hash tables. This will address vulnerability; in fact, it would provide even stronger protection against hash flooding attacks. This would be quite a substantial undertaking on the VM side though.

With (4) every hash table instance would have its own hash seed, which is random. This local hash seed is not constant, and knowing the random seed of one particular instance does not affect other instances.

But it will leave all the tables that are "frozen" in the snapshot with knowable seeds thus compromisable. If none of them are important, theoretically you could just have two seeds: one for the defrosted tables and a fresh one for everything else

@hashseed your username has never been so relevant 😄

:)

You are right in that the deserialized tables would be compromised. We probably don't want that.

It sounds like for 4) we need 1) regardless.

How does the performance hit of rehashing the tables at startup compares to the current workaround?

Rehashing would be a lot faster than bootstrapping from scratch.

A variation of that could be lazily rehashing: upon deserialization, we mark all hash tables. Marked hash tables use the old hash seed. Once a table expands, it is rehashed anyways (iirc). That's when we would use the new hash seed.

That should work as mitigation because DOS attack is based on adding entries to the hash table to provoke hash collisions, which eventually would lead to expanding the table.

startup compares to the current workaround?

Just some quick anecdotal numbers from Windows x64

50 X node813 -e "process._rawDebug('.')" => 9.57s => ~0.2s per instance

50 X node814 -e "process._rawDebug('.')" => 19.17s => ~0.6s per instance

~200% slowdown (but still relatively fast)

That's probably helped by the fact that we have migrated a lot of the builtins away from being self hosted in JS. That reduces the time spent in bootstrapping a context from scratch.

The lazily rehashing sounds like a good way to only pay the price or rehashing when its actually needed.

The downside in (4) could be addressed similarly: we would choose a new local hashseed whenever the hash table is expanded and reallocated.

The lazily rehashing sounds like a good way to only pay the price or rehashing when its actually needed.

But is needless complexity when the number of hash tables is small. I'd go for the simplest solution first and only if that doesn't perform satisfactorily, look into lazy rehashing.

I agree with @bnoordhuis.

I agree as well. But here is the issue: the shape of a hash table (how many fields per entry, what kind of key, etc) depends on the context it is used for. There is no simple way to simply look at a FixedArray and tell that it is a NamedDictionary, and not a GlobalDictionary or StringSet. That makes rehashing hard.

Doing it lazily has the advantage that this can be done with the necessary context. But the issue here is that the code would be scattered.

I put a document together.

Would it make any sense at all to offer an option to enable the snapshot when Node is built from scratch? Disabling snapshot only makes sense if Node is distributed as binary.

@hashseed That option exists, we just flipped the default from on to off. It's ./configure --with-snapshot now. I'll take a look at the document.

Would it make any sense at all to offer an option to enable the snapshot when Node is built from scratch? Disabling snapshot only makes sense if Node is distributed as binary.

That is the current situation. The mitigation patch was just to reverse the default. https://github.com/nodejs/node/commit/eff636d8eb7b009c40fb053802c169ba1417293d

Snapshots were disabled in the past and then re-enabled, based on this comment/thread. Purely out of curiosity - was the issue that the comment was incorrect? Or is it because SetEntropySource isn't always called?

Aha! That's why it was re-enabled!

That's simply a mistake. The random seed that @jeisinger was talking about is the PRNG state. That state is not shared between contexts and also re-initialized for every new context, regardless of whether it is created from scratch or deserialized from snapshot.

We are talking about a global hash seed as basis to compute string hashes though!

I added a section to the document to point out the irony of this.

Edit: This message originally contained reference to this blog post from January from @indutny and this tool accompanies it. I ran the tool and it spit out different hash seeds for each node instance, which made me think that this vulnerability may not exist, but @hashseed responded below with an explanation.

If you look through the v8 source code, there are definitely calls to set_hash_seed that cause the randomized seed to be overwritten with the compile-time seed when loading a snapshot.

Yes. The hash seed can be guessed using timing attacks.

Yes. The hash seed is baked into the snapshot. Changing it after deserialization is not trivial because existing hashes will become invalid.

If I had to guess, I would say the seeds obtained by Fedor's tool doesn't have to be the correct one. The same set of inputs may be able to cause hash collision for a whole class of hash seeds.

It might be more in node's realm, but maybe have the binary create it's own snapshot on first run and cache it (similar to (2) but doesn't depend on installer, and will not change hash of binary).

This will break the leave-no-trace paradigm node has been working under, but "desperate times" etc.

/cc @bmeck

Ref: https://github.com/nodejs/node/issues/13877

I'm shooting for solution 1 that @ofrobots described above. That will add a slight overhead after deserialization, but it should be negligible.

I definitely agree with @dougmoscrop that this issue seems far from proven. Most of the articles are just excitedly repeating the original bulletin and adding simplistic explanations of how hashtables work. The only "proof" offered seems to be based on "we switched off snapshots previously because we were worried about constant hash seeds, so snapshots being switched back on must mean the issue has returned". I've had a dig around the V8 source and the code for string hashing has changed considerably since this issue was discussed a few years ago and like @dougmoscrop I've been unable to get to the seed myself.

I'm not asking anyone to publish the actual hashseed online, but I'd take this issue much more seriously if someone had actually done a repro of the vulnerability in a recent version of node so we could see what the CPU overhead really is in this specific codebase. In the absence of this I'm assuming it's more of a theoretical vulnerability in that the node developers believe an attacker might be able to do it.

The main reason I was looking for the seed myself is I'd like to see how the rest of our infrastructure responds to the attack in case we have something in there that can/does block/limit the types of requests involved...

Rest assured that this issue is still there. The hashseed persists with the snapshot.

Source: I'm the owner of v8/src/snapshot and did most of the refactorings on string hashing.

Yeah, I was very wrong - I should have edited my previous message. I added some logging and poked around and if you just search for set_hash_seed you'll see that yes, on startup it does get a new random seed even with snapshots on, but then it's overwritten, and if you look in the actual hash calls themselves, they use the one determined at compile time.

The disclosure was hard to grok for outsiders because of the mixed history and what have you. I will edit my previous message to reduce any further confusion.

Here you go: https://chromium-review.googlesource.com/c/574527/

This patch rehashes select deserialized hash tables, descriptor arrays, and transition arrays. It's not the general approach and only works for the default snapshot. Custom startup snapshots will not have rehashing enabled.

The overhead turned out to be not so negligible. The time to deserialize an isolate went up by around 0.15ms from around 2.7ms. The time to deserialize a context went up by 0.05ms from around 0.25ms.

I hope I can get this landed soon. And then try to port it back.

Landed upstream: https://chromium.googlesource.com/v8/v8.git/+/a2ab1353f6708b44d305fdd9fe65a6d29b95c6d6

I would like to wait a day or two to get some Chrome Canary coverage. Afterwards I'll float patches, starting with Node 8.

Offtopic (but apropo)

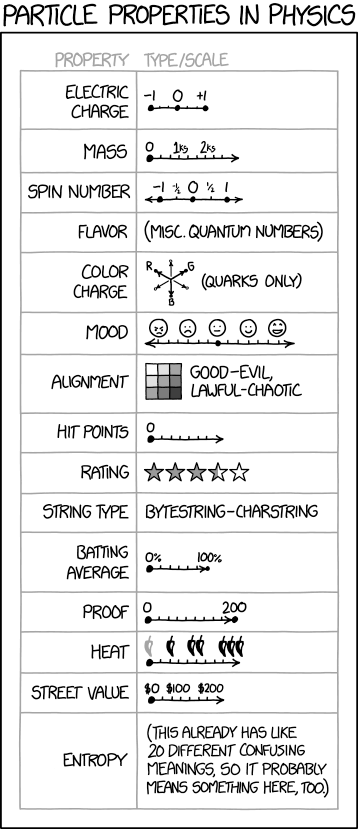

Each particle also has a password which allows its properties to be changed, but the cosmic censorship hypothesis suggests we can never observe the password itself—only its secure hash.

Is this getting backported to v8.x too?

@kasicka Yes, once https://github.com/nodejs/node/pull/14345 lands it is automatically scheduled to be included in the next Node 8.x release.

@ofrobots Hi, our system (not internet facing) creates lots of V8 contexts :).

We recently upgraded from 8.0.0 to 8.1.4 and now the initialization phase of our system, where those contexts are created, changed from ~2 seconds to ~8 minutes. Is there some workaround to creating the snapshots we can use in the meantime because what we are doing now is compiling 8.1.4 without your change and I don't think this is a good long term solution.

We upgraded because we had some issues that were resolved in 8.1 so I guess we can try and use 8.1.3 but since we are doing some low level v8 things we would rather to stay updated with the versions and not stay on a particular version or compile our own fork.

@BorisKozo if you don't expect hash flooding attacks to be an issue in your use case, that's a valid fix. Otherwise you could upgrade to 8.1.4 and cherry-pick this fix in addition.

We upgraded because we had some issues that were resolved in 8.1 so I guess we can try and use 8.1.3 but since we are doing some low level v8 things we would rather to stay updated with the versions and not stay on a particular version or compile our own fork.

@BorisKozo, I'm with @hashseed, using 8.1.3 until 8.3.0 goes out seems like a valid solution.

Another solution would be using the latest release source tarball but building it with ./configure --with-snapshots, so it's not a fork, just a tweaked build (we try to test with snapshots to make sure there are no regressions).

Thanks for the replies! We can wait for 8.3.0 if you can confirm that there is a fix there.

Thanks for the replies! We can wait for 8.3.0 if you can confirm that there is a fix there.

@BorisKozo we try not to commit to the content of specific releases. At the moment I see no reason it won't make it in (this is the PR https://github.com/nodejs/node/pull/14345) but things may change. It's high probability that will make it out in the next few weeks.

Most helpful comment

@hashseed your username has never been so relevant 😄