- Version: 6.5.0

- Platform: FreeBSD 11.0 BETA1 x64

- Subsystem: module, require

I have a function that uncaches a module and its children:

```javascript
function uncacheTree(root) {
  let uncache = [require.resolve(root)];
  do {
    let newuncache = [];
    for (let i = 0; i < uncache.length; ++i) {
      if (require.cache[uncache[i]]) {
        newuncache.push.apply(newuncache,
          require.cache[uncache[i]].children
            .filter(cachedModule => !cachedModule.id.endsWith('.node'))
            .map(cachedModule => cachedModule.id)
        );
        delete require.cache[uncache[i]];
      }
    }
    uncache = newuncache;
  } while (uncache.length > 0);
}
```

After running it, I require the uncached module again. My server consistently ran out of memory within a few days, and a core dump inspected with mdb_v8 showed that all of the exported objects from the modules I hot-patched were still on the heap. What's causing this leak? Is there any way to fix it? I'm not sure whether something is wrong with Node itself or whether something in the code is just holding references to all the zombie exports.


As long as there are references to the module anywhere, its memory won't (can't) be reclaimed. Through heapdumps you can probably figure out where those references are.

I'll be happy to take a look if you have a minimal test case that shows Node.js leaking memory even when there are no references (keeping GC vagaries in mind), but otherwise I suggest we close this issue.

That makes sense. I'll come back with a test case without any references to the module when I have the chance. I'm fine with the issue being closed for now.

There is definitely a memory leak. Simple test case:

```javascript
// module.js
module.exports = () => {};

// index.js
var heapdump = require('heapdump');

for (var i = 0; i < 100000; i++) {
  const m = require('./module.js');
  m();
}

heapdump.writeSnapshot('./before-' + Date.now() + '.heapsnapshot');

for (var i = 0; i < 100000; i++) {
  delete require.cache[require.resolve('./module.js')];
  const m = require('./module.js');
  m();
}

heapdump.writeSnapshot('./after-' + Date.now() + '.heapsnapshot');
```

The two heap snapshots are 3.6 MB and 78.8 MB respectively. Tested on Node 6.5.0 and OS X 10.11.

@adammockor In this case, there are 100000 Module instances still reachable. You can check that by loading the heap snapshot into the profiler.

As the heap snapshot shows, those are stored in the .children property of the current module, accessible through require.cache[require.resolve('./index.js')].children in your case.

Note that manual unloading through deleting from require.cache isn't supported, so this is not a bug.

Thank you for clarification.

So is there an official way? Or if we want to hack around, we can do this:

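```javascript
const cachedPath = require.resolve('./module.js');
const cachedModule = require.cache[cachedPath];

if (cachedModule) {
  // Blunt workaround: drops the parent's references to all of its children,
  // not only to this module.
  delete cachedModule.parent.children;
  // `delete cachedModule` would not remove anything; delete the cache key instead.
  delete require.cache[cachedPath];
}
```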

@adammockor, you can try uncacheTree('./module.js') as described in the OP, which should handle references through children, but use it at your own risk.

@bnoordhuis

Uh, deleting modules from require.cache does seem to be part of the documented API: https://nodejs.org/api/globals.html#globals_require_cache:

> By deleting a key value from this object (require.cache), the next require will reload the module. Note that this does not apply to native addons, for which reloading will result in an Error.

And that leaves _internal_ references to those modules (where «internal» means inside the Node.js module implementation, not in user code); those references are never cleared, so the memory is never reclaimed.

I suggest we reopen this.

Also /cc @nodejs/ctc, @bengl

@bnoordhuis Perhaps this could be fixed on the documentation side?

Another problem I found: Timeout and some fs-related objects also leak when hot-patching like this. Is there a way for modules to be notified when their require.cache entry is about to be deleted, so they can clean up?

The docs say the next require will reload the module - they don't guarantee that all modules can be reloaded over and over without leaking memory. Any module that creates some handles during require is going to leak those handles when re-required.
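A cleanup hook of the kind asked about above can only be a userland convention; Node.js provides nothing like it. The sketch below assumes a hypothetical convention in which a module may export a dispose() function, and a hypothetical reload() helper that calls it before evicting the module from require.cache and from its parent's children array. Modules that do not export dispose() get no notification, so any handles they open at load time still leak.

```javascript
"use strict";
// Sketch of a userland "dispose before reload" convention. Node.js has no such
// hook; `dispose` and `reload` are hypothetical names chosen for illustration.
const fs = require("fs");
const os = require("os");
const path = require("path");

const dir = fs.realpathSync(fs.mkdtempSync(path.join(os.tmpdir(), "dispose-demo-")));
const pluginFile = path.join(dir, "plugin.js");
// A module that creates a handle (an interval timer) when it is loaded and
// exports a dispose() function that releases it.
fs.writeFileSync(
  pluginFile,
  [
    "const timer = setInterval(() => {}, 1000);",
    "let disposed = false;",
    "module.exports = {",
    "  dispose() { clearInterval(timer); disposed = true; },",
    "  isDisposed: () => disposed,",
    "};",
  ].join("\n")
);

// Give the old instance a chance to clean up, evict it from require.cache and
// from its parent's children array, then load a fresh copy.
function reload(filename) {
  const old = require.cache[filename];
  if (old) {
    if (old.exports && typeof old.exports.dispose === "function") {
      old.exports.dispose();
    }
    delete require.cache[filename];
    const siblings = old.parent ? old.parent.children : [];
    const index = siblings.indexOf(old);
    if (index !== -1) siblings.splice(index, 1);
  }
  return require(filename);
}

const first = require(pluginFile);
const second = reload(pluginFile);
console.log(first.isDisposed(), second.isDisposed()); // true false
second.dispose(); // release the new timer so the process can exit
```

The design choice here is that the reloader, not the module, decides when eviction happens; the module only promises to release what it created.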

I think it's an interesting idea, notifying modules that they are being re-required... but aren't we trying to get closer to the ES6 module system? I don't think it has any such facility.

I think ES Modules probably incentivize us to not do this, at least until they stabilize.

You could make the module cache a Proxy, I suppose. Your mileage may vary with that.

I'll give that a shot.

@sam-github, even without the external handles there is still an internal reference to the module that doesn't get created deleted by removing a module from require.cache.

@ChALkeR you meant to write "doesn't get deleted", right?

@sam-github Yes, thanks, I was probably typing that from my phone =).

This issue has been inactive for sufficiently long that it seems like perhaps it should be closed. Feel free to re-open (or leave a comment requesting that it be re-opened) if you disagree. I'm just tidying up and not acting on a super-strong opinion or anything like that.

I have a use case for this. I'm writing a chat bot that has a plugin system which I want to reload when anything is changed in the code, while preserving some other modules that hold state (logs, the IRC socket, etc.). I ran into this quickly with the naive approach of emptying the cache on every message. Restarting the process isn't viable because it'd mean ~10s of downtime.

I wrote a test program. In Node 6 it crashes (failed alloc) after ~37s, and in Node 8 it crashes after 115s.

As people are experimenting more with hot-code-updates as a way to avoid downtime, either in development or production, having this primitive working well is valuable.

> emptying the cache on every message

Why would you need to do that on every message? The overhead is simply non-trivial, either CPU- or memory-wise.

Either way, can you make sure the sockets and the logs, and only those, are preserved when clearing the cache?

Let's continue this conversation over at https://github.com/nodejs/help to talk about your case specifically.

Sure, https://github.com/nodejs/help/issues/757. Thanks 😄

@ChALkeR

> even without the external handles there is still an internal reference to the module that doesn't get deleted by removing a module from require.cache

What kind of internal references are you referring to?

Answering my own question: every require()d module is retained in parentModule.children, regardless of whether it was deleted from require.cache.

```javascript
// b.js
module.exports = new ArrayBuffer(1e7);

// a.js
// Flags: --expose-gc
require('./b.js');

var i = 0;
while (i++ < 100) {
  delete require.cache[`${__dirname}/b.js`];
  require('./b.js');
  gc();
}
```

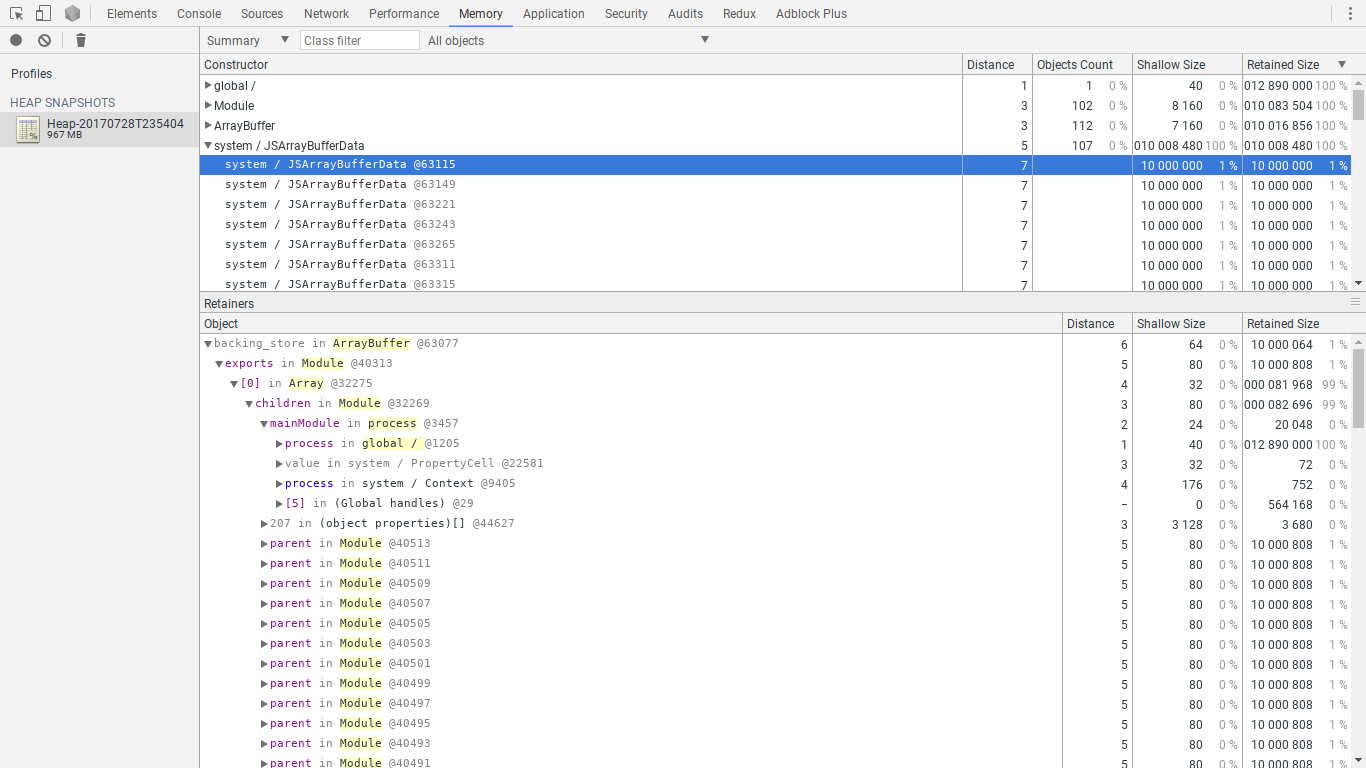

At the end of a.js's execution, module.children where module is the object exposed in a.js will contain 100 Module objects, all of b.js:

To fix it, use the following a.js:

```javascript
// Flags: --expose-gc
'use strict';

require('./b.js');

var i = 0;
while (i++ < 100) {
  require('./b.js');
  removeFromCache(`${__dirname}/b.js`);
  gc();
}

function removeFromCache(path) {
  const mod = require.cache[path];
  delete require.cache[path];
  // Also drop the reference held by the parent's children array.
  for (let i = 0; i < mod.parent.children.length; i++) {
    if (mod.parent.children[i] === mod) {
      mod.parent.children.splice(i, 1);
      break;
    }
  }
}
```

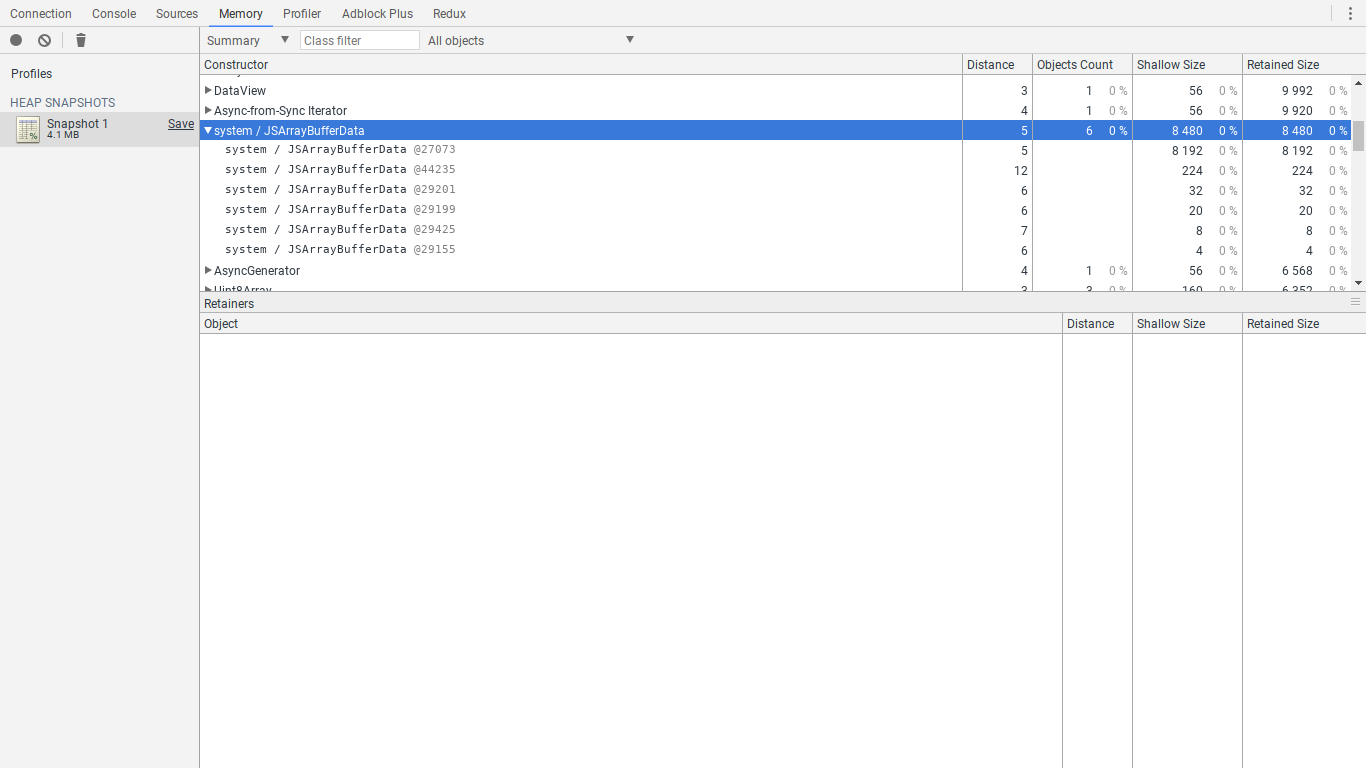

which gives a nice and clean heap snapshot:

For those of you who do want to use this code for hot reloading, I have to provide the following caveat:

The revised a.js only gets rid of the internal references to the module. It's very possible that your own code has references to stuff in the old module, constructor references, prototype references, etc., so further work may be needed to prevent OOM. Plus, Node.js internals may change at any time, so we might introduce new references to the module at other places, maybe even places normal JS cannot get to. Therefore if you tinker with require.cache, you will not receive any support from the project.

> you will not receive any support from the project

If I'm reading https://nodejs.org/api/modules.html#modules_require_cache correctly, require.cache is stable and deleting keys is documented. It's hardly internal if the documentation outright allows doing this.

I assume this is about modifying the module.children array: while module.children is documented and stable, modifying it isn't documented at all.

Thanks @TimothyGu !

Any chance of this being documented?

Reopening per https://github.com/nodejs/node/issues/20123#issuecomment-382316574. Needs a decision on if and how we want to support this pattern.

@bnoordhuis If we do so, how would that play with ESM?

Also, perhaps most if not all use cases for «unloading» modules would be addressed by instead providing a version of require that does not use (i.e. neither reads from nor writes into) the process-wide require.cache.

_Could that be done from the userland, perhaps?_ :wink:
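A partial userland approximation of that idea is possible with documented APIs, at least for the top-level file. The sketch below (requireUncached is a hypothetical name) reads the file, compiles it with vm.compileFunction using the usual CommonJS wrapper parameters, and runs it with a require obtained from module.createRequire. The top-level module is never written to require.cache or to any children array, but everything it requires still goes through the shared cache, and it only handles plain .js source (no JSON, native addons, or ESM). It assumes Node.js 12.2 or later.

```javascript
"use strict";
// Sketch of a cache-free require for a single CommonJS .js file.
// Assumptions: Node.js >= 12.2 (module.createRequire); nested dependencies
// are still loaded through the shared require.cache.
const fs = require("fs");
const os = require("os");
const path = require("path");
const vm = require("vm");
const { createRequire } = require("module");

function requireUncached(filename) {
  const source = fs.readFileSync(filename, "utf8");
  // Same parameter list as Node's CommonJS module wrapper.
  const wrapper = vm.compileFunction(
    source,
    ["exports", "require", "module", "__filename", "__dirname"],
    { filename }
  );
  const mod = { exports: {}, filename, loaded: false };
  wrapper.call(mod.exports, mod.exports, createRequire(filename), mod,
    filename, path.dirname(filename));
  mod.loaded = true;
  return mod.exports;
}

// Usage: each call gets a fresh instance with its own module-level state.
const dir = fs.realpathSync(fs.mkdtempSync(path.join(os.tmpdir(), "uncached-demo-")));
const counterFile = path.join(dir, "counter.js");
fs.writeFileSync(counterFile, "let n = 0;\nmodule.exports = () => ++n;\n");

const a = requireUncached(counterFile);
const b = requireUncached(counterFile);
console.log(a(), a(), b()); // 1 2 1
```

Because nothing is cached, dropping the last reference to the returned exports is enough for the top-level module to become collectable.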

To be clear, I'm not championing this, just following due process.

I could also use this: I need it for filesystem-based watching and reloading of JS files in two programs of mine. In both cases, this limitation is a deal breaker for migrating to ESM.

@ChALkeR I like the idea of doing an uncached require, and I have come up with a userland solution for CJS already:

```javascript
"use strict"

const Module = require("module")

const loaded = new WeakMap()
// WeakSet has no clear() method, so `visited` is replaced after each call.
let visited = new WeakSet()
const parents = new Set()

function eachModule(func) {
  for (const key in Module._cache) {
    if (Object.prototype.hasOwnProperty.call(Module._cache, key)) {
      func(Module._cache[key], key)
    }
  }
}

function removeRecursive(mod) {
  const index = loaded.get(mod)
  if (index == null) {
    if (visited.has(mod)) return
    delete Module._cache[mod.id]
    if (mod.parent != null) {
      parents.add(mod.parent)
      visited.add(mod)
      mod.parent = undefined
      // Recurse into each child module, not into the children array itself.
      for (const child of mod.children) removeRecursive(child)
    }
  } else if (index === 0) {
    loaded.delete(mod)
  } else {
    loaded.set(mod, index - 1)
  }
}

function addModule(mod) {
  const index = loaded.get(mod)
  loaded.set(mod, index != null ? index + 1 : 0)
}

function removeModule(mod, key) {
  if (visited.has(mod)) return
  const index = loaded.get(mod)
  if (index == null) {
    delete Module._cache[key]
    if (mod.parent != null) {
      parents.add(mod.parent)
      visited.add(mod)
      mod.parent = undefined
    }
  } else if (index === 0) {
    loaded.delete(mod)
  } else {
    loaded.set(mod, index - 1)
  }
}

eachModule(addModule)
addModule(module)

// export default function load(
//   parent: Module, file: string, allowMissing?: boolean
// ): Module
module.exports = (parent, file, allowMissing) => {
  try {
    file = Module._resolveFilename(file, parent, false)
  } catch (e) {
    if (allowMissing && e.code === "MODULE_NOT_FOUND") return undefined
    throw e
  }
  // Count loaded modules only after resolution succeeds, so an early
  // return above does not leave the counts incremented.
  eachModule(addModule)
  try {
    return parent.require(file)
  } finally {
    const child = Module._cache[file]
    if (child != null) removeRecursive(child)
    eachModule(removeModule)
    // Compact each affected children array in place, dropping visited modules.
    for (const parent of parents) {
      const children = parent.children
      let target = children.findIndex(visited.has, visited)
      if (target === -1) continue
      for (let i = target + 1; i < children.length; i++) {
        if (!visited.has(children[i])) children[target++] = children[i]
      }
      children.length = target
    }
    visited = new WeakSet()
    parents.clear()
  }
}
```

It's an incredible hack of monstrous proportions, and it doesn't hit everything, but it does most of the work I need. It also covers these extra cases:

- Child module doesn't exist (I need this separate from execution to avoid masking errors).
- Child loads modules without attaching them to module.children.
- Child loads modules which delete themselves from require.cache, but not from parent.children.
- I pass the module itself, not a require function. (This is necessary because I hook into it elsewhere with module.parent.)
- Child loads modules through a nested call to this function, passing itself as the parent.

It's partially untested, but I needed it for one of my two use cases. Beyond those two cases, I found I needed to do a few other things to avoid blowing up performance:

- I try to avoid checking modules I don't need to, building as much work as possible on previous recursive calls. This is hard to do, since I can't avoid dirty-checking.
- I try to avoid excess allocations, since every iteration goes through every loaded module. (That's why I use a shared reference.)
- I made only parents a real iterable Set because it's slightly faster to check inclusion in weak collections than in strong ones. (I've benchmarked this on JSPerf somewhere, but I don't recall the link.)
- Because I've got so many parent references, I wrote an optimized batch-removal algorithm to keep the runtime cost down. (Every .indexOf + .splice pair creates an array, and V8 isn't intelligent enough to elide it.)
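The batch-removal idea in the last bullet can be shown in isolation. The sketch below (removeAllInPlace is a hypothetical name) removes every element contained in a WeakSet from an array in a single pass, by compacting the survivors toward the front and truncating the tail, instead of issuing one indexOf + splice pair per element, where each splice call allocates a result array.

```javascript
"use strict";
// Sketch: remove every element of `doomed` (a WeakSet of objects) from
// `array` in one pass, in place, without per-element splice() allocations.
function removeAllInPlace(array, doomed) {
  let write = 0;
  for (let read = 0; read < array.length; read++) {
    const item = array[read];
    if (!doomed.has(item)) {
      array[write++] = item; // keep survivors, preserving their order
    }
  }
  array.length = write; // drop the leftover tail
  return array;
}

// Usage with plain objects standing in for Module instances.
const a = { id: "a" }, b = { id: "b" }, c = { id: "c" }, d = { id: "d" };
const children = [a, b, c, d, b];
removeAllInPlace(children, new WeakSet([b, d]));
console.log(children.map((m) => m.id).join(",")); // a,c
```

The array keeps its identity, which matters here because the loader and user code hold references to the same children array.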

Now, this is very suboptimal, and it could be implemented far more efficiently natively. Internally, you could just store a reference to a module's creating parent, and whenever the parent dies, reclaim the child tree with it transparently. This would require storing a weak reference to the object, but that's not too hard with a native assist. (Most engines provide a way to do this natively.) There are a few other things, too, that could cut this down by quite a bit.

My opinion is that any sort of system we propose to core will have pitfalls for some subset of users, pushing alternative methods into userland. I think these weak-cache module systems should remain in userland. At best, we leak a small amount of memory if they aren't used correctly; at worst, we create a system that cannot possibly meet the needs of the people who want to use it, because it enforces arbitrary behaviour paradigms in order to be functional. Node.js exposes a lot of tools that userland can use for this, like vm.Script and vm.createContext(), which are stupidly powerful in terms of what you can start doing to your code.

@devsnek Leaking a little bit of memory is preferable to attempting black magic. My module is basically a requireUncached/importFresh that accepts a module reference instead of a callee, and properly cleans up after itself with regard to subdirectories.

For me personally, I'd like an importDetached. The main things I would want for it are these:

1. It doesn't persist itself.
2. It doesn't persist any newly loaded dependencies outside its own dependency graph.
3. It optionally distinguishes between the module itself missing and a dependency error.
4. It optionally reuses the parent cache when the two intersect.
5. If the parent later imports a dependency that a child has previously imported, and the child can reuse the parent cache, the grandchild dependency is moved to the parent cache.
6. It works with both ESM and CJS. (It doesn't have to be synchronous.)

My POC above covers 1, 2 for the most part, 3, and it attempts 5. I need to overwrite Module._load to address the other two for CJS modules, and those would complicate this substantially.
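For CommonJS, the third wish above (telling a missing module apart from a failing dependency) does not strictly need loader internals. The POC resolves with Module._resolveFilename before executing; the sketch below does the same with the documented require.resolve: a resolution failure means the module itself is missing, while any error raised after resolution, including a MODULE_NOT_FOUND for one of its dependencies, is rethrown. requireOptional is a hypothetical name, and the sketch assumes the caller passes its own require so relative requests resolve from the right place.

```javascript
"use strict";
// Sketch: return undefined only when `request` itself cannot be resolved;
// rethrow every error raised while loading it (including missing dependencies).
const fs = require("fs");
const os = require("os");
const path = require("path");

function requireOptional(request, req = require) {
  let filename;
  try {
    filename = req.resolve(request);
  } catch (err) {
    if (err && err.code === "MODULE_NOT_FOUND") return undefined;
    throw err;
  }
  return req(filename);
}

// Usage: one missing file, and one existing file whose dependency is missing.
const dir = fs.realpathSync(fs.mkdtempSync(path.join(os.tmpdir(), "optional-demo-")));
const brokenFile = path.join(dir, "broken.js");
fs.writeFileSync(brokenFile, "require('./does-not-exist.js');\n");

console.log(requireOptional(path.join(dir, "missing.js"))); // undefined
try {
  requireOptional(brokenFile);
} catch (err) {
  console.log(err.code); // MODULE_NOT_FOUND (raised for the dependency)
}
```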

I currently have a build system that needs this now + a test framework that will need it soon. Currently, my only option is to basically implement 90% of this myself, and that will take a while.

The more I think about this, though, the more it seems like something that would be equally ideal for the web platform and other use cases, and thus should be baked into the language. (It would also integrate well with realms.) This isn't a long-term need, but it'd be nice. Short-term, there might be a need to expose the loaded module list for altering, though. (This would be a V8 concern, not so much a spec concern.)

> This would be a V8 concern, not so much a spec concern.

This 100% breaks the actual spec for ES modules, so we can't really do it with those, but CJS is open to new features as long as nothing breaks.

TC39 has been discussing debugging recently; I'm sure they'd be happy to see a strawman for a debug mechanism for hot reloading. I think it would be cool.

@devsnek Okay, now that I take a closer look at the spec, I stand corrected. I do need to take a better look at what needs to go into hot reloading and uncached loading, so I can come up with something a little more cohesive.

Oh, and a quick update: here's a gist with what I'm currently using. I had to do some debugging, but now it properly removes itself not only from require.cache but also from module.children. It also attempts to remove the dependency chain as necessary (but it's relatively conservative; it should really be doing a partial GC of the relevant trees).

Am I right to interpret the overall discussion result as "unlikely to implement such an API in core"?

@Trott For ESM, that would likely be correct for now. Once I find some time, I plan to work on a hot-swapping (etc.) system for CommonJS and then, once I have something that works, seek a TC39 champion with my findings. This kind of thing is generally useful, especially for zero-downtime updates, but it's currently impossible to implement for ESM without spec violations or other things that seriously undermine VM assumptions.

For CJS, I'm not a member of any of Node's committees, but I suspect that's also true, because of the workaround I gave above.
