Summary or problem description

Decoding an ECPoint can be expensive. What do you think about caching the parsed ECPoints locally?

Do you have any solution you want to propose?

Create a cache of the ECPoints that have already been parsed.

Neo Version

- Neo 3

My test spams 10K transactions, like this:

```csharp
[TestMethod]
public void TestValidTransaction()
{
    var senderProbe = CreateTestProbe();
    var snapshot = Blockchain.Singleton.GetSnapshot();
    var walletA = new NEP6Wallet("wallet.json", "wallet");

    using (var unlockA = walletA.Unlock("123"))
    {
        if (walletA.GetAccounts().Count() == 0)
        {
            walletA.CreateAccount();
            walletA.Save();
        }

        var acc = walletA.GetAccounts().FirstOrDefault();

        // Fake balance
        var key = NativeContract.GAS.CreateStorageKey(20, acc.ScriptHash);
        var entry = snapshot.Storages.GetAndChange(key, () => new StorageItem
        {
            Value = new Nep5AccountState().ToByteArray()
        });

        entry.Value = new Nep5AccountState()
        {
            Balance = 100_000_000 * NativeContract.GAS.Factor
        }
        .ToByteArray();

        snapshot.Commit();

        typeof(Blockchain)
            .GetMethod("UpdateCurrentSnapshot", BindingFlags.Instance | BindingFlags.NonPublic)
            .Invoke(Blockchain.Singleton, null);

        // Make transaction
        var txs = new List<Transaction>();

        if (File.Exists("temp.dat"))
        {
            txs.AddRange(File.ReadAllBytes("temp.dat").AsSerializableArray<Transaction>());
        }
        else
        {
            for (int x = 0; x < 10_000; x++)
            {
                txs.Add(CreateValidTx(walletA, acc.ScriptHash, (uint)x));
            }

            File.WriteAllBytes("temp.dat", txs.ToArray().ToByteArray());
        }

        var st = Stopwatch.StartNew();

        foreach (var tx in txs)
        {
            senderProbe.Send(system.Blockchain, tx);
            senderProbe.ExpectMsg(RelayResultReason.Succeed);

            senderProbe.Send(system.Blockchain, tx);
            senderProbe.ExpectMsg(RelayResultReason.AlreadyExists);
        }

        st.Stop();
        Console.WriteLine(st.Elapsed);
    }
}

private Transaction CreateValidTx(NEP6Wallet wallet, UInt160 account, uint nonce)
{
    var tx = wallet.MakeTransaction(new TransferOutput[]
        {
            new TransferOutput()
            {
                AssetId = NativeContract.GAS.Hash,
                ScriptHash = account,
                Value = new BigDecimal(1, 8)
            }
        },
        account);

    tx.Nonce = nonce;

    var data = new ContractParametersContext(tx);
    Assert.IsTrue(wallet.Sign(data));
    Assert.IsTrue(data.Completed);

    tx.Witnesses = data.GetWitnesses();

    return tx;
}
```

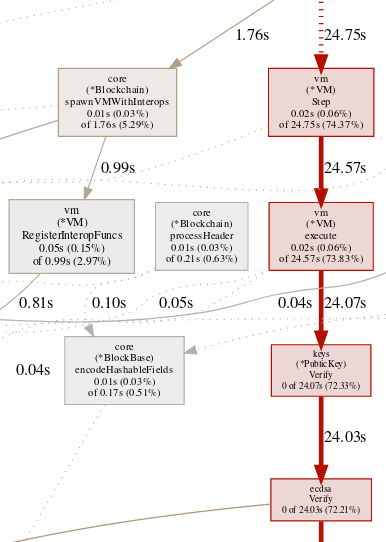

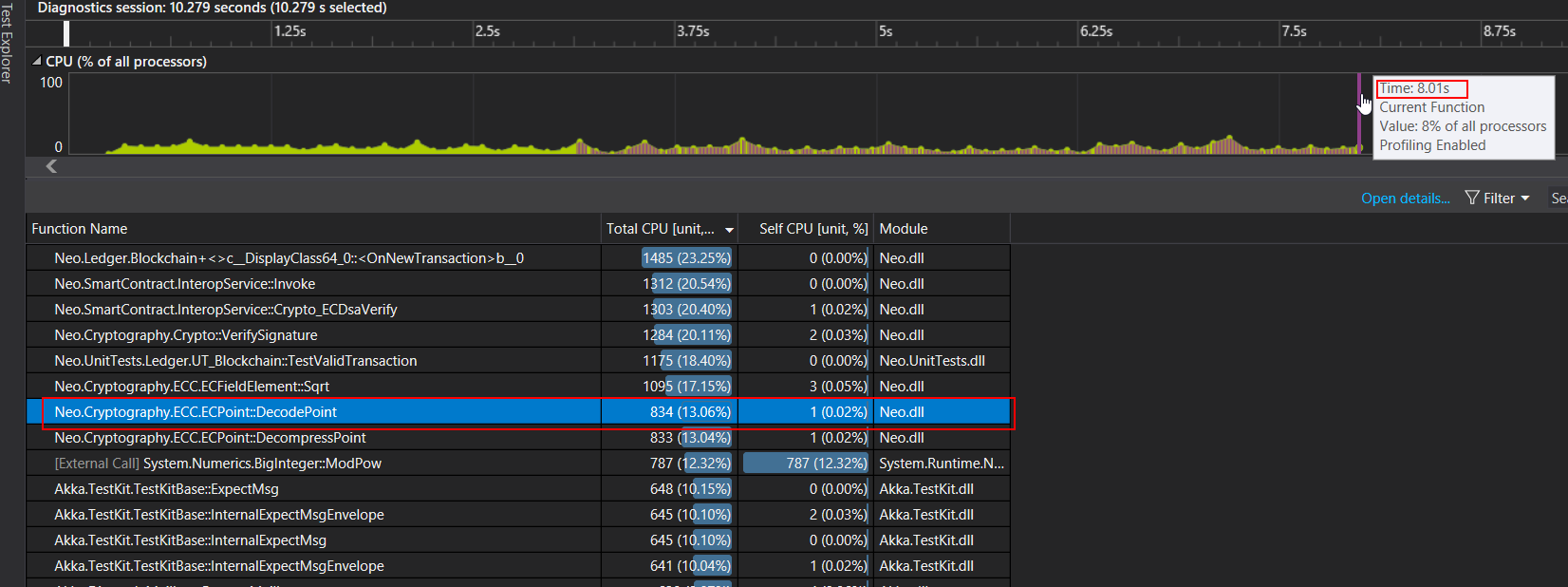

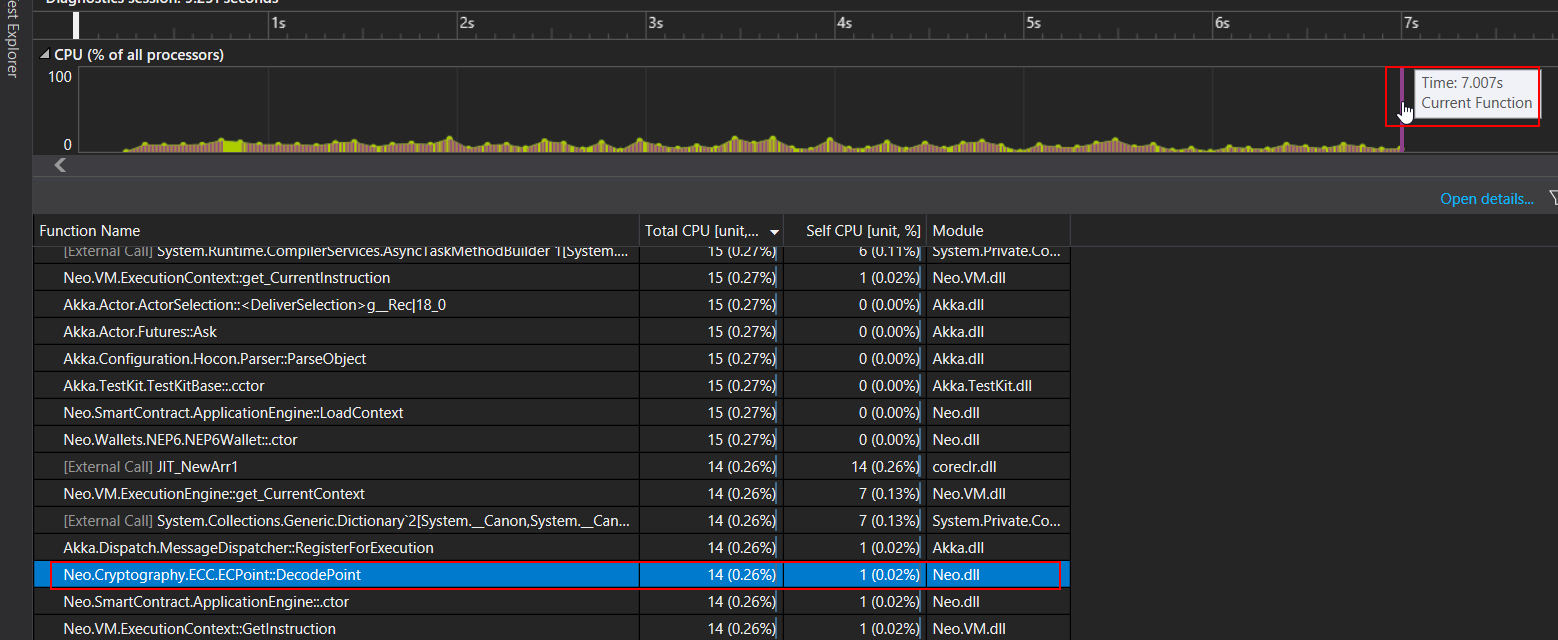

The original test runs in around 8 seconds, and in around 7 seconds with caching.

I understand that the improvement looks better here because all the transactions are the same (and so are cached), but what do you think? Is it worth it?

All 3 comments

I don't think they differ much.

This may be interesting for block signature checking. Profiling block import in neo-go, we see that decoding the keys takes a considerable amount of time, even comparable to the signature checks themselves. And these keys don't change much, unlike the keys that come in transactions, so it's not hard to gain some 13% here (as in your case with transactions) or even more (given that we check 5-7 signatures per block).

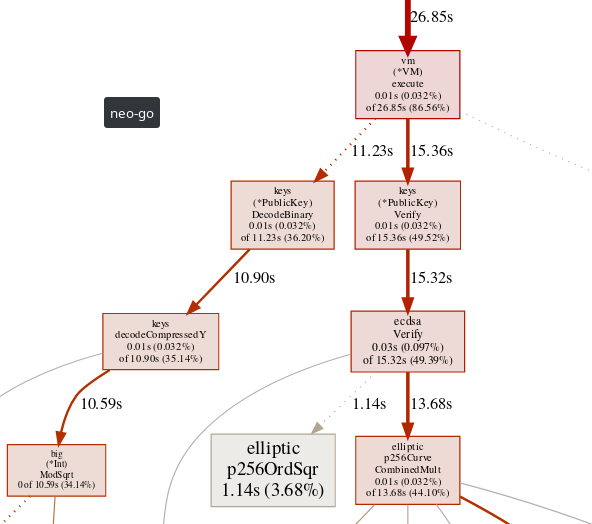

To add to that, I've just implemented a very simple key cache that is only used for block signature checks (nspcc-dev/neo-go#550), and that gave a 30% improvement when importing 1.4M mainnet blocks (and by that I mean full import time, not some local unit test). Granted, it's a very specific test case (but we care about it a lot because we're constantly replaying mainnet blocks when debugging any existing differences between neo-go and C# Neo), and the impact is smaller at higher heights (just because blocks there have more transactions doing other things), but it looks like quite a significant improvement to me.

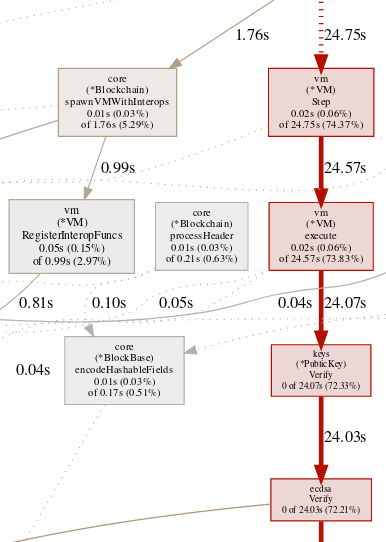

Now we're clearly ECDSA-bound when doing imports:
