Mpv: HDR tonemapping producing subpar results with default config

mpv version and platform

Windows 10 1809

mpv 0.29.0-107-gd6d6da4711 Copyright © 2000-2018 mpv/MPlayer/mplayer2 projects

built on Sun Dec 16 00:57:00 UTC 2018

ffmpeg library versions:

libavutil 56.24.101

libavcodec 58.42.102

libavformat 58.24.101

libswscale 5.4.100

libavfilter 7.46.101

libswresample 3.4.100

ffmpeg version: git-2018-12-15-be60dc21

Reproduction steps

Attempt to watch HDR content on SDR monitor

Expected behavior

Accurate reproduction of colours and brightness levels

Actual behavior

Colours (particularly in the red spectrum) are not accurately reproduced, light often behaves unnaturally and visibly shifts mid-scene

Log file

Sample files

If someone can tell me how to losslessly cut an mkv with HDR metadata intact I can provide samples

From what I can tell, tonemapping with mpv currently has two major issues that make it rather unpleasant for the end user:

First, reds are awful. I don't know if there's something special about this part of the spectrum, but mpv does not play well with them at all, at least with the default settings. I've found I can improve reds dramatically by tweaking some settings such as the following, but this comes at the cost of interfering with the rest of the film.

tone-mapping-desaturate=0

hdr-compute-peak=no

Here are some examples of reds/yellows not behaving correctly, I'll be using madVR as a pseudo reference as I don't have the SDR BD on hand and they seem to have no such issues with their tonemapping solution.

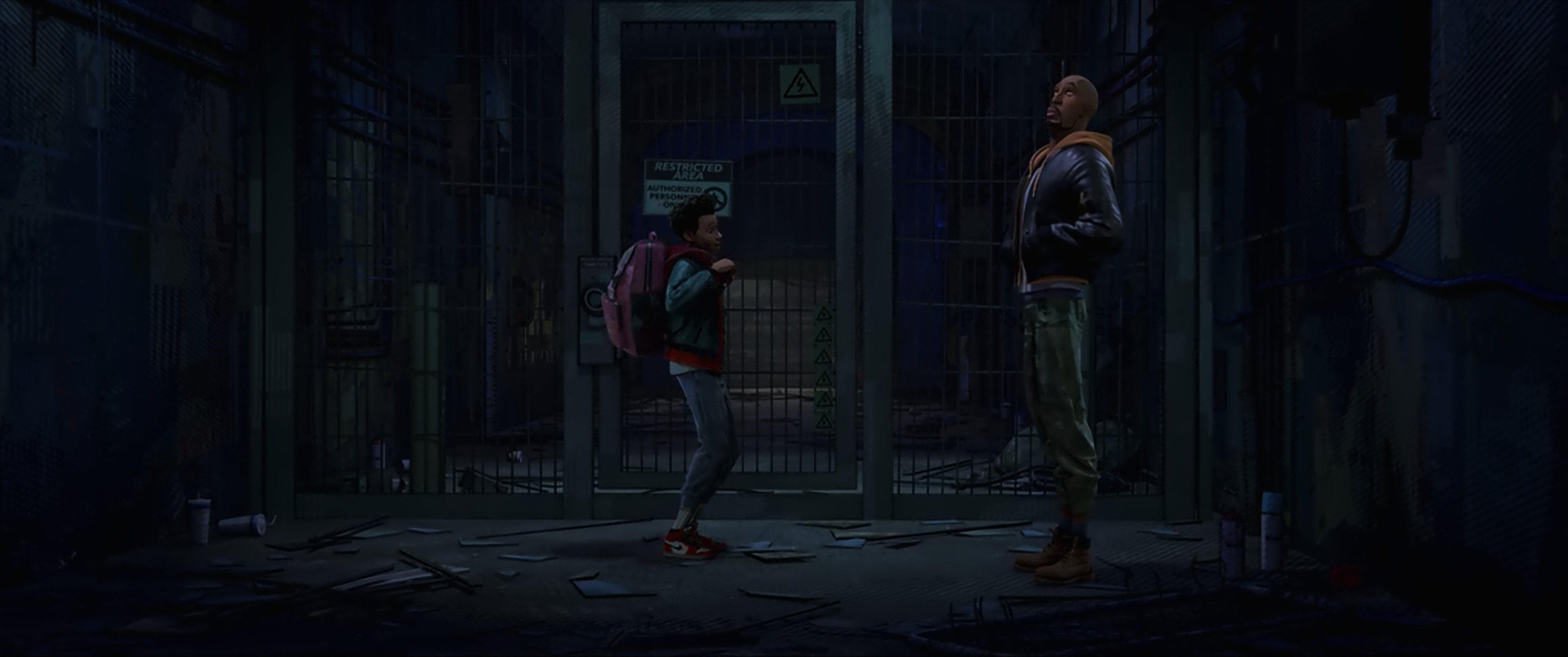

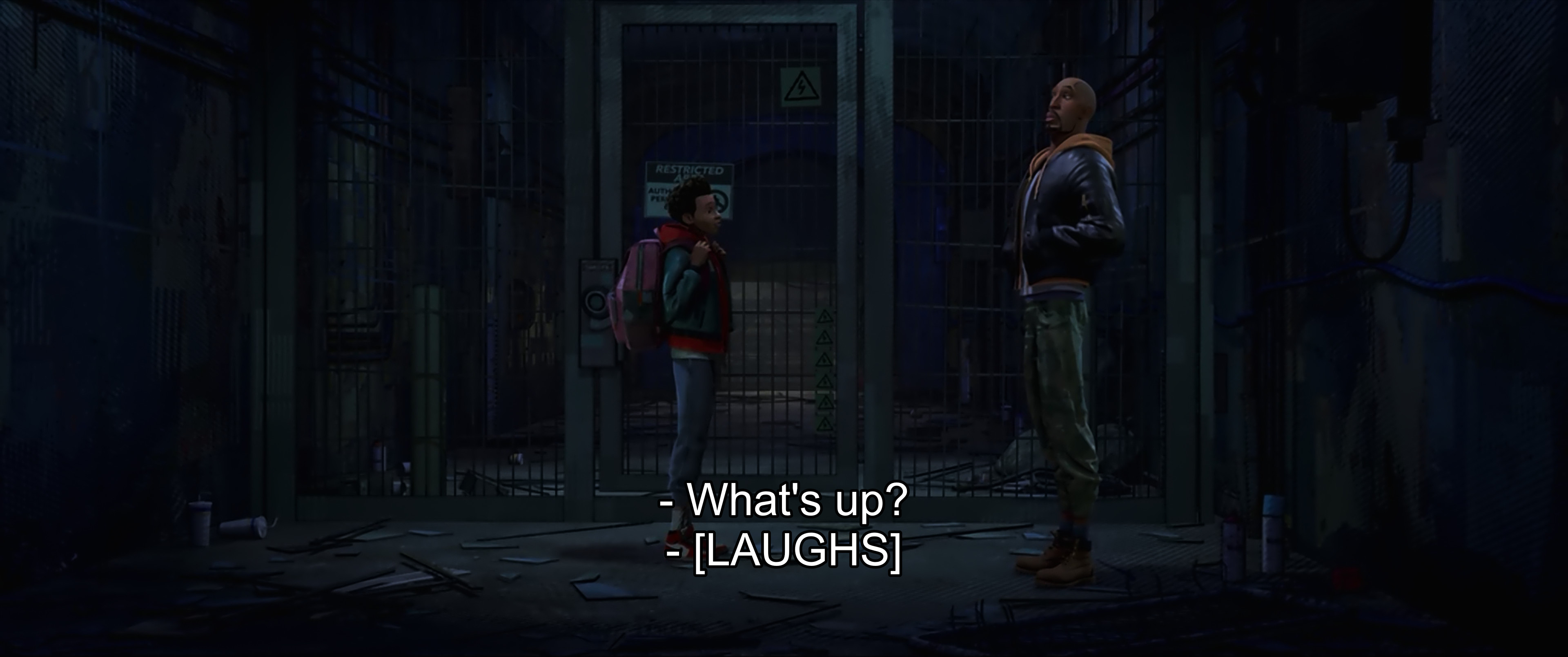

These examples are from The Dark Knight (2008). mpv (default) has no HDR-specific config; mpv (tweaked) uses the aforementioned config.

Full gallery for easier viewing: https://imgbox.com/g/DvoY5yAjFH

Examples:

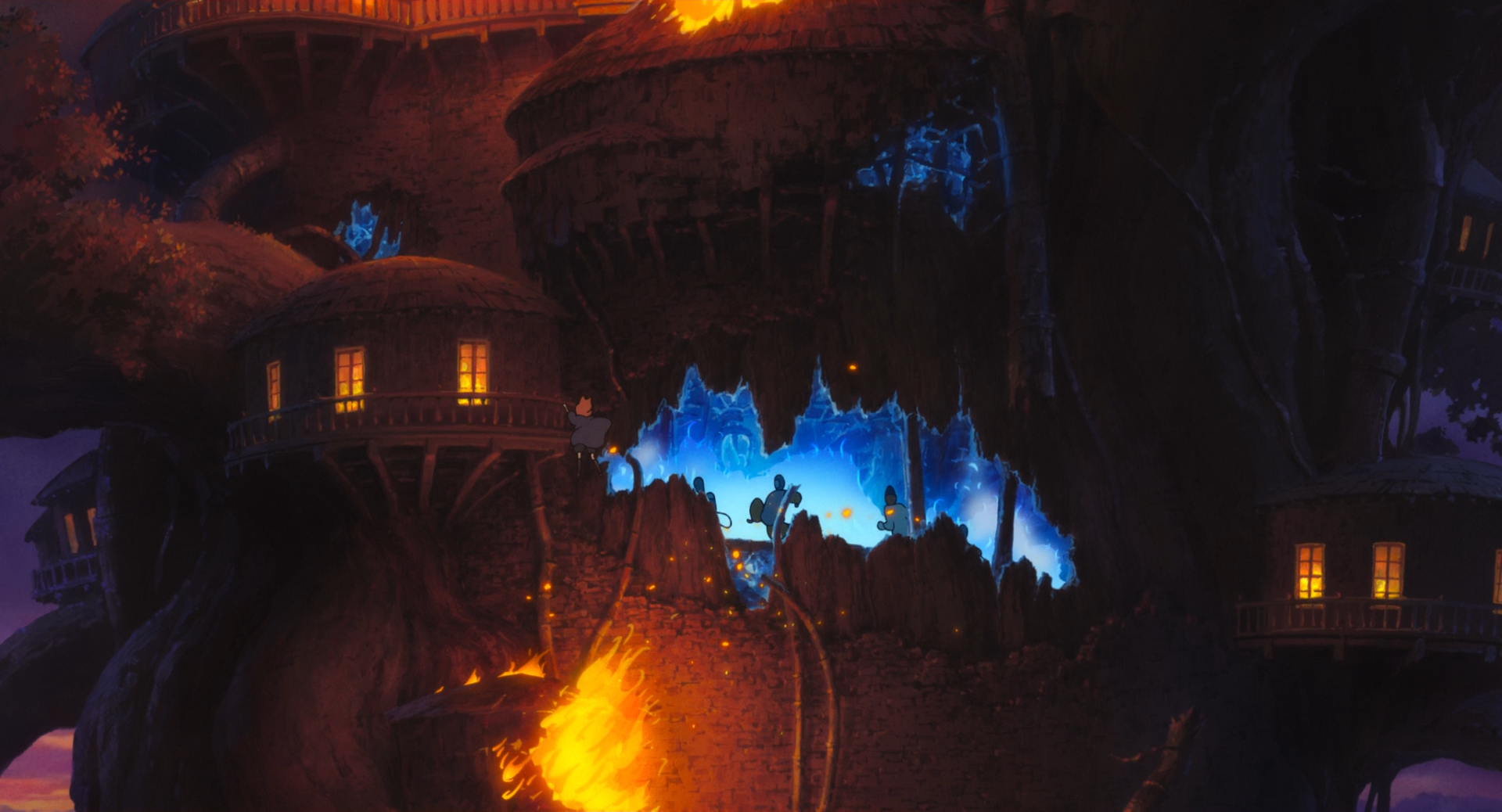

Fire -

madVR: https://images2.imgbox.com/db/c3/TRqmFfaz_o.png

mpv (default): https://images2.imgbox.com/50/1b/L9crP04w_o.png

mpv (tweaked): https://images2.imgbox.com/e6/0d/HROmk5iG_o.png

Explosion -

madVR: https://images2.imgbox.com/5d/0d/KzBne9Hd_o.png

mpv (default): https://images2.imgbox.com/a8/8a/kDpQb97n_o.png

mpv (tweaked): https://images2.imgbox.com/e4/5b/eji8jTxh_o.png

Secondly, hdr-compute-peak often causes noticeable shifts in brightness throughout the film even when there's not much going on. I noticed this while watching The Big Lebowski for example, while they were standing around talking the brightness suddenly shifted and it was very noticeable and uncomfortable.

Here's an example of it shifting dramatically within a few frames, again, from The Dark Knight 2008:

https://images2.imgbox.com/75/a9/LuuoGHun_o.png

https://images2.imgbox.com/a9/0c/SpBDirqQ_o.png

One thing in particular that I've noticed about this is that it's at its most egregious during sudden shifts, such as cutting from a dark scene directly to an explosion. Perhaps it would be possible for mpv to scan ahead of time so such shifts can be accounted for?


I found a valid sample https://4kmedia.org/sony-camping-in-nature-4k-demo/

At 1:05 this file shows a close-up of a campfire which demonstrates the issue reasonably well:

Default config: https://images2.imgbox.com/1e/8c/REmolmmV_o.png

Tweaked config: https://images2.imgbox.com/d6/91/vpuRlo3e_o.png

mpv seems to refuse to treat this sample as HDR and complains about an invalid peak here (0.29.1).

I also find the brightness shifting with --hdr-compute-peak to be quite unpleasant and annoying, even if it is relatively smooth. I only have a single HDR movie in my collection (Annihilation), where tone-mapping=reinhard with tone-mapping-param=0.6 yields much better results than the defaults.

tone-mapping=reinhard + tone-mapping-param=0.6 produces some nice results, but I'm unsure if I would consider them better than the default. That being said, I can definitely see why it would produce superior results in a film such as Annihilation.

Regarding brightness, one possible course of action is to make --hdr-compute-peak more conservative. Other tonemapping solutions use similar techniques (dynamic brightness), but they do not suffer from significant shifts, or at least not noticeable ones.

I looked around and found some more scenes to test these settings, one thing that struck me as interesting is that a few seconds after one of my examples, the camera shifts to a close-up of the fire. Here mpv outputs a very good image that does not suffer from any 'red' issues.

mpv (default): https://images2.imgbox.com/1f/4d/XGHeNxDw_o.png

mpv (tweaked): https://images2.imgbox.com/99/7b/0ZFXHJpk_o.png

This is also a good example of the damage that is done by setting hdr-compute-peak=no and tone-mapping-desaturate=0 in certain scenes, which leaves the user in a bit of a bind: do they disable tone-mapping-desaturate to improve red scenes, or do they keep it enabled and have bright scenes ruined?

This is obviously not an ideal solution in any world.

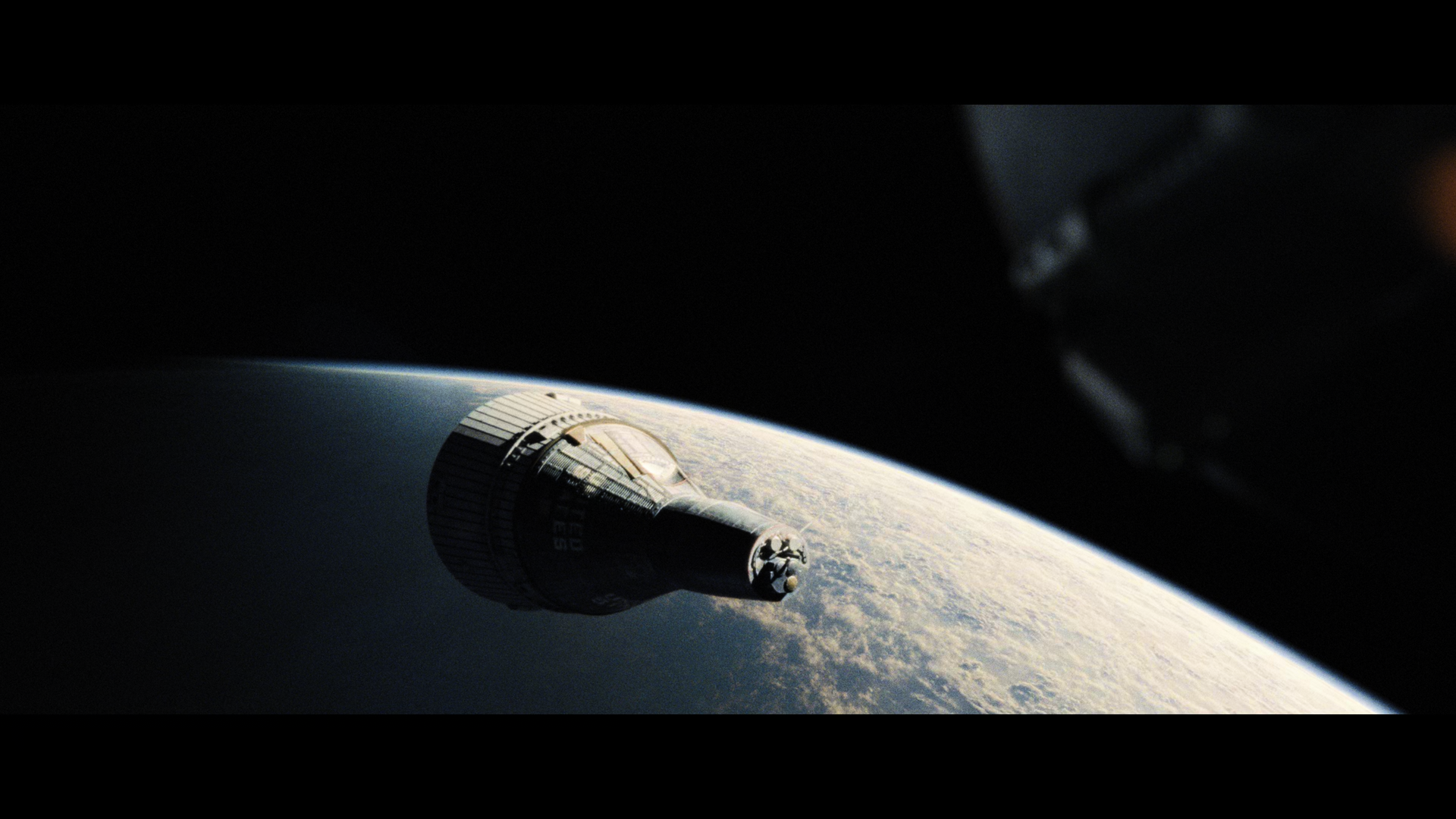

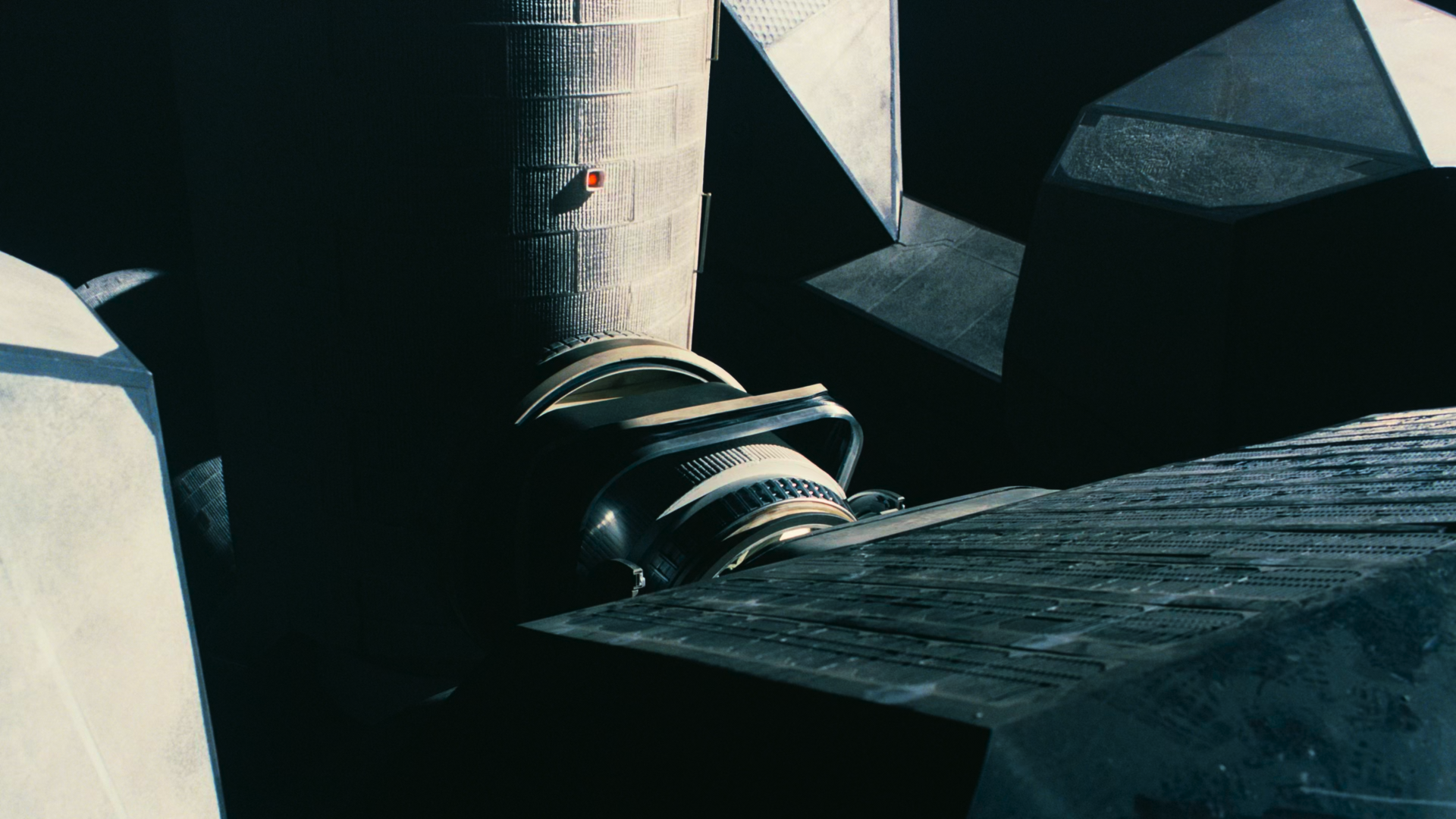

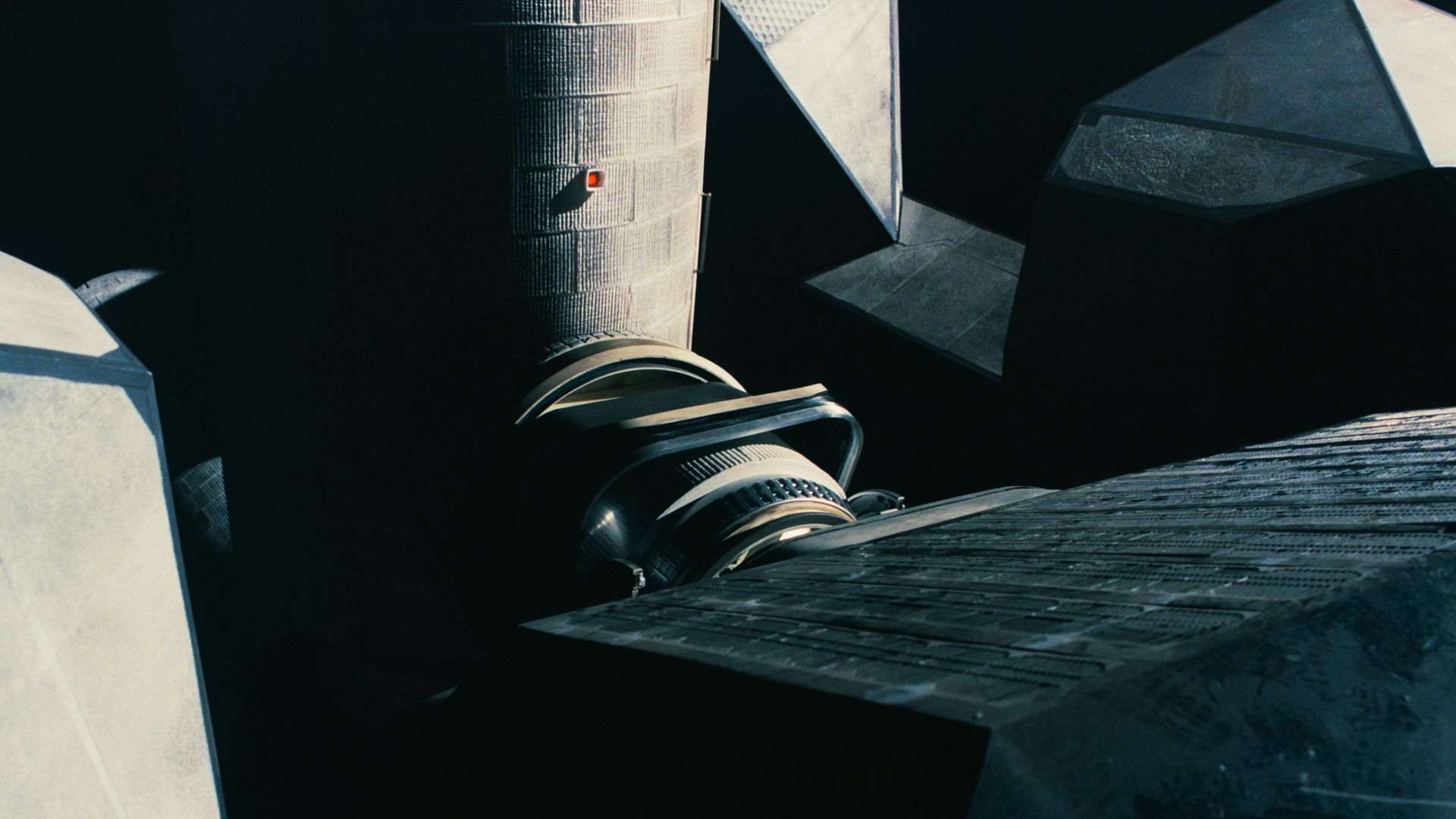

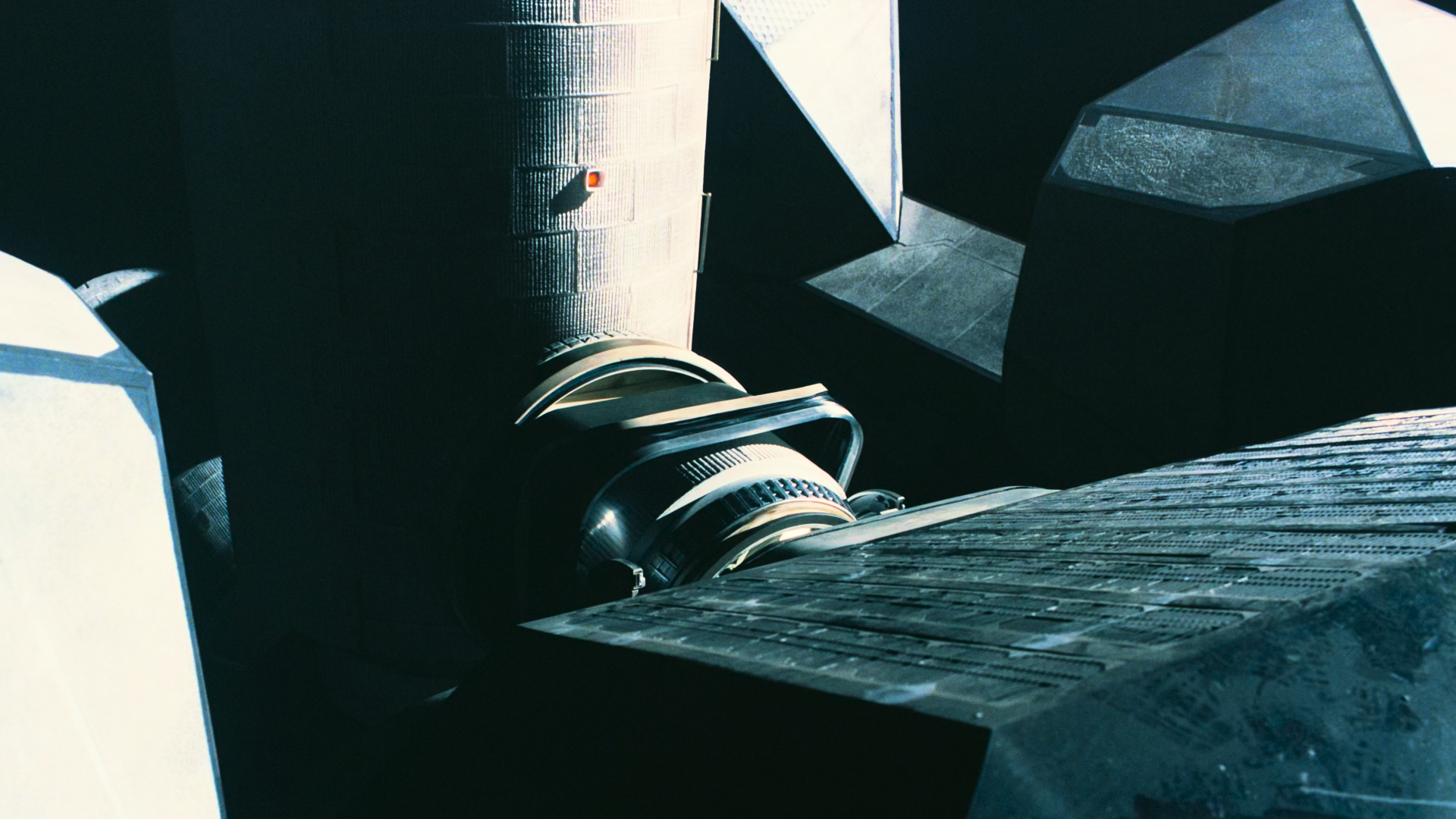

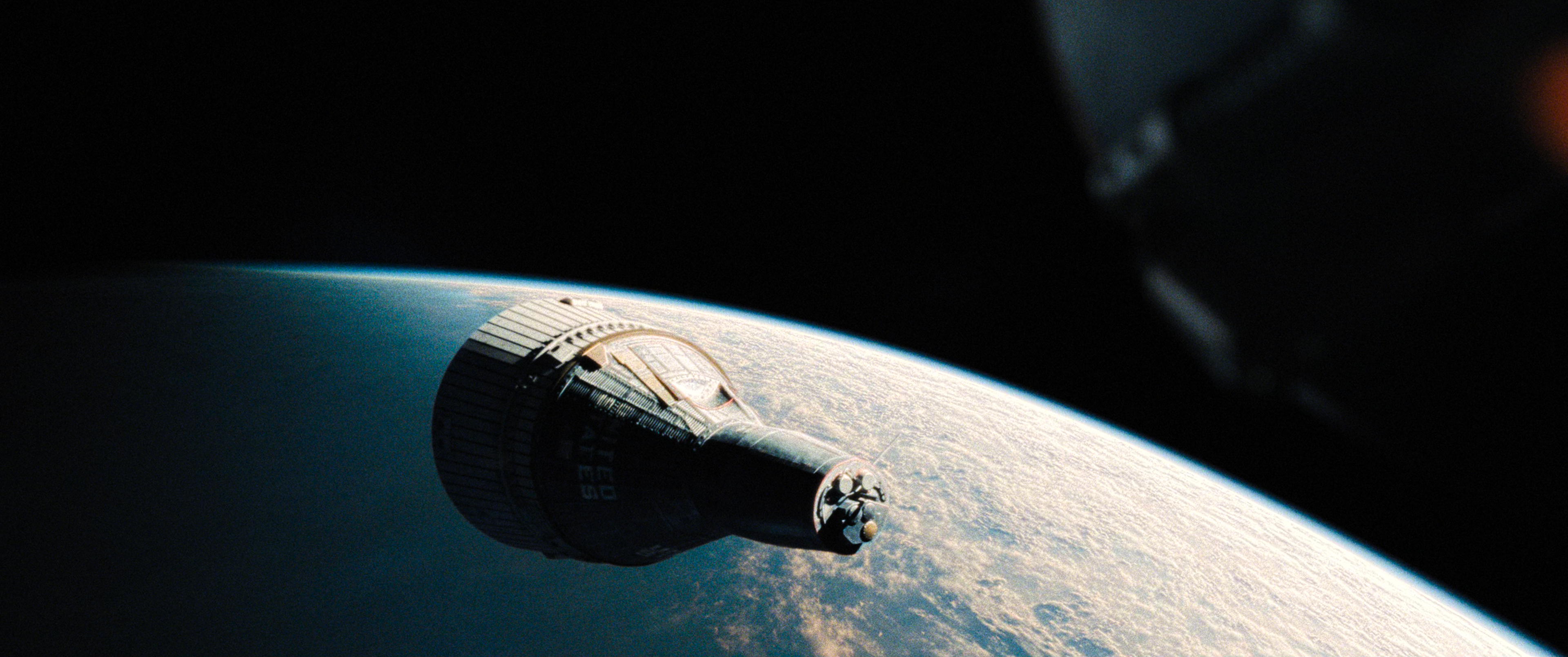

I have some more examples of mpv not dealing with bright scenes well, even with "tweaked" config; from Interstellar:

madvr: https://images2.imgbox.com/5a/bd/yGKWUgwg_o.png

mpv (default): https://images2.imgbox.com/4f/df/4miFqIQy_o.png

mpv (tweaked): https://images2.imgbox.com/98/93/ARtMkK8T_o.png

I also have a recording demonstrating how poorly hdr-compute-peak handles sudden shifts in its current state https://0x0.st/sdRG.mp4

re: desaturation, it's mostly a question of:

- how bright is the fire?

- what do we _expect_ bright fire to look like?

judging by the madVR result, I think they are doing tone-mapping per channel instead of linearly, which results in a lot of bright colors getting chromatically shifted; i.e. what was red to begin with ends up white, but colors close to it end up various shades of orange and yellow.

This style of tone mapping is also what hollywood has been doing for ages, which is why our brains are used to it. It's not chromatically accurate, but it might be more aesthetically pleasing. I could see myself adding an option to allow users to choose between the two modes of tone mapping, based on user preference (accurate colors or hollywood colors).
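To make the difference between the two modes concrete, here is a minimal C sketch. It is not mpv's shader code: the Reinhard curve merely stands in for whichever curve is configured, using the largest component as the tone-mapped signal is an assumption, and all names (`pixel3`, `tonemap_linear`, `tonemap_per_channel`) are hypothetical.

```c
#include <assert.h>

typedef struct { double r, g, b; } pixel3;

/* Simple Reinhard curve, used here only because it is short. */
double reinhard_curve(double x) { return x / (1.0 + x); }

double largest_component(pixel3 c) {
    double m = c.r > c.g ? c.r : c.g;
    return m > c.b ? m : c.b;
}

/* "Linear" mode: tone map one signal value and scale all channels by the
 * same factor, so the ratios between channels (the chromaticity) survive. */
pixel3 tonemap_linear(pixel3 c) {
    assert(c.r >= 0.0 && c.g >= 0.0 && c.b >= 0.0);
    double sig = largest_component(c);
    double scale = sig > 0.0 ? reinhard_curve(sig) / sig : 0.0;
    pixel3 out = { c.r * scale, c.g * scale, c.b * scale };
    return out;
}

/* "Per-channel" mode: every channel is compressed on its own, so bright
 * channels are squeezed harder than dim ones and the hue drifts. */
pixel3 tonemap_per_channel(pixel3 c) {
    assert(c.r >= 0.0 && c.g >= 0.0 && c.b >= 0.0);
    pixel3 out = { reinhard_curve(c.r), reinhard_curve(c.g), reinhard_curve(c.b) };
    return out;
}
```

For a bright orange-red input such as (8.0, 2.0, 0.5), the linear variant keeps the 4:1 red-to-green ratio, while the per-channel variant compresses it to roughly 1.33:1, which is the drift toward orange and yellow described above.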

The more interesting question is concerning the desaturation itself: which one of the two mpv images is closer to our eye's perception of the same scene? Maybe desaturation of bright colors is not the correct approach in general? The reason it exists is because there are some scenes that just look extremely weird without it, usually very bright stuff like looking into the sun. I don't have much experience doing that in real life, so I can't say whether it's really unnatural or if it just feels unfamiliar.

Maybe we should disable desaturation by default, or tune it to be less aggressive? (e.g. perhaps not desaturate all the way towards white, but cap the desaturation coefficient)

One approach you could play around with is this patch:

    diff --git a/video/out/gpu/video_shaders.c b/video/out/gpu/video_shaders.c
    index 342fb39ded..d047cf19a8 100644
    --- a/video/out/gpu/video_shaders.c
    +++ b/video/out/gpu/video_shaders.c
    @@ -678,7 +678,8 @@ static void pass_tone_map(struct gl_shader_cache *sc, bool detect_peak,
         float base = 0.18 * dst_peak;
         GLSL(float luma = dot(dst_luma, color.rgb);)
         GLSLF("float coeff = max(sig - %f, 1e-6) / max(sig, 1e-6);\n", base);
    -    GLSLF("coeff = pow(coeff, %f);\n", 10.0 / desat);
    +    const float desat_cap = 0.5;
    +    GLSLF("coeff = %f * pow(coeff, %f);\n", desat_cap, 10.0 / desat);
         GLSL(color.rgb = mix(color.rgb, vec3(luma), coeff);)
         GLSL(sig = mix(sig, luma * slope, coeff);) // also make sure to update `sig`
     }

desat_cap can be freely configured between 0 and 1: 0 meaning no desaturation, and 1 meaning full desaturation as before
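Read as a formula, the patched shader lines amount to the following. The symbol $c$ for the coefficient is shorthand introduced here, and GLSL's `mix(x, y, a)` is written out as $(1 - a)\,x + a\,y$:

```latex
c = \mathrm{desat\_cap} \cdot
    \left( \frac{\max(\mathrm{sig} - \mathrm{base},\, 10^{-6})}
                {\max(\mathrm{sig},\, 10^{-6})} \right)^{10 / \mathrm{desat}},
\qquad
\mathrm{color}' = (1 - c)\,\mathrm{color} + c\,\mathrm{luma}
```

Because the fraction never exceeds 1 and the exponent is positive, $c \le \mathrm{desat\_cap}$. With the cap at 0.5, even the brightest pixel is pulled at most halfway towards its grey value.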

Does that make the fire scenes more believable? A value of 0.5 still prevents e.g. the sony clip staring-into-sun from being too weird looking.

@haasn Could you take a look at what VLC does with the latest version and its D3D11 renderer? With it, saturation is not pale while there are no "smearing" artifacts at the same time.

I tried to achieve the same result with the OpenGL renderer or mpv, but it doesn't seem to be possible.

I have spent my entire afternoon attempting to compile mpv correctly with MSYS2 so I could test your patch; for my own sanity I will not be trying again anytime soon, sorry. Maybe I will be able to get something working in a VM.

> I could see myself adding an option to allow users to choose between the two modes of tone mapping, based on user preference (accurate colors or hollywood colors).

This seems like it would be a nice addition to mpv

Regarding desaturation, I agree with your point here - as I stated earlier the current solution does indeed leave the user in a less-than-ideal scenario, and unfortunately I don't have a solution. I think tweaking it to be less aggressive would be a better solution than disabling desaturation entirely, as you've mentioned it can help with particularly bright scenes. As I mentioned earlier I'm having trouble compiling mpv on Windows so I cannot test your patch currently, I will see if I can figure something out tomorrow. I might just boot into a Linux live USB and compile/test natively on there.

I implemented the tunable desaturation patch from above and added a new option, --tone-mapping-per-channel, in #6410.

Feel free to try it out and give feedback.

@haasn If it works well, will there be a backport for mpv 0.29.2?

Greetings, sorry for the delay - busy over the weekend

Just a few oddities before comparisons, I noticed when jumping through files mpv will often apply extreme desaturation on a frame, then the next frame it will 'recover' and output correct results; see these videos:

https://0x0.st/s5H9.mp4

https://0x0.st/s5Hp.mp4

This has made testing slightly annoying as I will have to watch the entire scene in realtime to verify that mpv is actually outputting incorrect colours instead of just a single incorrect frame caused by seeking, it doesn't seem to be consistent either.

Secondly, while testing The Lion King (and Mad Max) I noticed at the very start and end of the film, with desaturation enabled I was getting white artifacts in certain areas, see this clip: https://0x0.st/s5HG.mp4

The artifacts shift when I interact with parts of the screen (the OSC in this example), and it only happens in fullscreen. For a minute I thought tone-mapping-desaturate=0.0 solved this; however, upon closer inspection this is still an issue even with it disabled. It's just that desaturate=0.5 causes the artifacts to become bright white and therefore more noticeable. (Side note, why does the OSC have any effect on the VO? Surely this can't be right?)

Log: https://0x0.st/s5Xr.txt

Thirdly, I'm getting inconsistent results between films. I expected this to a certain extent, but the differences are quite shocking: 'Darkest Hour' is basically perfect while other films are outputting bad (abhorrent) results. I'm unsure why exactly this is happening; the only thing I can think of is the nits that they were mastered at. Any thoughts? Perhaps something different should be done with the tonemapping if we can identify a common theme between certain films, so it doesn't adversely affect films that don't have HDR issues. I found this issue (https://github.com/mpv-player/mpv/issues/5969) from some time ago which seems to be related; in it, haasn comments that the film in question (Mad Max) was mastered at a ridiculously high brightness. Perhaps this is the root cause? Would it be possible to tune the desaturation algorithm to be less susceptible to extreme values? (Another side note regarding mastering: I recall your comments in https://github.com/mpv-player/mpv/issues/5960 regarding --hdr-compute-peak. Would it be valid to change the algorithm here to ignore things that are ridiculously bright in comparison to the rest of the scene? Or would this cause clipping?)

I've tested these films which do not seem to have any desaturation issues when viewing with tone-mapping-desaturate=0.5, not all of these exhibit "extreme" examples of explosions/fire/brightness as such they may not be the best examples for this topic but I thought it would be best to include these in my results for good measure.

Annihilation

Darkest Hour

Fury

Harry Potter and the Sorcerer's Stone

Jurassic Park (1993)

The Dark Knight Rises (odd...)

The Lion King (with the exception of the aforementioned issue)

Films with desaturation issues:

Interstellar (This one isn't too bad, it's just extremely bright objects (stars))

The Dark Knight

Mad Max (This film is so bright it completely breaks desaturation and compute peak: fire swaps between looking realistic and the "red" that I've shown so extensively in The Dark Knight, and compute peak sees how bright the film is and dims the entire film instead of just dimming certain scenes)

The Dark Knight: https://imgbox.com/g/rjS26OpA90

Mad Max: https://imgbox.com/g/33dHrUkeY9

I'm yet to test --tone-mapping-per-channel

> Just a few oddities before comparisons, I noticed when jumping through files mpv will often apply extreme desaturation on a frame, then the next frame it will 'recover' and output correct results; see these videos:

This is sadly a known limitation of the way the algorithm works, since the result of the computation is delayed by one frame. I want to rework it to do it frame-perfect, but most likely not as part of mpv. (I'll probably experiment with this stuff in libplacebo first. I have a small-ish test program written to ingest individual frames and run the tone mapping algorithm on it, in case you're interested in helping to test)

To make testing with mpv easier, what you can do is seek to a specific frame (e.g. using --pause --start HH:MM:SS) and then force a redraw (by e.g. showing the OSD). That way it will only ever use the one frame's average.

> Secondly, while testing The Lion King (and Mad Max) I noticed at the very start and end of the film, with desaturation enabled I was getting white artifacts in certain areas, see this clip: https://0x0.st/s5HG.mp4

Is this only with the changes on my branch, or on current master? Does setting --deband=no fix it?

> (Side note, why does the OSC have any effect on the VO? Surely this can't be right?)

Updating the OSC triggers a redraw when the frame is paused. Some shaders depend on random state (in particular, the built in --deband does), which gets reseeded even on a redraw.

> Would it be possible to tune the desaturation algorithm to be less susceptible to extreme values?

In theory we already did. The conclusion from that issue was to do desaturation after average level adjustment. But maybe for absurd movies like this, using the "hollywood" style tone mapping would be the better solution.

> Another side note regarding mastering, I recall your comments #5960 regarding --hdr-compute-peak, would it be valid to change the algorithm here to ignore things that are ridiculously bright in comparison to the rest of the scene?

It's possible, but how would you code that without tracking the values per pixel until the end of the frame? It could maybe be done by storing a histogram of values, but that would explode memory usage. That said, I've been thinking about maybe redesigning the averaging buffer as a sort of temporal "heat map", that design would allow some degree of post-processing. Or at the very least, we could apply the same "decaying buffer" approach to a histogram.

> Or would this cause clipping?

There are two inputs to the tone mapping algorithm: scene max and scene average. Scene max is important to prevent clipping, and scene average is what triggers the darkening/lightening (aka "eye adaptation" simulation). We could exclude outliers from the scene average detection without it needing to affect the scene max.

That said, depending on what tone mapping curve is selected, the "scene max" also affects the overall brightness of the result. (Hable in particular is sensitive to the scene max, whereas e.g. mobius or reinhard are not)

> To make testing with mpv easier, what you can do is seek to a specific frame (e.g. using --pause --start HH:MM:SS) and then force a redraw (by e.g. showing the OSD). That way it will only ever use the one frame's average.

Thanks

> Is this only with the changes on my branch, or on current master?

Current master, I first noticed this on the 14th of Dec I believe

> Does setting --deband=no fix it?

No, seems to be caused by --sigmoid-upscaling=yes

scale=bicubic doesn't seem to suffer from this, both ewa_lanczossharp & Spline36 do

Random thought: What if instead of desaturating towards vec3(luma), we desaturate towards the per-channel tone mapped version? That way we will end up using "hollywood"-style desaturation for overly bright regions but still preserve the chromatic accuracy of non-highlights.

Hi @haasn, sorry to horn in, but I was helping someone on the Emby forum with what appears to be the same issue that is being reported here. I hope you don't mind, but I'm going to quote from that thread and post a link.

https://emby.media/community/index.php?/topic/67254-option-for-hdr-tone-map-luminance-value-setting/

I noticed MPV handles HEVC differently, quite differently. the color red and yellow are slightly boosted. i busted out my i1Display Pro and strangely both MPV and MPC-HC came out with identical grey scale and color spectrum when i did a full sweep in both environment using my trusted H264/AVC calibration source. for a while i thought i was going crazy. until i did side by side comparison of both MPV (FFmpeg) and MPC-HC (Direct Show) renders and discovered that with H264/AVC they both render identical R709 space and exact same grey scale. however, when the source is HEVC, MPV pushes the yellow and red up higher on the Rec 709, somewhere around maybe 3~4 delta on the spectrum. which is quite significant for anyone to notice comparing side by side. i also noticed this with HDR HEVC UHD sources, which explains my result earlier today when messing around with HDR playback, that MPV tend to have more saturated look.

That post doesn't really contain any useful information.

> Random thought: What if instead of desaturating towards vec3(luma), we desaturate towards the per-channel tone mapped version? That way we will end up using "hollywood"-style desaturation for overly bright regions but still preserve the chromatic accuracy of non-highlights.

I gave this a try in #6415, and I'm really happy with the results. Numbers could possibly use some tweaking, I just picked something that I think is reasonable by default.
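The idea of desaturating towards the per-channel result can be sketched in C as below. This is an illustration under stated assumptions, not the code in #6415: the Reinhard curve, the use of the largest component as the signal, the `knee` parameter and the coefficient shape `(sig - knee) / sig` (borrowed from the earlier patch) are all stand-ins, and every name is hypothetical.

```c
#include <assert.h>

typedef struct { double r, g, b; } color3;

double tm_curve(double x) { return x / (1.0 + x); }  /* Reinhard stand-in */

double tm_lerp(double a, double b, double t) { return a + (b - a) * t; }

double tm_max3(color3 c) {
    double m = c.r > c.g ? c.r : c.g;
    return m > c.b ? m : c.b;
}

/* Blend between the chromaticity-preserving result (coeff = 0) and the
 * per-channel result (coeff = 1). The coefficient grows with brightness,
 * so only highlights pick up the "hollywood" look. */
color3 tonemap_hybrid(color3 c, double knee) {
    assert(knee > 0.0);
    double sig = tm_max3(c);
    if (sig <= 0.0)
        return c;
    double scale = tm_curve(sig) / sig;
    double coeff = sig > knee ? (sig - knee) / sig : 0.0;
    color3 out = {
        tm_lerp(c.r * scale, tm_curve(c.r), coeff),
        tm_lerp(c.g * scale, tm_curve(c.g), coeff),
        tm_lerp(c.b * scale, tm_curve(c.b), coeff),
    };
    return out;
}
```

With `knee = 0.18`, a dark pixel such as (0.1, 0.05, 0.02) keeps its channel ratios exactly, while a highlight such as (8.0, 2.0, 0.5) ends up close to the per-channel result.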

Btw @HyerrDoktyer I think I might know what's causing the issues with random white pixels when desaturating, the way the curve was implemented it could possibly "underflow" for too dark pixels and end up applying super aggressive desaturation.

That bug is fixed as part of #6415 (but not #6410).

I also implemented a totally new design for the HDR peak detection, based on an idea proposed by @CounterPillow. Basically we switched from an FIR filter (running average with sliding window) to an IIR filter (geometric decay).

The new design has several key advantages:

- responds more quickly to changes while remaining relatively stable to fluctuations

- MUCH simpler code, reduced VRAM usage and code complexity massively

- can use much larger averaging windows without running into risk of overflow or VRAM limits

- as a combination of the above benefits, we can make our scene change detection much less aggressive (I bumped up the value from 20 nits to 50 nits and also made it user-configurable).

- (later) can be generalized to keep a heat map per region/pixel instead of frame-global, so we could do more fancy stuff down the line maybe
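The difference between the two filter designs can be sketched on the CPU in plain C. This is only an illustration of the FIR versus IIR idea with a scene-change reset, not the shader code from #6415; the window size, the decay factor and all names are hypothetical, and values are treated as nits for readability.

```c
#include <assert.h>

#define FIR_WINDOW 4  /* the FIR filter stores one sample per frame in the window */

typedef struct { double samples[FIR_WINDOW]; int count, next; } fir_avg;
typedef struct { double avg; int primed; } iir_avg;

/* FIR: running average over the last FIR_WINDOW frames. */
double fir_update(fir_avg *f, double x) {
    f->samples[f->next] = x;
    f->next = (f->next + 1) % FIR_WINDOW;
    if (f->count < FIR_WINDOW)
        f->count++;
    double sum = 0.0;
    for (int i = 0; i < f->count; i++)
        sum += f->samples[i];
    return sum / f->count;
}

/* IIR: one stored value that decays geometrically towards each new frame.
 * If the new frame differs from the running value by more than
 * scene_thresh, treat it as a scene change and restart from the new frame. */
double iir_update(iir_avg *s, double x, double decay, double scene_thresh) {
    assert(decay > 0.0 && decay <= 1.0);
    double diff = x - s->avg;
    if (diff < 0.0)
        diff = -diff;
    if (!s->primed || diff > scene_thresh) {
        s->avg = x;
        s->primed = 1;
    } else {
        s->avg += decay * (x - s->avg);
    }
    return s->avg;
}
```

The FIR variant has to keep `FIR_WINDOW` samples around, whereas the IIR variant keeps a single running value regardless of how slowly it is set to adapt, which is where the memory savings and the freedom to use long averaging windows come from.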

I pushed these changes onto #6415. Please try it out and let me know what you think.

I've compiled an mpv build with the mentioned PR if anyone wants to test it (since I'm rebuilding my ffmpeg anyway).

@haasn Thanks for the changes, the new tonemapping looks way better than the old.

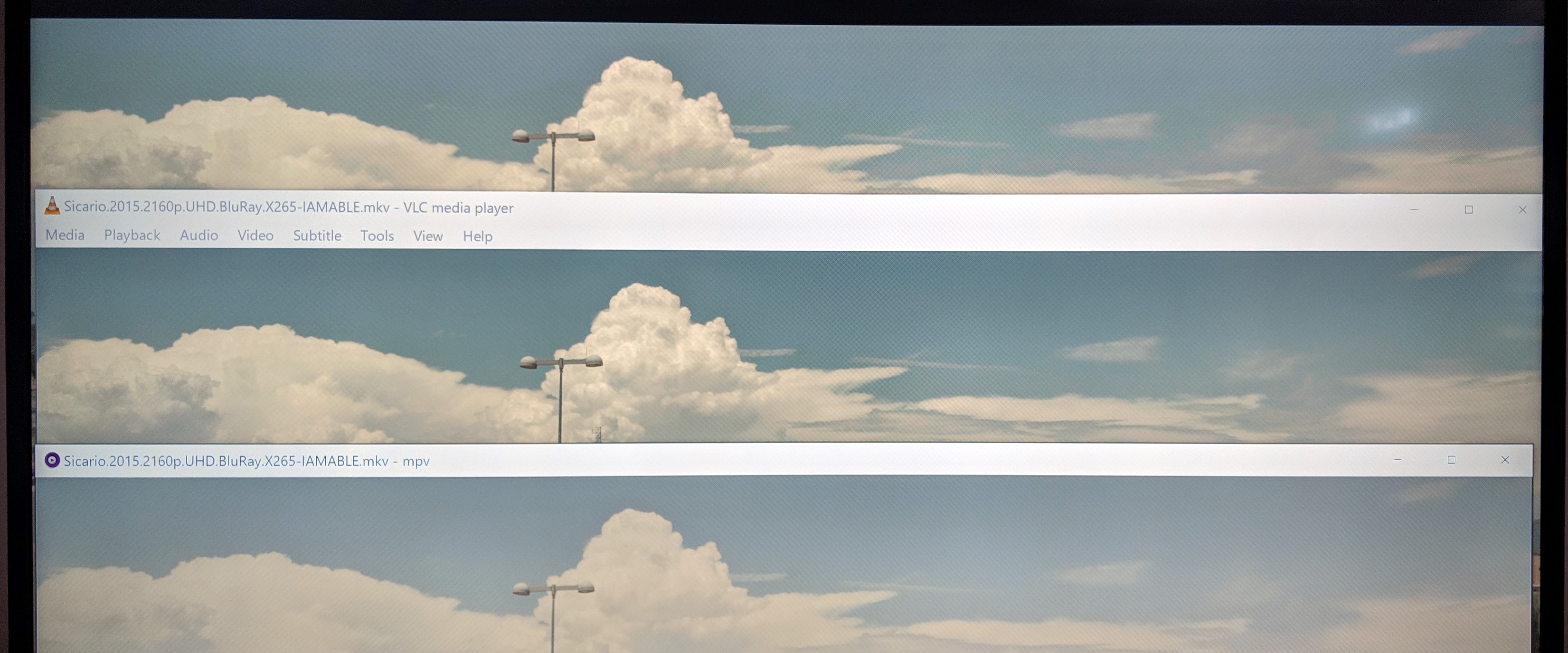

I noticed that there is still a kind of "smearing ringing" effect, which with the old tonemapping was caused by low --tone-mapping-desaturate values. It's very visible in the Samsung "Chase the Light" demo; I compared second 23 against VLC D3D11:

mpv:

https://abload.de/img/mpvabilj.png

vlc D3D11:

https://abload.de/img/vlcogf0o.png

That was with hdr-compute-peak=no, as yes still produces a too dark and pulsating result with this video. But the "smearing" effect doesn't seem to depend on whether it's enabled or not.

Download the demo here:

https://drive.google.com/uc?export=download&id=0Bxj6TUyM3NwjSkdPVGdvUV9KZDA

Link taken from here:

https://4kmedia.org/samsung-chasing-light-4k-demo/

Using nvdec with hdr-compute-peak=yes, I get a blank screen.

D3D11 worked just fine. In that log, I also used `tone-mapping-desaturate=0.0`, which turned the screen from all black to all blue.

@aufkrawall I'm not entirely sure what effect you're referring to, but I think this sample suffers greatly from being over-mastered. Enabling peak detection helps a lot.

Some screenshots of my own:

- new mpv, --hdr-compute-peak=yes

- new mpv, --hdr-compute-peak=no

- old mpv, --hdr-compute-peak=yes

- old mpv, --hdr-compute-peak=no

It's plain as day how much better the new desaturation algorithm performs compared to the old one. (Although this particular sample also works okay without desaturation)

However the peak detection is indeed still a bit sub-par on this sample. In particular, I noticed issues during the initial fade in, followed shortly by the sunrise. This rapid increase in brightness over the course of several frames triggers at least one scene change detection. Maybe we should use the difference between two successive frames rather than the difference between the current frame and the current average, for scene change detection? I can give it a try.

That said, even if we don't trigger any scene change detection, it's still sort of "weird" looking due to the specific timing of the fade in followed by the sun, which makes it sort of adapt twice in a row. Probably no way around this other than to use the HDR10+ dynamic metadata. (Which FFmpeg has a patch for now)

@Doofussy2 pushed a fix for your issue, try again?

Thanks for looking at that sample. Please take a closer look at the sunflower petals. There is ringing around them, at least without peak detection. This is also very extreme in your screenshot without desaturation.

You could of course argue that VLC D3D11 looks less saturated. But it's still way more vivid than mpv's previous Hable tonemapping and doesn't seem to show any sign of such artifacts. madVR also looks more vivid than VLC D3D11 and doesn't show such artifacts either (but maybe a slideshow instead :D ).

I'm on Linux right now, will post screenshots of another scene once I booted Windows.

> Maybe we should use the difference between two successive frames rather than the difference between the current frame and the current average, for scene change detection? I can give it a try.

Gave it a try. Gets rid of this false positive but introduces others. We need some fundamentally different approach to scene change detection, I think. Maybe we should just bias the IIR towards larger changes somehow. Some crazy thoughts: what if we keep track of the avg/peak in gamma light instead of linear light? More stuff to try, I guess.

FWIW, here's the patch for what I just tested:

    diff --git a/video/out/gpu/video.c b/video/out/gpu/video.c
    index af7432591b..dbb8f7db27 100644
    --- a/video/out/gpu/video.c
    +++ b/video/out/gpu/video.c
    @@ -2487,6 +2487,7 @@ static void pass_colormanage(struct gl_video *p, struct mp_colorspace src, bool
                 uint32_t counter;
                 uint32_t frame_sum;
                 uint32_t frame_max;
    +            float prev_avg;
                 float total_avg;
                 float total_max;
             } peak_ssbo = {0};
    @@ -2512,6 +2513,7 @@ static void pass_colormanage(struct gl_video *p, struct mp_colorspace src, bool
                 "uint counter;"
                 "uint frame_sum;"
                 "uint frame_max;"
    +            "float prev_avg;"
                 "float total_avg;"
                 "float total_max;"
             );
    diff --git a/video/out/gpu/video_shaders.c b/video/out/gpu/video_shaders.c
    index cc07fa67da..1001d2c45a 100644
    --- a/video/out/gpu/video_shaders.c
    +++ b/video/out/gpu/video_shaders.c
    @@ -615,11 +615,11 @@ static void hdr_update_peak(struct gl_shader_cache *sc,
         // Scene change detection
         if (opts->scene_threshold) {
             float thresh = opts->scene_threshold / MP_REF_WHITE;
    -        GLSLF(" float diff = %f * cur_avg - total_avg;\n", scale);
    -        GLSLF(" if (abs(diff) > %f) {\n", scale * thresh);
    +        GLSLF(" if (abs(cur_avg - prev_avg) > %f) {\n", thresh);
             GLSLF(" total_avg = %f * cur_avg;\n", scale);
             GLSLF(" total_max = %f * cur_max;\n", scale);
             GLSLF(" }\n");
    +        GLSL(prev_avg = cur_avg;)
         }
     
         // Update the current state according to the peak decay function

@aufkrawall on that sample, we can replicate the VLC D3D11 look by fully tone mapping per channel. Does that solve the issue you're having?

If you prefer this kind of look, we could maybe make the new desaturation curve a bit more tunable so you can effectively make it always engage for bright scenes like this.

I was using shinchiro's test build. I'm afraid I'd have to wait for another build. I haven't gotten around to teaching myself how to build my own. But it's on my to-do list. Just need enough time... But I'm happy to run tests.

@haasn Yep, that seems to do the trick. Fantastic! 👍

I suppose that might be an alternative if peak detection has issues or on lowend GPUs. At least the old peak detection was too slow for my Gemini Lake hand calculator. Haven't tried the improved version yet though.

> If you prefer this kind of look, we could maybe make the new desaturation curve a bit more tunable so you can effectively make it always engage for bright scenes like this.

No need, the argument range was already high enough. You can just set --tone-mapping-desaturate=1.0 --tone-mapping-desaturate-exponent=0.0 to get it to always desaturate with full strength, regardless of the brightness level. (Thanks math!)
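The arithmetic behind this can be written out under one assumption: that the coefficient keeps the shape of the earlier patch, with the cap replaced by the strength $s$ (`--tone-mapping-desaturate`) and a free exponent $e$ (`--tone-mapping-desaturate-exponent`). The thread does not show the exact expression used in #6415, so treat the form below as illustrative; $r$ stands for the brightness-dependent ratio.

```latex
c = s \cdot r^{e}, \quad 0 < r \le 1
\qquad \Longrightarrow \qquad
e = 0:\; c = s \cdot r^{0} = s
```

With $s = 1.0$ the coefficient is 1 for every pixel, so the brightness-dependent term drops out entirely and the desaturation is always applied at full strength.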

I'm really happy how each of the HDR videos I got look that way while the tonemapping is still basically for free regarding GPU performance. I would like the more vivid result of leaving --tone-mapping-desaturate-exponent at its default value even more if there weren't the "ringing" artifacts, but it's a huge improvement over Hable anyway.

The new peak detection is still too heavy for Gemini Lake, while it can deal with 4k 60fps without it (+ dithering and display-resample). mpv is just miraculously well optimized.

I was just doing comparative testing between madvr and mpv. @haasn I think you've nailed it with these new improvements!

Using this video

https://4kmedia.org/lg-chess-hdr-demo/

MadVR

New mpv

And this was my config

hwdec=d3d11va

hdr-compute-peak=no

tone-mapping=hable

tone-mapping-desaturate=1.0

tone-mapping-desaturate-exponent=0.0

But wait, there's more!

With help from @daddesio I developed a new scene change detection algorithm that solves the shortcomings of the previous one, thus allowing us to avoid the "eye adaptation" effects while also allowing us to pick a slow averaging filter. It also doesn't break on fades. Give it a whirl. (Pushed to #6415)

Now you should hopefully be able to use --hdr-compute-peak=yes even on stupid samples like the chasing the light one. (Although there's still the problem of the logo at the top right)

Btw, there's a subtle blue tint in the mpv version of those screenshots that I think I've observed while testing too. It goes away for me when using an ICC profile, so maybe our "built in" gamut adaptation algorithm needs improving.

Edit: Never mind, the one I observed is part of the source I'm testing on, and turning on the ICC profile only makes it less noticeable (since I have a wide gamut monitor).

Can somebody whip up test build, please? I haven't got the hang of making my own build, yet. I'd love to test this!

> Btw, there's a subtle blue tint in the mpv version of those screenshots that I think I've observed while testing too. It goes away for me when using an ICC profile, so maybe our "built in" gamut adaptation algorithm needs improving.

It has to do with the grayscale, I believe. Here's how it looks in the current mpv release.

I'd also like to point out that I think the new tone mapping (with peak detection enabled + the default desaturation) works really well when using --tone-mapping=mobius instead of hable. Maybe we should even change the default?

Well, I can't test the new stuff you just added, but with shinchiro's test build, this is what I get with that same scene, using mobius.

hdr-compute-peak=yes

hdr-compute-peak=no

hwdec=d3d11va

hdr-compute-peak=yes

tone-mapping=mobius

tone-mapping-desaturate=1.0

tone-mapping-desaturate-exponent=0.0

And with

hwdec=d3d11va

hdr-compute-peak=yes

tone-mapping=mobius

@Doofussy2 @aufkrawall I checked the source, the thing on her back is actually blue-ish, not white. (it comes out to #25263b in sRGB without any tone mapping)

> @Doofussy2 @aufkrawall I checked the source, the thing on her back is actually blue-ish, not white. (it comes out to #25263b in sRGB without any tone mapping)

Oh ok, but it's still really dark. With hable, it seems the correct brightness?

@Doofussy2 I get somewhat different results from you, mind. This is with my latest branch. It's possible we have slightly different versions of the file?

compute=yes, desat=default, curve=hable

compute=yes, desat=default, curve=mobius

By the way, I'd prefer it if you used actual movie clips rather than marketing wank.

Yeah, I was saying that I can't test with your latest adjustments. I haven't completely figured out the build process, so I'm testing with the build that shinchiro posted earlier. So it's likely to give different results.

I probably can test it on Linux tomorrow.

> By the way, I'd prefer it if you used actual movie clips rather than marketing wank.

For sure.

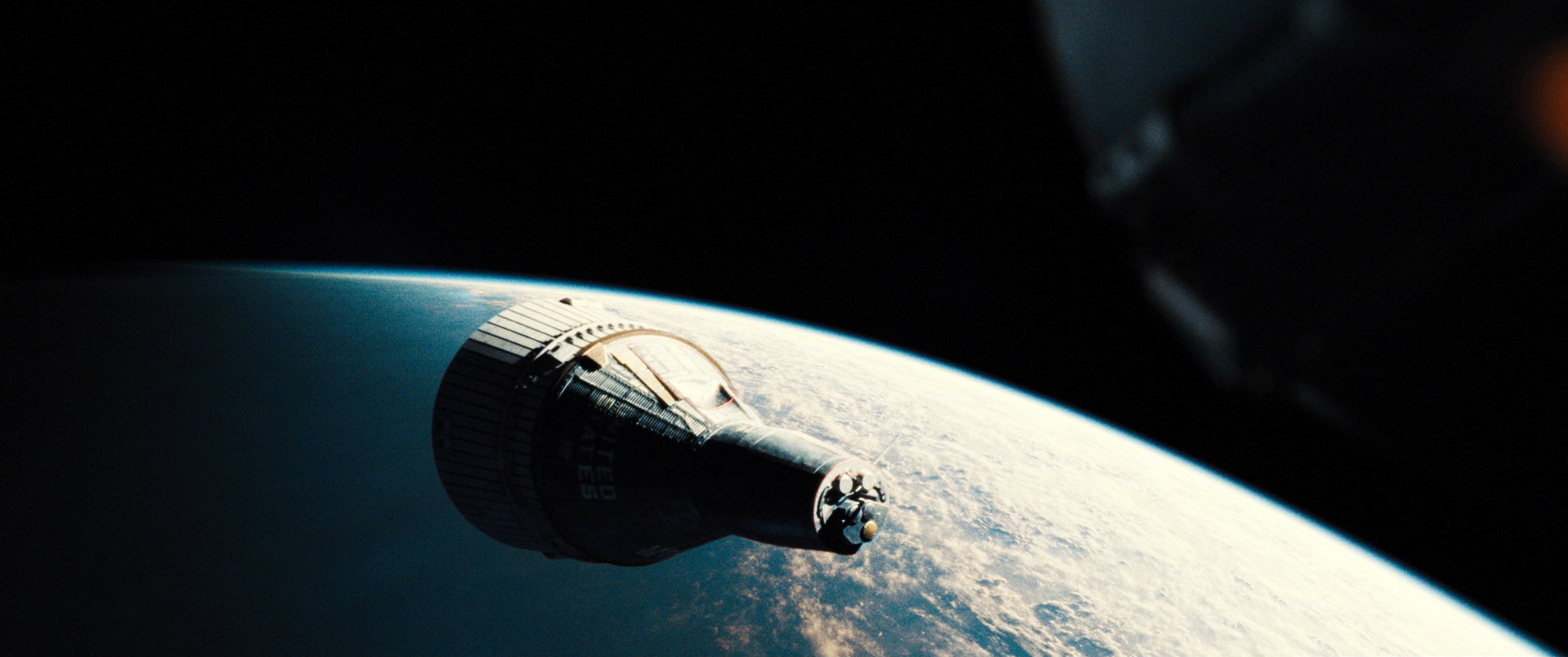

So here's a shot from Interstellar at 2:34:10. It's very bright, and I've had some issues when playing this.

hwdec=d3d11va

hdr-compute-peak=yes

tone-mapping=mobius

hwdec=d3d11va

deinterlace=no

hdr-compute-peak=no

tone-mapping=hable

tone-mapping-desaturate=1.0

tone-mapping-desaturate-exponent=0.0

I feel like balance is somewhere in the middle?

I don't have that movie in HDR unfortunately, can you get a clip to me somehow?

Also, I pushed a new option to #6415 that allows adjusting the upper limit on how much to boost dark scenes (by over-exposing them). The current limit was always hard-coded as 1.0, meaning that frames could only ever get darker - never brighter. While you're playing around with this stuff anyway, it might be a good idea to play around with this option as well.

It's possible that a conservative value like --tone-mapping-max-boost=1.2 might help detail recognition on dark scenes without making them look too funny (by being too bright). Thoughts?
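The effect of such a limit can be sketched in C as follows. This is a simplified model rather than the code in #6415: it assumes the exposure adjustment is a single gain equal to a target average divided by the measured scene average, and `exposure_gain`, `scene_avg` and `target_avg` are hypothetical names.

```c
#include <assert.h>

/* Gain applied to the frame before the tone mapping curve.
 * Values below 1.0 darken the frame, values above 1.0 over-expose it.
 * max_boost caps how far a dark scene may be brightened; with
 * max_boost = 1.0 a frame can only ever get darker. */
double exposure_gain(double scene_avg, double target_avg, double max_boost) {
    assert(target_avg > 0.0 && max_boost >= 1.0);
    double avg = scene_avg > 1e-6 ? scene_avg : 1e-6;  /* avoid dividing by zero */
    double gain = target_avg / avg;
    return gain < max_boost ? gain : max_boost;
}
```

In this model a bright scene (average 0.5, target 0.25) gets a gain of 0.5 either way, while a dark scene (average 0.05) is left untouched at a limit of 1.0 and brightened by 20% at a limit of 1.2.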

> I feel like balance is somewhere in the middle?

In theory, that can be accomplished. mobius is tunable (that's sort of the point), and I never put much thought or testing into the default param (0.3). It's possible you could get a more in-between result by using a higher param, perhaps --tone-mapping-param=0.5?
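To show what the parameter controls, here is a C sketch of a Möbius-style curve. It is one way to build such a curve, not a copy of mpv's shader: values up to the knee `j` pass through unchanged, and above it the function M(x) = scale * (x + a) / (x + b) is fitted to the three constraints M(j) = j, M'(j) = 1 and M(peak) = 1. The function name is hypothetical.

```c
#include <assert.h>

/* Möbius-style tone curve.
 * x:    input signal (1.0 = SDR white)
 * j:    knee; everything at or below it is left untouched
 * peak: brightest input value, which gets mapped to exactly 1.0 */
double mobius_curve(double x, double j, double peak) {
    assert(j > 0.0 && j < 1.0);
    if (peak <= 1.0 || x <= j)
        return x;  /* nothing to compress */
    double a = -j * j * (peak - 1.0) / (j * j - 2.0 * j + peak);
    double b = (j * j - 2.0 * j * peak + peak) / (peak - 1.0);
    double scale = (b * b + 2.0 * b * j + j * j) / (b - a);
    return scale * (x + a) / (x + b);
}
```

With `peak = 4.0`, an input of 1.0 comes out at about 0.69 for `j = 0.3` and about 0.77 for `j = 0.5`, so raising the parameter keeps a larger part of the range linear and leaves mid-tones brighter.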

Edit: I think I realize now what you actually mean: basically, the scene is very bright, but mpv makes it look "normal" brightness again, and you want it to "remain" bright? That's sort of a difficult thing to balance with the ability to play e.g. shit like mad max which is mastered at "sun brightness" levels. Those movies would end up entirely bright if we put an upper limit on how much we can darken stuff.

That whole scene is swirling brightness. And much of it can be too bright, but only just. It's hard to describe. The whole movie is kinda too bright. That scene goes directly from darkness to super bright. When I compare it to watching it with the metadata passed to my display, it's still super bright, but I can see a little more detail. I'm going to try tuning mobius, and see what I get.

So here's what that looks like with:

hwdec=d3d11va

hdr-compute-peak=yes

tone-mapping=mobius

tone-mapping-param=0.1

Which isn't horrible...just a little dim. You really should get a hold of the whole movie. The lighting and color/saturation throughout the whole movie is kinda odd. But this is closer to what it should be, I think. Hable is I think a little too bright. But this is pretty extreme. Changing the param didn't make a lot of difference.

hwdec=d3d11va

hdr-compute-peak=yes

tone-mapping=mobius

tone-mapping-param=0.5

Actually, I'm going to stick with hable. There are a lot of other bright scenes that now look better to me. Like this one

Btw, another thing we can try doing is using a different method of estimating the overall frame brightness (rather than the current naive/linear average).

I played around with:

- averaging sqrt(sig) (poor man's gamma function)

- averaging log(sig) (approximation of HVS)

In particular, I think approach 2 worked out pretty interesting. I'm not yet sure whether it's an improvement, but it does solve a lot of the "brightness" issues I think.

    diff --git a/video/out/gpu/video.c b/video/out/gpu/video.c
    index 5dbc0db7ca..099bbdb40c 100644
    --- a/video/out/gpu/video.c
    +++ b/video/out/gpu/video.c
    @@ -2491,10 +2491,10 @@ static void pass_colormanage(struct gl_video *p, struct mp_colorspace src, bool
         if (detect_peak && !p->hdr_peak_ssbo) {
             struct {
                 float average[2];
    -            uint32_t frame_sum;
    -            uint32_t frame_max;
    +            int32_t frame_sum;
    +            int32_t frame_max;
                 uint32_t counter;
    -        } peak_ssbo = {0};
    +        } peak_ssbo = { .frame_max = -100000 };

             struct ra_buf_params params = {
                 .type = RA_BUF_TYPE_SHADER_STORAGE,
    @@ -2515,8 +2515,8 @@ static void pass_colormanage(struct gl_video *p, struct mp_colorspace src, bool
             pass_is_compute(p, 8, 8, true); // 8x8 is good for performance
             gl_sc_ssbo(p->sc, "PeakDetect", p->hdr_peak_ssbo,
                 "vec2 average;"
    -            "uint frame_sum;"
    -            "uint frame_max;"
    +            "int frame_sum;"
    +            "int frame_max;"
                 "uint counter;"
             );
         }
    diff --git a/video/out/gpu/video_shaders.c b/video/out/gpu/video_shaders.c
    index 5673db2ff7..c84f3fe2ea 100644
    --- a/video/out/gpu/video_shaders.c
    +++ b/video/out/gpu/video_shaders.c
    @@ -576,15 +576,20 @@ static void hdr_update_peak(struct gl_shader_cache *sc,
         GLSL( sig_peak = max(1.00, average.y);)
         GLSL(});

    +    // Chosen to avoid overflowing on an 8K buffer
    +    float log_min = 1e-3;
    +    float sig_scale = 400.0;
    +
         // For performance, and to avoid overflows, we tally up the sub-results per
         // pixel using shared memory first
         GLSLH(shared uint wg_sum;)
         GLSLH(shared uint wg_max;)
         GLSL(wg_sum = wg_max = 0;)
         GLSL(barrier();)
    -    GLSLF("uint sig_uint = uint(sig_max * %f);\n", MP_REF_WHITE);
    -    GLSL(atomicAdd(wg_sum, sig_uint);)
    -    GLSL(atomicMax(wg_max, sig_uint);)
    +    GLSLF("float sig_log = log(max(sig_max, %f));\n", log_min);
    +    GLSLF("uint sig_int = int(sig_log * %f);\n", sig_scale);
    +    GLSL(atomicAdd(wg_sum, sig_int);)
    +    GLSL(atomicMax(wg_max, sig_int);)

         // Have one thread per work group update the global atomics
         GLSL(memoryBarrierShared();)
    @@ -602,7 +607,7 @@ static void hdr_update_peak(struct gl_shader_cache *sc,
         GLSL(if (gl_LocalInvocationIndex == 0 && atomicAdd(counter, 1) == num_wg - 1) {)
         GLSL( counter = 0;)
         GLSL( vec2 cur = vec2(frame_sum / num_wg, frame_max);)
    -    GLSLF(" cur *= 1.0/%f;\n", MP_REF_WHITE);
    +    GLSLF(" cur = exp(1.0/%f * cur);\n", sig_scale);

         // Use an IIR low-pass filter to smooth out the detected values, with a
         // configurable decay rate based on the desired time constant (tau)
    @@ -617,7 +622,8 @@ static void hdr_update_peak(struct gl_shader_cache *sc,
         GLSL( average = mix(average, cur, weight);)

         // Reset SSBO state for the next frame
    -    GLSL( frame_max = frame_sum = 0;)
    +    GLSL( frame_sum = 0;)
    +    GLSL( frame_max = -100000;)
         GLSL( memoryBarrierBuffer();)
         GLSL(})
     }
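As a rough CPU-side illustration of why the logarithmic average behaves differently from the linear one, the sketch below compares both on a mostly dark frame with a single very bright pixel. The `log_min` floor and the fixed-point scale of 400 are taken from the patch; everything else (function names, summing per pixel instead of per work group, the sample frame) is a simplification made up for the example.

```python
import math

LOG_MIN = 1e-3     # floor so log() never sees zero (value from the patch)
SIG_SCALE = 400.0  # fixed-point scale for the integer accumulator (value from the patch)


def linear_average(pixels):
    """Plain arithmetic mean of the per-pixel signal levels."""
    return sum(pixels) / len(pixels)


def log_average(pixels):
    """Mean taken in the log domain with an integer accumulator, then mapped back."""
    total = 0
    for sig in pixels:
        # int() truncates toward zero, like the int() cast in the shader
        total += int(math.log(max(sig, LOG_MIN)) * SIG_SCALE)
    return math.exp(total / len(pixels) / SIG_SCALE)


if __name__ == "__main__":
    frame = [0.05] * 99 + [10.0]  # dark frame with one specular highlight
    print("linear:", linear_average(frame))
    print("log:   ", log_average(frame))
```

The log-domain mean is effectively a geometric mean, so a handful of extreme highlights pull the estimate up far less than they do with the arithmetic mean.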

Just testing other movies. The beginning of Alien: Covenant was always problematic. Now it looks just like passing the metadata to the display.

This config is a winner!

hdr-compute-peak=no

tone-mapping=hable

tone-mapping-desaturate=1.0

tone-mapping-desaturate-exponent=0.0

@Doofussy2 Well, that config is probably what your display is doing internally - since it's the "simplest" possible way to do things. (Actually, that's what we did way way way back in the day)

If it works on your particular test samples and you like the look, then I guess feel free to use the values; but I'm not going to make them the default because they seriously degrade the accuracy of all colors.

Oh for sure. I wouldn't expect it to be default. And I eagerly await testing the other new developments that you made, today. I just have to wait until they get rolled in :)

Just realized I hadn't tried using hable on its own, with no compute peak or desaturation. That works great!

Just look at this result (I know you don't want to use this video). That is so close to how it is when my display handles the metadata. The color, saturation and lighting are amazing!

And compare it to what I did earlier. (I think I was doing too much)

I'm not yet sure whether it's an improvement, but it does solve a lot of the "brightness" issues I think.

Decided to go through with it. For comparison, this is what I get on that chess scene with the new defaults + the new logarithmic averaging commit.

Now, with mobius, it's almost a bit too over the top:

I wish I could show you just how close the last picture I posted is to 'actual' HDR. I don't think I can tell them apart. You have done excellent work, today @haasn !

Those last two were with compute peak, yes?

I also realized that much of the effective brightness of the output image is very much dictated by the constant 0.25 hard-coded into the mpv tone mapping algorithm. That constant was very much chosen ad-hoc with no real justification other than "it's close to the mid point of the gamma function".

If we changed that constant to, say, 0.30 instead, you would see a brighter result than before. It's possible that we simply need to tune this parameter as well. I could expose it as an option too.

Those last two were with compute peak, yes?

Yes.

I wish I could show you just how close the last picture I posted is to 'actual' HDR.

The one with mobius?

No, the one I just posted, using only hable.

hdr-compute-peak=no

tone-mapping=hable

This one

This is just about perfect! I can't separate it from 'actual' HDR on my display. The colors, the luminance and the saturation, are spot on!

I added a new (undocumented for now) option --tone-mapping-target-avg to control this 0.25 constant. Pushed it to #6415 as well.

@shinchiro could I trouble you to make a build of that branch again? would like to get some testing in so we can ideally pick better defaults and maybe also remove some of the options that we agree on values for. (Having too many options is not always a good thing, even for mpv...)

Anyway, it goes without saying, that local tests should be done on actual movies if possible; I'm only using the chess clip since it seems to be the common denominator / clip we all have lying around.

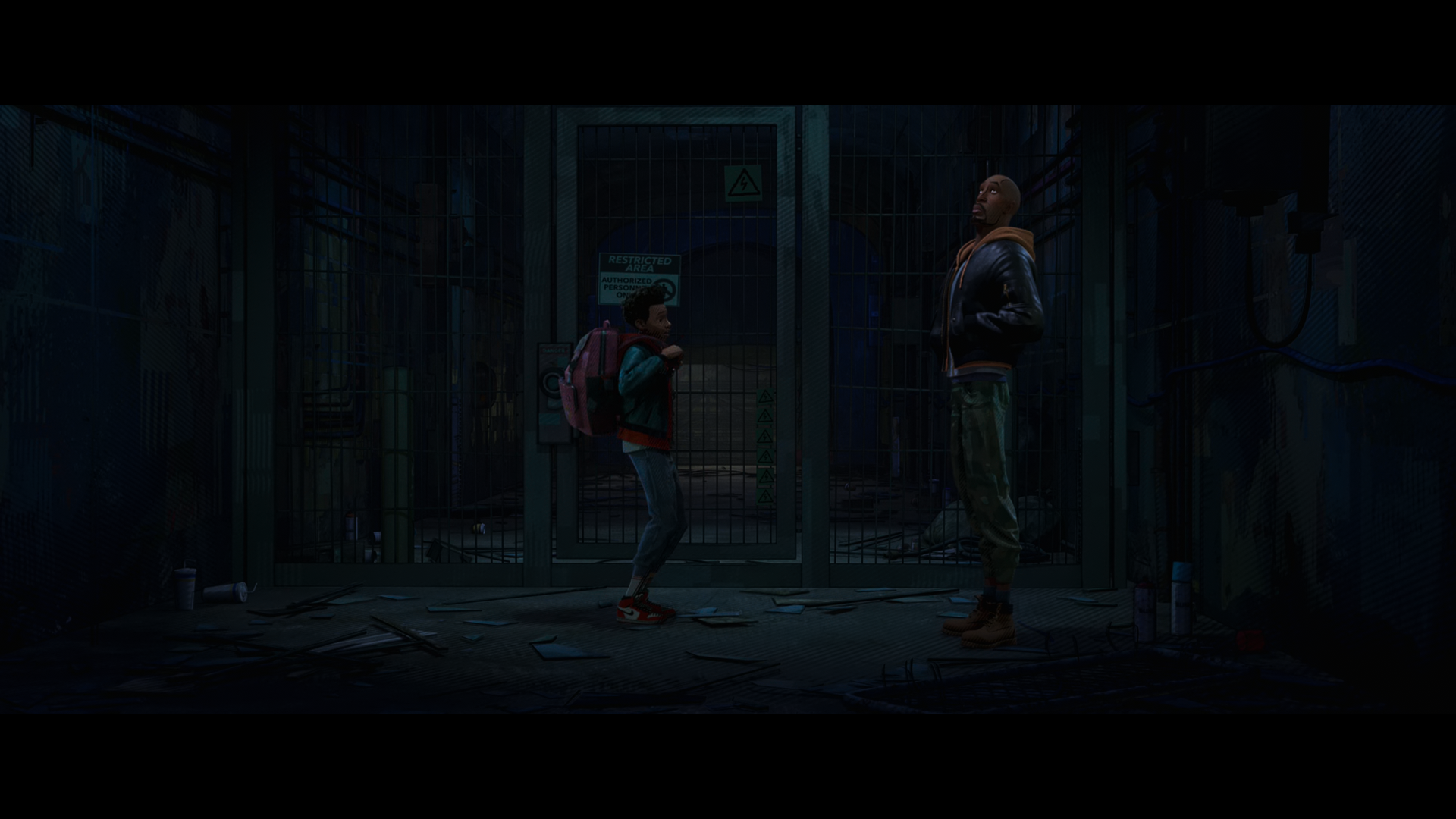

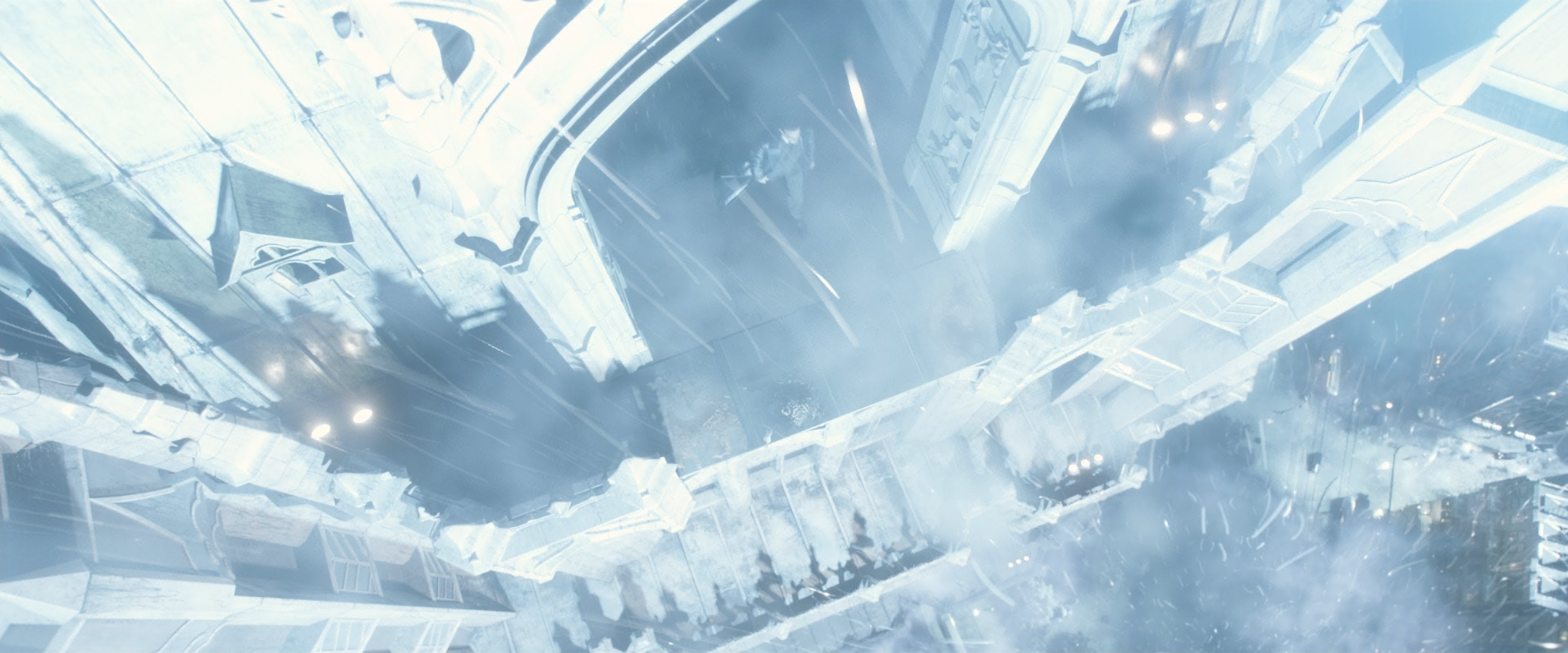

Yup! I agree. And to that point, I just tested one of my favorite test scenes (for the colors and glowy things). The movie is Lucy.

I've wrestled to get this right, and now it is.

Couple more examples

Avengers

Justice League

(glowy thing in the back looking good)

@Doofussy2 I'd like to know more about your test environment, though. What kind of display are you using and what curve is it calibrated to? What's the actual peak brightness in SDR mode and how are you making comparisons?

I have a Vizio M55-E0 (2017). I have not had it optimally calibrated. I use it as my desktop, too. That's how I can run off tests so quickly. So the settings are dialed in for my desktop. I have a GTX 1060, connected directly to the display via DP 1.4. I do have the digital vibrance dialed to 65%. That helps boost the luminance a little. Not so far as to make it out of range, and still not as bright as when the display is in HDR10. HDMI 1 is the only input that allows 10 bit gamut. That is enabled separately from HDR10 being switched on. So that is always on.

This is the best info I can find about my display.

https://www.rtings.com/tv/reviews/vizio/m-series-xled-2017

Color temp = Computer

Black detail = Medium

Colorspace = Auto

Gamma = 2.2

Oh, I forgot the Nvidia settings. Here they are

The comparisons I make are using madVR to play the media (default settings). I take screenshots in mpv and compare it to what I just saw. I repeat it a few times, so I have a clear image in my mind.

@Doofussy2 Are they all using hdr-compute-peak=no tone-mapping=hable tone-mapping-desaturate=0?

Just hdr-compute-peak=no and tone-mapping=hable. And yes, with all of them.

Here you go:

mpv-x86_64-20190102-git-7db407a.zip

@haasn

Thank you for the fantastic updates, these improvements are stunning; and thank you @shinchiro for the updated builds

I don't have that movie in HDR unfortunately, can you get a clip to me somehow?

I come bearing gifts

Troublesome Mad max: https://0x0.st/sR8M.mkv

Interstellar scene that Doofussy mentioned: https://0x0.st/sR8u.mkv

I can also confirm that https://github.com/mpv-player/mpv/pull/6415 has fixed the artifacts, thanks for that!

If you want any further samples please feel free to @ me and I'll see what I can do

Thanks. When I wake up I'll probably start comparing the interstellar clip to the SDR blu-ray version to see how close we're getting to the "artist's intent". (That's ultimately my goal here - recreating what the mastering engineers want us to see on SDR screens, rather than trying to recreate the look of the HDR clip per se)

I've tried the new build kindly provided by shinchiro, and yay, the LG chess demo no longer suffers from the sudden brightness loss with peak detection. :)

And also looks great otherwise.

Regarding the Samsung Chasing the Light video:

The "smearing ringing" effect is also noticeable in motion when the sunflower petals are moving in the wind, it doesn't look as clean as when setting --tone-mapping-desaturate=1.0 --tone-mapping-desaturate-exponent=0.0. This is also the case with hdr-compute-peak=yes, just less obvious than with =no.

One more scene where it is very obvious is second 35.

Default settings:

https://abload.de/img/defzafix.png

--tone-mapping-desaturate=1.0 --tone-mapping-desaturate-exponent=0.0:

https://abload.de/img/08oif4.png

There are differences in brightness between the screenshots due to seeking and peak detection, but the artifacts among the dark lines in the center are 100% reproducible and very noticeable during playback.

But you surely have a good point that this video might be overproduced, as I haven't spotted such artifacts anywhere else. I won't mention it anymore if you don't want to hear from that video again.

I also looked at "The World in 4k", available on YouTube:

https://www.youtube.com/watch?v=tO01J-M3g0U

The pulsating brightness has been greatly reduced by the new peak detection, but it's still noticeable.

One scene where this is very apparent is second 27, it suddenly turns way darker:

https://abload.de/img/27vocq9.png

Peak detection is a bit too dark in general in this video (judged by viewing on a sRGB IPS display with gamma of 2.2), and some scenes like second 27 are even darker than the average.

I noticed towards the end of the Mad Max sample that I provided there's a scene with some extremely bright areas which seem to be clipping, I've been playing around with the new config options but I can't seem to resolve this; happens at about 1:40 in my sample

mpv (new defaults): https://0x0.st/sRKi.png

madvr: https://0x0.st/sR85.jpg

I also noticed that madvr is using BT.2390, opposed to mpv using BT.2100; this post https://forum.doom9.org/showthread.php?p=1709584 claims that it's "used to compress highlights", do you think we would see any advantage by swapping?

btw any and all samples that I provide should be x265/HDR, which should allow for easy testing of variable configs

The pulsating brightness has been greatly reduced by the new peak detection, but it's still noticeable.

You can try fiddling around with the parameters maybe? Of interest are:

- --hdr-peak-decay-rate

- --hdr-scene-threshold-low

- --hdr-scene-threshold-high

I also noticed that madvr is using BT.2390, opposed to mpv using BT.2100; this post https://forum.doom9.org/showthread.php?p=1709584 claims that it's "used to compress highlights", do you think we would see any advantage by swapping?

Those two documents are not comparable. As for BT.2390, it's just a report (not a standard) and it documents several ways of doing tone mapping:

We use something like option "3) YRGB", madVR uses option "4) R'G'B'". Or, more precisely, with the new desaturation, we switch between YR'G'B' and R'G'B' tone mapping based on the brightness level.

As for the actual curve they present, they suggest using a hermite spline to roll-off the knee, as follows:

Which I've played around with in the past but didn't think it was noticeably different from the existing curves (in particular, mobius already is a linear-with-knee function whose graph looks similar to the graph they provide).

re: that mad max clipping sample, for some reason the red channel in the image is almost entirely black, which leads to the weird blue color. this happens before even tone mapping. I'll investigate it in a bit. Also, I fixed another bug related to the new code that specifically affects the first few frames after a seek - but that one wouldn't cause any clipping.

Haasn, is there something in particular you'd like to test for? I'll be home in a few hours and I'll be able to test.

Found the culprit, it's due to the gamut reduction, which brings some values into the negative range. To fix it, we need to perform tone mapping before gamut reduction - while this can (and will) still introduce clipping as part of the gamut reduction, this is known and pretty much unavoidable.

The previous code's idea of trying to compensate for gamut reduction via tone mapping was, to put it simply, not correct. I've fixed it (not pushed yet).

That also explains why some of these issues went away when I was using the ICC profile (as I almost always do), since LittleCMS does a much better job reducing the gamut compared to our built-in gamut adaptation code - and more importantly - the ICC profile happens after tone mapping.
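The negative values mentioned here are easy to reproduce outside of mpv. The sketch below applies the commonly published linear-light BT.2020 to BT.709 matrix (coefficients rounded to four decimals) to a fully saturated BT.2020 colour. The function names and the hard clip are illustrative only and are not meant to represent mpv's gamut adaptation code.

```python
# Linear-light BT.2020 -> BT.709 primaries conversion (rounded coefficients)
BT2020_TO_BT709 = (
    (1.6605, -0.5876, -0.0728),
    (-0.1246, 1.1329, -0.0083),
    (-0.0182, -0.1006, 1.1187),
)


def bt2020_to_bt709(rgb):
    """Multiply a linear RGB triple by the conversion matrix."""
    return tuple(sum(m * c for m, c in zip(row, rgb)) for row in BT2020_TO_BT709)


def clip01(rgb):
    """Naive gamut reduction: clamp every channel to [0, 1]."""
    return tuple(min(max(c, 0.0), 1.0) for c in rgb)


if __name__ == "__main__":
    saturated_green = (0.0, 1.0, 0.0)  # in gamut for BT.2020, outside BT.709
    converted = bt2020_to_bt709(saturated_green)
    print("converted:", converted)     # red and blue come out negative
    print("clipped:  ", clip01(converted))
```

Any curve that assumes non-negative input misbehaves on such values, which is one way to see why running the tone mapping before the gamut reduction is the safer order.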

Also, I'd like to point out that this flame thing is slightly blue in the original source file. This is what it looks like using only linear scale adjustments (no tone mapping whatsoever):

This test raises an interesting question of how we want to balance the desaturation. This is what it looks like using the new default settings (+ the bug fixed):

This is what it looks like if I bump up the desaturation strength to 1.0:

And finally, this is what it looks like if I also drop the desaturation exponent to 0.0:

The default settings do make the blue flame kind of funny looking, but as you can also see, it's sort of in the source to begin with. So in a way, it's more faithful to the file, which to me seems like a good reason to keep it that way.

That said, mad max was almost surely not mastered on hardware actually capable of 10,000 nits, and the mastering engineer probably tuned this color based on what they saw on their screens. If their screen did desaturation tone mapping to reasonable brightness levels, then they would have shipped the file with that weird blue tint without realizing it? Speculation at this point...

@Doofussy2 : Just wanted to second your positive experience with "hable" as the tone mapping function - on my LG OLED TV, HDR content is also displayed by the TV-internal player very much like mpv looks with "hable" - with the TV's internal player however being much worse with regards to banding artefacts, that mpv does not suffer from.

@haasn my preference would be desaturation 1.0 (the third picture), though I'll be honest, I can't see any difference between 2 and 3. The last one is losing too much color around the lightning.

Just testing --tone-mapping=mobius --hdr-compute-peak=yes when playing Interstellar. The results are good. Definitely brighter than hable. Using --tone-mapping-param=0.1 didn't tone it down, noticeably. Mobius is a little too bright for me, but I don't see anything negative happening to the image. And it's a huge improvement over what we had, before. I think that could easily be the default.

I'm unsure if we should take example from HDR TVs, they're tonemapping too and from what I've heard their algorithms aren't particularly impressive, results vary wildly between manufacturers and even models within a product line. From what I've read many people online prefer to use madvr instead of their TVs internal tonemapping solution for a superior result.

Do you mean TV shows, like Netflix? Or HLG broadcast?

I mean, as I understand it, HDR TVs do not display a "true" result,

See this quote:

"Well, this might be splitting hairs, but I already disagree with the wording you're using. When you say "the HDR version" that sounds as if what your OLED shows is to be considered to original reference HDR version, but it's not.

I know it's something people need to wrap their head around first. But official HDR displays are not really all that different from old SDR displays. The key difference is that official HDR displays have a firmware which supports tone mapping. There's no secret sauce. The display's aren't physically built in a different way, like different OLED materials or something. It's all just tone mapping in the firmware. Ok, maybe manufacturers are pulling some extra things to squeeze a bit more brightness out of their technology, so they don't have to tone map as much (as they would otherwise have to). But still, in the end the key thing an official HDR display has is just a firmware which supports tone mapping.

So if you let madVR convert HDR video to SDR, basically you're comparing madVR's tone mapping algorithm to LG's tone mapping algorithm. If you fully embrace that fact, it should be easy to understand that we can't start with the assumption that LG implemented a perfect tone mapping algorithm, and madVR did not. Actually, I'm hearing from various insiders lots and lots of complaints about the very bad quality of the tone mapping implementations of most TVs out there today.

So in the same way you wouldn't assume that your OLED must be better at upscaling compared to madVR (for whatever reason), you also shouldn't assume that your OLED must be better at tone mapping compared to madVR."

@HyerrDoktyer Is MPV Player able to output wide gamut color directly to a HDR TV while using its tone mapping algorithm on Linux?

I believe so, but I'm not entirely sure; perhaps this issue may help: https://github.com/mpv-player/mpv/issues/5521

Regarding Mad Max, it seems that most if not all of the explosions in this film suffer from this "blue" effect,

--target-trc=linear

I do like the way other tonemapping methods handle these oddities, they seem to output bright yellow/white in these areas and I think the end result is far more realistic

default:

madvr:

VLC:

The key difference is that official HDR displays have a firmware which supports tone mapping.

I don't think this is the full story. The author is making the assumption that the display in HDR mode is just the same as the SDR mode except with a different transfer function. But for example, my "HDR" display (i.e. IPS display with FALD) makes a very big distinction: It will not activate the FALD algorithm in "SDR" mode, so it's literally just a normal SDR display (1000:1 contrast). Many other displays based on FALD technology will have similar limitations in SDR mode - you need to put them into HDR mode to unlock the extra dynamic range. More importantly, no HDR display that has a white point significantly higher than the SDR levels is going to enable its full brightness range in SDR mode. That would just be insane, since SDR signals are not designed to be displayed at >1000 cd/m^2, and the displays are similarly unequipped to handle such signals. The only real exception here is OLED displays, since OLED is high dynamic range by design, and most OLED displays typically max out at something like 400 cd/m^2 anyway, so they just treat SDR and HDR as the exact same thing. So this post is basically only true when talking about OLED displays exclusively.

Also, tone mapping HDR->HDR is very different from tone mapping HDR->SDR, in my opinion. In the HDR->HDR case, you have a display that has essentially the same standard range capabilities as the reference display, but a different peak brightness - you can preserve faithfully all standard range content while only adjusting the levels of the peaks. (This is what e.g. "mobius" is designed to do. In fact, for a HDR output display, mobius is 100% accurate for standard range content). This is also what BT.2390 talks about, and what your display is doing internally. This is also comparatively easy to do. The difficult part is tone mapping HDR->SDR, because you need to make sacrifices. Since you only have the "standard range" available, you have to throw out some of the standard dynamic range so you can fit room for the highlights. This is what "hable" or "reinhard" are designed to do.

In my opinion, the correct comparison when discussing the HDR->SDR tone mapping algorithm is between the HDR and SDR versions of the same source material, and only where the SDR version was graded by a human (rather than an automatic tone mapping algorithm). This is because SDR mastering in the studio involves making the same tradeoffs that we are trying to recreate in our HDR->SDR tone mapping algorithm.

(And for HDR->HDR tone mapping, you can just turn off peak detection, set the curve to mobius, configure the --target-peak and be done with it.)
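For readers who want to see what "throwing out some of the standard range" looks like numerically, here is a small sketch of the two HDR->SDR style curves named above. It assumes the widely published Hable ("Uncharted 2") filmic constants and the simplest form of Reinhard, both rescaled so that the source peak lands on 1.0. Whether mpv uses exactly these constants is an assumption on my part, and the function names are made up for the example.

```python
def hable_raw(x):
    """Hable's filmic curve with the commonly quoted constants."""
    A, B, C, D, E, F = 0.15, 0.50, 0.10, 0.20, 0.02, 0.30
    return (x * (A * x + C * B) + D * E) / (x * (A * x + B) + D * F) - E / F


def hable(x, peak):
    """Hable curve normalised so that the source peak maps to 1.0."""
    return hable_raw(x) / hable_raw(peak)


def reinhard(x, peak):
    """Simple Reinhard x / (x + 1), rescaled so that the source peak maps to 1.0."""
    return x / (x + 1.0) * (peak + 1.0) / peak


if __name__ == "__main__":
    peak = 10.0  # source peak relative to the output maximum (assumed value)
    for x in (0.1, 0.25, 0.5, 1.0, 5.0, 10.0):
        print(x, round(hable(x, peak), 3), round(reinhard(x, peak), 3))
```

Unlike a linear-with-knee curve, both of these already alter the mid-tones, which is the trade-off described in the paragraph above.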

I do like the way other tonemapping methods handle these oddities, they seem to output bright yellow/white in these areas and I think the end result is far more realistic

Are those mpv screenshots taken with commit b29e4485c? As I already pointed out, there was an underflow on this scene due to the combination of the explosion being very bright and out of gamut, which makes this "blue" effect much worse than it's supposed to be, even in the source. (note: you can work around it by setting --target-prim=bt.2020, but this will skip gamut mapping so the colors will be undersaturated compared to bt.709)

And yes, I think that for this clip, the only explanation I have is that the mastering engineer in charge of grading these samples was relying on his/her own display cancelling out the blue spots. If you look at the source, the MaxCLL is 9918 cd/m^2 (and we can certainly agree that this movie has insane brightness levels), while the mastering display metadata says it was mastered on a display with only 4000 cd/m^2 luminance. So without a doubt, the mastering engineer was seeing a tone mapped version. (Clear evidence that they fucked up, you should never master to levels that exceed your own display's capabilities for precisely this reason...)

Fortunately, that actually gives us a way to work around it (and movies like it): We could tone map twice; once using the fully desaturated, dumb/naive "TV-style" tone mapping algorithm, to bring it from the MaxCLL levels (9918 nits) down to the mastering levels (4000 nits). Then we can tone map a second time to bring it down from the mastering levels to the display levels (i.e. 100 nits for an SDR display), and the second time around we can use the full tone mapping algorithm in its chromatically accurate / content-adaptive mode.
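The two-pass idea can be sketched on luminance alone. The example below uses an extended Reinhard roll-off as a stand-in for both stages, purely because it maps a chosen source peak exactly onto a chosen target peak; the actual proposal uses a naive desaturating curve for the first pass and the full content-adaptive algorithm for the second, and none of the chroma handling is modelled here. Function names are hypothetical; the nit values are the ones quoted above.

```python
def compress(nits, src_peak, dst_peak):
    """Extended Reinhard roll-off that maps src_peak exactly onto dst_peak (all in nits)."""
    l = nits / dst_peak
    lw = src_peak / dst_peak
    return dst_peak * l * (1.0 + l / (lw * lw)) / (1.0 + l)


def two_stage(nits, max_cll=9918.0, mastering_peak=4000.0, display_peak=100.0):
    """Pass 1 simulates the mastering display; pass 2 maps that result to the real display."""
    simulated = compress(min(nits, max_cll), max_cll, mastering_peak)
    return compress(simulated, mastering_peak, display_peak)


if __name__ == "__main__":
    for nits in (1.0, 100.0, 1000.0, 4000.0, 9918.0):
        print(nits, round(two_stage(nits), 2))
```

The point of the first pass is only to reproduce what the mastering engineer would have seen on a 4000 nit display, so that the second pass starts from the image they actually signed off on.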

In my opinion, the correct comparison when discussing the HDR->SDR tone mapping algorithm is between the HDR and SDR versions of the same source material

Would a clip of the SDR version of Mad Max be helpful? Or do you already have your hands on that?

Are those mpv screenshots taken with commit b29e448?

No, they were taken with shinchiros latest build

I would prefer to compile my own builds so I can test these patches as you push them but I've had nothing but trouble cross compiling; probably PEBCAK more than anything. I had no issues compiling on a liveUSB but that's obviously a temporary solution and unfortunately as of now I don't have a more permanent environment.

MSYS2 repos are satan I can't even get 1KB/s from them, it took over 4 hours to get the dependencies

Would a clip of the SDR version of Mad Max be helpful? Or do you already have your hands on that?

It would!

No, they were taken with shinchiros latest build

Okay. Well, I made screenshots of a different frame with that bug fixed. You can compare it against that one if you want. But we already know what the madVR result will be, since we can more or less recreate it ourselves by setting the desaturation exponent to 0. (It should be the same as the bottom screenshot in my post)

I had to cut the start to save on filesize, so it won't be frame accurate; sorry.

https://0x0.st/sRmM.mp4

New build to test:

mpv-x86_64-20190103-git-b29e448.zip

Okay, I made some comparisons between the HDR and SDR versions of Interstellar:

- frame 1: HDR SDR

- frame 2: HDR SDR

- frame 3: HDR SDR

- frame 4: HDR SDR

- frame 5: HDR SDR

- frame 6: HDR SDR

- frame 7: HDR SDR

- frame 8: HDR SDR

- frame 9: HDR SDR

These are all with the new default tone mapping settings.

Some observations:

- We still kill more of the bright details than the SDR version. And for the non-bright scenes, we're actually brighter than the reference. So if anything, we're not darkening the scene enough. Mobius would definitely be a bad idea on this source, since it kills even more.

- The HDR version seems to be more green-tinted, and also has a different crop, compared to the SDR version I have. This seems to be in the source; I can't get rid of it by using different tone mapping settings. Most likely we should ignore this.

Overall I'm relatively okay with how this turned out.

To recover some of that detail in the ultra bright scenes we'd really need to start selectively expanding the dynamic range in addition to just darkening it. The problem is that the source content is very "flat", i.e. the SDR version has a greater contrast than the HDR version (ironically). Most likely the mastering engineers decided to boost the contrast of these scenes to bring out the details more.

In theory we could detect such "flat" scenes and boost the contrast dynamically ourselves. But I won't open that can of worms.

The HDR version seems to be more green-tinted, and also has a different crop, compared to the SDR version I have. This seems to be in the source; I can't get rid of it by using different tone mapping settings. Most likely we should ignore this.

From my experience, this seems like the correct decision. Most HDR releases have a slight tint to them (usually yellow)

The Big Lebowski is probably the most egregious example I can think of

SDR (Old BD):

HDR:

SDR (Old BD):

HDR:

Comparing the mad max clip however, it seems like "mobius" matches the SDR version very well in most scenes, but in other scenes (especially bright ones), "hable" matches the SDR version better. Again, it's hit and miss. I think both are passable, but I would stick with "hable" personally.

I'll make some four-way comparisons between the HDR version as-is, the SDR version, the HDR version with mobius, and the HDR version with the "simulated mastering display" I described earlier; once I get around to implementing that.

@HyerrDoktyer sounds like a hollywood trick to increase sales of the HDR blu-ray by artificially exaggerating the difference between the two

Potentially, there's a huge amount of resale value with these new releases (HDR10/HDR10+/DV) and I'm sure they're well aware of it.

But the point is that a colour shift is basically expected when viewing HDR releases

Okay so, regarding the comments on your pull request

Is --tone-mapping-max-boost useful? Does it help on dark scenes? Or is it more annoying?

I think Annihilation is an interesting sample as it is basically the opposite of Mad Max, in the sense that it's incredibly dark, so dark in fact that there's almost no detail in many shadows

Here's some comparisons, defaults vs --tone-mapping-max-boost=1.5

https://imgbox.com/g/uGCpUy37oq

Nice improvement

Setting --hdr-peak-decay-rate=50 seems to be very effective to eliminate pulsating in most cases, I wouldn't set it lower.

I couldn't notice any differences in terms of pulsating when changing --hdr-scene-threshold-low/high. Actually, I couldn't notice any difference at all, at least not without comparison screenshots.

In the dark Chasing the Light water scene which I posted above, --tone-mapping-max-boost didn't show any effect.

Instead, I personally prefer setting --tone-mapping-target-avg=0.5, it seems to brighten up dark scenes more reliably and I personally prefer that value anyway since I find the default result of peak detect a bit too dark.

I also agree that Hable looks best with peak detect, Reinhard looks too saturated.

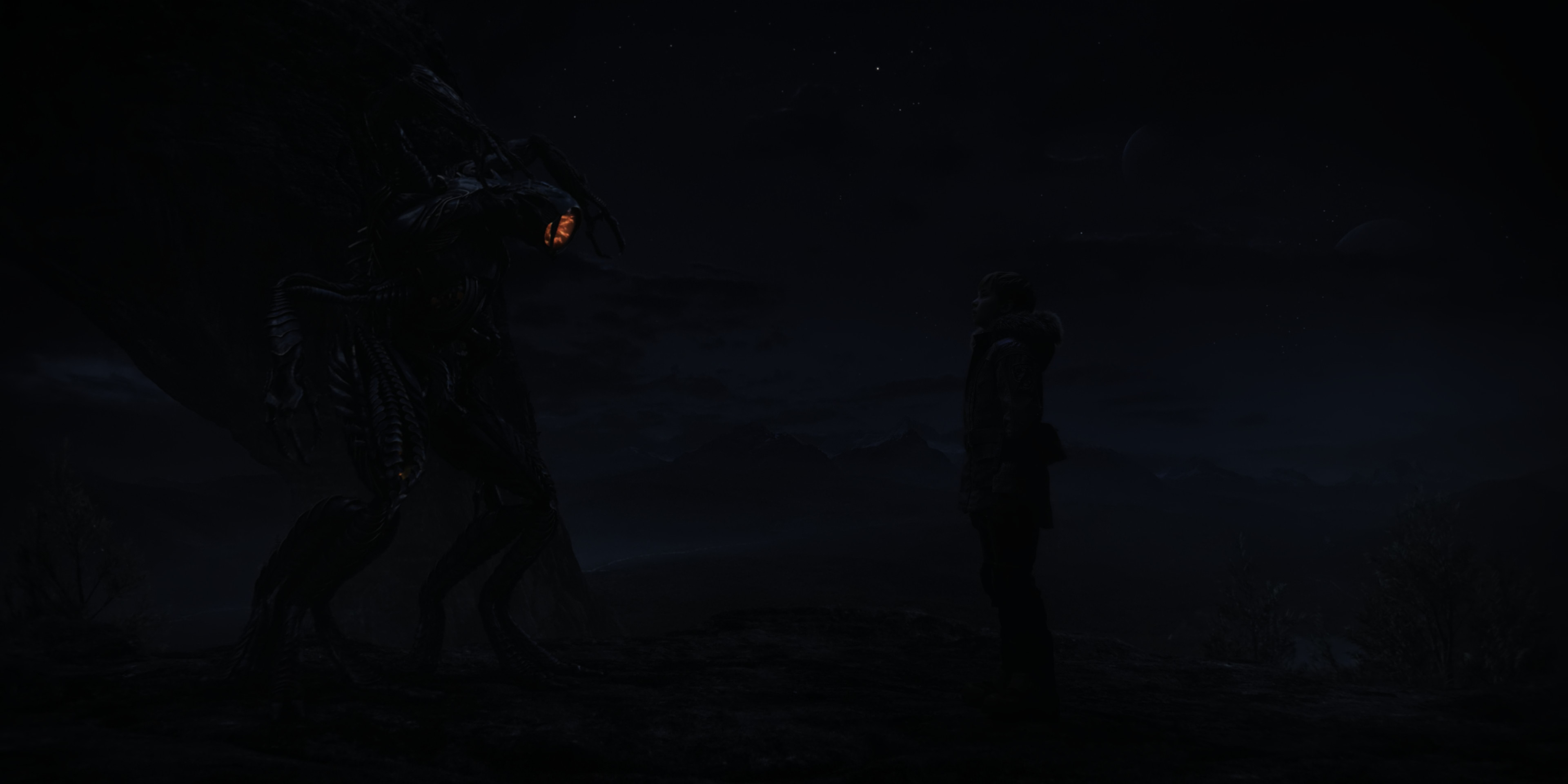

Yeah I saw the annihilation sample @lachs0r posted, stupidly dark. But do we have an SDR version of the movie? Being a horror movie and all, maybe they _want_ it to be so dark you can't see shit?

Give me 30 mins, and the answer is probably yes; they probably want it to be stupidly dark

Is --tone-mapping-target-avg useful? Or is the built-in default of 0.25 good enough?

Perhaps I'm misunderstanding the purpose of this setting but I'm not seeing any advantages over tone-mapping-max-boost

However, I can say that I've found the output from mpv to be a tad dark, so perhaps raising the default to 0.30/0.35 as you suggested may be a good idea (although the difference is very subtle)

In those two scenes, there is still some flickering observable:

0.27min:

https://abload.de/img/0.2788f2j.jpg

1.28min:

https://abload.de/img/1.28ntdd0.jpg

Not even setting hdr-peak-decay-rate=100 prevents this.

There is also always some visible brightness adjustment going on during the first second of playback of the LG and Samsung demos. However, this is not the case with the World in 4k video, and it's probably not very important.

Regarding brightness:

--tone-mapping-target-avg=0.5:

https://abload.de/img/0.57jf5q.png

--tone-mapping-max-boost=10:

https://abload.de/img/10fodsz.png

Sorry took longer than expected (updated with fixed comparisons (some were 1 or 2 frames off))

https://imgbox.com/g/JLBu9OxsRD

Stupidly dark:

https://imgbox.com/g/7mmBECRTdS

Annihilation SDR vs HDR (defaults)

The SDR BD is slightly overexposed, so I'm unsure how valid a sample this is. This also raises other questions about the validity of using SDR Blu-rays as a comparison, although the argument could easily be made that such releases are merely edge cases.

Perhaps I'm misunderstanding the purpose of this setting but I'm not seeing any advantages over tone-mapping-max-boost

--tone-mapping-target-avg tells mpv what average brightness you want the result to have. --tone-mapping-max-boost tells mpv how much it's allowed to boost the brightness of dark scenes in order to _hit_ this target.

To use an example, if the actual measured average brightness is 0.22 in the scene, then even with --tone-mapping-target-avg=0.8, it wouldn't alter the scene at all (if the max boost is 1.0), since that means 0.22 is the upper limit on how bright it's allowed to make the scene. But if you set the max boost to, say, 3.0, then the net result is that this scene would get tone mapped to average brightness 0.66 (which is still below your target)
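The arithmetic in that example can be sketched in a few lines of Python. This is only a simplified model of how the two options interact as described above, not mpv's actual shader code; the function name `boosted_average` and its parameters are made up for illustration.

```python
def boosted_average(measured_avg, target_avg, max_boost):
    """Simplified model: scale the scene towards the target average,
    but never by more than the allowed boost factor."""
    # Gain that would be needed to hit the target exactly.
    wanted_gain = target_avg / measured_avg
    # The boost limit caps how far the scene may be brightened.
    gain = min(wanted_gain, max_boost)
    return measured_avg * gain


# Scene measured at 0.22, target 0.8 (the numbers from the example above).
print(boosted_average(0.22, 0.8, max_boost=1.0))  # stays at 0.22
print(boosted_average(0.22, 0.8, max_boost=3.0))  # roughly 0.66, still below 0.8
```

In this model the target only decides how much gain is wanted, while the boost limit decides how much of it is actually granted.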

@aufkrawall On what timescale do you notice this flicker? And what do you mean by "flicker"? Does it look like a "sparkling" of the peak brightness, or does it look like a smooth transition / gradual shift?

Not even setting hdr-peak-decay-rate=100 prevents this.

Then you probably need to increase hdr-scene-threshold-low. The peak decay rate is just for smoothing out high frequencies, the scene threshold is what primarily controls how it changes over time.

HDR/SDR samples

Light:

https://0x0.st/sRML.mp4

https://0x0.st/sRMe.mp4

Dark:

https://0x0.st/sRMt.mp4

https://0x0.st/sRM9.mp4

Both:

https://0x0.st/sRMy.mp4

https://0x0.st/sRMJ.mp4

Light2:

https://0x0.st/sRMw.mp4

https://0x0.st/sRMv.mp4

@HyerrDoktyer Does mobius end up closer to the SDR version? If we want to tune max-boost based on this movie, then first we need to make sure our levels agree for "non-dark" scenes (i.e. bright, outdoors scenes).

That being said, maybe they intentionally over-exposed the SDR version because the movie is so dark on average? (Although that raises the question of why they did it for the very bright scenes as well) If so, then maybe we should use precisely this over-exposing factor as our choice of max-boost; based on the rationale that a very dark movie like this was boosted up by the same ratio by the mastering engineers.

Does mobius end up closer to the SDR version

Yes, it results in a shockingly similar result

SDR:

tone-mapping=mobius

Defaults for good measure:

Here's a good example of why I'm hesitant to consider the SDR BD as a valid sample

SDR

HDR:

SDR:

HDR:

Check out those skin tones

SDR:

HDR:

(these were all taken with the default config, tone-mapping=mobius replicates these overblown highlights)

@HyerrDoktyer your first link is corrupt for me (truncated?). The others work fine.

mobius is indeed right on the money here. I suspect they used a mobius-style tone mapping for their own grading process. No additional "max boost" needed. But, some food for thought: if we stick with the hable default, maybe we should tune max-boost to precisely the difference in brightness between hable and mobius for dark scenes like this? That way, we can stick to hable without losing dynamic range on very dark scenes. Best of both worlds?

FWIW, experimentally, I arrived at a value of 3.0 for these scenes. hable + 3.0 max boost actually looks as good / better than mobius.

@haasn It's a local flickering (rapid dimming?) which is mostly noticeable in the skies. It doesn't seem to be related to scene changes, at least it doesn't look like that. I initially suspected deband, but that's not the case, and it does indeed seem to be caused by peak detection. I raised --hdr-scene-threshold-low to 200, but that didn't change it. Increasing --hdr-peak-decay-rate already mitigates the effect a lot, but not completely for every scene.

I suppose the ultimate goal would be that the peak detection adaptation is not visible when scene changes occur. Which makes me wonder if this is possible with a "fine grained" differentiation between every scene?

@haasn Do you mean my samples? They seem to be working for me but try this: https://0x0.st/sRuy.mp4

If you mean any of my images, I also checked those and I'm not having any issues loading them

maybe we should tune max-boost to precisely the difference in brightness between hable and mobius for dark scenes like this? That way, we can stick to hable without losing dynamic range on very dark scenes. Best of both worlds?

This sounds like an interesting solution, some more darker samples would be nice.... I'll see if I can find any

FWIW, experimentally, I arrived at a value of 3.0 for these scenes. hable + 3.0 max boost actually looks as good / better than mobius.

Yeah I've been playing around with max boost and it was producing some very nice results with this film.

Btw, I noticed that --sigmoid-upscaling + HDR can explode catastrophically sometimes; for some reason only when ICC profiles are enabled. I can fix it either by bumping up the FBO format to 32-bit float, or by clamping in the sigmoid function.

But maybe we should just disable sigmoid upscaling for HDR content entirely? (That's what I ended up doing in libplacebo, but mostly due to other considerations)

I suppose the ultimate goal would be that the peak detection adaptation is not visible when scene changes occur. Which makes me wonder if this is possible with a "fine grained" differentiation between every scene?

Lowering --hdr-scene-threshold-high will put an upper bound on the amount of "adaptation" due to a false negative in the scene change detection. If you're bumping up the decay rate then I'd try lowering the high scene threshold to compensate.

Yeah I've been playing around with max boost and it was producing some very nice results with this film.

Funnily enough, there are some details that simply seem to be missing in the HDR version of these clips. Especially some of the details in the shadows are present in the SDR grade but completely gone in the HDR version (not even in the source file). (I wonder if this was due to the encoder dropping these details during quantization since it was not equipped to handle such small brightness differences?)

This is the source frame without any transfer function being applied, and the contrast/gamma/brightness greatly boosted:

This is the same frame in the SDR grade, also greatly enhanced:

It has way more details in the dark parts of the image. I noticed this in quite a few other scenes as well. I guess both the HDR version and the SDR version of this movie are just outright terrible.

Edit: Wait a minute, the HDR clips have x265 metadata. So these are re-encodes from the blu-ray source? Can you remux them instead? It's possible the re-encode is what clipped the black levels.

@haasn Never mind, false alarm. I completely darkened the room and watched the scenes again without peak detection. The flickering seems to be in the material itself; that YT video is hopelessly bitrate-starved. Maybe peak detection just makes it a bit more pronounced.

But I noticed that increasing --hdr-peak-decay-rate to 100 eliminates the "initial pulsing" of the LG Chess demo. Do you think that value might show problems? The default value definitely isn't optimal as it shows a lot of brightness pulsing in those demo vids.

I also tried various scene-threshold values; I guess they are fine as they are by default.

Setting the peak decay rate too high might cause the adaptation to become "too gradual" for shifts of less than the low threshold. A decay rate of 100 (frames) means that it will take a good 1-3 seconds for the value to stabilize after a sudden shift. But OTOH, maybe such a gradual shift serves to hide the adaptation more than anything?
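To get a feel for how the decay rate translates into adaptation time, here is a rough Python sketch. It assumes the smoothing behaves like a simple first-order filter that moves 1/decay_rate of the remaining distance on every frame; that filter shape, the function name `frames_to_cover` and the `fraction` parameter are all assumptions for illustration and are not taken from mpv's code. It also ignores the scene thresholds entirely.

```python
def frames_to_cover(decay_rate, fraction):
    """Count frames until a first-order filter has covered `fraction`
    of a sudden step in the measured value (assumed filter model)."""
    smoothed, target, frames = 0.0, 1.0, 0
    while (target - smoothed) > (1.0 - fraction):
        # Each frame closes 1/decay_rate of the remaining gap.
        smoothed += (target - smoothed) / decay_rate
        frames += 1
    return frames


for fps in (24, 60):
    n = frames_to_cover(decay_rate=100, fraction=0.5)
    print(f"{fps} fps: half of the shift covered after {n / fps:.1f} s")
```

Under this model, how long the adaptation remains visible depends strongly on the frame rate and on how close to the final value counts as "stable".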

Might be worth a try going with a larger value by default. I can play around with some clips tomorrow, and maybe also bump up the option limit. (I assumed 100 would be absurd, but after thinking about it, I guess not really. 1000 would be absurd)

I can't, at least right now - but I can confirm that it's not a problem with the encode; the raw BD also suffers from the same clipped blacks.

All 3 releases of this film are pretty bad (WEB/BD/UHD), it's just a shit release

SDR raw BD: https://0x0.st/sRSZ.png

HDR raw BD: https://0x0.st/sRSK.png

Encode: https://0x0.st/sRSq.png

Colours are slightly different because a different tonemapping solution was used for the source image but you get the idea. I won't provide any flawed samples such as a bad encode.

As a side note: madVR also shows a bit of pulsing with peak detection enabled at the beginning of the Samsung demo. However, it nicely manages to keep a steadier brightness during scenes where the sun intensity is insane, like the water surface with the geese that I posted a screenshot of. I suppose the tonemapping boost somehow missed out on them.

I'll provide some Interstellar samples tomorrow. There are a few scenes with pulsating light levels (although it's not particularly severe), and a significant number of scenes with major light shifts

Another crazy thought, what if in addition to tracking the highest brightness in the scene, we also track the lowest brightness in the scene; and then use this information to adjust the exposure window size? That way the "very bright" scenes will end up getting tone-mapped down to a set black level (which for SDR displays would just be 0.0, and for HDR displays might be something like 0.001). Although it would break on black bars in the video..

Assuming that black bars are always the same black level, could it be tweaked so that they're ignored completely? Just throwing around ideas

If there doesn't seem to be any way to mitigate that, then I don't think it would be a good idea; it's no longer uncommon for films to have multiple ARs (Dark Knight/Interstellar/Mission Impossible/etc.)

Turns out it's a false alarm here regarding flickering: what I was seeing seems to be in the source material and not caused by mpv (I wonder if this is a common theme with HDR films/demos), and it seems to occur mostly with extreme DOF effects. I also could only find a single scene in the entire film where compute peak dramatically reduces brightness levels (same scene as before, but this time I have an actual sample):

https://0x0.st/sRLW.mp4 (This is from 2:39:15 in the film if you'd like to compare to SDR)

That being said the rest of the film is basically perfect (great work @haasn !)

On my end that clip seems to match the SDR version (which I just rewatched, great movie!) very well, apart from the inescapable differences in tint. I also definitely think that bumping up max-boost by default is a bad idea, since on this scene it makes it much brighter than before. (Whereas the levels are spot-on before). For such a big combined change as that PR ended up being, it's also better to be more conservative by default.

So all in all I'm very happy with the new defaults as-is. I think I'm going to squash them and also start work on reimplementing this in libplacebo.

We can take up the topic of the mad max clip and "simulating the mastering TV" question at another point in time, since so far that's the only movie I've seen where the light levels are mastered to levels above what their own mastering display could handle.

I'll also drop the --target-avg commit for now, mainly to avoid swamping the user with too many new options at once.

Fantastic, thank you. I believe everything that I've outlined in my initial issue has been dealt with and I think we've covered just about everything that's come up since

We can take up the topic of the mad max clip and "simulating the mastering TV" question at another point in time, since so far that's the only movie I've seen where the light levels are mastered to levels above what their own mastering display could handle.

You may find this helpful: https://docs.google.com/spreadsheets/d/15pPvBMCjJogKxt4jau4UI_yp7sxOVPIccob6fRe85_A/edit#gid=184653968

This is not my list but I can get my hands on samples for most of these

There is actually one issue I still have, and I don't quite know how to solve it without reading several frames ahead. Basically the problem is that due to the smoothing of the peak coefficient, a sudden spike in the peak frame brightness may exceed our smoothed version of this metric, resulting in clipping.

It's possible we need to use more aggressive smoothing for the average than for the peak brightness. We could try splitting up the decay rate into peak and average decay.

But for a curve like "hable", this might introduce flickering of its own. Have you observed any clipping as a result of the peak smoothing? (i.e. clipping that only happens when --hdr-peak-decay-rate is high, but goes away when you set it to 1.0)
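The clipping problem described above can be illustrated with a small Python simulation. It assumes the same kind of simple per-frame smoothing (each frame moves 1/decay_rate of the way towards the new peak), which is an assumption rather than mpv's actual implementation, and it leaves out scene-change detection. The helper names and the sample peak values are hypothetical.

```python
def smoothed_peaks(frame_peaks, decay_rate):
    """Smooth per-frame peak values with an assumed first-order filter."""
    smoothed = frame_peaks[0]
    result = []
    for peak in frame_peaks:
        smoothed += (peak - smoothed) / decay_rate
        result.append(smoothed)
    return result


def clipped_frames(frame_peaks, decay_rate):
    """Indices of frames whose real peak exceeds the smoothed peak,
    i.e. frames where highlights would clip in this model."""
    smoothed = smoothed_peaks(frame_peaks, decay_rate)
    return [i for i, (p, s) in enumerate(zip(frame_peaks, smoothed))
            if p > s + 1e-9]


# Hypothetical scene: steady peak, then a sudden bright spike.
peaks = [1.0] * 5 + [4.0] * 3
print(clipped_frames(peaks, decay_rate=1.0))   # no lag, so nothing clips
print(clipped_frames(peaks, decay_rate=20.0))  # the spike frames clip
```

With a decay rate of 1.0 the smoothed value follows every frame exactly, which is why the clipping disappears in that case.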