Models: struct2depth: Prediction quality drops sharply when inferring with img_width and img_height

System information

- What is the top-level directory of the model you are using: models/research/struct2depth

- Have I written custom code (as opposed to using a stock example script provided in TensorFlow): No

- OS Platform and Distribution (e.g., Linux Ubuntu 16.04): Ubuntu 18.04

- TensorFlow installed from (source or binary): binary

- TensorFlow version (use command below): 1.12.0

- Exact command to reproduce: `python3 inference.py --input_dir /path/to/kitti/2011_09_26/2011_09_26_drive_0005_sync/image_03/data/ --output_dir /path/to/output/ --model_ckpt /path/to/kitti-model/model-199160 --img_height 275 --img_width 1242`

I am using the pretrained KITTI model on a video from the KITTI dataset. When I run inference.py, the output images are generated fine, but they are shrunk to 416x128.

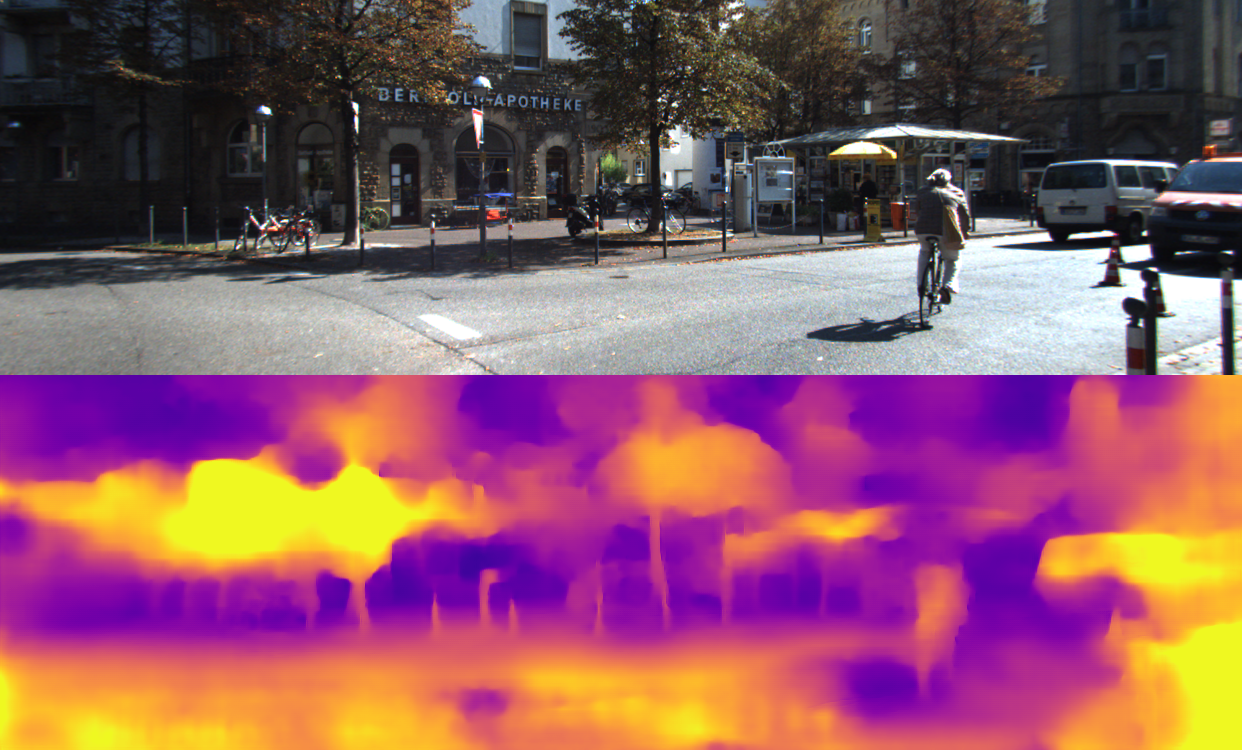

When I set the dimensions explicitly (`--img_height 275 --img_width 1242`), the output dimensions are correct, but the quality drops considerably. Here is a random frame as an example:

Without explicit dimensions:

With explicit dimensions:


@aneliaangelova Can you please take a look? Thanks!

I'll take a look!

Hi,

The model is not expected to generalize to such large changes in image resolution.

We expect that training at a larger resolution and testing at a smaller one will work fine, and testing at a slightly larger resolution should be fine too. But in your case you are increasing the width by about 3X and the height by about 2X, so the model does not have the information to generate outputs at that scale.
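
As a quick check of the scale-up described above, the sketch below compares the requested size with the 416x128 size that inference.py produced by default in this report. Treating 416x128 as the checkpoint's training resolution is an assumption, and `scale_factors` is a hypothetical helper.

```python
from typing import Tuple

# Assumption: the pretrained KITTI checkpoint was trained at 416x128,
# the size inference.py produced by default in this report.
TRAIN_W, TRAIN_H = 416, 128


def scale_factors(test_w: int, test_h: int) -> Tuple[float, float, float]:
    """Return (width ratio, height ratio, pixel-count ratio) versus the training size."""
    w_ratio = test_w / TRAIN_W
    h_ratio = test_h / TRAIN_H
    return w_ratio, h_ratio, w_ratio * h_ratio


w_ratio, h_ratio, area_ratio = scale_factors(1242, 275)
print(f"width x{w_ratio:.2f}, height x{h_ratio:.2f}, pixels x{area_ratio:.2f}")
# width x2.99, height x2.15, pixels x6.41
```

Each output pixel in the 1242x275 setting therefore covers a much smaller part of the scene than the network saw during training, which is consistent with the explanation above.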

I would recommend:

- reduce the images to sizes closer to the trained resolution

- retrain the model on image sizes closer to the resolutions you expect at test time.
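
One way to follow the first recommendation is to downscale each frame to the trained resolution before inference and, if a full-size map is needed, upscale the predicted depth afterwards. The sketch below assumes 416x128 is the trained resolution; `resize_nearest` is a hypothetical, dependency-free helper (not part of struct2depth), and random arrays stand in for a real KITTI frame and a real prediction.

```python
import numpy as np


def resize_nearest(img: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Nearest-neighbour resize of an HxW or HxWxC array (hypothetical helper)."""
    in_h, in_w = img.shape[:2]
    # Map each output row/column to the source row/column it falls in.
    rows = np.arange(out_h) * in_h // out_h
    cols = np.arange(out_w) * in_w // out_w
    # Advanced indexing on the first two axes keeps any trailing channel axis.
    return img[rows[:, None], cols[None, :]]


rng = np.random.default_rng(0)
frame = rng.integers(0, 256, size=(275, 1242, 3), dtype=np.uint8)  # stand-in frame
small = resize_nearest(frame, 128, 416)          # input at the trained resolution
depth = rng.random((128, 416), dtype=np.float32)  # stand-in for the predicted depth
depth_full = resize_nearest(depth, 275, 1242)     # back to the original frame size
print(small.shape, depth_full.shape)  # (128, 416, 3) (275, 1242)
```

Nearest-neighbour is used only to keep the sketch free of extra dependencies; a bilinear or area resize from an image library would usually give smoother inputs and depth maps.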

Hi,

That makes sense.

I will try to train on the KITTI dataset without shrinking the images.

I'll see whether the training speed is feasible.

Thanks for the reply.

Closing this issue since it's resolved. Feel free to reopen if you still have problems. Thanks!

During inference, the is_training flag is True, which is not correct for batch norm.
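
To illustrate why the flag matters: in training mode, batch norm normalizes with the statistics of the current batch, while in inference mode it should use the moving averages accumulated during training. The numpy sketch below is not struct2depth code. The per-channel NHWC normalization, the omitted scale/offset, and the assumed moving statistics are all simplifications. (In TF 1.x, layers such as `tf.layers.batch_normalization` expose this choice through their `training` argument.)

```python
import numpy as np


def batch_norm(x, moving_mean, moving_var, is_training, eps=1e-3):
    """Normalize an NHWC array per channel (scale/offset omitted for brevity).

    Training mode uses statistics of the current batch; inference mode uses
    the moving averages accumulated during training.
    """
    if is_training:
        mean = x.mean(axis=(0, 1, 2))
        var = x.var(axis=(0, 1, 2))
    else:
        mean, var = moving_mean, moving_var
    return (x - mean) / np.sqrt(var + eps)


rng = np.random.default_rng(0)
# A single test image (batch size 1) whose statistics differ from the training data.
x = rng.normal(loc=5.0, scale=2.0, size=(1, 4, 4, 3))
moving_mean, moving_var = np.zeros(3), np.ones(3)  # assumed training statistics

y_train_mode = batch_norm(x, moving_mean, moving_var, is_training=True)
y_infer_mode = batch_norm(x, moving_mean, moving_var, is_training=False)
# The two modes disagree, so leaving is_training=True changes the predictions.
print(np.abs(y_train_mode - y_infer_mode).max())
```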

And another bug: the regularization loss is not added to total_loss.
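
The thread does not show the struct2depth training code, so the following is only a sketch of what "adding the regularization loss" means: the weight penalty must be summed into the objective explicitly. The helpers `l2_penalty` and `total_loss` and the toy weights are hypothetical; the penalty uses the `sum(w**2) / 2` form that `tf.nn.l2_loss` computes. In TF 1.x graphs, the collected penalties can be obtained with `tf.losses.get_regularization_loss()`.

```python
import numpy as np


def l2_penalty(weights, weight_decay):
    """weight_decay * sum over tensors of sum(w**2) / 2 (the tf.nn.l2_loss form)."""
    return weight_decay * sum(0.5 * float(np.sum(np.square(w))) for w in weights)


def total_loss(data_loss, weights, weight_decay):
    # The penalty only affects training if it is added to the optimized loss.
    return data_loss + l2_penalty(weights, weight_decay)


weights = [np.array([1.0, 2.0]), np.array([[3.0]])]  # toy parameters
print(total_loss(1.5, weights, weight_decay=0.01))
```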
