Models: SSDLite Mobilenet v2 and Object Detection Tutorial

Hello,

Before describing my problem, I should mention that the default "Object detection tutorial" notebook runs fine in Jupyter in my environment.

System information

- What is the top-level directory of the model you are using: object_detection

- Have I written custom code (as opposed to using a stock example script provided in TensorFlow): No

- OS Platform and Distribution (e.g., Linux Ubuntu 16.04): Ubuntu 16.04 LTS

- TensorFlow installed from (source or binary): source on the server / binary on the test machine

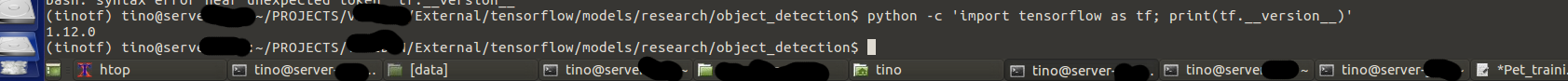

- TensorFlow version: 1.12

- Bazel version (if compiling from source): 0.16.1

- CUDA/cuDNN version: 10.0

- GPU model and memory: Titan X / 12 GB

- Exact command to reproduce:

python object_detection/export_inference_graph.py --input_type image_tensor --pipeline_config_path ${MODEL_DIR}ssdlite_mobilenet_v2_coco.config --trained_checkpoint_prefix ${MODEL_DIR}model.ckpt-50000 --output_directory ${MODEL_DIR}export

or

python object_detection/export_tflite_ssd_graph.py --pipeline_config_path ${MODEL_DIR}ssdlite_mobilenet_v2_coco.config --trained_checkpoint_prefix ${MODEL_DIR}model.ckpt-50000 --output_directory ${MODEL_DIR}export2

Describe the problem

I trained a new model following this official tutorial, but with 2 classes instead of 37, using the ssdlite_mobilenet_v2_coco config and starting training (transfer learning) from the ssdlite_mobilenet_v2_coco_2018_05_09 model.
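Adapting the sample ssdlite_mobilenet_v2_coco.config to this setup mainly comes down to setting `num_classes` to 2 and pointing `fine_tune_checkpoint` at the downloaded checkpoint (the input reader paths and `label_map_path` also have to be filled in). A minimal sketch of those two edits as plain text substitutions; `adapt_config`, the trimmed sample config, and the checkpoint path are hypothetical, and editing the file by hand works just as well:

```python
import re


def adapt_config(config_text, num_classes, checkpoint_prefix):
    """Hypothetical helper: return a copy of a pipeline config with
    num_classes and fine_tune_checkpoint replaced via regexes."""
    # Replace every "num_classes: <int>" field (once, inside the model block).
    text = re.sub(r"num_classes:\s*\d+", "num_classes: %d" % num_classes,
                  config_text)
    # Point fine-tuning at the pre-trained checkpoint prefix. A function is
    # used as the replacement so backslashes in paths are not interpreted.
    text = re.sub(r'fine_tune_checkpoint:\s*"[^"]*"',
                  lambda m: 'fine_tune_checkpoint: "%s"' % checkpoint_prefix,
                  text)
    return text


# Trimmed, illustrative fragment of a pipeline config.
sample = '''model {
  ssd {
    num_classes: 90
  }
}
train_config {
  fine_tune_checkpoint: "PATH_TO_BE_CONFIGURED/model.ckpt"
}
'''

adapted = adapt_config(sample, 2,
                       "ssdlite_mobilenet_v2_coco_2018_05_09/model.ckpt")
print(adapted)
```

The checkpoint value is a prefix (`model.ckpt`), not one of the `.index` or `.data-*` files in the tarball.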

After training, I exported the model in two ways: as "frozen_inference_graph.pb" using the first command and as "tflite_graph.pb" using the second command.

I tried to use both in the example notebook "object_detection_tutorial.ipynb". With the first model, "frozen_inference_graph.pb", no error is raised, but no images are plotted.

With "tflite_graph.pb", I get the following error in the last cell of the notebook:

Num of detections: {}
---------------------------------------------------------------------------
KeyError Traceback (most recent call last)
<ipython-input-49-71b1db50bc10> in <module>
14 image_np_expanded = np.expand_dims(image_np, axis=0)
15 # Actual detection.
---> 16 output_dict = run_inference_for_single_image(image_np, detection_graph)
17 # Visualization of the results of a detection.
18 vis_util.visualize_boxes_and_labels_on_image_array(

<ipython-input-48-0dd68e4f0672> in run_inference_for_single_image(image, graph)
36 # all outputs are float32 numpy arrays, so convert types as appropriate
37 print("Num of detections: " + str(output_dict))
---> 38 output_dict['num_detections'] = int(output_dict['num_detections'][0])
39 output_dict['detection_classes'] = output_dict[
40 'detection_classes'][0].astype(np.uint8)

KeyError: 'num_detections'
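The printed `Num of detections: {}` shows that `output_dict` came back empty. The notebook's `run_inference_for_single_image` appears to fetch only those of `num_detections`, `detection_boxes`, `detection_scores`, `detection_classes` and `detection_masks` (each as `<key>:0`) that exist in the graph, whereas the graph written by `export_tflite_ssd_graph.py` takes `normalized_input_image_tensor` as input and exposes its results as the `TFLite_Detection_PostProcess` outputs. A minimal pure-Python sketch of that lookup, with hypothetical helper names and illustrative subsets of each graph's tensor names, shows why nothing is found:

```python
# Output keys the tutorial notebook looks for (each as "<key>:0").
NOTEBOOK_OUTPUT_KEYS = ("num_detections", "detection_boxes",
                        "detection_scores", "detection_classes",
                        "detection_masks")


def find_notebook_outputs(tensor_names):
    """Return the notebook output keys whose "<key>:0" tensor exists."""
    return [key for key in NOTEBOOK_OUTPUT_KEYS
            if key + ":0" in tensor_names]


def check_outputs(tensor_names):
    """Fail early with a clearer message than the notebook's KeyError."""
    found = find_notebook_outputs(tensor_names)
    if "num_detections" not in found:
        raise ValueError(
            "Graph does not expose the expected detection outputs; "
            "was it exported with export_tflite_ssd_graph.py?")
    return found


# Illustrative subsets of the tensor names in each exported graph.
frozen_graph_tensors = {"image_tensor:0", "num_detections:0",
                        "detection_boxes:0", "detection_scores:0",
                        "detection_classes:0"}
tflite_graph_tensors = {"normalized_input_image_tensor:0",
                        "TFLite_Detection_PostProcess:0",
                        "TFLite_Detection_PostProcess:1",
                        "TFLite_Detection_PostProcess:2",
                        "TFLite_Detection_PostProcess:3"}

print(find_notebook_outputs(frozen_graph_tensors))
# ['num_detections', 'detection_boxes', 'detection_scores', 'detection_classes']
print(find_notebook_outputs(tflite_graph_tensors))
# []
```

With a real graph, the set of names can be collected the same way the notebook does it: `{t.name for op in graph.get_operations() for t in op.outputs}`.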

Could you help me figure out what I am doing wrong?

Thanks.


I have been struggling to train an SSDLite MobileNet v2 for days now. Could you please share your config and the checkpoints you used? See my issue and my question on Stack Overflow.

Previously I tried legacy/train.py but ran into all sorts of problems, even though I have trained Faster R-CNN with it without any issues. The TF team suggested that I try model_main.py, but it has its own issues.

@zubairahmed-ai I used exactly this config file: https://github.com/tensorflow/models/blob/master/research/object_detection/samples/configs/ssdlite_mobilenet_v2_coco.config and the checkpoints from this pre-trained model: download.tensorflow.org/models/object_detection/ssdlite_mobilenet_v2_coco_2018_05_09.tar.gz, and had no problems using the model_main.py script.

I also started with Faster R-CNN without any problems, except when exporting to a TFLite model.

Best.

This is so strange. Please tell me your TF and Keras versions.

Please also tell me the Keras version.


I am not using Keras; I am using TensorFlow directly, built from source. Sorry.

Aha, maybe that's my issue. Let me try this TF version and report back. Thanks.

Thanks, that did the trick: after updating TF to 1.12 and installing the matching cuDNN version, training with model_main.py has started.

Using "frozen_inference_graph.pb" is the right approach. This looks like an issue with object_detection_tutorial.

- Have you correctly replaced the model and labelmap in the notebook?

- Could you try running inference directly with the downloaded frozen graph to see if it works?
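On the label map: for a 2-class model, the `.pbtxt` file the notebook loads needs one `item` per class, with ids starting at 1 (id 0 is reserved for the background) and matching the class ids used when the training records were created. A minimal sketch that generates one; `make_label_map` and the class names are placeholders, since the issue does not say which two classes were trained:

```python
def make_label_map(class_names):
    """Hypothetical helper: build label map text with ids 1..N in order.

    Assumes the names contain no single quotes.
    """
    items = []
    for class_id, name in enumerate(class_names, start=1):
        items.append("item {\n  id: %d\n  name: '%s'\n}\n" % (class_id, name))
    return "\n".join(items)


# Placeholder class names; use the ones from your training data.
label_map_text = make_label_map(["class_a", "class_b"])
print(label_map_text)
```

Saved as, for example, `label_map.pbtxt`, the same file would be referenced by `label_map_path` in the pipeline config and by the notebook's `PATH_TO_LABELS`.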

I used frozen_inference_graph.pb with the right labels, and everything works now. Case closed.

@Arritmic, I believe you have a different issue from @zubairahmed-ai. Please see my comment above and let me know how it goes.

Hi @pkulzc

As I said in the problem description, I tried to use both "frozen_inference_graph.pb" and "tflite_graph.pb" in the Jupyter notebook example, but I could not get it to work with tflite_graph.pb. Last week I finally trained a model based on SSD MobileNet v1; I had no problems converting it to a .tflite file, and I am now using it through the C++ API. However, I have not actually tested its frozen graph or TFLite graph in the example yet. I will do that next week and report back, because I want to use an SSD MobileNet V2 model (or that SSDLite version) instead of the current one, and I want to know whether I am really making a mistake or whether it is some kind of "unsupported math ops" issue.

I probably did not explain it very well, but for now we can close the issue.

Thank you so much
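For context on the tflite_graph.pb part: the graph written by export_tflite_ssd_graph.py is an intermediate graph intended for conversion to a .tflite file, and it contains the custom `TFLite_Detection_PostProcess` op. A sketch that assembles a `tflite_convert` command line for it, assuming the TF 1.x converter CLI flags; the 300x300 input size is an assumption taken from the sample SSDLite config (match it to your `image_resizer`), and the helper name and output file name are hypothetical:

```python
import shlex

# Input/output tensor names written by export_tflite_ssd_graph.py.
INPUT_ARRAY = "normalized_input_image_tensor"
OUTPUT_ARRAYS = ["TFLite_Detection_PostProcess"] + [
    "TFLite_Detection_PostProcess:%d" % i for i in range(1, 4)]


def tflite_convert_args(graph_pb, output_tflite, height=300, width=300):
    """Hypothetical helper: build argv for tflite_convert on tflite_graph.pb."""
    return [
        "tflite_convert",
        "--graph_def_file=" + graph_pb,
        "--output_file=" + output_tflite,
        "--input_arrays=" + INPUT_ARRAY,
        "--output_arrays=" + ",".join(OUTPUT_ARRAYS),
        "--input_shapes=1,%d,%d,3" % (height, width),
        "--inference_type=FLOAT",
        # The post-processing op is a TFLite custom op.
        "--allow_custom_ops",
    ]


args = tflite_convert_args("export2/tflite_graph.pb", "export2/detect.tflite")
print(" ".join(shlex.quote(a) for a in args))
```

The list can be passed to `subprocess.run(args, check=True)`, or the printed line can be pasted into a shell where TensorFlow is installed.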


Closing this issue since it has been resolved. Please reopen the issue if you have any further problems. Thanks!
