Moby: [SWARM] Very poor performance for ingress network with lots of parallel requests

Description

Executing a large number of parallel connections against plain Docker and Docker Swarms leads to 2 completely different perfomance results, with Swarm being the slowest one by a 50x factor!

The test is reproducible (at least on my VMs) easily with Siege and the official Nginx image, but I'm actually experiencing the problem in production with our custom java-based HTTP microservice. I cannot see any obvious error message in Docker logs or kernel logs.

Steps to reproduce the issue:

Run the nginx container:

[root@stresstest01 ~]# docker run -d --rm --net bridge -m 0b -p 80:80 --name test nginx

35c231e361d7e5ca73fb1bcfbeeaf57a066da057b708055477855e6d16af575d

Siege the container, and the results are good, over 13k trans/sec, and CPU in stresstest01 is 100% used by the nginx process.

[root@siege01 ~]# siege -b -c 250 -t 20s -f test_vm_docker.txt >/dev/null

** SIEGE 4.0.2

** Preparing 250 concurrent users for battle.

The server is now under siege...

Lifting the server siege...

Transactions: 260810 hits

Availability: 100.00 %

Elapsed time: 19.03 secs

Data transferred: 140.03 MB

Response time: 0.02 secs

Transaction rate: 13705.20 trans/sec

Throughput: 7.36 MB/sec

Concurrency: 245.51

Successful transactions: 231942

Failed transactions: 0

Longest transaction: 7.03

Shortest transaction: 0.00

Now, lets try with Docker Swarm (1 node swarm, 1 container stack)

[root@stresstest01 ~]# cat docker-compose.yml

services:

server:

deploy:

replicas: 1

image: nginx:latest

ports:

- published: 80

target: 80

version: '3.3'

[root@stresstest01 ~]# docker stack deploy test --compose-file docker-compose.yml

Creating network test_default

Creating service test_server

md5-f4221894196c970ed7866e093bb50670

[root@siege01 ~]# siege -b -c 250 -t 20s -f test_vm_docker.txt >/dev/null

** SIEGE 4.0.2

** Preparing 250 concurrent users for battle.

The server is now under siege...

Lifting the server siege...

Transactions: 65647 hits

Availability: 100.00 %

Elapsed time: 19.44 secs

Data transferred: 35.28 MB

Response time: 0.07 secs

Transaction rate: 3376.90 trans/sec

Throughput: 1.81 MB/sec

Concurrency: 246.66

Successful transactions: 58469

Failed transactions: 0

Longest transaction: 3.02

Shortest transaction: 0.00

[root@siege01 ~]# siege -b -c 250 -t 20s -f test_vm_docker.txt >/dev/null

** SIEGE 4.0.2

** Preparing 250 concurrent users for battle.

The server is now under siege...

Lifting the server siege...

Transactions: 4791 hits

Availability: 100.00 %

Elapsed time: 19.47 secs

Data transferred: 2.59 MB

Response time: 1.00 secs

Transaction rate: 246.07 trans/sec

Throughput: 0.13 MB/sec

Concurrency: 245.61

Successful transactions: 4291

Failed transactions: 0

Longest transaction: 1.20

Shortest transaction: 0.00

md5-f5189b7a8b687270178c2e57464b1815

Client:

Version: 17.09.0-ce

API version: 1.32

Go version: go1.8.3

Git commit: afdb6d4

Built: Tue Sep 26 22:41:23 2017

OS/Arch: linux/amd64

Server:

Version: 17.09.0-ce

API version: 1.32 (minimum version 1.12)

Go version: go1.8.3

Git commit: afdb6d4

Built: Tue Sep 26 22:42:49 2017

OS/Arch: linux/amd64

Experimental: false

md5-68e3b4fb54f0418f4434fd7a6bb1eed7

Containers: 0

Running: 0

Paused: 0

Stopped: 0

Images: 2

Server Version: 17.09.0-ce

Storage Driver: overlay

Backing Filesystem: xfs

Supports d_type: false

Logging Driver: json-file

Cgroup Driver: cgroupfs

Plugins:

Volume: local

Network: bridge host macvlan null overlay

Log: awslogs fluentd gcplogs gelf journald json-file logentries splunk syslog

Swarm: active

NodeID: s2ei2tx1nbf6lgn6d2yi9k782

Is Manager: true

ClusterID: s2dwwy929baleeoyk943wh2r9

Managers: 1

Nodes: 1

Orchestration:

Task History Retention Limit: 5

Raft:

Snapshot Interval: 10000

Number of Old Snapshots to Retain: 0

Heartbeat Tick: 1

Election Tick: 3

Dispatcher:

Heartbeat Period: 5 seconds

CA Configuration:

Expiry Duration: 3 months

Force Rotate: 0

Autolock Managers: false

Root Rotation In Progress: false

Node Address: 192.168.10.187

Manager Addresses:

192.168.10.187:2377

Runtimes: runc

Default Runtime: runc

Init Binary: docker-init

containerd version: 06b9cb35161009dcb7123345749fef02f7cea8e0

runc version: 3f2f8b84a77f73d38244dd690525642a72156c64

init version: 949e6fa

Security Options:

seccomp

Profile: default

Kernel Version: 3.10.0-693.2.2.el7.x86_64

Operating System: CentOS Linux 7 (Core)

OSType: linux

Architecture: x86_64

CPUs: 16

Total Memory: 7.609GiB

Name: stresstest01

ID: 4XPS:KBEY:W53L:YAK6:4MZL:4HDN:DMUR:DD4T:5RWA:IUK6:522E:TCAL

Docker Root Dir: /var/lib/docker

Debug Mode (client): false

Debug Mode (server): false

Registry: https://index.docker.io/v1/

Experimental: false

Insecure Registries:

127.0.0.0/8

Live Restore Enabled: false

Additional environment details (AWS, VirtualBox, physical, etc.):

It's a KVM virtual machine (under oVirt) but the same happens when using a physical machine.

All 137 comments

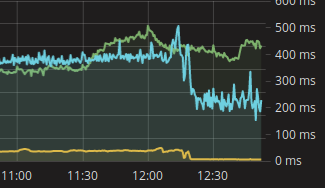

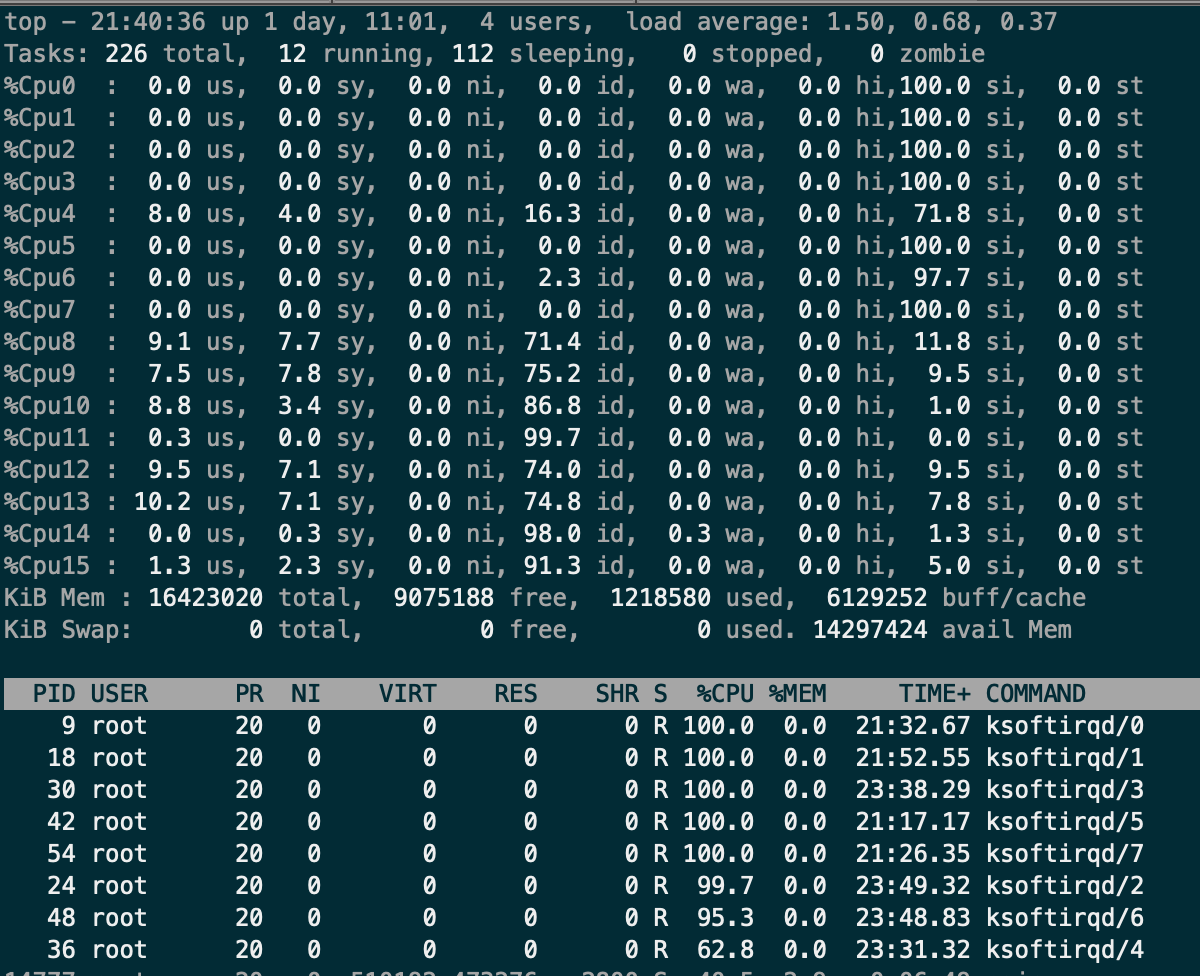

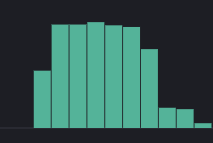

This issue is a total blocker for me and for deploying Swarm in production. This is a graph of how response time changed after switching a component in our architecture from Swarm to plain docker, on the same exact hosts

I think I'll start moving to Kubernetes

(green line is operations/sec, left Y-axis)

(comment copied from #35009 because at first I thought it was the same issue)

@vide the swarm-mode ingress is handled by IPVS and the connections are dispatched to the backend tasks over the overlay ingress network. But since it is a single node setup, the performance dip cannot happen due to the VXLAN headers used in overlay network. The only possible reason could be IPVS and it might require performance tuning for your case.

We can confirm the theory if you can change your stack file with an additional parameter mode:host under the ports section. This will bypass the IPVS and use the native port mapping just like docker run does. Can you pls confirm ?

ports:

- target: 80

published: 80

protocol: tcp

mode: host

@mavenugo Yep, IPVS was my number 1 suspect too, didn't think about the mode: host trick.

Benchmarking again with the settings you suggested:

```# siege -b -c 250 -t 20s -f test_vm_docker.txt >/dev/null

* SIEGE 4.0.2

* Preparing 250 concurrent users for battle.

The server is now under siege...

Lifting the server siege...

Transactions: 238493 hits

Availability: 100.00 %

Elapsed time: 19.85 secs

Data transferred: 128.05 MB

Response time: 0.02 secs

Transaction rate: 12014.76 trans/sec

Throughput: 6.45 MB/sec

Concurrency: 245.38

Successful transactions: 212106

Failed transactions: 0

Longest transaction: 3.22

Shortest transaction: 0.00

```

Which is comparable to the plain docker results.

So, what tuning can I do on IPVS in this case? Upgrading kernel maybe? Obviously I need IPVS load balancing in production :)

@vide thanks for confirmation. We should spend a bit more time analyzing the issue before looking at IPVS as the source of performance issue (though I mentioned that in my previous comment :) ). I will give siege a try and get back to you.

@mavenugo I've tried again on the same CentOS box with latest 4.13 kernel (4.13.4-1.el7.elrepo.x86_64) and the results are the same.

Plus, I've tried on my laptop's Ubuntu 17.04 install and results are bad here too.

@mavenugo could you reproduce it on your machine?

I can exactly reproduce the issue. The testing makes a new connection on each request.

Inactive connections soon piled up in ipvs.

$ sudo nsenter --net=/var/run/docker/netns/ingress_sbox ipvsadm -l

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

FWM 274 rr

-> 10.255.0.29:0 Masq 1 0 14074

If you can't wait for InActConn down to zero and run the testing again, you would get even poor result as described above.

On client side are full of "SYN_SENT".

tcp 0 1 192.168.105.67:47010 192.168.105.68:8000 SYN_SENT

tcp 0 1 192.168.105.67:46978 192.168.105.68:8000 SYN_SENT

tcp 0 1 192.168.105.67:47100 192.168.105.68:8000 SYN_SENT

tcp 0 1 192.168.105.67:47028 192.168.105.68:8000 SYN_SENT

tcp 0 1 192.168.105.67:47074 192.168.105.68:8000 SYN_SENT

tcp 0 1 192.168.105.67:47016 192.168.105.68:8000 SYN_SENT

tcp 0 1 192.168.105.67:46966 192.168.105.68:8000 SYN_SENT

tcp 0 1 192.168.105.67:47102 192.168.105.68:8000 SYN_SENT

tcp 0 1 192.168.105.67:46986 192.168.105.68:8000 SYN_SENT

...

If you want to work around this issue, set connection = keep-alive in your .siegerc file (use siege.config to generate a template .siegerc).

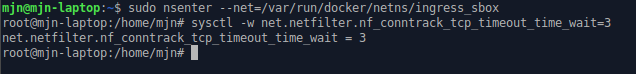

@vide @xinfengliu I could reproduce it and narrowed it down to the Conntracker states causing the issue. We see much better performance making IPVS not to use conntracker (via --sysctl net.ipv4.vs.conntrack=0 for the siege container alone).

BTW, Pls also note that am using the Service VIP directly. Using the service name results in performance impact since Siege does DNS lookup for every query and that delays the process. Using the Service VIP directly removes the DNS lookups and the performance is much better.

$ docker run --rm -itd --name sg3 --sysctl net.ipv4.vs.conntrack=0 --network testol2 ubuntu bash

ddddb204d2f70705b0044df7cced023e54d3a85745ecefe1c7915413c18eba81

root@Ubuntu-vm docker (master) $ docker exec -it sg3 bash

root@ddddb204d2f7:/# apt-get update && apt-get install siege

root@ddddb204d2f7:/# siege -c 100 -b -t20s http://10.0.1.3:5000

** SIEGE 3.0.8

** Preparing 100 concurrent users for battle.

The server is now under siege...

Lifting the server siege... done.

Transactions: 79772 hits

Availability: 100.00 %

Elapsed time: 19.40 secs

Data transferred: 3.12 MB

Response time: 0.02 secs

Transaction rate: 4111.96 trans/sec

Throughput: 0.16 MB/sec

Concurrency: 95.35

Successful transactions: 79777

Failed transactions: 0

Longest transaction: 0.61

Shortest transaction: 0.00

FILE: /var/log/siege.log

You can disable this annoying message by editing

the .siegerc file in your home directory; change

the directive 'show-logfile' to false.

root@ddddb204d2f7:/#

@mavenugo Ok, so, how do I set virtual server conntrack to 0 in Swarm mode? According to https://docs.docker.com/compose/compose-file/#not-supported-for-docker-stack-deploy sysctl tuning is not supported with docker stack deploy :(

There's an open issue about that: https://github.com/moby/libentitlement/issues/35

This issue seems related, too: https://github.com/moby/moby/issues/31746

@vide idk about the docker stack deploy support. But can you pls confirm if the suggested workaround works in a non-stack deploy case ?

--sysctl net.ipv4.vs.conntrack=0 can not use at ingress routing mesh on ingress_sbox. As the ipvs will do SNAT after forwarding.

In kubenetes's kube-proxy service. will set those kernel parameters:

https://github.com/kubernetes/kubernetes/blob/master/pkg/proxy/ipvs/proxier.go#L88-L91

and net.netfilter.nf_conntrack_buckets, net.netfilter.nf_conntrack_max.

Hello,

i'm on RHEL7.4 and docker 1.12. Testing on 2 node cluster with nginx:latest deployed in mash mode. I can reproduce the results @vide . But my test case is slightly different.

Instead of running siege as a container, i run it from outside of the cluster to load test the a pair of nginx containers. I experience a 10x degradation in response time and throughput.

against the cluster:

[root@l1vnetwt-2 siege-4.0.4]# siege -r1000 172.16.1.7:8080/index.html

[alert] Zip encoding disabled; siege requires zlib support to enable it

** SIEGE 4.0.4

** Preparing 255 concurrent users for battle.

The server is now under siege...

Transactions: 255000 hits

Availability: 100.00 %

Elapsed time: 1004.25 secs

Data transferred: 148.83 MB

Response time: 1.00 secs

**Transaction rate: 253.92 trans/sec**

Throughput: 0.15 MB/sec

Concurrency: 254.90

Successful transactions: 255000

Failed transactions: 0

Longest transaction: 1.20

Shortest transaction: 0.00

against a stand alone nginx:

[az@netdev virt]$ siege -r1000 10.253.130.49/index.html

================================================================

WARNING: The number of users is capped at 255. To increase this

limit, search your .siegerc file for 'limit' and change

its value. Make sure you read the instructions there...

================================================================

** SIEGE 4.0.4

** Preparing 255 concurrent users for battle.

The server is now under siege...

Transactions: 255000 hits

Availability: 100.00 %

Elapsed time: 92.20 secs

Data transferred: 148.83 MB

Response time: 0.09 secs

**Transaction rate: 2765.73 trans/sec**

Throughput: 1.61 MB/sec

Concurrency: 241.86

Successful transactions: 255000

Failed transactions: 0

Longest transaction: 1.70

Shortest transaction: 0.00

this is a complete blocker for any further implementation of docker swarm for us. What is the proposed fix and timing on this? thank you.

Hello,

We bumped into this same problem with the meshed LVS balancing, we have very poor performance.

Currently I worked around with host mode configuration, but I hope it's only a temporary solution.

Any plan to fix this?

test in host mode with ab (only 1 container):

Requests per second: 3424.63 [#/sec] (mean)

test in ingress mode with ab:

netstat on client:

tcp 0 1 10.41.0.3:35078 10.41.1.1:11007 SYN_SENT 51986/ab on (0.29/0/0)

tcp 0 1 10.41.0.3:35742 10.41.1.1:11007 SYN_SENT 51986/ab on (0.78/0/0)

...

tcp 0 1 10.41.0.3:35976 10.41.1.1:11007 SYN_SENT 51986/ab on (0.84/0/0)

tcp 0 1 10.41.0.3:35520 10.41.1.1:11007 SYN_SENT 51986/ab on (0.72/0/0)

ipvsadm output in ingress namespace:

-> 10.255.0.33:0 Masq 1 0 4619

-> 10.255.0.35:0 Masq 1 0 4599

-> 10.255.0.36:0 Masq 1 0 4611

ab:

Requests per second: 356.31 [#/sec] (mean)

Solved mine by upgrading to the latest docker from centos repo.

On Jan 9, 2018 12:10, "EmarMikey" notifications@github.com wrote:

Hello,

We bumped into this same problem with the meshed LVS balancing, we have

very poor performance.

Currently I worked around with host mode configuration.

Any plan to fix this?—

You are receiving this because you commented.

Reply to this email directly, view it on GitHub

https://github.com/moby/moby/issues/35082#issuecomment-356349718, or mute

the thread

https://github.com/notifications/unsubscribe-auth/AExz3Srkpl5IvrQoS7IJJOuTjFUeMVw8ks5tI52hgaJpZM4PtUll

.

@az-z which is? docker-ce 17.12 or the old one 1.12 or smth?

@vide have you checked if there are TCP retransmissions going on in your swarm setup. We see a lot of retransmissions for traffic heading through the ingress-sbox (where the IPVS is handled). The ingress-sbox would be the one with IP 172.18.0.2 on the docker_gwbridge.

This could easily be seen in our case between an nginx and a memcached container, where 1 second often was added on top of the total request time - something which strongly indicated retransmissions. Capturing 20 seconds of traffic with wireshark on the host showed that indeed a lot of retransmissions were going over the docker_gwbridge.

We have still not come to a solution on the issue #36032 which I have to say is fairly critical. We have this issue in a running production system, and we're starting to become quite desperate about it.

We're running ubuntu 16.04 and Docker 17.09 (we recentely upgraded to 17.12, but that was a disaster in many ways, so we downgraded again).

Jacek,

Ce 17.12.

On Jan 17, 2018 10:59, "Jacek Grzechnik" notifications@github.com wrote:

@az-z https://github.com/az-z which is? docker-ce 17.12 or the old one

1.12 or smth?—

You are receiving this because you were mentioned.

Reply to this email directly, view it on GitHub

https://github.com/moby/moby/issues/35082#issuecomment-358349957, or mute

the thread

https://github.com/notifications/unsubscribe-auth/AExz3Wl-Hd7IeJkfmT2cxqDfC7tMydJ2ks5tLhj4gaJpZM4PtUll

.

@vide hi, is there any progress on this case?

@wuzhefang Nope, sorry, I moved to Kubernetes due to this issue

According to this issue and the post from #31746, I can add a bit of info here.

Very easy steps to reproduce, with a single swarm node.

Docker version:

Client:

Version: 18.03.0-ce

API version: 1.37

Go version: go1.9.4

Git commit: 0520e24

Built: Wed Mar 21 23:10:01 2018

OS/Arch: linux/amd64

Experimental: false

Orchestrator: swarm

Server:

Engine:

Version: 18.03.0-ce

API version: 1.37 (minimum version 1.12)

Go version: go1.9.4

Git commit: 0520e24

Built: Wed Mar 21 23:08:31 2018

OS/Arch: linux/amd64

Experimental: false

OS of the machine where docker runs:

Distributor ID: Ubuntu

Description: Ubuntu 16.04.4 LTS

Release: 16.04

Codename: xenial

a) Install swarm on a single node, and on that node:

docker service create --name nginx -p 80:80 --replicas 1 nginx

b) On the same console, execute:

watch -n 0.5 "sudo nsenter --net=/var/run/docker/netns/ingress_sbox cat /proc/net/ip_vs_conn | grep TIME_WAIT | wc -l"

This will monitor the ingress network for connections in TIME_WAIT state and every half second will spit how many of them do exist in that moment.

c) From another machine on the same network, use some load generator (I used ab from apache2-utils):

(The IP of my docker swarm machine is 192.168.1.11)

ab -n 10000 -c 100 http://192.168.1.11/

d) If you execute the snippet from c), +/- the following will be shown in the watch command from b) during a good amount of time:

Every 0.5s: sudo nsenter --net=/var/run/docker/netns/ingress_sbox cat /proc/net/ip_vs_conn | grep...

10064

Where 10064 are the 10k connections from the load test plus a few additional connections (don't really matter for our thing).

e) If you manage to execute the snippet from c) so that the result from b) gets the same value as the result from following command on the swarm node:

sysctl net.ipv4.ip_local_port_range | awk '{print $4 - $3}'

Congestion will start to happen. There are no more available source ports for this 'source IP + dest IP + dest port' combination.

f) Elaborating from here, it happens that the load-balancing mechanism in docker swarm uses facilites from ipvs (a module in the Linux Kernel that can itself act as a load balancer).

g) A variation of command in b) is:

sudo nsenter --net=/var/run/docker/netns/ingress_sbox cat /proc/net/ip_vs_conn | head

If you execute that right after executing the load test, you will see something like:

Pro FromIP FPrt ToIP TPrt DestIP DPrt State Expires PEName PEData

TCP C0A8010C BAF8 AC120002 0050 0AFF006A 0050 TIME_WAIT 119

TCP C0A8010C A830 AC120002 0050 0AFF006A 0050 TIME_WAIT 118

TCP C0A8010C A51A AC120002 0050 0AFF006A 0050 TIME_WAIT 117

TCP C0A8010C B807 AC120002 0050 0AFF006A 0050 TIME_WAIT 119

TCP C0A8010C B331 AC120002 0050 0AFF006A 0050 TIME_WAIT 118

TCP C0A8010C B424 AC120002 0050 0AFF006A 0050 TIME_WAIT 119

TCP C0A8010C BD14 AC120002 0050 0AFF006A 0050 TIME_WAIT 119

TCP C0A8010C B81D AC120002 0050 0AFF006A 0050 TIME_WAIT 119

TCP C0A8010C AF8F AC120002 0050 0AFF006A 0050 TIME_WAIT 118

Which is telling us that the timeout value for the TIME_WAIT state on the connections is very probably (at least in my test setup) 120s.

h) You can sysctl the node where swarm runs searching for that 120 value (obtained from g) )

sysctl -a | grep 120

i) And the docker swarm network for this same value:

sudo nsenter --net=/var/run/docker/netns/ingress_sbox sysctl -a |grep 120

j) And this is the end

From this point, no parameter that I tuned using either

sysctl -w ...

Or

sudo nsenter --net=/var/run/docker/netns/ingress_sbox sysctl -w ...

affected that TIME_WAIT timeout.

Don't really know if ipvs / netfilter (the underlying mechanism used by ipvs) is really using those sysctl-ed values (at least when triggered by docker swarm).

And from this point in a cul-de-sac.

Finally found the problem.

As a last-resort option, and knowing that swarm relies on netfilter facilites to do its internal load balancing for overlay networks (as the very simple case for the service in the prevous post which by default uses an overlay network), I downloaded the Linux Kernel and tampered a little bit with the files.

The sources of interest reside in the following folder:

[kernel source dir]/net/netfilter

That TIME_WAIT timeout is hardcoded inside this file from the ip_vs module, inside:

[kernel source dir]/net/netfilter/ipvs/ip_vs_proto_tcp.c

You can check the latest version of this file (which suffers from the same problem) here:

https://github.com/torvalds/linux/blob/master/net/netfilter/ipvs/ip_vs_proto_tcp.c

Inside this file, you can see following piece of code:

static const int tcp_timeouts[IP_VS_TCP_S_LAST+1] = {

[IP_VS_TCP_S_NONE] = 2*HZ,

[IP_VS_TCP_S_ESTABLISHED] = 15*60*HZ,

[IP_VS_TCP_S_SYN_SENT] = 2*60*HZ,

[IP_VS_TCP_S_SYN_RECV] = 1*60*HZ,

[IP_VS_TCP_S_FIN_WAIT] = 2*60*HZ,

[IP_VS_TCP_S_TIME_WAIT] = 2*60*HZ,

[IP_VS_TCP_S_CLOSE] = 10*HZ,

[IP_VS_TCP_S_CLOSE_WAIT] = 60*HZ,

[IP_VS_TCP_S_LAST_ACK] = 30*HZ,

[IP_VS_TCP_S_LISTEN] = 2*60*HZ,

[IP_VS_TCP_S_SYNACK] = 120*HZ,

[IP_VS_TCP_S_LAST] = 2*HZ,

};

So the guilty of that high timeout is:

[IP_VS_TCP_S_TIME_WAIT] = 2*60*HZ,

If the previous one is changed to:

[IP_VS_TCP_S_TIME_WAIT] = 2*HZ,

The TIME_WAIT timeout is lowered from 120s to 2s.

Then recompiling the module, replacing the system module with the compiled one, rebooting the swarm machine, restarting the service and repeating the load test yields incredibly good results. No more flooding of connections in TIME_WAIT state is observed for moderately high loads (2000 req/s).

If the code from the rest of the file is inspected, there is really no way (or I don't see it) to reload those timeouts. That tcp_timeouts vector seems to be used to initialize the internal timeout table that will be used for the connection management, (without apparently any way to tune it) in this function:

/* ---------------------------------------------

* timeouts is netns related now.

* ---------------------------------------------

*/

static int __ip_vs_tcp_init(struct netns_ipvs *ipvs, struct ip_vs_proto_data *pd)

{

ip_vs_init_hash_table(ipvs->tcp_apps, TCP_APP_TAB_SIZE);

pd->timeout_table = ip_vs_create_timeout_table((int *)tcp_timeouts,

sizeof(tcp_timeouts));

if (!pd->timeout_table)

return -ENOMEM;

pd->tcp_state_table = tcp_states;

return 0;

}

The file ip_vs_ctl.c, which seems to be in charge of updating the tuning for the module, exposes following systctl parameters:

IPVS sysctl table (under the /proc/sys/net/ipv4/vs/)

.procname = "amemthresh",

.procname = "am_droprate",

.procname = "drop_entry",

.procname = "drop_packet",

.procname = "conntrack",

.procname = "secure_tcp",

.procname = "snat_reroute",

.procname = "sync_version",

.procname = "sync_ports",

.procname = "sync_persist_mode",

.procname = "sync_qlen_max",

.procname = "sync_sock_size",

.procname = "cache_bypass",

.procname = "expire_nodest_conn",

.procname = "sloppy_tcp",

.procname = "sloppy_sctp",

.procname = "expire_quiescent_template",

.procname = "sync_threshold",

.procname = "sync_refresh_period",

.procname = "sync_retries",

.procname = "nat_icmp_send",

.procname = "pmtu_disc",

.procname = "backup_only",

.procname = "conn_reuse_mode",

.procname = "schedule_icmp",

.procname = "ignore_tunneled",

.procname = "debug_level",

Nothing like timeouts exposed here.

So there is no effective way to update the TIME_WAIT timeout parameter for this module once it has started (nor to tweak it so the module reads the tuned value during init).

If anybody has an idea of how this problem could be workarounded (s)he will deserve big hugs.

Currently, in a cul-de-sac again. (it is not very practical to recompile kernel modules after each kernel image upgrade)

Fantastic work!! But the kernel mailing list seems to be the next step..

Thanks @tmarti that's definitely an interesting find!

A 2 minute timeout for TIME_WAIT is very standard in practice. It's 2 times the maximum internet lifetime (projected) of a TCP segment and the intent is to ensure that the final ACK gets delivered. If it gets lost the other side will try to resend the FIN and the state needs to still be there in order for the other end to re-respond with the final ACK. (see https://en.wikipedia.org/wiki/Maximum_segment_lifetime and of course https://www.ietf.org/rfc/rfc793.txt) You can set the MSL in the linux kernel ... but it's rarely something one does. Apparently IPVS doesn't even give you the option.

Wasn't aware of this issue, but will read back over it. A larger maximum number of IPVS mappings might well solve the issue and would presumably be something one could set. (if the max mappings were sufficient to absorb steady-state behavior.) What is the desired connection rate?

Of course! How silly from my part.

I have a small theory that want to share with you.

Had to stumble with this post...

https://stackoverflow.com/questions/10085705/load-balancer-scalability-and-max-tcp-ports

... to realise one very simple fact.

We're some of us tired of inspecting netsat -nolap output from time to time.. and everyday we see that a TCP connection is identified by for values:

- source IP

- source port

- destination IP

- destination port

Usually, there are 2 degrees of freedom in this combination:

the source ip: because you normally accept connections from many diferent clients, you can assume this value to spread among different values

source port: this will correspond to some ephemeral port from the client (those in (ubuntu land) range usually from 32768 to 60999

And the other two are fixed:

- destination IP: the server public IP

- destination port: the webserver port (80 in this case)

What is the problem with the initial load test from @vide? (and mine, of course)

The problem with that setup is that you're actually fixing the source IP (because connections under the load test come all from a single PC, which is the node from where we launch the loa test) and getting one less degree of freedom.

So, for the load test, the possible combinations of the "key" that uniquely identifies one connection are reduced to the available number of ephemeral ports on the client (that magic number 28231), because all the other parameters are fixed.

What made look into other plalces for this problem?

I tried it reall hard this afternoon to dig into the code of ipvs module. Not as easy as it sounds: 16k lines of code and it implements its own TCP stack with load blancing and NAT as a bonus track.

Nice thing about that, is that I was able to see that the "current connections" list "key" is composed of precisely the source address:port (the client one!) and the destination adress:port (as done in function nf_nat_used_tuple of the module).

So what happens when the client tries to reuse a port (remember that the other 3 parameters are always the same in this tainted load test) that correspond to a connection in TIME_WAIT state? Well, at the end, the connection attempt gets discarded (not sure if it's due to a mismatching TCP seq. number in the connection state or whatever).

So what next?

In order to confirm that, no sysctl tweaks are needed, no kernel module source tampering is needed, nothing that low-level is actually needed.

Instead of doing a load test with 2000 req/s from a single source IP (which will exhaust connections in about 14s according to the port range 32767-60999 and the other parameters fixed), just launch 200req/s from 10 different source IPs, and confirm that the throughput remains steady.

During monday I'll try to do the proposed test and come back to here.

Many thanks @raarts and @thaJeztah for the encouragement.

And many many thanks @ctelfer for your comment. I was really stuck with the idea of sysctl-ing the ipvs module, and your comment totally overwhelmed me at the beginning, but finally led me to look into other places.

Finished a slightly different variant of the proposed test.

Test 1: First, let's recap and reproduce the tainted load test connection table saturation

For this test we need:

- a machine where docker swarm will run

- a machine from where to launch the tainted load test

Very simple steps to reproduce the tainted test:

a) create a single-replica swarm service on the swarm machine:

docker service create --name nginx -p 80:80 --replicas 1 nginx

In my case, this gives me 28232

b) now, go to the node from where we will execute this first load test and execute:

sysctl net.ipv4.ip_local_port_range | awk '{print $4 - $3}'

This will give us the number of different ephemeral ports from which the load test will be launched.

c) now, go to the swarm machine and execute:

watch -n 0.5 "sudo nsenter --net=/var/run/docker/netns/ingress_sbox cat /proc/net/ip_vs_conn | grep TIME_WAIT | wc -l"

This will continuosly monitor (each half second) the ipvs module (the real load balancer module used by docker swarm) for the number of connections in TIME_WAIT state.

d) now, go to the node from where the load test will be launched and execute:

ab -n 30000 -c 100 http://192.168.1.11/

Please note two things here:

- 192.168.1.11 is the IP of my swarm machine

- it is very important that 30000 is a number slightly above the number obtained from b)

e) on the node from the load test is launched, you will notice that ab gets stuck before ending

f) on the swarm node (the one where you are executing the watch command), you will see that the number of connections in TIME_WAIT state is a little bit below the number obtained from b)

What does this first load test tell us?

Nothing new according to previous comments in this issue.

We see a limit on the maximum number of requests the load test can absorb.

We only see that once the swarm node "fills" the connection table, a drop in accepted throughput happens, and this can be seen because the ab test on the load injector machine gets stuck without finishing.

It seems that we cannot get more than 28k-and-a-bit-more connections in TIME_WAIT state on the swarm machine.

Test 2: What does it mean to "fill" the connection table?

Now let's do a variation on the test, 5 minutes after the previous test (so that connection in TIME_WAIT state do expire and the connection table in the swarm machine is emptied).

a) go to the node from where we launched the load test and execute the following:

sudo sysctl -w net.ipv4.ip_local_port_range="10000 61000"

This will set the ephemeral port range to that 51k different ports can be used.

Wait! This will be executed on the machine from where we will launch the load test? Yes my friend, rememeber that this is a tainted load test, so things are not as they seem.

b) now, keeping the watch command in execution on the swarm machine, execute the following on the node from which we do the load test:

ab -n 60000-c 100 http://192.168.1.11/

Wait! Now we've increased the number of requests! Yes, so that it's slightly above the magical number 51k from a).

c) Monitor the output of the watch command in the swarm machine.

We will see that the ab command on the traffic injector machine gets stuck again, but this time... the watchcommand output on the docker machine gives us a number that's slightly below 51k!

No way! What's happening here!?

Simply put, and recapping from a previous post in this issue.

What does identify "an entry in the connection table"?

A tuple composed of source IP+source port+dest IP+dest port.

Remember from previous the post that in the tainted version of the load test, we're making 3 (out of the previous 4) parameters fixed.

So in the swarm machine, we are allowed to have as many different connections as source ports are used.

And guess what? The number of different source ports in the previous two tests corresponds to the sysctl parameter net.ipv4.ip_local_port_range from the machine where we lauched the load test.

Test3: And how we do un-taint the load test!?

Let's do an exercise:

- remember that the TCP TIME_WAIT state timeout for the ipvs module is hardcoded to 120s, and that value can't be

sysctl'ed - rememeber that the swarm node will not be able to keep more connections created from a node injector than the value of

sysctl net.ipv4.ip_local_port_range | awk '{print $4 - $3}'executed on the injector machine (in my case around 28k)

28k requests / 120s = approx 230 req/s

So simply put, inject 150 requests/s from 10 different nodes and see what happens

In this case, I will do a variation, so prepare:

- the docker swarm machine without connection in TIME_WAIT state (wait a little from the last test you executed)

- 3 machines from which we will inject requests using

abinto the swarm machine

Let's begin!

a) before starting the test, execute the following on each of the 3 nodes from which we will launch ab:

sudo sysctl -w net.ipv4.ip_local_port_range="10000 61000"

This will give us a higher number of connections when we reach the point to see the test result.

b) now on the swarm node, as usual, execute:

watch -n 0.5 "sudo nsenter --net=/var/run/docker/netns/ingress_sbox cat /proc/net/ip_vs_conn | grep TIME_WAIT | wc -l"

c) go into JUST ONE of the traffic injector nodes and execute:

ab -n 60000-c 100 http://192.168.1.11/

d) eventually, the output value from b) will stop growing and the ab command from c) will get stuck

e) go into the SECOND of the traffic injector nodes and execute:

ab -n 60000-c 100 http://192.168.1.11/

f) magic! the number of connections as shown in b) increases!

g) even more! go into the THIRD of the traffic injector nodes and execute:

ab -n 60000-c 100 http://192.168.1.11/

Wait until this ab command gets stuck.

i) WOW! The number of connections as shown in b) increases more!

In my case, the output from b) is:

Every 0.5s: sudo nsenter --net=/var/run/docker/netns/ingress_sbox cat /proc/net/ip_vs_conn | grep TIME_WAIT | wc -l

124299

And that's it. We want from 28k simultaneous connections in TIME_WAIT state to well above 100k.

Hey! But 51k*3 = 153k connections, and we only got 124k!

Well, as the result from the test went well, I won't investigate further, but I suspect this could be caused by the ipvs module calculating some hash in order to do the connection keying and, as in my case I'm injecting traffic from IPs which are very bitwise similar, maybe there are some hash collisions. Or maybe each hash structure bucket does not contain enough space to hold that high number of collisions. Don't really know.

Maybe it's simply this this still is a semi-tainted test (we've spread a little the number of IP addressed from where we lauched the test, but in real world scenarios we will have many more client IPs with lots fewer requests/s from each IP).

Conclusion

At first glance, it seemed that due to the swarm ingress network not being ablt to aborb a At first glanc high-and-sustained in terms of requests/s, some tweaking should have to be done via sysctl on the swarm machine.

Investingating a little, it seemed that a good option would be to patch the ipvs kernel module in order to reduce the TIME_WAIT timeout, because it seemed that the cause of the poor throughput in the ingress network was "filling" the connection table with connections in TIME_WAIT state, so it looked like a good idea to quickly clean up those connections.

Further testing showed that the real cause was a bad designed load test, and the limit was not on "filling" the connection table on the swarm machine but of the number of different combinations of "source IP"+"source port" in the load test requests.

So far so good, we've seen how a bad desinged load test can lead to switching to Kubernetes.

And there might be other perfectly valid reasons for the switch, but not the results of bad load test.

Simply... next time (and this includes me of course) remember to launch load tests from different machines!

@tmarti Please read carefully all the comments before jumping to conclusions. The test is not flawed, the test is basically a reproduction of what was already happening in real production with real production load in my environment (thousand of connections per second from real clients on the internet, proxied to Swarm by load balancers). And the same test works as expected with the same exact configuration, just without Swarm mode (or using host networking). So, Swarm is still broken and this issue is still valid and unresolved.

I'm sorry @vide, it wasn't my intention in any way to unerdmine your problem.

I suppose the emotion prevented me from taking into account this statement from yours:

but I'm actually experiencing the problem in production with our custom java-based HTTP microservice

I did a small test removing the service from docker swarm and executing in the docker machine:

docker container run -p 80:80 --name nginx -d nginx

And then, from another machine, executing:

curl 192.168.1.11

Now, in the docker machine, I don't observe any connection in TIME_WAIT state.

BUT in the "another machine", if I do:

netstat -noal | grep TIME_WAIT

I can see:

tcp 0 0 192.168.1.12:19841 192.168.1.11:80 TIME_WAIT timewait (58.73/0/0)

What happens here? Now the TIME_WAIT state does not happen in the docker machine but on the machine from where we do the request.

Strange, eh?

According to the TCP protocl RFC (https://www.ietf.org/rfc/rfc793.txt, section 3.5. Closing a Connection and around), the endpoint that ends up in TIME_WAIT state is the endpoint that does a closeon the connection

What this tells us is that:

- on the load test case with swarm, and on your java-microservices case, ther

docker service createcase, the endpoint that is closing the connection is theipvsmodule, and it is it who ends in TIME_WAIT state. - on the simplest case, the

docker container runcase, it is the client who closes the connection and hence ends in TIME_WAIT state.

_Is it possible that your java micro-service is invoked always from the same IP (or from a few IPs but with high throughput from each of them?)._

Or either:

_Is it possible that it is your java micro-service the one that is invoking some other micro-service balanced with swarm also with high throughput?_

Keep in mind that either of the endpoints (even it's possible that both endpoints exeperience this) that closes the TCP connection will end up in TIME_WAIT state.

I suspect that in your scenario, it would be more convenient to avoid ipvs closing the connection and letting the remote endpoiont close the connection and deal with the TIME_WAIT timeout. But in any case, in the case of micro-services which call other load-balanced micro-services, either of the two must deal with connections in TIME_WAIT state.

Also in this case it would be interesting to be able to tweak the TIME_WAIT timeout for the ipvs module.

I cannot guess much more without knowing the details of your case, but in any case I'm not an expert on the subject.

@vide, you edited your last comment and added this:

proxied to Swarm by load balancers

Next time create a new comment, so the responses to your comments keep coherent.

Based on you edit, here you have your problem.

Load balancers are surely source-NAT-ing the connections from real clients, so swarm sees them as comming from very few different IPs (as many different IPs as proxyfier load balancers you have), which trigger the described problem.

So here you have the same case as the tainted load test.

Maybe (I'm a little bit new to this arquitecture world) you could assign several different outbound IPs to each proxifer-load-balancer to add a little bit of diversity to the IPs swarm _sees_ and mitigate this problem. Of course, this would only be a workaround.

@tmarti the java microservice receives/received (I don't work there anymore, so I can't really say) all the connections from a couple of haproxy instances, we just switched the backend servers from pointing to several separated instances to the several Swarm slave IPs having the service port exposed, and you can see the response time difference when rolling back to the non-swarm configuration in the screenshot I've attached. The only difference in the setup was Swarm, hence I put the blame on it.

Maybe the TIME_WAIT on the IPVS side has some implication on the issue (generating socket starvation), or maybe in the end my test was not an exact reproduction of what was happening in production, but as I said I can not give you more details or test changes because I don't have access to that infra anymore. :(

@tmarti I swear I've only edited typos, and just a few seconds after adding the comment

No need to swear.

From whay you say about the haproxy instances, the problem is the same as in the bad load test: swarm balancer seeing too little different IPs given the high inbound troughput, which leads to connection saturation (according to ipvs hash-keying the connections by sourceIP:sourcePort + destIP:destPort).

I don't really think it's a swarm or ipvs design problem, there is really skilled people behind those products.

But this case teaches all of us a great lesson. As well as we do test our code, we must test our architecture so that it makes a proper use of the underlying technologies, and before blaming a product, trying to see the real cause of what's happening.

At the end I'm gratefull to you because this series of posts showed me a good point about chaining load balancers (haproxy + ipvs).

Big hugs @vide.

This is one of the best threads, ever.

Thanks for taking the extra time to analyze.

@tmarti would it be possible for you to summarize this thread in the form of a recommendation? For example something like: "_if you use the default ipvs swarm load-balancer, and put an external load-balancer in front of it, than make sure that ....... or else ..... will happen, because ......_". Or something along those lines?

That would make things way easier for people reading this thread later. (And for some reading it now...)

@raarts and @tmarti a recommendations writeup would be fantastic for the rest of us who's been following this thread (but unable to grasp all the details and implications of it).

On thing that strikes me though : does anyone really expose their swarm directly onto the internet? Wouldn't most setups involve a proxy of some sort?

I do. I run a Swarm that is mostly on-premise, but some nodes are in public cloud, connected through a Zerotier VPN (which I love by the way). Three of them expose only two ports: 80/443 (going into Traefik proxies in network host mode). They are loadbalanced by DNS round-robin.

@tmarti Very nice analysis. Definitely provides some great insight and thoughts to consider for future architectures as well.

@tmarti Execellent analysis and insight. I just encountered this issue recently. Thank you to help me find the reason why my load testing on docker swarm always get stuck.

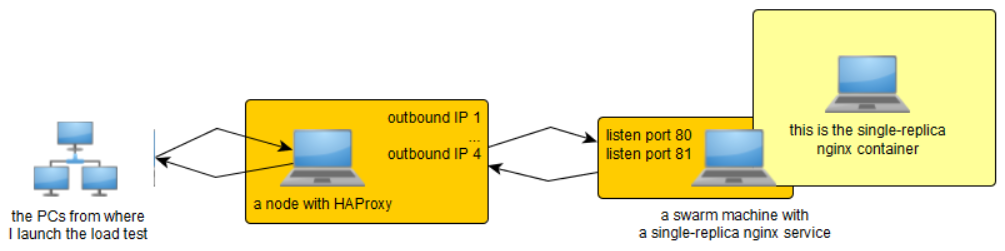

Did a first test with HAProxy in front of swarm.

Tested injecting load with ab and siege from different other nodes.

On the swarm service, mapped both ports 90 and 81 to port 80 of the nginx contanier.

And on the HAProxy node assigned 4 IP addresses to the network device.

Then, in the HAProxy backend definition, added 8 servers pointing to the same swarm machine IP, but with combinations of:

- targeting port 80 and 81

- setting either of the HAProxy's 4 IPs as source IP address

So that gave me a total of 8 server combinations in the backend definiton.

The good point is that each of those servers implies a different pair of source IP+dest port on the TCP communication between the HAProxy and swarm/IPVs.

So in the swarm machine this should suppose allowing as much as a total of 28k (ephemeral port range in the HAProxy machine) * 8 connections.

The HAProxy frontend listens to 0.0.0.0, we will later see why.

Also, some tuning on the HAProxy machine: increase max number of open file descriptors, increase ephemeral port range, increase max number of TCP connections in TIME_WAIT state, increase max netfilter_conntrack concurrent connections, and some extra tuning on the HAProxy config file (mainly to increase max connection number).

And then launched the load tests.

Increasing ephemeral port range on the load generator machines and launching 30k requests from 3 other nodes (256 concurrent connections from each node) to the SAME IP of the HAProxy machine (remember it has 4 IPs and listens to 0.0.0.0), I observe that the docker machine raise to between 70% and 80% CPU and an aggregated throughput of between 1700 and 2000 req/s, which is satisfactory.

But the annoying thing is that if I launch the test again from the 3 load generators, now pointing to ANOTHER of the IP addresses of the HAProxy machine (but all 3 to the SAME IP), although I observe a troughput now of about between 1000 and 1300 req/s, as much as about 9% of the requests do not receive a response.

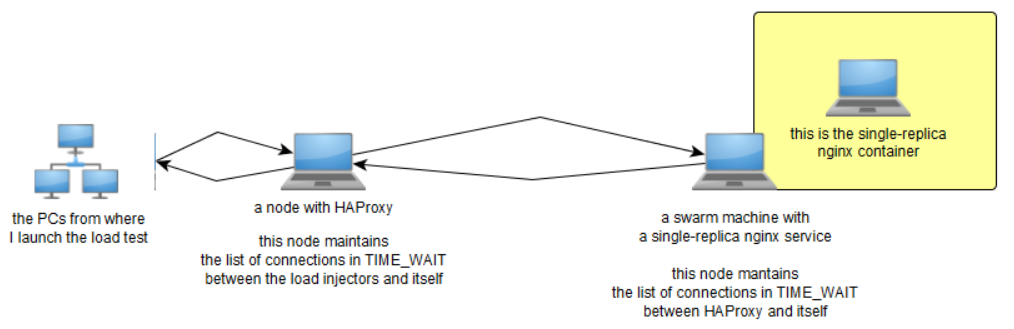

One of the most annoying things that happen is that it seems that:

the HAProxy node holds TIME_WAIT connections between load injector and HAProxy nodes

the swarm node holds TIME_WAIT connections between HAProxy and swarm nodes

Due to 1, the second round of load is injected to a different IP from the HAProxy node (the clients have no way to know if they recycle an ephemeral port for the same destination, it will (or not) already be in TIME_WAIT state on the HAProxy node and hence rejected/discarded).

Due to 2 (and I suspect this is the problem), similarly to 1, the HAProxy node has no way to know which ports it should recycle, and I think the problem actually comes from here: for a given pair of source IP (out of the 4 IPs of HAProxy node) and destination port (redirected ports 80 and 81 on the swarm service), the HAProxy node has no way to know that a concrete ephemeral port does not have to be reused during the TIME_WAIT timeout on the docker machine (hardcoded to 120s on IPVS).

If only HAProxy could be force to also close the socket, it would enter in TIME_WAIT state too, preventing that ephemeral port to be reused during the timeout. BUT THEN another problem would surface: TIME_WAIT timeout in docker machine is hardcoded by IPVS to 120s, and it seems that the TIME_WAIT timeout on the HAProxy machine is set (Ubuntu Server 16.04, seen no way to change it) to 60s.

By the moment you see, a relatively easy setup that bringa another question :-).

Well, did a new test, so prepare a bowl of popcorn and join this new comment.

Where we left on last comment

I prepared a small setup involving the following:

The idea behind all those 4 outbound IP addresses on the HAProxy node and listening to ports 80 and 81 (redirected to port 80 of the nginx container) on the swarm node was to add diversity in order to increase the number of ephemeral ports that could be used from the HAProxy node and hence increase the number of concurrent connections.

As I noticed today, the idea of assigning more outbound IPs to the HAProxy is good. This acts a multlplier for the number of available connections.

But not the idea to listen to serveral TCP ports and redirect them. The reason is when connection from the HAProxy node to the swarm node (during balancing), if an emphemeral port for a given IP is already taken, it will not be taken agin while in use (either TIME_WAIT or not). Although a connection is identified by sourceIP+sourcePort+destIP+destPort, from the same IP you cannot reuse the same ephemeral port although it's pointing to some other destionation.

The final point from the previous comment was based on this observation:

And following facts:

- on the HAProxy node, TIME_WAIT timeout is set to 60s (it's harcoded on kernel source file

include/net/tcp.h, search there for#define TCP_TIMEWAIT_LEN) - on the swarm node, IPVS module sets TIME_WAIT timeout to 120s (harcoded at compile time)

The idea was that as the HAProxy node was no way to know that it has already used a given ephemeral port for TCP connection establishment between itself and the swarm node, it could potentially reuse ephemeral ports, beeing that the root of the disaster.

Let's elaborate from here.

First test: let's see what happens when we reuse an ephemeral port

Thanks curl for existing, because this will be the tool for this test.

For this first test, we just need the docker node and the HAProxy node.

We will have a total of 3 terminals open (ideally all of them visible on the screen).

Preparation on the docker node:

docker service create --name nginx --replicas 1 -p 80:80 nginx

Now execute the following at the 1st on-screen terminal on the docker node (there is a reason for that 0.1) and execute:

watch -n 0.1 sudo nsenter --net=/var/run/docker/netns/ingress_sbox cat /proc/net/ip_vs_conn

Preparation on the HAProxy node: just have curl installed.

Now, open the 2nd on-screen terminal to the HAProxy node and execute:

watch -n 0.1 "netstat -noal|grep TIME_WAIT"

Now, open the 3rd on-screen terminal to the HAProxy node and execute:

time curl --interface [some outbound IP of the HAProxy node] --local-port 61235 http://[the swarm node IP]

And that's the nice curl into action. It allows us to choose the ephemeral port to use (--local-port).

The timing for the curl command in my case tells me that:

real 0m0.015s

In the order of a few milliseconds.

From this point, you will notice:

- on 1st terminal (swarm node) you will see just one connection which starts to timeout from 120s

- on 2nd terminal (HAProxy node) you will see just ont connection which starts to timeout from 60s

Nothing new. Just wait for the 2nd terminal connection to expire (after 60s), and WHILE the connection on the swarm node is still on TIME_WAIT, launch THE SAME curl command from 3rd terminal and observe carefully.

Hold on! That ephemeral port belongs to a connection in TIME_WAIT on the swarm node. Sure! Can you imagine what will happen then? Just keep reading.

If you observe carefully the ouptut from the 1st terminal, you will notice that since the moment we launch the second curl command, the connection in TIME_WAIT will disappear for approx 1 second, and then start counting from 120 again.

Now obseve the output from the second curl command timing info. It will not be anymore on the order of a few milliseconds, but in the order of about a few milliseconds PLUS 1 extra second:

real 0m1.013s

(Second test): What the...! What just happened previously!?

Another tool enters the game: tshark. Install it on the HAProxy node.

If tshark is used to inspect what's happening between HAProxy and swarm, which can be done executing the following on the HAProxy terminal (replace [swarm node IP] with the real IP of the swarm node and '[device]' with the name of the network device (as seen in ifconfig))...

sudo tshark -l -i [device] -o "capture.prom_mode:TRUE" -Y "(ip.src == [swarm node IP] or ip.dst == [swarm node IP]) and tcp.flags.syn == 1" -T fields -e ip.src -e tcp.srcport -e ip.dst -e tcp.dstport -e tcp.flags.syn -e tcp.flags.ack -e tcp.analysis.retransmission

... previous command will capture data corresponding to the first two messages of the 3-way handshake of the TCP connection betwen HAProxy and swarm: SYN and SYN+ACK).

With tshark running, excute again the curl command (at this point we assume that TIME_WAIT connections from previous test have already expired on both HAProxy and swarm nodes).

Somthing like the following will be shown on the tshark ouput:

192.168.1.20 61235 192.168.1.11 80 1 0

192.168.1.11 80 192.168.1.20 61235 1 1

Frist two numbers are source IP+port (our HAPRoxy node IP and the ephemeral port we told curl to use).

3rd and 4th are destination IP+port (our swarm node IP and port 80).

5th is SYN flag, and 6th is ACK.

So that seems pretty good for establishing a TCP connection.

Now, wait until the connection in TIME_WAIT expires on the HAProxy node (but NOT on the swarm machine) and execute curl again. Please also keep monitoring 1st terminal open at swarm node with the nsenter command.

Following will be shown on tshark output:

192.168.1.20 61235 192.168.1.11 80 1 0

(here a delay of 1s will happen)

192.168.1.20 61235 192.168.1.11 80 1 0 1

192.168.1.11 80 192.168.1.20 61235 1 1

Wow! It seems that the SYN message from the HAProxy node to the swarm node is sent twice. And btw, what is this new 7th number 1 in tshark output? (spolier: that 7th number 1 is the _retransmit_ flag).

This is what happens:

- when HAProxy node tries to establish a connection to the swarm node (sending a SYN message), and the ephemeral port chosen by HAProxy node is on TIME_WAIT on the swarm node, the connection is removed from the connection table on the swarm node (on IPVS module) (for this reason it disappears during 1 second), but it does not respond with a SYN+ACK.

- what happens now? Well, it enters into action the RTO (retransmission timeout) mechanism on the HAProxy node. It waits for 1 second and tries to send a new SYN message (this time with the retransmit flag we see in the second fragment of the

tsharkouput). - Now, on the swarm node IPVS module, as the connection was already dropped on step 1 and the slot freed, it now says

oh! this slot is free!and the connection is accepted. - The connection is fully established and the communication can continue.

And this is the reason for the dropped performance. If we now repeat the test from the previous comment with siege from the load injector node and tshark capturing on the HAProxy node, we'll see LOTS of SYN message retransmits. This means that lots of connections from the HAProxy node to the swarm node will suffer a 1-second delay, eventually leading to a performance degradation.

Right now, I think this is the ultimate cause of the degraded performance of the load test.

Wait! There's more

3 more points:

a) This kind of recycling connections in TIME-WAIT state is also known as _TIME-WAIT Assasination_:

Googling a little brings up some links talking about it, like https://blogs.technet.microsoft.com/networking/2010/08/11/how-tcp-time-wait-assassination-works/

b) Is seems that it also would be nice to lower the 1s of the RTO timeout:

See here (https://unix.stackexchange.com/questions/210367/changing-the-tcp-rto-value-in-linux) for some comments about it.

It would be nice but wait for c).

c) Ultimately, it seems that the IPVS module should support this kind of TIME-WAIT connection recycling

See following thread for a discussion that dates back to 2014 from (guess who? yes!) some mantainers of the IPVS module, and yes, about implications of these last paragraphs about TIME-WAIT Assassination and degraded performance:

http://archive.linuxvirtualserver.org/html/lvs-devel/2014-12/msg00016.html

Regarding this last point, if the take a look at the code mentioned in the last link, we end up in (IPVS code again) ip_vs_core.c source file (https://github.com/torvalds/linux/blame/master/net/netfilter/ipvs/ip_vs_core.c).

In that file, there is following piece of code (2 years since it was last touched):

/*

* Check if the packet belongs to an existing connection entry

*/

cp = pp->conn_in_get(ipvs, af, skb, &iph);

conn_reuse_mode = sysctl_conn_reuse_mode(ipvs);

if (conn_reuse_mode && !iph.fragoffs && is_new_conn(skb, &iph) && cp) {

bool uses_ct = false, resched = false;

if (unlikely(sysctl_expire_nodest_conn(ipvs)) && cp->dest &&

unlikely(!atomic_read(&cp->dest->weight))) {

resched = true;

uses_ct = ip_vs_conn_uses_conntrack(cp, skb);

} else if (is_new_conn_expected(cp, conn_reuse_mode)) {

uses_ct = ip_vs_conn_uses_conntrack(cp, skb);

if (!atomic_read(&cp->n_control)) {

resched = true;

} else {

/* Do not reschedule controlling connection

* that uses conntrack while it is still

* referenced by controlled connection(s).

*/

resched = !uses_ct;

}

}

if (resched) {

if (!atomic_read(&cp->n_control))

ip_vs_conn_expire_now(cp);

__ip_vs_conn_put(cp);

if (uses_ct)

return NF_DROP;

cp = NULL;

}

}

As debugging the kernel was an overkiller, I just added some printk's, recompiled, replaced and rebooted, and seen that from the four conditions on the most-external if, for the case that would be a great candiate to reuse a connection in TIME_WAIT (instead of dropping it and waiting an entire RTO):

if (conn_reuse_mode && !iph.fragoffs && is_new_conn(skb, &iph) && cp)

When a new SYN is received for a connection that was in TIME_WAIT, the cp pointer is null. It's like the connection was not there previously as regarding to IPVS. Although it DOES drop the previous connection (as seen by using the nsenter command) and does not respond to the SYN message, forcing HAProxy node to wait an entire RTO in order to try to send a SYN message again (which succeeds).

And that's all for now. Hope the popcorn was tasty :-).

Thanks for this great walk-through. It looks as though the IPVS maintainers knew about this, and thought this was the best they could do.

Since both timers are hardcoded, you can't make them both the same, which might (?) improve things, but for the moment it now seems better to NOT put a load-balancer in front of a swarm when connection volumes are high and using the swarm ingress ipvs load-balancer.

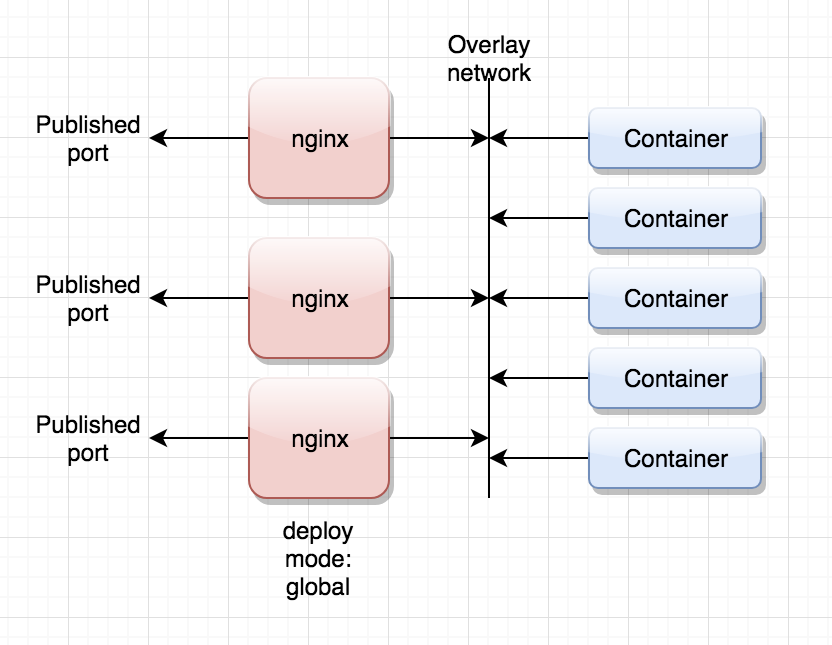

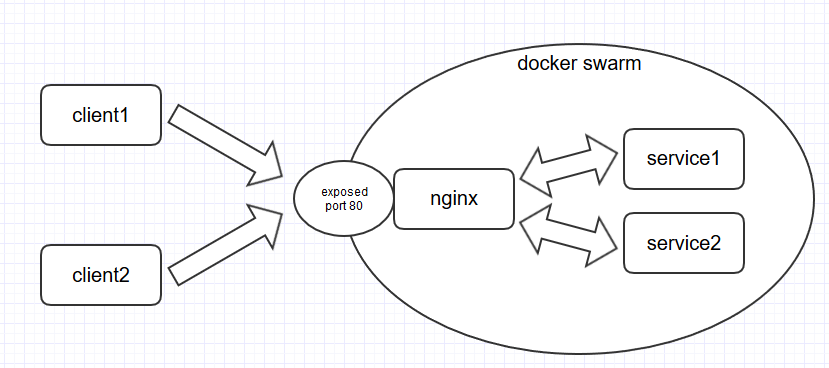

I use something like this (with mode: host for the published ports for the nginx proxies):

Which results in more or less the same situation, but inside the swarm itself. So I can expect the same to happen, but at least this way you can expand the number of frontend proxies/nodes, and you can front this with multiple load balancers if you want.

Yes, it seems a good idea by the moment to avoid an external LB in front of swarm under high load.

What your picture shows, though, seems the same as assigning for example 32 outbound IP's to the HAProxy node. Under heavy load the same problem will happen.

Good thing is that as long as HAProxy (our the nginx proxies in you picture) are tuned to handle a high number of concurrent connections, the delay on each of those connections will be at most (as long as the balanced services absorb the load) 1 additional second for each connection (due to the RTO in the port-recycling-scenario).

So i imagine that in this case, 500req/s will be able to be served, only with a delay of 1s on each request.

But also (really) think (by looking at that maintainers' forum thread) that this situation SHOULD be well-handled by IPVS.

There is no point in the pasted _if_ if no matter how the condition is never satisfied. And that _might_ (not really sure) be the IPVS bug.

Hi,

we have a similar issue, but the problem only seems to occur with swarm-internal traffic.

But let me first describe our test-setup (bare metal):

Output of docker version:

Client:

Version: 18.03.1-ce

API version: 1.37

Go version: go1.9.5

Git commit: 9ee9f40

Built: Thu Apr 26 07:20:16 2018

OS/Arch: linux/amd64

Experimental: false

Orchestrator: swarm

Server:

Engine:

Version: 18.03.1-ce

API version: 1.37 (minimum version 1.12)

Go version: go1.9.5

Git commit: 9ee9f40

Built: Thu Apr 26 07:23:58 2018

OS/Arch: linux/amd64

Experimental: false

Output of docker info:

Containers: 8

Running: 3

Paused: 0

Stopped: 5

Images: 21

Server Version: 18.03.1-ce

Storage Driver: devicemapper

Pool Name: centos-thinpool

Pool Blocksize: 524.3kB

Base Device Size: 10.74GB

Backing Filesystem: xfs

Udev Sync Supported: true

Data Space Used: 707.3MB

Data Space Total: 24.95GB

Data Space Available: 24.24GB

Metadata Space Used: 794.6kB

Metadata Space Total: 8.38GB

Metadata Space Available: 8.379GB

Thin Pool Minimum Free Space: 1.247GB

Deferred Removal Enabled: false

Deferred Deletion Enabled: false

Deferred Deleted Device Count: 0

Library Version: 1.02.140-RHEL7 (2017-05-03)

Logging Driver: json-file

Cgroup Driver: cgroupfs

Plugins:

Volume: local

Network: bridge host macvlan null overlay

Log: awslogs fluentd gcplogs gelf journald json-file logentries splunk syslog

Swarm: active

NodeID: 2iid0zm1nain8awsdvfw8r1e4

Is Manager: true

ClusterID: ufq20qvv0djznlg1bl0ked0m8

Managers: 1

Nodes: 1

Orchestration:

Task History Retention Limit: 5

Raft:

Snapshot Interval: 10000

Number of Old Snapshots to Retain: 0

Heartbeat Tick: 1

Election Tick: 3

Dispatcher:

Heartbeat Period: 5 seconds

CA Configuration:

Expiry Duration: 3 months

Force Rotate: 0

Autolock Managers: false

Root Rotation In Progress: false

Node Address: 172.18.2.128

Manager Addresses:

172.18.2.128:2377

Runtimes: runc

Default Runtime: runc

Init Binary: docker-init

containerd version: 773c489c9c1b21a6d78b5c538cd395416ec50f88

runc version: 4fc53a81fb7c994640722ac585fa9ca548971871

init version: 949e6fa

Security Options:

seccomp

Profile: default

Kernel Version: 3.10.0-693.21.1.el7.x86_64

Operating System: CentOS Linux 7 (Core)

OSType: linux

Architecture: x86_64

CPUs: 16

Total Memory: 94.25GiB

Name: whoopwhooptest

ID: IE4X:DZ5F:3JLI:GIPF:YYCP:SLQ6:T6QP:7XYC:AZXY:Y2IG:QL6X:NXQN

Docker Root Dir: /var/lib/docker

Debug Mode (client): false

Debug Mode (server): false

Registry: https://index.docker.io/v1/

Labels:

Experimental: false

Insecure Registries:

127.0.0.0/8

Live Restore Enabled: false

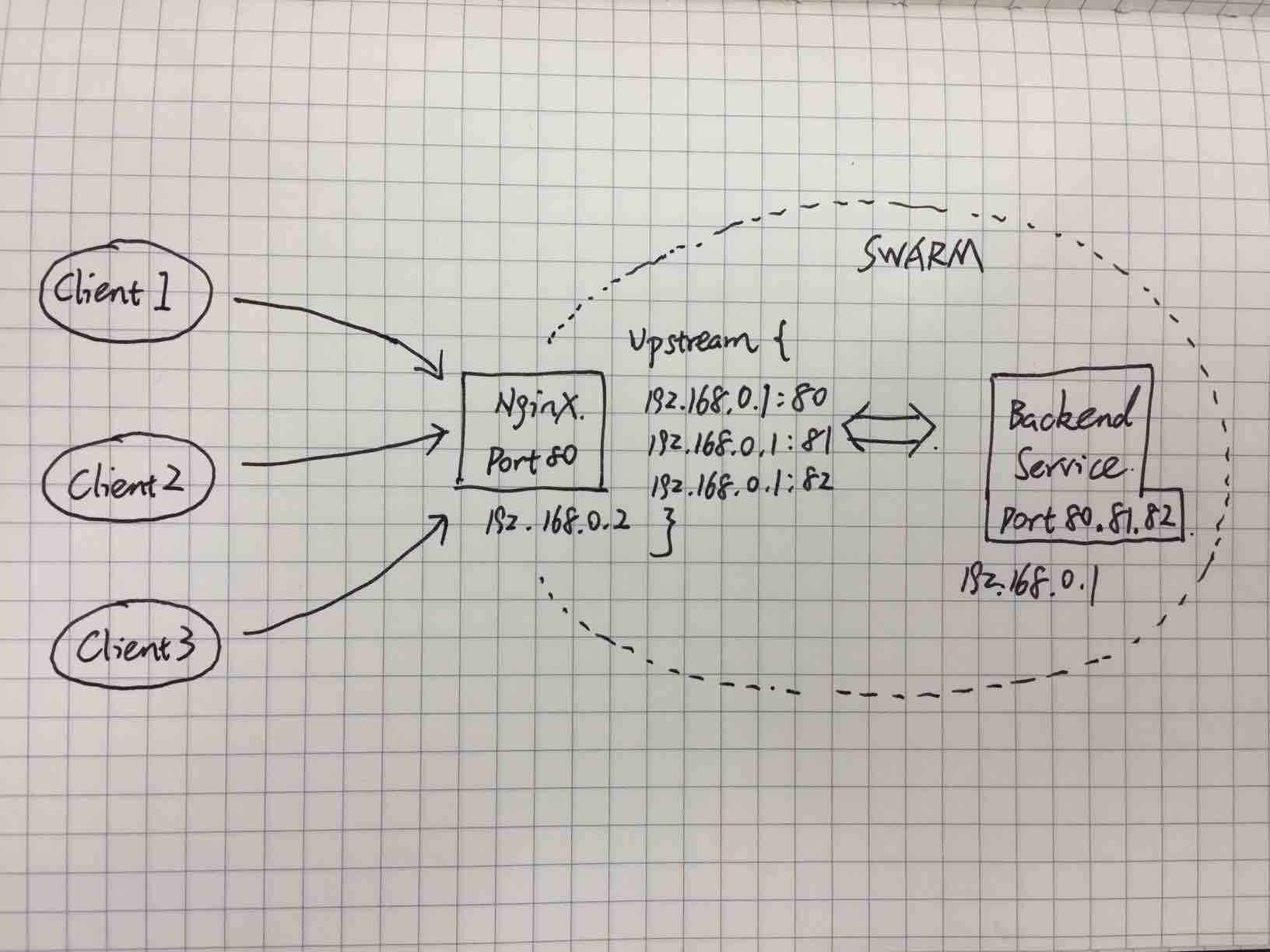

The nginx-service works as a reverseproxy to route to our different microservices by URI.

We are seeing a massive rise of response times under load. The response times range from 10 to 70ms under low load and rise to 1000 to 3000ms under "higher" load after ~14.000 requests.

The main problem is, TCP connection times are rising above 1000ms and lead to TCP connection timeouts in our production system where HAProxy is in front of the Docker Swarm.

We did some testing using jmeter to identify the issue / bottleneck.

This is what we did and found out:

Test 1

- no docker

- nginx installed via yum

- services started using java directly

1 client (10 parallel requests, 10 threads)

result

100.000 requests

- max response time: 426ms

- avg response time: 97ms

throughput: ~630 req/sec

=> no problemTest 2

nginx dockerized

- services dockerized

- no swarm

1 client (10 parallel requests, 10 threads)

result

100.000 requests

- max response time: 474ms

- avg response time: 94ms

throughput: ~670 req/sec

=> no problemTest 3

single node docker swarm

- nginx as stack service

- services as stack services

1 client (10 parallel requests, 10 threads)

result

100.000 requests

- max response time: 3099ms

- avg response time: 692ms

throughput: ~130 req/sec

=> problemTest 4

single node docker swarm

- nginx as stack service

- services as stack services

1 client (10 requests, 1 thread)

- lowered request rate in jmeter to find out the max the swarm can handle from 1 client

result

- lowered request rate in jmeter to find out the max the swarm can handle from 1 client

100.000 requests

- max response time: 388ms

- avg response time: 15ms

throughput: ~65 req/sec

=> no problemTest 5

same as Test 4 but 2 clients generating the same load (each client supplying half of the load)

result

client 1 (10 requests, 1 thread)

- 50.000 requests

- max response time: 1039ms

- avg response time: 16ms

- throughput: ~58 req/sec

- client 2 (10 requests, 1 thread)

- 50.000 requests

- max response time: 1032ms

- avg response time: 17ms

- throughput: ~58 req/sec

=> little problem

#

During all these tests, I had the following command running in our nginx and service container:

netstat -an|awk '/tcp/ {print $6}'|sort|uniq -c

Every time the response times rise to more than 1000ms, TIME_WAIT count (seen via command above) rises to about 12.000 to 13.000 in our service container. At the same time SYN_SENT count rises to about 90 to 100 in our nginx container while TIME_WAIT count is at about 15 to 25 there.

If wo do Test 5 again, but call our backend service directly (port exposed), effectively bypassing the nginx, there is no problem at all. No extreme rise in response times, no high TIME_WAIT counts.

All these tests lead to following conclusions for me:

- It doesn't matter if one, two (or more) clients generate the load (so one single client should not be a problem)

- The problem is related to swarm-internal communication only (nginx -> service), not related to communication from external client to a service within the swarm.

#

Does anyone have any hints to further debug what's going on in docker swarm's network?

Or are there any options we could try to tune the internal network?

Any help or idea would be appreciated.

xoxo

Indeed looks like the same issue. Several things come to mind looking at this:

How many instances of the backend service do you run, is that really 2 as shown in the diagram?

Also, a single node swarm is basically useless, I would not use that in production especially since single node without swarm runs fine.

On the other hand, if you want to run a multi-node swarm in production, then this test is not representative at all.

So my $0.02 would be, add an extra node with an exposed nginx proxy (load-balance using DNS), see if that improves the max # of req/sec.

First of all thanks for your reply @raarts.

Let me clarify some things:

We have a multi-node swarm in production (multiple managers, multiple workers) with HAProxy in front as loadbalancer (instead of your suggested DNS loadbalancing). All services have 2+ replicas.

We first tested on a "production-like" system with multiple nodes and HAProxy in front. Then we "minified" the setup for our tests on purpose, to leave out certain things, e.g. HAProxy, physical network, firewalls.

With our test setup we can surely say: It is neither HAProxy nor the physical network or a firewall that is causing the rise in response times under load. We could narrow it down to "virtual" docker-internal traffic this way.

This is exactly what we tried to achieve, to put our fingers onto the bleeding spot.

To get to your question about the number of instances of the backend services:

There are two different backend services in our test setup (we only use one of these in our tests). Each with replicas set to 1. We tried to scale up (2, 4, 8) both the service (we are using) and nginx wich did not change anything. We also tried this on a multi-node swarm with HAProxy in front with same behaviour.

In my mind the actual cause of the problem being discussed here, is that _to the ipvs-based internal swarm load-balancer_ all requests are coming from the same ip address. And since it hashes on source-ip/port it easily runs out of space in the hash-table. It also explains why adding multiple ip addresses to haproxy (in the way described by @tmarti) alleviates/solves the problem.

In your test environment you are using nginx as a proxy, which again causes all requests to originate from one (nginx's internal) ip address. In your production setup you use haproxy, which has the same effect.

I would take another good look at your tests with this principle in mind.

I understand that. I just hoped, someone had a good solution for this problem without externalizing the routing (via multiple IPs).

To recap, as I understand it the only viable solution would be:

- routing to services with nginx / HAProxy / whatever has to be done outside the swarm with multiple source IPs

- only backend services in swam

- every service has to expose its port so we can route to it from outside the swarm

- communication between services (wich generates internal load and would also lead to "port-exhaustion") has also to be done via routing outside the swam (to get multiple source IPs)

- service (in docker swarm) -> nginx with multiple IPs (outside the swarm) -> service (in docker swarm via exposed port)

Why should we use docker swarm then?

Yeah, you can scale services up and down easily and the traffic is loadbalanced internally. But what more benefits remain?

Rolling updates? Okay, this one stays great.

Automatic service discovery? Not needed anymore, cause routing to services is done via hardcoded servernames (hosts participating in swarm) and exposed ports in nginx or whatever you have in front of your docker swarm.

The overlay network is just great for services communicating directly to each other without "extra-hops" to a "router" outside the swarm, but it's useless if you can't take advantage of it due to the connection/port limit issue.

Am I missing or misunderstanding something obvious here?

How should I change our test setup to get closer to the core issue or a possible solution, without having to externalize all the routing that the internal swarm network should take care of?

Unfortunately I also have noted that swarm network has some problem.

I tested this simple stack composed by an nginx container as frontend that forwards anything to an apache backend:

//Docker Compose for swarm mode

[root@xxxxxx-xx-01 compose]# vi stack_nginx_apache_swarm.yml

version: '3'

services:

proxy:

image: nginx

ports:

- '8991:80'

volumes:

- /gfs1/compose/nginx.conf:/etc/nginx/conf.d/default.conf:ro

web:

image: httpd

//Docker Compose for standalone mode.

[root@xxxxxx-xx-01 compose]# more stack_nginx_apache_no_swarm.yml

version: '2'

services:

proxy:

image: nginx

ports:

- '8991:80'

volumes:

- /gfs1/compose/nginx.conf:/etc/nginx/conf.d/default.conf:ro

web:

image: httpd

[root@xxxxxx-xx-01 compose]# more nginx.conf

server {

listen 80;

location / {

proxy_pass http://web;

proxy_redirect off;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Host $server_name;

}

}

I tested using the last offical docker version (18.03.0-ce) in a environment with 3 Swarm Managers and three Worker Nodes.

[root@xxxxxx-ce-01 compose]# docker info

Containers: 9

Running: 9

Paused: 0

Stopped: 0

Images: 135

Server Version: 18.03.0-ce

Storage Driver: devicemapper

Pool Name: docker-thinpool

[root@xxxxxxxx-xx-01 compose]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

yno1sq95oj0lch127unmopkpw * xxxxxxxx-xx-01.xxx.xxx Ready Active Reachable 18.03.0-ce

wqi9mnu3oi8ak5tbh84r8tbjq xxxxxxxx-xx-02.xxx.xxx Ready Active Leader 18.03.0-ce

vlc6qkfk7cn1kl1zqey7xigc0 xxxxxxxx-xx-03.xxx.xxx Ready Active Reachable 18.03.0-ce

rzsbihct4m38rf1jr56kx66s2 xxxxxxxx-xx-04.xxx.xxx Ready Active 18.03.0-ce

7i4eb15nlssl29s6fh84zh4hi xxxxxxxx-xx-05.xxx.xxx Ready Active 18.03.0-ce

sgzkxlki38tbiiv42ivct61ua xxxxxxxx-xx-06.xxx.xxx Ready Active 18.03.0-ce

I created two tests scenario. The first with swarm:

[root@xxxxxxxx-xx-01 compose]# docker stack deploy --prune --compose-file ./stack_nginx_apache_swarm.yml stack-nginx-apache --with-registry-auth

[root@xxxxxxxx-xx-01 compose]# docker stack ps stack-nginx-apache

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

aqt4rt9azu0l stack-nginx-apache_proxy.1 nginx:latest xxxxxxxx-xx-06.xxx.xxx Running Running 7 minutes ago

2iys9ec8girb stack-nginx-apache_web.1 httpd:latest xxxxxxxx-xx-05.xxx.xxx Running Running 8 minutes ago

I started different tests case with ab and wrk with the following commands (10.20.102.201 is the ip address of xxxxxxxx-xx-01.xxx.xxx node):

ab -n 10000 -c 100 http://10.20.102.201:8991/

wrk -t100 -c 100 -d10 http://10.20.102.201:8991/

The same thing in standalone mode starting the containers in this way:

[[email protected] compose]# docker-compose -p stack-nginx-apache -f ./stack_nginx_apache_no_swarm.yml up -d

The following results are clear: randomly there is same thing that forces the swarm overlay network to be slow, very slow.

In standalone mode, the results are very good and stable.

SWARM

Command Time Requests per second Total Error

ab -n 10000 -c 100 http://10.20.102.201:8991/ T0 3697.60 10000 0

ab -n 10000 -c 100 http://10.20.102.201:8991/ T1 97.13 10000 0

ab -n 10000 -c 100 http://10.20.102.201:8991/ T2 351.14 10000 0

ab -n 10000 -c 100 http://10.20.102.201:8991/ T3 220.28 10000 0

wrk -t100 -c 100 -d10 http://10.20.102.201:8991/ T4 1457.25 14718 0

wrk -t100 -c 100 -d10 http://10.20.102.201:8991/ T5 97.80 988 1

wrk -t100 -c 100 -d10 http://10.20.102.201:8991/ T6 95.25 961 0

wrk -t100 -c 100 -d10 http://10.20.102.201:8991/ T7 94.35 953 0

No Swarm

ab -n 10000 -c 100 http://10.20.102.201:8991/ T0 3011.05 10000 0

ab -n 10000 -c 100 http://10.20.102.201:8991/ T1 3008.31 10000 0

ab -n 10000 -c 100 http://10.20.102.201:8991/ T2 3258.23 10000 0

ab -n 10000 -c 100 http://10.20.102.201:8991/ T3 3271.23 10000 0

wrk

wrk -t100 -c 100 -d10 http://10.20.102.201:8991/ T4 4127.54 41722 0

wrk -t100 -c 100 -d10 http://10.20.102.201:8991/ T5 3992.96 40361 0

wrk -t100 -c 100 -d10 http://10.20.102.201:8991/ T6 4136.09 41836 0

wrk -t100 -c 100 -d10 http://10.20.102.201:8991/ T7 4086.80 41286 0

No CPU or Memory issue was raised during the tests.

I made different tests pointing to different nodes of the cluster and the situation doesn't change.

In this condition it's not possible to go in Production.

Did anybody try using dns-rr for internal networks? That should overcome the ipvs problems

@raarts Just tested it.

The throughput is way better. Can be compared to _Test 2_ in my earlier post, where everything is containerized but not using swarm.

Hi Raarts,

OK, but this is a workaround, not a solution.

If we use DNS Round Robin (DNSRR) mode, a load balancer must be configured for consuming all the ip list returned by dns service query. So means that the dynamic load balancer feature inside swarm is not used anymore and this is not good.

Stefano

I'm not sure what you mean. When using dnsrr, for example the nginx example as given by @PhilPhonic should use the service name, and on every dns lookup would get a different ip address for the internal service to use. This is handled by swarm, so it will do dynamic load balancing.

OK. I didn't understand how dns-rr works. Now it's clear and you are right.

Stefano

Sorry for re-joining late to the thread (very busy at work during this and last week).

Some responses to comments

@PhilPhonic, from your Test 5:

result

client 1 (10 requests, 1 thread) 50.000 requests max response time: 1039ms avg response time: 16ms throughput: ~58 req/sec client 2 (10 requests, 1 thread) 50.000 requests max response time: 1032ms avg response time: 17ms throughput: ~58 req/sec

Please note that prettly surely...

max response time: 1032ms