MixedRealityToolkit-Unity: MRTK should be using SpatialPointerPose.Head

Issue description

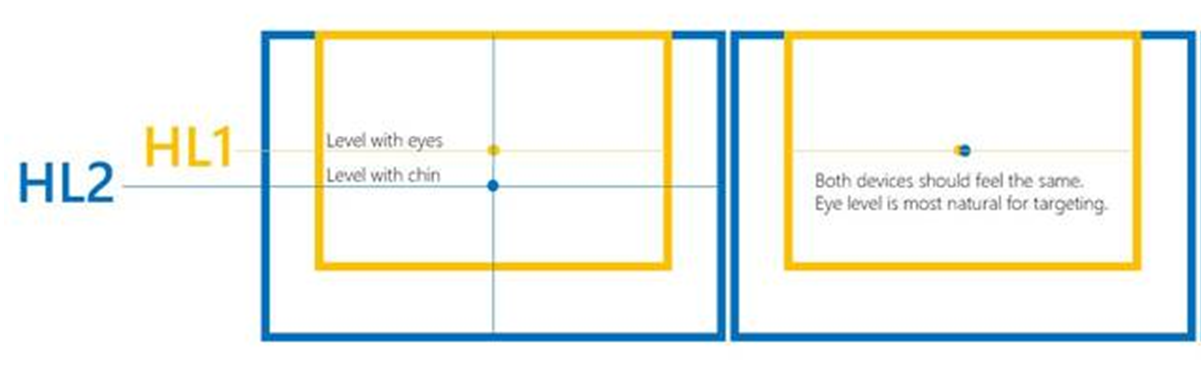

On HL2, MRTK should position the head-ray pointer using the same offset as HL1. This enables application developers to create a consistent head-targeting experience for their users when moving between HL1 and HL2 devices.

Steps to reproduce the behavior

Observe the HL2 platform positions the head-ray pointer at the same height relative to the user's eyes as HL1. This is good for consistency in the OS! But when loading a Unity app, the cursor does not inherit the OS position. The result is a change in default head-pointer position when moving from OS to Unity app.

Expected behavior

MRTK should use SpatialPointerPose.Head for pointer positioning with head-ray.

Screenshots

Visual explainer of problem, approximated dimensions for conceptual understanding only:

Your Setup

- Unity Version [e.g. 2018.3.11f1]

- MRTK Version [e.g. v2.0]

Target Platform

The head-ray position should be uniform for all 4 platforms:

- HoloLens

- HoloLens 2

- WMR immersive

- OpenVR

Additional context

This will alleviate the need for application developers to provide unique offsets for their apps when running on different endpoints. Uniform position for the head-ray pointer will enable application developers to spend time making their apps, rather than making the platform consistent for head targeting.

All 9 comments

@julenka, FYI

Great bug description, thank you!

@wiwei and @davidkline-ms I'd like this to be considered for our next iteration MRTK 2.3. Is "Consider for Next Iteration" label still being looked at when we decide which bugs to assign for the next iteration?

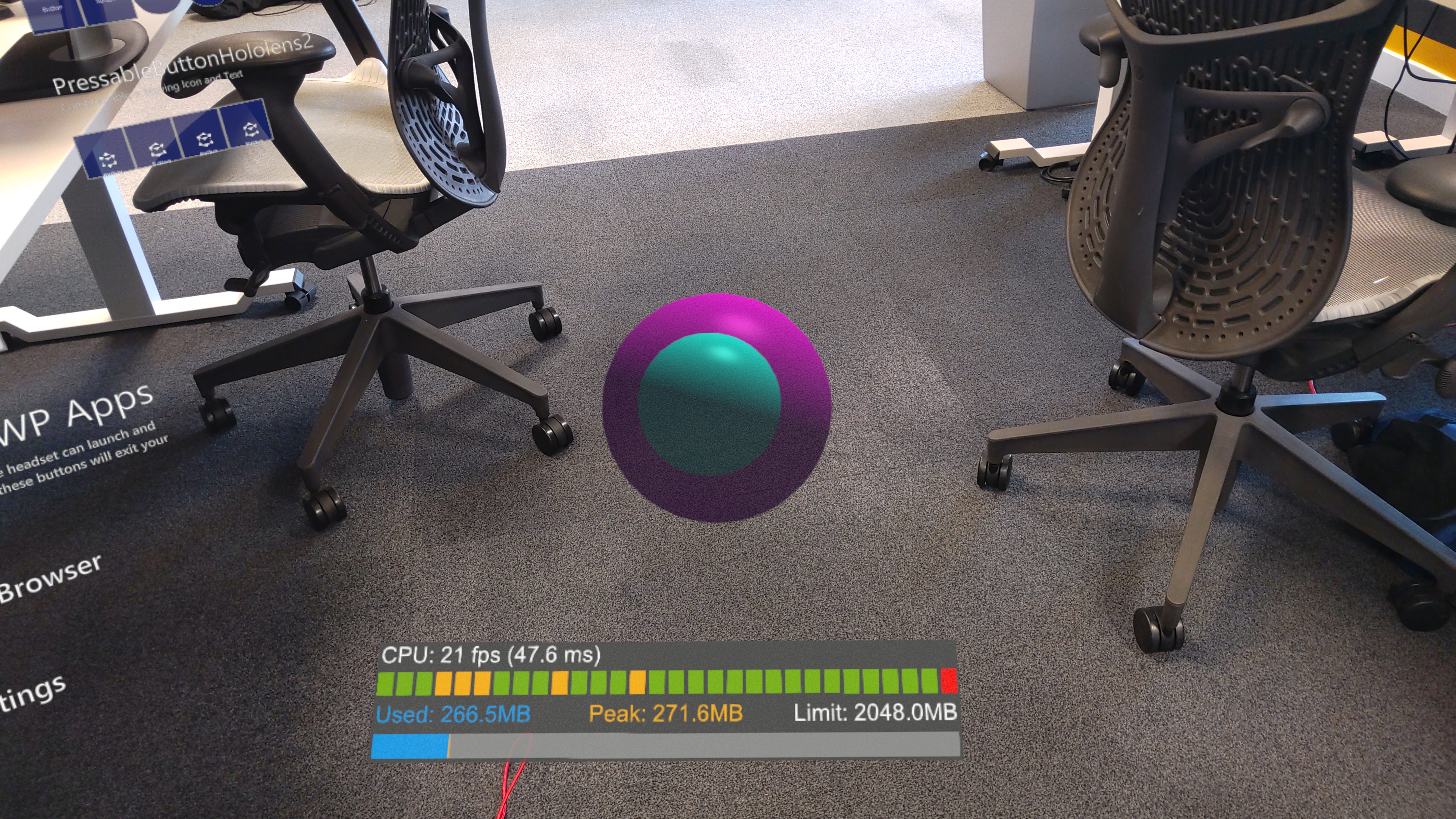

So I got a chance to test this today. I hacked a few things together so I could draw two spheres:

The magenta sphere's position is set using the camera transform (the same transform is used in positioning the gaze cursor).

The cyan sphere's position is set using

SpatialPointerPose.HeadPositionandForwardDirection.

Here's a picture of these spheres on device:

Other than a slight delay on the cyan sphere, the direction from the camera to both spheres and the gaze cursor was the same. (I couldn't capture the gaze cursor in this image: I hadn't disabled articulated hands, so it disappeared whenever I attempted to take a picture.) If SpatialPointerPose.Head were going to give us the corrected transform, I'd expect the position of the cyan sphere to differ from that of the magenta sphere and the gaze cursor.

I'm going to follow this up with some people who may know more about this to see if there's a way to get the transform you described from the OS. Otherwise we'd have to use some offset value, which isn't ideal.
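The comparison described above comes down to simple ray math. The following is a minimal, language-neutral sketch in Python (not the Unity C# used for the actual test). All names here, such as `Vec3`, `point_along_ray`, and `compare_head_rays`, are hypothetical, as is the 2 m sphere distance. It places one sphere along each head ray and reports how far apart the two rays are:

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec3:
    """Tiny 3D vector type so the sketch has no external dependencies."""
    x: float
    y: float
    z: float

    def __add__(self, other):
        return Vec3(self.x + other.x, self.y + other.y, self.z + other.z)

    def __sub__(self, other):
        return Vec3(self.x - other.x, self.y - other.y, self.z - other.z)

    def scale(self, s):
        return Vec3(self.x * s, self.y * s, self.z * s)

    def dot(self, other):
        return self.x * other.x + self.y * other.y + self.z * other.z

    def length(self):
        return math.sqrt(self.dot(self))

    def normalized(self):
        n = self.length()
        if n == 0.0:
            raise ValueError("cannot normalize a zero-length vector")
        return self.scale(1.0 / n)


def point_along_ray(origin, forward, distance):
    """Position of a marker sphere placed `distance` along a head ray."""
    return origin + forward.normalized().scale(distance)


def angle_between_deg(a, b):
    """Angle between two directions, in degrees."""
    cos = a.normalized().dot(b.normalized())
    cos = max(-1.0, min(1.0, cos))  # guard against rounding just outside [-1, 1]
    return math.degrees(math.acos(cos))


def compare_head_rays(cam_origin, cam_forward, pose_origin, pose_forward,
                      distance=2.0):
    """Compare the camera-transform ray with the OS-provided head ray.

    `distance` is an assumed placement distance for both spheres.
    """
    magenta = point_along_ray(cam_origin, cam_forward, distance)  # camera transform
    cyan = point_along_ray(pose_origin, pose_forward, distance)   # OS head pose
    return {
        "origin_offset": pose_origin - cam_origin,
        "angle_deg": angle_between_deg(cam_forward, pose_forward),
        "sphere_separation": (cyan - magenta).length(),
    }
```

With identical inputs for both rays, `angle_deg` and `sphere_separation` come out at zero, which corresponds to the on-device observation that the two spheres lined up. A corrected OS transform would be expected to show up as a non-zero `origin_offset` or `angle_deg`.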

After following up with some OS/shell peeps, we're still waiting for this to be fixed on their side.

If someone is looking into this in the future, I believe that @dfields-msft is looking into this on the OS side, so you can maybe reach out to him.

@MenelvagorMilsom Looks like the change on the OS side was recently merged! Are you still able to work on this?

@keveleigh @MenelvagorMilsom the change is currently only checked in for the v-next OS release. We're still working on servicing to 19H1 and Vibranium; stay tuned... However, expect this to happen in the coming weeks, so preparing this change to be ready when it drops is a good idea.

@keveleigh I'm caught up in other work at the moment, sorry. Unfortunately the code I wrote for this before was very hacky, and not something that can be used by someone as a starting point.

We're already using SpatialPointerPose.Eyes in WindowsMixedRealityEyeGazeDataProvider. I imagine it will be simple enough to copy this pattern to acquire the information from SpatialPointerPose.Head.
