Minikube: error: You must be logged in to the server (Unauthorized)

The exact command to reproduce the issue:

minikube start

kubectl get nodes

The full output of the command that failed:

error: You must be logged in to the server (Unauthorized)

The operating system version:

Ubuntu 18.04

I have deleted the cluster using minikube delete.

Cleared everything from the config:

kubectl config delete-context minikube

kubectl config delete-cluster minikube

kubectl config unset users.minikube

rm -rf ~/.kube/config

rm -rf ~/.minikube

Created a new cluster using minikube start

Yet I still get the same error.

Checking the config:

kubectl config view --minify | grep /.minikube | xargs stat

stat: cannot stat 'certificate-authority:': No such file or directory

File: /home/tomerle/.minikube/ca.crt

Size: 1066 Blocks: 8 IO Block: 4096 regular file

Device: 802h/2050d Inode: 9837419 Links: 1

Access: (0644/-rw-r--r--) Uid: ( 1000/ tomerle) Gid: ( 1000/ tomerle)

Access: 2019-06-11 23:37:00.930257345 +0300

Modify: 2019-06-11 23:37:00.778257705 +0300

Change: 2019-06-11 23:37:00.778257705 +0300

Birth: -

stat: cannot stat 'client-certificate:': No such file or directory

File: /home/tomerle/.minikube/client.crt

Size: 1103 Blocks: 8 IO Block: 4096 regular file

Device: 802h/2050d Inode: 9837423 Links: 1

Access: (0644/-rw-r--r--) Uid: ( 1000/ tomerle) Gid: ( 1000/ tomerle)

Access: 2019-06-11 23:38:13.242084809 +0300

Modify: 2019-06-11 23:37:01.410256208 +0300

Change: 2019-06-11 23:37:01.410256208 +0300

Birth: -

stat: cannot stat 'client-key:': No such file or directory

File: /home/tomerle/.minikube/client.key

Size: 1679 Blocks: 8 IO Block: 4096 regular file

Device: 802h/2050d Inode: 9837424 Links: 1

Access: (0600/-rw-------) Uid: ( 1000/ tomerle) Gid: ( 1000/ tomerle)

Access: 2019-06-11 23:38:13.242084809 +0300

Modify: 2019-06-11 23:37:01.410256208 +0300

Change: 2019-06-11 23:37:01.410256208 +0300

Birth: -
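A note on the output above: the `stat: cannot stat 'certificate-authority:'` lines are not a sign of missing files. They appear because `xargs` passes the YAML keys to `stat` along with the paths. A minimal sketch of a filter that keeps only the path column follows; the function name `minikube_cert_paths` is made up for illustration, and it assumes the paths contain no spaces.

```shell
# Hypothetical helper: print only the file paths that a kubeconfig
# references under ~/.minikube. Reads kubeconfig YAML on stdin.
minikube_cert_paths() {
  # Matching lines look like "    client-key: /home/user/.minikube/client.key";
  # the last whitespace-separated field is the path itself.
  grep '/\.minikube' | awk '{print $NF}'
}

# Usage (assumes kubectl is on PATH and the minikube context is current):
#   kubectl config view --minify | minikube_cert_paths | xargs stat
```

With the keys filtered out, `stat` should only report on the three certificate and key files, which makes the timestamps easier to compare against the time the cluster was created.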

Think I have the same issue.

Ubuntu 18.04

kubectl version:

Client Version: version.Info{Major:"1", Minor:"14", GitVersion:"v1.14.3", GitCommit:"5e53fd6bc17c0dec8434817e69b04a25d8ae0ff0", GitTreeState:"clean", BuildDate:"2019-06-06T01:44:30Z", GoVersion:"go1.12.5", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"14", GitVersion:"v1.14.2", GitCommit:"66049e3b21efe110454d67df4fa62b08ea79a19b", GitTreeState:"clean", BuildDate:"2019-05-16T16:14:56Z", GoVersion:"go1.12.5", Compiler:"gc", Platform:"linux/amd64"}

Related: #4353

If you can duplicate this, I would love to see the output of the following commands so that we may debug it further:

minikube start --alsologtostderr

kubectl config view --minify | grep /.minikube | xargs stat (which you provided)

minikube status
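For anyone gathering the requested output, one possible way to keep the results apart when pasting them into a comment is a small wrapper that labels each command. This is only a sketch; the function name `labelled` is made up for illustration and is not part of minikube or kubectl.

```shell
# Hypothetical helper: run a command, print a header naming it, and
# merge stderr into stdout so warnings and errors are captured too.
labelled() {
  printf '### %s\n' "$*"
  "$@" 2>&1
  # $? here is the exit status of the command that just ran.
  printf '(exit status: %s)\n\n' "$?"
}

# Usage (assumes minikube is on PATH):
#   labelled minikube start --alsologtostderr
#   labelled minikube status
```

Pipelines such as the `kubectl config view ... | xargs stat` check cannot be passed to the wrapper directly; they would need to be wrapped in `sh -c '...'` or run separately.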

@tstromberg I'm facing the same problem. Here's the output you asked for:

➜ minikube start --alsologtostderr

W0723 13:32:50.127327 13162 root.go:151] Error reading config file at /home/cvirus/.minikube/config/config.json: open /home/cvirus/.minikube/config/config.json: no such file or directory

I0723 13:32:50.127784 13162 notify.go:128] Checking for updates...

😄 minikube v1.2.0 on linux (amd64)

💿 Downloading Minikube ISO ...

129.33 MB / 129.33 MB [============================================] 100.00% 0s

I0723 13:34:03.590211 13162 start.go:753] Saving config:

{

"MachineConfig": {

"KeepContext": false,

"MinikubeISO": "https://storage.googleapis.com/minikube/iso/minikube-v1.2.0.iso",

"Memory": 2048,

"CPUs": 2,

"DiskSize": 20000,

"VMDriver": "virtualbox",

"ContainerRuntime": "docker",

"HyperkitVpnKitSock": "",

"HyperkitVSockPorts": [],

"XhyveDiskDriver": "ahci-hd",

"DockerEnv": null,

"InsecureRegistry": null,

"RegistryMirror": null,

"HostOnlyCIDR": "192.168.99.1/24",

"HypervVirtualSwitch": "",

"KvmNetwork": "default",

"DockerOpt": null,

"DisableDriverMounts": false,

"NFSShare": [],

"NFSSharesRoot": "/nfsshares",

"UUID": "",

"GPU": false,

"Hidden": false,

"NoVTXCheck": false

},

"KubernetesConfig": {

"KubernetesVersion": "v1.15.0",

"NodeIP": "",

"NodePort": 8443,

"NodeName": "minikube",

"APIServerName": "minikubeCA",

"APIServerNames": null,

"APIServerIPs": null,

"DNSDomain": "cluster.local",

"ContainerRuntime": "docker",

"CRISocket": "",

"NetworkPlugin": "",

"FeatureGates": "",

"ServiceCIDR": "10.96.0.0/12",

"ImageRepository": "",

"ExtraOptions": null,

"ShouldLoadCachedImages": true,

"EnableDefaultCNI": false

}

}

I0723 13:34:03.590446 13162 cache_images.go:285] Attempting to cache image: k8s.gcr.io/kube-apiserver:v1.15.0 at /home/cvirus/.minikube/cache/images/k8s.gcr.io/kube-apiserver_v1.15.0

I0723 13:34:03.590459 13162 cache_images.go:285] Attempting to cache image: k8s.gcr.io/coredns:1.3.1 at /home/cvirus/.minikube/cache/images/k8s.gcr.io/coredns_1.3.1

I0723 13:34:03.590304 13162 cache_images.go:285] Attempting to cache image: k8s.gcr.io/kube-proxy:v1.15.0 at /home/cvirus/.minikube/cache/images/k8s.gcr.io/kube-proxy_v1.15.0

I0723 13:34:03.590701 13162 cache_images.go:285] Attempting to cache image: k8s.gcr.io/kube-controller-manager:v1.15.0 at /home/cvirus/.minikube/cache/images/k8s.gcr.io/kube-controller-manager_v1.15.0

I0723 13:34:03.590341 13162 cache_images.go:285] Attempting to cache image: k8s.gcr.io/k8s-dns-dnsmasq-nanny-amd64:1.14.13 at /home/cvirus/.minikube/cache/images/k8s.gcr.io/k8s-dns-dnsmasq-nanny-amd64_1.14.13

I0723 13:34:03.590400 13162 cache_images.go:285] Attempting to cache image: k8s.gcr.io/kubernetes-dashboard-amd64:v1.10.1 at /home/cvirus/.minikube/cache/images/k8s.gcr.io/kubernetes-dashboard-amd64_v1.10.1

I0723 13:34:03.590428 13162 cache_images.go:285] Attempting to cache image: k8s.gcr.io/k8s-dns-kube-dns-amd64:1.14.13 at /home/cvirus/.minikube/cache/images/k8s.gcr.io/k8s-dns-kube-dns-amd64_1.14.13

I0723 13:34:03.590419 13162 cache_images.go:285] Attempting to cache image: k8s.gcr.io/pause:3.1 at /home/cvirus/.minikube/cache/images/k8s.gcr.io/pause_3.1

I0723 13:34:03.590436 13162 cache_images.go:285] Attempting to cache image: k8s.gcr.io/kube-addon-manager:v9.0 at /home/cvirus/.minikube/cache/images/k8s.gcr.io/kube-addon-manager_v9.0

I0723 13:34:03.590490 13162 cache_images.go:285] Attempting to cache image: k8s.gcr.io/k8s-dns-sidecar-amd64:1.14.13 at /home/cvirus/.minikube/cache/images/k8s.gcr.io/k8s-dns-sidecar-amd64_1.14.13

I0723 13:34:03.590497 13162 cache_images.go:285] Attempting to cache image: k8s.gcr.io/etcd:3.3.10 at /home/cvirus/.minikube/cache/images/k8s.gcr.io/etcd_3.3.10

I0723 13:34:03.590498 13162 cache_images.go:285] Attempting to cache image: k8s.gcr.io/kube-scheduler:v1.15.0 at /home/cvirus/.minikube/cache/images/k8s.gcr.io/kube-scheduler_v1.15.0

I0723 13:34:03.590316 13162 cache_images.go:285] Attempting to cache image: gcr.io/k8s-minikube/storage-provisioner:v1.8.1 at /home/cvirus/.minikube/cache/images/gcr.io/k8s-minikube/storage-provisioner_v1.8.1

I0723 13:34:03.591284 13162 cluster.go:90] Machine does not exist... provisioning new machine

I0723 13:34:03.593189 13162 cluster.go:91] Provisioning machine with config: {KeepContext:false MinikubeISO:https://storage.googleapis.com/minikube/iso/minikube-v1.2.0.iso Memory:2048 CPUs:2 DiskSize:20000 VMDriver:virtualbox ContainerRuntime:docker HyperkitVpnKitSock: HyperkitVSockPorts:[] XhyveDiskDriver:ahci-hd DockerEnv:[] InsecureRegistry:[] RegistryMirror:[] HostOnlyCIDR:192.168.99.1/24 HypervVirtualSwitch: KvmNetwork:default Downloader:{} DockerOpt:[] DisableDriverMounts:false NFSShare:[] NFSSharesRoot:/nfsshares UUID: GPU:false Hidden:false NoVTXCheck:false}

🔥 Creating virtualbox VM (CPUs=2, Memory=2048MB, Disk=20000MB) ...

I0723 13:34:03.794510 13162 cache_images.go:309] OPENING: /home/cvirus/.minikube/cache/images/k8s.gcr.io/kube-scheduler_v1.15.0

I0723 13:34:03.794510 13162 cache_images.go:309] OPENING: /home/cvirus/.minikube/cache/images/k8s.gcr.io/kubernetes-dashboard-amd64_v1.10.1

I0723 13:34:03.798424 13162 cache_images.go:309] OPENING: /home/cvirus/.minikube/cache/images/k8s.gcr.io/kube-addon-manager_v9.0

I0723 13:34:03.805468 13162 cache_images.go:309] OPENING: /home/cvirus/.minikube/cache/images/k8s.gcr.io/kube-proxy_v1.15.0

I0723 13:34:03.806543 13162 cache_images.go:309] OPENING: /home/cvirus/.minikube/cache/images/k8s.gcr.io/k8s-dns-sidecar-amd64_1.14.13

I0723 13:34:03.808255 13162 cache_images.go:309] OPENING: /home/cvirus/.minikube/cache/images/k8s.gcr.io/etcd_3.3.10

I0723 13:34:03.808312 13162 cache_images.go:309] OPENING: /home/cvirus/.minikube/cache/images/k8s.gcr.io/pause_3.1

I0723 13:34:03.808376 13162 cache_images.go:309] OPENING: /home/cvirus/.minikube/cache/images/k8s.gcr.io/kube-controller-manager_v1.15.0

I0723 13:34:03.808421 13162 cache_images.go:309] OPENING: /home/cvirus/.minikube/cache/images/k8s.gcr.io/kube-apiserver_v1.15.0

I0723 13:34:03.809985 13162 cache_images.go:309] OPENING: /home/cvirus/.minikube/cache/images/k8s.gcr.io/coredns_1.3.1

I0723 13:34:03.809989 13162 cache_images.go:309] OPENING: /home/cvirus/.minikube/cache/images/k8s.gcr.io/k8s-dns-dnsmasq-nanny-amd64_1.14.13

I0723 13:34:03.811677 13162 cache_images.go:309] OPENING: /home/cvirus/.minikube/cache/images/k8s.gcr.io/k8s-dns-kube-dns-amd64_1.14.13

I0723 13:34:03.833076 13162 cache_images.go:309] OPENING: /home/cvirus/.minikube/cache/images/gcr.io/k8s-minikube/storage-provisioner_v1.8.1

I0723 13:34:43.311607 13162 ssh_runner.go:101] SSH: sudo rm -f /etc/docker/ca.pem

I0723 13:34:43.370208 13162 ssh_runner.go:101] SSH: sudo mkdir -p /etc/docker

I0723 13:34:43.428786 13162 ssh_runner.go:182] Transferring 1038 bytes to ca.pem

I0723 13:34:43.430487 13162 ssh_runner.go:195] ca.pem: copied 1038 bytes

I0723 13:34:43.444328 13162 ssh_runner.go:101] SSH: sudo rm -f /etc/docker/server.pem

I0723 13:34:43.503298 13162 ssh_runner.go:101] SSH: sudo mkdir -p /etc/docker

I0723 13:34:43.568471 13162 ssh_runner.go:182] Transferring 1111 bytes to server.pem

I0723 13:34:43.570032 13162 ssh_runner.go:195] server.pem: copied 1111 bytes

I0723 13:34:43.631474 13162 ssh_runner.go:101] SSH: sudo rm -f /etc/docker/server-key.pem

I0723 13:34:43.687383 13162 ssh_runner.go:101] SSH: sudo mkdir -p /etc/docker

I0723 13:34:43.755555 13162 ssh_runner.go:182] Transferring 1679 bytes to server-key.pem

I0723 13:34:43.757197 13162 ssh_runner.go:195] server-key.pem: copied 1679 bytes

I0723 13:34:45.033786 13162 start.go:753] Saving config:

{

"MachineConfig": {

"KeepContext": false,

"MinikubeISO": "https://storage.googleapis.com/minikube/iso/minikube-v1.2.0.iso",

"Memory": 2048,

"CPUs": 2,

"DiskSize": 20000,

"VMDriver": "virtualbox",

"ContainerRuntime": "docker",

"HyperkitVpnKitSock": "",

"HyperkitVSockPorts": [],

"XhyveDiskDriver": "ahci-hd",

"DockerEnv": null,

"InsecureRegistry": null,

"RegistryMirror": null,

"HostOnlyCIDR": "192.168.99.1/24",

"HypervVirtualSwitch": "",

"KvmNetwork": "default",

"DockerOpt": null,

"DisableDriverMounts": false,

"NFSShare": [],

"NFSSharesRoot": "/nfsshares",

"UUID": "",

"GPU": false,

"Hidden": false,

"NoVTXCheck": false

},

"KubernetesConfig": {

"KubernetesVersion": "v1.15.0",

"NodeIP": "192.168.99.100",

"NodePort": 8443,

"NodeName": "minikube",

"APIServerName": "minikubeCA",

"APIServerNames": null,

"APIServerIPs": null,

"DNSDomain": "cluster.local",

"ContainerRuntime": "docker",

"CRISocket": "",

"NetworkPlugin": "",

"FeatureGates": "",

"ServiceCIDR": "10.96.0.0/12",

"ImageRepository": "",

"ExtraOptions": null,

"ShouldLoadCachedImages": true,

"EnableDefaultCNI": false

}

}

I0723 13:34:45.079176 13162 ssh_runner.go:101] SSH: systemctl is-active --quiet service containerd

I0723 13:34:45.141409 13162 ssh_runner.go:101] SSH: systemctl is-active --quiet service crio

I0723 13:34:45.157154 13162 ssh_runner.go:101] SSH: sudo systemctl stop crio

I0723 13:34:45.216048 13162 ssh_runner.go:101] SSH: systemctl is-active --quiet service crio

I0723 13:34:45.223954 13162 ssh_runner.go:101] SSH: sudo systemctl start docker

I0723 13:34:45.902286 13162 ssh_runner.go:137] Run with output: docker version --format '{{.Server.Version}}'

I0723 13:34:45.927080 13162 utils.go:241] > 18.09.6

🐳 Configuring environment for Kubernetes v1.15.0 on Docker 18.09.6

E0723 13:36:39.142160 13162 start.go:403] Error caching images: Caching images for kubeadm: caching images: caching image /home/cvirus/.minikube/cache/images/k8s.gcr.io/kube-addon-manager_v9.0: stream error: stream ID 19; INTERNAL_ERROR

I0723 13:36:39.205775 13162 cache_images.go:198] Loading image from cache: /home/cvirus/.minikube/cache/images/gcr.io/k8s-minikube/storage-provisioner_v1.8.1

I0723 13:36:39.205806 13162 cache_images.go:198] Loading image from cache: /home/cvirus/.minikube/cache/images/k8s.gcr.io/kube-controller-manager_v1.15.0

I0723 13:36:39.205827 13162 cache_images.go:198] Loading image from cache: /home/cvirus/.minikube/cache/images/k8s.gcr.io/kube-apiserver_v1.15.0

I0723 13:36:39.205869 13162 cache_images.go:198] Loading image from cache: /home/cvirus/.minikube/cache/images/k8s.gcr.io/k8s-dns-sidecar-amd64_1.14.13

I0723 13:36:39.205922 13162 cache_images.go:198] Loading image from cache: /home/cvirus/.minikube/cache/images/k8s.gcr.io/pause_3.1

I0723 13:36:39.205943 13162 ssh_runner.go:101] SSH: sudo rm -f /tmp/kube-apiserver_v1.15.0

I0723 13:36:39.206006 13162 ssh_runner.go:101] SSH: sudo rm -f /tmp/k8s-dns-sidecar-amd64_1.14.13

I0723 13:36:39.206006 13162 ssh_runner.go:101] SSH: sudo rm -f /tmp/pause_3.1

I0723 13:36:39.205830 13162 cache_images.go:198] Loading image from cache: /home/cvirus/.minikube/cache/images/k8s.gcr.io/etcd_3.3.10

I0723 13:36:39.206162 13162 ssh_runner.go:101] SSH: sudo rm -f /tmp/etcd_3.3.10

I0723 13:36:39.205860 13162 ssh_runner.go:101] SSH: sudo rm -f /tmp/storage-provisioner_v1.8.1

I0723 13:36:39.205794 13162 cache_images.go:198] Loading image from cache: /home/cvirus/.minikube/cache/images/k8s.gcr.io/k8s-dns-kube-dns-amd64_1.14.13

I0723 13:36:39.206339 13162 ssh_runner.go:101] SSH: sudo rm -f /tmp/k8s-dns-kube-dns-amd64_1.14.13

I0723 13:36:39.205867 13162 ssh_runner.go:101] SSH: sudo rm -f /tmp/kube-controller-manager_v1.15.0

I0723 13:36:39.205887 13162 cache_images.go:198] Loading image from cache: /home/cvirus/.minikube/cache/images/k8s.gcr.io/coredns_1.3.1

I0723 13:36:39.206494 13162 ssh_runner.go:101] SSH: sudo rm -f /tmp/coredns_1.3.1

I0723 13:36:39.205888 13162 cache_images.go:198] Loading image from cache: /home/cvirus/.minikube/cache/images/k8s.gcr.io/kube-proxy_v1.15.0

I0723 13:36:39.206643 13162 ssh_runner.go:101] SSH: sudo rm -f /tmp/kube-proxy_v1.15.0

I0723 13:36:39.205908 13162 cache_images.go:198] Loading image from cache: /home/cvirus/.minikube/cache/images/k8s.gcr.io/kubernetes-dashboard-amd64_v1.10.1

I0723 13:36:39.206806 13162 ssh_runner.go:101] SSH: sudo rm -f /tmp/kubernetes-dashboard-amd64_v1.10.1

I0723 13:36:39.205908 13162 cache_images.go:198] Loading image from cache: /home/cvirus/.minikube/cache/images/k8s.gcr.io/kube-scheduler_v1.15.0

I0723 13:36:39.206970 13162 ssh_runner.go:101] SSH: sudo rm -f /tmp/kube-scheduler_v1.15.0

I0723 13:36:39.205901 13162 cache_images.go:198] Loading image from cache: /home/cvirus/.minikube/cache/images/k8s.gcr.io/k8s-dns-dnsmasq-nanny-amd64_1.14.13

I0723 13:36:39.207113 13162 ssh_runner.go:101] SSH: sudo rm -f /tmp/k8s-dns-dnsmasq-nanny-amd64_1.14.13

I0723 13:36:39.205924 13162 cache_images.go:198] Loading image from cache: /home/cvirus/.minikube/cache/images/k8s.gcr.io/kube-addon-manager_v9.0

W0723 13:36:39.207235 13162 cache_images.go:100] Failed to load /home/cvirus/.minikube/cache/images/k8s.gcr.io/kube-addon-manager_v9.0: stat /home/cvirus/.minikube/cache/images/k8s.gcr.io/kube-addon-manager_v9.0: no such file or directory

I0723 13:36:39.335228 13162 ssh_runner.go:101] SSH: sudo mkdir -p /tmp

I0723 13:36:39.335254 13162 ssh_runner.go:101] SSH: sudo mkdir -p /tmp

I0723 13:36:39.335273 13162 ssh_runner.go:101] SSH: sudo mkdir -p /tmp

I0723 13:36:39.335254 13162 ssh_runner.go:101] SSH: sudo mkdir -p /tmp

I0723 13:36:39.335303 13162 ssh_runner.go:101] SSH: sudo mkdir -p /tmp

I0723 13:36:39.335251 13162 ssh_runner.go:101] SSH: sudo mkdir -p /tmp

I0723 13:36:39.335228 13162 ssh_runner.go:101] SSH: sudo mkdir -p /tmp

I0723 13:36:39.335495 13162 ssh_runner.go:101] SSH: sudo mkdir -p /tmp

I0723 13:36:39.335505 13162 ssh_runner.go:101] SSH: sudo mkdir -p /tmp

I0723 13:36:39.335520 13162 ssh_runner.go:101] SSH: sudo mkdir -p /tmp

I0723 13:36:39.335496 13162 ssh_runner.go:101] SSH: sudo mkdir -p /tmp

I0723 13:36:39.335497 13162 ssh_runner.go:101] SSH: sudo mkdir -p /tmp

I0723 13:36:39.383980 13162 ssh_runner.go:182] Transferring 47842304 bytes to kube-controller-manager_v1.15.0

I0723 13:36:39.384054 13162 ssh_runner.go:182] Transferring 30113280 bytes to kube-proxy_v1.15.0

I0723 13:36:39.384062 13162 ssh_runner.go:182] Transferring 11769344 bytes to k8s-dns-dnsmasq-nanny-amd64_1.14.13

I0723 13:36:39.384087 13162 ssh_runner.go:182] Transferring 20683776 bytes to storage-provisioner_v1.8.1

I0723 13:36:39.384089 13162 ssh_runner.go:182] Transferring 29871616 bytes to kube-scheduler_v1.15.0

I0723 13:36:39.384109 13162 ssh_runner.go:182] Transferring 76164608 bytes to etcd_3.3.10

I0723 13:36:39.384111 13162 ssh_runner.go:182] Transferring 12207616 bytes to k8s-dns-sidecar-amd64_1.14.13

I0723 13:36:39.384141 13162 ssh_runner.go:182] Transferring 318976 bytes to pause_3.1

I0723 13:36:39.384089 13162 ssh_runner.go:182] Transferring 44910592 bytes to kubernetes-dashboard-amd64_v1.10.1

I0723 13:36:39.384160 13162 ssh_runner.go:182] Transferring 49275904 bytes to kube-apiserver_v1.15.0

I0723 13:36:39.384064 13162 ssh_runner.go:182] Transferring 14267904 bytes to k8s-dns-kube-dns-amd64_1.14.13

I0723 13:36:39.384116 13162 ssh_runner.go:182] Transferring 12306944 bytes to coredns_1.3.1

I0723 13:36:39.441359 13162 ssh_runner.go:195] pause_3.1: copied 318976 bytes

I0723 13:36:39.477353 13162 docker.go:92] Loading image: /tmp/pause_3.1

I0723 13:36:39.477376 13162 ssh_runner.go:101] SSH: docker load -i /tmp/pause_3.1

I0723 13:36:39.742352 13162 utils.go:241] > Loaded image: k8s.gcr.io/pause:3.1

I0723 13:36:39.742381 13162 ssh_runner.go:101] SSH: sudo rm -rf /tmp/pause_3.1

I0723 13:36:39.802293 13162 cache_images.go:227] Successfully loaded image /home/cvirus/.minikube/cache/images/k8s.gcr.io/pause_3.1 from cache

I0723 13:36:40.328416 13162 ssh_runner.go:195] k8s-dns-dnsmasq-nanny-amd64_1.14.13: copied 11769344 bytes

I0723 13:36:40.356890 13162 docker.go:92] Loading image: /tmp/k8s-dns-dnsmasq-nanny-amd64_1.14.13

I0723 13:36:40.356914 13162 ssh_runner.go:101] SSH: docker load -i /tmp/k8s-dns-dnsmasq-nanny-amd64_1.14.13

I0723 13:36:40.401513 13162 ssh_runner.go:195] k8s-dns-sidecar-amd64_1.14.13: copied 12207616 bytes

I0723 13:36:40.402272 13162 ssh_runner.go:195] coredns_1.3.1: copied 12306944 bytes

I0723 13:36:40.476945 13162 ssh_runner.go:195] k8s-dns-kube-dns-amd64_1.14.13: copied 14267904 bytes

I0723 13:36:40.907574 13162 ssh_runner.go:195] storage-provisioner_v1.8.1: copied 20683776 bytes

I0723 13:36:41.405394 13162 ssh_runner.go:195] kube-proxy_v1.15.0: copied 30113280 bytes

I0723 13:36:41.449365 13162 ssh_runner.go:195] kube-scheduler_v1.15.0: copied 29871616 bytes

I0723 13:36:41.905052 13162 ssh_runner.go:195] kubernetes-dashboard-amd64_v1.10.1: copied 44910592 bytes

I0723 13:36:41.918167 13162 utils.go:241] > Loaded image: k8s.gcr.io/k8s-dns-dnsmasq-nanny-amd64:1.14.13

I0723 13:36:41.918205 13162 ssh_runner.go:101] SSH: sudo rm -rf /tmp/k8s-dns-dnsmasq-nanny-amd64_1.14.13

I0723 13:36:41.982074 13162 cache_images.go:227] Successfully loaded image /home/cvirus/.minikube/cache/images/k8s.gcr.io/k8s-dns-dnsmasq-nanny-amd64_1.14.13 from cache

I0723 13:36:41.982093 13162 docker.go:92] Loading image: /tmp/k8s-dns-sidecar-amd64_1.14.13

I0723 13:36:41.982098 13162 ssh_runner.go:101] SSH: docker load -i /tmp/k8s-dns-sidecar-amd64_1.14.13

I0723 13:36:41.982943 13162 ssh_runner.go:195] kube-controller-manager_v1.15.0: copied 47842304 bytes

I0723 13:36:42.003048 13162 ssh_runner.go:195] kube-apiserver_v1.15.0: copied 49275904 bytes

I0723 13:36:42.283479 13162 ssh_runner.go:195] etcd_3.3.10: copied 76164608 bytes

I0723 13:36:42.913815 13162 utils.go:241] > Loaded image: k8s.gcr.io/k8s-dns-sidecar-amd64:1.14.13

I0723 13:36:42.917174 13162 ssh_runner.go:101] SSH: sudo rm -rf /tmp/k8s-dns-sidecar-amd64_1.14.13

I0723 13:36:42.971320 13162 cache_images.go:227] Successfully loaded image /home/cvirus/.minikube/cache/images/k8s.gcr.io/k8s-dns-sidecar-amd64_1.14.13 from cache

I0723 13:36:42.971404 13162 docker.go:92] Loading image: /tmp/coredns_1.3.1

I0723 13:36:42.971429 13162 ssh_runner.go:101] SSH: docker load -i /tmp/coredns_1.3.1

I0723 13:36:43.773615 13162 utils.go:241] > Loaded image: k8s.gcr.io/coredns:1.3.1

I0723 13:36:43.782612 13162 ssh_runner.go:101] SSH: sudo rm -rf /tmp/coredns_1.3.1

I0723 13:36:43.835224 13162 cache_images.go:227] Successfully loaded image /home/cvirus/.minikube/cache/images/k8s.gcr.io/coredns_1.3.1 from cache

I0723 13:36:43.835268 13162 docker.go:92] Loading image: /tmp/k8s-dns-kube-dns-amd64_1.14.13

I0723 13:36:43.835289 13162 ssh_runner.go:101] SSH: docker load -i /tmp/k8s-dns-kube-dns-amd64_1.14.13

I0723 13:36:44.884632 13162 utils.go:241] > Loaded image: k8s.gcr.io/k8s-dns-kube-dns-amd64:1.14.13

I0723 13:36:44.935245 13162 ssh_runner.go:101] SSH: sudo rm -rf /tmp/k8s-dns-kube-dns-amd64_1.14.13

I0723 13:36:44.995368 13162 cache_images.go:227] Successfully loaded image /home/cvirus/.minikube/cache/images/k8s.gcr.io/k8s-dns-kube-dns-amd64_1.14.13 from cache

I0723 13:36:44.995447 13162 docker.go:92] Loading image: /tmp/storage-provisioner_v1.8.1

I0723 13:36:44.995472 13162 ssh_runner.go:101] SSH: docker load -i /tmp/storage-provisioner_v1.8.1

I0723 13:36:46.145612 13162 utils.go:241] > Loaded image: gcr.io/k8s-minikube/storage-provisioner:v1.8.1

I0723 13:36:46.157422 13162 ssh_runner.go:101] SSH: sudo rm -rf /tmp/storage-provisioner_v1.8.1

I0723 13:36:46.219300 13162 cache_images.go:227] Successfully loaded image /home/cvirus/.minikube/cache/images/gcr.io/k8s-minikube/storage-provisioner_v1.8.1 from cache

I0723 13:36:46.219376 13162 docker.go:92] Loading image: /tmp/kube-proxy_v1.15.0

I0723 13:36:46.219405 13162 ssh_runner.go:101] SSH: docker load -i /tmp/kube-proxy_v1.15.0

I0723 13:36:48.180337 13162 utils.go:241] > Loaded image: k8s.gcr.io/kube-proxy:v1.15.0

I0723 13:36:48.189104 13162 ssh_runner.go:101] SSH: sudo rm -rf /tmp/kube-proxy_v1.15.0

I0723 13:36:48.251307 13162 cache_images.go:227] Successfully loaded image /home/cvirus/.minikube/cache/images/k8s.gcr.io/kube-proxy_v1.15.0 from cache

I0723 13:36:48.251355 13162 docker.go:92] Loading image: /tmp/kube-scheduler_v1.15.0

I0723 13:36:48.251393 13162 ssh_runner.go:101] SSH: docker load -i /tmp/kube-scheduler_v1.15.0

I0723 13:36:48.958051 13162 utils.go:241] > Loaded image: k8s.gcr.io/kube-scheduler:v1.15.0

I0723 13:36:48.972781 13162 ssh_runner.go:101] SSH: sudo rm -rf /tmp/kube-scheduler_v1.15.0

I0723 13:36:49.035169 13162 cache_images.go:227] Successfully loaded image /home/cvirus/.minikube/cache/images/k8s.gcr.io/kube-scheduler_v1.15.0 from cache

I0723 13:36:49.035193 13162 docker.go:92] Loading image: /tmp/kubernetes-dashboard-amd64_v1.10.1

I0723 13:36:49.035199 13162 ssh_runner.go:101] SSH: docker load -i /tmp/kubernetes-dashboard-amd64_v1.10.1

I0723 13:36:50.931423 13162 utils.go:241] > Loaded image: k8s.gcr.io/kubernetes-dashboard-amd64:v1.10.1

I0723 13:36:50.947692 13162 ssh_runner.go:101] SSH: sudo rm -rf /tmp/kubernetes-dashboard-amd64_v1.10.1

I0723 13:36:51.007362 13162 cache_images.go:227] Successfully loaded image /home/cvirus/.minikube/cache/images/k8s.gcr.io/kubernetes-dashboard-amd64_v1.10.1 from cache

I0723 13:36:51.007426 13162 docker.go:92] Loading image: /tmp/kube-controller-manager_v1.15.0

I0723 13:36:51.007476 13162 ssh_runner.go:101] SSH: docker load -i /tmp/kube-controller-manager_v1.15.0

I0723 13:36:52.925558 13162 utils.go:241] > Loaded image: k8s.gcr.io/kube-controller-manager:v1.15.0

I0723 13:36:52.941711 13162 ssh_runner.go:101] SSH: sudo rm -rf /tmp/kube-controller-manager_v1.15.0

I0723 13:36:52.999337 13162 cache_images.go:227] Successfully loaded image /home/cvirus/.minikube/cache/images/k8s.gcr.io/kube-controller-manager_v1.15.0 from cache

I0723 13:36:52.999421 13162 docker.go:92] Loading image: /tmp/kube-apiserver_v1.15.0

I0723 13:36:52.999451 13162 ssh_runner.go:101] SSH: docker load -i /tmp/kube-apiserver_v1.15.0

I0723 13:36:55.521011 13162 utils.go:241] > Loaded image: k8s.gcr.io/kube-apiserver:v1.15.0

I0723 13:36:55.583376 13162 ssh_runner.go:101] SSH: sudo rm -rf /tmp/kube-apiserver_v1.15.0

I0723 13:36:55.651330 13162 cache_images.go:227] Successfully loaded image /home/cvirus/.minikube/cache/images/k8s.gcr.io/kube-apiserver_v1.15.0 from cache

I0723 13:36:55.651388 13162 docker.go:92] Loading image: /tmp/etcd_3.3.10

I0723 13:36:55.651411 13162 ssh_runner.go:101] SSH: docker load -i /tmp/etcd_3.3.10

I0723 13:37:00.470184 13162 utils.go:241] > Loaded image: k8s.gcr.io/etcd:3.3.10

I0723 13:37:00.489945 13162 ssh_runner.go:101] SSH: sudo rm -rf /tmp/etcd_3.3.10

I0723 13:37:00.551176 13162 cache_images.go:227] Successfully loaded image /home/cvirus/.minikube/cache/images/k8s.gcr.io/etcd_3.3.10 from cache

❌ Unable to load cached images: loading cached images: loading image /home/cvirus/.minikube/cache/images/k8s.gcr.io/kube-addon-manager_v9.0: stat /home/cvirus/.minikube/cache/images/k8s.gcr.io/kube-addon-manager_v9.0: no such file or directory

I0723 13:37:00.551525 13162 kubeadm.go:501] kubelet v1.15.0 config:

[Unit]

Wants=docker.socket

[Service]

ExecStart=

ExecStart=/usr/bin/kubelet --authorization-mode=Webhook --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --cgroup-driver=cgroupfs --client-ca-file=/var/lib/minikube/certs/ca.crt --cluster-dns=10.96.0.10 --cluster-domain=cluster.local --container-runtime=docker --fail-swap-on=false --hostname-override=minikube --kubeconfig=/etc/kubernetes/kubelet.conf --pod-manifest-path=/etc/kubernetes/manifests

[Install]

💾 Downloading kubeadm v1.15.0

💾 Downloading kubelet v1.15.0

I0723 13:37:33.026250 13162 ssh_runner.go:101] SSH: sudo rm -f /usr/bin/kubeadm

I0723 13:37:33.087320 13162 ssh_runner.go:101] SSH: sudo mkdir -p /usr/bin

I0723 13:37:33.148679 13162 ssh_runner.go:182] Transferring 40169856 bytes to kubeadm

I0723 13:37:33.466528 13162 ssh_runner.go:195] kubeadm: copied 40169856 bytes

I0723 13:37:53.239879 13162 ssh_runner.go:101] SSH: sudo rm -f /usr/bin/kubelet

I0723 13:37:53.299432 13162 ssh_runner.go:101] SSH: sudo mkdir -p /usr/bin

I0723 13:37:53.361000 13162 ssh_runner.go:182] Transferring 119612544 bytes to kubelet

I0723 13:37:54.388633 13162 ssh_runner.go:195] kubelet: copied 119612544 bytes

I0723 13:37:54.447539 13162 ssh_runner.go:101] SSH: sudo rm -f /lib/systemd/system/kubelet.service

I0723 13:37:54.503354 13162 ssh_runner.go:101] SSH: sudo mkdir -p /lib/systemd/system

I0723 13:37:54.564807 13162 ssh_runner.go:182] Transferring 222 bytes to kubelet.service

I0723 13:37:54.567372 13162 ssh_runner.go:195] kubelet.service: copied 222 bytes

I0723 13:37:54.580361 13162 ssh_runner.go:101] SSH: sudo rm -f /etc/systemd/system/kubelet.service.d/10-kubeadm.conf

I0723 13:37:54.639434 13162 ssh_runner.go:101] SSH: sudo mkdir -p /etc/systemd/system/kubelet.service.d

I0723 13:37:54.701386 13162 ssh_runner.go:182] Transferring 473 bytes to 10-kubeadm.conf

I0723 13:37:54.703975 13162 ssh_runner.go:195] 10-kubeadm.conf: copied 473 bytes

I0723 13:37:54.717827 13162 ssh_runner.go:101] SSH: sudo rm -f /var/lib/kubeadm.yaml

I0723 13:37:54.775388 13162 ssh_runner.go:101] SSH: sudo mkdir -p /var/lib

I0723 13:37:54.837330 13162 ssh_runner.go:182] Transferring 1139 bytes to kubeadm.yaml

I0723 13:37:54.839526 13162 ssh_runner.go:195] kubeadm.yaml: copied 1139 bytes

I0723 13:37:54.853425 13162 ssh_runner.go:101] SSH: sudo rm -f /etc/kubernetes/addons/storageclass.yaml

I0723 13:37:54.911425 13162 ssh_runner.go:101] SSH: sudo mkdir -p /etc/kubernetes/addons

I0723 13:37:54.972599 13162 ssh_runner.go:182] Transferring 271 bytes to storageclass.yaml

I0723 13:37:54.974660 13162 ssh_runner.go:195] storageclass.yaml: copied 271 bytes

I0723 13:37:54.987879 13162 ssh_runner.go:101] SSH: sudo rm -f /etc/kubernetes/addons/storage-provisioner.yaml

I0723 13:37:55.043207 13162 ssh_runner.go:101] SSH: sudo mkdir -p /etc/kubernetes/addons

I0723 13:37:55.097193 13162 ssh_runner.go:182] Transferring 1709 bytes to storage-provisioner.yaml

I0723 13:37:55.099574 13162 ssh_runner.go:195] storage-provisioner.yaml: copied 1709 bytes

I0723 13:37:55.113222 13162 ssh_runner.go:101] SSH: sudo rm -f /etc/kubernetes/manifests/addon-manager.yaml.tmpl

I0723 13:37:55.171389 13162 ssh_runner.go:101] SSH: sudo mkdir -p /etc/kubernetes/manifests/

I0723 13:37:55.236851 13162 ssh_runner.go:182] Transferring 1406 bytes to addon-manager.yaml.tmpl

I0723 13:37:55.239914 13162 ssh_runner.go:195] addon-manager.yaml.tmpl: copied 1406 bytes

I0723 13:37:55.299380 13162 ssh_runner.go:101] SSH:

sudo systemctl daemon-reload &&

sudo systemctl start kubelet

I0723 13:37:55.435062 13162 certs.go:47] Setting up certificates for IP: 192.168.99.100

I0723 13:37:56.110962 13162 ssh_runner.go:101] SSH: sudo rm -f /var/lib/minikube/certs/ca.crt

I0723 13:37:56.155165 13162 ssh_runner.go:101] SSH: sudo mkdir -p /var/lib/minikube/certs/

I0723 13:37:56.204695 13162 ssh_runner.go:182] Transferring 1066 bytes to ca.crt

I0723 13:37:56.206809 13162 ssh_runner.go:195] ca.crt: copied 1066 bytes

I0723 13:37:56.219191 13162 ssh_runner.go:101] SSH: sudo rm -f /var/lib/minikube/certs/ca.key

I0723 13:37:56.275384 13162 ssh_runner.go:101] SSH: sudo mkdir -p /var/lib/minikube/certs/

I0723 13:37:56.332712 13162 ssh_runner.go:182] Transferring 1675 bytes to ca.key

I0723 13:37:56.334569 13162 ssh_runner.go:195] ca.key: copied 1675 bytes

I0723 13:37:56.348071 13162 ssh_runner.go:101] SSH: sudo rm -f /var/lib/minikube/certs/apiserver.crt

I0723 13:37:56.403332 13162 ssh_runner.go:101] SSH: sudo mkdir -p /var/lib/minikube/certs/

I0723 13:37:56.460735 13162 ssh_runner.go:182] Transferring 1298 bytes to apiserver.crt

I0723 13:37:56.462554 13162 ssh_runner.go:195] apiserver.crt: copied 1298 bytes

I0723 13:37:56.475563 13162 ssh_runner.go:101] SSH: sudo rm -f /var/lib/minikube/certs/apiserver.key

I0723 13:37:56.531370 13162 ssh_runner.go:101] SSH: sudo mkdir -p /var/lib/minikube/certs/

I0723 13:37:56.592933 13162 ssh_runner.go:182] Transferring 1679 bytes to apiserver.key

I0723 13:37:56.595433 13162 ssh_runner.go:195] apiserver.key: copied 1679 bytes

I0723 13:37:56.609296 13162 ssh_runner.go:101] SSH: sudo rm -f /var/lib/minikube/certs/proxy-client-ca.crt

I0723 13:37:56.663389 13162 ssh_runner.go:101] SSH: sudo mkdir -p /var/lib/minikube/certs/

I0723 13:37:56.930297 13162 ssh_runner.go:182] Transferring 1074 bytes to proxy-client-ca.crt

I0723 13:37:56.933352 13162 ssh_runner.go:195] proxy-client-ca.crt: copied 1074 bytes

I0723 13:37:56.939641 13162 ssh_runner.go:101] SSH: sudo rm -f /var/lib/minikube/certs/proxy-client-ca.key

I0723 13:37:56.991267 13162 ssh_runner.go:101] SSH: sudo mkdir -p /var/lib/minikube/certs/

I0723 13:37:57.051799 13162 ssh_runner.go:182] Transferring 1675 bytes to proxy-client-ca.key

I0723 13:37:57.052851 13162 ssh_runner.go:195] proxy-client-ca.key: copied 1675 bytes

I0723 13:37:57.056561 13162 ssh_runner.go:101] SSH: sudo rm -f /var/lib/minikube/certs/proxy-client.crt

I0723 13:37:57.060823 13162 ssh_runner.go:101] SSH: sudo mkdir -p /var/lib/minikube/certs/

I0723 13:37:57.066688 13162 ssh_runner.go:182] Transferring 1103 bytes to proxy-client.crt

I0723 13:37:57.067673 13162 ssh_runner.go:195] proxy-client.crt: copied 1103 bytes

I0723 13:37:57.070510 13162 ssh_runner.go:101] SSH: sudo rm -f /var/lib/minikube/certs/proxy-client.key

I0723 13:37:57.075159 13162 ssh_runner.go:101] SSH: sudo mkdir -p /var/lib/minikube/certs/

I0723 13:37:57.079722 13162 ssh_runner.go:182] Transferring 1679 bytes to proxy-client.key

I0723 13:37:57.080698 13162 ssh_runner.go:195] proxy-client.key: copied 1679 bytes

I0723 13:37:57.083489 13162 ssh_runner.go:101] SSH: sudo rm -f /var/lib/minikube/kubeconfig

I0723 13:37:57.131365 13162 ssh_runner.go:101] SSH: sudo mkdir -p /var/lib/minikube

I0723 13:37:57.193174 13162 ssh_runner.go:182] Transferring 428 bytes to kubeconfig

I0723 13:37:57.195327 13162 ssh_runner.go:195] kubeconfig: copied 428 bytes

I0723 13:37:57.543937 13162 kubeconfig.go:127] Using kubeconfig: /home/cvirus/.kube/config

🚜 Pulling images ...

I0723 13:37:57.562070 13162 ssh_runner.go:101] SSH: sudo kubeadm config images pull --config /var/lib/kubeadm.yaml

I0723 13:37:58.536730 13162 utils.go:241] > [config/images] Pulled k8s.gcr.io/kube-apiserver:v1.15.0

I0723 13:37:59.408903 13162 utils.go:241] > [config/images] Pulled k8s.gcr.io/kube-controller-manager:v1.15.0

I0723 13:38:00.520771 13162 utils.go:241] > [config/images] Pulled k8s.gcr.io/kube-scheduler:v1.15.0

I0723 13:38:01.541610 13162 utils.go:241] > [config/images] Pulled k8s.gcr.io/kube-proxy:v1.15.0

I0723 13:38:02.470598 13162 utils.go:241] > [config/images] Pulled k8s.gcr.io/pause:3.1

I0723 13:38:03.480028 13162 utils.go:241] > [config/images] Pulled k8s.gcr.io/etcd:3.3.10

I0723 13:38:04.514476 13162 utils.go:241] > [config/images] Pulled k8s.gcr.io/coredns:1.3.1

🚀 Launching Kubernetes ...

I0723 13:38:04.517575 13162 ssh_runner.go:137] Run with output: sudo /usr/bin/kubeadm init --config /var/lib/kubeadm.yaml --ignore-preflight-errors=DirAvailable--etc-kubernetes-manifests,DirAvailable--data-minikube,FileAvailable--etc-kubernetes-manifests-kube-scheduler.yaml,FileAvailable--etc-kubernetes-manifests-kube-apiserver.yaml,FileAvailable--etc-kubernetes-manifests-kube-controller-manager.yaml,FileAvailable--etc-kubernetes-manifests-etcd.yaml,Port-10250,Swap

I0723 13:38:04.561303 13162 utils.go:241] > [init] Using Kubernetes version: v1.15.0

I0723 13:38:04.561361 13162 utils.go:241] > [preflight] Running pre-flight checks

I0723 13:38:04.609116 13162 utils.go:241] ! [WARNING Service-Docker]: docker service is not enabled, please run 'systemctl enable docker.service'

I0723 13:38:04.633839 13162 utils.go:241] ! [WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

I0723 13:38:04.703063 13162 utils.go:241] ! [WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'

I0723 13:38:04.703139 13162 utils.go:241] > [preflight] Pulling images required for setting up a Kubernetes cluster

I0723 13:38:04.703161 13162 utils.go:241] > [preflight] This might take a minute or two, depending on the speed of your internet connection

I0723 13:38:04.703180 13162 utils.go:241] > [preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

I0723 13:38:04.882176 13162 utils.go:241] > [kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

I0723 13:38:04.882951 13162 utils.go:241] > [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

I0723 13:38:04.888917 13162 utils.go:241] > [kubelet-start] Activating the kubelet service

I0723 13:38:04.942974 13162 utils.go:241] > [certs] Using certificateDir folder "/var/lib/minikube/certs/"

I0723 13:38:05.045075 13162 utils.go:241] > [certs] Generating "front-proxy-ca" certificate and key

I0723 13:38:05.188872 13162 utils.go:241] > [certs] Generating "front-proxy-client" certificate and key

I0723 13:38:05.189149 13162 utils.go:241] > [certs] Using existing ca certificate authority

I0723 13:38:05.431593 13162 utils.go:241] > [certs] Generating "apiserver-kubelet-client" certificate and key

I0723 13:38:05.431916 13162 utils.go:241] > [certs] Using existing apiserver certificate and key on disk

I0723 13:38:05.652705 13162 utils.go:241] > [certs] Generating "etcd/ca" certificate and key

I0723 13:38:06.431760 13162 utils.go:241] > [certs] Generating "etcd/peer" certificate and key

I0723 13:38:06.431798 13162 utils.go:241] > [certs] etcd/peer serving cert is signed for DNS names [minikube localhost] and IPs [192.168.99.100 127.0.0.1 ::1]

I0723 13:38:06.604271 13162 utils.go:241] > [certs] Generating "etcd/server" certificate and key

I0723 13:38:06.604330 13162 utils.go:241] > [certs] etcd/server serving cert is signed for DNS names [minikube localhost] and IPs [192.168.99.100 127.0.0.1 ::1]

I0723 13:38:06.711735 13162 utils.go:241] > [certs] Generating "etcd/healthcheck-client" certificate and key

I0723 13:38:06.898354 13162 utils.go:241] > [certs] Generating "apiserver-etcd-client" certificate and key

I0723 13:38:07.055118 13162 utils.go:241] > [certs] Generating "sa" key and public key

I0723 13:38:07.055152 13162 utils.go:241] > [kubeconfig] Using kubeconfig folder "/etc/kubernetes"

I0723 13:38:07.168684 13162 utils.go:241] > [kubeconfig] Writing "admin.conf" kubeconfig file

I0723 13:38:07.241227 13162 utils.go:241] > [kubeconfig] Writing "kubelet.conf" kubeconfig file

I0723 13:38:07.393915 13162 utils.go:241] > [kubeconfig] Writing "controller-manager.conf" kubeconfig file

I0723 13:38:07.475864 13162 utils.go:241] > [kubeconfig] Writing "scheduler.conf" kubeconfig file

I0723 13:38:07.475898 13162 utils.go:241] > [control-plane] Using manifest folder "/etc/kubernetes/manifests"

I0723 13:38:07.475913 13162 utils.go:241] > [control-plane] Creating static Pod manifest for "kube-apiserver"

I0723 13:38:07.481104 13162 utils.go:241] > [control-plane] Creating static Pod manifest for "kube-controller-manager"

I0723 13:38:07.481619 13162 utils.go:241] > [control-plane] Creating static Pod manifest for "kube-scheduler"

I0723 13:38:07.482121 13162 utils.go:241] > [etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

I0723 13:38:07.483279 13162 utils.go:241] > [wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

I0723 13:38:44.985859 13162 utils.go:241] > [apiclient] All control plane components are healthy after 37.501772 seconds

I0723 13:38:44.985890 13162 utils.go:241] > [upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

I0723 13:38:45.013196 13162 utils.go:241] > [kubelet] Creating a ConfigMap "kubelet-config-1.15" in namespace kube-system with the configuration for the kubelets in the cluster

I0723 13:38:45.554377 13162 utils.go:241] > [upload-certs] Skipping phase. Please see --upload-certs

I0723 13:38:45.554409 13162 utils.go:241] > [mark-control-plane] Marking the node minikube as control-plane by adding the label "node-role.kubernetes.io/master=''"

I0723 13:38:46.060855 13162 utils.go:241] > [bootstrap-token] Using token: hhn9t1.6lgpo06mocszm3qn

I0723 13:38:46.060884 13162 utils.go:241] > [bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

I0723 13:38:46.109352 13162 utils.go:241] > [bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

I0723 13:38:46.119341 13162 utils.go:241] > [bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

I0723 13:38:46.127578 13162 utils.go:241] > [bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

I0723 13:38:46.132878 13162 utils.go:241] > [bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

I0723 13:38:46.222204 13162 utils.go:241] > [addons] Applied essential addon: CoreDNS

I0723 13:38:46.499265 13162 utils.go:241] > [addons] Applied essential addon: kube-proxy

I0723 13:38:46.500839 13162 utils.go:241] > Your Kubernetes control-plane has initialized successfully!

I0723 13:38:46.500876 13162 utils.go:241] > To start using your cluster, you need to run the following as a regular user:

I0723 13:38:46.500896 13162 utils.go:241] > mkdir -p $HOME/.kube

I0723 13:38:46.500921 13162 utils.go:241] > sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

I0723 13:38:46.500946 13162 utils.go:241] > sudo chown $(id -u):$(id -g) $HOME/.kube/config

I0723 13:38:46.500976 13162 utils.go:241] > You should now deploy a pod network to the cluster.

I0723 13:38:46.500998 13162 utils.go:241] > Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

I0723 13:38:46.501025 13162 utils.go:241] > https://kubernetes.io/docs/concepts/cluster-administration/addons/

I0723 13:38:46.501056 13162 utils.go:241] > You can now join any number of control-plane nodes by copying certificate authorities

I0723 13:38:46.501078 13162 utils.go:241] > and service account keys on each node and then running the following as root:

I0723 13:38:46.501094 13162 utils.go:241] > kubeadm join localhost:8443 --token hhn9t1.6lgpo06mocszm3qn \

I0723 13:38:46.501131 13162 utils.go:241] > --discovery-token-ca-cert-hash sha256:612c32ae519f64de561358522787e62a6b0a52dc9499e8dda85dfffd7943bc15 \

I0723 13:38:46.501150 13162 utils.go:241] > --experimental-control-plane

I0723 13:38:46.501181 13162 utils.go:241] > Then you can join any number of worker nodes by running the following on each as root:

I0723 13:38:46.501204 13162 utils.go:241] > kubeadm join localhost:8443 --token hhn9t1.6lgpo06mocszm3qn \

I0723 13:38:46.501241 13162 utils.go:241] > --discovery-token-ca-cert-hash sha256:612c32ae519f64de561358522787e62a6b0a52dc9499e8dda85dfffd7943bc15

I0723 13:38:46.547105 13162 kubeadm.go:240] Configuring cluster permissions ...

I0723 13:39:01.971798 13162 ssh_runner.go:137] Run with output: cat /proc/$(pgrep kube-apiserver)/oom_adj

I0723 13:39:01.979794 13162 utils.go:241] > 16

I0723 13:39:01.979845 13162 kubeadm.go:257] apiserver oom_adj: 16

I0723 13:39:01.979859 13162 kubeadm.go:262] adjusting apiserver oom_adj to -10

I0723 13:39:01.979865 13162 ssh_runner.go:101] SSH: echo -10 | sudo tee /proc/$(pgrep kube-apiserver)/oom_adj

I0723 13:39:01.989426 13162 utils.go:241] > -10

⌛ Verifying: apiserverI0723 13:39:01.993029 13162 kubeadm.go:381] Waiting for apiserver ...

I0723 13:39:02.000397 13162 kubeadm.go:142] https://192.168.99.100:8443/healthz response: <nil> &{Status:200 OK StatusCode:200 Proto:HTTP/1.1 ProtoMajor:1 ProtoMinor:1 Header:map[Content-Length:[2] Content-Type:[text/plain; charset=utf-8] Date:[Tue, 23 Jul 2019 11:39:01 GMT] X-Content-Type-Options:[nosniff]] Body:0xc000350880 ContentLength:2 TransferEncoding:[] Close:false Uncompressed:false Trailer:map[] Request:0xc000a0e500 TLS:0xc000a709a0}

I0723 13:39:02.000436 13162 kubeadm.go:384] apiserver status: Running, err: <nil>

proxyI0723 13:39:02.000473 13162 kubernetes.go:125] Waiting for pod with label "kube-system" in ns "k8s-app=kube-proxy" ...

I0723 13:39:02.005944 13162 kubernetes.go:136] Found 1 Pods for label selector k8s-app=kube-proxy

etcdI0723 13:39:02.005978 13162 kubernetes.go:125] Waiting for pod with label "kube-system" in ns "component=etcd" ...

I0723 13:39:02.009196 13162 kubernetes.go:136] Found 0 Pods for label selector component=etcd

I0723 13:39:44.521440 13162 kubernetes.go:136] Found 1 Pods for label selector component=etcd

schedulerI0723 13:39:45.512168 13162 kubernetes.go:125] Waiting for pod with label "kube-system" in ns "component=kube-scheduler" ...

I0723 13:39:45.513849 13162 kubernetes.go:136] Found 0 Pods for label selector component=kube-scheduler

I0723 13:40:04.532929 13162 kubernetes.go:136] Found 1 Pods for label selector component=kube-scheduler

controllerI0723 13:40:05.517974 13162 kubernetes.go:125] Waiting for pod with label "kube-system" in ns "component=kube-controller-manager" ...

I0723 13:40:05.520723 13162 kubernetes.go:136] Found 1 Pods for label selector component=kube-controller-manager

dnsI0723 13:40:05.520756 13162 kubernetes.go:125] Waiting for pod with label "kube-system" in ns "k8s-app=kube-dns" ...

I0723 13:40:05.524070 13162 kubernetes.go:136] Found 2 Pods for label selector k8s-app=kube-dns

🏄 Done! kubectl is now configured to use "minikube"

➜ k get pods

error: You must be logged in to the server (Unauthorized)

➜ kubectl config view --minify | grep /.minikube | xargs stat

➜ minikube status

host: Running

kubelet: Running

apiserver: Running

kubectl: Correctly Configured: pointing to minikube-vm at 192.168.99.100

➜ kubectl get pods

error: You must be logged in to the server (Unauthorized)

1:56:42 pm CEST in ~ at ☸️ minikube

➜ kubectl config view --minify | grep /.minikube | xargs stat

stat: cannot stat 'certificate-authority:': No such file or directory

File: /home/cvirus/.minikube/ca.crt

Size: 1066 Blocks: 8 IO Block: 4096 regular file

Device: 10303h/66307d Inode: 4722366 Links: 1

Access: (0644/-rw-r--r--) Uid: ( 1000/ cvirus) Gid: ( 1000/ cvirus)

Access: 2019-07-23 13:37:55.653630843 +0200

Modify: 2019-07-23 13:37:55.489628320 +0200

Change: 2019-07-23 13:37:55.489628320 +0200

Birth: -

stat: cannot stat 'client-certificate:': No such file or directory

File: /home/cvirus/.minikube/client.crt

Size: 1103 Blocks: 8 IO Block: 4096 regular file

Device: 10303h/66307d Inode: 4722370 Links: 1

Access: (0644/-rw-r--r--) Uid: ( 1000/ cvirus) Gid: ( 1000/ cvirus)

Access: 2019-07-23 13:38:46.546375327 +0200

Modify: 2019-07-23 13:37:55.765632566 +0200

Change: 2019-07-23 13:37:55.765632566 +0200

Birth: -

stat: cannot stat 'client-key:': No such file or directory

File: /home/cvirus/.minikube/client.key

Size: 1679 Blocks: 8 IO Block: 4096 regular file

Device: 10303h/66307d Inode: 4722371 Links: 1

Access: (0600/-rw-------) Uid: ( 1000/ cvirus) Gid: ( 1000/ cvirus)

Access: 2019-07-23 13:38:46.546375327 +0200

Modify: 2019-07-23 13:37:55.765632566 +0200

Change: 2019-07-23 13:37:55.765632566 +0200

Birth: -
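A side note on the check above: the `stat: cannot stat 'certificate-authority:'` lines are harmless. They appear only because the kubeconfig key names are passed to `stat` along with the paths. A minimal sketch of a cleaner variant follows. The helper name `minikube_cert_paths` is made up for illustration, and it assumes the paths contain no spaces.

```shell
# Hypothetical helper: read kubeconfig text on stdin and print only the
# file paths that live under .minikube, dropping the "key:" part of each line.
minikube_cert_paths() {
  # $NF is the last whitespace-separated field, i.e. the path itself.
  awk '/\/\.minikube/ { print $NF }'
}

# Usage (assumes kubectl is installed and the minikube context is active):
#   kubectl config view --minify | minikube_cert_paths | xargs stat
```

This only tidies the output. It does not change what is being checked, namely that the files referenced by the kubeconfig exist and were recently regenerated.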

@CVirus

Thanks for providing more info; we will look into it! I am curious: are you using a corporate network?

@medyagh Yes I am. But I face the same problem at home too.

Do you want me to test again from scratch at home to verify that it is also reproducible outside of the corporate network and VPN?

@medyagh The issue is reproducible on my home network with a minikube created from scratch.

I was able to work around the issue by using the kvm2 driver:

minikube start --vm-driver kvm2

@CVirus did you find any work around for this issue?

@gabolucuy Yes, read my above comment ^^

Well, but one that doesn't use kvm?

@gabolucuy No, I'm sorry, this is the only way I can run minikube now. Possibly the maintainers aren't giving this bug much attention because it looks like a very rare corner case.

@CVirus OK, thanks. My workaround was to completely remove minikube and reinstall the latest version, after which the error: You must be logged in to the server (Unauthorized) message disappeared. I hope I don't get the same error in the future. I think it started because last night I didn't shut down minikube and just put my PC to sleep, and this morning I started getting the error.

@gabolucuy I just did the same and am still getting the error.

@CVirus Are you on a Linux distro? If you are, I used this comment to remove minikube: https://github.com/kubernetes/minikube/issues/1043#issuecomment-353049052

Can anyone confirm whether they have seen this with minikube v1.4?

I just tested on v1.4 and was able to reproduce it only the first time I tried. Then I ran rm -rf ~/.minikube and tried again, and now I no longer get the Unauthorized error. So it looks like it is working.

Let's wait for more confirmations from others facing the same issue if possible.

@tstromberg Issue resurfaced again with v1.4

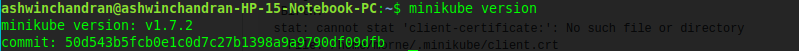

Issue still reproducible here with minikube version: v1.5.2

+1

I can also reproduce it with minikube v1.6.2, running k8s 1.16.0 on Ubuntu 18.04.

Deleting the cache dirs in ~/.kube might help.

Getting the same issue with v1.7.2. Deleting cache dirs didn't help.

The issue appeared after resuming from pm-suspend (the same as gabolucuy above, it seems). Running on a home PC with Ubuntu 18.

Updated to v1.7.2, but still the same issue.

Using the kvm2 driver does fix it, but it's not ideal.

the error

my os

@ZephSibley I can't thank you enough. The only thing that solved this problem for me was installing the kvm2 driver. Minikube worked perfectly and the error did not appear again.

I referred to this link to set up KVM as a replacement for VirtualBox:

https://computingforgeeks.com/how-to-run-minikube-on-kvm/

We were facing a similar issue. Deleting the http-cache helped:

rm -rf ~/.kube/http-cache/*

I hit this issue when I deleted minikube and started it again. Clearing ~/.kube/http-cache/ helped. It seems you need to clear it every time you rebuild minikube from scratch with minikube delete and then start minikube again.
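For anyone who wants to script the cache cleanup described above, here is a minimal sketch. The function name `clear_kube_http_cache` and its optional directory argument are assumptions for illustration. The only thing taken from this thread is that the cache lives in ~/.kube/http-cache.

```shell
# Hypothetical helper: empty the kubectl HTTP cache directory.
# Optional argument: the kube config directory (defaults to ~/.kube).
clear_kube_http_cache() {
  kube_dir="${1:-$HOME/.kube}"
  cache_dir="$kube_dir/http-cache"
  if [ -d "$cache_dir" ]; then
    # ${cache_dir:?} makes the shell abort if the variable is ever empty,
    # so the rm can never expand to "/*".
    rm -rf "${cache_dir:?}"/*
    echo "cleared $cache_dir"
  else
    echo "no cache directory at $cache_dir"
  fi
}
```

A possible sequence, going by the reports above, would be to run minikube delete, then clear_kube_http_cache, then minikube start. Whether this helps seems to vary, since at least one commenter reported that deleting the cache dirs made no difference.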

Is anyone able to repeat this with minikube v1.9?

We recently moved the location of certificates, so I'm curious if it affected the caching at all.

Meanwhile, have you tried the newest driver, the Docker driver, with the latest version of minikube?

You could try:

minikube delete

minikube start --driver=docker

For more information on the Docker driver, check out:

https://minikube.sigs.k8s.io/docs/drivers/docker/

Regrettably, there isn't enough information in this issue to make it actionable, and a long enough duration has passed, so this issue is likely difficult to replicate. If you can provide any additional details, such as:

The exact minikube start command line used: preferably with --alsologtostderr -v=8 added.

The full output of the command

The full output of "minikube logs"

Please feel free to do so at any point. Thank you for sharing your experience!
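The details requested above could be gathered in one go with something like the following sketch. The function name `collect_minikube_diagnostics`, the output directory, and the log file names are made up for illustration. The minikube flags are the ones quoted in the request.

```shell
# Hypothetical helper: capture the output the maintainers asked for.
# Optional argument: directory to write the logs into.
collect_minikube_diagnostics() {
  out_dir="${1:-./minikube-diagnostics}"
  mkdir -p "$out_dir"
  # Verbose start output; stderr is merged in because --alsologtostderr
  # sends the detailed log lines there.
  minikube start --alsologtostderr -v=8 > "$out_dir/start.log" 2>&1
  # Full cluster logs after the start attempt.
  minikube logs > "$out_dir/minikube.log" 2>&1
}
```

Add any extra flags from your own start command (for example a driver selection) to the minikube start line, so that the captured output matches the exact command that failed.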
