Minikube: Localkube crashed on Hyper-V

Minikube version (use minikube version): v0.21.0

Environment:

- OS (e.g. from /etc/os-release): Windows 10 Enterprise 10.0.15063 build 15063

- VM Driver (e.g. cat ~/.minikube/machines/minikube/config.json | grep DriverName): hyperv

- ISO version (e.g. cat ~/.minikube/machines/minikube/config.json | grep -i ISO or minikube ssh cat /etc/VERSION): minikube-v0.23.0.iso

- Install tools: Chocolatey

- Others:

What happened:

After restarting my laptop, or after leaving it idle for a while, minikube appears to stop responding and cannot be restarted.

Attempting to open the dashboard fails with:

minikube dashboard

Could not find finalized endpoint being pointed to by kubernetes-dashboard: Error validating service: Error getting service kubernetes-dashboard: Get https://192.168.0.185:8443/api/v1/namespaces/kube-system/services/kubernetes-dashboard: dial tcp 192.168.0.185:8443: connectex: No connection could be made because the target machine actively refused it.
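The wording "actively refused" carries information: the VM's IP answered, but nothing accepted connections on port 8443, which points at the API server inside the VM rather than at host routing. A connection that simply times out would point at networking instead. A minimal Python sketch of that distinction, assuming 8443 is the API server port as in the error above; `probe` is a hypothetical helper, not part of minikube:

```python
import socket


def probe(host: str, port: int = 8443, timeout: float = 3.0) -> str:
    """Classify a TCP connection attempt to host:port.

    "open"    - something accepted the connection (API server likely up)
    "refused" - the host answered with a reset: VM reachable, nothing listening
    "timeout" - no answer at all: more likely a switch/routing problem
    "error"   - any other socket error (e.g. network unreachable)
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return "open"
    except ConnectionRefusedError:
        return "refused"
    except socket.timeout:
        return "timeout"
    except OSError:
        return "error"
```

For example, probe("192.168.0.185") with the address from the error above should return "refused" in the situation described here.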

Minikube sometimes starts successfully after running:

minikube stop
minikube delete
rd /s ~/.minikube
minikube start --vm-driver hyperv --hyperv-virtual-switch External --alsologtostderr

This is not always successful, and I'm unable to determine why. Typically the process freezes after downloading the ISO.
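The reset sequence above can be scripted so that it is at least repeatable while hunting for the runs where it hangs. A minimal Python sketch, assuming the switch is named External as in the command above; it swaps rd /s for shutil.rmtree, and `reset_minikube` is a hypothetical helper. With the default dry_run=True it only returns the plan. Note that deleting ~/.minikube also discards the cached ISO, so every run downloads it again.

```python
import shutil
import subprocess
from pathlib import Path


def reset_minikube(switch="External", home=None, dry_run=True):
    """Mirror the manual reset: stop, delete, wipe ~/.minikube, start again.

    Returns the plan as (kind, argument) pairs. With dry_run=False the steps
    are executed; only a failing 'minikube start' raises, since stop/delete
    may legitimately fail when the VM is already gone.
    """
    cache = (Path(home) if home else Path.home()) / ".minikube"
    plan = [
        ("run", ["minikube", "stop"]),
        ("run", ["minikube", "delete"]),
        ("rmtree", cache),
        ("run", ["minikube", "start", "--vm-driver", "hyperv",
                 "--hyperv-virtual-switch", switch, "--alsologtostderr"]),
    ]
    if not dry_run:
        for kind, arg in plan:
            if kind == "rmtree":
                # Equivalent of 'rd /s' on the minikube state directory
                shutil.rmtree(arg, ignore_errors=True)
            else:
                subprocess.run(arg, check=(arg[1] == "start"))
    return plan
```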

What you expected to happen:

I expect minikube to start successfully.

How to reproduce it (as minimally and precisely as possible):

Restart the machine or stop using the dashboard/kubectl for a while.

Output of minikube logs (if applicable):

Anything else we need to know:


Minikube logs attached after yet another restart

The cluster seems to be up and running, but the route seems to be broken. Can you run minikube status?

It took me a while to reproduce, but here it is:

D:\Checkout\Kubernetes\poc-kubernetes-service\config\k8s (master)

λ kubectl apply -f .\poc-kubernetes-service.deployment.yml

Unable to connect to the server: dial tcp 10.0.0.15:8443: connectex: No connection could be made because the target machine actively refused it.

D:\Checkout\Kubernetes\poc-kubernetes-service\config\k8s (master)

λ minikube status

minikube: Running

localkube: Stopped

kubectl: Correctly Configured: pointing to minikube-vm at 10.0.0.15
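The status output above shows the telling combination: the VM reports Running while localkube reports Stopped, so the refused connections come from the API server being down rather than from the VM. A small Python sketch that parses that output and flags the combination; the field names are taken from the status lines quoted in this thread (the later output in this thread prints cluster: instead of localkube:), and `parse_status` and `api_server_down` are hypothetical helpers:

```python
def parse_status(text: str) -> dict:
    """Turn 'name: value' lines from 'minikube status' into a dict."""
    status = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:  # skip blank or malformed lines
            status[key.strip()] = value.strip()
    return status


def api_server_down(status: dict) -> bool:
    """True when the VM is running but the Kubernetes component is not."""
    vm_running = status.get("minikube") == "Running"
    component = status.get("localkube", status.get("cluster"))
    return vm_running and component != "Running"
```

It would not catch the later case in this thread where status reports Running for everything and connections are still refused.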

I have been getting this a ton on OS X 10.12.6 with VirtualBox and the v0.23.0 ISO.

My localkube keeps crashing while minikube itself stays running.

Doing minikube stop and minikube start fixes it.

I've run minikube with verbose logging (level 5), and although there is a lot of output in the log, I never see anything consistent or unusual before or after the crash.

I should also note that our whole team sees the same issue: random localkube crashes throughout the day.
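Since the verbose logs show nothing consistent, another angle is to record exactly when the crashes happen and line those times up against host events such as sleep and resume. A minimal Python polling sketch; `watch` is hypothetical, and `check` stands for any callable that returns True while the cluster is healthy (for example, one that attempts a TCP connection to the VM's IP on port 8443):

```python
import time
from datetime import datetime


def watch(check, interval=30.0, max_checks=None,
          sleep=time.sleep, now=datetime.now):
    """Poll check() every `interval` seconds.

    Returns the timestamp of the first failed check, or None if
    `max_checks` checks all succeed. `sleep` and `now` are injectable
    so the loop can be exercised without waiting.
    """
    done = 0
    while max_checks is None or done < max_checks:
        if not check():
            return now()
        done += 1
        sleep(interval)
    return None
```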

Yeah, in my case I need to reinstall minikube using choco and manually remove the VM from Hyper-V to get it up and running again.

Can the title be changed to reflect this? It seems to be a more general issue.

@r2d4 can you share an update, if possible, please?

Could this be related to the dynamic Hyper V memory management problem? https://github.com/kubernetes/minikube/blob/master/docs/drivers.md#hyperV-driver (look for dynamic memory)
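Whether Dynamic Memory is on for the minikube VM can be checked from PowerShell with (Get-VM -Name minikube).DynamicMemoryEnabled. A Python sketch that wraps that call, assuming the VM has minikube's default name minikube, the Hyper-V PowerShell module is installed, and the prompt is elevated; the helper names are hypothetical:

```python
import subprocess


def dynamic_memory_cmd(vm_name: str = "minikube") -> list:
    """PowerShell command that prints True/False for the VM's Dynamic Memory."""
    return ["powershell", "-NoProfile", "-Command",
            f"(Get-VM -Name '{vm_name}').DynamicMemoryEnabled"]


def parse_bool(output: str) -> bool:
    """Parse PowerShell's 'True'/'False' output (tolerates CRLF)."""
    value = output.strip().lower()
    if value not in ("true", "false"):
        raise ValueError(f"unexpected PowerShell output: {output!r}")
    return value == "true"


def dynamic_memory_enabled(vm_name: str = "minikube") -> bool:
    """Run the query on a Windows host with Hyper-V (needs admin rights)."""
    result = subprocess.run(dynamic_memory_cmd(vm_name),
                            capture_output=True, text=True, check=True)
    return parse_bool(result.stdout)
```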

Please note that ever since opening this issue, I was never able to successfully start minikube on my machine :(

I'm trying to run the latest minikube (v22) and get the following output:

C:\Users\mmisztal

λ minikube start --vm-driver=hyperv --hyperv-virtual-switch=External --alsologtostderr

W0916 19:17:09.171858 7848 root.go:147] Error reading config file at C:\Users\mmisztal\.minikube\config\config.json: open C:\Users\mmisztal\.minikube\config\config.json: The system cannot find the file specified.

I0916 19:17:09.176890 7848 notify.go:112] Checking for updates...

I0916 19:17:09.664256 7848 cache_images.go:154] Attempting to cache image: gcr.io/google_containers/pause-amd64:3.0 at C_\Users\mmisztal\.minikube\cache\images\gcr.io\google_containers\pause-amd64_3.0

I0916 19:17:09.664256 7848 cache_images.go:154] Attempting to cache image: gcr.io/google_containers/k8s-dns-dnsmasq-nanny-amd64:1.14.4 at C_\Users\mmisztal\.minikube\cache\images\gcr.io\google_containers\k8s-dns-dnsmasq-nanny-amd64_1.14.4

Starting local Kubernetes v1.7.5 cluster...

I0916 19:17:09.664256 7848 cache_images.go:154] Attempting to cache image: gcr.io/google_containers/k8s-dns-kube-dns-amd64:1.14.4 at C_\Users\mmisztal\.minikube\cache\images\gcr.io\google_containers\k8s-dns-kube-dns-amd64_1.14.4

Starting VM...

I0916 19:17:09.664256 7848 cache_images.go:154] Attempting to cache image: gcr.io/google_containers/k8s-dns-sidecar-amd64:1.14.4 at C_\Users\mmisztal\.minikube\cache\images\gcr.io\google_containers\k8s-dns-sidecar-amd64_1.14.4

I0916 19:17:09.664256 7848 cache_images.go:154] Attempting to cache image: gcr.io/google_containers/kubernetes-dashboard-amd64:v1.6.3 at C_\Users\mmisztal\.minikube\cache\images\gcr.io\google_containers\kubernetes-dashboard-amd64_v1.6.3

I0916 19:17:09.664256 7848 cache_images.go:154] Attempting to cache image: gcr.io/google-containers/kube-addon-manager:v6.4-beta.2 at C_\Users\mmisztal\.minikube\cache\images\gcr.io\google-containers\kube-addon-manager_v6.4-beta.2

I0916 19:17:09.665718 7848 cluster.go:69] Machine exists!

I0916 19:17:09.667218 7848 cache_images.go:77] Successfully cached all images.

I0916 19:17:10.564110 7848 cluster.go:76] Machine state: Running

I0916 19:17:23.375193 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\ca.pem

I0916 19:17:23.379700 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:17:23.392157 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\server.pem

I0916 19:17:23.396654 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:17:23.408176 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\server-key.pem

I0916 19:17:23.417678 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:17:45.213665 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\ca.pem

I0916 19:17:45.218679 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:17:45.228163 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\server.pem

I0916 19:17:45.232162 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:17:45.245170 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\server-key.pem

I0916 19:17:45.250666 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:18:07.677894 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\ca.pem

I0916 19:18:07.683476 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:18:07.694445 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\server.pem

I0916 19:18:07.698940 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:18:07.708415 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\server-key.pem

I0916 19:18:07.712916 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:18:28.904863 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\ca.pem

I0916 19:18:28.909958 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:18:28.919897 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\server.pem

I0916 19:18:28.923919 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:18:28.932495 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\server-key.pem

I0916 19:18:28.937992 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:18:49.663049 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\server.pem

I0916 19:18:49.674083 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:18:49.685551 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\server-key.pem

I0916 19:18:49.690083 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:18:49.700047 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\ca.pem

I0916 19:18:49.705136 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

E0916 19:19:06.730480 7848 start.go:143] Error starting host: Temporary Error: Error configuring auth on host: Temporary Error: ssh command error:

command : sudo systemctl -f restart docker

err : Process exited with status 1

output : Job for docker.service failed because the control process exited with error code.

See "systemctl status docker.service" and "journalctl -xe" for details.

Temporary Error: ssh command error:

command : sudo systemctl -f restart docker

err : Process exited with status 1

output : Job for docker.service failed because the control process exited with error code.

See "systemctl status docker.service" and "journalctl -xe" for details.

Temporary Error: ssh command error:

command : sudo systemctl -f restart docker

err : Process exited with status 1

output : Job for docker.service failed because the control process exited with error code.

See "systemctl status docker.service" and "journalctl -xe" for details.

Temporary Error: ssh command error:

command : sudo systemctl -f restart docker

err : Process exited with status 1

output : Job for docker.service failed because the control process exited with error code.

See "systemctl status docker.service" and "journalctl -xe" for details.

Temporary Error: ssh command error:

command : sudo systemctl -f restart docker

err : Process exited with status 1

output : Job for docker.service failed because the control process exited with error code.

See "systemctl status docker.service" and "journalctl -xe" for details.

.

Retrying.

I0916 19:19:08.737015 7848 cluster.go:69] Machine exists!

I0916 19:19:09.678200 7848 cluster.go:76] Machine state: Running

I0916 19:19:23.100870 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\ca.pem

I0916 19:19:23.104857 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:19:23.114768 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\server.pem

I0916 19:19:23.118968 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:19:23.131934 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\server-key.pem

I0916 19:19:23.136935 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:19:43.869885 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\ca.pem

I0916 19:19:43.874022 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:19:43.884458 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\server.pem

I0916 19:19:43.892001 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:19:43.910003 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\server-key.pem

I0916 19:19:43.915002 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:20:04.840005 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\ca.pem

I0916 19:20:04.845004 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:20:04.856034 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\server.pem

I0916 19:20:04.860562 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:20:04.871993 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\server-key.pem

I0916 19:20:04.876006 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:20:25.701465 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\ca.pem

I0916 19:20:25.705498 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:20:25.718000 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\server.pem

I0916 19:20:25.722702 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:20:25.733753 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\server-key.pem

I0916 19:20:25.738085 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:20:46.299894 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\server.pem

I0916 19:20:46.305931 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:20:46.315895 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\server-key.pem

I0916 19:20:46.321411 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:20:46.331424 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\ca.pem

I0916 19:20:46.336468 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

E0916 19:21:02.719572 7848 start.go:143] Error starting host: Temporary Error: Error configuring auth on host: Temporary Error: ssh command error:

command : sudo systemctl -f restart docker

err : Process exited with status 1

output : Job for docker.service failed because the control process exited with error code.

See "systemctl status docker.service" and "journalctl -xe" for details.

Temporary Error: ssh command error:

command : sudo systemctl -f restart docker

err : Process exited with status 1

output : Job for docker.service failed because the control process exited with error code.

See "systemctl status docker.service" and "journalctl -xe" for details.

Temporary Error: ssh command error:

command : sudo systemctl -f restart docker

err : Process exited with status 1

output : Job for docker.service failed because the control process exited with error code.

See "systemctl status docker.service" and "journalctl -xe" for details.

Temporary Error: ssh command error:

command : sudo systemctl -f restart docker

err : Process exited with status 1

output : Job for docker.service failed because the control process exited with error code.

See "systemctl status docker.service" and "journalctl -xe" for details.

Temporary Error: ssh command error:

command : sudo systemctl -f restart docker

err : Process exited with status 1

output : Job for docker.service failed because the control process exited with error code.

See "systemctl status docker.service" and "journalctl -xe" for details.

.

Retrying.

I0916 19:21:04.722833 7848 cluster.go:69] Machine exists!

I0916 19:21:05.654388 7848 cluster.go:76] Machine state: Running

I0916 19:21:18.835908 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\ca.pem

I0916 19:21:18.840908 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:21:18.851408 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\server.pem

I0916 19:21:18.855932 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:21:18.867965 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\server-key.pem

I0916 19:21:18.872959 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:21:40.500452 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\ca.pem

I0916 19:21:40.506435 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:21:40.516435 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\server.pem

I0916 19:21:40.522952 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:21:40.531938 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\server-key.pem

I0916 19:21:40.536944 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:22:01.473287 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\server.pem

I0916 19:22:01.484788 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:22:01.500299 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\server-key.pem

I0916 19:22:01.505288 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:22:01.521285 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\ca.pem

I0916 19:22:01.529301 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:22:22.487798 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\server-key.pem

I0916 19:22:22.492799 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:22:22.503295 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\ca.pem

I0916 19:22:22.507797 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:22:22.516797 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\server.pem

I0916 19:22:22.520798 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:22:43.717315 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\ca.pem

I0916 19:22:43.723292 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:22:43.736295 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\server.pem

I0916 19:22:43.741684 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker

I0916 19:22:43.755708 7848 ssh_runner.go:57] Run: sudo rm -f \etc\docker\server-key.pem

I0916 19:22:43.761169 7848 ssh_runner.go:57] Run: sudo mkdir -p \etc\docker
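Every retry in the log above dies at sudo systemctl -f restart docker, and the error itself points at systemctl status docker.service and journalctl -xe. Those can be read without an interactive shell by passing the command to minikube ssh, as the issue template does with minikube ssh cat /etc/VERSION. A Python sketch that builds and runs them; the helper names and the 50-line limit are assumptions, and the same helper works for localkube.service:

```python
import subprocess


def vm_diag_cmds(unit: str = "docker.service", lines: int = 50) -> list:
    """Commands that show why a systemd unit failed inside the minikube VM."""
    return [
        ["minikube", "ssh", f"sudo systemctl status {unit} --no-pager"],
        ["minikube", "ssh", f"sudo journalctl -u {unit} --no-pager -n {lines}"],
    ]


def run_diag(unit: str = "docker.service", lines: int = 50) -> None:
    """Run each diagnostic command and print whatever it returns."""
    for cmd in vm_diag_cmds(unit, lines):
        # systemctl status exits non-zero for a failed unit, so no check=True
        result = subprocess.run(cmd, capture_output=True, text=True)
        print("$", " ".join(cmd))
        print(result.stdout or result.stderr)
```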

I am having the same problem with Hyper-V and VirtualBox. I was originally plagued by the problem with Hyper-V in 22.0; today's release starts, but once it is up it has this problem.

Not sure what to do at this point. It refuses to connect for anything kubectl related, or the dashboard.

λ minikube start --alsologtostderr

W0918 13:22:14.577650 11976 root.go:148] Error reading config file at C:\Users\yulian\.minikube\config\config.json: open C:\Users\yulian\.minikube\config\config.json: The system cannot find the file specified.

I0918 13:22:14.579657 11976 notify.go:109] Checking for updates...

I0918 13:22:14.642792 11976 cache_images.go:150] Attempting to cache image: gcr.io/google_containers/pause-amd64:3.0 at C_\Users\yulian\.minikube\cache\images\gcr.io\google_containers\pause-amd64_3.0

I0918 13:22:14.642792 11976 cache_images.go:150] Attempting to cache image: gcr.io/google_containers/kubernetes-dashboard-amd64:v1.6.3 at C_\Users\yulian\.minikube\cache\images\gcr.io\google_containers\kubernetes-dashboard-amd64_v1.6.3

Starting local Kubernetes v1.7.5 cluster...

Starting VM...

I0918 13:22:14.642792 11976 cache_images.go:150] Attempting to cache image: gcr.io/google_containers/k8s-dns-kube-dns-amd64:1.14.4 at C_\Users\yulian\.minikube\cache\images\gcr.io\google_containers\k8s-dns-kube-dns-amd64_1.14.4

I0918 13:22:14.642792 11976 cache_images.go:150] Attempting to cache image: gcr.io/google_containers/k8s-dns-dnsmasq-nanny-amd64:1.14.4 at C_\Users\yulian\.minikube\cache\images\gcr.io\google_containers\k8s-dns-dnsmasq-nanny-amd64_1.14.4

I0918 13:22:14.642792 11976 cache_images.go:150] Attempting to cache image: gcr.io/google_containers/k8s-dns-sidecar-amd64:1.14.4 at C_\Users\yulian\.minikube\cache\images\gcr.io\google_containers\k8s-dns-sidecar-amd64_1.14.4

I0918 13:22:14.642792 11976 cache_images.go:150] Attempting to cache image: gcr.io/google-containers/kube-addon-manager:v6.4-beta.2 at C_\Users\yulian\.minikube\cache\images\gcr.io\google-containers\kube-addon-manager_v6.4-beta.2

I0918 13:22:14.642792 11976 cluster.go:67] Machine exists!

I0918 13:22:14.643798 11976 cache_images.go:73] Successfully cached all images.

I0918 13:22:14.923932 11976 cluster.go:74] Machine state: Stopped

I0918 13:22:51.042751 11976 ssh_runner.go:57] Run: sudo rm -f /etc/docker/ca.pem

I0918 13:22:51.045694 11976 ssh_runner.go:57] Run: sudo mkdir -p /etc/docker

I0918 13:22:51.054526 11976 ssh_runner.go:57] Run: sudo rm -f /etc/docker/server.pem

I0918 13:22:51.059432 11976 ssh_runner.go:57] Run: sudo mkdir -p /etc/docker

I0918 13:22:51.070227 11976 ssh_runner.go:57] Run: sudo rm -f /etc/docker/server-key.pem

I0918 13:22:51.083965 11976 ssh_runner.go:57] Run: sudo mkdir -p /etc/docker

Getting VM IP address...

Moving files into cluster...

I0918 13:22:52.652434 11976 cache_images.go:103] Loading image from cache at C_\Users\yulian\.minikube\cache\images\gcr.io\google_containers\pause-amd64_3.0

I0918 13:22:52.653416 11976 cache_images.go:103] Loading image from cache at C_\Users\yulian\.minikube\cache\images\gcr.io\google_containers\k8s-dns-dnsmasq-nanny-amd64_1.14.4

I0918 13:22:52.653416 11976 ssh_runner.go:57] Run: sudo rm -f /tmp/pause-amd64_3.0

I0918 13:22:52.653416 11976 ssh_runner.go:57] Run: sudo rm -f /tmp/k8s-dns-dnsmasq-nanny-amd64_1.14.4

I0918 13:22:52.653416 11976 cache_images.go:103] Loading image from cache at C_\Users\yulian\.minikube\cache\images\gcr.io\google_containers\k8s-dns-sidecar-amd64_1.14.4

I0918 13:22:52.653416 11976 ssh_runner.go:57] Run: sudo rm -f /tmp/k8s-dns-sidecar-amd64_1.14.4

I0918 13:22:52.653416 11976 cache_images.go:103] Loading image from cache at C_\Users\yulian\.minikube\cache\images\gcr.io\google_containers\kubernetes-dashboard-amd64_v1.6.3

I0918 13:22:52.660284 11976 ssh_runner.go:57] Run: sudo rm -f /tmp/kubernetes-dashboard-amd64_v1.6.3

I0918 13:22:52.653416 11976 cache_images.go:103] Loading image from cache at C_\Users\yulian\.minikube\cache\images\gcr.io\google-containers\kube-addon-manager_v6.4-beta.2

I0918 13:22:52.661266 11976 ssh_runner.go:57] Run: sudo rm -f /tmp/kube-addon-manager_v6.4-beta.2

I0918 13:22:52.659304 11976 ssh_runner.go:57] Run: sudo mkdir -p /tmp

I0918 13:22:52.653416 11976 cache_images.go:103] Loading image from cache at C_\Users\yulian\.minikube\cache\images\gcr.io\google_containers\k8s-dns-kube-dns-amd64_1.14.4

I0918 13:22:52.662248 11976 ssh_runner.go:57] Run: sudo rm -f /tmp/k8s-dns-kube-dns-amd64_1.14.4

I0918 13:22:52.657341 11976 ssh_runner.go:57] Run: sudo mkdir -p /tmp

I0918 13:22:52.664210 11976 ssh_runner.go:57] Run: sudo mkdir -p /tmp

I0918 13:22:52.666173 11976 ssh_runner.go:57] Run: sudo mkdir -p /tmp

I0918 13:22:52.673042 11976 ssh_runner.go:57] Run: sudo mkdir -p /tmp

I0918 13:22:52.675985 11976 ssh_runner.go:57] Run: sudo mkdir -p /tmp

I0918 13:22:52.780984 11976 ssh_runner.go:57] Run: docker load -i \tmp\pause-amd64_3.0

I0918 13:22:53.325608 11976 ssh_runner.go:57] Run: docker load -i \tmp\k8s-dns-dnsmasq-nanny-amd64_1.14.4

I0918 13:22:53.579765 11976 ssh_runner.go:57] Run: docker load -i \tmp\k8s-dns-sidecar-amd64_1.14.4

I0918 13:22:53.608240 11976 ssh_runner.go:57] Run: docker load -i \tmp\k8s-dns-kube-dns-amd64_1.14.4

I0918 13:22:53.802520 11976 ssh_runner.go:57] Run: docker load -i \tmp\kube-addon-manager_v6.4-beta.2

I0918 13:22:54.036070 11976 ssh_runner.go:57] Run: sudo rm -f /usr/local/bin/localkube

I0918 13:22:54.052760 11976 ssh_runner.go:57] Run: sudo mkdir -p /usr/local/bin

I0918 13:22:54.225462 11976 ssh_runner.go:57] Run: docker load -i \tmp\kubernetes-dashboard-amd64_v1.6.3

I0918 13:22:56.183160 11976 ssh_runner.go:57] Run: sudo rm -f /etc/kubernetes/addons/storageclass.yaml

I0918 13:22:56.186104 11976 ssh_runner.go:57] Run: sudo mkdir -p /etc/kubernetes/addons

I0918 13:22:56.193954 11976 ssh_runner.go:57] Run: sudo rm -f /etc/kubernetes/addons/kube-dns-controller.yaml

I0918 13:22:56.197880 11976 ssh_runner.go:57] Run: sudo mkdir -p /etc/kubernetes/addons

I0918 13:22:56.205730 11976 ssh_runner.go:57] Run: sudo rm -f /etc/kubernetes/addons/kube-dns-cm.yaml

I0918 13:22:56.210638 11976 ssh_runner.go:57] Run: sudo mkdir -p /etc/kubernetes/addons

I0918 13:22:56.217505 11976 ssh_runner.go:57] Run: sudo rm -f /etc/kubernetes/addons/kube-dns-svc.yaml

I0918 13:22:56.223394 11976 ssh_runner.go:57] Run: sudo mkdir -p /etc/kubernetes/addons

I0918 13:22:56.240075 11976 ssh_runner.go:57] Run: sudo rm -f /etc/kubernetes/manifests/addon-manager.yaml

I0918 13:22:56.251858 11976 ssh_runner.go:57] Run: sudo mkdir -p /etc/kubernetes/manifests/

I0918 13:22:56.265596 11976 ssh_runner.go:57] Run: sudo rm -f /etc/kubernetes/addons/dashboard-rc.yaml

I0918 13:22:56.273440 11976 ssh_runner.go:57] Run: sudo mkdir -p /etc/kubernetes/addons

I0918 13:22:56.291104 11976 ssh_runner.go:57] Run: sudo rm -f /etc/kubernetes/addons/dashboard-svc.yaml

I0918 13:22:56.305823 11976 ssh_runner.go:57] Run: sudo mkdir -p /etc/kubernetes/addons

Setting up certs...

I0918 13:22:56.322505 11976 certs.go:47] Setting up certificates for IP: %s 192.168.99.100

I0918 13:22:56.346058 11976 ssh_runner.go:57] Run: sudo rm -f /var/lib/localkube/certs/ca.crt

I0918 13:22:56.358815 11976 ssh_runner.go:57] Run: sudo mkdir -p /var/lib/localkube/certs/

I0918 13:22:56.390218 11976 ssh_runner.go:57] Run: sudo rm -f /var/lib/localkube/certs/ca.key

I0918 13:22:56.401994 11976 ssh_runner.go:57] Run: sudo mkdir -p /var/lib/localkube/certs/

I0918 13:22:56.410824 11976 ssh_runner.go:57] Run: sudo rm -f /var/lib/localkube/certs/apiserver.crt

I0918 13:22:56.421617 11976 ssh_runner.go:57] Run: sudo mkdir -p /var/lib/localkube/certs/

I0918 13:22:56.432411 11976 ssh_runner.go:57] Run: sudo rm -f /var/lib/localkube/certs/apiserver.key

I0918 13:22:56.435354 11976 ssh_runner.go:57] Run: sudo mkdir -p /var/lib/localkube/certs/

I0918 13:22:56.455961 11976 ssh_runner.go:57] Run: sudo rm -f /var/lib/localkube/certs/proxy-client-ca.crt

I0918 13:22:56.461851 11976 ssh_runner.go:57] Run: sudo mkdir -p /var/lib/localkube/certs/

I0918 13:22:56.485409 11976 ssh_runner.go:57] Run: sudo rm -f /var/lib/localkube/certs/proxy-client-ca.key

I0918 13:22:56.490308 11976 ssh_runner.go:57] Run: sudo mkdir -p /var/lib/localkube/certs/

I0918 13:22:56.507974 11976 ssh_runner.go:57] Run: sudo rm -f /var/lib/localkube/certs/proxy-client.crt

I0918 13:22:56.520731 11976 ssh_runner.go:57] Run: sudo mkdir -p /var/lib/localkube/certs/

I0918 13:22:56.538392 11976 ssh_runner.go:57] Run: sudo rm -f /var/lib/localkube/certs/proxy-client.key

I0918 13:22:56.544280 11976 ssh_runner.go:57] Run: sudo mkdir -p /var/lib/localkube/certs/

I0918 13:22:56.562932 11976 ssh_runner.go:57] Run: sudo rm -f /var/lib/localkube/kubeconfig

I0918 13:22:56.573718 11976 ssh_runner.go:57] Run: sudo mkdir -p /var/lib/localkube

Connecting to cluster...

Setting up kubeconfig...

I0918 13:22:56.738577 11976 config.go:101] Using kubeconfig: C:\Users\yulian/.kube/config

Starting cluster components...

I0918 13:22:56.740539 11976 ssh_runner.go:57] Run: if [[ `systemctl` =~ -\.mount ]] &>/dev/null;then

printf %s "[Unit]

Description=Localkube

Documentation=https://github.com/kubernetes/minikube/tree/master/pkg/localkube

[Service]

Type=notify

Restart=always

RestartSec=3

ExecStart=/usr/local/bin/localkube --dns-domain=cluster.local --node-ip=192.168.99.100 --generate-certs=false --logtostderr=true --enable-dns=false

ExecReload=/bin/kill -s HUP $MAINPID

[Install]

WantedBy=multi-user.target

" | sudo tee /usr/lib/systemd/system/localkube.service

sudo systemctl daemon-reload

sudo systemctl enable localkube.service

sudo systemctl restart localkube.service || true

else

sudo killall localkube || true

# Run with nohup so it stays up. Redirect logs to useful places.

sudo sh -c 'PATH=/usr/local/sbin:$PATH nohup /usr/local/bin/localkube --dns-domain=cluster.local --node-ip=192.168.99.100 --generate-certs=false --logtostderr=true --enable-dns=false > /var/lib/localkube/localkube.out 2> /var/lib/localkube/localkube.err < /dev/null & echo $! > /var/run/localkube.pid &'

fi

Kubectl is now configured to use the cluster.

λ minikube dashboard

Could not find finalized endpoint being pointed to by kubernetes-dashboard: Error validating service: Error getting service kubernetes-dashboard: Get https://192.168.99.100:8443/api/v1/namespaces/kube-system/services/kubernetes-dashboard: dial tcp 192.168.99.100:8443: connectex: No connection could be made because the target machine actively refused it.

λ minikube status

minikube: Running

cluster: Running

kubectl: Correctly Configured: pointing to minikube-vm at 192.168.99.100

Does your machine expose 192.168.99.100 at all? You can easily check this in Hyper-V, in the lower corner of the VM UI.

Seems to be a network problem ...

I don't see any IP addresses in the 'Networking' tab when I click on the minikube VM.

Then it really seems to be the network. Try asking for help in the minikube Slack channel; someone there will likely help you much faster. It seems that the Hyper-V adapter is not configured correctly for the VM.

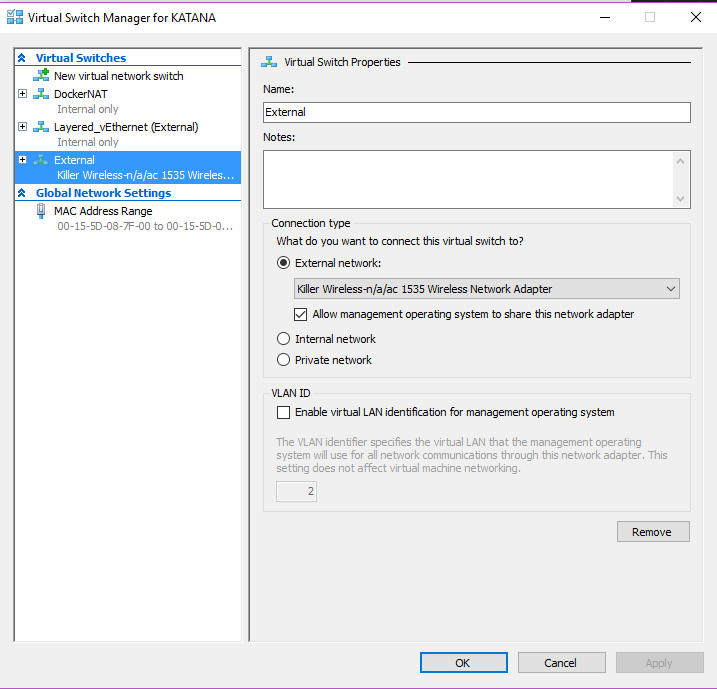

Make sure you use an External Virtual Switch (or an Internal Virtual Switch with NAT configured and a locally running DHCP server for that same network). Avoid using DockerNAT if you also have it installed on your machine; it needs additional configuration before it will work.
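An External Virtual Switch can also be created from PowerShell with New-VMSwitch, binding it to the physical adapter that has network access. A Python sketch that builds that command; the switch name External matches the --hyperv-virtual-switch value used elsewhere in this thread, while the adapter name Wi-Fi is an assumption (list yours with Get-NetAdapter). Run it from an elevated prompt, and expect the adapter's connection to drop briefly while the switch is created.

```python
import subprocess


def powershell(script: str) -> list:
    """Wrap a PowerShell snippet as an argv list."""
    return ["powershell", "-NoProfile", "-Command", script]


def list_adapters_cmd() -> list:
    """Show adapter names, to pick the one with external access."""
    return powershell("Get-NetAdapter | Select-Object Name, Status")


def external_switch_cmd(name: str = "External", adapter: str = "Wi-Fi") -> list:
    """Create an external switch that the host OS keeps using as well."""
    return powershell(
        f"New-VMSwitch -Name '{name}' -NetAdapterName '{adapter}' "
        "-AllowManagementOS $true"
    )


def create_external_switch(name: str = "External", adapter: str = "Wi-Fi") -> None:
    subprocess.run(external_switch_cmd(name, adapter), check=True)
```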

I'm explicitly telling it to use an external switch: minikube start --vm-driver=hyperv --hyperv-virtual-switch=External --alsologtostderr

Here's the aforementioned switch in my Hyper-V:

To be honest, I don't see anything wrong with it.

For me, it works with external DHCP when I choose "Internal Network" for the Hyper-V switch and then share it through the Windows network adapter that has external access, e.g. Wi-Fi:

Btw, there are multiple separate problems in this issue. The random crashes above were probably due to the Hyper-V dynamic memory issue. The last problem is a Hyper-V networking problem.

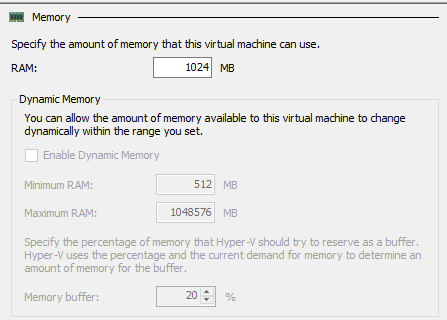

I've been having crashing issues with minikube. I'm new to Kubernetes and I'm going through some Pluralsight courses to start learning the technology. I came across this thread, changed my minikube virtual machine in Hyper-V to not use dynamic memory, and the system seems to have stabilized.

Ran into this or a very similar issue today using:

minikube v0.24

ISO: v0.23.6

hyper-v

I found it worked fine after a few changes. Not all of them may be needed, and YMMV.

Run 'minikube stop', then make these changes in the settings for the minikube VM in the Hyper-V Manager:

- Network Adapter -> Hardware Acceleration: Turn off "Enable virtual machine queue." I did this because I wasn't sure whether my network adapter actually supports VMQ, and I was having the no-IP-address issue at the start of this. I also noticed that one of the comments on another issue mentioned a Killer-Wireless chipset similar to the one in my laptop. After this change the VM would start successfully, but the service would still crash.

- Network Adapter -> Advanced: Turn off "Protected network." Since the adapter was bound to my wireless card, I wasn't sure at first whether I was losing my Wi-Fi connection and sending the virtual switch into the weeds whenever the connection dropped. Based on the description of the setting, I thought that might have triggered a migration event on the VM. (I don't know whether that actually occurred; it made sense to me at the time, but this may not have been necessary.)

- Memory: Set the RAM for the minikube VM to 4096 MB and turn off "Enable Dynamic Memory." One of the messages I caught on the console window when the service crashed was a kernel fault. It could be that Hyper-V is trying to shrink the memory available to the VM and failing to reallocate it properly. This seems to be the change that keeps the cluster service from crashing after 5-10 minutes of idling. After this change minikube hummed along for the rest of the day without any problems.
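The VMQ and memory changes in the list above can also be applied from PowerShell once minikube stop has shut the VM down: Set-VMNetworkAdapter with -VmqWeight 0 disables VMQ, and Set-VMMemory switches to a fixed allocation. A Python sketch, assuming the default VM name minikube and the 4096 MB from the comment; the "Protected network" checkbox is left out, and the helper names are hypothetical:

```python
import subprocess


def hyperv_fix_scripts(vm_name: str = "minikube", memory_mb: int = 4096) -> list:
    """PowerShell snippets for the VMQ and Dynamic Memory changes."""
    return [
        # A VMQ weight of 0 turns off virtual machine queue for the VM's adapter
        f"Set-VMNetworkAdapter -VMName '{vm_name}' -VmqWeight 0",
        # Fixed startup memory with Dynamic Memory off (the VM must be stopped)
        f"Set-VMMemory -VMName '{vm_name}' -DynamicMemoryEnabled $false "
        f"-StartupBytes {memory_mb}MB",
    ]


def apply_fixes(vm_name: str = "minikube", memory_mb: int = 4096) -> None:
    """Apply each change from an elevated prompt; stops at the first failure."""
    for script in hyperv_fix_scripts(vm_name, memory_mb):
        subprocess.run(["powershell", "-NoProfile", "-Command", script],
                       check=True)
```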

Issues go stale after 90d of inactivity.

Mark the issue as fresh with /remove-lifecycle stale.

Stale issues rot after an additional 30d of inactivity and eventually close.

If this issue is safe to close now please do so with /close.

Send feedback to sig-testing, kubernetes/test-infra and/or fejta.

/lifecycle stale

Stale issues rot after 30d of inactivity.

Mark the issue as fresh with /remove-lifecycle rotten.

Rotten issues close after an additional 30d of inactivity.

If this issue is safe to close now please do so with /close.

Send feedback to sig-testing, kubernetes/test-infra and/or fejta.

/lifecycle rotten

/remove-lifecycle stale

Closing issue. If this is still a problem, please create a new issue
