Marlin: [BUG] JUNCTION_DEVIATION creates unexpected decelerations/accelerations on smooth curves

Bug Description

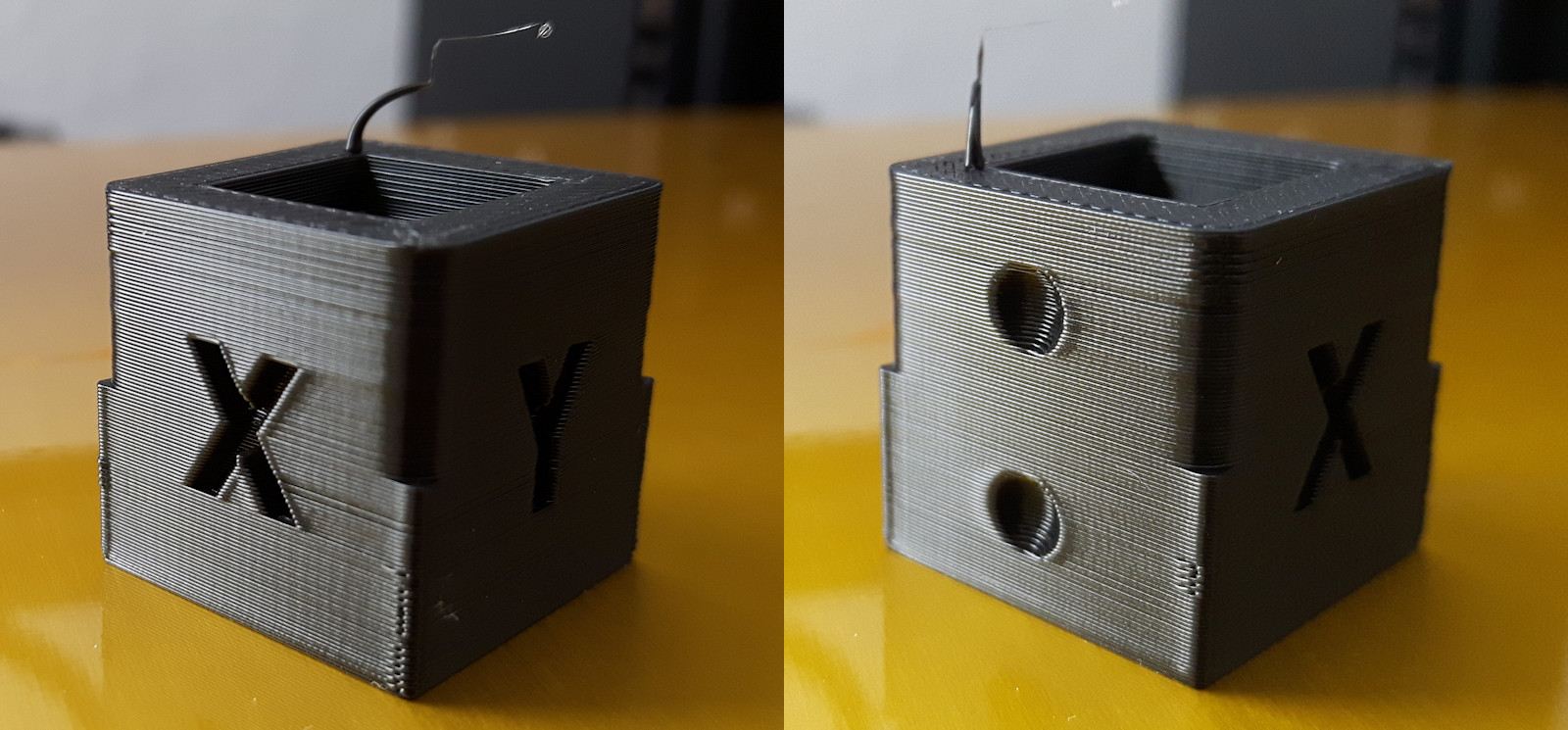

With Junction deviation enabled the printer decelerates and accelerates unexpectedly on smooth curves. JUNCTION_DEVIATION_MM is set to 0.017. There is no difference if I increase it to 0.2.

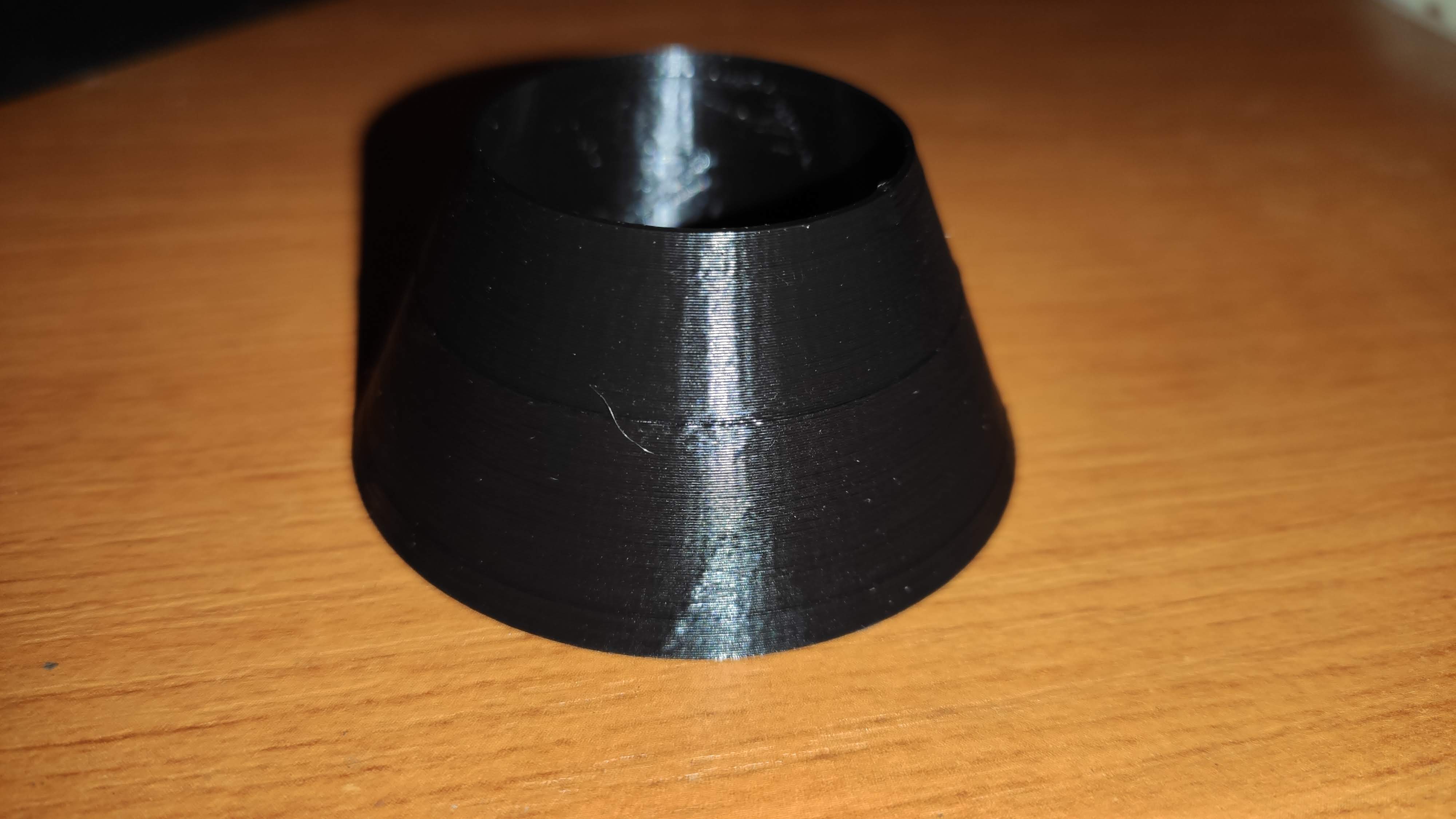

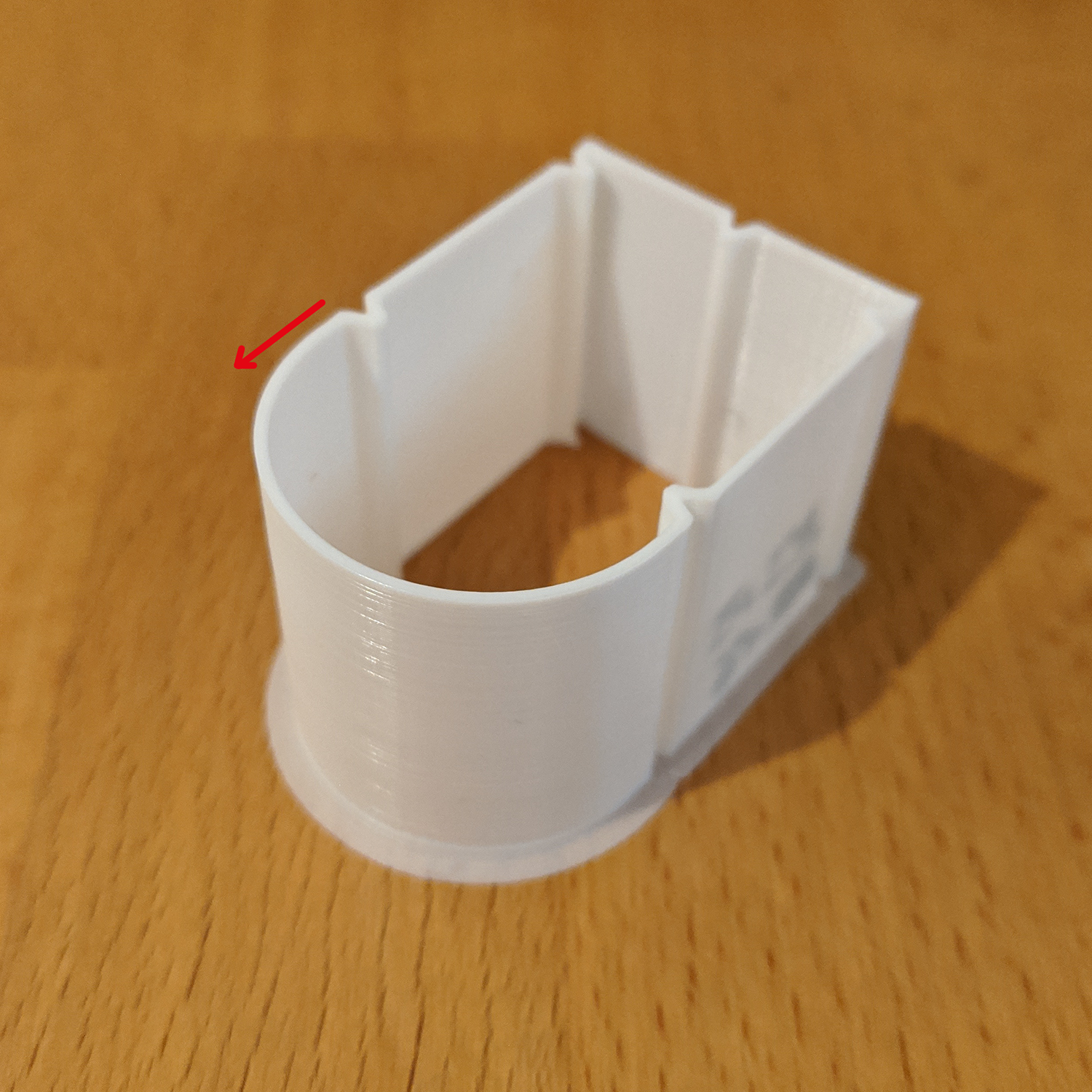

In this picture I have marked the locations where the slowdown occurs with arrows. This happens at other locations as well but these are the most obvious.

With CLASSIC_JERK the print is smooth and without unexpected deceleration / acceleration.

Example videos (look at the extruder visualizer when this happens):

With Junction Deviation = 0.017

With Classic Jerk

#define DEFAULT_XJERK 10.0

#define DEFAULT_YJERK 10.0

#define DEFAULT_ZJERK 0.3

My Configurations

LIN_ADVANCE is enabled and LIN_ADVANCE_K is set to 0.1. Settings this to 0 has no effect.

S_CURVE_ACCELERATION is enabled.

Steps to Reproduce

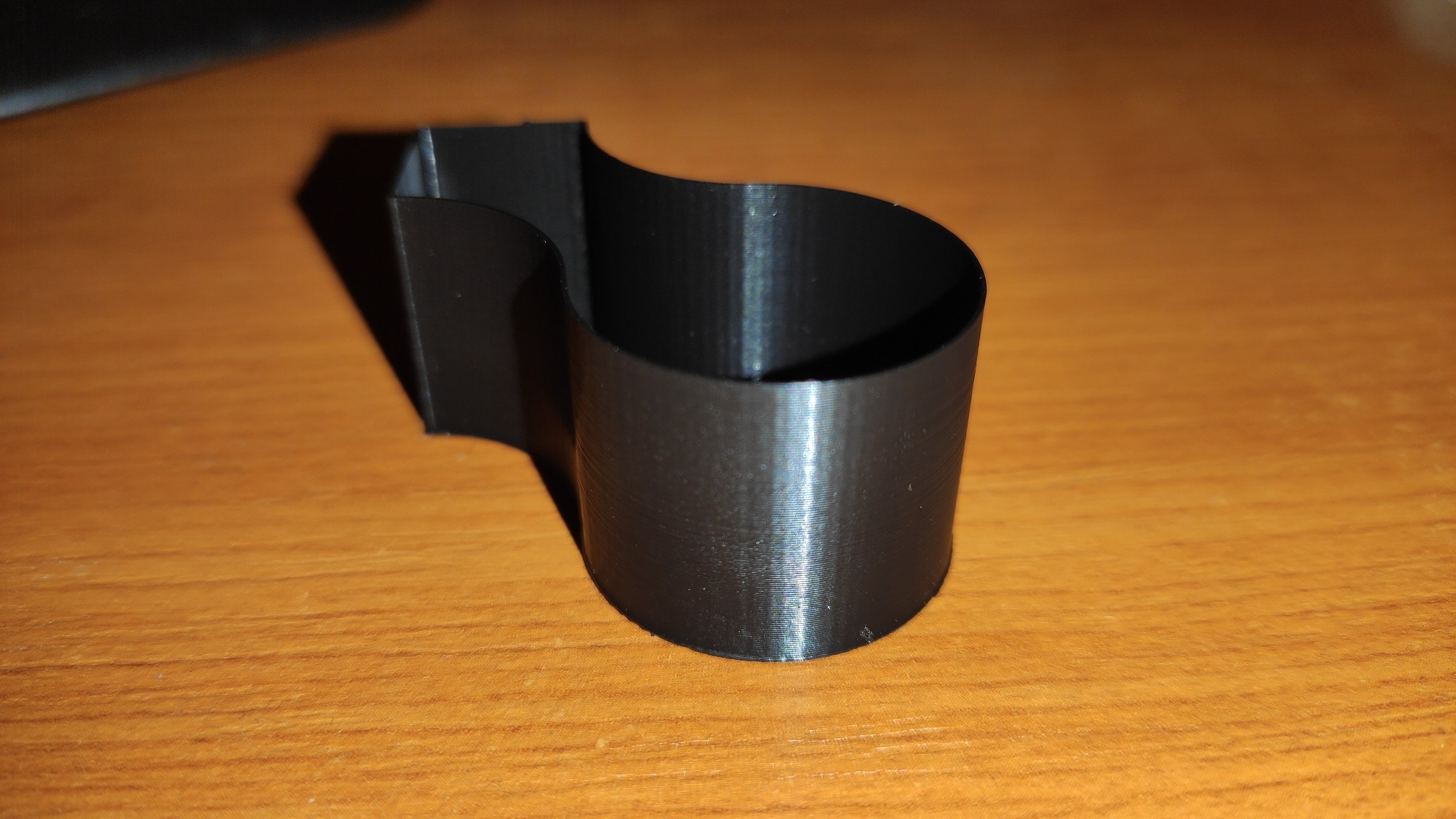

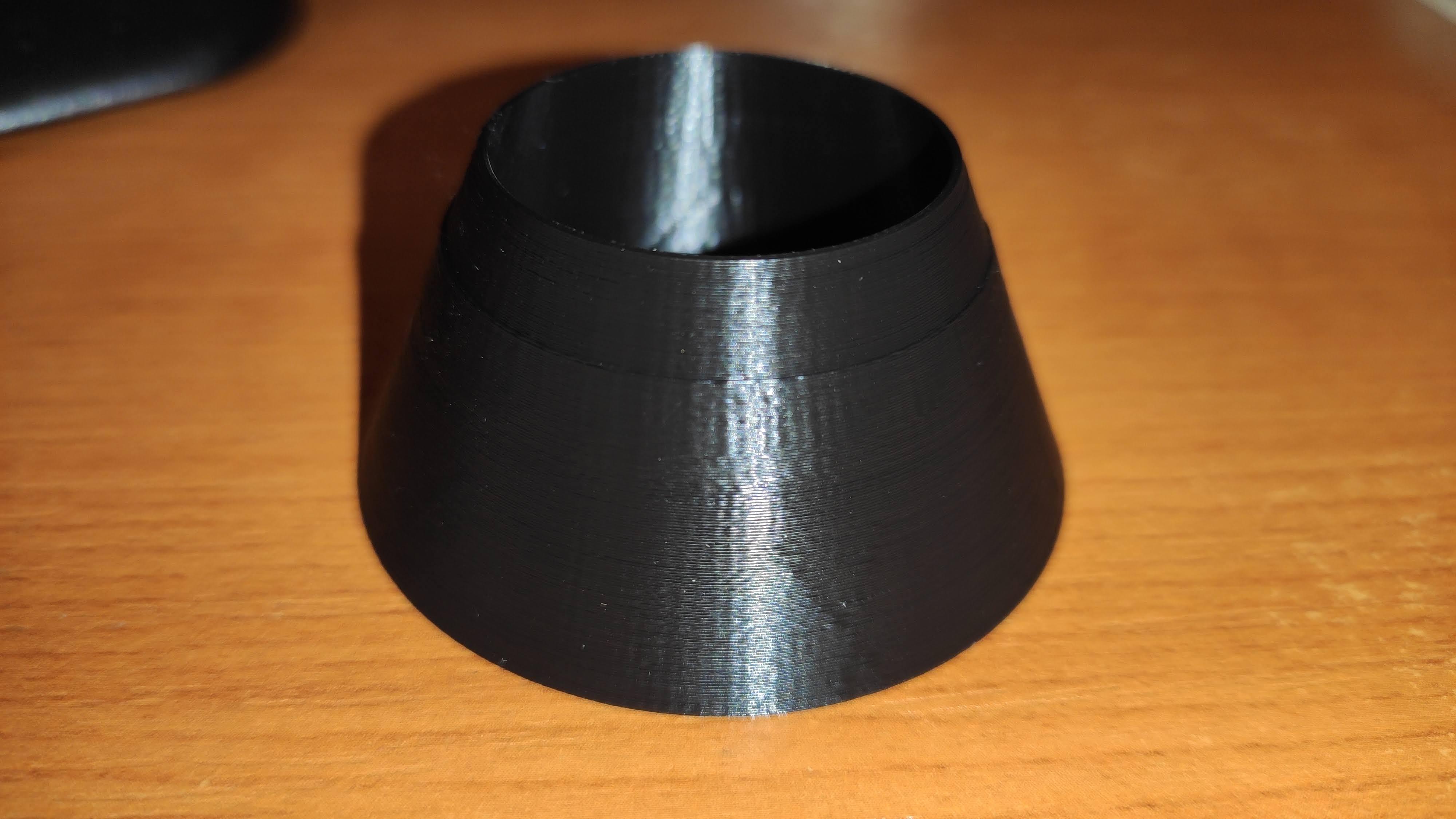

Print a large curved object like the one in the photo.

Expected behavior:

The curve is printed smooth, just like with classic jerk.

Actual behavior:

The curve is printed with at least decelerated/accelerated moves.

I'm using Marlin bugfix-2.0.x commit e7a9f17 from March 22nd.

All 265 comments

17146

Have the same issue.

SKR Mini E3 v1.2, Cura/PrusaSlicer/Fusion 360

Yes. I have this same problem (in fact also printing face shields :D).

Differences in setup, producing the same issue:

- You are using Slic3r and I am using Cura.

- Your board is 32 bit (ARMED STM32) and mine is 8 bit (ZUM Mega 3D).

- The STL model is different (I was printing this one).

Similarities:

- The model has arcs that are long and thin.

- S_CURVE: enabled (tested both ON and OFF, same problem).

- Junction deviation: enabled.

- LIN_ADVANCE: enabled (tested both ON and OFF, same problem).

More parameters:

- I have ADAPTIVE_STEP_SMOOTHING enabled (tested both ON and OFF, same problem).

I'm considering switching back permanently to classic jerk since it doesn't have this problem. Your help would be very appreciated!

Maybe related: #15473

Because of this problem, I spent a lot of time testing JD and LA settings. My bottom line is to set JD much higher, certainly based on my other settings. Printing curves like @ktand in the first post with default 0.013 JD creates a lot of stuttering on my machine. Increasing JD to much higher values makes it working as expected. In my case setting JD to 0.07 stuttering gets rarely. Increasing to 0.09 makes stuttering almost gone, except small curves and corners where it does its job as expected.

Here are my config files, maybe to compare settings like acceleration, E-jerk,...

Configuration.zip

Configuration_adv.zip

I increased JUNCTION_DEVIATION_MM to 0.2, almost 11 times higher, but the

problem still occurs.

Den tis 31 mars 2020 kl 19:43 skrev rado79 notifications@github.com:

Because of this problem, I spent a lot of time testing JD and LA settings.

My bottom line is to set JD much higher, certainly based on my other

settings. Printing curves like @ktand https://github.com/ktand in the

first post with default 0.013 JD creates a lot of stuttering on my machine.

Increasing JD to much higher values makes it working as expected. In my

case setting JD to 0.07 stuttering gets rarely. Increasing to 0.09 makes

stuttering almost gone, except small curves and corners where it does its

job as expected.Here are my config files, maybe to compare settings like acceleration,

E-jerk,...

Configuration.zip

https://github.com/MarlinFirmware/Marlin/files/4410644/Configuration.zip

Configuration_adv.zip

https://github.com/MarlinFirmware/Marlin/files/4410645/Configuration_adv.zip—

You are receiving this because you were mentioned.

Reply to this email directly, view it on GitHub

https://github.com/MarlinFirmware/Marlin/issues/17342#issuecomment-606773983,

or unsubscribe

https://github.com/notifications/unsubscribe-auth/AEHQ2JDBQG34PUYSMZVYDKTRKITSLANCNFSM4LW33CGA

.

I increased it to 0.3mm and the problem still occurs.

El mar., 31 mar. 2020 19:56, Karl Andersson notifications@github.com

escribió:

I increased JUNCTION_DEVIATION_MM to 0.2, almost 11 times higher, but the

problem still occurs.Den tis 31 mars 2020 kl 19:43 skrev rado79 notifications@github.com:

Because of this problem, I spent a lot of time testing JD and LA

settings.

My bottom line is to set JD much higher, certainly based on my other

settings. Printing curves like @ktand https://github.com/ktand in the

first post with default 0.013 JD creates a lot of stuttering on my

machine.

Increasing JD to much higher values makes it working as expected. In my

case setting JD to 0.07 stuttering gets rarely. Increasing to 0.09 makes

stuttering almost gone, except small curves and corners where it does its

job as expected.Here are my config files, maybe to compare settings like acceleration,

E-jerk,...

Configuration.zip

<

https://github.com/MarlinFirmware/Marlin/files/4410644/Configuration.zip>

Configuration_adv.zip

<

https://github.com/MarlinFirmware/Marlin/files/4410645/Configuration_adv.zip—

You are receiving this because you were mentioned.

Reply to this email directly, view it on GitHub

<

https://github.com/MarlinFirmware/Marlin/issues/17342#issuecomment-606773983

,

or unsubscribe

<

https://github.com/notifications/unsubscribe-auth/AEHQ2JDBQG34PUYSMZVYDKTRKITSLANCNFSM4LW33CGA.

—

You are receiving this because you commented.

Reply to this email directly, view it on GitHub

https://github.com/MarlinFirmware/Marlin/issues/17342#issuecomment-606780696,

or unsubscribe

https://github.com/notifications/unsubscribe-auth/AAMPPKS64M6XGUXUT6YIQNLRKIVEDANCNFSM4LW33CGA

.

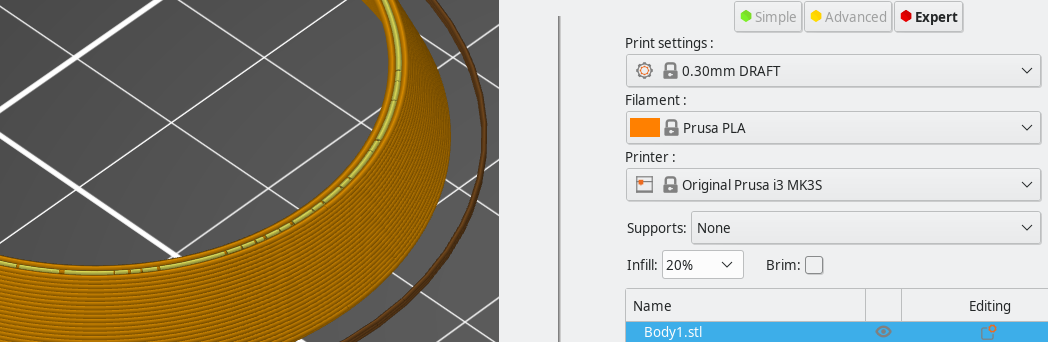

First, be sure to turn off all acceleration and speed tuning in your slicer. Prusa Slicer and Cura are both prone to inserting a lot of parameter changes, and this can sometimes interfere with the planner.

Unfortunately it is not a simple thing to debug dynamic issues of this kind without lots of data collection and isolation of effects. So we will need to gather as much logging as possible to determine root causes.

One thing I would note is that when doing curves you are likely to get a greater variation in linear speeds and constraints on those speeds. If you have your movement speed set very high in the slicer so you can get fast curves, you will also get a lot of places where your printer's maximum constraints come into play to alter the speed. So, you can try setting very high max-accel and max-speed values on the printer to remove those constraints.

Curves with lots of segments are also a bit more demanding, so you may also be hitting computation limits in some cases, and slowing down of the planner to keep the buffer full. If your board has lots of SRAM you can increase the buffer sizes and set the slowdown limit to a smaller proportion.

We did just fix an issue with G2/G3 arcs, but your slicer probably isn't producing those….

One of the settings I saw CH3D point out recently was the "minimum segment length" setting in the slicer. This makes sure not to flood the machine with too many tiny segments, when a minimum length of 0.6mm would do just fine for most applications.

I don't mean to suggest that tuning slicer settings and reducing load on the machine is the ultimate solution for JD (and LA) issues, but it will help in the isolation testing.

These are the acceleration parameters currently set in the slicer. I will set them to 0 so that the printer defaults are used (I will also reset the printer configuration to ensure no parameters has changed) and try another print.

Regarding the slowing down I've tried the following:

- Enabled

SLOWDOWNwith aSLOWDOWN_DIVISORof 2. No difference. - Increase the

BLOCK_BUFFER_SIZEto 64, no difference.

I'm printing from SD.

Is there a different how the planner treats segments with JD vs classic jerk enabled, except from generating segments with different acceleration/deceleration? With classic jerk the print is very smooth.

Tried to find a setting regarding minimum segment length but the only settings that I could find are these:

Remember to increase the SLOWDOWN_DIVISOR if you increase the number of buffer lines, otherwise it slows down too soon. With 64, a divisor of 16 should be good.

I tried without SLOWDOWN but with a BLOCK_BUFFER_SIZE of 64, no difference.

Is there a different how the planner treats segments with JD vs classic jerk enabled…?

There is a difference in how much acceleration and deceleration are applied at the segment junctions. And there are more (expensive) calculations done at each segment junction.

Ideally, we'll need to put together a table / graph of the speeds that are being applied at the junctions, comparing classic jerk to junction deviation, and both of those to the best desired speeds.

Hi! Thanks for looking in to this! Is JD applied to each new motion regardless of length?

🌊 Is JD applied to each new motion regardless of length? 🦁

Yes.

OK, thanks. (I didn't put those icons.. new bug report? :p)

Tried to find a setting regarding minimum segment length but the only settings that I could find are these:

@ktand Where did you found it? Is it PrusaSlicer? Can't find it on 2.2

Since this has become a duplicate of 17146 I am closing that issue to reduce noise.

@thinkyhead see my comment on the duplicate issue: https://github.com/MarlinFirmware/Marlin/issues/17146#issuecomment-606901454. Sloppy/low-res slicing is not a solution; it breaks other things.

Tried to find a setting regarding minimum segment length but the only settings that I could find are these:

@ktand Where did you found it? Is it PrusaSlicer? Can't find it on 2.2

@qwewer0 I'm using Slic3r++ 2.2.48

I was in the middle of writing a reply in the other report when @thinkyhead closed it so I'm just copying what I had started to type over there. It's a good idea to consolidate it to one thread anyways.

@swilkens wrote

If this is an issue specifically with the SKR Mini E3 v1.2 and maybe with other SKR boards too, then how can we make sure of it, then solve it?

I'm open to ideas.Build back your original board, use the same firmware options and verify on the original board with the same gcode?

My board is an SKR E3 DIP but that's basically the "same" as the mini I think. I do still have my original creality board but that is different stepper drivers(A4988). This weekend I could try swapping it back in just to test but I'm not sure if I could enable everything without running out of memory on it. Probably not, but i can try.

@CarlosGS @qwewer0 @ktand @rado79 and any others I may have missed. Out of curiosity what stepper drivers and exact main board is everyone using.

SKR E3 DIP v1.1 and all 4 stepper drivers are TMC2209's on my printer.

Like @thinkyhead said we need to start collecting data and logging. To try and get it as consistent across the board we should all decide on or make a fairly quick printing test model that we can all use and a list of what data we should all collect. I'm probably not the best to make those decisions but if someone writes out an almost step by step and what data points to record I'll certainly do it.

It looks like M928 can produce a log of "console and host input" and send it to the SDcard to easily grab. Is that the kind of logging you are talking about @thinkyhead? Also what M111 level would be helpful for this?

Hi! I don't know if this help, but I'm having same problems. CJ is better but I got unexpected beheaviour on some parts. Two videos:

Junction Deviation 0.013

Classic Jerk

#define DEFAULT_XJERK 10.0

#define DEFAULT_YJERK 10.0

#define DEFAULT_ZJERK 0.3

GT2560 Motherboard with mega1280. All stepper drivers are TMC2208 as standalone.

S_CURVE_ACCELERATION enabled.

#define BLOCK_BUFFER_SIZE 32

NO Linear Advance

BQ Zum Mega 3D with integrated DRV8825 drivers

@CarlosGS @qwewer0 @ktand @rado79 and any others I may have missed. Out of curiosity what stepper drivers and exact main board is everyone using.

BTT SKR1.3 and TMC2208/2209, 24V

@thinkyhead

I have now configured the slicer to use the same defaults as the printer's. Unfortunately this didn't help.

#define DEFAULT_MAX_FEEDRATE { 200, 200, 30, 120 }

#define DEFAULT_MAX_ACCELERATION { 2000, 2000, 200, 10000 }

#define DEFAULT_ACCELERATION 2000 // X, Y, Z and E acceleration for printing moves

#define DEFAULT_RETRACT_ACCELERATION 2000 // E acceleration for retracts

#define DEFAULT_TRAVEL_ACCELERATION 2000 // X, Y, Z acceleration for travel (non printing) moves

Start of G-code script:

M201 X2000 Y2000 Z200 E10000 ; sets maximum accelerations, mm/sec^2

M203 X200 Y200 Z30 E120 ; sets maximum feedrates, mm/sec

M204 P2000 R2000 T2000 ; sets acceleration (P, T) and retract acceleration (R), mm/sec^2

M205 X10.00 Y10.00 Z0.40 E4.50 ; sets the jerk limits, mm/sec

M205 S0 T0 ; sets the minimum extruding and travel feed rate, mm/sec

Inspecting the G-code file in S3D shows no speed changes in the critical areas. (Not sure I would be able to seem them if there were any though).

Regarding your suggestion:

So, you can try setting very high max-accel and max-speed values on the printer to remove those constraints.

what would be suitable values for max-accel? And for me to be sure I make the right modification, is it the DEFAULT_MAX_ACCELERATION or the DEFAULT_ACCELERATION we're talking about? I've always wondered how the DEFAULT_MAX_ACCELERATION relates to the DEFAULT_ACCELERATION. Is that just a limit or is the DEFAULT_MAX_ACCELERATION used by the planner?

@CarlosGS @qwewer0 @ktand @rado79 and any others I may have missed. Out of curiosity what stepper drivers and exact main board is everyone using.

SKR Mini E3 v1.2, TMC2209

My board is an SKR E3 DIP and TMC2208 UART drivers. But the result is the same.

Is that a Face Mask holder for a hospital? We are printing some thing very similar to what your pictures shows in Houston. Except ours say "Houston Strong" on them!

@Roxy-3D Yes it is. Link. I've delivered two batches to a local hospital.

I am in complete confusion. I tried different combinations (turned on and off) of parameters:

LIN_ADVANCE

S_CURVE_ACCELERATION

CLASSIC_JERK

But I always got pimples on my model, sometimes even on straight lines. I tried to increase the maximum resolution to 0.8, the result is better but not perfect. Photo in my post on reddit https://www.reddit.com/r/ender3/comments/ft3fse/pimples_when_printing_a_3d_printer/

Is it really necessary to return to the stock board and marlin 1,1,9? (

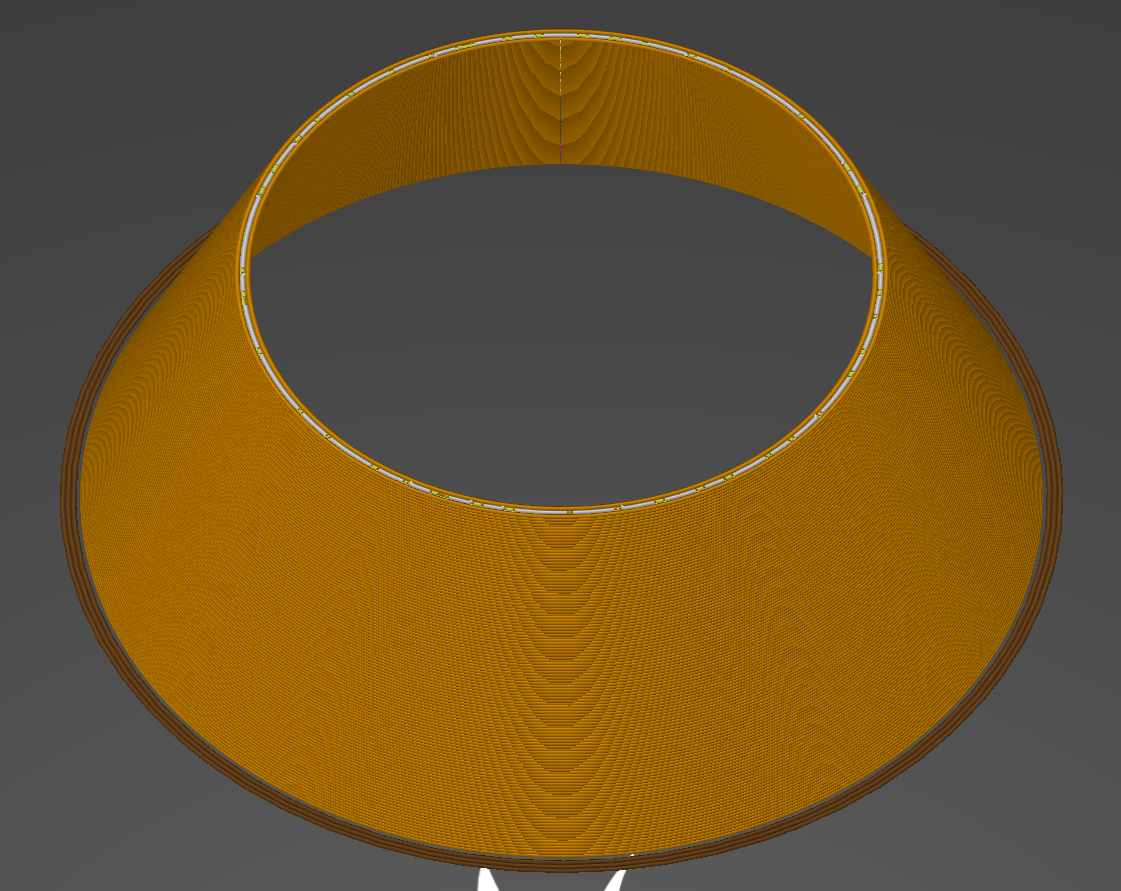

With PrusaSlicer, in my case the jittering motion happens on the "gap fill" layers (the white traces)

Maybe the cause is that these look like arcs to us, but the gcode is different.

This is an arc in PrusaSlicer:

G1 X42.099 Y31.207 E0.10230

G1 X43.391 Y29.547 E0.17992

G1 X44.569 Y28.161 E0.15551

G1 X45.807 Y26.811 E0.15659

G1 X47.091 Y25.514 E0.15609

G1 X48.420 Y24.268 E0.15574

G1 X49.788 Y23.074 E0.15526

G1 X51.682 Y21.563 E0.20715

G1 X52.647 Y20.832 E0.10354

G1 X54.132 Y19.786 E0.15529

G1 X55.144 Y19.120 E0.10360

G1 X57.732 Y17.530 E0.25974

And this is the parallel arc segment for "gap fill" in PrusaSlicer:

G1 F7056.755

G1 X56.875 Y18.516 E0.00036

G1 F6344.481

G1 X55.363 Y19.465 E0.10529

G1 X54.418 Y20.079 E0.06647

G1 F6581.463

G1 X54.412 Y20.084 E0.00043

G1 F6541.549

G1 X54.359 Y20.120 E0.00367

G1 F6525.710

G1 X52.886 Y21.159 E0.10338

G1 X52.566 Y21.393 E0.02269

G1 F6783.652

G1 X52.560 Y21.398 E0.00046

G1 F6517.417

G1 X51.930 Y21.883 E0.04563

G1 X50.318 Y23.158 E0.11800

G1 F6861.681

G1 X50.312 Y23.162 E0.00039

G1 F6617.866

G1 X50.046 Y23.383 E0.01958

G1 X49.192 Y24.121 E0.06381

G1 F6822.325

G1 X49.186 Y24.126 E0.00041

G1 F6353.068

G1 X48.694 Y24.571 E0.03908

G1 F6333.234

G1 X47.377 Y25.807 E0.10673

G1 X46.932 Y26.241 E0.03670

G1 F6762.339

G1 X46.927 Y26.246 E0.00043

G1 F6512.778

G1 X46.100 Y27.090 E0.06787

G1 X45.896 Y27.307 E0.01709

G1 F6670.677

G1 X45.891 Y27.312 E0.00041

G1 F6227.178

G1 X44.879 Y28.434 E0.09080

G1 X44.120 Y29.307 E0.06950

G1 F6689.505

G1 X44.114 Y29.315 E0.00052

G1 F6283.570

G1 X43.710 Y29.806 E0.03789

G1 X42.420 Y31.449 E0.12439

Video of both gcodes:

The same test but with Cura:

So the jittery arcs in Cura also have segments with uneven length. My guess is that these were being softened by Classic Jerk and are now being noticed.

I have also recorded the PrusaSlicer example (updated previous post), and it shows arc segments of uneven length too. Sorry for the spam but it is looking promising!!

Not sure if this'll help anybody here. Although the stuttering still occurs on my machine, the extrusion seems far more reliable:

With the SKR E3 series (Mini 1.2, DIP), I found the Z stepper was missing steps (verified by M48, and hearing the probe randomly hit the bed on second deployment). The extruder was also showing very minor inconsistencies, which was revealed when I was running a 0.8 nozzle.

I tried turning on and off Stealthchop, slowing down the probing, etc, and still had issues. On average, the probing was out 0.08 to 0.1.

I recently converted the printer to 24V to see if it was a voltage issue, but the issue persisted. Finally, I changed the motor timings in Configuration_Adv.h to the DRV8825 values:

define MINIMUM_STEPPER_POST_DIR_DELAY 650

define MINIMUM_STEPPER_PRE_DIR_DELAY 650

define MINIMUM_STEPPER_PULSE 2

define MAXIMUM_STEPPER_RATE 250000

Immediately, my M48 dropped to 0.001. The stutters are still there, but the extrusion seems far cleaner.

I've always wondered how the

DEFAULT_MAX_ACCELERATIONrelates to theDEFAULT_ACCELERATION. Is that just a limit or is theDEFAULT_MAX_ACCELERATIONused by the planner?

These are just the defaults that you set in the configs. The planner uses the current values. The current values may been loaded from EEPROM or may have been altered by M201 / M204.

- Max Acceleration: All accelerations are clamped to this value.

- Acceleration: The acceleration that will be used for the next move.

Jerk, junction deviation, and move prediction rely on the current acceleration and feedrate values, so it does seem like changing the feedrate or accelerations constantly throughout a move could undermine the planner. It would be good to compare very clean G-code that doesn't change feedrates except when going between feature types (infill vs. walls) to G-code that changes motion parameters more often.

I'm not sure why the G-code above does separate G1 F commands when the F parameter could be on the end of the following line instead. That would make it slightly leaner.

This is worth a read for anyone using 2208 and 2209… https://github.com/MarlinFirmware/Marlin/issues/11825#issuecomment-421809385

@thinkyhead Didn't worked for me. https://github.com/MarlinFirmware/Marlin/issues/17146#issuecomment-609656052

This is worth a read for anyone using 2208 and 2209… #11825 (comment)

Hello all! so I read that and what I took away from it was maybe stealthchop was the issue? or am I reading that wrong?

I received a micro-swiss direct drive kit to go with their hot end I already had for my ender 3 pro and installed it. I wen't with 2.0.x bugfix(as of yesterday) just to have the latest and set it up. Calibrated e-steps, flow and k value. Need to tune more but these were what I thought to be important to get me going and testing for this "bug"

Part of me hoped that by switching to this setup and being able to really lower my retraction which I have not REALLY calibrated yet but am running at 1mm @ 25mm/s and am getting decent results. Add that to a much lower k value of 0.08 and I was hoping the issue would go away. *hint... no such luck! :(

I broke out the trusty tux model and sliced out a bit of the middle section and after the first slower layer printed I could immediately hear the familiar sound of the extruder going nuts, but much more subtle now. In the end the "skin" of the tux model ended up looking almost the same as it had with JD/LA enabled before the direct drive as outlined in #17146 OP.

So I thought lets test out this stealthchop theory and printed the same gcode again but in the middle of it I sent an 'M569 S0 E' which I knew put the extruder stepper into spreadcycle mode because I could hear it but I also verified it on the Ender 3 LCD in advanced settings/TMC Drivers and also with M569 after the print was done which showed "E driver mode: spreadCycle"

I printed half the model with stealthchop and half with speadcycle and can't tell the difference. The extruder stutter remained even with spreadcycle.

@thinkyhead. Since I'm using a board with driver sockets I'm willing to order another stepper driver or two and test with them to try and see if this is a TMC2208/9 issue but I would like some input from someone in the know about what to order. A different TMC driver(maybe 5160)? A bog standard A4988? DRV8825? I would even ask if anyone has a few different drivers sitting around who might not have the time or want to test them out to mail them to me and I'll mail them back. I would just ask that the sender REALLY clean them with some IPA and I would do the same before sending them back.

So I thought lets test out this stealthchop theory and printed the same gcode again but in the middle of it I sent an 'M569 S0 E' which I knew put the extruder stepper into spreadcycle mode

@DaMadOne Isn't the X and Y axis is the problem here?

Tested with A4988 drivers. Same issue.

I can confirm that I have the same issue on the curve on this part from a TeachingTech Thingiverse collection. It only appears around layers 100 to 125 at 0.2 mm LH.

Hardware:

Stock Ender 3 Pro

Creality 1.1.4 board

ATMega 1284

A4988 drivers

Firmware:

Marlin 1.1.9 (1.1.x release branch from 05.04.20, NOT the bugfix branch)

Current config

--> Also tested with different settings: Linear Advance en-/disabled, S-Curve en-/disabled, Adaptive Step Smoothing en-/disabled. Those have _no effect_ on the issue.

Slicer:

Cura 4.5, acceleration & jerk control disabled

GCode

--> I can also confirm the already mentioned _inconsistent / quickly alternating_ segment length on the affected layers in the affected regions of the part. These are visible in the Cura preview. While on non-affected layers, segments in the curve are of about equal length, the segments on the affected layers are alternating short/long/short/long in comparison. However, this does not affect prints with classic Jerk at all. Only prints with Junction Deviation are deteriorated.

Same issue with Sidewinder-X1 - Reducing resolution did not help. Slicing with Cura produces worse results than Slic3r, however both are back to great once I deactivate JD

I have found a workaround for the issue, which eliminates the problem for my test print: By increasing MIN_STEPS_PER_SEGMENT from the default 6 to 16 (which is 1/2 nozzle diameter for me), the curve becomes perfectly smooth when using JD. I didn't test any lower values, so 10 or 12 steps per segment may already be enough. This is certainly not a fix, because it slightly reduces precision.

It's very interesting that increasing MIN_STEPS_PER_SEGMENT has a positive effect. Certainly we don't want a million blocks with only one step in them, since this makes any acceleration / deceleration turn into a floating point square root party, and there are bound to be other potential pitfalls with many tiny segments.

Once I have more free time I will have to dive into a deeper analysis to see what the MIN_STEPS_PER_SEGMENT change is mitigating, and then figure out an earlier point to apply mitigation.

In the meantime, I would enjoy hearing from others what their experiences are in playing with MIN_STEPS_PER_SEGMENT, and if there are any "magic" thresholds that differ depending on hardware.

For me I have to set JD to its lowest possible value of 0.010

Anything higher creates artefacts

I do not see slowdown on SKR PRO during printing of gcode with lots of small segments. But surface quality difference between JD and Jerk is still there. MIN_STEPS_PER_SEGMENT did not changed it much.

I see hardly any differences in surface quality for MIN_STEPS 6 and 16. MIN_STEPS 16 has slightly less "noise" on the surface, but still very far from JERK quality.

Jerk surface quality is better with all MIN_STEPS values (16, 6, 1).

@BarsMonster You're using different hardware than me. Are 16 steps also equivalent to 0.2 mm in your case? That's what you should aim for. I Have 80 steps/mm on X and Y, therefore MIN_STEPS_PER_SEGMENT = 16 filtered out all the tiny segments <0.2 mm from the GCode.

What size circumference are you using to see the stutters? I've put together a test stl / gcode just to see if I can see any artifacts. Here's the files. You may want to stretch it vertically if you want more comparison layers, but I included a gcode file from S3D at 0.2mm layer height at 0.4mm:

https://drive.google.com/open?id=1zZSb3GSWtmA65jmILRCykF0DZYADhaPb

Each ring has a different edge count. Bottom layer is a standard circle in Fusion 360. The next layer has 1000 edges, followed by 500, 100, and 50.

One change I've been playing with is increasing the buffer size. I noticed 16 seems to be a bit rough with lots of tiny movements. With my 32 bit board having lots of RAM, I increased it to 128:

#if ENABLED(SDSUPPORT)

#define BLOCK_BUFFER_SIZE 128 // SD,LCD,Buttons take more memory, block buffer needs to be smaller

My results seem to be quite smooth, even with the MIN_STEPS_PER_SEGMENT = 1. This is on an Ender 5 with a E3 DIP STM32F103RC with all steppers as TMC2208 and LA enabled. I also ran the print at 300% speed just to see if I was saturating the 128 buffer. Stealthchop was turned off for the extruder as I managed to stall it with it enabled.

EDIT Added a 'tall' version that's stretched 500% on the Z. Will update with photo.

EDIT 2 Tall results at 300% speed:

@XDA-Bam @XBrav I was testing on R=8mm features, which is probably too tight. Probably cone in vase mode would be more efficient and cover all curvatures. My curves are exported with maximum resolution in Fusion, and sliced in Cura with maximum resolution so it definitely pushes the limit.

Steps per mm is 160 in my case, so yes, seems like higher MIN_STEPS would be needed in my case according to your formula. I will test min steps = 32.

BLOCK_BUFFER_SIZE is 64 in my case.

@XDA-Bam Tested with MIN_STEPS_PER_SEGMENT=32. "Noise" is still the same in amplitude, but lower in frequency. Probably on larger circles it would be less visible, but at R=8mm it is still very significant and significantly degrades surface quality.

Could I get a little information about BLOCK_BUFFER_SIZE, BUFSIZE, TX_BUFFER_SIZE, to what those do in our cases?

I do not see slowdown on SKR PRO during printing of gcode with lots of small segments. But surface quality difference between JD and Jerk is still there. MIN_STEPS_PER_SEGMENT did not changed it much.

I see hardly any differences in surface quality for MIN_STEPS 6 and 16. MIN_STEPS 16 has slightly less "noise" on the surface, but still very far from JERK quality.

Jerk surface quality is better with all MIN_STEPS values (16, 6, 1).

I can't seem to replicate this on my SKR Mini V1.2 running 2.0.5.3, also printed with JD and Classic Jerk. Sliced with PrusaSlicer 2.2.0 at max resolution possible. S_CURVE_ACCELERATION was enabled while LINEAR_ADVANCE was disabled.

I'm also not sure this is the same issue as OP, who seems to have shown this happens with fill segments - which this model doesn't have.

@swilkens Linear Advance?

@swilkens Linear Advance?

No, I'm not using Linear Advance. It is enabled in the firmware, but the K value is set to 0 - the same as in the first post of this topic.

I also don't think LA would have a strong effect on this geometry, as the outer line is a of constant tangency. But I am checking now.

Can't reproduce the issue with @BarsMonster 's test file with JD. (SKR Mini E3 v1.2)

Mine is smooth as @swilkens 's

Will try with increased MIN_STEPS_PER_SEGMENT, with a different model.

@swilkens Linear Advance?

No, I'm not using Linear Advance. It is enabled in the firmware, but the K value is set to 0 - the same as in the first post of this topic.

I also don't think LA would have a strong effect on this geometry, as the outer line is a of constant tangency. But I am checking now.

A heads up, LA with K=0 is not the same as compiling without it from what I've heard. If you have no need for LA, comment out the define and recompile.

Not saying it is a solution for you, just a note.

A heads up, LA with K=0 is not the same as compiling without it from what I've heard. If you have no need for LA, comment out the define and recompile.

Not saying it is a solution for you, just a note.

I'm not sure that's true.

if you only need to have a part printed fast without special needs in terms of quality, there is no reason to enable LIN_ADVANCE at all. For those prints, you can just set K to 0."

https://marlinfw.org/docs/features/lin_advance.html

Feel free to look at the code and verify code execution/planner queue is not the same. If it was the same, then they'd just default it to enabled with K=0.

I realize the web site says that, but I'm talking not talking about outcome, I'm talking about specific code that is executed with K=0 vs not enabled. Compiled result is different. X/10 times might yield the out visible result.

You might think of it as All roads lead to Rome, but each road is different and you might get lost on the lesser traveled paths.

Exactly. For example, it is also different to compile with Junction Deviation and set it to zero than to compile with Classic Jerk and set it to zero. The goal is that there are no _apparent_ differences... but internal code is what brought us here ;)

Uptate: In my case I've been observing the printer with Classic Jerk and it is also slightly "shaky" in the gap fill parts. I would also need to test changing the buffer size and min_steps_per_segment but don't have the time to do this properly :(

Looking forward to learning the cause & solution, stay safe!

Exactly. For example, it is also different to compile with Junction Deviation and set it to zero than to compile with Classic Jerk and set it to zero. The goal is that there are no _apparent_ differences... but internal code is what brought us here ;)

Uptate: In my case I've been observing the printer with Classic Jerk and it is also slightly "shaky" in the gap fill parts. I would also need to test changing the buffer size and min_steps_per_segment but don't have the time to do this properly :(

Looking forward to learning the cause & solution, stay safe!

Well, that's different. JD is only enabled by specifically disabling classic jerk. Therefore JD 0 would be no JD and no Jerk, correct?

And fair enough @randellhodges. I agree in regards to this testing that LA K0 != JD OFF.

I was commenting more on the fact that it has visible difference... but really that's irrelevant to this issue so... my bad.

I verified g-code generated by Cura, which shows the issue.

Print segments are 0.4-0.6mm in length, extrusion multiplier (how much filament is extruded vs movement length) is the same for all moves (within 0.1%). So it seems gcode is correct. With that I am not sure how MIN_STEPS_PER_SEGMENT could affect the prints as all segments are larger than 32 steps. (160 steps per mm in my case)

@swilkens @qwewer0 Could you try to print in vase mode or use my gcode? Probably gradual change of Z makes it visible.

I wonder what is Z-jerk when using JD? If Z changes often, and Z-jerk is 0 with JD, these tiny moves on Z movement could be very slow and cause surface defects.

With or without vase mode, can't reproduce the issue on Xtest-HR.stl

@BarsMonster @qwewer0 for me the problem appears in the "gap fill" which aren't generated in your slicing settings, see these comments above

However, your code has uneven Z steps:

G1 X143.954 Y66.913 E1745.41495

G1 X144.322 Y66.652 Z13.002 E1745.43261

G1 X144.7 Y66.403 E1745.45033

G1 X144.895 Y66.282 E1745.45931

G1 X145.282 Y66.057 E1745.47684

G1 X145.483 Y65.948 E1745.48579

G1 X145.878 Y65.747 Z13.003 E1745.50314

G1 X146.294 Y65.555 E1745.52108

G1 X146.71 Y65.38 E1745.53874

G1 X147.126 Y65.224 E1745.55614

G1 X147.337 Y65.151 E1745.56488

G1 X147.774 Y65.014 Z13.004 E1745.58281

G1 X148.201 Y64.897 E1745.60014

G1 X148.64 Y64.793 E1745.6178

G1 X149.09 Y64.704 E1745.63576

G1 X149.535 Y64.633 Z13.005 E1745.6534

G1 X149.976 Y64.579 E1745.67079

G1 X150.206 Y64.558 E1745.67983

G1 X150.535 Y64.533 E1745.69275

G1 X150.993 Y64.515 E1745.71069

G1 X151.438 Y64.513 Z13.006 E1745.72811

G1 X151.79 Y64.523 E1745.7419

If you have different acceleration for Z this may be causing shaking.

@CarlosGS Z steps are monotonic, with 1um steps. Having Z coordinate on each line would require like 50nm (0.05um) resolution on Z axis which is hardly helpful. I agree that Z steps could cause the issue, but it's hard to make it smaller than 1um.

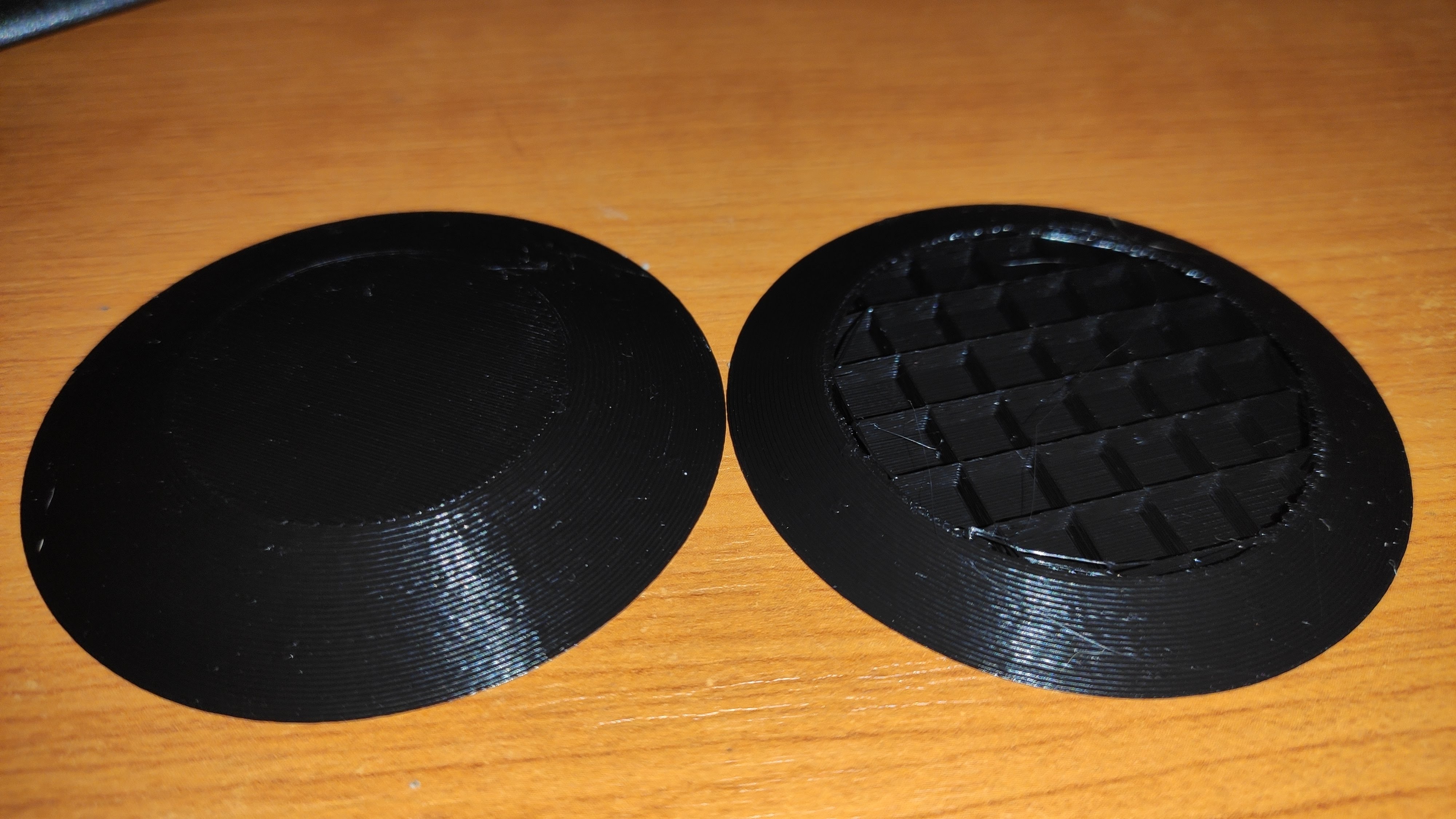

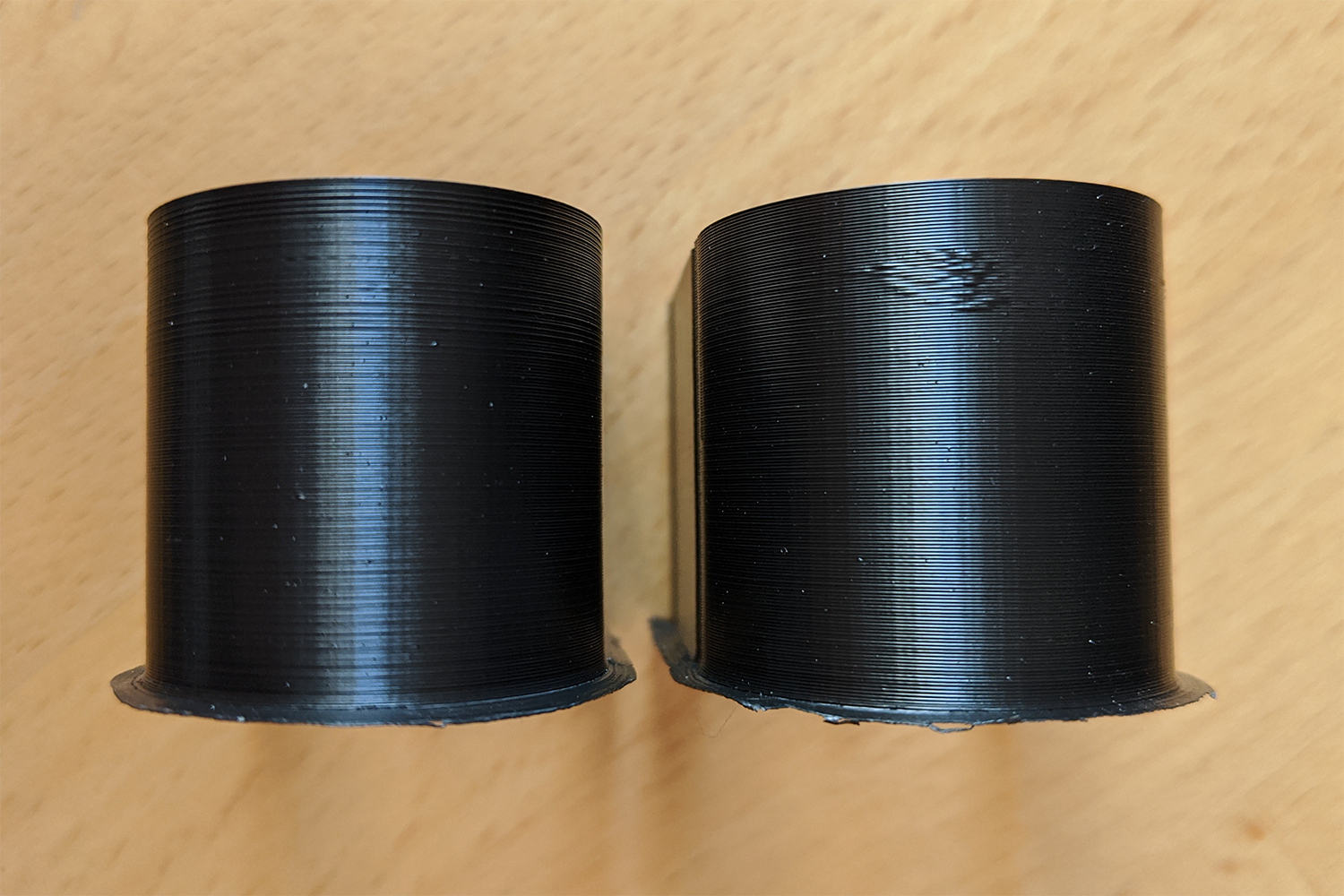

Tested the bottom of the penguin model with default MIN_STEPS_PER_SEGMENT 6 and 16 as @XDA-Bam wrote, but the results are the same. (penguin has no gap fill, but still has jitteriness) (Left 6, Right 16)

Penguin model has less triangles and those are larger than in Xtest-HR.stl, but still Xtest-HR.stl is smooth and the penguin is jittery.

@BarsMonster I can't trigger this issue on your model, regardless of vase mode, lin_adv, classic jerk of juction deviation settings.

@CarlosGS I can't seem to force a slice where the fill is of uneven lengths as you showed in the gcode analysis of the face shield. I took the same model and sliced, all similar arc lengths. It appears that this is only happening for specific slices of specific shell thickness / nozzle width configuration in slicers. I imagine also the STL quality affects this. What is your configured nozzle diameter in prusa? which version of prusa slicer are you using?

To replicate this here, we need a small simple model that triggers this reliably.

@BarsMonster Ah! Did you try with and without vase mode? (Only printing 1 perimeter too)

@swilkens 0.4mm for both Cura and Prusaslicer, though it also depends on infill overlap.. too many parameters x)

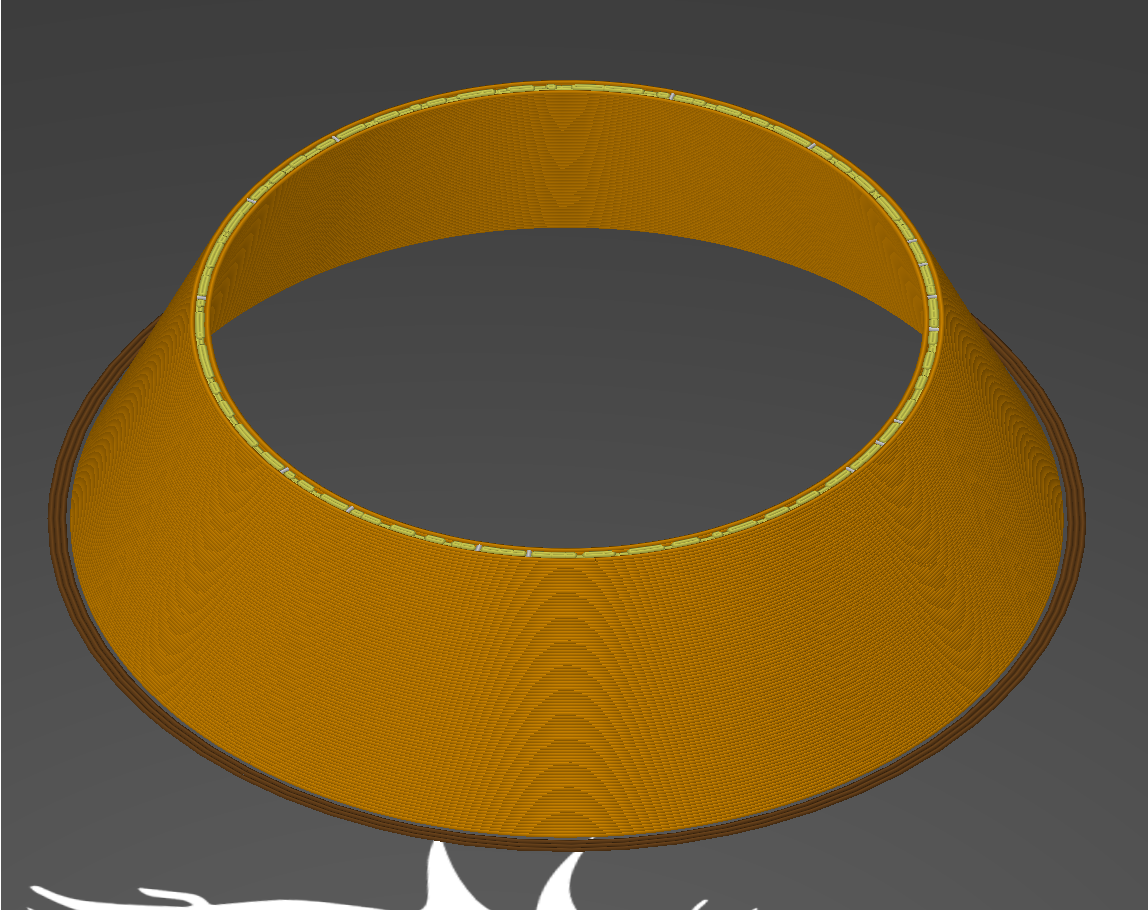

@CarlosGS I made a simple cone that goes from 2.0 mm thickness in the bottom to 0.6 mm thickness in the top, this should guarantee fill lines occurring over a part of the total height regardless of nozzle settings.

I still can't get it to slice in such a way that these variable segment length fills happen. Can you try to slice the same STL and check the fill segment lengths? I'm using PrusaSlicer 2.2.0

Even better - can you provide GCODE that triggers this?

@swilkens OK I've tried with standard PrusaSlicer settings, this is the result:

Great model BTW, the perfect test for "arcing" gap fill!

I still can't get this to misbehave, are you printing from SD card or over the serial interface (e.g. via octoprint or something)?

Possibly related: https://github.com/MarlinFirmware/Marlin/issues/17117

With the cone, I got a somewhat ok result, except for two layer where there was small segmented gap fill. On the upper and lower parts the issue is there, but not as noticeable as on the line in the middle. So, maybe if I got more of those gap fills then the results would have been awful.

Gents please attach slicer 3mf projects saves, this allows us to automagically have all your settings and hence slice in exactly the same way. I start wonderin whether this doesn't slowly turn into a slicer bug report rather than a marlin one.

I believe that the reason why CURA results seems to have been worse than Slic3r results can be related to (in my case) the following examples.Note that the Slic3r results with JD where also bad even without the jiggling inner wall.

Slic3r

Cura

Here is a slice by Slic3r with slighty different settings. We will be unable to find the root cause easily because I think it is an overlay of firmware settings, slicer settings and 3d model that produces these inconsistent end results. Compare to the above :

That's not gapfill in slic3r (that is a grey-white line)- what you have is Infill mixed with Gapfill perpendicular lines.

Got it.

Gents please attach slicer 3mf projects saves, this allows us to automagically have all your settings and hence slice in exactly the same way.

Here is my cone: Body1.zip

I start wonderin whether this doesn't slowly turn into a slicer bug report rather than a marlin one.

The penguin model has no gap fill, yet can see the rough outside with JD, but the different slicers (Cura, PrusaSlicer, Fusion 360) still have an affect on it.

Slicer settings like weird fills between two outside lines will produce rough surface.

But, the same gcode that is ugly with JD is smooth with CJ.

@qwewer0 : can you try and print this :

Body1_tzo.zip

@qwewer0 : can you try and print this :

Body1_tzo.zip

Yes, it will take 30+ min to print it.

Only with JD?

@thierryzoller This is the result. It is the same as it was, just the ugly layer went up, because the line width is 0.3 from 0.36.

"Fill gaps in walls" can cause problems with tiny extrusions and/or gaps between the walls on curved objects. I've had it happen two weeks ago on a totally different design. In Cura, it's a known bug. That's not a problem with JD, but there definitely is a problem with JD, too. Maybe we should take gap fill out of the equation for now and focus solely on curved surfaces and artifacts? Use thin-walled objects and avoid gaps?

This is my print, wall thickness 2, no infill or gap fill (also not necessary):

Jerk left, JD right. The curve is R=15, printed @ 100 mm/s with 750 mm/s² accel.

Can we have this as a 3MF file?

Thanks for the detailed test, it is clear now:

Notice there are still segments of different lenght and they match the area distorted with JD!!

G1 X139.056 Y114.37 E1229.2764

G1 X139.179 Y114.987 E1229.29733

G1 X139.276 Y115.613 E1229.3184

G1 X139.286 Y115.715 E1229.32181

G1 X139.345 Y116.248 E1229.33964

G1 X139.35 Y116.339 E1229.34267

G1 X139.386 Y116.874 E1229.36051

G1 X139.387 Y116.965 E1229.36354

G1 X139.399 Y117.592 E1229.38439

G1 X139.38 Y118.241 E1229.40599

I'm using https://ncviewer.com/ to view the paths & find these.

After seeing this, it matches with previous1 & previous2. Yes those were with Gap Fill and not in the perimeters, but the result is the same: random short segments interleaved with the equidistant ones.

Slightly off topic, but I wonder if something like this experimental plugin would help much:

https://community.octoprint.org/t/new-plugin-anti-stutter-need-testers/18077

The idea is that it would turn all those tiny segments into an arc move. The tiny segments that make up a curve seems to be the problem, or at least a big contributor.

Looks like that code could also find its way into a cura plugin.

Here is my result on the Teaching_Tech_speed_test.stl, and the 3mf file: Teaching_Tech_speed_test.zip

Can't see or feel any difference between them.

Left JD, Right CJ

Edit: I would love to see arc support in slicers...

@XDA-Bam Could you please test this G-code that simply repeats the problematic arc?

JD_single_arc_test.zip

Please note that I've removed all extrusion/temperature related parts. If you can see the same misbehavior, it means that the problem is not related to extrusion but XYZ motion. I've tested it on my printer with Classic Jerk and there is no visible stuttering.

@qwewer0 It's best to use my GCode if you want to compare, because it's got those tiny line segments around layer 120. This is where the artifacts appear for me. Also, you won't see them on the bottom layers. So only printing the lower section will always look OK. You might get away with only printing the top third - didn't test that, yet.

@CarlosGS I'll look into it.

@XDA-Bam You might be right, but I didn't just printed the bottom part, but squashed it in Z.

@XDA-Bam Could you please test this G-code that simply repeats the problematic arc?

JD_single_arc_test.zipPlease note that I've removed all extrusion/temperature related parts. If you can see the same misbehavior, it means that the problem is not related to extrusion but XYZ motion. I've tested it on my printer with Classic Jerk and there is no visible stuttering.

This is a smart way to do this, thanks.

No stutter on Classic Jerk, seems to stall on the apex of the curve with Junction Deviation. Massive difference for me, it appears to slow down dramatically halfway during the curve with JUNCTION_DEVIATION

@CarlosGS The JD_single_arc_test.gcode with CJ it feels ok, but with JD it is noticeably more jittery.

Aw yeah!! :)

If more people can confirm this, then we can start debugging!

Results so far, all was done on Marlin 2.0.5.3 release with the The JD_single_arc_test.gcode file from @CarlosGS on an SKR Mini V1.2 with TMC 2209's.

Print started from SD Card as well as via Serial interface, no difference.

LINEAR_ADVANCE doesn't seem to affect it, neither does the stepper mode. But increasing MINIMUM_STEPS_PER_SEGMENT to 16 (up from 6) certainly improved the situation dramatically. I assume this binds some of the smaller segments together.

S_CURVE_ACCELERATION also had no effect.

On a side note - it would be nice if these features could be turned on / off with a configuration setting if the device has sufficient RAM to compile them in.

STUTTER | CJ | JD | LA | Mode | MIN_STEPS_PER_SEG. | S_CURVE_ACC.

------------ | ------------ | ------------- | ------------- | ------------- | ------------- | -------------

NO | Y | - | Y | Stealth | 6 | Y

YES | - | Y | Y | Stealth | 6 | Y

YES | - | Y | - | Stealth | 6 | Y

YES | - | Y | - | SpreadCycle | 6 | Y

IMPROVED | - | Y | - | Stealth | 16 | Y

YES | - | Y | - | Stealth | 6 | -

With JD and MIN_STEPS_PER_SEGMENT 16 the JD_single_arc_test.gcode is close to the CJ results, and has definitely less stutter, but it is still not as smooth as with CJ. So over all it helped, but didn't solved it.

To replicate the table of @swilkens and summarize my tests (ASS is adaptive steps smoothing):

| Jerk type | LA | S-Curve | ASS | Min Steps | Stutter |

| --- | --- | --- | --- | --- | --- |

| JD | - | ON | ON | 6 |YES |

| JD | ON | - | ON | 6 | YES |

| JD | ON | - | - | 6 | YES |

| JD | ON | - | - | 16 | MUCH LESS* |

| CJ | ON | - | - | 6 | NO |

(*: Since I now know what to look for, I can still make out 3 tiny stutters on the surface even with MIN_STEPS_PER_SEGMENT = 16. But that's about 90% less than with 6 steps.)

@CarlosGS I just tested your JD_single_arc_test.gcode and can confirm:

- With JD, there is noticeable jitter. The Y-axis stutters about half way around the arc for a couple short steps. The X-axis sounds very rough but doesn't feel that bad.

- With CJ, both axis are much smoother, there is no hard stutter on Y and X sounds normal & smooth.

Looking in the right direction! Could you give a try to compile with JD and set it to zero? I want to know if it still produces the stuttering. [DISMISS THIS]

I'm not too familiar with the code, but this line might be the problem. It seems to reduce speed for small segments:

https://github.com/MarlinFirmware/Marlin/blob/fc11e7217460056473f91dfb7dd574884319f567/Marlin/src/module/planner.cpp#L2354

In the example arc gcode the regular segments are ~0.6mm and the tiny ones ~0.1mm, which would translate in suddenly resetting the limit_sqr variable down to a 17% of its normal value during the arc.

@CarlosGS I've compared JD code to GRBL implementation.

GRBL does not have this whole section "if (block->millimeters < 1) {", and does not try to limit speed for small segments. I am not sure what was the purpose of the special treatment of small segments. I will try to find a commit which introduced this special treatment of small segments.

The rest of JD math is almost identical.

So this is the commit that introduced code for handling of small print segments with JD: https://github.com/MarlinFirmware/Marlin/commit/a11eb50a3eab6d58d595a67e526fb51190018db3#diff-e4800bd68f101b55ac4ff95513184458

Comment was "Better encapsulation and considerably reduce stepper jitter"

It was authored by @ejtagle and commited by @thinkyhead

Probably they might know more.

On my side - I tried to (incorectly) replace approximate math with hardware FPU call to acos() - which made whole print infinitely slower (i.e. condition always taken). So probably this section does not trigger for all small segments but rather only for few of them, which might be the cause of this noise on the surface.

Ok, we are getting somewhere.

So in planner.cpp with (surely this could only be done as a test on a platform with HW FPU)

const float junction_theta = acos(junction_cos_theta);

instead of

const float junction_theta = (RADIANS(-40) * sq(junction_cos_theta) - RADIANS(50)) * junction_cos_theta + RADIANS(90) - 0.18f;

noise on the surface is gone. If I correctly understood intention of the code, we can just compare junction_cos_theta with -0.7071 (cos of RADIANS(135)), as we are not really interested in actual value of the acos.

Noise is also gone if I remove whole section with "if (block->millimeters < 1) {". So we need to figure out what was original intention of this block of code to not break something else.

It seems to me the acos operation was perhaps too expensive on other hardware, and was thus approximated by the current line, which probably (should check this) evaluates to a higher deviation for smaller segments in a curve.

https://github.com/MarlinFirmware/Marlin/issues/10341#issuecomment-388191754

* hoffbaked: on May 10 2018 tuned and improved the GRBL algorithm for Marlin:

Okay! It seems to be working good. I somewhat arbitrarily cut it off at 1mm

on then on anything with less sides than an octagon. With this, and the

reverse pass actually recalculating things, a corner acceleration value

of 1000 junction deviation of .05 are pretty reasonable. If the cycles

can be spared, a better acos could be used. For all I know, it may be

already calculated in a different place. */

Nice!!!

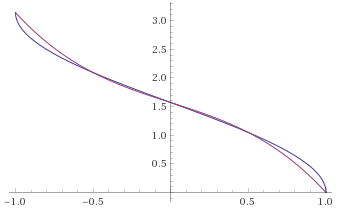

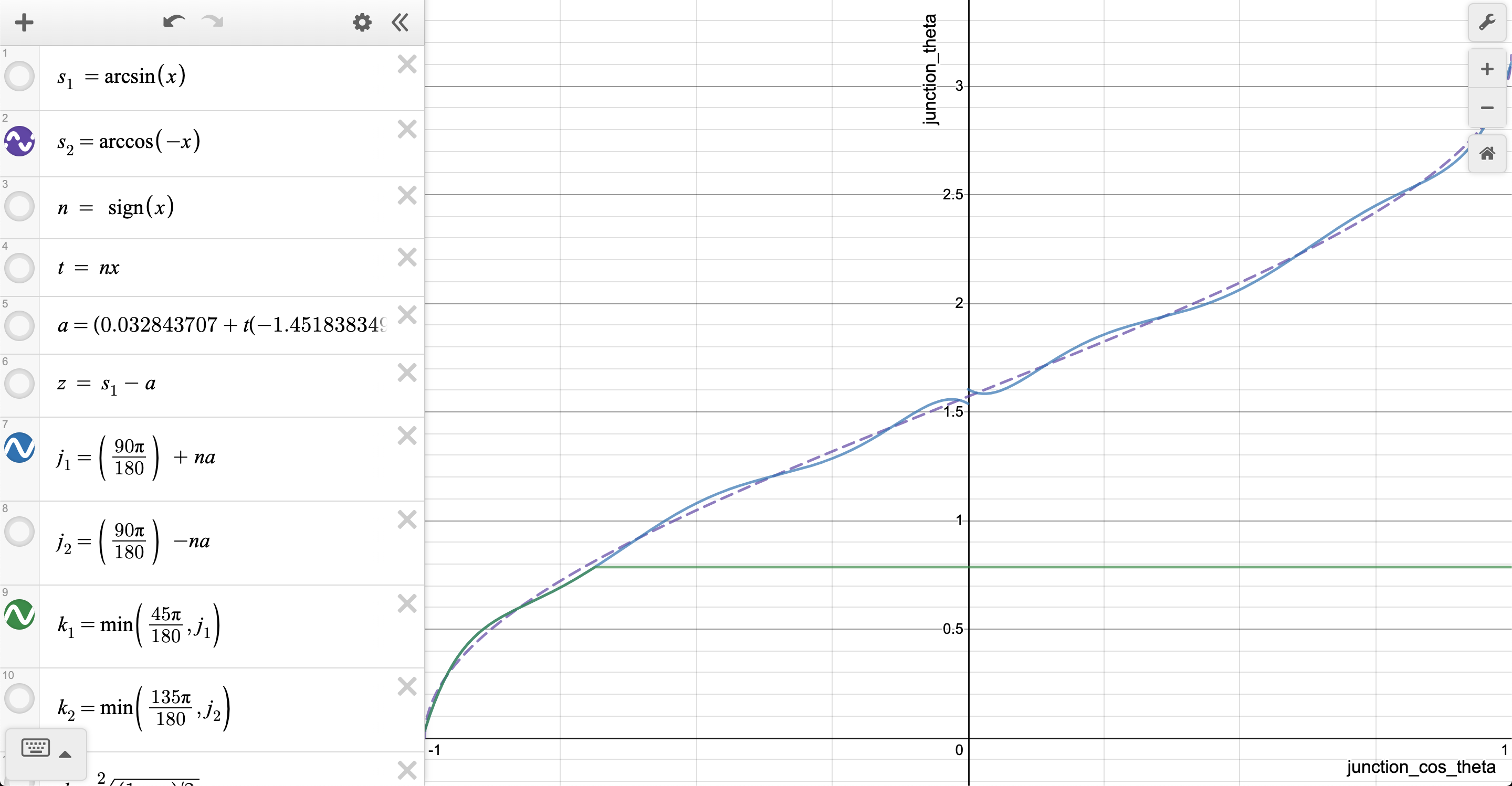

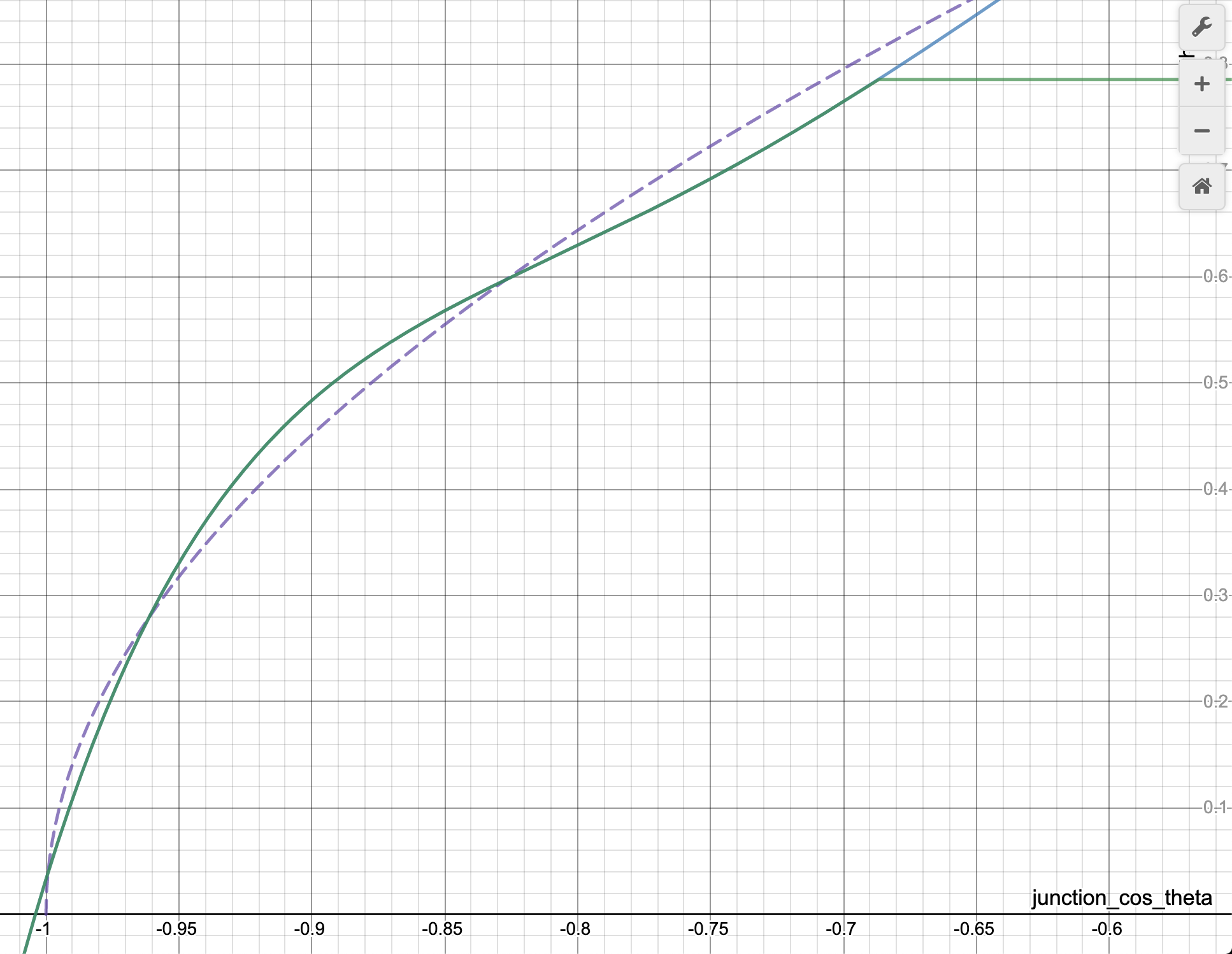

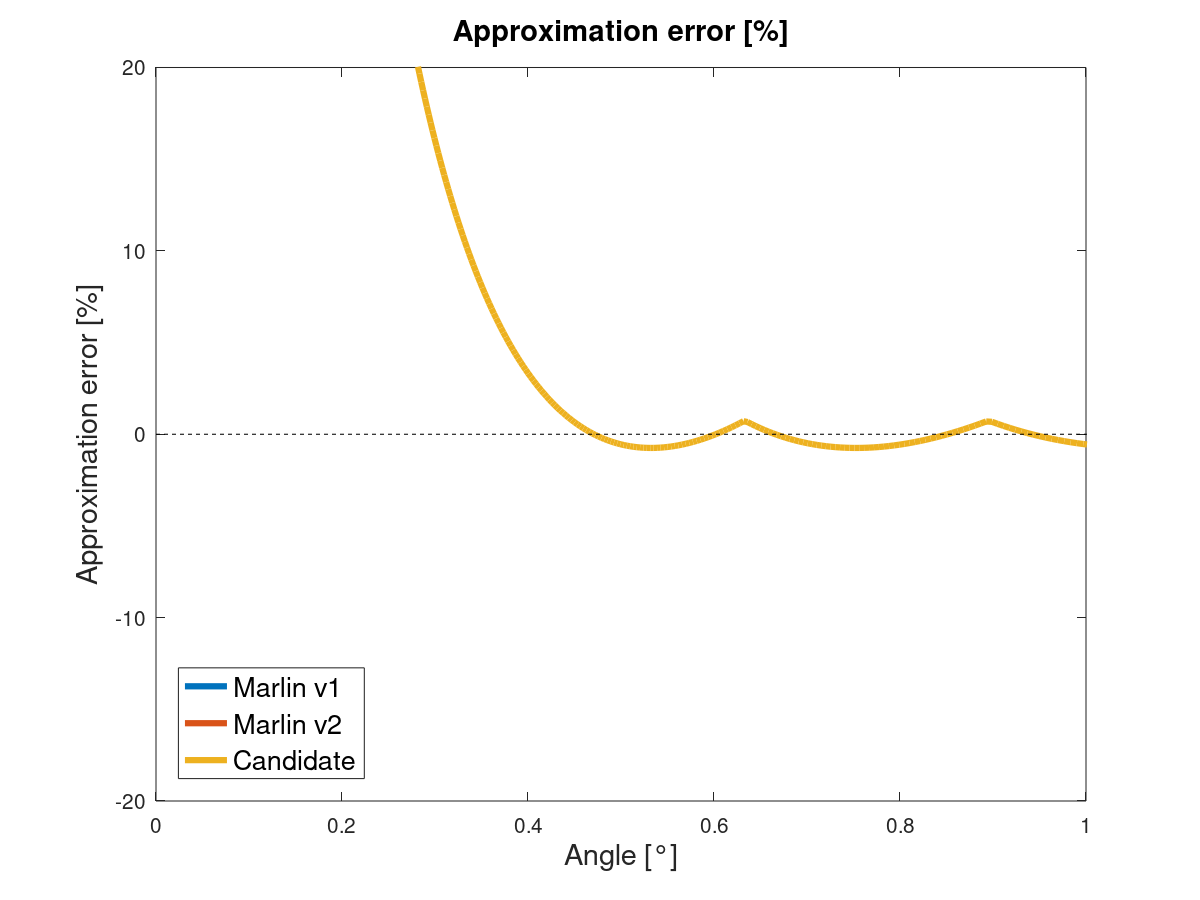

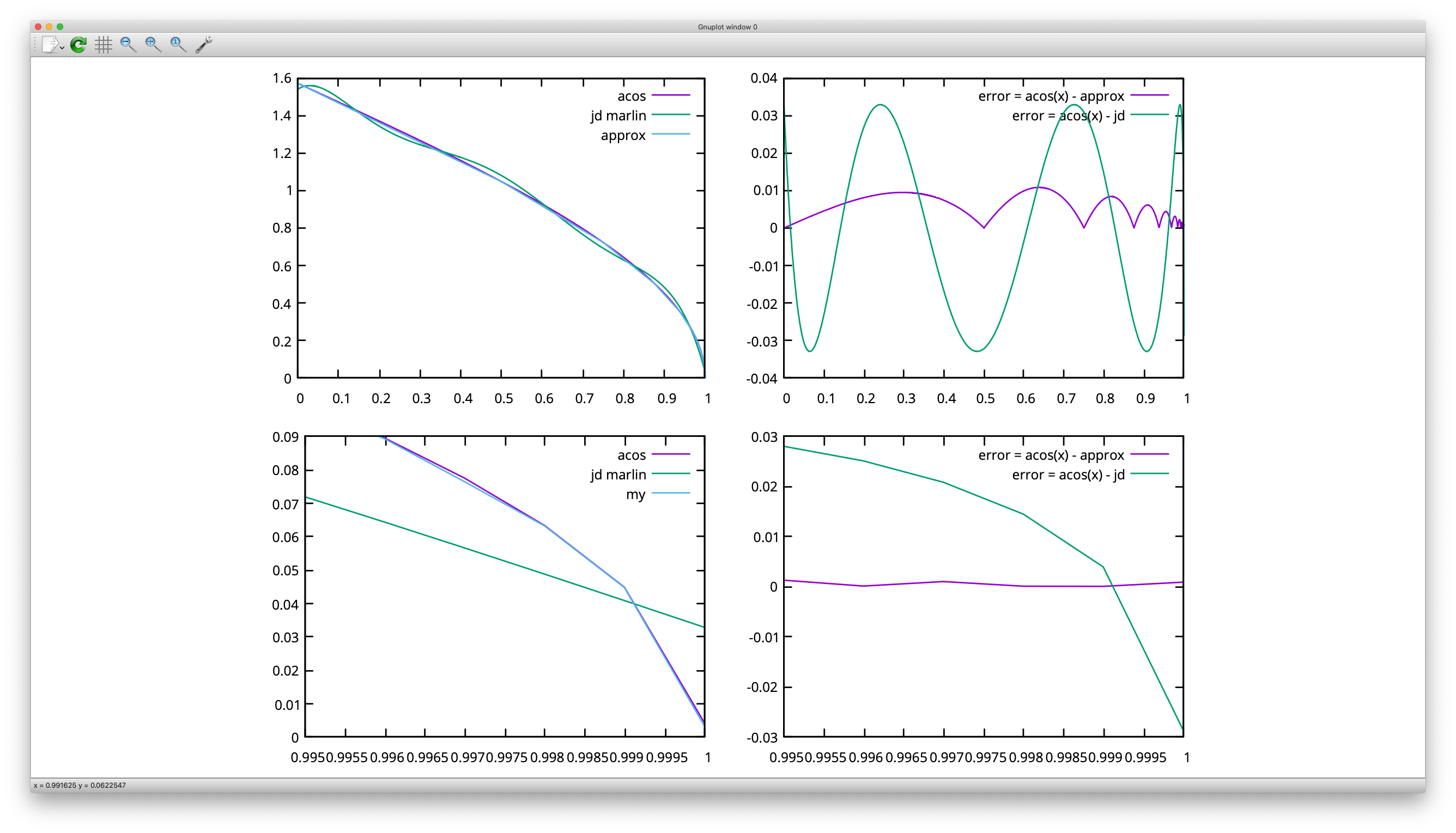

For anyone curious these are the differences between acos and the approximation:

You decide which line is acos() and which one the approximation ;)

I think the -0.18 shouldn't be there... otherwise it looks OKish:

But indeed as @BarsMonster points out this part could be simplified.

PS: I love how this is slowly turning into a "_how did this even work!_" bug :rofl:

The 0.18f is apparently an error bar correction on the approximation, or is at least intended as such. Perhaps a mistake was made here, looking at your plots of both functions.

https://github.com/MarlinFirmware/Marlin/commit/a11eb50a3eab6d58d595a67e526fb51190018db3#diff-e4800bd68f101b55ac4ff95513184458R2139

// Fast acos approximation, minus the error bar to be safe

float junction_theta = (RADIANS(-40) * sq(junction_cos_theta) - RADIANS(50)) * junction_cos_theta + RADIANS(90) - 0.18;

I also noticed this in planner.cpp

// TODO: Technically, the acceleration used in calculation needs to be limited by the minimum of the

// two junctions. However, this shouldn't be a significant problem except in extreme circumstances.

@ejtagle ?

The function is a perfect approximation... in this region :)

:rofl:

EDIT: It seems this is actually the desired behavior! :exploding_head:

Graphs are very... graphic.

But I believe difference between graphs could not explain the result.

As far as I understand - Intention was to have slower movements for large direction changes, around 45°. If it's not 45, but rather 35 or 55 - that's probably acceptable tolerance.

But in my test part - angular difference between consecutive segments is just a few degrees, it should have never gone as high to trigger this condition even with imperfect approximation. There should be something else going on with small segments. Probably we are loosing resolution somewhere on earlier steps due to very short segments.

Also, I am not sure why short segments should get special treatment. Sharp turns are equally hard for both long and short segments.

I was thinking of the test where you replaced the approximation directly with acos() and fixed things.

The problem is not only tolerance but the general offset that doesn't loop angles correctly.

Could you test again removing the 0.18f and leaving the rest as it was?

The problem of the acos()-approximation is, that its error is largest in the region of interest (>135°). If you drop the -0.18f, the error for theta becomes roughly +10,2°. That is, for 2,75 RAD or theta =157,6°, the uncorrected approximation will give you theta =167.8°. This, in term, reduces (RADIANS(180) - junction_theta) in this line and thereby causes an undesired increase in speed. This speed increase is, what the correction factor avoids.

This -0.18f correction also means, that our cutoff for the correction isn't the 135° in the if-condition, but actually 129,2°. I don't think that this is a problem, though.

An advantage of the current approximation and correction is, that (RADIANS(180) - junction_theta) will never be smaller than 0.18. As we divide by this term, this makes sure our results never "explode".

That being said, I do not see any problem with the acos-approximation other than that it's not very precise. A better acos-approximation would be nice and avoid this clumsy -0.18f-factor, but I don't see how this would be causing the stutter.

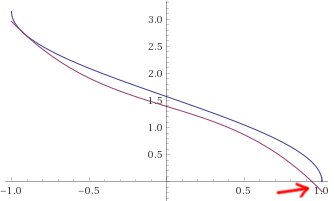

I've looked at the JD_single_arc_test.gcode and computed the segment lengths. The MEAN segment length is 0.60 mm, the MAX is 0.73 and the MIN is 0.09. Here is a plot:

You can clearly see the three tiny segments mid-arc, which is also roughly where you can feel the stutter when printing.

I then went on to estimate the junction speed limit, which is SQRT(limit_sqr) (cmp. limit_sqr). I assumed, that our angle is close to 180°, which means our (RADIANS(180) - junction_theta) is approximately 0.18. This is the best case for speed, as the difference (180-theta) never gets smaller than this. Anything above 175° will be close to identical. Our junction_acceleration is determined in planner.h using the minimum of all axis accelerations which are in motion. As Z and E are not in motion in JD_single_arc_test.gcode, this should - to my understanding - give the minimum of X and Y accelerations. For my printer, that would be 750 mm/s². This results in the following speeds:

| Segment length [mm] | Speed [mm/s] |

| --- | --- |

| 0.73 | 55.1 |

| 0.60 | 50.1 |

| 0.09 | 19.5 |

As you can see, we're more than halving our speed limit for those tiny segments in the arc. Even worse, our limit is jumping up and down a couple of times in quick succession. There is our damn stutter!

Nice!!! is it then a result of the scaling by block->millimeters? https://github.com/MarlinFirmware/Marlin/issues/17342#issuecomment-613716956

I'm really wondering why @BarsMonster could resolve the stutter by replacing the approximation directly the acos() function :thinking:

Nice!!! is it then a result of the scaling by block->millimeters? #17342 (comment)

I'm really wondering why @BarsMonster could resolve the stutter by replacing the approximation directly the acos() function 🤔

Well, kinda both: Using true acos() means junction_theta can become greater than 2.96, thereby (RADIANS(180) - junction_theta) can get smaller than 0.18 and the speed "explodes", mitigating the issue for angles close to 180°. This is pretty much a race of 1/(angle difference) vs. block length. However, for angles closer to 135°, the block length will still be the issue from my understanding.

Maybe we should look out for a better acos()-approximation AND think about handling the issue with alternating segments of vastly different length.

Ah!! Thanks so much for detailing the exact cause, no wonder this was driving us crazy :exploding_head:

Looking for a better implementation may take a while - many trigonometry approximations exist.

As an intermediary solution; We might change MIN_STEPS_PER_SEGMENT to be a function of STEPS_PER_MM and a lower limit on the segment length that we decide here. Probably this means increasing the standard value of MIN_STEPS_PER_SEGMENT for the majority of users. This should only be applied when using JUNCTION_DEVIATION obviously.

Pro: Reduce stuttering when using JD, bandage the issue until we find a good fix.

Con: Lose some model accuracy

Alternatively we go back to CLASSIC_JERK for the default while we evaluate this further.

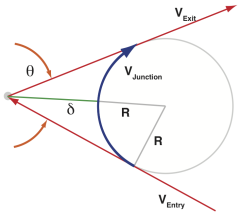

Quick update: And I also found the reasoning behind the handling of small segments. See this comment.

In short: If you divide a "real" curve into discrete segments, the angle between each segment will be determined by the number of segments. As JD normally only determines the junction speed limit based on this angle, it will go faster around an otherwise identical curve, if it has more segments. Ultimately, it wouldn't slow down at all for curves with an infinite amount of segments. That's not sensible, of course. Therefore, the if (block->millimeters < 1) discrimination was implented as far as I understand.

I think the current if < 1 solution is a good hack for most situations, but obviously, we discovered a couple of problems with it. Thinking about a different approach.

@swilkens: MIN_STEPS_PER_SEGMENT has to be 1 to get correct prints of fine detail. The current defaul of 6 already produces problems; increasing it is not a suitable fix.

It should be possible to fix the MIN_STEPS_PER_SEGMENT functionality to be non-breaking though. Rather than merging segments based on a small number of quantized steps, it should be merging them based on smallness relative to the (micro)step size and extremely low absolute change in angle between the segments prior to quantization (both conditions met), in which case the tiny segment is almost surely just an artifact of discretizing a curve and not possibly a microstep-scale feature.

Could you test again removing the 0.18f and leaving the rest as it was?

_I did that and got no noise._ But I also noticed some visible stuttering during brim printing on the first layer. I am trying to investigate what was that and whether it is relevant to this change.

The issue here is that if we make false-negative error in this acos comparison or calculate too high speed - there will also be no noise. So it does not mean that it fixes the issue. It means no stuttering on curves, but might mean faster than intended speed on them.

On acos approximation - while it is possible to compare junction_cos_theta to -0.7071 to avoid any error here, but it would only make comparison more precise, actual speed would still be calculated with error.

This could also explain issues I had with LA, JD and acceleration control #15473 - acceleration control in Cura breaks print moves into small segments with different acceleration, even if it is straight line.

Seems like regardless of whether the approximation is good or not, there should not be any discontinuous behaviors like the hard cutoff here. If the limiting were applied with a continuous window function rather than discrete on/off this kind of stutter shouldn't be possible.

@richfelker Slicers typically break moves into segments based by this logic - based on deviation and length. it would be hard to rethink quantization behind slicer and avoid any artifacts.

@BarsMonster: I'm aware and know how to configure the slicer not to mess this up. The slicer doesn't do step quantizatiion, just its own merging of segments based on maximum permissible deviation and limits on merged segment length, so the original floating point values are still availble with sufficient precision to distinguish between a 90 degree corner that's 1 microstep wide and an excessive-precision approximation of a curve.

Im trying to find out approximation of acos. Looks very close, but it uses sqrt, is it feasible?

Im trying to find out approximation of acos. Looks very close, but it uses sqrt, is it feasible?

There's two close ones using sqrt():

acos = sqrt(2-2*costheta)*pi/sqrt(8) with the possibility to just precalc pi/sqrt(8)=1.11072073

The correction factor pi/sqrt(8)=pi/2/sqrt(2) assures that we reach the same values at pi/2, which is where the sign flip occurs. Otherwise it's identical to your solution, I think. Sign flip has to be handled, as this is only good for [0, 1]. Maximum error +-0.066 rads (Error plot).

Then there's the inverse of the Bhaskara approximation:

pi*sqrt((1-costheta)/(4+costheta))

Same thing with valid range [0, 1] and sign flip. Maximum error is +-0.023 rads (Error plot). This one would be my favourite, if one sqrt() and one divide are acceptable.

EDIT: renamed theta to costheta

@XDA-Bam Square root and division are 14 clocks each on M4F. Multiplication is 1. So it is relatively slow. On 32-bit CPU without FPU we can expect 10 times more clocks. At 100mm/s printing speed and 0.05mm segments that would mean 560000 clocks per second on updated approximation math, which could be around 1% of low-end STM32. But AVR's will suffer.

But if we compare to -0.7071 and calculate acos only when condition is taken - this would save CPU utilization in average, as corners are suppose to trigger rarely.

Just idea: if we use it only at corners, where the printing speed is lower, it can work.

@XDA-Bam I believe if we use lookup table with some 4-8 ranges, we can get away with fast multiplication only and relatively high precision. I believe we have more flash memory than free CPU cycles. One more note: probably we don't need high precision everywhere, but rather near -1? Update2: We only need to cover range of -1..-0.7071 by approximation, which could significantly simplify the task.

@BarsMonster OK, I'm not used to programming C++ and certainly not on AVRs. If you have some good code for lookup tables in mind, maybe throw it in here? 😄

@XDA-Bam: Calling the independent variable theta is really misleading/confusing...

I've been taking a deeper look at the JD_single_arc_test.gcode. I've calculated equivalent radii and resulting junction speeds for a=750 mm/s². For the critical region with stutter, the result is the following (EDIT: velocities are about twice as high as in Marlin, because my spreadsheet uses precise arccos()):

Seg. length | Junc. angle (to _prev._) | Equiv. radius | V_max junction

-- | -- | -- | --

0.6291 | 177.47 | 14.2 | 103.278

0.6335 | 177.53 | 14.7 | 105.066

0.1025 | 176.79 | 1.8 ⏬ | 37.050

0.5363 | 179.28 | 42.8 ⏫ | 179.243

0.0911 | 176.83 | 1.6 ⏬ | 35.142

0.5362 | 179.30 | 43.6 ⏫| 180.831

0.0910 | 176.78 | 1.6 ⏬ | 34.852

0.6271 | 179.53 | 77.0 ⏫ | 240.261

The actual radius is 14.375 mm, so most estimates are reasonably close. What is odd, though, is that on the very short segments, _both_ segment length _and_ junction angle drop. The following junction angle is then longer. This difference of +-1.5° is very important here. The angle in my spreadsheet is calculated backwards, like in the code.

If I now switch to forward calculating the junction angle, the slightly increased junction angle always falls in line with the short segments:

Seg. length | Junc. angle (to _next_) | Equiv. radius | V_max junction

-- | -- | -- | --

0.6291 | 177.53 | 14.6 | 104.706

0.6335 | 176.79 | 11.3 🔽 | 92.112

0.1025 | 179.28 | 8.2 🔽 | 78.360

0.5363 | 176.83 | 9.7 🔽| 85.244

0.0911 | 179.30 | 7.4 🔽 | 74.551

0.5362 | 176.78 | 9.5 🔽 | 84.598

0.0910 | 179.53 | 11.2 🔽 | 91.526

0.6271 | 177.23 | 13.0 | 98.579

And would you look at that: The speed and radius dips are nearly gone! And they are all in one direction now, no more harsh ups and downs. There is still a max dip of about 25% in speed, but not +71/-66% anymore, as before.

Question therefore, for anybody knowing the code well: Why are we calculating the junction angle backwards and not forward?

EDIT: Important typo in header of second table corrected.

@XDA-Bam: Calling the independent variable

thetais really misleading/confusing...

True. Changed.

I've found a MinMax polynomial here, which reaches a maximum error of +-0.033 rads (error plot) using only multiplications.

acos(x) = π * 0.5f

- (0.032843707f

+ x * (-1.451838349f

+ x * (29.66153956f

+ x * (-131.1123477f

+ x * (262.8130562f

+ x * (-242.7199627f

+ x * (84.31466202f

)))))))

Again with valid range [0, 1] and requiring handling of sign flip.

@BarsMonster Would this be faster than sqrt+divide?

I haven't looked deep into the JD code since the last overhaul, but I seem to remember something concerning tight corners with very small segments, which are actually very fast turns, but which either classic jerk or JD would only see as slight turns and so wouldn't slow down properly. I might be remembering wrongly, or that might have been discussed and dismissed.

Anyway, I'm happy to see a better acos approximation being explored as a solution. Anything which is faster than the SQRT is perfectly acceptable. The planner code is all run in user context so if the maths are performed as double on ARMs that have FP acceleration the speed should be very fast.

@thinkyhead Yes, the problem of the main JD code is, that the junction angle shrinks, if the number of segments on a curve increases. See this comment on the original issue and resulting mitigation.

However, replacing the current acos() approximation will only _mitigate_ the stuttering issue somewhat for angles above 169.69° (that'S pi-0.18f rads). This will be a good first step. But as detailed in my comment from yesterday, there seems to be an issue with the way the junction angle is currently computed: We are computing it "backwards" to the last element, which seems to introduce strong oscillations in limit_sqr. This is, in my opinion, the main cause of the stutter. If we switch to compute the junction angle forwards, this seems to solve the problem. What's your opinion on such a change?

Sorry i don't understand. What is forward? What is backward?

The junctions speed we want to calculate depends an the angle between segment a (coming from) and segment b (going to). That should not depend on if we take the angle between (a and b) or between (b and a).

If any other segment is involved - that's an error.

the slightly increased junction angle always falls in line with the short segments:

That's impossible. The angle is always in between of two segments. Only in a spreadsheet the values may appear on the one or on the other line.

The planner code is all run in user context so if the maths are performed as double on ARMs that have FP acceleration the speed should be very fast.

The F3s (and DUE) don't have an Floating Point Unit (FPU) - only the F4s have, and that can only handle _floats_ directly, not _doubles_. However - on the ARMs the code, however it looks, will likely be fast enough. Not so at the AVRs. (For good reason we should always use the 1.F-notation to not accidentally use double constants. Except we really want doubles for the exactness. Usually we don't need that. (The F4s are usually compiled with a compiler option like USE_FLOAT_CONSTANTS - but for the F3s it matters. The AVRs always calculate in float only. I have no idea how the ESPs might handle this.))

As far as i remember acos() is implemented as a lookup table with interpolation for the most processors floating point libraries even for that with FPUs - at least it is not a hardware instruction. A benchmark against the library is mandatory for any wannabe faster approximation (for all relevant platforms and processor architectures (AVR, ARM32 with and without FPU))

Sorry i don't understand. What is forward? What is backward?

Sorry, that may have been unclear.

- Backward: Calculating the junction angle as the angle to the preceding segment.

- Forward: Calculating the junction angle as the angle to the following segment.

The junctions speed we want to calculate depends an the angle between segment a (coming from) and segment b (going to). That should not depend on if we take the angle between (a and b) or between (b and a).

If any other segment is involved - that's an error.

It looks to me, like the Marlin planner always operates segment by segment. Per segment, there is only one junction angle being calculated. I would therefore consider each angle to be more or less "attached" to a specific segment. As it is now, as far as I understand the code, the junction angle is defined as the angle between the segment currently being planned and the preceding segment. I hope this clarifies my train of thought.

Just out of curiosity, I've tested what happens if we define the junction angle as angle from the current segment to the following segment. That's what my second table shows. This definitely eliminates the stuttering. I am not sure though, if this change in the definition of the junction angle makes sense in the context of Marlin code. That's why I'm asking.

the slightly increased junction angle always falls in line with the short segments:

That's impossible. The angle is always in between of two segments. Only in a spreadsheet the values may appear on the one or on the other line.

This is a bit splitting hairs now. But yes. Nonetheless, each segment has exactly one corresponding junction angle in Marlin: The one to the preceding segment. That's what I meant by "falls in line with short segments". In the current code, the slightly smaller junction angles are calculated when the code is looking at the unusually short segments. This amplifies the stuttering problem.

The planner code is all run in user context so if the maths are performed as double on ARMs that have FP acceleration the speed should be very fast.

The F3s (and DUE) don't have an Floating Point Unit (FPU) - only the F4s have, and that can only handle _floats_ directly, not _doubles_. However - on the ARMs the code, however it looks, will likely be fast enough. Not so at the AVRs. (For good reason we should always use the 1.F-notation to not accidentally use double constants. Except we really want doubles for the exactness. Usually we don't need that. (The F4s are usually compiled with a compiler option like USE_FLOAT_CONSTANTS - but for the F3s it matters. The AVRs always calculate in float only. I have no idea how the ESPs might handle this.))

As far as i remember

acos()is implemented as a lookup table with interpolation for the most processors floating point libraries even for that with FPUs - at least it is not a hardware instruction. A benchmark against the library is mandatory for any wannabe faster approximation (for all relevant platforms and processor architectures (AVR, ARM32 with and without FPU))

I would expect a default library implementation to be fast. Nonetheless, the consensus in this thread up to this point seemed to be, that native/library acos() may be too slow to use for JD on some architectures. I have no idea of how fast or slow it actually is and I have no means of testing it myself. I hope someone can either provide some hard numbers, or do a quick test.

I have created a PR with the MinMax polynomial mentioned earlier. It runs fine on my ATMega1284, as far as I've tested it. If the default library acos() is even faster: Perfect. Let's use that. If not, the MinMax will do, I think. Should be easy to test using that PR. In any case, I am certain that we need the increased precision close to cos(180°) to mitigate the stuttering.

I would expect a default library implementation to be fast.

This is an incorrect expectation. The standard math library is there to give correct results, not fast approximations. There are countless different ways to do fast approximations, most of them simple, and all of them tuned to particular uses, so it doesn't make sense for them to be part of any standard library; you just write out the one that makes sense for what you need to do. The hard task that programmers can't be expected to do per-program is making a version that's accurate across the entire domain.

Marlin plans always exactly one planer-buffer-line, the new line, the last line. This segment always stops at zero speed. There is no knowledge about what move could come next or if there will be a next move. So the only junction that can be calculated is the one to the previous line segment. So the junction speed is the highest allowed entry speed for the currently planed segment.

In a second phase of the planing process forward/backward/recalculate-path (optimizer) we try to rise the exit speed of the previous segment (what is still zero) to the entry speed of the new segment, if possible (the last segment must be able to decelerate to zero).

The planer calculates the max. target speed, the max. de/accelerations und the max. entry speed for each segment depending on the involved axes and the settings plus the number of steps for each axis and determines the leading axis (with the most steps). The optimizer determines at what step-number (of the leading axis) the acceleration phase ends and the deceleration phase begins - trying to reach the maximum speed determined by the planing process. It is revisiting all but the currently stepped and the already maximized lines in the planner buffer.

Thank you for explaining this in detail, @AnHardt

I have also realized that calculating the junction angle to the following segment - while impossible anyway, with the current code - would also only mask the stutter problem for our specific test file JD_single_arc_test.gcode. If, in another GCode, the slicer decided to distribute the slightly smaller than average angles differently onto the tiny segments, we would have the same problem again. It's inherent to the discretization and there is no "error" in the code in that sense.

It still bugs me, that we see this sawtooth/stutter in limit_sqr. Would it be feasible to replace block->millimeters in the small segment hack with _an average of the lengths of the current and preceding block_? That would be geometrically bulls*, but it will act as a low-cost lowpass and smooth out the stutter. Would that be acceptable?

Thinking about the problem of junction speed naively…

On the one hand, when you have a Y-bed and an X-carriage that are each on different motors and linear rails, the torque is actually de-coupled between the two axes. So adjusting the speed based on changes of angle is unwarranted. For this reason, in a typical Mendel machine the better choice is Classic Jerk. (CAMB.)

Anyway, when you do want to consider the change in angle in a situation with small segments, you need a trick to be able to examine more of those segments (besides having a huge planner buffer) and to be able to determine that an obtuse change in angle is occurring. Which is to say, a way to "accumulate deceleration" where you're weighting the change in angle in inverse proportion to the amount of time elapsed since the last angular change.

One way to approach this would be to maintain a "change in angle over time" accumulator which starts out at zero, and you can think of it as pointed in the direction that the inertial mass is moving. In circumstances where no deceleration is needed, this value will stay close to zero — or at least below the deceleration threshold.

When there's a change in angle between segments, the amount of angular change (off-axis torque) is weighted by the inverse length (inverse duration) of the segment. If enough change in angle accumulates then a certain amount of deceleration is applied.

Meanwhile, over time you are also subtracting a certain amount from this accumulator, so that it takes a lot of angular change over a small period of time before it goes over its threshold. A perfect 90 degree turn spaced over ten very short segments might be the most obvious case where you would need to slow down to zero but the current planner might miss that completely and only slow down by half….

It bears some experimentation to see if an accumulator-plus-threshold approach could work.

Another thought is, if the original acos approximation wasn't great, and the new one also isn't great, maybe averaging the two together will be less than worse.

junction_theta = RADIANS(90)

+ ((t * (sq(t) * RADIANS(-40) - RADIANS(50)) - 0.18f) - (neg * asinx)) / 2;

…Optimizing out the extra operations…

const float

neg = junction_cos_theta < 0 ? -1 : 1,

t = neg * junction_cos_theta,

asinx = (0.032843707f / 2)

+ t * (-(1.451838349f / 2)

+ t * ( (29.66153956f / 2)

+ t * (-(131.1123477f / 2)

+ t * ( (262.8130562f / 2)

+ t * (-(242.7199627f / 2) + t * (84.31466202f / 2) ) )))),

junction_theta = RADIANS(90) - (neg * asinx)

+ (t * (sq(t) * (RADIANS(-40) / 2) - (RADIANS(50) / 2)) - (0.18f / 2));

From my benchmark in #17575 i'd say.

1/3 + 2/3 = 3/3. Means - if you can't considerably speed up the calculation of averaging the both approximations - take the original fp-libraries acos() function - that's, at least, exact.

The new acos() is pretty good, but it's not super fast. Averaging with the old will drastically reduce precison in the critical region close to 180°, where the old approx was bad. Plus it's slower as @AnHardt pointed out. So, averaging these two correlations has mostly downsides. It may be workable with totally different equations, but we would have to find those first. I like the idea of @BarsMonster to just "divide and conquer" multiple ranges seperately. Maybe this can also offer an advantage in calculaction time.

That being said, the acos() is better already, which eliminated the problem for most prints. And it will probably be improved even further. The main problem with short segments and 'unlucky' angles still holds. I like the idea of an accumulator approach for the change in angle. I will explore it a little more.

I like the idea of an accumulator approach for the change in angle. I will explore it a little more.

Yeah, me too. I only have a vague idea how it would work, but it will need lots of tuning to find the right balance of accumulator "heating and cooling" on those angular changes.

take the original fp-libraries acos() function - that's, at least, exact.

If only we could find our own "what the fuck?" for acos like there is for the fast inv sqrt.

float Q_rsqrt(float number) {

long i;

float x2, y;

const float threehalfs = 1.5F;

x2 = number * 0.5F;

y = number;

i = * ( long * ) &y; // evil floating point bit level hacking

i = 0x5f3759df - ( i >> 1 ); // what the fuck?

y = * ( float * ) &i;

y = y * ( threehalfs - ( x2 * y * y ) ); // 1st iteration

//y = y * ( threehalfs - ( x2 * y * y ) ); // 2nd iteration, this can be removed

return y;

}

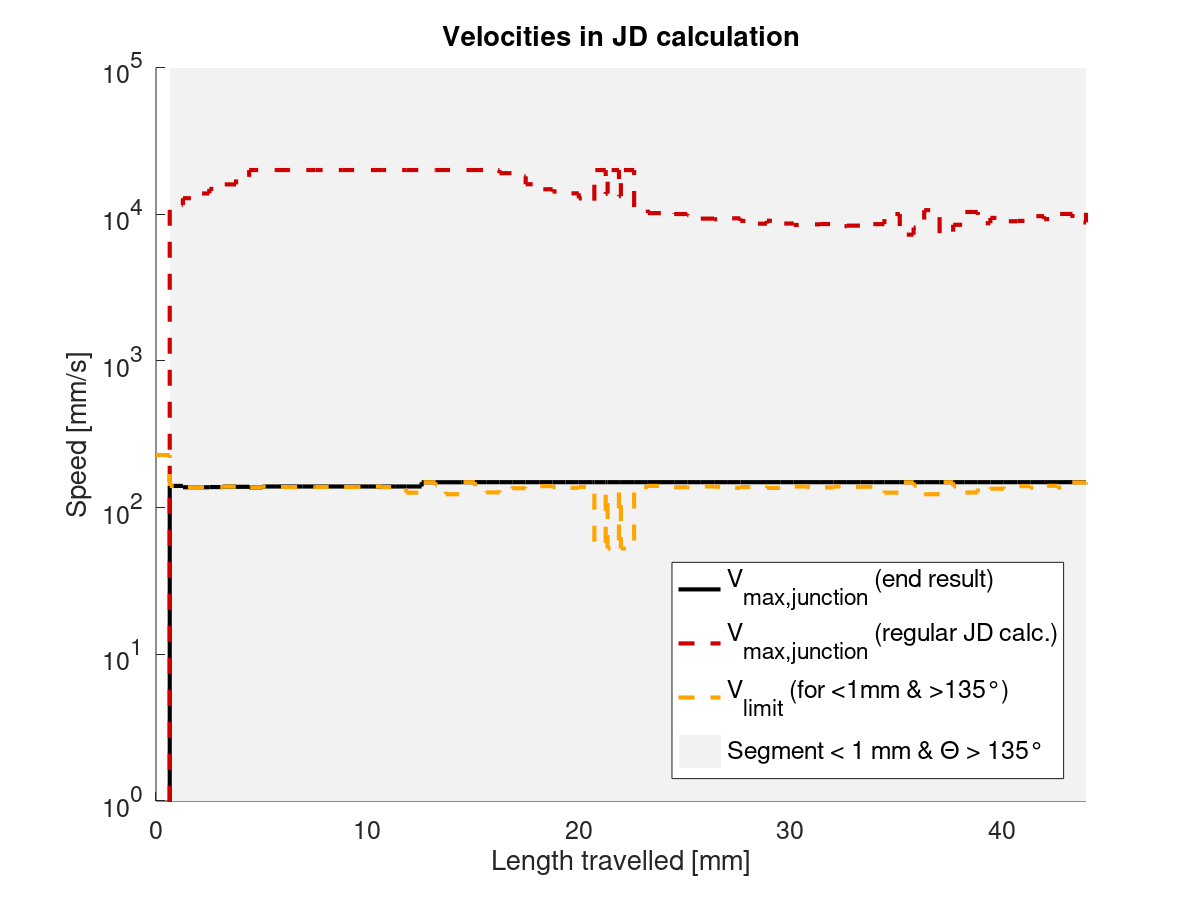

I have tinkered a bit with different ideas concerning the accumulator and have put together a little test script in MATLAB/Octave. I will present you a couple results.

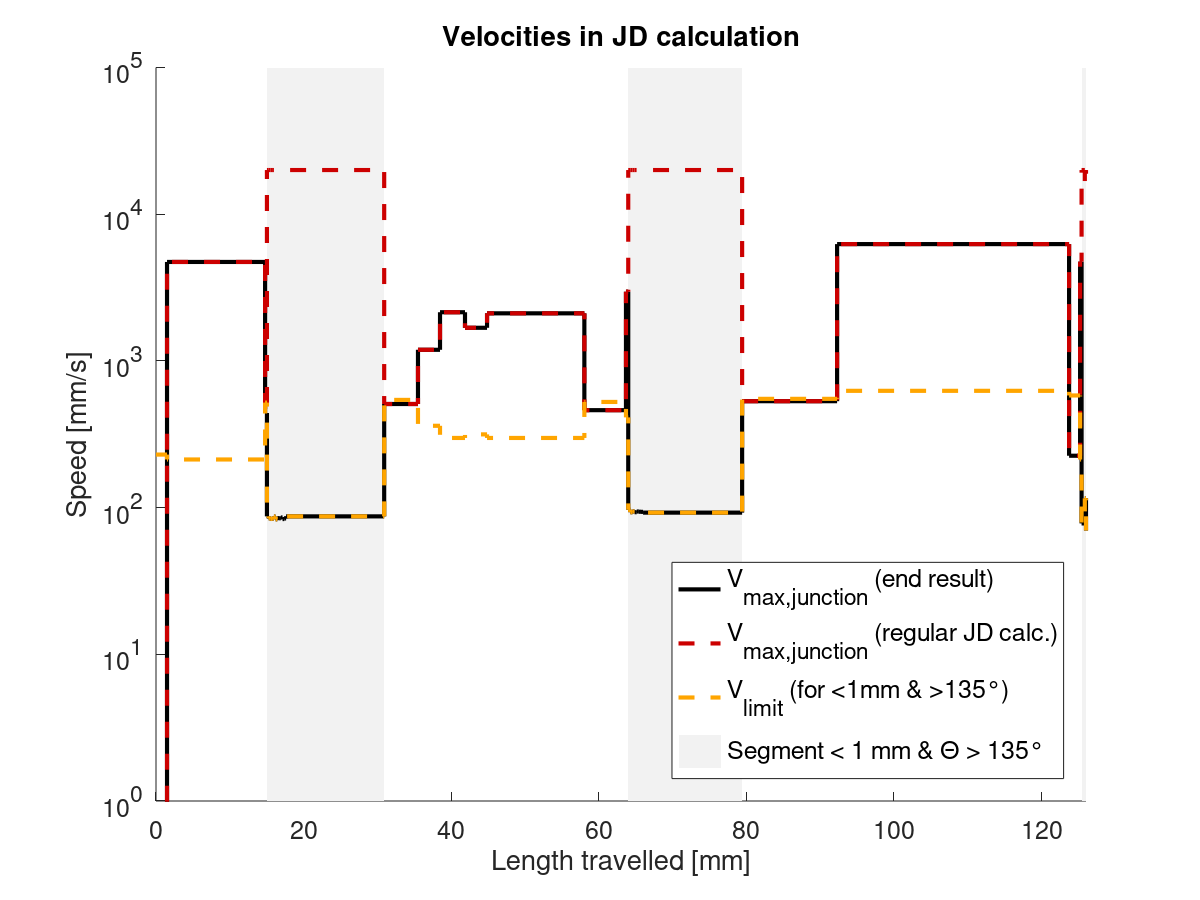

Current state

Let's first take a look at our beloved JD_single_arc_test.gcode, which is the half circle outer wall on this part (starting point marked with red arrow):

On this curve, the current limit speed profile in Marlin should look like this (LOG scaling on Y-axis!):

What is what:

- The red curve is the "default" junction deviation

v_max_junctionand can never be exceeded. - The yellow curve is the additional

limitfor segments < 1 mm with junction angles above >135°. - The light grey area in the background (which does not cover the whole graph!) is the area, where the

< 1 mm & >135°code path is used.

Keep in mind, that the planner could choose lower speeds for the segments, for example because the acceleration is too low to reach the speed limit given in the plot. Also, the print head/planner accelerate and decelerate, which is also not shown in the plot. The plot only shows speed limits.

Possible solution

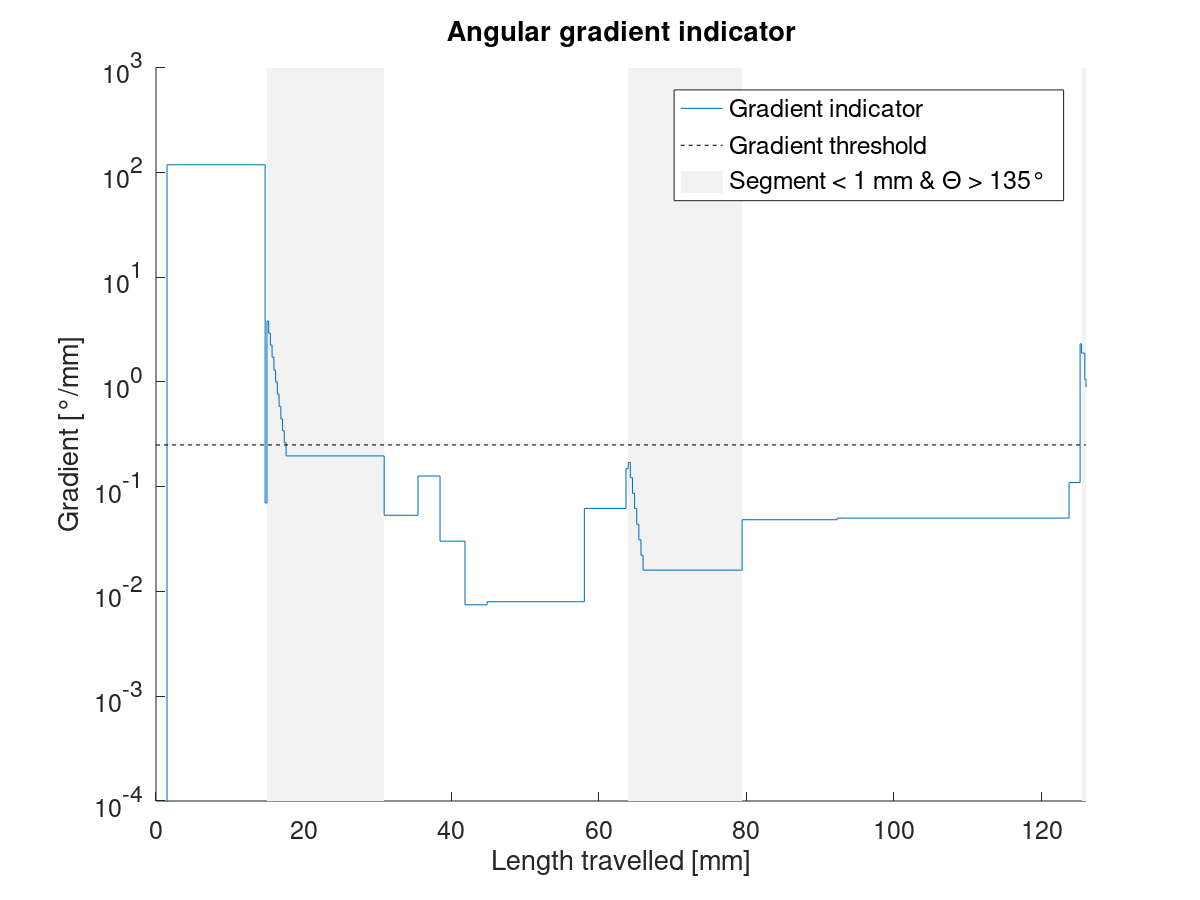

The idea was, to somehow avoid those dips at the 20 mm mark. @thinkyhead suggested some form of "accumulator", which takes into account the change rate of junction_theta. After a lot of tinkering, I have build an exponentially decaying delta_angle_indicator, which roughly tells us, how much our junction angle is changing per mm of travelled length. The main code looks like this:

[MATLAB pseudocode]

delta_angle_indicator = delta_angle_indicator * max(0, 1 - delta_angle_decay ...

* block_millimeters) + abs(delta_theta)) * inverse_millimeters;