littlefs: Working with a non-standard sector size

Hi, I have integrated littlefs and it seems to work for basic read/write/erase operations.

The littlefs configuration I have used is below:

```c
#include "lfs.h"

// configuration of the filesystem is provided by this struct
const struct lfs_config cfg = {
    // block device operations
    .read  = LittleFsRead,
    .prog  = LittleFsProgram,
    .erase = LittleFsErase,
    .sync  = LittleFsSync,

    // block device configuration
    .read_size      = 1,
    .prog_size      = 1,
    .block_size     = 262144, // 256 KiB erase block
    .block_count    = 510,    // 510 * 256 KiB = 127.5 MiB across both chips
    .cache_size     = 64,
    .lookahead_size = 64,
    .block_cycles   = 500,
};
```
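As a quick sanity check on the values above, the sketch below re-checks the geometry rules that, as far as I know, littlefs v2 asserts when it initializes: cache_size a multiple of read_size and prog_size, block_size a multiple of cache_size, lookahead_size a multiple of 8, and block_cycles nonzero (-1 disables wear leveling). It also computes the total capacity. The `geometry` struct and the `geometry_ok`/`geometry_bytes` helpers are hypothetical stand-ins mirroring the `lfs_config` fields, so the snippet compiles without lfs.h; please verify the rules against the asserts in your copy of lfs.c.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical mirror of the lfs_config geometry fields used in the post. */
struct geometry {
    uint32_t read_size;
    uint32_t prog_size;
    uint32_t block_size;
    uint32_t block_count;
    uint32_t cache_size;
    uint32_t lookahead_size;
    int32_t  block_cycles;
};

/* Returns true if the geometry satisfies the divisibility rules that
 * (as far as we know) littlefs v2 asserts at format/mount time. */
static bool geometry_ok(const struct geometry *g) {
    if (g->read_size == 0 || g->prog_size == 0 || g->cache_size == 0) {
        return false; /* avoid division by zero below */
    }
    return g->cache_size % g->read_size == 0
        && g->cache_size % g->prog_size == 0
        && g->block_size % g->cache_size == 0
        && g->lookahead_size % 8 == 0
        && g->block_cycles != 0;
}

/* Total bytes managed by the filesystem (64-bit to avoid overflow). */
static uint64_t geometry_bytes(const struct geometry *g) {
    return (uint64_t)g->block_size * g->block_count;
}

/* The values from the configuration in the post. */
static const struct geometry posted = {
    .read_size = 1,
    .prog_size = 1,
    .block_size = 262144,
    .block_count = 510,
    .cache_size = 64,
    .lookahead_size = 64,
    .block_cycles = 500,
};
```

With these values the rules hold and the filesystem spans 133,693,440 bytes (127.5 MiB), i.e. 510 of the 512 available 256 KiB blocks on two 64 MB chips.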

We are using two S25FS512S flash chips of 64 MB each. Our product will use an IR interface to get out the data written through the filesystem. We would like a maximum file size of 40960 bytes so that the data can be read out quickly, so I changed the maximum file size in lfs.h to 40K as follows:

```c
#define LFS_FILE_MAX 40960
```
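Instead of editing lfs.h, it may be simpler to pass the limit on the compiler command line, assuming your lfs.h wraps its default in an `#ifndef LFS_FILE_MAX` guard as littlefs v2 does (worth double-checking in your copy). The snippet below reproduces that guard pattern in isolation; `effective_file_max` is a hypothetical helper that just reports the value the file was compiled with.

```c
#include <assert.h>
#include <stdint.h>

/* Same guard pattern as lfs.h: the default is only used if the build
 * has not already supplied a value, e.g. with -DLFS_FILE_MAX=40960. */
#ifndef LFS_FILE_MAX
#define LFS_FILE_MAX 2147483647
#endif

/* Report the limit this translation unit was compiled with. */
static int32_t effective_file_max(void) {
    return (int32_t)LFS_FILE_MAX;
}
```

Compiled without any flag this reports 2147483647; building with `cc -DLFS_FILE_MAX=40960 ...` changes it without touching the header, which keeps the littlefs sources unmodified.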

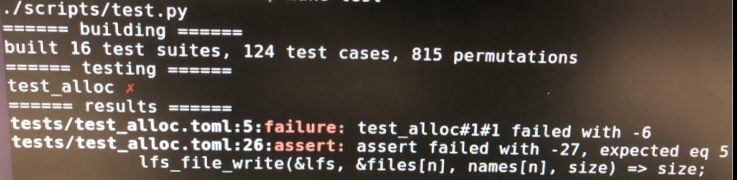

But when I ran the test suite provided with littlefs, it fails with error -27 (LFS_ERR_FBIG, file too large) in the test file test_alloc.toml. After some failure analysis, I found that it fails because of the following line in test_alloc.toml:

```toml
define.SIZE = '(((LFS_BLOCK_SIZE-8)*(LFS_BLOCK_COUNT-6)) / FILES)'
```
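To see why this case trips a 40960-byte limit, the expression quoted above can be evaluated directly. This sketch assumes the case is instantiated with the block size and block count from the configuration in this post and uses `FILES = 3` only as an illustrative value (check the actual `define.FILES` in test_alloc.toml); `test_alloc_size` is a hypothetical helper, not part of the test framework.

```c
#include <assert.h>
#include <stdint.h>

/* Evaluates the test_alloc.toml expression
 *   ((LFS_BLOCK_SIZE-8)*(LFS_BLOCK_COUNT-6)) / FILES
 * in 64-bit arithmetic so larger geometries cannot overflow. */
static uint64_t test_alloc_size(uint64_t block_size, uint64_t block_count,
                                uint64_t files) {
    return ((block_size - 8) * (block_count - 6)) / files;
}
```

With 262144-byte blocks and 510 blocks the numerator is 262136 * 504 = 132,116,544, so `FILES = 3` gives 44,038,848 bytes per file. The result only drops to 40960 or below once FILES reaches 3226, so with this geometry the case cannot fit under the lowered limit.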

With this non-standard sector size, changing the maximum file size to 40K causes the failure. If I change this line so that the file size stays within the limit, the suite then fails somewhere else.

If I leave LFS_FILE_MAX at its default (2147483647) but keep the littlefs configuration listed above, all the test cases pass.

Before I analyse the test failures further, can you please confirm that the littlefs configuration above is correct, and suggest anything else needed for the PC test suite to pass all the test cases?

Regards,

Vivek

Comments

At a quick look, this appears to be a problem with the test suite; the uses of LFS_FILE_MAX in the actual code look reasonable.

Personally, I would manage this sort of constraint in the application rather than in the filesystem; as an application evolves, a need for a larger file could arise, and then you have a problem!
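A minimal sketch of enforcing the limit in the application instead, assuming all writes go through one wrapper. `APP_FILE_MAX` and `app_write_len` are hypothetical names; in a real integration the current position would come from `lfs_file_tell` and the returned length would be passed to `lfs_file_write`, while LFS_FILE_MAX stays at its default.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical application-level cap; the filesystem limit is untouched. */
#define APP_FILE_MAX 40960u

/* Given the current file position and a requested write length, return how
 * many bytes may be written without exceeding APP_FILE_MAX (0 when full).
 * In practice `pos` would come from lfs_file_tell(). */
static uint32_t app_write_len(uint32_t pos, uint32_t requested) {
    if (pos >= APP_FILE_MAX) {
        return 0;
    }
    uint32_t room = APP_FILE_MAX - pos;
    return requested < room ? requested : room;
}
```

Clamping is one choice; returning an error, as littlefs itself does, works equally well. Either way, raising the cap later only touches application code.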

Oh interesting.

I really do appreciate the issues everyone posts. It's interesting learning what preconceptions I have.

@e107steved is right: the tests do not respect LFS_FILE_MAX _at all_. I had assumed LFS_FILE_MAX would be extremely large. It's currently only used where there are metadata limits.

The test framework does support conditional testing, but we're not using it for LFS_FILE_MAX. This is something that should be fixed, but I think it will be low priority for a while. For reference, here is where conditional testing is used:

https://github.com/ARMmbed/littlefs/blob/master/tests/test_exhaustion.toml#L359

Sidenote:

> We would like to have maximum file size of 40960 in order to read the data quickly

LFS_FILE_MAX has no effect on performance. It only prevents files from being written larger than that size, which can be useful if your device doesn't support 32-bit integers.
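To illustrate that the limit is a bounds check rather than a performance setting, here is a simplified model of the kind of check littlefs performs before a write, assuming it compares the end position of the write against the configured maximum (the real `lfs_file_write` also handles caching, block allocation, and more). `model_write_check` and `MODEL_ERR_FBIG` are hypothetical names; -27 is the LFS_ERR_FBIG value from the error reported in this issue.

```c
#include <assert.h>
#include <stdint.h>

#define MODEL_ERR_FBIG (-27) /* same value as LFS_ERR_FBIG: file too large */

/* Simplified model: a write of `size` bytes at offset `pos` is rejected if it
 * would end beyond file_max, otherwise it is allowed. The cost of this check
 * does not depend on file_max, so lowering the limit does not speed up reads
 * or writes; it only rejects writes past the limit. */
static int model_write_check(uint32_t pos, uint32_t size, uint32_t file_max) {
    if ((uint64_t)pos + size > file_max) { /* 64-bit sum avoids wraparound */
        return MODEL_ERR_FBIG;
    }
    return 0;
}
```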
