Feature request summary

Audit comparison would be a nice feature: it would let us try different build configurations and easily see the benefits or drawbacks each one introduces.

With some help and guidance I'm willing to work on it.

What is the motivation or use case for changing this?

It would greatly help the auditor and developer team to ship better and more performant code.

How is this beneficial to Lighthouse?

I think audit comparison is in line with the end goals of Lighthouse.

BTW, I've been using the viewer, which is great.

It would just be great to be able to upload more than just one file and see the advantages and disadvantages

Thanks for the great work

All 10 comments

Yeah! We've called this "report diffing" but I think we're talking about the same thing. We definitely have wanted to do this as well.

We'd love to have you work on this. And I like your idea of adding to the viewer app first. We can then figure out where else we can expose the functionality.

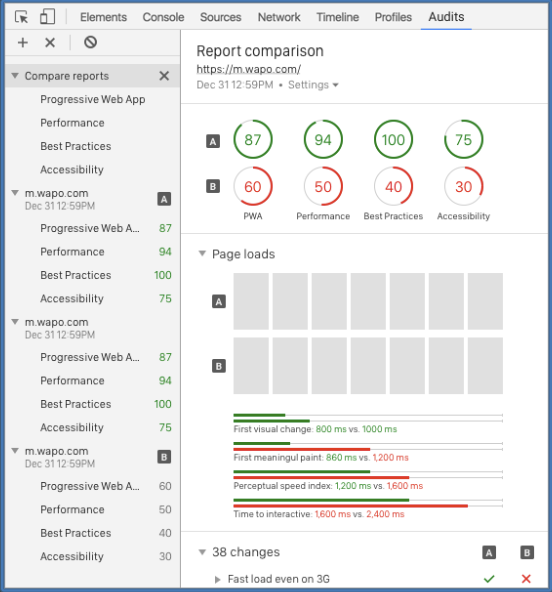

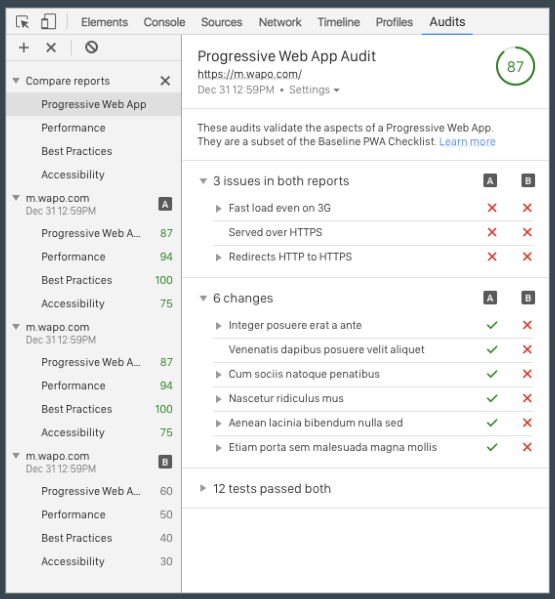

We actually have two older mocks that may be useful:

@nicoabie Does that UI look mostly like what you had in mind?

If so, then we can work out how we want to model the data.

Yeah @paulirish, those mockups look very good to me!

Great. I think first we'll need a data structure to represent the two reports and their differences. It's going to be a little while before I have the time to make a proposal here. (Quite busy with other work, unfortch)... But if you want to take a stab, I think that'd be great.

At a high level, within each category, I think we want to know which items 1) regressed 2) improved 3) are still failing 4) are still passing. And we should keep in mind that we want to compare details, eg. a certain URL is no longer listed in the image-compression audit, because it was fixed.
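The four buckets above, plus the "URL no longer listed" case, could be sketched roughly as follows. This is only an illustration: the `AuditLike` shape, the `DiffStatus` names, the `PASS_THRESHOLD` value, and both helper functions are assumptions made for this example, not existing Lighthouse code.

```typescript
// Hypothetical, trimmed-down shape of one audit result; real LHR audits carry more fields.
interface AuditLike {
  id: string;
  score: number | null; // 0-1, or null when the audit is not scored
  details?: {items?: Array<{url?: string}>};
}

type DiffStatus =
  | 'regressed'
  | 'improved'
  | 'still-failing'
  | 'still-passing'
  | 'not-comparable';

// Assumed pass threshold; the thread does not specify one.
const PASS_THRESHOLD = 0.9;

// Buckets one audit by comparing its score in the first and second run.
function classifyAudit(before: AuditLike, after: AuditLike): DiffStatus {
  if (before.score === null || after.score === null) return 'not-comparable';
  if (after.score < before.score) return 'regressed';
  if (after.score > before.score) return 'improved';
  return after.score >= PASS_THRESHOLD ? 'still-passing' : 'still-failing';
}

// Collects the URLs found in an audit's detail items (items without a URL are skipped).
function urlsOf(audit: AuditLike): string[] {
  const items = (audit.details && audit.details.items) || [];
  return items
    .map(item => item.url)
    .filter((url): url is string => typeof url === 'string');
}

// URLs listed in the first run's details but absent from the second, i.e. fixed.
function resolvedUrls(before: AuditLike, after: AuditLike): string[] {
  const remaining = urlsOf(after);
  return urlsOf(before).filter(url => remaining.indexOf(url) === -1);
}
```

Comparing scores alone will not catch every change in the details, which is why the URL comparison is kept as a separate step here.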

Maybe these groups are used instead of any existing category groups? (perf cat will get special handling, though.)

@paulirish I can begin with that, sure! I've been looking into the code and readme(s) and couldn't find the definition of the JSON report that the report-renderer currently uses. From what I read, that is where everything begins when the HTML of the report is generated.

Do you have any hint, so I can start from that json and extend it?

I think the viewer should be able to:

- Display the report as of now (taking lhr json)

- Display a report diff (taking a "lhrd" json) // this is the data structure proposal you mentioned

- Be able to import a second lhr json when an lhr was loaded before (not an lhrd) and display the diffs as you stated and as shown in the mocks.

Not sure if you would agree on the third one.

Let me know your thoughts and hints, thanks.

sg. i like it.

third item makes sense to me. yeah i think creating an lhrd is something that will happen both inside the viewer and also probably in lighthouse-core (running in node, etc). the report-generator is an example of a module that runs in both places.

json report pointers:

Hi @paulirish,

This is a first draft on the data structure that could be used.

I've tried not to change much of the underlying structure and to reuse things. I've also added extra comments to the properties.

The basic idea is that every audit has two runs with specific results. I extracted the properties of each result from Audit.Result into RunResult. Maybe there are better names.

Some things should be the same for each run, such as the Config. It would not make sense to compare one case with PWA and another without it, I believe.

For a moment it crossed my mind to have a list of RunResults, but then I realized we don't want to go that way, for now at least.

I have omitted some interfaces because they need no changes; AuditRef is one example.

Let me know your thoughts.

```ts
declare global {
  module LH {
    /**
     * The full output of a Lighthouse difference between runs.
     */
    export interface Result {
      /** The URL that was supplied to Lighthouse and initially navigated to. */
      /** Should be the same for both runs. */
      requestedUrl: string;
      /** The post-redirects URL that Lighthouse loaded. */
      /** Should be the same for both runs. */
      finalUrl: string;
      /** The ISO-8601 timestamp of when the first run results were generated. */
      fetchTimeRunA: string;
      /** The ISO-8601 timestamp of when the second run results were generated. */
      fetchTimeRunB: string;
      /** The version of Lighthouse with which these results were generated. */
      /** Should be the same for both runs. */
      lighthouseVersion: string;
      /** An object containing the results of the audits, keyed by the audits' `id` identifier. */
      audits: Record<string, Audit.Result>;
      /** The top-level categories, their overall scores, and member audits. */
      categories: Record<string, Result.Category>;
      /** Descriptions of the groups referenced by CategoryMembers. */
      categoryGroups?: Record<string, Result.ReportGroup>;

      // Additional non-LHR-lite information.

      /** The config settings used for these results. */
      /** Should be the same for both runs. */
      configSettings: Config.Settings;
      /** List of top-level warnings for this Lighthouse run. */
      /** I believe it should be the same for both runs. */
      runWarnings: string[];
      /** A top-level error message that, if present, indicates a serious enough problem that this Lighthouse result may need to be discarded. */
      /** I believe it should be the same for both runs. */
      runtimeError: {code: string, message: string};
      /** The User-Agent string of the browser used to run Lighthouse for these results. */
      /** Should be the same for both runs. */
      userAgent: string;
      /** Information about the environment in which Lighthouse was run. */
      /** Should be the same for both runs. */
      environment: Environment;
      /** Execution timings for the first Lighthouse run. */
      timingRunA: {total: number, [t: string]: number};
      /** Execution timings for the second Lighthouse run. */
      timingRunB: {total: number, [t: string]: number};
      /** The record of all formatted string locations in the LHR and their corresponding source values. */
      /** Should be the same for both runs. */
      i18n: {rendererFormattedStrings: I18NRendererStrings, icuMessagePaths: I18NMessages};
    }

    // Result namespace
    export module Result {
      export interface Category {
        /** The string identifier of the category. */
        id: string;
        /** The human-friendly name of the category. */
        title: string;
        /** A more detailed description of the category and its importance. */
        description?: string;
        /** A description for the manual audits in the category. */
        manualDescription?: string;
        /** The overall score of the category on the first run, the weighted average of all its audits. */
        scoreRunA: number|null;
        /** The overall score of the category on the second run, the weighted average of all its audits. */
        scoreRunB: number|null;
        /** An array of references to all the audit members of this category. */
        auditRefs: AuditRef[];
      }
    }
  }

  module LH.Audit {
    export interface RunResult {
      rawValue: boolean | number | null;
      displayValue?: DisplayValue;
      explanation?: string;
      errorMessage?: string;
      warnings?: string[];
      score: number|null;
      // TODO(bckenny): define details
      details?: any;
    }

    /* Audit result returned in Lighthouse report. All audits offer a description and score of 0-1 */
    export interface Result {
      /** Short, user-visible title for the audit. The text can change depending on if the audit passed or failed. */
      title: string;
      /** The string identifier of the audit, in kebab case. */
      id: string;
      /** A more detailed description that describes why the audit is important and links to Lighthouse documentation on the audit; markdown links supported. */
      description: string;
      /** A string identifying how the score should be interpreted for display. */
      scoreDisplayMode: ScoreDisplayMode;
      /** The result for the first run. */
      runA: RunResult;
      /** The result for the second run. */
      runB: RunResult;
    }
  }
}
```
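To illustrate how two ordinary reports might be folded into the proposed `runA`/`runB` shape, here is a minimal sketch. The interfaces are simplified local copies with only a few fields, and `SingleRunAudit`, `DiffedAudit`, `toRunResult`, and `mergeAudits` are hypothetical names introduced for this example; none of this is existing Lighthouse code.

```typescript
// Simplified local copy of the proposed RunResult; only a few fields are shown.
interface RunResult {
  rawValue: boolean | number | null;
  score: number | null;
  details?: unknown;
}

// A single-run audit as it might appear in one LHR (assumed, simplified shape).
interface SingleRunAudit extends RunResult {
  id: string;
  title: string;
  description: string;
}

// The proposed diffed audit: shared metadata plus one RunResult per run.
interface DiffedAudit {
  id: string;
  title: string;
  description: string;
  runA: RunResult;
  runB: RunResult;
}

// Extracts only the per-run fields from a single-run audit.
function toRunResult(audit: SingleRunAudit): RunResult {
  return {rawValue: audit.rawValue, score: audit.score, details: audit.details};
}

// Hypothetical helper: pairs up audits that are present in both runs, keyed by id.
function mergeAudits(
  auditsA: Record<string, SingleRunAudit>,
  auditsB: Record<string, SingleRunAudit>
): Record<string, DiffedAudit> {
  const merged: Record<string, DiffedAudit> = {};
  for (const id of Object.keys(auditsA)) {
    const a = auditsA[id];
    const b = auditsB[id];
    if (!a || !b) continue; // audits missing from either run are skipped in this sketch
    merged[id] = {
      id,
      title: a.title,
      description: a.description,
      runA: toRunResult(a),
      runB: toRunResult(b),
    };
  }
  return merged;
}
```

Taking the title and description from the first run is a simplification; the proposal notes that an audit's title can change depending on whether it passed or failed, so a real implementation would need to decide which run's text to show.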

@nicoabie we shouldn't change anything in lighthouse-core. It's the lighthouse-viewer responsibility to handle multiple lighthouse runs.

I suggest looking into report-renderer.js and it's probably a good idea to copy it for the viewer so we only affect the viewer for now. If you need help feel free to shout 😄

@wardpeet but we need to define a data structure to render it. Look at the comments above. I think you are going straight to the third point of the viewer responsibilities, but Paul told me to start with the second one, with a structure.

@nicoabie I'm aware we need to change the structure to help us iterate over the items inside the report but I don't think @paulirish is referring to editing the core data structure but I might be mistaken 😛. What if we want to diff 3 reports? We'll have to add a C variant as well which doesn't scale that well.
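One way to picture the scaling concern is an ordered list of runs in place of fixed A/B fields. The following is only a sketch of that alternative: `RunScore`, `MultiRunAudit`, the `label` field, and `scoreDeltas` are hypothetical names invented for this example and were not proposed in the thread.

```typescript
// Hypothetical alternative: an ordered list of runs instead of fixed runA/runB fields.
interface RunScore {
  label: string; // e.g. a build name or timestamp (assumed field)
  score: number | null;
}

interface MultiRunAudit {
  id: string;
  runs: RunScore[];
}

// Score change between each consecutive pair of runs; null when either side is unscored.
function scoreDeltas(audit: MultiRunAudit): Array<number | null> {
  const deltas: Array<number | null> = [];
  let prev: RunScore | undefined;
  for (const curr of audit.runs) {
    if (prev !== undefined) {
      const before = prev.score;
      const after = curr.score;
      deltas.push(before === null || after === null ? null : after - before);
    }
    prev = curr;
  }
  return deltas;
}
```

With this shape, a third or fourth report is just another entry in `runs`, and a two-report diff is the special case where the list has two entries.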

IMO diffing of Lighthouse reports has nothing to do with the lh-core (gatherers & audits) part. It is more an implementation detail of the output, which makes it the report viewer's responsibility.

@paulirish could you chime in to elaborate some more?

LHCI does report diffing. We'll eventually make it a standalone thing. closing.
