Kubernetes: Node flapping between Ready/NotReady with PLEG issues

Is this a request for help? No

What keywords did you search in Kubernetes issues before filing this one? (If you have found any duplicates, you should instead reply there.): PLEG NotReady kubelet

Is this a BUG REPORT or FEATURE REQUEST? Bug

Kubernetes version (use kubectl version): 1.6.2

Environment:

- Cloud provider or hardware configuration: CoreOS on AWS

- OS (e.g. from /etc/os-release): CoreOS 1353.7.0

- Kernel (e.g. uname -a): 4.9.24-coreos

- Install tools:

- Others:

What happened:

I have a 3-worker cluster. Two and sometimes all three nodes keep dropping into NotReady with the following messages in journalctl -u kubelet:

May 05 13:59:56 ip-10-50-20-208.ec2.internal kubelet[2858]: I0505 13:59:56.872880 2858 kubelet_node_status.go:379] Recording NodeNotReady event message for node ip-10-50-20-208.ec2.internal

May 05 13:59:56 ip-10-50-20-208.ec2.internal kubelet[2858]: I0505 13:59:56.872908 2858 kubelet_node_status.go:682] Node became not ready: {Type:Ready Status:False LastHeartbeatTime:2017-05-05 13:59:56.872865742 +0000 UTC LastTransitionTime:2017-05-05 13:59:56.872865742 +0000 UTC Reason:KubeletNotReady Message:PLEG is not healthy: pleg was last seen active 3m7.629592089s ago; threshold is 3m0s}

May 05 14:07:57 ip-10-50-20-208.ec2.internal kubelet[2858]: I0505 14:07:57.598132 2858 kubelet_node_status.go:379] Recording NodeNotReady event message for node ip-10-50-20-208.ec2.internal

May 05 14:07:57 ip-10-50-20-208.ec2.internal kubelet[2858]: I0505 14:07:57.598162 2858 kubelet_node_status.go:682] Node became not ready: {Type:Ready Status:False LastHeartbeatTime:2017-05-05 14:07:57.598117026 +0000 UTC LastTransitionTime:2017-05-05 14:07:57.598117026 +0000 UTC Reason:KubeletNotReady Message:PLEG is not healthy: pleg was last seen active 3m7.346983738s ago; threshold is 3m0s}

May 05 14:17:58 ip-10-50-20-208.ec2.internal kubelet[2858]: I0505 14:17:58.536101 2858 kubelet_node_status.go:379] Recording NodeNotReady event message for node ip-10-50-20-208.ec2.internal

May 05 14:17:58 ip-10-50-20-208.ec2.internal kubelet[2858]: I0505 14:17:58.536134 2858 kubelet_node_status.go:682] Node became not ready: {Type:Ready Status:False LastHeartbeatTime:2017-05-05 14:17:58.536086605 +0000 UTC LastTransitionTime:2017-05-05 14:17:58.536086605 +0000 UTC Reason:KubeletNotReady Message:PLEG is not healthy: pleg was last seen active 3m7.275467289s ago; threshold is 3m0s}

May 05 14:29:59 ip-10-50-20-208.ec2.internal kubelet[2858]: I0505 14:29:59.648922 2858 kubelet_node_status.go:379] Recording NodeNotReady event message for node ip-10-50-20-208.ec2.internal

May 05 14:29:59 ip-10-50-20-208.ec2.internal kubelet[2858]: I0505 14:29:59.648952 2858 kubelet_node_status.go:682] Node became not ready: {Type:Ready Status:False LastHeartbeatTime:2017-05-05 14:29:59.648910669 +0000 UTC LastTransitionTime:2017-05-05 14:29:59.648910669 +0000 UTC Reason:KubeletNotReady Message:PLEG is not healthy: pleg was last seen active 3m7.377520804s ago; threshold is 3m0s}

May 05 14:44:00 ip-10-50-20-208.ec2.internal kubelet[2858]: I0505 14:44:00.938266 2858 kubelet_node_status.go:379] Recording NodeNotReady event message for node ip-10-50-20-208.ec2.internal

May 05 14:44:00 ip-10-50-20-208.ec2.internal kubelet[2858]: I0505 14:44:00.938297 2858 kubelet_node_status.go:682] Node became not ready: {Type:Ready Status:False LastHeartbeatTime:2017-05-05 14:44:00.938251338 +0000 UTC LastTransitionTime:2017-05-05 14:44:00.938251338 +0000 UTC Reason:KubeletNotReady Message:PLEG is not healthy: pleg was last seen active 3m7.654775919s ago; threshold is 3m0s}
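To see how far past the threshold each flap is, the "last seen active" duration can be pulled out of these journal lines. The following is a minimal sketch, assuming the message format shown in the log lines above; the helper names `parse_go_duration` and `pleg_overruns` are hypothetical and not part of any Kubernetes tooling.

```python
import re

# Matches the tail of the kubelet message, for example:
# "pleg was last seen active 3m7.629592089s ago; threshold is 3m0s"
PLEG_RE = re.compile(
    r"pleg was last seen active (\S+) ago; threshold is ([0-9a-zµ.]+)"
)

# Go-style duration tokens such as "3m", "7.629592089s", "2562047h".
TOKEN_RE = re.compile(r"(\d+(?:\.\d+)?)(ns|us|µs|ms|h|m|s)")
UNIT_SECONDS = {
    "h": 3600.0, "m": 60.0, "s": 1.0,
    "ms": 1e-3, "us": 1e-6, "µs": 1e-6, "ns": 1e-9,
}


def parse_go_duration(text):
    """Convert a Go-style duration string (e.g. '3m7.6s') to seconds."""
    tokens = TOKEN_RE.findall(text)
    if not tokens or "".join(num + unit for num, unit in tokens) != text:
        raise ValueError(f"not a Go-style duration: {text!r}")
    return sum(float(num) * UNIT_SECONDS[unit] for num, unit in tokens)


def pleg_overruns(lines):
    """Return (last_seen_s, threshold_s, overrun_s) for each PLEG message found."""
    results = []
    for line in lines:
        match = PLEG_RE.search(line)
        if match is None:
            continue
        last_seen = parse_go_duration(match.group(1))
        threshold = parse_go_duration(match.group(2))
        results.append((last_seen, threshold, last_seen - threshold))
    return results
```

Feeding it the saved output of journalctl -u kubelet (any iterable of lines) gives one tuple per PLEG message. For the lines above the overrun is consistently about 7 seconds.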

docker daemon is fine (local docker ps, docker images, etc. all work and respond immediately).

using weave networking installed via kubectl apply -f https://git.io/weave-kube-1.6

What you expected to happen:

Nodes to be ready.

How to reproduce it (as minimally and precisely as possible):

Wish I knew how!

Anything else we need to know:

All of the nodes (workers and masters) on same private subnet with NAT gateway to Internet. Workers in security group that allows unlimited access (all ports) from masters security group; masters allow all ports from same subnet. proxy is running on workers; apiserver, controller-manager, scheduler on masters.

kubectl logs and kubectl exec always hang, even when run from the master itself (or from outside).

All 225 comments

@deitch, how many containers were running on the node? What's the overall cpu utilization of your nodes?

Basically none. kube-dns, weave-net, weave-npc, and 3 template sample services. Actually only one, because two had no image and were going to be cleaned up. AWS m4.2xlarge. Not a resource issue.

I ended up having to destroy the nodes and recreate. No PLEG messages since destroy/recreate, and they seem 50% ok. They stay Ready, although they still refuse to allow kubectl exec or kubectl logs.

I really struggled to find any documentation on what PLEG really is, but more importantly how to check its own logs and state and debug it.

Hmm... to add to the mystery, no container can resolve any hostnames, and kubedns gives:

E0505 17:30:49.412272 1 reflector.go:199] pkg/dns/config/sync.go:114: Failed to list *api.ConfigMap: Get https://10.200.0.1:443/api/v1/namespaces/kube-system/configmaps?fieldSelector=metadata.name%3Dkube-dns&resourceVersion=0: dial tcp 10.200.0.1:443: getsockopt: no route to host

E0505 17:30:49.412285 1 reflector.go:199] pkg/dns/dns.go:148: Failed to list *api.Service: Get https://10.200.0.1:443/api/v1/services?resourceVersion=0: dial tcp 10.200.0.1:443: getsockopt: no route to host

E0505 17:30:49.412272 1 reflector.go:199] pkg/dns/dns.go:145: Failed to list *api.Endpoints: Get https://10.200.0.1:443/api/v1/endpoints?resourceVersion=0: dial tcp 10.200.0.1:443: getsockopt: no route to host

I0505 17:30:51.855370 1 logs.go:41] skydns: failure to forward request "read udp 10.100.0.3:60364->10.50.0.2:53: i/o timeout"

FWIW, 10.200.0.1 is the kube api service internally, 10.200.0.5 is DNS, 10.50.20.0/24 and 10.50.21.0/24 are the subnets (2 separate AZs) on which masters and workers run.

Something just really fubar in the networking?

@bboreham could this be related to weave and not kube (or at least misconfigured weave)? Standard weave with the IPALLOC_RANGE=10.100.0.0/16 added as discussed at https://github.com/weaveworks/weave/issues/2736

@deitch PLEG is how the kubelet periodically lists the pods on the node to check their health and update its cache. If you see PLEG timeout logs, the cause may not be DNS; it is more likely that the kubelet's calls to docker are timing out.

Thanks @qiujian16 . The issue appears to have gone away, but I have no idea how to check it. Docker itself appeared healthy. I was wondering if it could be networking plugin, but that should not affect the kubelet itself.

Can you give me some pointers here on checking pleg healthiness and status? Then we can close this out until I see the issue recur.

@deitch PLEG is short for "pod lifecycle event generator". It is an internal component of the kubelet, and I do not think you can check its status directly; see https://github.com/kubernetes/community/blob/master/contributors/design-proposals/pod-lifecycle-event-generator.md

Is it an internal module in the kubelet binary? Is it another standalone container (docker, runc, containerd)? Is it just a standalone binary?

Basically, if kubelet reports PLEG errors, it is very helpful to find out what those errors are, and then check its status, try and replicate.

it is an internal module

@deitch most likely docker was not as responsive at times, causing PLEG to miss its threshold.

I am having a similar issue on all nodes but one of a cluster I just created.

logs:

kube-worker03.foo.bar.com kubelet[3213]: E0511 19:00:59.139374 3213 remote_runtime.go:109] StopPodSandbox "12c6a5c6833a190f531797ee26abe06297678820385b402371e196c69b67a136" from runtime service failed: rpc error: code = 4 desc = context deadline exceeded

May 11 19:00:59 kube-worker03.foo.bar.com kubelet[3213]: E0511 19:00:59.139401 3213 kuberuntime_gc.go:138] Failed to stop sandbox "12c6a5c6833a190f531797ee26abe06297678820385b402371e196c69b67a136" before removing: rpc error: code = 4 desc = context deadline exceeded

May 11 19:01:04 kube-worker03.foo.bar.com kubelet[3213]: E0511 19:01:04.627954 3213 pod_workers.go:182] Error syncing pod 1c43d9b6-3672-11e7-a6da-00163e041106 ("kube-dns-4240821577-1wswn_kube-system(1c43d9b6-3672-11e7-a6da-00163e041106)"), skipping: rpc error: code = 4 desc = context deadline exceeded

May 11 19:01:18 kube-worker03.foo.bar.com kubelet[3213]: E0511 19:01:18.627819 3213 pod_workers.go:182] Error syncing pod 1c43d9b6-3672-11e7-a6da-00163e041106 ("kube-dns-4240821577-1wswn_kube-system(1c43d9b6-3672-11e7-a6da-00163e041106)"), skipping: rpc error: code = 4 desc = context deadline exceeded

May 11 19:01:21 kube-worker03.foo.bar.com kubelet[3213]: I0511 19:01:21.627670 3213 kubelet.go:1752] skipping pod synchronization - [PLEG is not healthy: pleg was last seen active 3m0.339074625s ago; threshold is 3m0s]

I have downgraded docker and also restarted virtually everything, to no avail. The nodes are all managed via puppet, so I expect them to be completely identical. I have no clue what is wrong. Docker logs in debug mode show that it is getting these requests.

@bjhaid what are you using for networking? I was seeing some interesting networking issues at the time.

@deitch weave, but I don't think this is a networking related problem, since it seems to be a communication problem between kubelet and docker. I can confirm docker is getting these requests from kubelet via debug logging of docker

My Pleg issues appear to be gone, although I won't feel confident until next time I set up these clusters afresh (all via terraform modules I built).

Weave issues appear to exist, or possibly k8s/docker.

@deitch did you do anything to make the PLEG issues go away, or did they disappear by magic?

Actually it's hostname resolution, the controllers could not resolve the hostname for the newly created nodes, sorry for the noise

I was too quick to report things being fine; the problem still exists. I'll keep looking and report back if I find anything.

I guess this issue is related to weave-kube. I had the same issue, and this time, in order to solve it without recreating the cluster, I had to remove weave and re-apply it (with a reboot of the node in order to propagate the removal)... And it's back.

So I have no clue why or how, but I'm pretty sure it's due to weave-kube-1.6.

Forgot to return here. My problem was due to the weave interface not coming up, so the containers didn't have networking. However, this was due to our firewall blocking the weave data and vxlan ports; once I opened these ports, things were fine.

There were two sets of issues I had, possibly related.

- PLEG. I believe they have gone away, but I have not recreated enough clusters to be completely confident. I do not believe I changed very much (i.e. anything) _directly_ to make that happen.

- Weave issues wherein containers were unable to connect to anything.

Suspiciously, all of the issues with pleg happened at exactly the same time as the weave network issues.

Bryan @ weaveworks pointed me to the coreos issues. CoreOS has a rather aggressive tendency to try and manage bridges, veths, basically everything. Once I stopped CoreOS from doing that on everything except lo and the actual physical interfaces on the host, all of my problems went away.

Are the people still with problems running coreos?

We've been plagued by these issues for the last month or so (I want to say after upgrading the clusters to 1.6.x from 1.5.x), and it's just as mysterious.

we're running weave, debian jessie AMIs in aws, and every once in a while a cluster will decide that PLEG is not healthy.

Weave seems okay in this case, because pods are coming up fine up until a point.

One thing we noted is that if we scale ALL our replicas down, the issue seems to go away, but as we start scaling deployments and statefulsets back up, around a certain number of containers, this happens (at least this time).

docker ps; docker info seem fine on the node.

resource utilization is nominal: 5% cpu util, 1.5/8gb of RAM used (according to root htop), total node resource provisioning sits around 30% with everything that's supposed to be scheduled on it, scheduled.

We cannot get our head around this at all.

I really wish the PLEG check was a little more verbose, and that we had some actual detailed documentation about what the beep it's doing, because there seem to be a HUGE number of issues open about it, no one really knows what it is, and for such a critical module, I would love to be able to reproduce the checks that it sees as failing.

I second the thoughts on pleg mysteriousness. On my end though, after much work for my client, stabilizing coreos and its misbehaviour with networks helped a lot.

The PLEG health check does very little. In every iteration, PLEG calls docker ps to detect container state changes, then calls docker ps and inspect to get the details of those containers.

After finishing each iteration, it updates a timestamp. If the timestamp hasn't been updated for a while (i.e., 3 minutes), the health check fails.
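The timestamp logic described above can be sketched as follows. This is an illustration of the idea only, not the kubelet's actual code; the class name `RelistHealth` and its methods are hypothetical, and the 180-second value is taken from the "threshold is 3m0s" text in the log messages.

```python
import time

# Threshold taken from the "threshold is 3m0s" text in the kubelet logs.
RELIST_THRESHOLD_S = 180.0


class RelistHealth:
    """Tracks when the last relist iteration finished (hypothetical sketch)."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._last_relist = clock()

    def relist_finished(self):
        # Called at the end of every iteration, after the container
        # listing and inspection calls have returned.
        self._last_relist = self._clock()

    def healthy(self):
        # The check fails once the timestamp is older than the threshold.
        elapsed = self._clock() - self._last_relist
        return elapsed <= RELIST_THRESHOLD_S, elapsed
```

The consequence is that a single runtime call that blocks for longer than the threshold is enough to fail the check, even if every earlier and later call returns instantly.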

Unless your node is loaded with such a huge number of pods that PLEG can't finish all of this in 3 minutes (which should not happen), the most probable cause is that docker is slow. You may not observe that in your occasional docker ps check, but that doesn't mean it's not there.
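One way to catch slowness that an occasional manual check misses is to time the same command repeatedly and record only the slow or failed runs. The sketch below is an assumption about how one might do that; the helper names `time_command` and `probe` and all parameter values are hypothetical.

```python
import subprocess
import time


def time_command(cmd, timeout_s=30.0):
    """Run cmd once and return (elapsed_seconds, ok).

    ok is False if the command timed out or exited non-zero.
    """
    start = time.monotonic()
    try:
        proc = subprocess.run(
            cmd,
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
            timeout=timeout_s,
        )
        ok = proc.returncode == 0
    except subprocess.TimeoutExpired:
        ok = False
    return time.monotonic() - start, ok


def probe(cmd, samples, interval_s, slow_after_s, timeout_s=30.0):
    """Run cmd repeatedly and return (index, elapsed, ok) for slow or failed runs."""
    slow = []
    for i in range(samples):
        elapsed, ok = time_command(cmd, timeout_s)
        if not ok or elapsed > slow_after_s:
            slow.append((i, elapsed, ok))
        if i + 1 < samples:
            time.sleep(interval_s)
    return slow


# Example (values are arbitrary): sample "docker ps -a" every 5 seconds
# for an hour and keep the runs that took longer than 2 seconds.
# slow_runs = probe(["docker", "ps", "-a"], samples=720,
#                   interval_s=5.0, slow_after_s=2.0)
```

An empty result over a window in which the node flapped would suggest looking elsewhere; a handful of multi-second or timed-out runs would support the slow-docker explanation.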

If we don't expose the "unhealthy" status, it would hide many problems from users and potentially cause more issues. For example, the kubelet would silently fail to react to changes in a timely manner and cause even more confusion.

Suggestions on how to make this more debuggable are welcome...

Running into PLEG unhealthy warnings and flapping node health status : k8s 1.6.4 with weave. Only appears on a subset of (otherwise identical) nodes.

Just a quick heads up, in our case the flapping workers and pods stuck in ContainerCreating was a problem with the security groups of our EC2 instances not allowing weave traffic between master and workers and among the workers. Therefore the node could not properly come up and got stuck in NotReady.

Kubernetes 1.6.4

with proper security group it works now.

I am experiencing something like this issue with this config...

Kubernetes version (use kubectl version): 1.6.4

Environment:

Cloud provider or hardware configuration: single System76 server

OS (e.g. from /etc/os-release): Ubuntu 16.04.2 LTS

Kernel (e.g. uname -a): Linux system76-server 4.4.0-78-generic #99-Ubuntu SMP Thu Apr 27 15:29:09 UTC 2017 x86_64 x86_64 x86_64 GNU/Linux

Install tools: kubeadm + weave.works

Since this is a single-node cluster, I don't think my version of this issue is related to security groups or firewalls.

The issue with security groups would make sense if you're just starting up the cluster. But these issues we're seeing are on clusters that have been running for months, with security groups in place.

I had something similar just happen to me running kubelet version 1.6.2 on GKE.

One of our nodes got shifted into a not ready state, the kubelet logs on that node had two complaints, one that the PLEG status check failed, and two interestingly, that the image listing operations failed.

Some examples of which image function calls failed.

image_gc_manager.go:176

kuberuntime_image.go:106

remote_image.go:61

Which I'm assuming are calls to the docker daemon.

As this was happening I saw disk IO spike a lot, especially the read operations. From ~50kb/s mark to 8mb/s mark.

It corrected itself after about 30-45 minutes, but maybe it was an image GC sweep causing the increased IO?

As has been said, PLEG monitors the pods through the docker daemon. If the daemon is doing a lot of operations at once, could the PLEG checks be queued?

I'm seeing this problem in 1.6.4 and 1.6.6 (on GKE) with flapping NotReady as the result. Since this is the latest version available on GKE I'd love to have any fixes backported to the next 1.6 release.

One interesting thing is that the time that PLEG was last seen active doesn't change and is always a _huge_ number (perhaps it's at some limit of whatever type it's stored in).

[container runtime is down PLEG is not healthy: pleg was last seen active 2562047h47m16.854775807s ago; threshold is 3m0s]

[container runtime is down PLEG is not healthy: pleg was last seen active 2562047h47m16.854775807s ago; threshold is 3m0s]
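The constant value in these messages is exactly the largest signed 64-bit count of nanoseconds, which is the maximum that Go's time.Duration (an int64 of nanoseconds) can hold. A plausible reading, offered here as an assumption rather than something confirmed in this thread, is that the "last seen" timestamp was never set, so the elapsed time saturated at the maximum. The arithmetic can be checked with a short sketch; `format_go_duration` is a hypothetical helper that always prints hours, minutes, and seconds.

```python
# Largest value of a signed 64-bit integer, interpreted as nanoseconds.
MAX_INT64_NS = 2**63 - 1


def format_go_duration(ns):
    """Format a nanosecond count as <hours>h<minutes>m<seconds>s.

    Simplified: unlike Go, it always prints all three units.
    """
    total_s, frac_ns = divmod(ns, 1_000_000_000)
    hours, rem = divmod(total_s, 3600)
    minutes, seconds = divmod(rem, 60)
    # Drop trailing zeros from the fractional part, as Go does.
    frac = f".{frac_ns:09d}".rstrip("0").rstrip(".")
    return f"{hours}h{minutes}m{seconds}{frac}s"


print(format_go_duration(MAX_INT64_NS))  # 2562047h47m16.854775807s
```

That is roughly 292 years, so the number says nothing about how long the runtime was actually unresponsive.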

@bergman I haven't seen this but if this is the case, your node would have never been ready. Feel free to report this through the GKE channel so that the GKE team can investigate more.

It corrected itself after about 30-45 minutes, but maybe it was an image GC sweep causing the increased IO?

This is certainly possible. Image GC can sometimes cause the docker daemon to respond extremely slowly. 30-45 minutes sounds quite long, though. @zoltrain, were images being removed throughout the entire duration?

To reiterate my previous statement, PLEG does very little and it only fails the health check because the docker daemon is unresponsive. We surface this information through the PLEG health check to let the control plane know that the node is not getting the container stats (and react to them) properly. Blindly removing this check may mask more serious issues.

To update: we found the issue on our end, and it was related to weave and IP-slice provisioning. We terminate nodes often in AWS, and weave originally did not account for nodes being permanently destroyed and replaced by nodes with new IPs. As a result, networking would not set up correctly, so anything that had to do with internal ranges would not come up correctly.

https://github.com/weaveworks/weave/issues/2970

for those who use weave.

[container runtime is down PLEG is not healthy: pleg was last seen active 2562047h47m16.854775807s ago; threshold is 3m0s]

@bergman I haven't seen this but if this is the case, your node would have never been ready. Feel free to report this through the GKE channel so that the GKE team can investigate more.

The node is Ready most of the time. I believe kubelet is either restarted due to this check or some other check is signalling the Ready event. We see about 10 seconds of NotReady for every 60 seconds. The rest of the time the node is Ready.

@yujuhong I think the PLEG logging can be improved, saying PLEG is not healthy is super confusing to the end user and it doesn't help diagnosing problems, maybe including why the container runtime failed, or some details about the container runtime not responding will be more useful

I do not see flapping but consistently not ready state for the node with 1.6.4 and calico, not weave.

@yujuhong I think the PLEG logging can be improved, saying PLEG is not healthy is super confusing to the end user and it doesn't help diagnosing problems, maybe including why the container runtime failed, or some details about the container runtime not responding will be more useful

Sure. Feel free to send a PR.

I was having this issue while doing docker image clean up. I guess docker was too busy. After images are removed, it's back to normal.

I hit the same issue. I suspect the cause is ntpd correcting the system time.

I saw ntpd correct the time on v1.6.9:

Sep 12 19:05:08 node-6 systemd: Started logagt.

Sep 12 19:05:08 node-6 systemd: Starting logagt...

Sep 12 19:05:09 node-6 cnrm: "Log":"2017-09-12 19:05:09.197083#011ERROR#011node-6#011knitter.cnrm.mod-init#011TransactionID=1#011InstanceID=1174#011[ObjectType=null,ObjectID=null]#011registerOir: k8s.GetK8sClientSingleton().RegisterOir(oirName: hugepage, qty: 2048) FAIL, error: dial tcp 120.0.0.250:8080: getsockopt: no route to host, retry#011[init.go]#011[68]"

Sep 12 11:04:53 node-6 ntpd[902]: 0.0.0.0 c61c 0c clock_step -28818.771869 s

Sep 12 11:04:53 node-6 ntpd[902]: 0.0.0.0 c614 04 freq_mode

Sep 12 11:04:53 node-6 systemd: Time has been changed

Sep 12 11:04:54 node-6 ntpd[902]: 0.0.0.0 c618 08 no_sys_peer

Sep 12 11:05:04 node-6 systemd: Reloading.

Sep 12 11:05:04 node-6 systemd: Configuration file /usr/lib/systemd/system/auditd.service is marked world-inaccessible. This has no effect as configuration data is accessible via APIs without restrictions. Proceeding anyway.

Sep 12 11:05:04 node-6 systemd: Started opslet.

Sep 12 11:05:04 node-6 systemd: Starting opslet...

Sep 12 11:05:13 node-6 systemd: Reloading.

Sep 12 11:05:22 node-6 kubelet: E0912 11:05:22.425676 2429 event.go:259] Could not construct reference to: '&v1.Node{TypeMeta:v1.TypeMeta{Kind:"", APIVersion:""}, ObjectMeta:v1.ObjectMeta{Name:"120.0.0.251", GenerateName:"", Namespace:"", SelfLink:"", UID:"", ResourceVersion:"", Generation:0, CreationTimestamp:v1.Time{Time:time.Time{sec:0, nsec:0, loc:(*time.Location)(nil)}}, DeletionTimestamp:(*v1.Time)(nil), DeletionGracePeriodSeconds:(*int64)(nil), Labels:map[string]string{"beta.kubernetes.io/os":"linux", "beta.kubernetes.io/arch":"amd64", "kubernetes.io/hostname":"120.0.0.251"}, Annotations:map[string]string{"volumes.kubernetes.io/controller-managed-attach-detach":"true"}, OwnerReferences:[]v1.OwnerReference(nil), Finalizers:[]string(nil), ClusterName:""}, Spec:v1.NodeSpec{PodCIDR:"", ExternalID:"120.0.0.251", ProviderID:"", Unschedulable:false, Taints:[]v1.Taint(nil)}, Status:v1.NodeStatus{Capacity:v1.ResourceList{"cpu":resource.Quantity{i:resource.int64Amount{value:4000, scale:-3}, d:resource.infDecAmount{Dec:(*inf.Dec)(nil)}, l:[]int64(nil), s:"", Format:"DecimalSI"}, "memory":resource.Quantity{i:resource.int64Amount{value:3974811648, scale:0}, d:resource.infDecAmount{Dec:(*inf.Dec)(nil)}, l:[]int64(nil), s:"", Format:"BinarySI"}, "hugePages":resource.Quantity{i:resource.int64Amount{value:1024, scale:0}, d:resource.infDecAmount{Dec:(*inf.Dec)(nil)}, l:[]int64(nil), s:"", Format:"DecimalSI"}, "pods":resource.Quantity{i:resource.int64Amount{value:110, scale:0}, d:resource.infDecAmount{Dec:(*inf.Dec)(nil)}, l:[]int64(nil), s:"", Format:"DecimalSI"}}, Allocatable:v1.ResourceList{"cpu":resource.Quantity{i:resource.int64Amount{value:3500, scale:-3}, d:resource.infDecAmount{Dec:(*inf.Dec)(nil)}, l:[]int64(nil), s:"", Format:"DecimalSI"}, "memory":resource.Quantity{i:resource.int64Amount{value:1345666048, scale:0}, d:resource.infDecAmount{Dec:(*inf.Dec)(nil)}, l:[]int64(nil), s:"", Format:"BinarySI"}, "hugePages":resource.Quantity{i:resource.int64Amount{value:1024, 
scale:0}, d:resource.infDecAmount{Dec:(*inf.Dec)(nil)}, l:[]int64(nil), s:"",

Sep 12 11:05:22 node-6 kubelet: Format:"DecimalSI"}, "pods":resource.Quantity{i:resource.int64Amount{value:110, scale:0}, d:resource.infDecAmount{Dec:(*inf.Dec)(nil)}, l:[]int64(nil), s:"", Format:"DecimalSI"}}, Phase:"", Conditions:[]v1.NodeCondition{v1.NodeCondition{Type:"OutOfDisk", Status:"False", LastHeartbeatTime:v1.Time{Time:time.Time{sec:63640811081, nsec:196025689, loc:(*time.Location)(0x4e8e3a0)}}, LastTransitionTime:v1.Time{Time:time.Time{sec:63640811081, nsec:196025689, loc:(*time.Location)(0x4e8e3a0)}}, Reason:"KubeletHasSufficientDisk", Message:"kubelet has sufficient disk space available"}, v1.NodeCondition{Type:"MemoryPressure", Status:"False", LastHeartbeatTime:v1.Time{Time:time.Time{sec:63640811081, nsec:196099492, loc:(*time.Location)(0x4e8e3a0)}}, LastTransitionTime:v1.Time{Time:time.Time{sec:63640811081, nsec:196099492, loc:(*time.Location)(0x4e8e3a0)}}, Reason:"KubeletHasSufficientMemory", Message:"kubelet has sufficient memory available"}, v1.NodeCondition{Type:"DiskPressure", Status:"False", LastHeartbeatTime:v1.Time{Time:time.Time{sec:63640811081, nsec:196107935, loc:(*time.Location)(0x4e8e3a0)}}, LastTransitionTime:v1.Time{Time:time.Time{sec:63640811081, nsec:196107935, loc:(*time.Location)(0x4e8e3a0)}}, Reason:"KubeletHasNoDiskPressure", Message:"kubelet has no disk pressure"}, v1.NodeCondition{Type:"Ready", Status:"False", LastHeartbeatTime:v1.Time{Time:time.Time{sec:63640811081, nsec:196114314, loc:(*time.Location)(0x4e8e3a0)}}, LastTransitionTime:v1.Time{Time:time.Time{sec:63640811081, nsec:196114314, loc:(*time.Location)(0x4e8e3a0)}}, Reason:"KubeletNotReady", Message:"container runtime is down,PLEG is not healthy: pleg was last seen active 2562047h47m16.854775807s ago; threshold is 3m0s,network state unknown"}}, Addresses:[]v1.NodeAddress{v1.NodeAddress{Type:"LegacyHostIP", Address:"120.0.0.251"}, v1.NodeAddress{Type:"InternalIP", Address:"120.0.0.251"}, v1.NodeAddress{Type:"Hostname", Address:"120.0.0.251"}}, 
DaemonEndpoints:v1.NodeDaemonEndpoints{KubeletEndpoint:v1.DaemonEndpoint{Port:10250}}, NodeInfo:v1.NodeS

mark.

Same issue here.

It appears when a pod is being killed but is stuck in the killing state: Normal Killing Killing container with docker id 472802bf1dba: Need to kill pod.

and kubelet logs like this:

skipping pod synchronization - [PLEG is not healthy: pleg was last seen active

k8s cluster version: 1.6.4

@xcompass Are you using the --image-gc-high-threshold and --image-gc-low-threshold flags in your kubelet config? I suspect kubelet image GC is keeping the docker daemon busy.

@alirezaDavid I encountered the same issue as you: pods start and terminate very slowly, and the node becomes NotReady every now and then. Restarting kubelet or docker on the node appears to solve the problem, but this is not the right fix.

@yu-yang2 Yep, exactly. I restarted kubelet.

But before restarting kubelet I checked docker ps and systemctl -u docker, and everything seemed to be working.

We had this issue on kubernetes with weave and autoscalers. It turned out that weave had no more IP addresses to assign. This was detected by running weave status ipam, as described in this issue: https://github.com/weaveworks/weave/issues/2970

The root cause is here: https://github.com/weaveworks/weave/issues/2797

The documentation warns about autoscalers and weave: https://www.weave.works/docs/net/latest/operational-guide/tasks/

When we ran weave --local status ipam there were hundreds of unavailable nodes with large numbers of ip addresses assigned to them. This happens because the autoscaler terminates the instances without letting weave know. This left only a handful for the actually connected nodes. I used weave rmpeer to clear out some of the unavailable peers. This then gave the node i was running on a group of ip addresses. I then went to other running weave nodes and ran a few rmpeer commands from them as well (i'm not sure if that's necessary).

I terminated some of the ec2 instances, and new ones were brought up by the autoscaler and were immediately assigned ip addresses.

Hi folks. In my case I had the PLEG problem with the deletion of sandboxes, because they didn't have a network namespace. That situation is described in https://github.com/kubernetes/kubernetes/issues/44307

My problem was:

- Pod deployed.

- Pod deleted. Container of application deleted without problems. Sandbox of application wasn't deleted.

- PLEG tries to commit/delete/finish the sandbox, PLEG cannot do this and marks node as unhealthy.

As I can see, all people in this bug use 1.6.* of Kubernetes, it should be fixed in 1.7.

PS. Saw this situation with origin 3.6 (kubernetes 1.6).

Hi,

I've got a PLEG issue myself (Azure, k8s 1.7.7) :

Oct 5 08:13:27 k8s-agent-27569017-1 docker[1978]: E1005 08:13:27.386295 2209 remote_runtime.go:168] ListPodSandbox with filter "nil" from runtime service failed: rpc error: code = 4 desc = context deadline exceeded

Oct 5 08:13:27 k8s-agent-27569017-1 docker[1978]: E1005 08:13:27.386351 2209 kuberuntime_sandbox.go:197] ListPodSandbox failed: rpc error: code = 4 desc = context deadline exceeded

Oct 5 08:13:27 k8s-agent-27569017-1 docker[1978]: E1005 08:13:27.386360 2209 generic.go:196] GenericPLEG: Unable to retrieve pods: rpc error: code = 4 desc = context deadline exceeded

Oct 5 08:13:30 k8s-agent-27569017-1 docker[1978]: I1005 08:13:30.953599 2209 helpers.go:102] Unable to get network stats from pid 60677: couldn't read network stats: failure opening /proc/60677/net/dev: open /proc/60677/net/dev: no such file or directory

Oct 5 08:13:30 k8s-agent-27569017-1 docker[1978]: I1005 08:13:30.953634 2209 helpers.go:125] Unable to get udp stats from pid 60677: failure opening /proc/60677/net/udp: open /proc/60677/net/udp: no such file or directory

Oct 5 08:13:30 k8s-agent-27569017-1 docker[1978]: I1005 08:13:30.953642 2209 helpers.go:132] Unable to get udp6 stats from pid 60677: failure opening /proc/60677/net/udp6: open /proc/60677/net/udp6: no such file or directory

Oct 5 08:13:31 k8s-agent-27569017-1 docker[1978]: I1005 08:13:31.763914 2209 kubelet.go:1820] skipping pod synchronization - [PLEG is not healthy: pleg was last seen active 13h42m52.628402637s ago; threshold is 3m0s]

Oct 5 08:13:35 k8s-agent-27569017-1 docker[1978]: I1005 08:13:35.977487 2209 kubelet_node_status.go:467] Using Node Hostname from cloudprovider: "k8s-agent-27569017-1"

Oct 5 08:13:36 k8s-agent-27569017-1 docker[1978]: I1005 08:13:36.764105 2209 kubelet.go:1820] skipping pod synchronization - [PLEG is not healthy: pleg was last seen active 13h42m57.628610126s ago; threshold is 3m0s]

Oct 5 08:13:39 k8s-agent-27569017-1 docker[1275]: time="2017-10-05T08:13:39.185111999Z" level=warning msg="Health check error: rpc error: code = 4 desc = context deadline exceeded"

Oct 5 08:13:41 k8s-agent-27569017-1 docker[1978]: I1005 08:13:41.764235 2209 kubelet.go:1820] skipping pod synchronization - [PLEG is not healthy: pleg was last seen active 13h43m2.628732806s ago; threshold is 3m0s]

Oct 5 08:13:41 k8s-agent-27569017-1 docker[1978]: I1005 08:13:41.875074 2209 helpers.go:102] Unable to get network stats from pid 60677: couldn't read network stats: failure opening /proc/60677/net/dev: open /proc/60677/net/dev: no such file or directory

Oct 5 08:13:41 k8s-agent-27569017-1 docker[1978]: I1005 08:13:41.875102 2209 helpers.go:125] Unable to get udp stats from pid 60677: failure opening /proc/60677/net/udp: open /proc/60677/net/udp: no such file or directory

Oct 5 08:13:41 k8s-agent-27569017-1 docker[1978]: I1005 08:13:41.875113 2209 helpers.go:132] Unable to get udp6 stats from pid 60677: failure opening /proc/60677/net/udp6: open /proc/60677/net/udp6: no such file or directory

We're running v1.7.4+coreos.0 on stable CoreOS. Our k8s nodes go down (and don't come back up until we restart the docker and/or kubelet service) as frequently as every 8 hours because of PLEG. Containers keep running but are reported as Unknown in k8s. I should mention we deploy using Kubespray.

We've tracked down the problem to what we believe is the backoff algorithm in GRPC when communicating with docker in order to list containers. This PR https://github.com/moby/moby/pull/33483 changes the backoff to a maximum of 2 seconds and is available in 17.06; however, kubernetes doesn't support 17.06 until 1.8, right?
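To illustrate why a cap on the backoff matters for this theory, here is a generic sketch of exponential backoff with and without a maximum. The base delay and growth factor are hypothetical values chosen for readability, not grpc's actual defaults, and the function `backoff_delays` is not part of any library. Whether backoff is the real cause is not established at this point in the thread.

```python
def backoff_delays(base_s, factor, attempts, cap_s=None):
    """Return the wait before each reconnect attempt.

    Each wait grows by `factor`; if cap_s is given, no wait exceeds it.
    """
    delays = []
    delay = base_s
    for _ in range(attempts):
        delays.append(delay if cap_s is None else min(delay, cap_s))
        delay *= factor
    return delays


# Hypothetical parameters: 1 second base, doubling each attempt.
uncapped = backoff_delays(1.0, 2.0, 8)
capped = backoff_delays(1.0, 2.0, 8, cap_s=2.0)

print(uncapped)  # [1.0, 2.0, 4.0, 8.0, 16.0, 32.0, 64.0, 128.0]
print(capped)    # [1.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0]
```

With these made-up numbers, the eighth uncapped wait alone is 128 seconds, already a large share of the 3-minute PLEG threshold, while the capped schedule never waits more than 2 seconds between attempts.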

The line in PLEG causing problems is this.

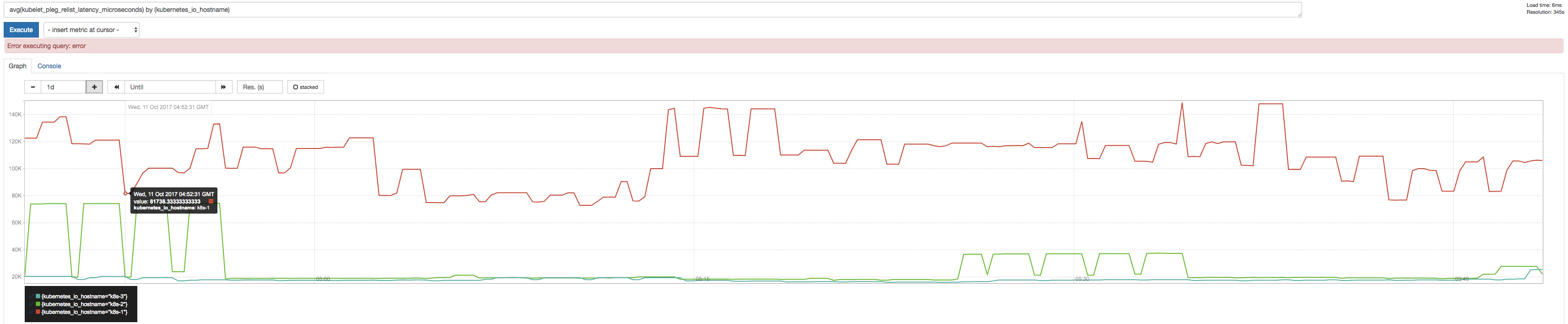

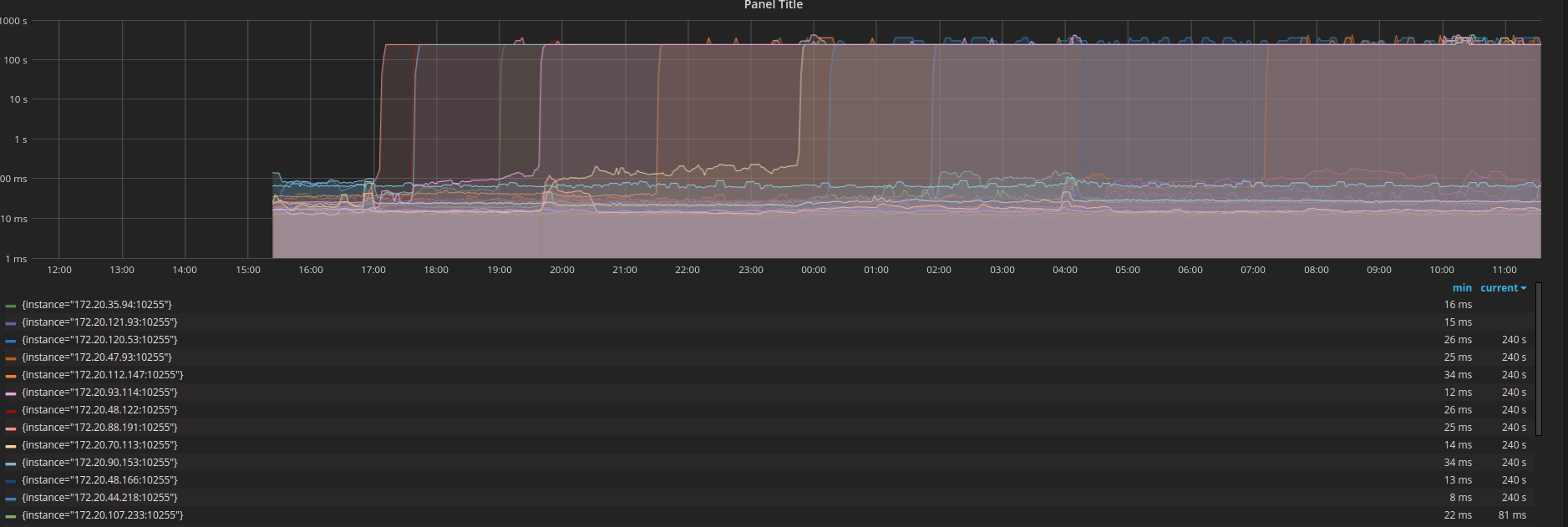

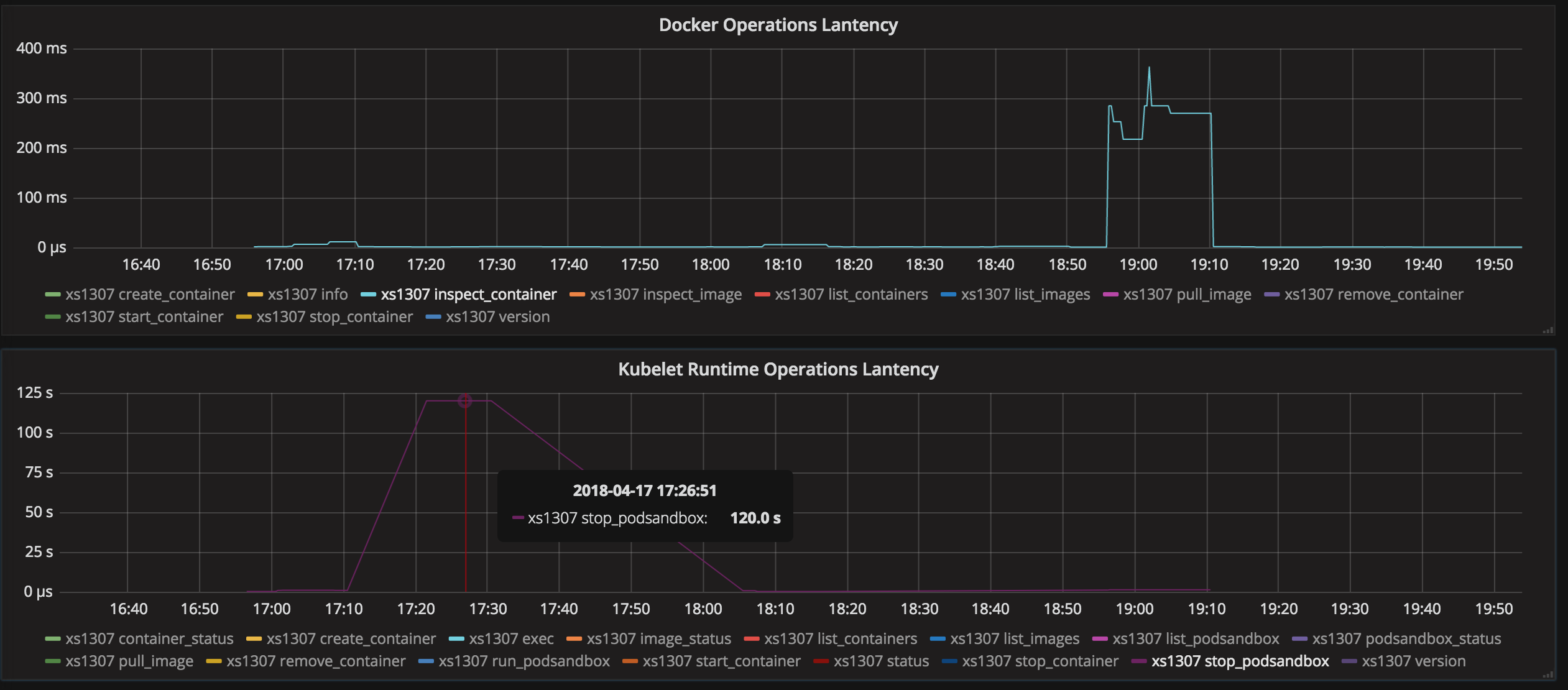

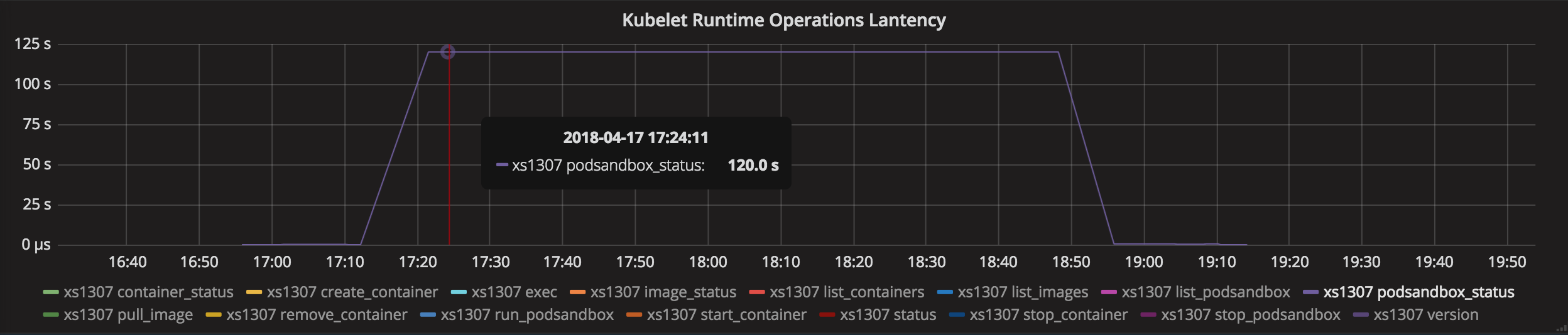

We used prometheus to inspect the PLEGRelistInterval and PLEGRelistLatency metrics and got the following result which is pretty consistent with the backoff algorithm theory.

@ssboisen thanks for reporting with the graphs (they do look interesting)!

Our k8s nodes go down (and don't come back up until we restart the docker and/or kubelet service) as frequently as every 8 hours because of PLEG. Containers keep running but are reported as Unknown in k8s. I should mention we deploy using Kubespray.

Some questions I have:

- Does restarting any one of docker and kubelet solve the issue?

- When the issue happens, does docker ps respond normally?

We've tracked down the problem to what we believe is the backoff algorithm in GRPC when communicating with docker in order to list containers. This PR moby/moby#33483 changes the backoff to a maximum of 2 seconds and is available in 17.06; however, kubernetes doesn't support 17.06 until 1.8, right?

I looked at the moby issues you mentioned, but in that discussion, all docker ps calls were still functioning properly (even though the dockerd <-> containerd connection was broken). This seems different from the PLEG issue you mentioned. Also, kubelet doesn't talk to dockerd using gRPC. It does use gRPC to communicate with the dockershim, but they are essentially the same process and should not run into the problem of one being killed while the other is still alive (leading to a broken connection).

kubelet <--grpc--> dockershim <--http--> dockerd <--grpc--> containerd

What's the error message you saw in the kubelet log? Most of the comments above had "context deadline exceeded" error messages.

- Does restarting any one of docker and kubelet solve the issue?

It varies. Most often it's enough to restart kubelet, but we have had situations where a docker restart was necessary.

- When the issue happens, does docker ps respond normally?

We have no problem running docker ps on the node when the PLEG is acting up. I didn't know about the dockershim. I wonder if the connection between kubelet and dockershim is the problem: could the shim be failing to answer in time, leading to climbing backoffs?

The error message in the log is a combination of the following two lines:

generic.go:196] GenericPLEG: Unable to retrieve pods: rpc error: code = 14 desc = grpc: the connection is unavailable

kubelet.go:1820] skipping pod synchronization - [container runtime is down PLEG is not healthy: pleg was last seen active 11h5m56.959313178s ago; threshold is 3m0s]
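For anyone who wants to track how far past the threshold the PLEG is over time, here is a small sketch that pulls the two Go-style durations out of a line like the one above and converts them to seconds. The regular expressions are written against the message format shown in this thread only; the helper names are hypothetical and other kubelet versions may word the message differently.

```python
import re

# Seconds per Go duration unit.
_UNITS = {"h": 3600.0, "m": 60.0, "s": 1.0,
          "ms": 1e-3, "us": 1e-6, "µs": 1e-6, "ns": 1e-9}
# Two-letter units are listed first so "ms" is not read as "m" followed by "s".
_PART = re.compile(r"(\d+(?:\.\d+)?)(ns|us|µs|ms|h|m|s)")
_PLEG = re.compile(r"pleg was last seen active (\S+) ago; threshold is ([^\s\]}]+)")


def go_duration_seconds(text):
    """Convert a Go duration string such as '3m7.6s' to seconds."""
    parts = _PART.findall(text)
    if not parts or "".join(num + unit for num, unit in parts) != text:
        raise ValueError(f"not a Go duration: {text!r}")
    return sum(float(num) * _UNITS[unit] for num, unit in parts)


def pleg_overdue_seconds(line):
    """Return seconds past the threshold, or None if the line has no PLEG message."""
    match = _PLEG.search(line)
    if match is None:
        return None
    return go_duration_seconds(match.group(1)) - go_duration_seconds(match.group(2))
```

Feeding each line of journalctl -u kubelet through pleg_overdue_seconds and keeping the non-None values gives a simple time series of how stale the relist is.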

Do you have any suggestions as to how we might get more info so we can better debug this problem?

- Does restarting any one of docker and kubelet solve the issue?

Yes, just restarting docker fixes it, so it is not a k8s issue.

- When the issue happens, does docker ps respond normally?

Nope, it hangs. Running any container with docker run on that node hangs as well.

For me it is most likely a docker issue, not k8s, which is doing the right thing. However, I could not find out why docker is misbehaving here. CPU, memory, and disk resources all look fine.

Restarting the docker service restores a good state.
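Since the two reports in this thread differ on whether docker ps hangs, a quick probe with a timeout can help tell the cases apart on a node. This is only a sketch: the function name and the 10-second timeout are arbitrary choices, not values taken from kubelet.

```python
import subprocess


def responds_within(cmd, timeout_s=10.0):
    """Run cmd and report whether it exited successfully before the timeout."""
    try:
        result = subprocess.run(
            cmd,
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
            timeout=timeout_s,
        )
    except subprocess.TimeoutExpired:
        return False  # the command hung past the timeout
    except FileNotFoundError:
        return False  # the binary is not installed on this host
    return result.returncode == 0


if __name__ == "__main__":
    # "docker ps -q" only lists container IDs, which keeps the probe cheap.
    ok = responds_within(["docker", "ps", "-q"])
    print("docker ps responded" if ok else "docker ps hung or failed")
```

If the probe keeps passing while the kubelet still reports the PLEG as unhealthy, the daemon itself is probably not the part that is stuck.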

Do you have any suggestions as to how we might get more info so we can better debug this problem?

I think the first step is to confirm what component (dockershim or docker/containerd) returned the error message.

You could probably figure this out by cross referencing kubelet and docker logs.
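One rough way to do that cross-referencing is to merge the two logs by timestamp and keep only what happens within a few seconds of the kubelet error. The sketch below assumes both logs are in the journalctl short format seen in this thread ("Oct 24 21:16:39 host unit[pid]: ..."), that month names are in English, and that all lines fall in the same year; the function names and the 5-second window are made up for illustration.

```python
from datetime import datetime, timedelta


def parse_ts(line):
    """Parse the leading 'Mon DD HH:MM:SS' of a log line, or return None."""
    try:
        month, day, clock = line.split(None, 3)[:3]
        return datetime.strptime(f"{month} {day} {clock}", "%b %d %H:%M:%S")
    except ValueError:
        return None  # e.g. "^C" or "-- Logs begin at ..." lines


def tag(lines, source):
    """Attach a timestamp and a source label to every parseable line."""
    tagged = []
    for line in lines:
        ts = parse_ts(line)
        if ts is not None:
            tagged.append((ts, source, line))
    return tagged


def lines_near(kubelet_lines, docker_lines, needle, window_s=5):
    """Return entries from both logs within window_s seconds of any
    kubelet line containing needle, sorted by time."""
    window = timedelta(seconds=window_s)
    kubelet = tag(kubelet_lines, "kubelet")
    docker = tag(docker_lines, "docker")
    hits = [ts for ts, _, line in kubelet if needle in line]
    near = [entry for entry in kubelet + docker
            if any(abs(entry[0] - hit) <= window for hit in hits)]
    return sorted(near, key=lambda entry: entry[0])
```

Calling lines_near with the output of journalctl -u kubelet and journalctl -u docker and a needle such as "GenericPLEG: Unable to retrieve pods" should show what docker logged right around each failure.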

For me it is most likely a docker issue, not k8s, which is doing the right thing. However, I could not find out why docker is misbehaving here. CPU, memory, and disk resources all look fine.

Yep. In your case, it looks like the docker daemon actually hangs. You could start the docker daemon in debug mode and get a stack trace when it happens.

https://docs.docker.com/engine/admin/#force-a-stack-trace-to-be-logged

@yujuhong I encountered this problem again after a load test of k8s. Almost all nodes became NotReady and did not recover for several days, even after I cleaned up the pods. I enabled verbose logging in every kubelet and got the logs below; I hope they help solve the problem:

Oct 24 21:16:39 docker34-91 kubelet[24165]: I1024 21:16:39.539054 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 24 21:16:39 docker34-91 kubelet[24165]: I1024 21:16:39.639305 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 24 21:16:39 docker34-91 kubelet[24165]: I1024 21:16:39.739585 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 24 21:16:39 docker34-91 kubelet[24165]: I1024 21:16:39.839829 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 24 21:16:39 docker34-91 kubelet[24165]: I1024 21:16:39.940111 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 24 21:16:40 docker34-91 kubelet[24165]: I1024 21:16:40.040374 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 24 21:16:40 docker34-91 kubelet[24165]: I1024 21:16:40.128789 24165 kubelet.go:2064] Container runtime status: Runtime Conditions: RuntimeReady=true reason: message:, NetworkReady=true reason: message:

Oct 24 21:16:40 docker34-91 kubelet[24165]: I1024 21:16:40.140634 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 24 21:16:40 docker34-91 kubelet[24165]: I1024 21:16:40.240851 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 24 21:16:40 docker34-91 kubelet[24165]: I1024 21:16:40.341125 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 24 21:16:40 docker34-91 kubelet[24165]: I1024 21:16:40.441471 24165 config.go:101] Looking for [api file], have seen map[api:{} file:{}]

Oct 24 21:16:40 docker34-91 kubelet[24165]: I1024 21:16:40.541781 24165 config.go:101] Looking for [api file], have seen map[api:{} file:{}]

Oct 24 21:16:40 docker34-91 kubelet[24165]: I1024 21:16:40.642070 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 24 21:16:40 docker34-91 kubelet[24165]: I1024 21:16:40.742347 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 24 21:16:40 docker34-91 kubelet[24165]: I1024 21:16:40.842562 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 24 21:16:40 docker34-91 kubelet[24165]: I1024 21:16:40.942867 24165 config.go:101] Looking for [api file], have seen map[api:{} file:{}]

Oct 24 21:16:41 docker34-91 kubelet[24165]: I1024 21:16:41.006656 24165 kubelet.go:1752] skipping pod synchronization - [PLEG is not healthy: pleg was last seen active 6m20.171705404s ago; threshold is 3m0s]

Oct 24 21:16:41 docker34-91 kubelet[24165]: I1024 21:16:41.043126 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 24 21:16:41 docker34-91 kubelet[24165]: I1024 21:16:41.143372 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 24 21:16:41 docker34-91 kubelet[24165]: I1024 21:16:41.243620 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 24 21:16:41 docker34-91 kubelet[24165]: I1024 21:16:41.343911 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 24 21:16:41 docker34-91 kubelet[24165]: I1024 21:16:41.444156 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 24 21:16:41 docker34-91 kubelet[24165]: I1024 21:16:41.544420 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 24 21:16:41 docker34-91 kubelet[24165]: I1024 21:16:41.644732 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 24 21:16:41 docker34-91 kubelet[24165]: I1024 21:16:41.745002 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 24 21:16:41 docker34-91 kubelet[24165]: I1024 21:16:41.845268 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 24 21:16:41 docker34-91 kubelet[24165]: I1024 21:16:41.945524 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 24 21:16:42 docker34-91 kubelet[24165]: I1024 21:16:42.045814 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

^C

[root@docker34-91 ~]# journalctl -u kubelet -f

-- Logs begin at Wed 2017-10-25 17:19:29 CST. --

Oct 27 10:22:35 docker34-91 kubelet[24165]: 00000000 6b 38 73 00 0a 0b 0a 02 76 31 12 05 45 76 65 6e |k8s.....v1..Even|

Oct 27 10:22:35 docker34-91 kubelet[24165]: 00000010 74 12 d3 03 0a 4f 0a 33 6c 64 74 65 73 74 2d 37 |t....O.3ldtest-7|

Oct 27 10:22:35 docker34-91 kubelet[24165]: 00000020 33 34 33 39 39 64 67 35 39 2d 33 33 38 32 38 37 |34399dg59-338287|

Oct 27 10:22:35 docker34-91 kubelet[24165]: 00000030 31 36 38 35 2d 78 32 36 70 30 2e 31 34 66 31 34 |1685-x26p0.14f14|

Oct 27 10:22:35 docker34-91 kubelet[24165]: 00000040 63 30 39 65 62 64 32 64 66 66 34 12 00 1a 0a 6c |c09ebd2dff4....l|

Oct 27 10:22:35 docker34-91 kubelet[24165]: 00000050 64 74 65 73 74 2d 30 30 35 22 00 2a 00 32 00 38 |dtest-005".*.2.8|

Oct 27 10:22:35 docker34-91 kubelet[24165]: 00000060 00 42 00 7a 00 12 6b 0a 03 50 6f 64 12 0a 6c 64 |.B.z..k..Pod..ld|

Oct 27 10:22:35 docker34-91 kubelet[24165]: 00000070 74 65 73 74 2d 30 30 35 1a 22 6c 64 74 65 73 74 |test-005."ldtest|

Oct 27 10:22:35 docker34-91 kubelet[24165]: 00000080 2d 37 33 34 33 39 39 64 67 35 39 2d 33 33 38 32 |-734399dg59-3382|

Oct 27 10:22:35 docker34-91 kubelet[24165]: 00000090 38 37 31 36 38 35 2d 78 32 36 70 30 22 24 61 35 |871685-x26p0"$a5|

Oct 27 10:23:02 docker34-91 kubelet[24165]: I1027 10:23:02.098922 24165 kubelet.go:2064] Container runtime status: Runtime Conditions: RuntimeReady=true reason: message:, NetworkReady=true reason: message:

Oct 27 10:23:02 docker34-91 kubelet[24165]: I1027 10:23:02.175027 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 27 10:23:02 docker34-91 kubelet[24165]: I1027 10:23:02.275290 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 27 10:23:02 docker34-91 kubelet[24165]: I1027 10:23:02.375594 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 27 10:23:02 docker34-91 kubelet[24165]: I1027 10:23:02.475872 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 27 10:23:02 docker34-91 kubelet[24165]: I1027 10:23:02.576140 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 27 10:23:02 docker34-91 kubelet[24165]: I1027 10:23:02.676412 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 27 10:23:02 docker34-91 kubelet[24165]: I1027 10:23:02.776613 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 27 10:23:02 docker34-91 kubelet[24165]: I1027 10:23:02.876855 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 27 10:23:02 docker34-91 kubelet[24165]: I1027 10:23:02.977126 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.000354 24165 status_manager.go:410] Status Manager: syncPod in syncbatch. pod UID: "a052cabc-bab9-11e7-92f6-3497f60062c3"

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.000509 24165 round_trippers.go:398] curl -k -v -XGET -H "Accept: application/vnd.kubernetes.protobuf, */*" -H "User-Agent: kubelet/v1.6.4 (linux/amd64) kubernetes/d6f4332" http://172.23.48.211:8080/api/v1/namespaces/ldtest-005/pods/ldtest-276aa6023f-1106740979-hbtcv

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.001753 24165 round_trippers.go:417] GET http://172.23.48.211:8080/api/v1/namespaces/ldtest-005/pods/ldtest-276aa6023f-1106740979-hbtcv 404 Not Found in 1 milliseconds

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.001768 24165 round_trippers.go:423] Response Headers:

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.001773 24165 round_trippers.go:426] Content-Type: application/vnd.kubernetes.protobuf

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.001776 24165 round_trippers.go:426] Date: Fri, 27 Oct 2017 02:23:03 GMT

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.001780 24165 round_trippers.go:426] Content-Length: 154

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.001838 24165 request.go:989] Response Body:

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000000 6b 38 73 00 0a 0c 0a 02 76 31 12 06 53 74 61 74 |k8s.....v1..Stat|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000010 75 73 12 81 01 0a 04 0a 00 12 00 12 07 46 61 69 |us...........Fai|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000020 6c 75 72 65 1a 33 70 6f 64 73 20 22 6c 64 74 65 |lure.3pods "ldte|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000030 73 74 2d 32 37 36 61 61 36 30 32 33 66 2d 31 31 |st-276aa6023f-11|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000040 30 36 37 34 30 39 37 39 2d 68 62 74 63 76 22 20 |06740979-hbtcv" |

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000050 6e 6f 74 20 66 6f 75 6e 64 22 08 4e 6f 74 46 6f |not found".NotFo|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000060 75 6e 64 2a 2e 0a 22 6c 64 74 65 73 74 2d 32 37 |und*.."ldtest-27|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000070 36 61 61 36 30 32 33 66 2d 31 31 30 36 37 34 30 |6aa6023f-1106740|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000080 39 37 39 2d 68 62 74 63 76 12 00 1a 04 70 6f 64 |979-hbtcv....pod|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000090 73 28 00 30 94 03 1a 00 22 00 |s(.0....".|

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.001885 24165 status_manager.go:425] Pod "ldtest-276aa6023f-1106740979-hbtcv" (a052cabc-bab9-11e7-92f6-3497f60062c3) does not exist on the server

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.001900 24165 status_manager.go:410] Status Manager: syncPod in syncbatch. pod UID: "a584c63e-bab7-11e7-92f6-3497f60062c3"

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.001946 24165 round_trippers.go:398] curl -k -v -XGET -H "Accept: application/vnd.kubernetes.protobuf, */*" -H "User-Agent: kubelet/v1.6.4 (linux/amd64) kubernetes/d6f4332" http://172.23.48.211:8080/api/v1/namespaces/ldtest-005/pods/ldtest-734399dg59-3382871685-x26p0

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.002559 24165 round_trippers.go:417] GET http://172.23.48.211:8080/api/v1/namespaces/ldtest-005/pods/ldtest-734399dg59-3382871685-x26p0 404 Not Found in 0 milliseconds

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.002569 24165 round_trippers.go:423] Response Headers:

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.002573 24165 round_trippers.go:426] Content-Type: application/vnd.kubernetes.protobuf

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.002577 24165 round_trippers.go:426] Date: Fri, 27 Oct 2017 02:23:03 GMT

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.002580 24165 round_trippers.go:426] Content-Length: 154

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.002627 24165 request.go:989] Response Body:

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000000 6b 38 73 00 0a 0c 0a 02 76 31 12 06 53 74 61 74 |k8s.....v1..Stat|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000010 75 73 12 81 01 0a 04 0a 00 12 00 12 07 46 61 69 |us...........Fai|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000020 6c 75 72 65 1a 33 70 6f 64 73 20 22 6c 64 74 65 |lure.3pods "ldte|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000030 73 74 2d 37 33 34 33 39 39 64 67 35 39 2d 33 33 |st-734399dg59-33|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000040 38 32 38 37 31 36 38 35 2d 78 32 36 70 30 22 20 |82871685-x26p0" |

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000050 6e 6f 74 20 66 6f 75 6e 64 22 08 4e 6f 74 46 6f |not found".NotFo|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000060 75 6e 64 2a 2e 0a 22 6c 64 74 65 73 74 2d 37 33 |und*.."ldtest-73|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000070 34 33 39 39 64 67 35 39 2d 33 33 38 32 38 37 31 |4399dg59-3382871|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000080 36 38 35 2d 78 32 36 70 30 12 00 1a 04 70 6f 64 |685-x26p0....pod|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000090 73 28 00 30 94 03 1a 00 22 00 |s(.0....".|

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.002659 24165 status_manager.go:425] Pod "ldtest-734399dg59-3382871685-x26p0" (a584c63e-bab7-11e7-92f6-3497f60062c3) does not exist on the server

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.002668 24165 status_manager.go:410] Status Manager: syncPod in syncbatch. pod UID: "2727277f-bab3-11e7-92f6-3497f60062c3"

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.002711 24165 round_trippers.go:398] curl -k -v -XGET -H "User-Agent: kubelet/v1.6.4 (linux/amd64) kubernetes/d6f4332" -H "Accept: application/vnd.kubernetes.protobuf, */*" http://172.23.48.211:8080/api/v1/namespaces/ldtest-005/pods/ldtest-4bc7922c25-2238154508-xt94x

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.003318 24165 round_trippers.go:417] GET http://172.23.48.211:8080/api/v1/namespaces/ldtest-005/pods/ldtest-4bc7922c25-2238154508-xt94x 404 Not Found in 0 milliseconds

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.003328 24165 round_trippers.go:423] Response Headers:

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.003332 24165 round_trippers.go:426] Date: Fri, 27 Oct 2017 02:23:03 GMT

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.003336 24165 round_trippers.go:426] Content-Length: 154

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.003339 24165 round_trippers.go:426] Content-Type: application/vnd.kubernetes.protobuf

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.003379 24165 request.go:989] Response Body:

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000000 6b 38 73 00 0a 0c 0a 02 76 31 12 06 53 74 61 74 |k8s.....v1..Stat|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000010 75 73 12 81 01 0a 04 0a 00 12 00 12 07 46 61 69 |us...........Fai|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000020 6c 75 72 65 1a 33 70 6f 64 73 20 22 6c 64 74 65 |lure.3pods "ldte|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000030 73 74 2d 34 62 63 37 39 32 32 63 32 35 2d 32 32 |st-4bc7922c25-22|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000040 33 38 31 35 34 35 30 38 2d 78 74 39 34 78 22 20 |38154508-xt94x" |

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000050 6e 6f 74 20 66 6f 75 6e 64 22 08 4e 6f 74 46 6f |not found".NotFo|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000060 75 6e 64 2a 2e 0a 22 6c 64 74 65 73 74 2d 34 62 |und*.."ldtest-4b|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000070 63 37 39 32 32 63 32 35 2d 32 32 33 38 31 35 34 |c7922c25-2238154|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000080 35 30 38 2d 78 74 39 34 78 12 00 1a 04 70 6f 64 |508-xt94x....pod|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000090 73 28 00 30 94 03 1a 00 22 00 |s(.0....".|

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.003411 24165 status_manager.go:425] Pod "ldtest-4bc7922c25-2238154508-xt94x" (2727277f-bab3-11e7-92f6-3497f60062c3) does not exist on the server

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.003423 24165 status_manager.go:410] Status Manager: syncPod in syncbatch. pod UID: "43dd5201-bab4-11e7-92f6-3497f60062c3"

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.003482 24165 round_trippers.go:398] curl -k -v -XGET -H "Accept: application/vnd.kubernetes.protobuf, */*" -H "User-Agent: kubelet/v1.6.4 (linux/amd64) kubernetes/d6f4332" http://172.23.48.211:8080/api/v1/namespaces/ldtest-005/pods/ldtest-g02c441308-3753936377-d6q69

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.004051 24165 round_trippers.go:417] GET http://172.23.48.211:8080/api/v1/namespaces/ldtest-005/pods/ldtest-g02c441308-3753936377-d6q69 404 Not Found in 0 milliseconds

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.004059 24165 round_trippers.go:423] Response Headers:

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.004062 24165 round_trippers.go:426] Content-Type: application/vnd.kubernetes.protobuf

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.004066 24165 round_trippers.go:426] Date: Fri, 27 Oct 2017 02:23:03 GMT

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.004069 24165 round_trippers.go:426] Content-Length: 154

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.004115 24165 request.go:989] Response Body:

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000000 6b 38 73 00 0a 0c 0a 02 76 31 12 06 53 74 61 74 |k8s.....v1..Stat|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000010 75 73 12 81 01 0a 04 0a 00 12 00 12 07 46 61 69 |us...........Fai|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000020 6c 75 72 65 1a 33 70 6f 64 73 20 22 6c 64 74 65 |lure.3pods "ldte|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000030 73 74 2d 67 30 32 63 34 34 31 33 30 38 2d 33 37 |st-g02c441308-37|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000040 35 33 39 33 36 33 37 37 2d 64 36 71 36 39 22 20 |53936377-d6q69" |

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000050 6e 6f 74 20 66 6f 75 6e 64 22 08 4e 6f 74 46 6f |not found".NotFo|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000060 75 6e 64 2a 2e 0a 22 6c 64 74 65 73 74 2d 67 30 |und*.."ldtest-g0|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000070 32 63 34 34 31 33 30 38 2d 33 37 35 33 39 33 36 |2c441308-3753936|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000080 33 37 37 2d 64 36 71 36 39 12 00 1a 04 70 6f 64 |377-d6q69....pod|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000090 73 28 00 30 94 03 1a 00 22 00 |s(.0....".|

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.004142 24165 status_manager.go:425] Pod "ldtest-g02c441308-3753936377-d6q69" (43dd5201-bab4-11e7-92f6-3497f60062c3) does not exist on the server

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.004148 24165 status_manager.go:410] Status Manager: syncPod in syncbatch. pod UID: "8fd9d66f-bab7-11e7-92f6-3497f60062c3"

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.004195 24165 round_trippers.go:398] curl -k -v -XGET -H "Accept: application/vnd.kubernetes.protobuf, */*" -H "User-Agent: kubelet/v1.6.4 (linux/amd64) kubernetes/d6f4332" http://172.23.48.211:8080/api/v1/namespaces/ldtest-005/pods/ldtest-cf2eg79b08-3660220702-x0j2j

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.004752 24165 round_trippers.go:417] GET http://172.23.48.211:8080/api/v1/namespaces/ldtest-005/pods/ldtest-cf2eg79b08-3660220702-x0j2j 404 Not Found in 0 milliseconds

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.004761 24165 round_trippers.go:423] Response Headers:

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.004765 24165 round_trippers.go:426] Date: Fri, 27 Oct 2017 02:23:03 GMT

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.004769 24165 round_trippers.go:426] Content-Length: 154

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.004773 24165 round_trippers.go:426] Content-Type: application/vnd.kubernetes.protobuf

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.004812 24165 request.go:989] Response Body:

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000000 6b 38 73 00 0a 0c 0a 02 76 31 12 06 53 74 61 74 |k8s.....v1..Stat|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000010 75 73 12 81 01 0a 04 0a 00 12 00 12 07 46 61 69 |us...........Fai|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000020 6c 75 72 65 1a 33 70 6f 64 73 20 22 6c 64 74 65 |lure.3pods "ldte|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000030 73 74 2d 63 66 32 65 67 37 39 62 30 38 2d 33 36 |st-cf2eg79b08-36|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000040 36 30 32 32 30 37 30 32 2d 78 30 6a 32 6a 22 20 |60220702-x0j2j" |

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000050 6e 6f 74 20 66 6f 75 6e 64 22 08 4e 6f 74 46 6f |not found".NotFo|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000060 75 6e 64 2a 2e 0a 22 6c 64 74 65 73 74 2d 63 66 |und*.."ldtest-cf|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000070 32 65 67 37 39 62 30 38 2d 33 36 36 30 32 32 30 |2eg79b08-3660220|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000080 37 30 32 2d 78 30 6a 32 6a 12 00 1a 04 70 6f 64 |702-x0j2j....pod|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000090 73 28 00 30 94 03 1a 00 22 00 |s(.0....".|

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.004841 24165 status_manager.go:425] Pod "ldtest-cf2eg79b08-3660220702-x0j2j" (8fd9d66f-bab7-11e7-92f6-3497f60062c3) does not exist on the server

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.004853 24165 status_manager.go:410] Status Manager: syncPod in syncbatch. pod UID: "eb5a5f4a-baba-11e7-92f6-3497f60062c3"

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.004921 24165 round_trippers.go:398] curl -k -v -XGET -H "Accept: application/vnd.kubernetes.protobuf, */*" -H "User-Agent: kubelet/v1.6.4 (linux/amd64) kubernetes/d6f4332" http://172.23.48.211:8080/api/v1/namespaces/ldtest-005/pods/ldtest-9b47680d12-2536408624-jhp18

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.005436 24165 round_trippers.go:417] GET http://172.23.48.211:8080/api/v1/namespaces/ldtest-005/pods/ldtest-9b47680d12-2536408624-jhp18 404 Not Found in 0 milliseconds

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.005446 24165 round_trippers.go:423] Response Headers:

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.005450 24165 round_trippers.go:426] Content-Type: application/vnd.kubernetes.protobuf

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.005454 24165 round_trippers.go:426] Date: Fri, 27 Oct 2017 02:23:03 GMT

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.005457 24165 round_trippers.go:426] Content-Length: 154

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.005499 24165 request.go:989] Response Body:

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000000 6b 38 73 00 0a 0c 0a 02 76 31 12 06 53 74 61 74 |k8s.....v1..Stat|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000010 75 73 12 81 01 0a 04 0a 00 12 00 12 07 46 61 69 |us...........Fai|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000020 6c 75 72 65 1a 33 70 6f 64 73 20 22 6c 64 74 65 |lure.3pods "ldte|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000030 73 74 2d 39 62 34 37 36 38 30 64 31 32 2d 32 35 |st-9b47680d12-25|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000040 33 36 34 30 38 36 32 34 2d 6a 68 70 31 38 22 20 |36408624-jhp18" |

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000050 6e 6f 74 20 66 6f 75 6e 64 22 08 4e 6f 74 46 6f |not found".NotFo|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000060 75 6e 64 2a 2e 0a 22 6c 64 74 65 73 74 2d 39 62 |und*.."ldtest-9b|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000070 34 37 36 38 30 64 31 32 2d 32 35 33 36 34 30 38 |47680d12-2536408|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000080 36 32 34 2d 6a 68 70 31 38 12 00 1a 04 70 6f 64 |624-jhp18....pod|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000090 73 28 00 30 94 03 1a 00 22 00 |s(.0....".|

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.005526 24165 status_manager.go:425] Pod "ldtest-9b47680d12-2536408624-jhp18" (eb5a5f4a-baba-11e7-92f6-3497f60062c3) does not exist on the server

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.005533 24165 status_manager.go:410] Status Manager: syncPod in syncbatch. pod UID: "2db95639-bab5-11e7-92f6-3497f60062c3"

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.005588 24165 round_trippers.go:398] curl -k -v -XGET -H "Accept: application/vnd.kubernetes.protobuf, */*" -H "User-Agent: kubelet/v1.6.4 (linux/amd64) kubernetes/d6f4332" http://172.23.48.211:8080/api/v1/namespaces/ldtest-005/pods/ldtest-5f8ba1eag0-2191624653-dm374

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.006150 24165 round_trippers.go:417] GET http://172.23.48.211:8080/api/v1/namespaces/ldtest-005/pods/ldtest-5f8ba1eag0-2191624653-dm374 404 Not Found in 0 milliseconds

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.006176 24165 round_trippers.go:423] Response Headers:

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.006182 24165 round_trippers.go:426] Date: Fri, 27 Oct 2017 02:23:03 GMT

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.006189 24165 round_trippers.go:426] Content-Length: 154

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.006195 24165 round_trippers.go:426] Content-Type: application/vnd.kubernetes.protobuf

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.006251 24165 request.go:989] Response Body:

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000000 6b 38 73 00 0a 0c 0a 02 76 31 12 06 53 74 61 74 |k8s.....v1..Stat|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000010 75 73 12 81 01 0a 04 0a 00 12 00 12 07 46 61 69 |us...........Fai|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000020 6c 75 72 65 1a 33 70 6f 64 73 20 22 6c 64 74 65 |lure.3pods "ldte|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000030 73 74 2d 35 66 38 62 61 31 65 61 67 30 2d 32 31 |st-5f8ba1eag0-21|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000040 39 31 36 32 34 36 35 33 2d 64 6d 33 37 34 22 20 |91624653-dm374" |

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000050 6e 6f 74 20 66 6f 75 6e 64 22 08 4e 6f 74 46 6f |not found".NotFo|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000060 75 6e 64 2a 2e 0a 22 6c 64 74 65 73 74 2d 35 66 |und*.."ldtest-5f|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000070 38 62 61 31 65 61 67 30 2d 32 31 39 31 36 32 34 |8ba1eag0-2191624|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000080 36 35 33 2d 64 6d 33 37 34 12 00 1a 04 70 6f 64 |653-dm374....pod|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000090 73 28 00 30 94 03 1a 00 22 00 |s(.0....".|

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.006297 24165 status_manager.go:425] Pod "ldtest-5f8ba1eag0-2191624653-dm374" (2db95639-bab5-11e7-92f6-3497f60062c3) does not exist on the server

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.006330 24165 status_manager.go:410] Status Manager: syncPod in syncbatch. pod UID: "ecf58d7f-bab2-11e7-92f6-3497f60062c3"

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.006421 24165 round_trippers.go:398] curl -k -v -XGET -H "Accept: application/vnd.kubernetes.protobuf, */*" -H "User-Agent: kubelet/v1.6.4 (linux/amd64) kubernetes/d6f4332" http://172.23.48.211:8080/api/v1/namespaces/ldtest-005/pods/ldtest-0fe4761ce1-763135991-2gv5x

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.006983 24165 round_trippers.go:417] GET http://172.23.48.211:8080/api/v1/namespaces/ldtest-005/pods/ldtest-0fe4761ce1-763135991-2gv5x 404 Not Found in 0 milliseconds

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.006995 24165 round_trippers.go:423] Response Headers:

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.007001 24165 round_trippers.go:426] Content-Type: application/vnd.kubernetes.protobuf

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.007007 24165 round_trippers.go:426] Date: Fri, 27 Oct 2017 02:23:03 GMT

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.007014 24165 round_trippers.go:426] Content-Length: 151

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.007064 24165 request.go:989] Response Body:

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000000 6b 38 73 00 0a 0c 0a 02 76 31 12 06 53 74 61 74 |k8s.....v1..Stat|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000010 75 73 12 7f 0a 04 0a 00 12 00 12 07 46 61 69 6c |us..........Fail|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000020 75 72 65 1a 32 70 6f 64 73 20 22 6c 64 74 65 73 |ure.2pods "ldtes|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000030 74 2d 30 66 65 34 37 36 31 63 65 31 2d 37 36 33 |t-0fe4761ce1-763|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000040 31 33 35 39 39 31 2d 32 67 76 35 78 22 20 6e 6f |135991-2gv5x" no|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000050 74 20 66 6f 75 6e 64 22 08 4e 6f 74 46 6f 75 6e |t found".NotFoun|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000060 64 2a 2d 0a 21 6c 64 74 65 73 74 2d 30 66 65 34 |d*-.!ldtest-0fe4|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000070 37 36 31 63 65 31 2d 37 36 33 31 33 35 39 39 31 |761ce1-763135991|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000080 2d 32 67 76 35 78 12 00 1a 04 70 6f 64 73 28 00 |-2gv5x....pods(.|

Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000090 30 94 03 1a 00 22 00 |0....".|

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.007106 24165 status_manager.go:425] Pod "ldtest-0fe4761ce1-763135991-2gv5x" (ecf58d7f-bab2-11e7-92f6-3497f60062c3) does not exist on the server

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.077334 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.177546 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.277737 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.377939 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.478169 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.578369 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.603649 24165 eviction_manager.go:197] eviction manager: synchronize housekeeping

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.678573 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.682080 24165 summary.go:389] Missing default interface "eth0" for node:172.23.34.91

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.682132 24165 summary.go:389] Missing default interface "eth0" for pod:kube-system_kube-proxy-qcft5

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.682176 24165 helpers.go:744] eviction manager: observations: signal=imagefs.available, available: 515801344Ki, capacity: 511750Mi, time: 2017-10-27 10:22:56.499173632 +0800 CST

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.682197 24165 helpers.go:744] eviction manager: observations: signal=imagefs.inodesFree, available: 523222251, capacity: 500Mi, time: 2017-10-27 10:22:56.499173632 +0800 CST

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.682203 24165 helpers.go:746] eviction manager: observations: signal=allocatableMemory.available, available: 65544340Ki, capacity: 65581868Ki

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.682207 24165 helpers.go:744] eviction manager: observations: signal=memory.available, available: 57973412Ki, capacity: 65684268Ki, time: 2017-10-27 10:22:56.499173632 +0800 CST

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.682213 24165 helpers.go:744] eviction manager: observations: signal=nodefs.available, available: 99175128Ki, capacity: 102350Mi, time: 2017-10-27 10:22:56.499173632 +0800 CST

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.682218 24165 helpers.go:744] eviction manager: observations: signal=nodefs.inodesFree, available: 104818019, capacity: 100Mi, time: 2017-10-27 10:22:56.499173632 +0800 CST

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.682233 24165 eviction_manager.go:292] eviction manager: no resources are starved

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.778792 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.879040 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 27 10:23:03 docker34-91 kubelet[24165]: I1027 10:23:03.979304 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 27 10:23:04 docker34-91 kubelet[24165]: I1027 10:23:04.079534 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 27 10:23:04 docker34-91 kubelet[24165]: I1027 10:23:04.179753 24165 config.go:101] Looking for [api file], have seen map[api:{} file:{}]

Oct 27 10:23:04 docker34-91 kubelet[24165]: I1027 10:23:04.280026 24165 config.go:101] Looking for [api file], have seen map[api:{} file:{}]

Oct 27 10:23:04 docker34-91 kubelet[24165]: I1027 10:23:04.380246 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 27 10:23:04 docker34-91 kubelet[24165]: I1027 10:23:04.480450 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 27 10:23:04 docker34-91 kubelet[24165]: I1027 10:23:04.580695 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 27 10:23:04 docker34-91 kubelet[24165]: I1027 10:23:04.680957 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 27 10:23:04 docker34-91 kubelet[24165]: I1027 10:23:04.781224 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 27 10:23:04 docker34-91 kubelet[24165]: I1027 10:23:04.881418 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 27 10:23:04 docker34-91 kubelet[24165]: I1027 10:23:04.981643 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 27 10:23:05 docker34-91 kubelet[24165]: I1027 10:23:05.081882 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 27 10:23:05 docker34-91 kubelet[24165]: I1027 10:23:05.182810 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 27 10:23:05 docker34-91 kubelet[24165]: I1027 10:23:05.283410 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 27 10:23:05 docker34-91 kubelet[24165]: I1027 10:23:05.383626 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 27 10:23:05 docker34-91 kubelet[24165]: I1027 10:23:05.483942 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 27 10:23:05 docker34-91 kubelet[24165]: I1027 10:23:05.584211 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 27 10:23:05 docker34-91 kubelet[24165]: I1027 10:23:05.684460 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 27 10:23:05 docker34-91 kubelet[24165]: I1027 10:23:05.784699 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 27 10:23:05 docker34-91 kubelet[24165]: I1027 10:23:05.884949 24165 config.go:101] Looking for [api file], have seen map[file:{} api:{}]

Oct 27 10:23:05 docker34-91 kubelet[24165]: I1027 10:23:05.960855 24165 factory.go:115] Factory "docker" was unable to handle container "/system.slice/data-docker-overlay-c0d3c4b3834cfe9f12cd5c35345cab9c8e71bb64c689c8aea7a458c119a5a54e-merged.mount"

Oct 27 10:23:05 docker34-91 kubelet[24165]: I1027 10:23:05.960885 24165 factory.go:108] Factory "systemd" can handle container "/system.slice/data-docker-overlay-c0d3c4b3834cfe9f12cd5c35345cab9c8e71bb64c689c8aea7a458c119a5a54e-merged.mount", but ignoring.

Oct 27 10:23:05 docker34-91 kubelet[24165]: I1027 10:23:05.960906 24165 manager.go:867] ignoring container "/system.slice/data-docker-overlay-c0d3c4b3834cfe9f12cd5c35345cab9c8e71bb64c689c8aea7a458c119a5a54e-merged.mount"

Oct 27 10:23:05 docker34-91 kubelet[24165]: I1027 10:23:05.960912 24165 factory.go:115] Factory "docker" was unable to handle container "/system.slice/data-docker-overlay-ce9656ff9d3cd03baaf93e42d0874377fa37bfde6c9353b3ba954c90bf4332f3-merged.mount"

Oct 27 10:23:05 docker34-91 kubelet[24165]: I1027 10:23:05.960919 24165 factory.go:108] Factory "systemd" can handle container "/system.slice/data-docker-overlay-ce9656ff9d3cd03baaf93e42d0874377fa37bfde6c9353b3ba954c90bf4332f3-merged.mount", but ignoring.

Oct 27 10:23:05 docker34-91 kubelet[24165]: I1027 10:23:05.960926 24165 manager.go:867] ignoring container "/system.slice/data-docker-overlay-ce9656ff9d3cd03baaf93e42d0874377fa37bfde6c9353b3ba954c90bf4332f3-merged.mount"

Oct 27 10:23:05 docker34-91 kubelet[24165]: I1027 10:23:05.960931 24165 factory.go:115] Factory "docker" was unable to handle container "/system.slice/data-docker-overlay-b3600c0fe81445773b9241c5d1da8b1f97612d0a235f8b32139478a5717f79e1-merged.mount"

Oct 27 10:23:05 docker34-91 kubelet[24165]: I1027 10:23:05.960937 24165 factory.go:108] Factory "systemd" can handle container "/system.slice/data-docker-overlay-b3600c0fe81445773b9241c5d1da8b1f97612d0a235f8b32139478a5717f79e1-merged.mount", but ignoring.

Oct 27 10:23:05 docker34-91 kubelet[24165]: I1027 10:23:05.960944 24165 manager.go:867] ignoring container "/system.slice/data-docker-overlay-b3600c0fe81445773b9241c5d1da8b1f97612d0a235f8b32139478a5717f79e1-merged.mount"

Oct 27 10:23:05 docker34-91 kubelet[24165]: I1027 10:23:05.960949 24165 factory.go:115] Factory "docker" was unable to handle container "/system.slice/data-docker-overlay-ed2fe0d57c56cf6b051e1bda1ca0185ceef4756b1a8f9af4c19f4e512bcc60f4-merged.mount"

Oct 27 10:23:05 docker34-91 kubelet[24165]: I1027 10:23:05.960955 24165 factory.go:108] Factory "systemd" can handle container "/system.slice/data-docker-overlay-ed2fe0d57c56cf6b051e1bda1ca0185ceef4756b1a8f9af4c19f4e512bcc60f4-merged.mount", but ignoring.

Oct 27 10:23:05 docker34-91 kubelet[24165]: I1027 10:23:05.960979 24165 manager.go:867] ignoring container "/system.slice/data-docker-overlay-ed2fe0d57c56cf6b051e1bda1ca0185ceef4756b1a8f9af4c19f4e512bcc60f4-merged.mount"

Oct 27 10:23:05 docker34-91 kubelet[24165]: I1027 10:23:05.960984 24165 factory.go:115] Factory "docker" was unable to handle container "/system.slice/data-docker-overlay-0ba6483a0117c539493cd269be9f87d31d1d61aa813e7e0381c5f5d8b0623275-merged.mount"

Oct 27 10:23:05 docker34-91 kubelet[24165]: I1027 10:23:05.960990 24165 factory.go:108] Factory "systemd" can handle container "/system.slice/data-docker-overlay-0ba6483a0117c539493cd269be9f87d31d1d61aa813e7e0381c5f5d8b0623275-merged.mount", but ignoring.

Oct 27 10:23:05 docker34-91 kubelet[24165]: I1027 10:23:05.960997 24165 manager.go:867] ignoring container "/system.slice/data-docker-overlay-0ba6483a0117c539493cd269be9f87d31d1d61aa813e7e0381c5f5d8b0623275-merged.mount"
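For anyone reading the "Response Body" hex dump in the log above: the readable text is split across 16-byte rows, so the actual message is easy to miss. Below is a minimal Python sketch that stitches the rows back together, assuming the row layout shown in that log (8-digit offset, hex byte pairs, then an ASCII gutter between `|` marks). The names `hexdump_to_bytes`, `printable_runs` and `SAMPLE` are made up for illustration and are not part of kubelet or any Kubernetes tooling. The body is protobuf-encoded, so the printable runs can include stray framing bytes that happen to be ASCII.

```python
import re

# One hexdump row as it appears in the kubelet log: an 8-digit hex offset,
# up to 16 hex byte pairs, then the ASCII gutter starting at '|'.
ROW = re.compile(r"\b([0-9a-f]{8})((?: [0-9a-f]{2}){1,16}) +\|")


def hexdump_to_bytes(lines):
    """Concatenate the byte columns of every hexdump row found in `lines`."""
    out = bytearray()
    for line in lines:
        m = ROW.search(line)
        if m:  # lines that are not hexdump rows are skipped
            out += bytes.fromhex(m.group(2).replace(" ", ""))
    return bytes(out)


def printable_runs(data, min_len=4):
    """Return runs of at least `min_len` printable ASCII characters."""
    pattern = rb"[\x20-\x7e]{%d,}" % min_len
    return [run.decode("ascii") for run in re.findall(pattern, data)]


# Four rows copied from the log above (offsets 0x20 to 0x50).
SAMPLE = [
    'Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000020 75 72 65 1a 32 70 6f 64 73 20 22 6c 64 74 65 73 |ure.2pods "ldtes|',
    'Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000030 74 2d 30 66 65 34 37 36 31 63 65 31 2d 37 36 33 |t-0fe4761ce1-763|',
    'Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000040 31 33 35 39 39 31 2d 32 67 76 35 78 22 20 6e 6f |135991-2gv5x" no|',
    'Oct 27 10:23:03 docker34-91 kubelet[24165]: 00000050 74 20 66 6f 75 6e 64 22 08 4e 6f 74 46 6f 75 6e |t found".NotFoun|',
]

if __name__ == "__main__":
    body = hexdump_to_bytes(SAMPLE)
    for run in printable_runs(body):
        print(run)
```

Run against the full dump, this should surface the same "pods ... not found" message that the following `status_manager.go:425` line reports, i.e. the API server no longer knows about the pod.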

I hit a similar issue:

Oct 28 09:15:38 ip-10-72-17-119.us-west-2.compute.internal kubelet[3299]: E1028 09:15:38.711430 3299 pod_workers.go:182] Error syncing pod 7d3b94f3-afa7-11e7-aaec-06936c368d26 ("pickup-566929041-bn8t9_staging(7d3b94f3-afa7-11e7-aaec-06936c368d26)"), skipping: rpc error: code = 4 desc = context deadline exceeded

Oct 28 09:15:51 ip-10-72-17-119.us-west-2.compute.internal kubelet[3299]: E1028 09:15:51.439135 3299 kuberuntime_manager.go:843] PodSandboxStatus of sandbox "9c1c1f2d4a9d277a41a97593c330f41e00ca12f3ad858c19f61fd155d18d795e" for pod "pickup-566929041-bn8t9_staging(7d3b94f3-afa7-11e7-aaec-06936c368d26)" error: rpc error: code = 4 desc = context deadline exceeded

Oct 28 09:15:51 ip-10-72-17-119.us-west-2.compute.internal kubelet[3299]: E1028 09:15:51.439188 3299 generic.go:241] PLEG: Ignoring events for pod pickup-566929041-bn8t9/staging: rpc error: code = 4 desc = context deadline exceeded

Oct 28 09:15:51 ip-10-72-17-119.us-west-2.compute.internal kubelet[3299]: E1028 09:15:51.711168 3299 pod_workers.go:182] Error syncing pod 7d3b94f3-afa7-11e7-aaec-06936c368d26 ("pickup-566929041-bn8t9_staging(7d3b94f3-afa7-11e7-aaec-06936c368d26)"), skipping: rpc error: code = 4 desc = context deadline exceeded

Oct 28 09:16:03 ip-10-72-17-119.us-west-2.compute.internal kubelet[3299]: E1028 09:16:03.711164 3299 pod_workers.go:182] Error syncing pod 7d3b94f3-afa7-11e7-aaec-06936c368d26 ("pickup-566929041-bn8t9_staging(7d3b94f3-afa7-11e7-aaec-06936c368d26)"), skipping: rpc error: code = 4 desc = context deadline exceeded

Oct 28 09:16:18 ip-10-72-17-119.us-west-2.compute.internal kubelet[3299]: E1028 09:16:18.715381 3299 pod_workers.go:182] Error syncing pod 7d3b94f3-afa7-11e7-aaec-06936c368d26 ("pickup-566929041-bn8t9_staging(7d3b94f3-afa7-11e7-aaec-06936c368d26)"), skipping: rpc error: code = 4 desc = context deadline exceeded

Oct 28 09:16:33 ip-10-72-17-119.us-west-2.compute.internal kubelet[3299]: E1028 09:16:33.711198 3299 pod_workers.go:182] Error syncing pod 7d3b94f3-afa7-11e7-aaec-06936c368d26 ("pickup-566929041-bn8t9_staging(7d3b94f3-afa7-11e7-aaec-06936c368d26)"), skipping: rpc error: code = 4 desc = context deadline exceeded

Oct 28 09:16:46 ip-10-72-17-119.us-west-2.compute.internal kubelet[3299]: E1028 09:16:46.712983 3299 pod_workers.go:182] Error syncing pod 7d3b94f3-afa7-11e7-aaec-06936c368d26 ("pickup-566929041-bn8t9_staging(7d3b94f3-afa7-11e7-aaec-06936c368d26)"), skipping: rpc error: code = 4 desc = context deadline exceeded

Oct 28 09:16:51 ip-10-72-17-119.us-west-2.compute.internal kubelet[3299]: I1028 09:16:51.711142 3299 kubelet.go:1820] skipping pod synchronization - [PLEG is not healthy: pleg was last seen active 3m0.31269053s ago; threshold is 3m0s]

Oct 28 09:16:56 ip-10-72-17-119.us-west-2.compute.internal kubelet[3299]: I1028 09:16:56.711341 3299 kubelet.go:1820] skipping pod synchronization - [PLEG is not healthy: pleg was last seen active 3m5.312886434s ago; threshold is 3m0s]

Oct 28 09:17:01 ip-10-72-17-119.us-west-2.compute.internal kubelet[3299]: I1028 09:17:01.351771 3299 kubelet_node_status.go:734] Node became not ready: {Type:Ready Status:False LastHeartbeatTime:2017-10-28 09:17:01.35173325 +0000 UTC LastTransitionTime:2017-10-28 09:17:01.35173325 +0000 UTC Reason:KubeletNotReady Message:PLEG is not healthy: pleg was last seen active 3m9.95330596s ago; threshold is 3m0s}

Oct 28 09:17:01 ip-10-72-17-119.us-west-2.compute.internal kubelet[3299]: I1028 09:17:01.711552 3299 kubelet.go:1820] skipping pod synchronization - [PLEG is not healthy: pleg was last seen active 3m10.31309378s ago; threshold is 3m0s]

Oct 28 09:17:06 ip-10-72-17-119.us-west-2.compute.internal kubelet[3299]: I1028 09:17:06.711871 3299 kubelet.go:1820] skipping pod synchronization - [PLEG is not healthy: pleg was last seen active 3m15.313406671s ago; threshold is 3m0s]
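To see how far past the threshold the PLEG gets on each report, the durations in these messages can be pulled out of `journalctl -u kubelet` output with a few lines of Python. This is only a rough sketch: it assumes the message wording shown in the logs above ("pleg was last seen active ... ago; threshold is ...") and Go-style duration strings such as `3m5.312886434s`. The helper names `go_duration_seconds` and `pleg_overruns` are invented for this example.

```python
import re

# Seconds per unit for the Go duration units handled here.
UNIT_SECONDS = {
    "h": 3600.0, "m": 60.0, "s": 1.0,
    "ms": 1e-3, "us": 1e-6, "µs": 1e-6, "ns": 1e-9,
}

# Multi-character units are listed first so "ms" is not read as "m" then "s".
UNITS = r"(?:ns|us|µs|ms|h|m|s)"
TERM = re.compile(rf"(\d+(?:\.\d+)?)({UNITS})")
DURATION = rf"(?:\d+(?:\.\d+)?{UNITS})+"

# Wording assumed from the kubelet log lines quoted in this thread.
PLEG = re.compile(
    rf"pleg was last seen active ({DURATION}) ago; threshold is ({DURATION})"
)


def go_duration_seconds(text):
    """Convert a Go-style duration string such as '3m5.3s' to seconds."""
    if not re.fullmatch(DURATION, text):
        raise ValueError(f"not a Go-style duration: {text!r}")
    return sum(float(num) * UNIT_SECONDS[unit] for num, unit in TERM.findall(text))


def pleg_overruns(lines):
    """Yield (elapsed_s, threshold_s) for each 'PLEG is not healthy' line."""
    for line in lines:
        m = PLEG.search(line)
        if m:
            yield go_duration_seconds(m.group(1)), go_duration_seconds(m.group(2))


if __name__ == "__main__":
    import sys

    # Example: journalctl -u kubelet | python3 pleg_overruns.py
    for elapsed, threshold in pleg_overruns(sys.stdin):
        print(f"elapsed={elapsed:.3f}s threshold={threshold:.0f}s "
              f"over_by={elapsed - threshold:.3f}s")
```

In the log above the elapsed value grows by about 5s between consecutive "skipping pod synchronization" lines, which suggests the PLEG relist is stuck rather than merely slow, and matches the "context deadline exceeded" errors that precede it.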