We are currently testing our application through KONG (2.1.0-beta.1) using gRPC (HTTP/2).

In this case (gRPC), KONG does not apply the new properties (upstream_keepalive_pool_size, upstream_keepalive_max_requests, upstream_keepalive_idle_timeout).

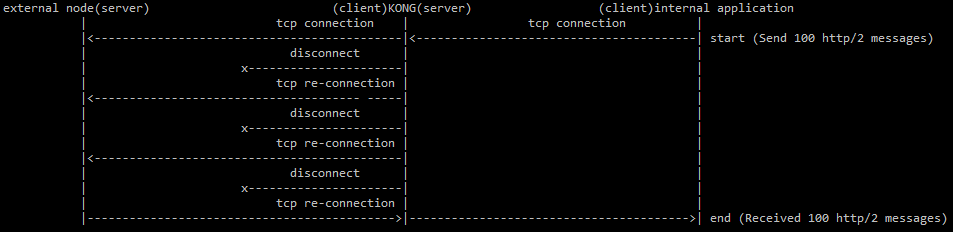

The TCP connection between the application (client) and KONG is kept alive, but the TCP connection between KONG (client) and an external node (server) is not: it keeps being closed and reopened, just as with HTTP/1.1.

I don't know why KONG (client) does not keep these TCP connections alive.

However, the service works correctly end to end (application (HTTP/2 GET) <-> external node (200 OK)).

In the previous version (2.0.4), KONG could send multiple HTTP/2 messages (two or more) over a single TCP connection; in 2.1.0-beta.1, however, KONG sends only one message per TCP connection.

I think KONG could perform better if it supported this HTTP/2 behavior (reusing a connection for multiple messages) when configured for gRPC.

Do you have plans to support these features?

Regards.


@maskofaction, this is probably a shot in the dark, but can you try one thing?

Can you add

    grpc_set_header Connection '';

above this line:

https://github.com/Kong/kong/blob/release/2.1.0/kong/templates/nginx_kong.lua#L169

And add the same directive above this line:

https://github.com/Kong/kong/blob/release/2.1.0/kong/templates/nginx_kong.lua#L187

It probably won't make any difference, but if you could try it and report back, that would be great!

@bungle I tried what you suggested, but the results are the same.

    location @grpc {
        internal;
        default_type '';
        set $kong_proxy_mode 'grpc';

        grpc_set_header TE $upstream_te;
        grpc_set_header Host $upstream_host;
        grpc_set_header Connection '';
        grpc_set_header X-Forwarded-For $upstream_x_forwarded_for;
        grpc_set_header X-Forwarded-Proto $upstream_x_forwarded_proto;
        grpc_set_header X-Forwarded-Host $upstream_x_forwarded_host;
        grpc_set_header X-Forwarded-Port $upstream_x_forwarded_port;
        grpc_set_header X-Forwarded-Prefix $upstream_x_forwarded_prefix;
        grpc_set_header X-Real-IP $remote_addr;
        grpc_pass_header Server;
        grpc_pass_header Date;
        grpc_pass grpc://kong_upstream;
    }

Thank you, we'll investigate further, especially since the behavior has changed, which is unexpected.

I just tried proxying a gRPC service locally with Kong 2.1.0-beta.1, and keepalive worked as expected with the appropriate OpenResty 1.15.8.3 build that includes Kong's patches (without needing the grpc_set_header directive above).

@maskofaction Could you check the error logs for any [warn] entries? If the new keepalive pooling cannot find the Kong patches in OpenResty, it logs a warning like this:

2020/07/09 16:30:03 [warn] 248192#0: [lua] init.lua:113: missing method 'ngx_balancer.enable_keepalive()' (was the dyn_upstream_keepalive patch applied?) set the 'nginx_upstream_keepalive' configuration property instead of 'upstream_keepalive_pool_size'

The log also suggests an alternative way to enable keepalive: set nginx_upstream_keepalive instead, which enables Nginx's keepalive directive (rather than Kong's dynamic keepalive pooling).
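As a rough sketch of that fallback, a kong.conf fragment might look like the one below. The property names are the deprecated ones that appear in the warnings quoted in this thread; the values are illustrative assumptions, not recommendations, so check your release's kong.conf.default before copying them.

```ini
# kong.conf: fallback sketch for builds missing the dyn_upstream_keepalive patch.
# These deprecated properties inject static Nginx directives into the upstream block.
# Values below are illustrative only.

# Injected as: keepalive 60;
nginx_upstream_keepalive = 60
# Injected as: keepalive_requests 100;
nginx_upstream_keepalive_requests = 100
# Injected as: keepalive_timeout 60s;
nginx_upstream_keepalive_timeout = 60s
```

After restarting, the generated nginx_kong.conf should contain the corresponding directives, which is an easy way to confirm the fallback took effect.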

@thibaultcha I ran the test with Kong 2.1.0-beta.1 and OpenResty 1.15.8.3, and I found the same log you mentioned.

So I configured keepalive, but the new upstream_keepalive settings in kong.conf were not applied to nginx_kong.conf; only nginx_upstream_keepalive was applied to nginx_kong.conf.

2020/07/14 10:54:20 [warn] 11211#0: [lua] init.lua:113: missing method 'ngx_balancer.enable_keepalive()' (was the dyn_upstream_keepalive patch applied?) set the 'nginx_upstream_keepalive' configuration property instead of 'upstream_keepalive_pool_size'

I also noticed these [warn] logs:

Kong stopped

2020/07/14 10:54:20 [warn] the 'nginx_upstream_keepalive_requests' configuration property is deprecated, use 'upstream_keepalive_max_requests' instead

2020/07/14 10:54:20 [warn] the 'nginx_upstream_keepalive_timeout' configuration property is deprecated, use 'upstream_keepalive_idle_timeout' instead

2020/07/14 10:54:20 [warn] the 'nginx_upstream_keepalive' configuration property is deprecated, use 'upstream_keepalive_pool_size' instead

Kong started

However, the keepalive setting still didn't work.

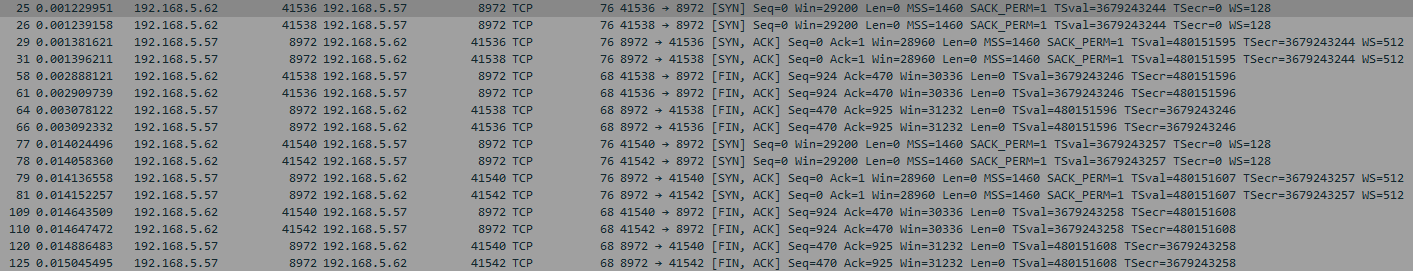

KONG in the server role processed 1,000 HTTP/2 messages before closing each TCP connection, whereas KONG in the client role processed only one HTTP/2 message after opening a TCP connection and then closed it, over and over.

I don't know why this is happening.

The IP address 192.168.5.62 is KONG in the client role.

@maskofaction What Kong distribution are you using?

@maskofaction It seems the Kong distribution you are using did not successfully apply our OpenResty patches (which is why @gszr is asking which distribution it is).

In any case, the new upstream_keepalive properties do not produce an Nginx directive in nginx.conf; they are fully "dynamic" and managed by the OpenResty C keepalive module. The old nginx_upstream_keepalive property, while deprecated, can still be used to inject the keepalive directive into nginx.conf. Note that the former attempts to solve many issues caused by the latter, so ideally you'd switch to the new upstream_keepalive properties as soon as your distribution fixes this patching issue.
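Once the distribution ships an OpenResty build with Kong's patches, the equivalent configuration using the new dynamic properties might look like the sketch below. The old-to-new name mapping comes from the deprecation warnings quoted earlier; the values are illustrative assumptions. As noted above, none of these lines will show up as a directive in nginx_kong.conf.

```ini
# kong.conf: dynamic keepalive pooling (requires Kong's OpenResty patches).
# Values below are illustrative only.

# Replaces nginx_upstream_keepalive
upstream_keepalive_pool_size = 60
# Replaces nginx_upstream_keepalive_requests
upstream_keepalive_max_requests = 100
# Replaces nginx_upstream_keepalive_timeout (value in seconds)
upstream_keepalive_idle_timeout = 60
```

If the patch is still missing, the `missing method 'ngx_balancer.enable_keepalive()'` warning will keep appearing at startup, which is the quickest way to tell whether these settings can take effect.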

Closing this. @maskofaction, please reopen if the issue persists, and add more information about the distribution you are using.