Kong 1.3.0 suddenly stops processing HTTPS requests when handling many HTTPS requests

Summary

I used this Dockerfile to build an arm64v8 Kong 1.3.0 Docker image, and I run it as my API gateway. When many HTTPS requests are sent to Kong, it may suddenly stop processing HTTPS requests.

Steps To Reproduce

- Use this Dockerfile to build an arm64v8 Kong 1.3.0 Docker image

- Run the Docker image built above

- Send many HTTPS requests to Kong

- Kong may then suddenly stop processing HTTPS requests

Additional Details & Logs

- Kong version (1.3.0)

- Kong debug-level startup logs ($ kong start --vv)

2019/09/17 09:49:43 [notice] 1#0: using the "epoll" event method

2019/09/17 09:49:43 [notice] 1#0: openresty/1.15.8.1

2019/09/17 09:49:43 [notice] 1#0: built by gcc 5.4.0 20160609 (Ubuntu/Linaro 5.4.0-6ubuntu1~16.04.11)

2019/09/17 09:49:43 [notice] 1#0: OS: Linux 4.4.131-20190505.kylin.server-generic

2019/09/17 09:49:43 [notice] 1#0: getrlimit(RLIMIT_NOFILE): 1048576:1048576

...

- Kong error logs (<KONG_PREFIX>/logs/error.log)

2019/09/17 09:50:54 [error] 90#0: *124660 lua entry thread aborted: runtime error: /usr/local/share/lua/5.1/kong/cache.lua:243: key at index 2.1217691418087e-314 must be a string for operation 1 (got nil)

stack traceback:

coroutine 0:

[C]: in function 'error'

/usr/local/share/lua/5.1/resty/mlcache.lua:984: in function 'get_bulk'

/usr/local/share/lua/5.1/kong/cache.lua:243: in function 'get_bulk'

/usr/local/share/lua/5.1/kong/runloop/certificate.lua:185: in function 'find_certificate'

/usr/local/share/lua/5.1/kong/runloop/certificate.lua:210: in function 'execute'

/usr/local/share/lua/5.1/kong/runloop/handler.lua:879: in function 'before'

/usr/local/share/lua/5.1/kong/init.lua:565: in function 'ssl_certificate'

ssl_certificate_by_lua:2: in main chunk, context: ssl_certificate_by_lua*, client: 10.10.10.12, server: 10.10.10.55:443

2019/09/17 09:50:54 [crit] 90#0: *124659 SSL_do_handshake() failed (SSL: error:1417A179:SSL routines:tls_post_process_client_hello:cert cb error) while SSL handshaking, client: 10.10.10.12, server: 10.10.10.55:443

- Operating system

Linux Kylin 4.4.131-20190505.kylin.server-generic #kylin SMP Mon May 6 14:34:13 CST 2019 aarch64 aarch64 aarch64 GNU/Linux

All 39 comments

I changed lua-resty-mlcache's code, then the problem disappeared. Strange!!!

https://github.com/thibaultcha/lua-resty-mlcache/blob/2.4.0/lib/resty/mlcache.lua:978

for i = 1, n_bulk, 4 do

-- make sure i is initialized with 1

if i < 1 then

i = 1

end

local b_key = bulk[i]

local b_opts = bulk[i + 1]

local b_cb = bulk[i + 2]

if type(b_key) ~= "string" then

error("key at index " .. i .. " must be a string for operation " ..

ceil(i / 4) .. " (got " .. type(b_key) .. ")", 2)

end

It seems i is not initialized to 1 when execution enters the body of the for loop.

Thanks for reporting the issue @jeremyxu2010.

From the looks of it, it seems like an issue with LuaJIT itself. If it affects mlcache (which isn't responsible for this behavior as far as I can tell), it is very likely that it affects other areas of Kong or of its dependencies unpredictably as well. A likely culprit would be the LuaJIT dynamic lightuserdata mapping patch introduced in Kong 1.3.0. Pinging @javierguerragiraldez @hishamhm as well.

i don't see any relation with lightuserdata here. on a quick look (and without reproducing locally (yet)), it looks to me like a snapshot replaying problem. in short: in a tight loop (like this numeric for) some variables (especially integers) aren't put on the Lua stack; instead they're managed directly in CPU registers. on a trace exit (like this seldom-taken if), at first it's handled by dropping to the interpreter, so every variable (and all local state) must be replayed from a snapshot (visible in IR listings). what I've seen previously is that when the exit itself is compiled (as a side trace), the snapshot replaying is also compiled into machine code, and there have been bugs in that process, even on x86 (long ago). Maybe they're still lurking in the ARM64 code (which has a weird standard for 64-bit integer reinstantiation).

it's just a hunch, right now; unfortunately. I'd have to look at the trace IR and mcode to confirm.

i'll try to reproduce locally.

@javierguerragiraldez @hishamhm

Is there any progress with this issue? I found that the kong compiled by this method is unstable. I must restart kong often. I don't know if this is related to the lua interpreter or not.

I've tried to reproduce the issue, unsuccessfully so far. The last run was over 20 hours under heavy load in an AWS instance.

Yes, it was based on your dockerfile, but the last script (which I guess did some config) isn't included.

Do you have any other details to share?

@jamesgeorge007 I wrote a test script to reproduce the issue

#!/bin/bash

# build kong 1.3.0 arm64 image

docker build -t kong:1.3.0-aws-arm64 ./docker -f ./docker/Dockerfile

# start demo service

docker run -d --restart always --name registry arm64v8/registry:2

# start kong api gateway service

docker run -d --name kong-database \

--restart always \

-p 5432:5432 \

-e "POSTGRES_USER=kong" \

-e "POSTGRES_DB=kong" \

arm64v8/postgres:9.6

docker run \

--link kong-database:kong-database \

-e "KONG_DATABASE=postgres" \

-e "KONG_PG_HOST=kong-database" \

-e "KONG_CASSANDRA_CONTACT_POINTS=kong-database" \

kong:1.3.0-aws-arm64 kong migrations bootstrap

docker run -d --name kong \

--restart always \

--link kong-database:kong-database \

--link registry:registry \

-e "KONG_DATABASE=postgres" \

-e "KONG_PG_HOST=kong-database" \

-e "KONG_PROXY_ACCESS_LOG=/dev/stdout" \

-e "KONG_ADMIN_ACCESS_LOG=/dev/stdout" \

-e "KONG_PROXY_ERROR_LOG=/dev/stderr" \

-e "KONG_ADMIN_ERROR_LOG=/dev/stderr" \

-e "KONG_PROXY_LISTEN=0.0.0.0:80, 0.0.0.0:443 ssl" \

-e "KONG_ADMIN_LISTEN=0.0.0.0:8001, 0.0.0.0:8444 ssl" \

-p 80:80 \

-p 443:443 \

-p 8001:8001 \

-p 8444:8444 \

kong:1.3.0-aws-arm64

# make registry.test.com be resolved with '127.0.0.1'

echo '127.0.0.1 registry.test.com' >> /etc/hosts

# create upstream

curl -X POST http://127.0.0.1:8001/upstreams \

--data "name=registry.service"

# create upstream's target

curl -X POST http://127.0.0.1:8001/upstreams/registry.service/targets \

--data "target=registry:5000" \

--data "weight=100"

# create service

curl -X POST http://127.0.0.1:8001/services/ \

--data "name=registry-service" \

--data "host=registry.service" \

--data "path=/" \

--data "protocol=http"

# create route

curl -X POST http://127.0.0.1:8001/services/registry-service/routes/ \

--data "hosts[]=registry.test.com" \

--data "protocols[]=https"

# create certificate and sni

curl -X POST http://127.0.0.1:8001/certificates \

-F "cert=@certs/registry.pem" \

-F "key=@certs/registry-key.pem" \

-F "snis[]=registry.test.com"

# verify certificate is ok

# openssl s_client -showcerts -servername registry.test.com -connect registry.test.com:443

# make docker trust in my self-signed certificate

mkdir -p /etc/docker/certs.d/registry.test.com

cp certs/ca.pem /etc/docker/certs.d/registry.test.com/ca.crt

systemctl restart docker

# try to send https request to kong

docker tag arm64v8/postgres:9.6 registry.test.com/arm64v8/postgres:9.6

docker push registry.test.com/arm64v8/postgres:9.6

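# try to send https requests to kong concurrently

# (pull and tag the image first so the push below has something to push)

docker pull arm64v8/ubuntu:16.04

docker tag arm64v8/ubuntu:16.04 registry.test.com/arm64v8/ubuntu:16.04

parallel -j 10 -N 0 docker push registry.test.com/arm64v8/ubuntu:16.04 ::: {1..50}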

# check kong's logs

docker logs kong 2>&1 | grep -A 15 'entry thread aborted'

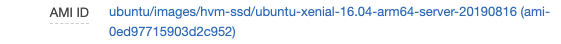

OS Info:

root@ip-172-31-6-9:~# uname -a

Linux ip-172-31-6-9 4.15.0-1045-aws #47~16.04.1-Ubuntu SMP Fri Aug 2 16:23:47 UTC 2019 aarch64 aarch64 aarch64 GNU/Linux

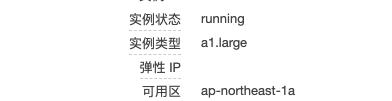

aws virtual machine:

type: a1.large

ami: ubuntu/images/hvm-ssd/ubuntu-xenial-16.04-arm64-server-20190816

thanks, will check

After several runs of roughly 1.2 billion HTTPS requests each, under high concurrency, and separately several thousand multi-megabyte transfers, also with several threads overlapping, all without a single failure, I'm less inclined to think that it's a load issue. So far, the biggest difference between my tests and what I can read from your scripts is the SNI/certificate association. I'll do a different setup to try this soon.

@javierguerragiraldez I guess it is not related to high concurrency. In my test script, just 10 concurrent client HTTPS requests can trigger the issue.

aws virtual machine info:

type: a1.large

ami: ubuntu/images/hvm-ssd/ubuntu-xenial-16.04-arm64-server-20190816

@javierguerragiraldez @hishamhm

Is there any progress with this issue?

@javierguerragiraldez

I tried the official Kong arm64 image, but the issue still exists.

#!/bin/bash

# start demo service

docker run -d --restart always --name registry arm64v8/registry:2

# start kong api gateway service

docker run -d --name kong-database \

--restart always \

-p 5432:5432 \

-e "POSTGRES_USER=kong" \

-e "POSTGRES_DB=kong" \

arm64v8/postgres:9.6

docker run \

--link kong-database:kong-database \

-e "KONG_DATABASE=postgres" \

-e "KONG_PG_HOST=kong-database" \

-e "KONG_CASSANDRA_CONTACT_POINTS=kong-database" \

arm64v8/kong:1.3.0-ubuntu kong migrations bootstrap

docker run -d --name kong \

--restart always \

--link kong-database:kong-database \

--link registry:registry \

-e "KONG_DATABASE=postgres" \

-e "KONG_PG_HOST=kong-database" \

-e "KONG_PROXY_ACCESS_LOG=/dev/stdout" \

-e "KONG_ADMIN_ACCESS_LOG=/dev/stdout" \

-e "KONG_PROXY_ERROR_LOG=/dev/stderr" \

-e "KONG_ADMIN_ERROR_LOG=/dev/stderr" \

-e "KONG_PROXY_LISTEN=0.0.0.0:80, 0.0.0.0:443 ssl" \

-e "KONG_ADMIN_LISTEN=0.0.0.0:8001, 0.0.0.0:8444 ssl" \

-p 80:80 \

-p 443:443 \

-p 8001:8001 \

-p 8444:8444 \

arm64v8/kong:1.3.0-ubuntu

# make registry.test.com be resolved with '127.0.0.1'

echo '127.0.0.1 registry.test.com' >> /etc/hosts

# create upstream

curl -X POST http://127.0.0.1:8001/upstreams \

--data "name=registry.service"

# create upstream's target

curl -X POST http://127.0.0.1:8001/upstreams/registry.service/targets \

--data "target=registry:5000" \

--data "weight=100"

# create service

curl -X POST http://127.0.0.1:8001/services/ \

--data "name=registry-service" \

--data "host=registry.service" \

--data "path=/" \

--data "protocol=http"

# create route

curl -X POST http://127.0.0.1:8001/services/registry-service/routes/ \

--data "hosts[]=registry.test.com" \

--data "protocols[]=https"

# create certificate and sni

curl -X POST http://127.0.0.1:8001/certificates \

-F "cert=@certs/registry.pem" \

-F "key=@certs/registry-key.pem" \

-F "snis[]=registry.test.com"

# verify certificate is ok

# openssl s_client -showcerts -servername registry.test.com -connect registry.test.com:443

# make docker trust in my self-signed certificate

mkdir -p /etc/docker/certs.d/registry.test.com

cp certs/ca.pem /etc/docker/certs.d/registry.test.com/ca.crt

systemctl restart docker

# try to send https request to kong

docker tag arm64v8/postgres:9.6 registry.test.com/arm64v8/postgres:9.6

docker push registry.test.com/arm64v8/postgres:9.6

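# try to send https requests to kong concurrently

# (pull and tag the image first so the push below has something to push)

docker pull arm64v8/ubuntu:16.04

docker tag arm64v8/ubuntu:16.04 registry.test.com/arm64v8/ubuntu:16.04

parallel -j 10 -N 0 docker push registry.test.com/arm64v8/ubuntu:16.04 ::: {1..50}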

# check kong's logs

docker logs kong 2>&1 | grep -A 15 'entry thread aborted'

@javierguerragiraldez @hishamhm

Is there any progress with this issue? Urgent!!!

@javierguerragiraldez @hishamhm

Is there any progress with this issue? Urgent!!!

I tried this and I am unable to reproduce 🤔

Why in your example are you running architecture-tagged images (arm64v8/postgres:9.6) vs just running postgres:9.6, which will pull the correct architecture for you?

I didn't rebuild the Kong image; I used the one from Docker Hub, kong:1.3.0-ubuntu.

I'm using a newer OS and possibly a newer Docker version but neither of those should make any difference.

docker-machine create --driver amazonec2 \

--amazonec2-instance-type a1.medium \

--amazonec2-region us-east-1 \

--amazonec2-ami ami-0c46f9f09e3a8c2b5 \

--amazonec2-vpc-id vpc-74f9ac0c \

--amazonec2-monitoring \

--amazonec2-tags created-by,ubuntu docker-machine-arm64-ubuntu

docker-machine ssh docker-machine-arm64-ubuntu

sudo usermod -aG docker $USER

exit

docker-machine ssh docker-machine-arm64-ubuntu

docker run -d --restart always --name registry registry:2

docker run -d --name kong-database \

--restart always \

-p 5432:5432 \

-e "POSTGRES_USER=kong" \

-e "POSTGRES_DB=kong" \

postgres:9.6

docker run \

--link kong-database:kong-database \

-e "KONG_DATABASE=postgres" \

-e "KONG_PG_HOST=kong-database" \

kong:1.3.0-ubuntu kong migrations bootstrap

docker run -d --name kong \

--restart always \

--link kong-database:kong-database \

--link registry:registry \

-e "KONG_DATABASE=postgres" \

-e "KONG_PG_HOST=kong-database" \

-e "KONG_PROXY_ACCESS_LOG=/dev/stdout" \

-e "KONG_ADMIN_ACCESS_LOG=/dev/stdout" \

-e "KONG_PROXY_ERROR_LOG=/dev/stderr" \

-e "KONG_ADMIN_ERROR_LOG=/dev/stderr" \

-e "KONG_PROXY_LISTEN=0.0.0.0:80, 0.0.0.0:443 ssl" \

-e "KONG_ADMIN_LISTEN=0.0.0.0:8001, 0.0.0.0:8444 ssl" \

-p 80:80 \

-p 443:443 \

-p 8001:8001 \

-p 8444:8444 \

kong:1.3.0-ubuntu

echo '127.0.0.1 registry.test.com' >> /etc/hosts

curl -X POST http://127.0.0.1:8001/upstreams \

--data "name=registry.service"

curl -X POST http://127.0.0.1:8001/upstreams/registry.service/targets \

--data "target=registry:5000" \

--data "weight=100"

curl -X POST http://127.0.0.1:8001/services/ \

--data "name=registry-service" \

--data "host=registry.service" \

--data "path=/" \

--data "protocol=http"

curl -X POST http://127.0.0.1:8001/services/registry-service/routes/ \

--data "hosts[]=registry.test.com" \

--data "protocols[]=https"

curl -X POST http://127.0.0.1:8001/certificates \

-F "cert=@certs/registry.pem" \

-F "key=@certs/registry-key.pem" \

-F "snis[]=registry.test.com"

sudo mkdir -p /etc/docker/certs.d/registry.test.com

sudo cp certs/ca.pem /etc/docker/certs.d/registry.test.com/ca.crt

sudo systemctl restart docker

docker tag postgres:9.6 registry.test.com/postgres:9.6

docker push registry.test.com/postgres:9.6

sudo apt install -y parallel

docker pull ubuntu:16.04

docker tag ubuntu:16.04 registry.test.com/ubuntu:16.04

parallel -j 50 -N 0 docker push registry.test.com/ubuntu:16.04 ::: {1..50}

uname -a

Linux docker-machine-arm64-ubuntu 4.15.0-1043-aws #45-Ubuntu SMP Mon Jun 24 14:08:49 UTC 2019 aarch64 aarch64 aarch64 GNU/Linux

docker version

Client: Docker Engine - Community

Version: 19.03.2

API version: 1.40

Go version: go1.12.8

Git commit: 6a30dfc

Built: Thu Aug 29 05:32:21 2019

OS/Arch: linux/arm64

Experimental: false

Server: Docker Engine - Community

Engine:

Version: 19.03.2

API version: 1.40 (minimum version 1.12)

Go version: go1.12.8

Git commit: 6a30dfc

Built: Thu Aug 29 05:30:53 2019

OS/Arch: linux/arm64

Experimental: false

containerd:

Version: 1.2.6

GitCommit: 894b81a4b802e4eb2a91d1ce216b8817763c29fb

runc:

Version: 1.0.0-rc8

GitCommit: 425e105d5a03fabd737a126ad93d62a9eeede87f

docker-init:

Version: 0.18.0

GitCommit: fec3683

lsb_release -a

No LSB modules are available.

Distributor ID: Ubuntu

Description: Ubuntu 18.04.2 LTS

Release: 18.04

Codename: bionic

a1.medium

ubuntu/images/hvm-ssd/ubuntu-bionic-18.04-arm64-server-20190627.1 (ami-0c46f9f09e3a8c2b5)

@hutchic I have tried on Ubuntu xenial and bionic, and the issue still exists. It doesn't appear every time, so I wrote a shell script to reproduce it.

# make registry.test.com be resolved with '127.0.0.1'

echo '127.0.0.1 registry.test.com' >> /etc/hosts

# make docker trust in my self-signed certificate

mkdir -p /etc/docker/certs.d/registry.test.com

cp -f certs/ca.pem /etc/docker/certs.d/registry.test.com/ca.crt

systemctl restart docker

function try_reproduce_issue {

# delete all containers

docker ps -q -a|xargs docker rm -f

docker volume prune -f

# start demo service

docker run -d --restart always --name registry registry:2

# start kong api gateway service

docker run -d --name kong-database \

--restart always \

-p 5432:5432 \

-e "POSTGRES_USER=kong" \

-e "POSTGRES_DB=kong" \

postgres:9.6

sleep 5

docker run \

--link kong-database:kong-database \

-e "KONG_DATABASE=postgres" \

-e "KONG_PG_HOST=kong-database" \

-e "KONG_CASSANDRA_CONTACT_POINTS=kong-database" \

kong:1.3.0-ubuntu kong migrations bootstrap

sleep 2

docker run -d --name kong \

--restart always \

--link kong-database:kong-database \

--link registry:registry \

-e "KONG_DATABASE=postgres" \

-e "KONG_PG_HOST=kong-database" \

-e "KONG_PROXY_ACCESS_LOG=/dev/stdout" \

-e "KONG_ADMIN_ACCESS_LOG=/dev/stdout" \

-e "KONG_PROXY_ERROR_LOG=/dev/stderr" \

-e "KONG_ADMIN_ERROR_LOG=/dev/stderr" \

-e "KONG_PROXY_LISTEN=0.0.0.0:80, 0.0.0.0:443 ssl" \

-e "KONG_ADMIN_LISTEN=0.0.0.0:8001, 0.0.0.0:8444 ssl" \

-p 80:80 \

-p 443:443 \

-p 8001:8001 \

-p 8444:8444 \

kong:1.3.0-ubuntu

sleep 5

# create upstream

curl -X POST http://127.0.0.1:8001/upstreams \

--data "name=registry.service"

# create upstream's target

curl -X POST http://127.0.0.1:8001/upstreams/registry.service/targets \

--data "target=registry:5000" \

--data "weight=100"

# create service

curl -X POST http://127.0.0.1:8001/services/ \

--data "name=registry-service" \

--data "host=registry.service" \

--data "path=/" \

--data "protocol=http"

# create route

curl -X POST http://127.0.0.1:8001/services/registry-service/routes/ \

--data "hosts[]=registry.test.com" \

--data "protocols[]=https"

# create certificate and sni

curl -X POST http://127.0.0.1:8001/certificates \

-F "cert=@certs/registry.pem" \

-F "key=@certs/registry-key.pem" \

-F "snis[]=registry.test.com"

# verify certificate is ok

# openssl s_client -showcerts -servername registry.test.com -connect registry.test.com:443

sleep 5

docker pull arm64v8/ubuntu:16.04

docker tag arm64v8/ubuntu:16.04 registry.test.com/arm64v8/ubuntu:16.04

# try to send https requests to kong concurrently

timeout 60 parallel -j 20 -N 0 docker push registry.test.com/arm64v8/ubuntu:16.04 ::: {1..50}

}

while [[ true ]]; do

try_reproduce_issue

docker logs kong 2>&1 | grep 'entry thread aborted'

if [[ $? -eq 0 ]]; then

break

else

continue

fi

done

@hutchic Could you try to reproduce it with my script file?

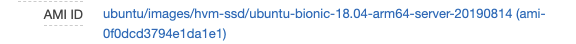

- ubuntu xenial info:

root@ip-172-31-6-9:~# uname -a

Linux ip-172-31-6-9 4.15.0-1051-aws #53~16.04.1-Ubuntu SMP Wed Sep 18 14:58:34 UTC 2019 aarch64 aarch64 aarch64 GNU/Linux

root@ip-172-31-6-9:~# docker version

Client:

Version: 18.09.9

API version: 1.39

Go version: go1.11.13

Git commit: 039a7df

Built: Wed Sep 4 16:54:46 2019

OS/Arch: linux/arm64

Experimental: false

Server: Docker Engine - Community

Engine:

Version: 18.09.9

API version: 1.39 (minimum version 1.12)

Go version: go1.11.13

Git commit: 039a7df

Built: Wed Sep 4 16:21:47 2019

OS/Arch: linux/arm64

Experimental: false

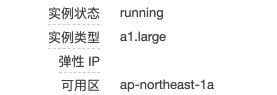

- ubuntu bionic info:

root@ip-172-31-9-110:~# uname -a

Linux ip-172-31-9-110 4.15.0-1045-aws #47-Ubuntu SMP Fri Aug 2 13:51:14 UTC 2019 aarch64 aarch64 aarch64 GNU/Linux

root@ip-172-31-9-110:~# docker version

Client: Docker Engine - Community

Version: 19.03.2

API version: 1.40

Go version: go1.12.8

Git commit: 6a30dfc

Built: Thu Aug 29 05:32:21 2019

OS/Arch: linux/arm64

Experimental: false

Server: Docker Engine - Community

Engine:

Version: 19.03.2

API version: 1.40 (minimum version 1.12)

Go version: go1.12.8

Git commit: 6a30dfc

Built: Thu Aug 29 05:30:53 2019

OS/Arch: linux/arm64

Experimental: false

containerd:

Version: 1.2.6

GitCommit: 894b81a4b802e4eb2a91d1ce216b8817763c29fb

runc:

Version: 1.0.0-rc8

GitCommit: 425e105d5a03fabd737a126ad93d62a9eeede87f

docker-init:

Version: 0.18.0

GitCommit: fec3683

Why in your example are running architecture tagged images arm64v8/postgres:9.6 vs just running postgres:9.6 which will pull the correct architecture for you?

@hutchic on an arm64 virtual machine, pulling postgres:9.6 and pulling arm64v8/postgres:9.6 give the same result. These two images have the same image sha ID. I just want the image name to distinguish the x86 image from the arm64 one.

@javierguerragiraldez @hishamhm @hutchic

Is there any progress with this issue? Urgent!!!

These two images have the same image sha ID

Yup I know. I was just double checking that you weren't running on qemu etc

Is there any progress with this issue

None yet; I haven't found cycles to run the script.

One thing I plan on doing is running the test suite from https://github.com/thibaultcha/lua-resty-mlcache on arm hardware to see if that will expose the problem more reliably / consistently if you want to have a quick go at that?

@hutchic

Running the test suite from https://github.com/thibaultcha/lua-resty-mlcache on ARM hardware ran into an error:

docker run -ti kong:1.3.0-ubuntu bash

> apt-get update && apt-get install -y git

> mkdir /workspace && cd /workspace

> git clone https://github.com/thibaultcha/lua-resty-mlcache.git

> cd lua-resty-mlcache && git checkout 2.4.0

> cpan Test::Nginx # installing the Test::Nginx Perl module on arm64 failed with an error

> perl ./t/13-get_bulk.t

@javierguerragiraldez @hishamhm @hutchic

We use kong on arm64 hardware in production environment now. This issue is really urgent.

The lua-resty-mlcache tests seem to pass when run on travis-ci arm64 hardware

git diff: https://github.com/thibaultcha/lua-resty-mlcache/compare/master...hutchic:master

travis run: https://travis-ci.org/hutchic/lua-resty-mlcache/builds/596240899

I'll try to use your reproduction script but the challenge of the behaviour being inconsistent makes it difficult to reproduce and additionally difficult to resolve.

I tried a simpler version of your script

while [[ true ]]; do

timeout 60 parallel -j 50 -N 0 docker push registry.test.com/ubuntu:16.04 ::: {1..50}

done

So far I haven't seen any failures in the docker logs kong output. What's the context around the error (grep -C 5)?
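The context request above can be sketched as a small filter over the log stream. This is only an illustration: the function name abort_context is hypothetical and not part of Kong or Docker, and the search string is the 'entry thread aborted' text from the error log quoted in the issue body.

```shell
#!/bin/sh
# Hypothetical helper: read log lines on stdin and print every line that
# contains 'entry thread aborted', plus N lines of context before and
# after it. N defaults to 5, matching the grep -C 5 suggestion.
abort_context() {
    grep -C "${1:-5}" 'entry thread aborted'
}

# Example usage against the container from this thread (not run here):
#   docker logs kong 2>&1 | abort_context 5
```

Like grep itself, the function exits non-zero when no matching line is found, so it can also serve as the loop condition in a reproduction script.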

@hutchic

It seems that re-creating kong repeatedly can make this problem reappear.

I sent you an email containing credentials to access my AWS virtual machine, so you can test it quickly.

ssh -i ./aws.pem [email protected]

> sudo su - root

> ./test.sh

It seems that re-creating kong repeatedly can make this problem reappear

That's very curious. Does your production scenario require the ability to frequently (re)create the Kong instance?

@hutchic

No, but in my production scenario the issue sometimes appears just after Kong has started. If it is convenient, could you help me? The environment is ready. I'm waiting online.

I sent you an email containing credentials to access my AWS virtual machine, so you can test it quickly.

@hutchic Is there any progress with this issue?

@jeremyxu2010 I haven't had time to look at this. Just a hunch: could you add a readiness check after starting Kong and before starting to use it? Given the new information that Kong needs to be continually cycled in order to demonstrate the issue, I wonder if the problem is that we're using a Kong instance that isn't fully prepared to proxy requests, or one that's in the process of shutting down.
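A minimal sketch of such a readiness check, assuming a plain retry loop is acceptable in place of the fixed sleep calls in the reproduction script. The helper name wait_until is hypothetical. The probe in the usage comment targets the Admin API /status endpoint that appears elsewhere in this thread, and a successful answer from that endpoint is only assumed, not proven, to mean the proxy listeners are ready.

```shell
#!/bin/sh
# Hypothetical helper: retry a probe command until it succeeds.
# Usage: wait_until <max_attempts> <delay_seconds> <command> [args...]
# Returns 0 as soon as the probe succeeds, 1 if every attempt fails.
wait_until() {
    attempts="$1"
    delay="$2"
    shift 2
    i=1
    while [ "$i" -le "$attempts" ]; do
        # Run the probe quietly; only its exit status matters here.
        if "$@" >/dev/null 2>&1; then
            return 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    return 1
}

# Example (not run here): wait up to 30 attempts, 2 seconds apart, for
# the Admin API to answer before sending any proxy traffic.
#   wait_until 30 2 curl -fsS http://127.0.0.1:8001/status
```

Passing the probe as a command keeps the helper independent of curl, so the same loop could wrap a TCP check against the proxy port instead.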

@hutchic

Actually, I have now deployed a 3-replica Kong cluster in Kubernetes with a readinessProbe:

ports:
- name: admin
  containerPort: 8001
  hostPort: 8001
  protocol: TCP
- name: proxy
  containerPort: 80
  hostPort: 80
  protocol: TCP
- name: proxy-tls
  containerPort: 443
  hostPort: 443
  protocol: TCP
readinessProbe:
  failureThreshold: 5
  httpGet:
    path: /status
    port: admin
    scheme: HTTP
  initialDelaySeconds: 30
  periodSeconds: 10
  successThreshold: 1
  timeoutSeconds: 1
livenessProbe:
  failureThreshold: 5
  httpGet:
    path: /status
    port: admin
    scheme: HTTP
  initialDelaySeconds: 30
  periodSeconds: 30
  successThreshold: 1
  timeoutSeconds: 5

However, Kong does not seem stable. Sometimes it reports errors just after it becomes ready. The error message is the same as the one I gave before. I wrote that shell script to make the problem easier to trigger.

BTW, is there any other way to check whether a Kong instance is fully prepared to proxy requests?

What you have looks similar to how we verify Kong control plane readiness

https://github.com/Kong/kong-dist-kubernetes/blob/master/kong-control-plane-postgres.yaml#L132-L140

readinessProbe:
  failureThreshold: 3
  httpGet:
    path: /status
    port: 8001
    scheme: HTTP
  periodSeconds: 10
  successThreshold: 1
  timeoutSeconds: 1

The data plane check is a basic tcp check

https://github.com/Kong/kong-dist-kubernetes/blob/master/kong-ingress-data-plane-postgres.yaml#L62-L71

readinessProbe:
  tcpSocket:
    port: 8000
  initialDelaySeconds: 5
  periodSeconds: 10
livenessProbe:
  tcpSocket:
    port: 8000
  initialDelaySeconds: 15
  periodSeconds: 20

@jeremyxu2010 seeing as we can't reproduce this when leaving a gap for readiness I'm going to close this issue at this time

The issue still exists, why close it? @hutchic

We've been unable to reproduce it, unless you have additional information about the issue. We even tested mlcache on arm64 and it was 100% successful.

@hutchic

If you run this shell script on an AWS arm64 virtual machine, the issue reproduces 100% of the time.

That script hits Kong during shutdown / startup period which I would advise against.

I've run https://github.com/Kong/kong/issues/5042#issuecomment-540730159, which is a pared-down / simpler version of yours, for a day straight without issue on equivalent AWS hardware.

@hutchic

I added the readinessProbe and livenessProbe configuration, but the problem still exists. After comparing the deployment manifest files, I found that the configuration item KONG_NGINX_WORKER_PROCESSES is different. It has a value of 1 in your deployment manifest and is not explicitly set in mine. So could this issue be related to concurrency conflicts between multiple worker processes?

I am having a similar issue with running Kong on Arm64 (AWS A1).

It only appears to happen when the system is required to handle more than 5rps.

@lyndon160 we weren't able to reproduce this issue and it's k8s specific and utilizes kong during shutdown / startup. Let's start a new issue and we'll try to collaborate on how to reproduce / debug 👍

An update here - the issue has been fixed in OpenResty's fork of LuaJIT already, which will be bundled in OpenResty's 1.17.8.1 release. See LuaJIT/LuaJIT#579 (comment).

A workaround fix has been pushed in 2.0.4 via #5797 in the meantime.
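For anyone checking a deployment against the note above, here is a rough sketch of a version comparison. It assumes that any Kong release numbered 2.0.4 or later carries the workaround, which this thread states only for 2.0.4 itself. The helper names version_ge and has_workaround are hypothetical, and sort -V (version sort) is assumed to be available, as it is in GNU coreutils.

```shell
#!/bin/sh
# Hypothetical helper: succeed when version $1 is greater than or equal
# to version $2, using version-aware sorting (sort -V).
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# 2.0.4 is the Kong release named above as carrying the workaround.
has_workaround() {
    version_ge "$1" "2.0.4"
}

# Example (not run here), feeding in a version string obtained elsewhere:
#   has_workaround "1.3.0" || echo "this Kong version predates the workaround"
```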
